SOCIAL

Is Facebook Really to Blame for Increasing Political Division?

There is a lot to take in from The New York Times’ latest report on an internal memo sent by Facebook’s head of VR and AR, Andrew Bosworth, regarding Facebook’s influence over voter behavior and societal shifts.

Bosworth is a senior figure within The Social Network. He first joined as an engineer in 2006, then headed the platform’s mobile ad products from 2012 to 2017, before moving into his current leadership role. As such, ‘Boz’ has had a front-row seat to the company’s rise and, given his position at the time, to how Facebook ads influenced (or didn’t) the 2016 US Presidential Election.

And Boz says that Facebook ads did indeed influence the 2016 result, though not in the way that most suspect.

In summary, here are some of the key points which Bosworth addresses in his long post (which he has since published on his Facebook profile), and his stance on each:

- Russian interference in the 2016 election – Bosworth says that this happened, but that Russian troll farms had no major impact on the final election result

- Political misinformation – Bosworth says that most misinformation in the 2016 campaign came from people “with no political interest whatsoever” who were seeking to drive traffic to “ad-laden websites by creating fake headlines and did so to make money”. Misinformation from candidates, Boz says, was not a significant factor

- Cambridge Analytica – Bosworth says that Cambridge Analytica was a ‘non-event’ and that the company was essentially a ‘snake oil salesman’ with no real influence, nor the capacity for it. “The tools they used didn’t work, and the scale they used them at wasn’t meaningful. Every claim they have made about themselves is garbage.”

- Filter bubbles – Bosworth says that, if anything, Facebook users see content from more sources on a subject, not less. The problem is, according to Boz, that broader exposure to different perspectives actually pushes people more to one side: “What happens when you see more content from people you don’t agree with? Does it help you empathize with them as everyone has been suggesting? Nope. It makes you dislike them even more.”

But despite dismissing all of these factors, Bosworth says that Facebook was responsible for the 2016 election result:

“So was Facebook responsible for Donald Trump getting elected? I think the answer is yes, but not for the reasons anyone thinks. He didn’t get elected because of Russia or misinformation or Cambridge Analytica. He got elected because he ran the single best digital ad campaign I’ve ever seen from any advertiser. Period.”

Bosworth says that the Trump team simply ran a better campaign, on the platform where more people are now getting their news content.

“They weren’t running misinformation or hoaxes. They weren’t microtargeting or saying different things to different people. They just used the tools we had to show the right creative to each person. The use of custom audiences, video, ecommerce, and fresh creative remains the high watermark of digital ad campaigns in my opinion.”

The end result, Bosworth says, didn’t come about because the News Feed algorithm polarized people by showing them more of what they agree with (and less of what they don’t), nor through complex neuro-targeting of ads based on people’s inherent fears. Any societal division we’re now seeing that may stem from Facebook’s algorithms, he argues, exists because its systems “are primarily exposing the desires of humanity itself, for better or worse”.

“In these moments people like to suggest that our consumers don’t really have free will. People compare social media to nicotine. […] Still, while Facebook may not be nicotine I think it is probably like sugar. Sugar is delicious and for most of us there is a special place for it in our lives. But like all things it benefits from moderation.”

Boz’s final stance here is that people should be able to decide for themselves how much ‘sugar’ they consume.

“…each of us must take responsibility for ourselves. If I want to eat sugar and die an early death that is a valid position.”

So if Facebook users choose to polarize themselves with the content available on its platform, then that’s their choice.

The stance is very similar to Facebook CEO Mark Zuckerberg’s position on political ads, and on not subjecting them to fact-checks:

“People should be able to see for themselves what politicians are saying. And if content is newsworthy, we also won’t take it down even if it would otherwise conflict with many of our standards.”

Essentially, Facebook is saying it has no real stake in this, that it’s merely a platform for information sharing. And if people get something out of that experience, and they come back more often as a result, then it’s up to them to regulate just how much they consume.

As noted, there’s a lot to consider here – and it is worth pointing out that these are Bosworth’s opinions only. They are not necessarily representative of Facebook’s company stance more generally, though they do align with other disclosures from the company on these issues.

It’s interesting to note, in particular, Boz’s dismissal of filter bubbles, which are considered by most to be a key element in Facebook’s subsequent political influence. Bosworth says that, contrary to popular opinion, Facebook actually exposes users to significantly more content sources than they would have seen in times before the internet.

“Ask yourself how many newspapers and news programs people read/watched before the internet. If you guessed ‘one and one’ on average you are right, and if you guessed those were ideologically aligned with them you are right again. The internet exposes them to far more content from other sources (26% more on Facebook, according to our research).”

Facebook’s COO Sheryl Sandberg quoted this same research in October last year, noting more specifically that 26% of the news which Facebook users see in their News Feed represents “another point of view.”

So the common belief that Facebook radicalizes users by aligning their feed with their established views is flawed, at least according to this insight. But then again, providing more sources surely also enables users to pick and choose the publishers and Pages they agree with, and subsequently follow them. A constant stream of content from a larger set of politically aligned Pages must eventually influence their opinion, reinforcing their perspective.

In fact, the finding itself seems seriously flawed. Facebook’s News Feed doesn’t show you a random assortment of content from different sources; it shows you posts from Pages that you follow, along with content shared by your connections. If you’re connected to people who post things that you don’t like or disagree with, what do you do? You mute them, or you remove them as connections. In this sense, it seems impossible that Facebook could be exposing all of its users to a more balanced view of each subject, based on a broader variety of inputs.

But then again, you are likely to see content from a generally broader mix of Pages in your feed, based on what friends have Liked and shared. If Facebook is using that as a proxy, then it seems logical that you would see content from a wider range of Pages, though few of them would likely align with political movements. It may also be, as it is with most social platforms, that a small number of users have outsized influence over such trends. So while, on average, more people might see a broader variety of content, the few active users who are sharing certain perspectives could have more sway.

It’s hard to draw any significant conclusions without the internal research, but it feels like that can’t be correct. How can users be exposed to more perspectives on a platform that so easily enables them to block other perspectives out, and to follow the Pages which reinforce their stance?

That still seems to be the biggest issue: Facebook users can pick and choose what they want to believe, and build their own ecosystem around that. Some responsibility, of course, also lies with the publishers who share more divisive, biased, partisan perspectives. But they’re arguably doing so because they know it will spark debate, which generates comments and shares, leads to further distribution of their posts, and gains them more site traffic. And that is because Facebook, via its algorithm, has made such engagement a key measure in generating maximum reach through its network.

Could Facebook really have that level of influence over publisher decisions?

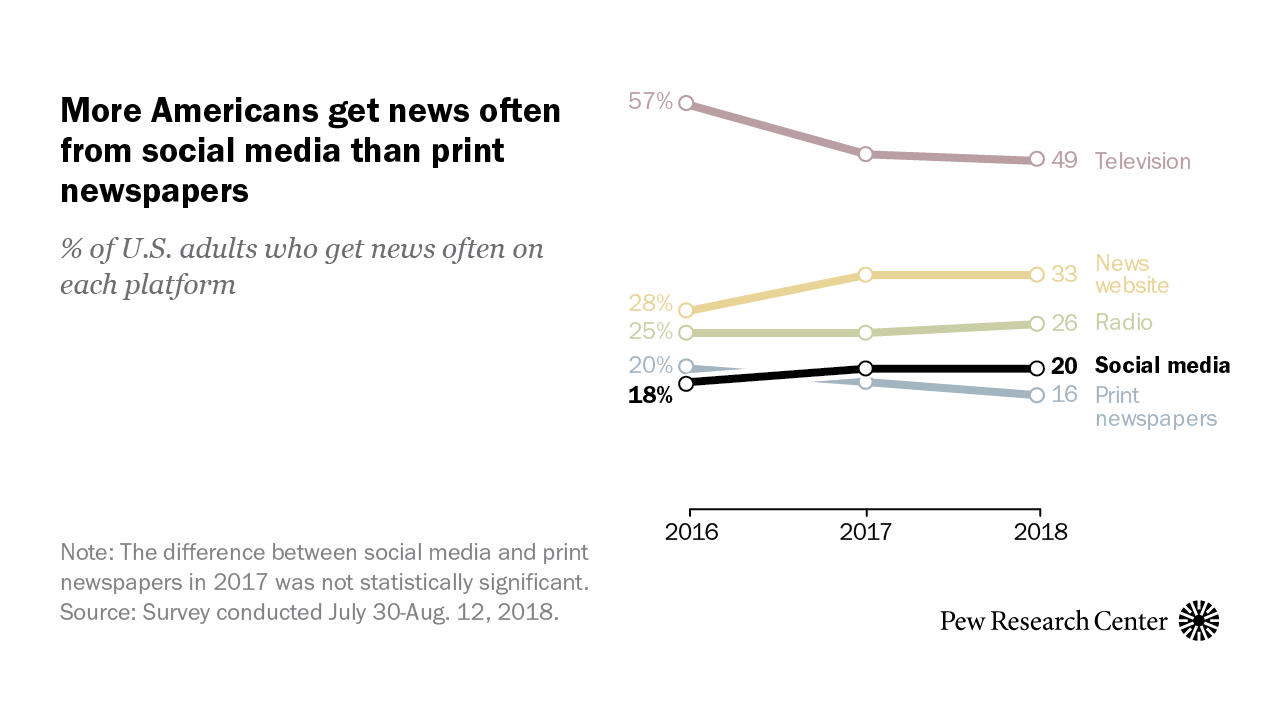

Consider this – in 2018, Facebook overtook print newspapers as a source of news content in the US.

Facebook clearly does have the sway to influence editorial decisions. So while Facebook may say that it’s not on them, that they don’t have any influence over what people think or the news that they choose to believe, it could arguably be blamed for the initial polarization of news coverage in the first place, and that alone could have led to more significant societal division.

But really, what Boz says is probably right. Russian interference may have nudged a few voters a little further in a certain direction, and misinformation from politicians, specifically, is likely less influential than random memes from partisan Pages. People have long questioned the true capabilities of Cambridge Analytica and its psychographic audience profiling, while filter bubbles, as noted, seem like they must have had some impact, but maybe less than we think.

But that doesn’t necessarily mean that Facebook has no responsibility to bear. Clearly, given its influence and the deteriorating state of political debate, the platform is playing a role in pushing people more towards the left or right respectively.

Could it be that Facebook’s algorithms have simply changed the way political content is covered by outlets, or that giving every person a voice has led to more people voicing their beliefs, which has awakened existing division that we just weren’t aware of previously?

That then could arguably be fueling more division anyway – if you see that your brother, for example, has taken a stance which opposes your own, you’re more likely to reconsider your own position based on it coming from someone you respect.

Add to that the addictive dopamine rush of self-validation which comes from Likes and comments, prompting more personal sharing, and maybe Boz is right. Maybe Facebook is simply ‘sugar’, and we only have ourselves to blame for coming back for more.

SOCIAL

Snapchat Explores New Messaging Retention Feature: A Game-Changer or Risky Move?

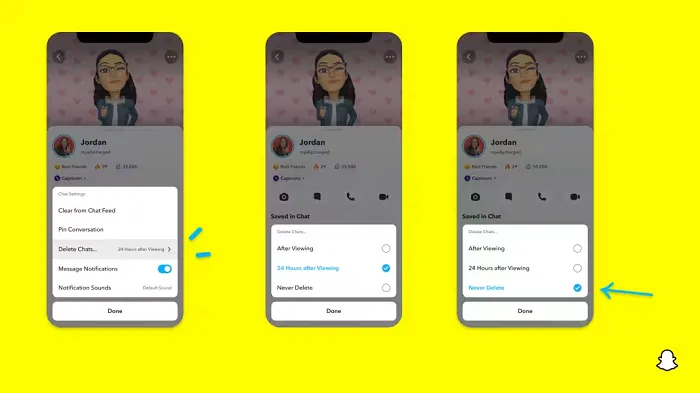

In a recent announcement, Snapchat revealed a groundbreaking update that challenges its traditional design ethos. The platform is experimenting with an option that allows users to defy the 24-hour auto-delete rule, a feature synonymous with Snapchat’s ephemeral messaging model.

The proposed change aims to introduce a “Never delete” option in messaging retention settings, aligning Snapchat more closely with conventional messaging apps. While this move may blur Snapchat’s distinctive selling point, Snap appears convinced of its necessity.

According to Snap, the decision stems from user feedback and a commitment to innovation based on user needs. The company aims to provide greater flexibility and control over conversations, catering to the preferences of its community.

Currently undergoing trials in select markets, the new feature empowers users to adjust retention settings on a conversation-by-conversation basis. Flexibility remains paramount, with participants able to modify settings within chats and receive in-chat notifications to ensure transparency.

Snapchat underscores that the default auto-delete feature will persist, reinforcing its design philosophy centered on ephemerality. However, with the app gaining traction as a primary messaging platform, the option offers users a means to preserve longer chat histories.

The update marks a pivotal moment for Snapchat, renowned for its disappearing-message premise, which is especially popular among younger demographics. This focus has been central to Snapchat’s identity, but the shift suggests a broader strategy aimed at diversifying its user base.

This strategy may appeal particularly to older demographics, potentially extending Snapchat’s relevance as users age. By emulating features of conventional messaging platforms, Snapchat seeks to enhance its appeal and broaden its reach.

Yet, the introduction of message retention poses questions about Snapchat’s uniqueness. While addressing user demands, the risk of diluting Snapchat’s distinctiveness looms large.

As Snapchat ventures into uncharted territory, the outcome of this experiment remains uncertain. Will message retention propel Snapchat to new heights, or will it compromise the platform’s uniqueness?

Only time will tell.

SOCIAL

Catering to a specific audience boosts your business, says accountant turned coach

While it is tempting to try to appeal to a broad audience, the founder of alcohol-free coaching service Just the Tonic, Sandra Parker, believes the best thing you can do for your business is focus on your niche. Here’s how she did just that.

When running a business, reaching out to as many clients as possible can be tempting. But it also risks making your marketing “too generic,” warns Sandra Parker, the founder of Just the Tonic Coaching.

“From the very start of my business, I knew exactly who I could help and who I couldn’t,” Parker told My Biggest Lessons.

Parker struggled with alcohol dependence as a young professional. Today, her business targets high-achieving individuals who face challenges similar to those she had early in her career.

“I understand their frustrations, I understand their fears, and I understand their coping mechanisms and the stories they’re telling themselves,” Parker said. “Because of that, I’m able to market very effectively, to speak in a language that they understand, and am able to reach them.”

“I believe that it’s really important that you know exactly who your customer or your client is, and you target them, and you resist the temptation to make your marketing too generic to try and reach everyone,” she explained.

“If you speak specifically to your target clients, you will reach them, and I believe that’s the way that you’re going to be more successful.”

Watch the video for more of Sandra Parker’s biggest lessons.

SOCIAL

Instagram Tests Live-Stream Games to Enhance Engagement

Instagram’s testing out some new options to help spice up your live-streams in the app, with some live broadcasters now able to select a game that they can play with viewers in-stream.

As you can see in these example screens, posted by Ahmed Ghanem, some creators now have the option to play either “This or That”, a question and answer prompt that you can share with your viewers, or “Trivia”, to generate more engagement within your IG live-streams.

That could be a simple way to spark more conversation and interaction, which could then lead into further engagement opportunities from your live audience.

Meta’s been exploring more ways to make live-streaming a bigger consideration for IG creators, with a view to live-streams potentially catching on with more users.

That includes the gradual expansion of its “Stars” live-stream donation program, giving more creators in more regions a means to accept donations from live-stream viewers, while back in December, Instagram also added some new options to make it easier to go live using third-party tools via desktop PCs.

Live streaming has been a major shift in China, where shopping live-streams, in particular, have led to massive opportunities for streaming platforms. They haven’t caught on in the same way in Western regions, but as TikTok and YouTube look to push live-stream adoption, there is still a chance that they will become a much bigger element in future.

Which is why IG is also trying to keep pace, and to add more ways for its creators to engage via streams. Live-stream games are another element within this, and could make live-streaming a better community-building, and potentially sales-driving, option.

We’ve asked Instagram for more information on this test, and we’ll update this post if/when we hear back.
