SEO

Google PageRank Explained for SEO Beginners

PageRank was once at the very core of search – and was what made Google the empire it is today.

Even if you believe that search has moved on from PageRank, there’s no denying that it has long been a pervasive concept in the industry.

Every SEO pro should have a good grasp of what PageRank was – and what it still is today.

In this article, we’ll cover:

- What is PageRank?

- The history of how PageRank evolved.

- How PageRank revolutionized search.

- Toolbar PageRank vs. PageRank.

- How PageRank works.

- How PageRank flows between pages.

- Is PageRank still used?

Let’s dive in.

What Is PageRank?

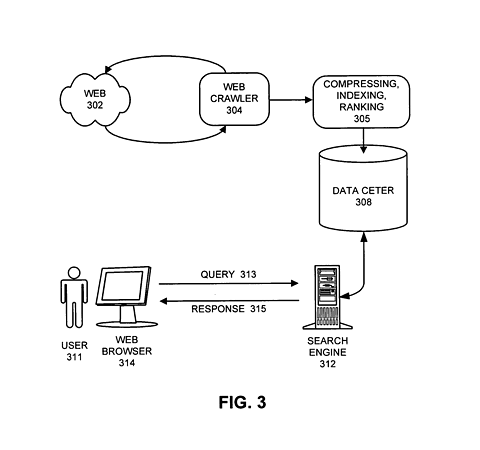

Created by Google founders Larry Page and Sergey Brin, PageRank is an algorithm based on the combined relative strengths of all the hyperlinks on the Internet.

Most people argue that the name was based on Larry Page’s surname, whilst others suggest “Page” refers to a web page. Both positions are likely true, and the overlap was probably intentional.

When Page and Brin were at Stanford University, they wrote a paper entitled “The PageRank Citation Ranking: Bringing Order to the Web.”

Published in January 1999, the paper demonstrates a relatively simple algorithm for evaluating the strength of web pages.

The paper went on to become a patent in the U.S. (but not in Europe, where mathematical formulas are not patentable).

Image from patents.google.com, April 2023

Stanford University owns the patent and has assigned it to Google. The patent is currently due to expire in 2027.

Image from patents.google.com, April 2023

The History Of How PageRank Evolved

During their time at Stanford in the late 1990s, both Brin and Page were looking at information retrieval methods.

At that time, using links to work out how “important” each page was relative to another was a revolutionary way to order pages. It was computationally difficult but by no means impossible.

The idea quickly turned into Google, which at that time was a minnow in the world of search.

There was so much institutional belief in Google’s approach from some parties that the business initially launched its search engine with no ability to earn revenue.

And while Google (known at the time as “BackRub”) was the search engine, PageRank was the algorithm it used to rank pages in the search engine results pages (SERPs).

The Google Dance

One of the challenges of PageRank was that the math, whilst simple, needed to be iteratively processed. The calculation runs multiple times, over every page and every link on the Internet. At the turn of the millennium, this math took several days to process.

The Google SERPs moved up and down during that time. These changes were often erratic, as new PageRanks were being calculated for every page.

This was known as the “Google Dance,” and it notoriously stopped SEO pros of the day in their tracks every time Google started its monthly update.

(The Google Dance later became the name of an annual party that Google ran for SEO experts at its headquarters in Mountain View.)

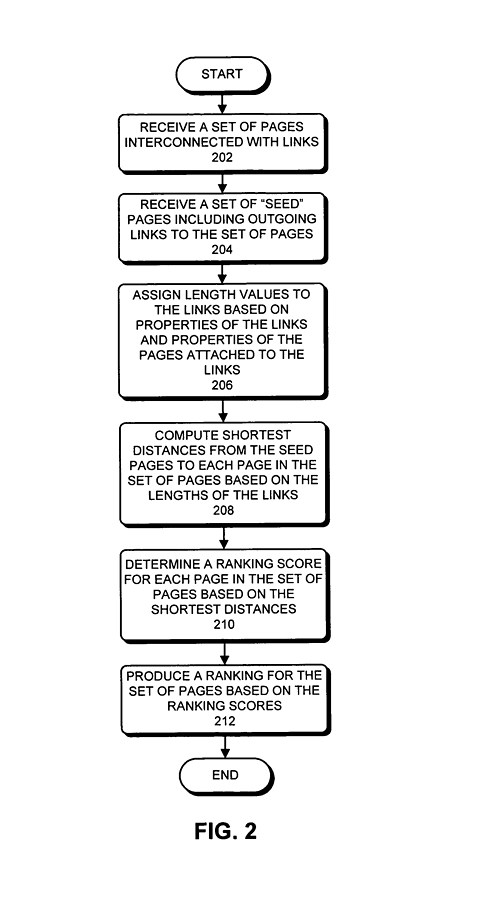

Trusted Seeds

A later iteration of PageRank introduced the idea of a “trusted seed” set to start the algorithm rather than giving every page on the Internet the same initial value.
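The article doesn't say exactly how the seed set is wired in. One common way to express the idea, used in TrustRank-style variants, is to send the “random jump” share only to trusted seed pages, so a page can only gain score through links that trace back to those seeds. The sketch below assumes that approach; the function name, the example graph, the seed list, and d = 0.85 are all illustrative, not Google's implementation.

```python
def seeded_pagerank(links, seeds, d=0.85, iterations=100):
    """PageRank where random jumps land only on a trusted seed set.

    links: {page: [pages it links to]}; every page needs at least one
    outlink and every target must also be a key. seeds: a hypothetical,
    hand-picked set of pages assumed to be trustworthy.
    """
    pages = list(links)
    # The "random jump" share goes only to seeds instead of to everyone.
    jump = {p: (1.0 / len(seeds) if p in seeds else 0.0) for p in pages}
    # Start from the seeds as well. With damping, starting values alone
    # wash out as the iteration converges, so the bias must live in `jump`.
    pr = dict(jump)
    for _ in range(iterations):
        new = {p: (1 - d) * jump[p] for p in pages}
        for page, outlinks in links.items():
            for target in outlinks:
                # Each page passes a damped, evenly split share along its links.
                new[target] += d * pr[page] / len(outlinks)
        pr = new
    return pr


web = {
    "seed": ["good"],
    "good": ["seed"],
    "spam1": ["spam2"],  # a link farm that only links to itself
    "spam2": ["spam1"],
}
scores = seeded_pagerank(web, seeds={"seed"})
print(scores)
```

In this toy graph the two spam pages link only to each other, so no score ever reaches them from the seed and they finish at zero, while the page linked from the seed receives a share.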

Reasonable Surfer

Another iteration of the model introduced the idea of a “reasonable surfer.”

This model suggests that the PageRank of a page might not be shared evenly with the pages it links out to – but could weight the relative value of each link based on how likely a user might be to click on it.
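A minimal sketch of the difference, assuming the click likelihood of each link can be expressed as a numeric weight. How Google actually estimated those likelihoods isn't described here, so the link names and weights below are hypothetical, and the damping factor is left out to keep the split visible.

```python
def distribute_weighted(score, click_weights):
    """Split one page's PageRank across its outlinks in proportion to how
    likely each link is to be clicked, rather than evenly.

    click_weights: {linked_url: relative click likelihood}. The weights are
    hypothetical inputs; damping is ignored to keep the split visible.
    """
    total = sum(click_weights.values())
    return {url: score * weight / total for url, weight in click_weights.items()}


# Hypothetical page with a prominent in-content link and two minor ones.
weighted = distribute_weighted(1.0, {"/main-article": 6, "/sidebar": 3, "/footer-terms": 1})
# The original model is the special case where every link has the same weight.
even = distribute_weighted(1.0, {"/main-article": 1, "/sidebar": 1, "/footer-terms": 1})
print(weighted)
print(even)
```

With these made-up weights the main link passes on 60% of the score and the footer link 10%, whereas the even split gives each link a third.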

The Retreat Of PageRank

Google’s algorithm was initially believed to be “unspam-able” internally since the importance of a page was dictated not just by its content but also by a sort of “voting system” generated by links to the page.

Google’s confidence did not last, however.

PageRank started to become problematic as the backlink industry grew. So Google withdrew it from public view, but continued to rely on it for its ranking algorithms.

The PageRank Toolbar was withdrawn by 2016, and eventually, all public access to PageRank was curtailed. But by this time, Majestic (an SEO tool), in particular, had been able to correlate its own calculations quite well with PageRank.

Google spent many years discouraging SEO professionals from manipulating links, both through its “Google Guidelines” documentation and through advice from its spam team, which was headed up by Matt Cutts until January 2017.

Google’s algorithms were also changing during this time.

The company was relying less on PageRank. Following its 2010 purchase of Metaweb, whose Freebase knowledge base helped seed the Knowledge Graph, Google started to index the world’s information in different ways.

Toolbar PageRank Vs. PageRank

Google was initially so proud of its algorithm that it was happy to publicly share the result of its calculation with anyone who wanted to see it.

The most notable representation was a toolbar extension for browsers like Firefox, which showed a score between 0 and 10 for every page on the Internet.

In truth, PageRank has a much wider range of scores, but 0-10 gave SEO pros and consumers an instant way to assess the importance of any page on the Internet.
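Google never published how raw PageRank values were squeezed into the toolbar’s 0 to 10 range. SEO practitioners commonly assumed the scale was logarithmic, so each extra toolbar point required many times more raw PageRank. The sketch below only illustrates that assumed shape; the function name, the base of 8, and the floor value are arbitrary choices, not Google’s figures.

```python
import math


def toolbar_score(raw, base=8.0, floor=1e-3):
    """Map a raw PageRank value onto a 0-10 display scale.

    Hypothetical: assumes a logarithmic scale where each extra point needs
    `base` times more raw PageRank, starting from `floor`. Google never
    published its actual mapping; base and floor are arbitrary.
    """
    if raw <= floor:
        return 0
    # Count how many factors of `base` the raw value sits above the floor.
    return min(10, int(math.log(raw / floor, base)))


for raw in (1e-3, 1.0, 1e6, 1e8):
    print(raw, toolbar_score(raw))
```

The point of the sketch is the compression: raw values spanning eleven orders of magnitude collapse into a handful of integer steps, capped at 10.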

The PageRank Toolbar made the algorithm extremely visible, which also came with complications. In particular, it made clear that links were the easiest way to “game” Google.

The more links (or, more accurately, the better the links), the better a page could rank in Google’s SERPs for any targeted keyword.

This led to a secondary market in which links were bought and sold, priced according to the PageRank of the URL on which the link was placed.

This problem was exacerbated when Yahoo launched a free tool called Yahoo Site Explorer, which let anyone find the links pointing to any given page.

Later, two tools – Moz and Majestic – built on the free option by creating their own indexes of the Internet and evaluating links separately.

How PageRank Revolutionized Search

Other search engines relied heavily on analyzing the content on each page individually. These methods had little way to tell an influential page apart from one simply written with random (or manipulative) text.

This meant that the retrieval methods of other search engines were extremely easy for SEO pros to manipulate.

Google’s PageRank algorithm, then, was revolutionary.

Combined with a relatively simple concept of “nGrams” to help establish relevancy, Google found a winning formula.
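For readers new to the term, an n-gram is simply a run of n consecutive words (or characters). The article doesn’t describe how Google used them for relevancy, so this sketch only shows what word n-grams look like; the function name is illustrative.

```python
def word_ngrams(text, n=2):
    """Return every run of n consecutive words in text, lowercased."""
    words = text.lower().split()
    # Slide a window of n words across the text, one word at a time.
    return [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]


print(word_ngrams("Bringing Order to the Web"))
# ['bringing order', 'order to', 'to the', 'the web']
```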

It soon overtook the main incumbents of the day, such as AltaVista and Inktomi (which powered MSN, amongst others).

By operating at a page level, Google also found a much more scalable solution than the directory-based approach adopted by Yahoo and later DMOZ – although DMOZ (also called the Open Directory Project) was initially able to provide Google with an open-source directory of its own.

How PageRank Works

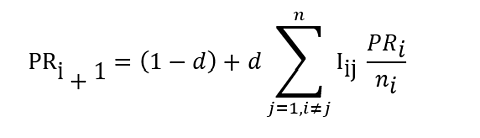

The formula for PageRank comes in a number of forms but can be explained in a few sentences.

Initially, every page on the Internet is given an estimated PageRank score. This could be any number. Historically, PageRank was presented to the public as a score between 0 and 10, but in practice, the estimates do not have to start in this range.

The PageRank for that page is then divided by the number of links out of the page, resulting in a smaller fraction.

The PageRank is then distributed out to the linked pages – and the same is done for every other page on the Internet.

Then, for the next iteration of the algorithm, the new PageRank estimate for each page is the sum of all the fractions it receives from the pages that link to it.

The formula also contains a “damping factor,” which was described as the chance that a person surfing the web might stop surfing altogether.

Before each subsequent iteration of the algorithm starts, the proposed new PageRank is reduced by the damping factor.

This methodology is repeated until the PageRank scores reach a settled equilibrium. The resulting numbers were then generally transposed into a more recognizable range of 0 to 10 for convenience.
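The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Google’s implementation: it follows the common textbook convention in which d (here 0.85) is the probability of following a link, so “reducing by the damping factor” means multiplying by d, and the remaining (1 - d) is handed out evenly as a “random jump” share. It also assumes every page has at least one outgoing link and that every link target appears as a key in the dictionary.

```python
def pagerank(links, d=0.85, tol=1e-10, max_iter=100):
    """Iterative PageRank over {page: [pages it links to]}.

    Assumptions: every page has at least one outlink, every link target
    is also a key in `links`, and d is the probability of following a link.
    """
    pages = list(links)
    n = len(pages)
    # Step 1: every page starts with the same estimate.
    pr = {p: 1.0 / n for p in pages}
    for _ in range(max_iter):
        # Each page gets the (1 - d) / n "random jump" share up front.
        new = {p: (1 - d) / n for p in pages}
        for page, outlinks in links.items():
            # Step 2: divide the page's score by its number of outlinks.
            share = pr[page] / len(outlinks)
            for target in outlinks:
                # Step 3: pass each fraction on, reduced by the damping factor.
                new[target] += d * share
        # Step 4: stop once the scores settle into an equilibrium.
        converged = sum(abs(new[p] - pr[p]) for p in pages) < tol
        pr = new
        if converged:
            break
    return pr


web = {"A": ["B", "C"], "B": ["C"], "C": ["A"]}
for page, score in sorted(pagerank(web).items()):
    print(page, round(score, 3))
```

In this toy graph, C ends up with the highest score because both A and B link to it. The scores also keep summing to 1, because in this convention the total is preserved whenever every page has at least one outlink.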

One way to represent this mathematically is:

Image from author, April 2023

Where:

- PR = PageRank in the next iteration of the algorithm.

- d = damping factor.

- j = the page number on the Internet (if every page had a unique number).

- n = total number of pages on the Internet.

- i = the iteration of the algorithm (initially set as 0).
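Because the formula itself was published as an image, here is one common textbook way to write the same update. It may differ in notation from the author’s image. B(j), the set of pages that link to page j, and L(k), the number of outgoing links on page k, are symbols introduced here. Here d is taken as the probability of following a link (often 0.85), so the chance of abandoning the current path is 1 - d. Starting every page at 1/n is one common choice of initial value.

```latex
PR_{i+1}(j) = \frac{1 - d}{n} + d \sum_{k \in B(j)} \frac{PR_i(k)}{L(k)},
\qquad PR_0(j) = \frac{1}{n}
```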

The formula can also be expressed in Matrix form.
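Under common textbook assumptions (d is the probability of following a link, and every page has at least one outgoing link), the matrix form stacks the n scores into a vector and collects the links into an n × n matrix M. The symbols M, L(k) (the number of outgoing links on page k), and the all-ones vector 1 are notation introduced here for illustration.

```latex
\mathbf{PR}_{i+1} = \frac{1 - d}{n}\,\mathbf{1} + d\,M\,\mathbf{PR}_i,
\qquad
M_{jk} =
\begin{cases}
  1 / L(k) & \text{if page } k \text{ links to page } j,\\
  0 & \text{otherwise.}
\end{cases}
```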

Problems And Iterations To The Formula

The formula has some challenges.

If a page does not link out to any other page, then the formula will not reach an equilibrium.

To deal with this, the PageRank of such a page is distributed amongst every page on the Internet. In this way, even a page with no incoming links could get some PageRank – but it would not accumulate enough to be significant.
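Here is a minimal sketch of that fix, assuming the common textbook convention in which d = 0.85 is the probability of following a link. On each round, the score held by pages with no outlinks is pooled and shared evenly across every page. The function name and example graph are hypothetical. In the example, page D has no incoming links but still ends up with a small, non-zero score, as described above.

```python
def pagerank_with_dangling(links, d=0.85, iterations=100):
    """Iterative PageRank that tolerates pages with no outlinks.

    links: {page: [pages it links to]}; an empty list marks a dangling page.
    Every link target must also be a key. d is the probability of
    following a link (assumed textbook convention).
    """
    pages = list(links)
    n = len(pages)
    pr = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Pool the score currently held by dangling pages...
        dangling = sum(pr[p] for p in pages if not links[p])
        # ...and share it evenly with every page, alongside the random-jump share.
        base = (1 - d) / n + d * dangling / n
        new = {p: base for p in pages}
        for page, outlinks in links.items():
            for target in outlinks:
                new[target] += d * pr[page] / len(outlinks)
        pr = new
    return pr


# Hypothetical graph: C links nowhere, D has no incoming links.
web = {"A": ["B"], "B": ["C"], "C": [], "D": ["A"]}
scores = pagerank_with_dangling(web)
print(scores, sum(scores.values()))
```

Without the pooling step, the score that reaches C would simply leak away on every round. With it, the total stays at 1.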

Another less documented challenge is that newer pages, whilst potentially more important than older pages, will have a lower PageRank. This means that over time, old content can have a disproportionately high PageRank.

The time a page has been live is not factored into the algorithm.

How PageRank Flows Between Pages

If a page starts with a value of 5 and has 10 links out, then every page it links to is given 0.5 PageRank (less the damping factor).
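The arithmetic in that example can be written as a tiny function. It reads “less the damping factor” as scaling by d, which is how the textbook formula applies it. The value d = 0.85 and the function name are assumptions, since the article doesn’t specify them.

```python
def damped_share(score, outlink_count, d=0.85):
    """PageRank passed along each outgoing link: the page's score split
    evenly across its outlinks, then scaled by the (assumed) damping factor."""
    return d * score / outlink_count


print(damped_share(5, 10))         # 5 / 10 = 0.5, scaled by 0.85
print(damped_share(5, 10, d=1.0))  # 0.5: no damping applied
```

With d = 0.85, each of the ten linked pages receives 0.425 rather than the undamped 0.5.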

In this way, the PageRank flows around the Internet between iterations.

As new pages come onto the Internet, they start with only a tiny amount of PageRank. But as other pages start to link to these pages, their PageRank increases over time.

Is PageRank Still Used?

Although public access to PageRank was removed in 2016, it is believed the score is still available to search engineers within Google.

A leak of the factors used by Yandex showed that PageRank remained a factor that it could use.

Google engineers have suggested that the original form of PageRank was replaced with a new approximation that requires less processing power to calculate. Whilst the formula is less important in how Google ranks pages, it remains a constant for each web page.

And regardless of what other algorithms Google might choose to call upon, PageRank likely remains embedded in many of the search giant’s systems to this day.

Dixon explains how PageRank works in more detail in this video:


Featured Image: VectorMine/Shutterstock


Google On Hyphens In Domain Names

Google’s John Mueller answered a question on Reddit about why people don’t use hyphens in domain names, and whether there is something concerning about them that people might be missing.

Domain Names With Hyphens For SEO

I’ve been working online for 25 years, and I remember when using hyphens in domains was something that affiliates did for SEO, back when Google was still influenced by keywords in the domain, the URL, and basically anywhere on the webpage. It wasn’t something that everyone did; it was mainly popular with some affiliate marketers.

Another reason for choosing domain names with keywords in them was that site visitors tended to convert at a higher rate because the keywords essentially prequalified the site visitor. I know from experience how useful two-keyword domains (and one-word domain names) are for conversions, as long as they don’t have hyphens in them.

One reason hyphenated domain names fell out of favor is that they look untrustworthy, which can work against conversion rates because trustworthiness is an important factor for conversions.

Lastly, hyphenated domain names look tacky. Why go with tacky when a brandable domain is easier for building trust and conversions?

Domain Name Question Asked On Reddit

This is the question asked on Reddit:

“Why don’t people use a lot of domains with hyphens? Is there something concerning about it? I understand when you tell it out loud people make miss hyphen in search.”

And this is Mueller’s response:

“It used to be that domain names with a lot of hyphens were considered (by users? or by SEOs assuming users would? it’s been a while) to be less serious – since they could imply that you weren’t able to get the domain name with fewer hyphens. Nowadays there are a lot of top-level-domains so it’s less of a thing.

My main recommendation is to pick something for the long run (assuming that’s what you’re aiming for), and not to be overly keyword focused (because life is too short to box yourself into a corner – make good things, course-correct over time, don’t let a domain-name limit what you do online). The web is full of awkward, keyword-focused short-lived low-effort takes made for SEO — make something truly awesome that people will ask for by name. If that takes a hyphen in the name – go for it.”

Pick A Domain Name That Can Grow

Mueller is right about picking a domain name that won’t lock your site into one topic. When a site grows in popularity, the natural growth path is to expand the range of topics the site covers. But that’s hard to do when the domain is locked into one rigid keyword phrase. That’s one of the downsides of picking a “Best + keyword + reviews” domain: those domains can’t grow bigger, and they look tacky, too.

That’s why I’ve always recommended brandable domains that are memorable and encourage trust in some way.

Read the post on Reddit:

Read Mueller’s response here.

Featured Image by Shutterstock/Benny Marty


Reddit Post Ranks On Google In 5 Minutes

Google’s Danny Sullivan disputed the assertions made in a Reddit discussion that Google is showing a preference for Reddit in the search results. But a Redditor’s example proves that it’s possible for a Reddit post to rank in the top ten of the search results within minutes and to actually improve rankings to position #2 a week later.

Discussion About Google Showing Preference To Reddit

A Redditor (gronetwork) complained that Google is sending so many visitors to Reddit that the server is struggling with the load, and shared an example showing that it can take only minutes for a Reddit post to rank in the top ten.

That post was part of a 79-post Reddit thread in which many in the r/SEO subreddit complained about Google allegedly giving too much preference to Reddit over legitimate sites.

The person who did the test (gronetwork) wrote:

“…The website is already cracking (server down, double posts, comments not showing) because there are too many visitors.

…It only takes few minutes (you can test it) for a post on Reddit to appear in the top ten results of Google with keywords related to the post’s title… (while I have to wait months for an article on my site to be referenced). Do the math, the whole world is going to spam here. The loop is completed.”

Reddit Post Ranked Within Minutes

Another Redditor asked whether they had actually tested that it takes “a few minutes” to rank in the top ten, and gronetwork answered that they had tested it with a post titled “Google SGE Review.”

gronetwork posted:

“Yes, I have created for example a post named “Google SGE Review” previously. After less than 5 minutes it was ranked 8th for Google SGE Review (no quotes). Just after Washingtonpost.com, 6 authoritative SEO websites and Google.com’s overview page for SGE (Search Generative Experience). It is ranked third for SGE Review.”

It’s true. Not only does that specific post (Google SGE Review) rank in the top 10, but it started out in position 8 and has since improved its ranking: it is currently listed beneath the number one result for the search query “SGE Review.”

Screenshot Of Reddit Post That Ranked Within Minutes

Anecdotes Versus Anecdotes

Okay, the above is just one anecdote. But it’s a heck of an anecdote because it proves that it’s possible for a Reddit post to rank within minutes and get stuck in the top of the search results over other possibly more authoritative websites.

hankschrader79 shared that Reddit posts outrank Toyota Tacoma forums for a phrase related to mods for that truck.

Google’s Danny Sullivan responded to that post and to the entire discussion, disputing the claim that Reddit is always prioritized over other forums.

Danny wrote:

“Reddit is not always prioritized over other forums. [super vhs to mac adapter] I did this week, it goes Apple Support Community, MacRumors Forum and further down, there’s Reddit. I also did [kumo cloud not working setup 5ghz] recently (it’s a nightmare) and it was the Netgear community, the SmartThings Community, GreenBuildingAdvisor before Reddit. Related to that was [disable 5g airport] which has Apple Support Community above Reddit. [how to open an 8 track tape] — really, it was the YouTube videos that helped me most, but it’s the Tapeheads community that comes before Reddit.

In your example for [toyota tacoma], I don’t even get Reddit in the top results. I get Toyota, Car & Driver, Wikipedia, Toyota again, three YouTube videos from different creators (not Toyota), Edmunds, a Top Stories unit. No Reddit, which doesn’t really support the notion of always wanting to drive traffic just to Reddit.

If I guess at the more specific query you might have done, maybe [overland mods for toyota tacoma], I get a YouTube video first, then Reddit, then Tacoma World at third — not near the bottom. So yes, Reddit is higher for that query — but it’s not first. It’s also not always first. And sometimes, it’s not even showing at all.”

hankschrader79 conceded that they were generalizing when they wrote that Google always prioritized Reddit. But they also insisted that this didn’t diminish what they described as a fact: that Google’s “prioritization” of forum content has benefited Reddit more than actual forums.

Why Is The Reddit Post Ranked So High?

It’s possible that Google “tested” that Reddit post in position 8 within minutes and that user interaction signals indicated to Google’s algorithms that users prefer to see that Reddit post. If that’s the case, then it’s not a matter of Google showing a preference for Reddit posts; rather, users are showing the preference and the algorithm is responding to it.

Nevertheless, an argument can be made that user preferences for Reddit can be a manifestation of Familiarity Bias. Familiarity Bias is when people show a preference for things that are familiar to them. If a person is familiar with a brand because of all the advertising they were exposed to, then they may show a bias for that brand’s products over those of unfamiliar brands.

Users who are familiar with Reddit may choose Reddit because they don’t know the other sites in the search results, or because they believe Google ranks spammy, over-optimized websites and feel safer reading Reddit.

Google may be picking up on those user interaction signals that indicate a preference and satisfaction with the Reddit results but those results may simply be biases and not an indication that Reddit is trustworthy and authoritative.

Is Reddit Benefiting From A Self-Reinforcing Feedback Loop?

It may very well be that Google’s decision to prioritize user-generated content has started a self-reinforcing pattern that draws users into Reddit through the search results, and because the answers seem plausible, those users start to prefer Reddit results. When they’re exposed to more Reddit posts, their familiarity bias kicks in and they start to show a preference for Reddit. So what could be happening is that the users and Google’s algorithm are creating a self-reinforcing feedback loop.

Is it possible that Google’s decision to show more user generated content has kicked off a cycle where more users are exposed to Reddit which then feeds back into Google’s algorithm which in turn increases Reddit visibility, regardless of lack of expertise and authoritativeness?

Featured Image by Shutterstock/Kues


WordPress Releases A Performance Plugin For “Near-Instant Load Times”

WordPress released an official plugin that adds support for a cutting-edge technology called speculative loading, which can help boost site performance and improve the user experience for site visitors.

Speculative Loading

Rendering means constructing the webpage so that it can be displayed. When your browser downloads the HTML, images, and other resources and puts them together into a webpage, that’s rendering. Prerendering is putting that webpage together (rendering it) in the background, before the user actually navigates to it.

This plugin enables the browser to prerender the entire webpage that a user might navigate to next. It does that by anticipating which webpage the user might navigate to based on where they are hovering.

Chrome’s documentation recommends prerendering only when there is a high probability that the user will navigate to a page; Chrome’s own address bar, for example, only prerenders when that probability is greater than 80%. The official Chrome support page for prerendering explains:

“Pages should only be prerendered when there is a high probability the page will be loaded by the user. This is why the Chrome address bar prerendering options only happen when there is such a high probability (greater than 80% of the time).”

The same developer page also cautions that prerendering may not happen, depending on user settings, memory usage, and other scenarios (more details below about how analytics handles prerendering).

The Speculation Rules API solves a problem that previous solutions could not, because in the past they were simply prefetching resources like JavaScript and CSS rather than actually prerendering the entire webpage.

The official WordPress announcement explains it like this:

“Introducing the Speculation Rules API

The Speculation Rules API is a new web API that solves the above problems. It allows defining rules to dynamically prefetch and/or prerender URLs of certain structure based on user interaction, in JSON syntax—or in other words, speculatively preload those URLs before the navigation. This API can be used, for example, to prerender any links on a page whenever the user hovers over them.”

The official WordPress page about this new functionality describes it:

“The Speculation Rules API is a new web API… It allows defining rules to dynamically prefetch and/or prerender URLs of certain structure based on user interaction, in JSON syntax—or in other words, speculatively preload those URLs before the navigation.

This API can be used, for example, to prerender any links on a page whenever the user hovers over them. Also, with the Speculation Rules API, “prerender” actually means to prerender the entire page, including running JavaScript. This can lead to near-instant load times once the user clicks on the link as the page would have most likely already been loaded in its entirety. However that is only one of the possible configurations.”

The new WordPress plugin adds support for the Speculation Rules API. The Mozilla developer pages, a great resource for technical understanding of HTML, describe it like this:

“The Speculation Rules API is designed to improve performance for future navigations. It targets document URLs rather than specific resource files, and so makes sense for multi-page applications (MPAs) rather than single-page applications (SPAs).

The Speculation Rules API provides an alternative to the widely-available <link rel="prefetch"> feature and is designed to supersede the Chrome-only deprecated <link rel="prerender"> feature. It provides many improvements over these technologies, along with a more expressive, configurable syntax for specifying which documents should be prefetched or prerendered.”
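To make the JSON syntax concrete, here is a Python sketch that builds a `<script type="speculationrules">` tag using a document rule. The rule prerenders site links, skips a few excluded paths, and uses the “moderate” eagerness setting, which in Chrome roughly means starting when the user hovers over a link. This illustrates the general shape only and is not the exact markup the WordPress plugin emits. The function name, exclusion list, and defaults are hypothetical.

```python
import json


def speculation_rules_script(exclude=("/wp-admin/*", "/wp-login.php"), eagerness="moderate"):
    """Return an illustrative <script type="speculationrules"> tag.

    Uses a document rule: prerender links on the page that match "/*"
    (paths on this site) unless they match an excluded pattern. The
    exclusion list and defaults are hypothetical, not the plugin's output.
    """
    conditions = [{"href_matches": "/*"}]
    conditions += [{"not": {"href_matches": path}} for path in exclude]
    rules = {
        "prerender": [{
            "source": "document",
            "where": {"and": conditions},
            # "moderate": Chrome starts roughly when the user hovers a link.
            "eagerness": eagerness,
        }]
    }
    return ('<script type="speculationrules">\n'
            + json.dumps(rules, indent=2)
            + "\n</script>")


print(speculation_rules_script())
```

Browsers that don’t recognize this script type simply skip it, so emitting the tag is harmless where the API isn’t supported.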


Performance Lab Plugin

The new plugin was developed by the official WordPress performance team, which occasionally rolls out new plugins for users to test ahead of possible inclusion in WordPress core. So it’s a good opportunity to be among the first to try out new performance technologies.

By default, the new WordPress plugin prerenders “WordPress frontend URLs,” which are pages, posts, and archive pages. Its behavior can be fine-tuned in the settings under:

Settings > Reading > Speculative Loading

Browser Compatibility

The Speculation Rules API is supported in Chrome 108 and later; however, the specific rules used by the new plugin require Chrome 121 or higher. Chrome 121 was released in early 2024.

Browsers that do not support the API will simply ignore it, so the plugin has no effect on the user experience in those browsers.

Check out the new Speculative Loading WordPress plugin developed by the official core WordPress performance team.

How Analytics Handles Prerendering

A WordPress developer asked in the comments how analytics would handle prerendering, and someone else answered that it’s up to the analytics provider to detect a prerender and not count it as a page load or site visit.

Fortunately, both Google Analytics and Google Publisher Tag (GPT) are able to handle prerenders. The Chrome developers support page has a note about how analytics handles prerendering:

“Google Analytics handles prerender by delaying until activation by default as of September 2023, and Google Publisher Tag (GPT) made a similar change to delay triggering advertisements until activation as of November 2023.”

Possible Conflict With Ad Blocker Extensions

There are a couple of things to be aware of about this plugin, aside from the fact that it’s an experimental feature that requires Chrome 121 or higher.

A WordPress plugin developer commented that this feature may not work in browsers that are using the uBlock Origin ad-blocking extension.

Download the plugin:

Speculative Loading Plugin by the WordPress Performance Team

Read the announcement at WordPress

Speculative Loading in WordPress

