SEARCHENGINES

Listen To Google Talk About de-SEOing The Search Central Website

Google’s Martin Splitt, Gary Illyes, and Lizzi Sassman sat together on the latest Search Off the Record to talk about de-SEOing, which is roughly what we would call undoing over-optimization. It is an interesting listen. Much of it you have probably heard before, but it is interesting to hear Googlers who know intimately how Google Search works tackle this issue on their own site.


One note: they take credit for coining the name “de-SEOing,” but what they describe is what SEOs would call working on over-optimization, something both Gary (who was on the podcast) and Matt Cutts have talked about before. Here is where Gary mentioned it:

That is totally a thing, but I can’t think of a better name for it. It is literally optimizing so much that eventually it starts hurting

— Gary 鯨理/경리 Illyes (@methode) May 24, 2017

Before listening, it might make sense to read about Google rebranding Google Webmasters as Google Search Central, and then the progress report published a year later.

One final point: near the end, at around the 27-minute mark, Gary says he finds it annoying that he cannot use Google’s internal tools to debug these issues. He has to use the same public tools that you or I use, such as Google Search Console, because otherwise it would not be fair. He said:

And it’s also kind of annoying because when we are looking into how people reach those pages, I could use the internal tools. It could be very simple to do it. Like I could just hit up the debug interfaces that we have and tools and just look at how it happens. But we can’t do that. We actually have to use the same tools that anyone else, external to Google, has to use or could use. And it’s very annoying because the information is less obviously. I know why it’s less than what we have internally. Still annoying. But yeah, we will have to figure it out with Search Console and other similar tools.

Forum discussion at Twitter.


Daily Search Forum Recap: April 16, 2024

Here is a recap of what happened in the search forums today, through the eyes of the Search Engine Roundtable and other search forums on the web.

Google will fight site reputation abuse spam both algorithmically and with manual actions. Google is testing thumbs-up and thumbs-down buttons in product carousels. Google is testing a similar products carousel for Google Ads. Google Search updated its image documentation. Google AdSense has a new ad format named Ad intents.

Search Engine Roundtable Stories:


Google Will Fight Site Reputation Abuse Spam Both With Manual Actions & Algorithms

Google’s new spam algorithm update also introduced new spam policies, including the upcoming site reputation abuse policy, which won’t go into effect until May 2024. Google has confirmed it will fight site reputation abuse spam using both manual actions (humans) and algorithms (machines).

Google Search Tests Thumbs Up/Down Buttons In Product Grid Results

Google launched style recommendations with thumbs-up and thumbs-down buttons not long ago, after testing them in January. Now Google is showing these thumbs-up and thumbs-down buttons in the product grid search results, so it can see what you like or dislike and then show you more products that you do like.

Google Ads “Similar Product” Carousel

Google has a similar products section and carousel for Google Ads sponsored listings. We have seen similar products and similar shopping-related results in the organic (free) listings, but now I am seeing examples of a search ad carousel for “similar products.”

Clarification: Google Search Supports Images Referenced From src Attribute

Google has clarified in its image search help documentation that Google Search extracts images only from the src attribute of img tags. This is not new, but Google decided to update its documentation based on some questions it received about the topic.
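To make the clarification concrete, here is a minimal Python sketch, using only the standard library's `html.parser`, of an extractor that reads image URLs solely from the `src` attribute of `img` tags. It illustrates the rule as described and is not Google's code. The sample markup, including the `data-src` lazy-loading attribute and the CSS background image, is a hypothetical example.

```python
from html.parser import HTMLParser


class ImgSrcExtractor(HTMLParser):
    """Collects image URLs from the src attribute of <img> tags only.

    In this sketch, other places an image URL can appear (a data-src
    attribute set by a lazy-loading script, a CSS background image)
    are deliberately ignored.
    """

    def __init__(self) -> None:
        super().__init__()
        self.srcs: list[str] = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names before this call.
        if tag != "img":
            return
        for name, value in attrs:
            if name == "src" and value:  # skip missing or empty src
                self.srcs.append(value)


def extract_img_srcs(html: str) -> list[str]:
    parser = ImgSrcExtractor()
    parser.feed(html)
    parser.close()
    return parser.srcs


# Hypothetical markup for illustration.
sample = """
<img src="/photos/dog.jpg" alt="A dog">
<img data-src="/photos/cat.jpg" alt="Lazy-loaded cat">
<div style="background-image: url('/photos/bird.jpg')"></div>
"""
print(extract_img_srcs(sample))  # ['/photos/dog.jpg']
```

Only the dog photo is found: the lazy-loaded cat image never gets a `src` in the static HTML, and the bird image lives in CSS rather than in an `img` tag.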

Google AdSense New Ad Intents Formats – Links & Anchors In Content

Google AdSense announced a new auto ads format named “Ad intents.” Ad intents inserts links and anchors into existing text on the pages of your site; they lead to organic search results, with ads, related to your content. Yes, it takes your content and hyperlinks it to Google search results.

3-Wheel Tricycle At Google

Google’s Ann Arbor office has this 3-wheel tricycle that some Googlers have used over the years to get around the office. It looks like a hot pink Huffy. I spotted this recently on Instagram, but the photo is from 2016.

Other Great Search Threads:

- I don’t have an update to share at this time, but you should continue to use classic GMC for rules and supplemental feed support. Here’s how to switch back if needed, AdsLiaison on X

- In this case, I’ve been introducing a new metric; “likelihood to get search traffic” to see what we should add to XML sitemaps. Some pages might do incredibly well on other metrics, but simply, Joost de Valk on X

- It does have a title, and it loads a HTML page – so this seems normal. The JavaScript doesn’t seem to be loading well, so if you’re the site owner, I’d suggest reading ou, John Mueller on X

- News! Google Ads removed in some accounts the possibility to hover over a daily budget to edit it. You need to hover now and then click on “Edit budget”. Why changing things to less user-friendly ones, Thomas Eccel on X

- The time has come! Registration for the Zürich Product Experts Summit has now opened for eligible PEs in Europe. Virtual registration will follow in May. Check your inboxes and the KB for more details., Google’s Product Experts Program on X

- When I want to add positive search terms as exact match, I first add them quickly as broad, then bulk switch them over to exact match. lately I’ve been getting this error and the only way I can switch the keywords is by using Ads Editor., Greg on X

- Yeah, quantity says nothing about quality and even less about user value or business value. Sometimes the solution to a “crawl budget problem” is not to make the server faster & search engines, John Mueller on X

- Which the best method to link to the alternative language of a website, WebmasterWorld


Feedback:

Have feedback on this daily recap? Let me know on Twitter @rustybrick or @seroundtable, or on Threads, Mastodon and Bluesky. You can also follow us on Facebook and on Google News, and make sure to subscribe to the YouTube channel, Apple Podcasts, Spotify or Google Podcasts, or just contact us the old-fashioned way.


Daily Search Forum Recap: April 15, 2024

Here is a recap of what happened in the search forums today, through the eyes of the Search Engine Roundtable and other search forums on the web.

There was more Google core update volatility over the weekend. Google defended its statements about forums ranking for almost everything. Google responded to The Verge’s printer article mocking its search results. Google AdSense publishers are seeing really big earnings drops. Google crawl budget is allocated at the hostname level. Google threatened California over its proposed link tax bill, testing the removal of links to California-based publishers and pausing its news investments in the state.

Search Engine Roundtable Stories:


Weekend Google Core Ranking Volatility Taxing Site Owners

As I mentioned briefly in my Friday video recap, I was starting to see renewed chatter on Friday morning around more Google search ranking volatility. The chatter continued through Friday, into Saturday and today, so I figured I’d cover it and share some of what SEOs are saying over the weekend.

Google Responds To The Verge Mocking Its Search Rankings For Best Printer

Nilay Patel, editor-in-chief of The Verge, posted a new article intended both to rank for [best printer] in Google Search and to mock Google for how he can game its search rankings using AI-generated content, while throwing in some affiliate links. Google’s John Mueller responded, saying, “People seem to really enjoy it.”

Google Goes On Defensive On Its Search Quality & Forum Results Statements

Recently, we covered some of Google’s rationale for ranking forums like Reddit and Quora so well in the Discussion and Forums box for many queries. Just a few days ago, I wrote about how sad I was to see Google ranking some dangerous and potentially harmful forum threads for health-related queries.

Google Threatens California: Tests Removing Links To Publishers & Pauses Investments

On Friday, Google responded to the California Journalism Preservation Act (CJPA), a pending bill in the California state legislature that would require Google to pay publishers a link tax, by testing the removal of links to California-based publishers and pausing its investments in news publishers within the state.

Google Crawl Budget Is Allocated By Hostname

Google gives every hostname its own allocated crawl budget. That means each domain, subdomain and so on has its own crawl budget.
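To show what hostname-level allocation means in practice, here is a minimal Python sketch that groups a list of URLs by hostname using the standard library's `urllib.parse.urlsplit`. It does not model how Google sets or spends crawl budget. The URLs are hypothetical, and the grouping only shows which URLs would share a budget if the budget is tied to the hostname.

```python
from collections import Counter
from urllib.parse import urlsplit


def urls_per_hostname(urls: list[str]) -> Counter:
    """Count URLs per hostname.

    Under a per-hostname allocation, each key in the result would be a
    separate crawl budget bucket. Note that example.com, www.example.com
    and shop.example.com are three distinct hostnames.
    """
    counts: Counter = Counter()
    for url in urls:
        host = urlsplit(url).hostname  # lowercased; None if no host part
        if host:
            counts[host] += 1
    return counts


# Hypothetical URLs for illustration.
urls = [
    "https://www.example.com/",
    "https://www.example.com/blog/post-1",
    "https://example.com/about",
    "https://shop.example.com/product/42",
]
for host, n in urls_per_hostname(urls).items():
    print(f"{host}: {n} URL(s)")
```

Grouping by `hostname` rather than by registrable domain is the key choice here. It is what makes `www.example.com` and `shop.example.com` land in separate buckets.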

Google AdSense Publishers Reporting Huge RPM Earnings Drops

Many Google AdSense publishers have been reporting massive declines in their earnings and RPMs (page revenue per thousand impressions) since late February. This comes a couple of weeks after we reported that the switch from CPC to CPM bidding in AdSense did not have a negative impact on publisher revenue.
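RPM is conventionally calculated as earnings divided by the number of page views (or impressions), multiplied by 1,000. The minimal Python sketch below shows how an RPM figure and the size of a drop in it can be computed. The numbers are made up for illustration and are not any publisher's actual data.

```python
def rpm(earnings: float, views: int) -> float:
    """Revenue per mille: earnings per 1,000 page views (or impressions)."""
    if views <= 0:
        raise ValueError("views must be positive")
    # Multiply before dividing so round inputs stay exact in floating point.
    return earnings * 1000 / views


def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    if before == 0:
        raise ValueError("before must be non-zero")
    return (after - before) / before * 100


# Hypothetical numbers for illustration only.
feb = rpm(earnings=50.0, views=10_000)  # 5.0
apr = rpm(earnings=25.0, views=10_000)  # 2.5
print(f"RPM {feb:.2f} -> {apr:.2f} ({percent_change(feb, apr):+.1f}%)")
```

With traffic held flat, halving earnings halves the RPM, a 50% drop.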

Google Android Figurine Display Case

Google’s Chicago office has dozens of Android figurines on display in this glass case. It looks like each one has a name and is labeled with details. I found this image on Instagram.

Other Great Search Threads:

- These things are done when they’re done, it’s hard to predict exact timelines., John Mueller on X

- Google just changed the layout for product detail window , also include the near by store in this, Khushal Bherwani on X

- Google Ads charging 0.01 for a few European countries, WebmasterWorld

- GPTBot got stuck in giant content farm, Orhan Kurulan on X

- I created a chatbot with the Google Webmasters Documentation. Based on the current data, the tool has: 1. Over 15,000 requests 2. Over 3,000 users 3. I received support from @JohnMu and @g33konaut from Google itself, Dido Grigorov on X

- I am the new Paid Media News Writer of @sengineland ! Such an honour – can’t believe it! I get to work with the likes of @rustybrick and @MrDannyGoodwin!! Dreams do come tru, Anu Adegbola on X



Weekend Google Core Ranking Volatility

As I mentioned briefly in my Friday video recap, I was starting to see renewed chatter on Friday morning around more Google search ranking volatility, likely related to the ongoing Google March 2024 core update. The chatter continued through Friday, into Saturday and today, so I figured I’d cover it and share some of what SEOs are saying over the weekend.

We reported on volatility last Wednesday, April 10th, and now we are seeing more of it. As a reminder, some sites got hit super hard by this update, and no, it is not done yet. With this core update, we have still not seen any real recoveries for sites hit by the September 2023 helpful content update.

We are now 40 days and almost 40 nights since the update started rolling out and Passover is just around the corner. (sorry, had to…)

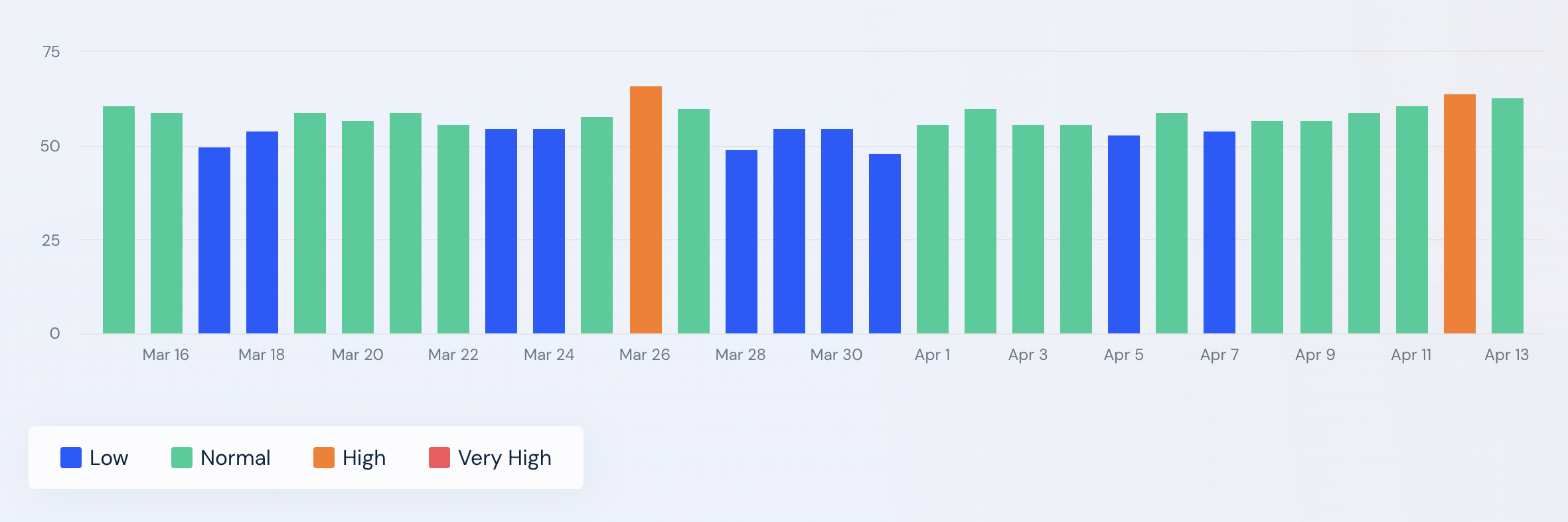

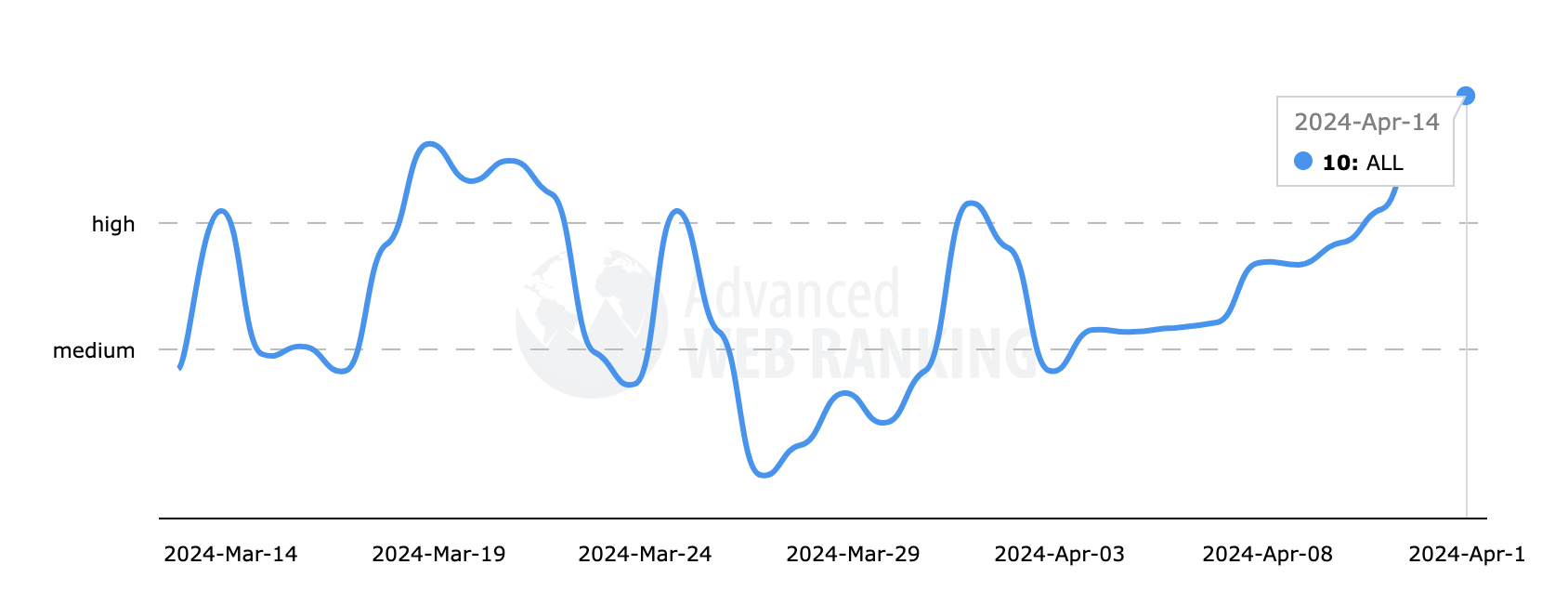

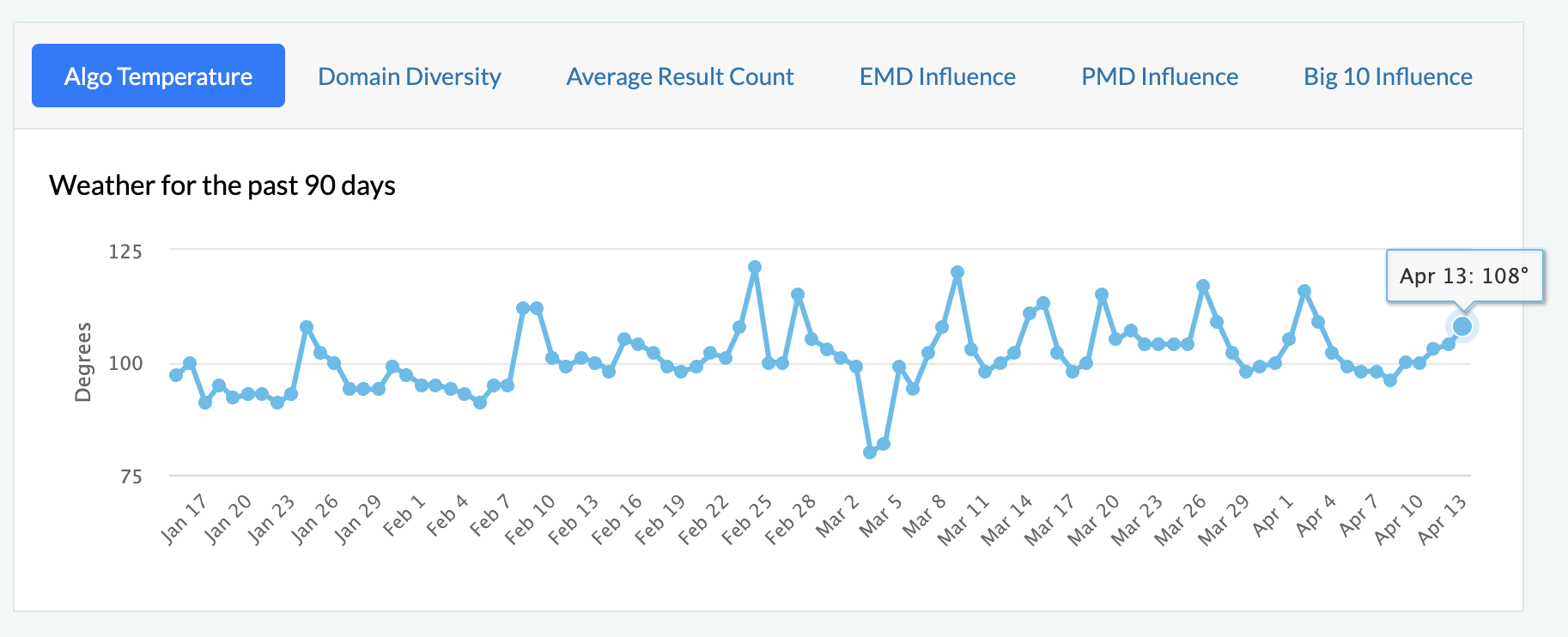

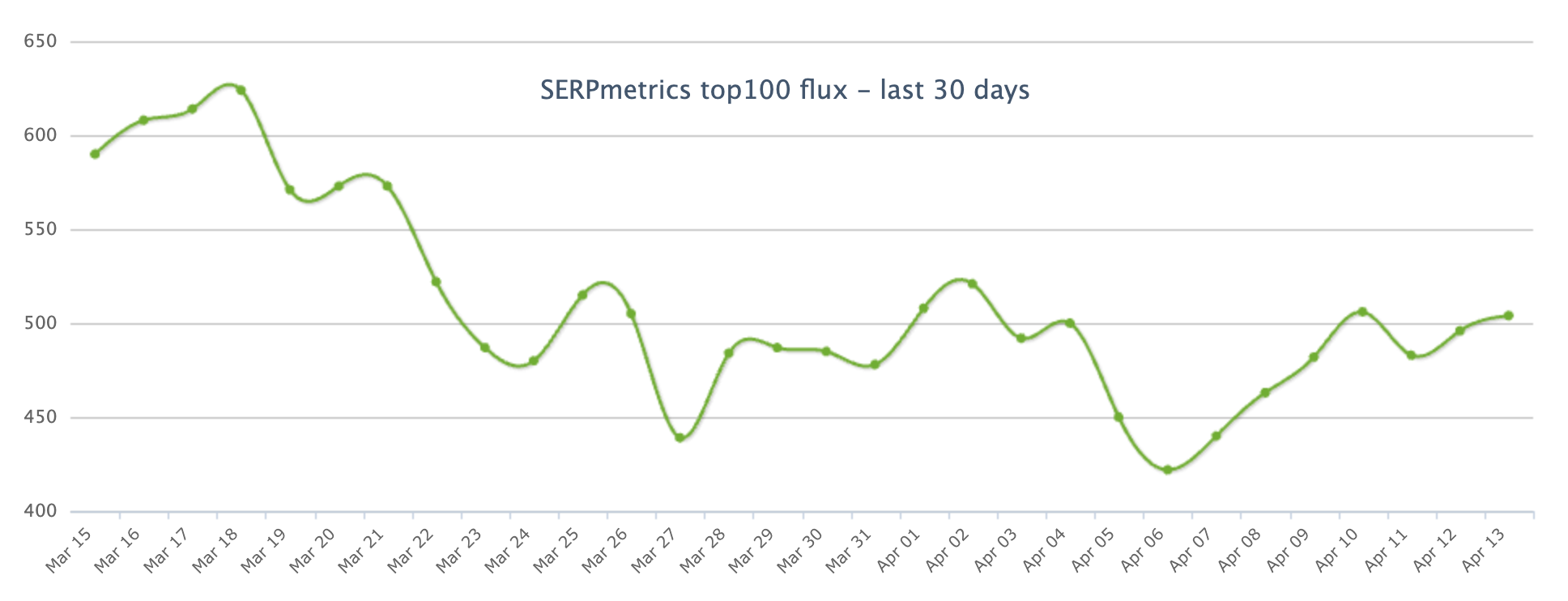

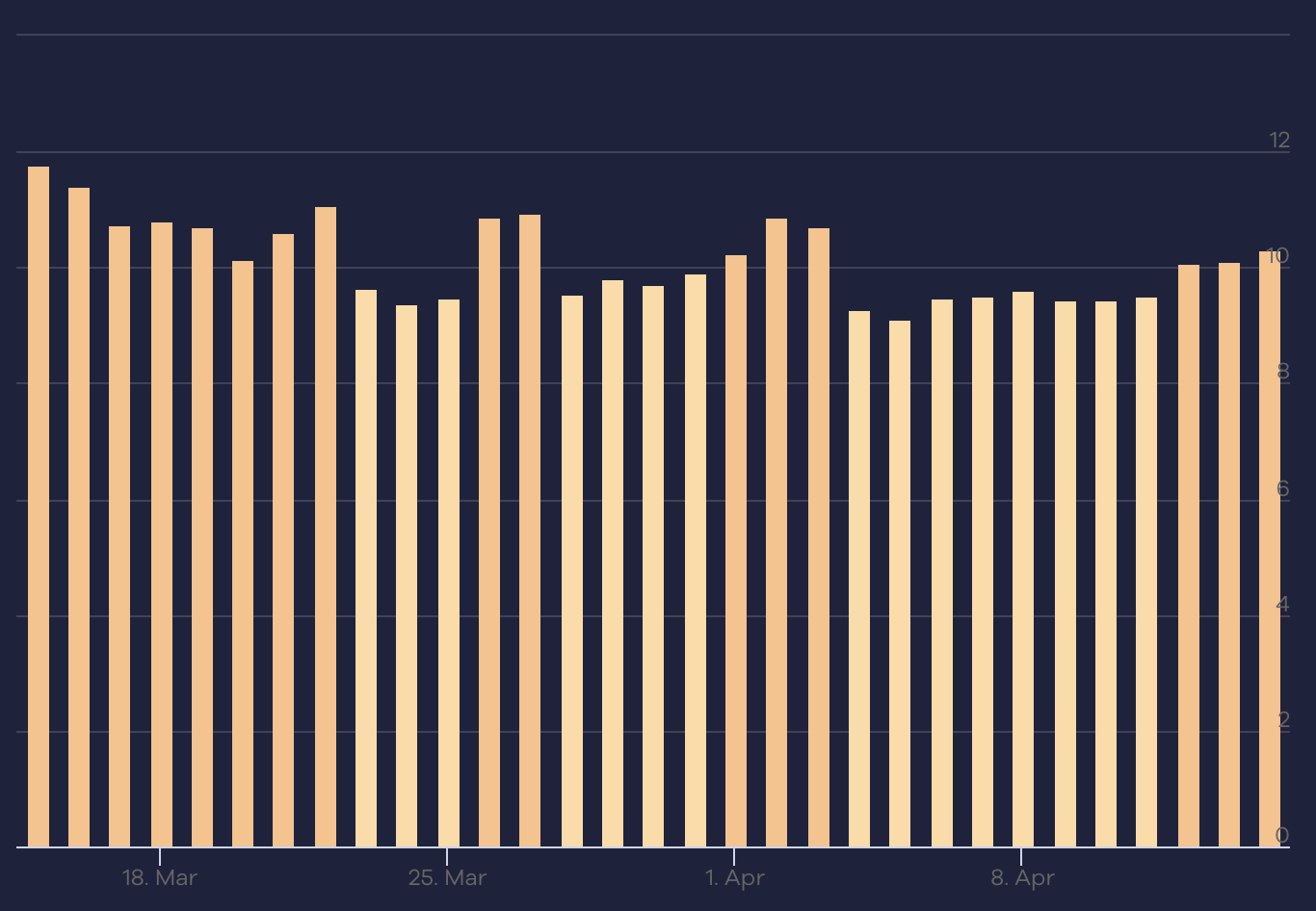

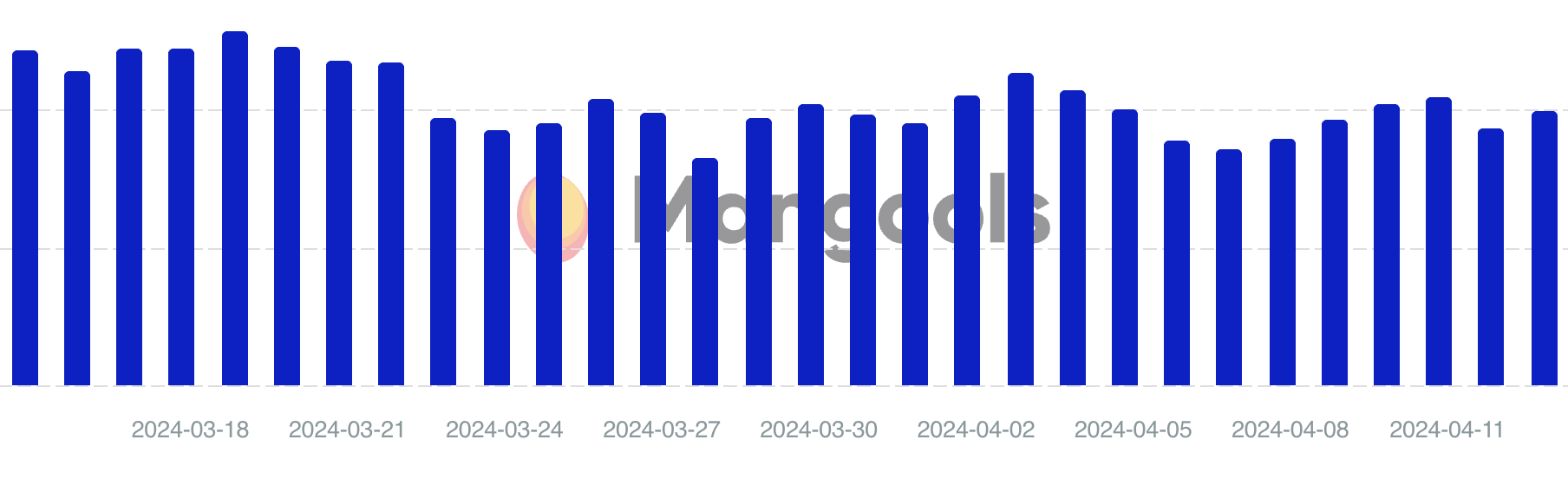

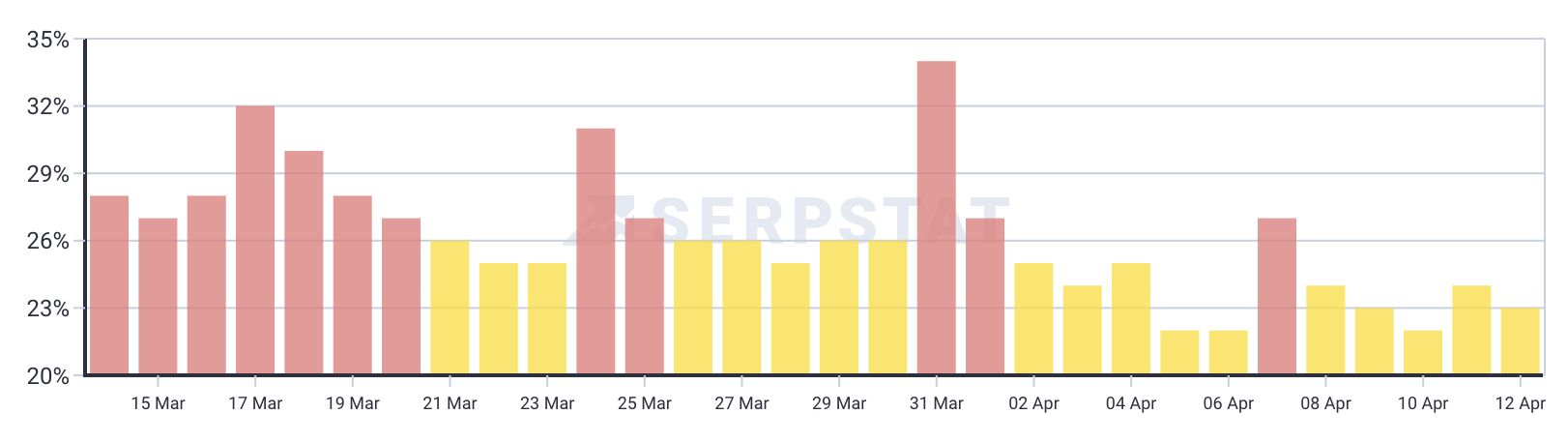

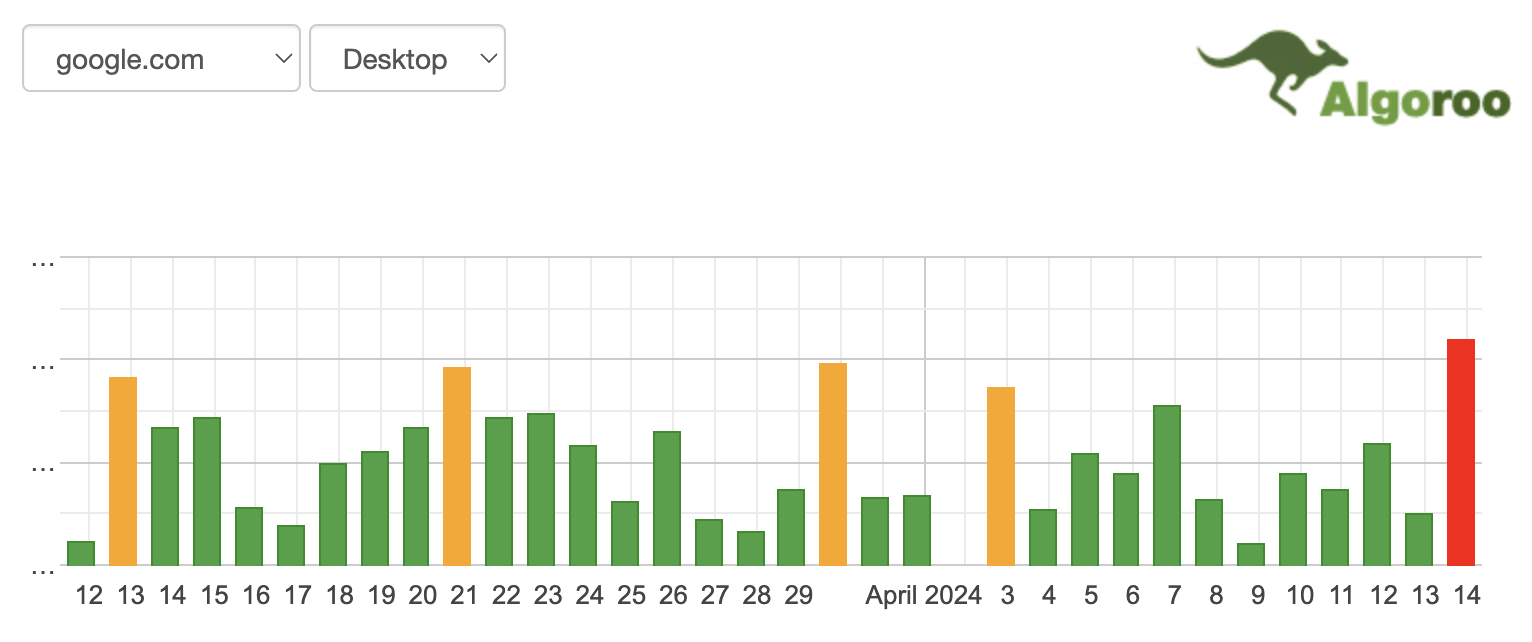

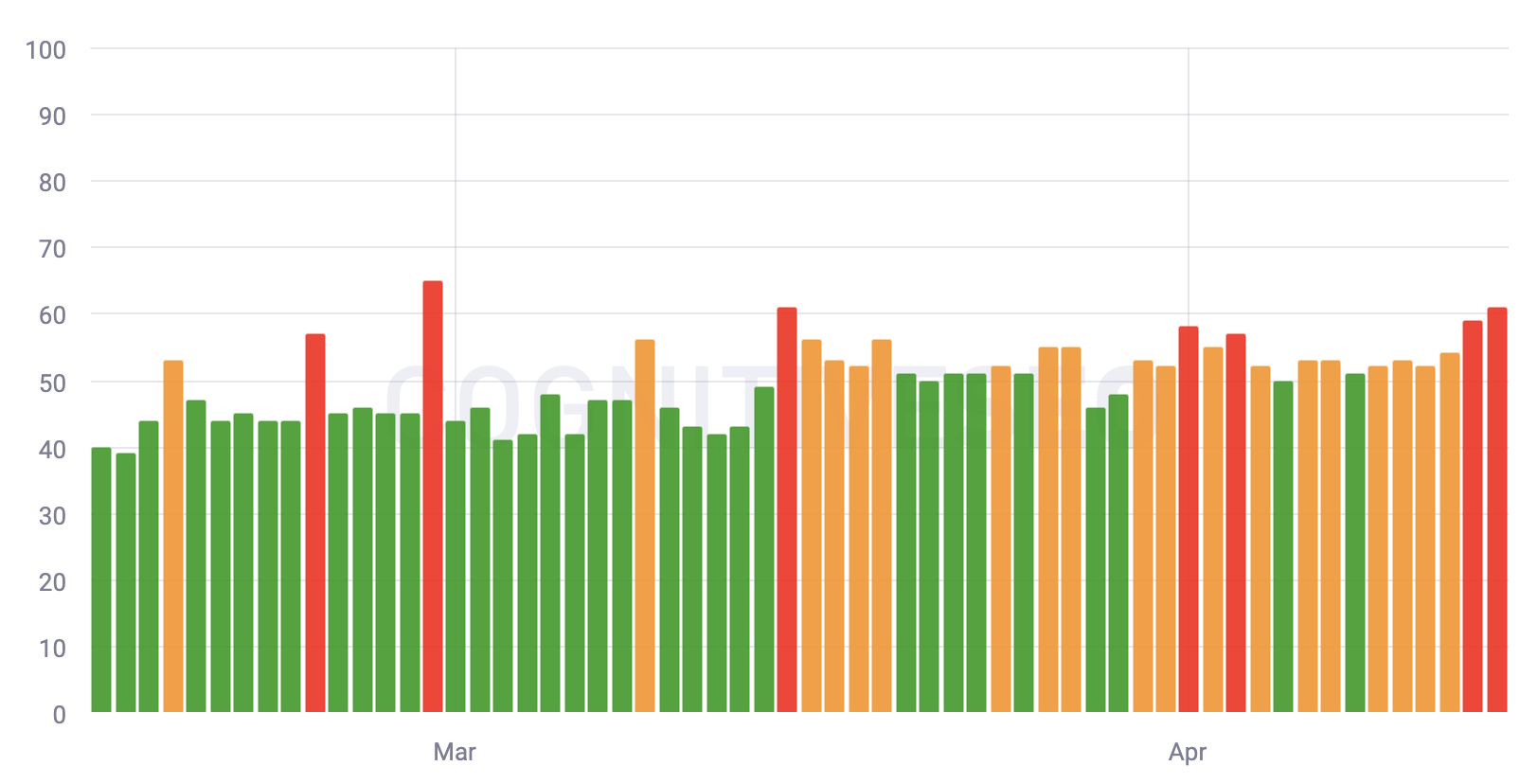

Both the Google ranking tracking tools and SEO chatter spiked over the past 48 hours.

SEO Chatter

Here is some of the chatter on social media, WebmasterWorld and comments here on this site over the past couple of days:

Glenn Gabe has been tracking the movement closely, comparing sites hit by previous core and helpful content updates. I find his shares very insightful. Here is his latest post from this morning:

He wrote:

Google Morning Google Land! This is the April 14 edition of “Core Update Notes”. I shared yesterday how the tools were all spiking and I picked up serious volatility across several sites I’m helping and tracking. Just wanted to share more about that this morning. Whatever Google updated, it’s definitely having a big effect on some sites. I have several documented that reversed course (and some reversing course for the *second time* during the update). For example, I shared rank tracking yesterday for one of those sites, which is even clearer today (see first screenshot). That site surged with the March core update, then reversed course half way through losing all gains. And it just surged completely back yesterday. The site owner is on a roller coaster. And yep, he’s ready to get off the coaster and hoping this surge sticks. :)

In addition, I’ve included several other screenshots of sites reversing course over the weekend. Remember, Google explained they would be updating several systems with the March core update that would reinforce each other. They also said to expect more volatility with this update. I’m definitely seeing that as I’m tracking many sites over time.

And for those interested in sites impacted heavily by the September HCU(X), I have still not seen any bounce back. 0. I checked the visibility numbers for 373 sites heavily impacted by the Sep HCU(X) this morning and all are down heavily over time (and most more with the March core update). I’ll keep checking… and we’ll see if the old HCU classifier gets dropped at some point while Google’s systems for assessing the helpfulness of content take over. Stay tuned.

He shared some eye-popping charts; here is one of them:

Here are more:

In addition, I shared the other day how some sites were seeing late surges or drops. Here are two of those. Big swings very late into the March core update. Again, this makes sense given what Google explained about how this update would proceed… Multiple systems being updated… pic.twitter.com/FJHMVLyk8H

— Glenn Gabe (@glenngabe) April 14, 2024

Slowly it’s not fun anymore. Since Friday, Google’s traffic has dropped considerably on my site and all the other sites I monitor. Many keywords have disappeared without a trace, even for the main keyword my site no longer ranks, in first place is now a cleaning company that has nothing to do with the topic, but well, people certainly want a cleaning when they google for the keyword.

Result since Friday -56 per cent, unfortunately the trend is still downwards. As I am also currently monitoring my friend’s online shop: it’s exactly the same for him, -56 per cent since Friday, we no longer need to talk about sales, although his ranking is stable.

Again, same same since Friday. No let up and remaining sites heading to zero. I thought I had it figured. Not so unfortunately.

Yes, they are rolling out something awful since Friday. Sensors confirm that too.

Traffic totally dead today here in Germany

Here too in Czech

My rankings had a little wobble yesterday. It always tends to happen on the back end of an update.

Traffic and conversions absolutely nonexistent today.

Weekends were the best days of the week. Currently I’m getting like 3-5 Visitors every 30 Min. That’s really a Joke.

Same, and as compared to all the previous weeks ,this one is the WORSE.

I fear it will just keep getting worse and we should get used to this as it will be the norm.

Google Tracking Tools

Many of the tools, though not all, showed spikes over the past 24 hours or so. Overall these are not extreme spikes in volatility: Algoroo and Advanced Web Rankings show massive spikes, but the others are not as heated.


What are you all seeing? Think we are just about done after 40 days of this rolling out?

Forum discussion at WebmasterWorld.
