Facebook Updates its Automated Alt Text Process to Identify More Objects Within Posted Images

Facebook has announced a significant update to its Automatic Alt Text – or AAT – process, which will ensure that more images on the platform are readable by screen readers, enabling vision-impaired users to get a better experience within Facebook’s apps.

Facebook first launched its AAT process back in 2016, using machine learning to automatically identify objects within posted images whenever a manual alt-text description was not provided. In its initial iteration, the process was fairly limited, and Facebook has been working to improve it ever since.

As explained by Facebook:

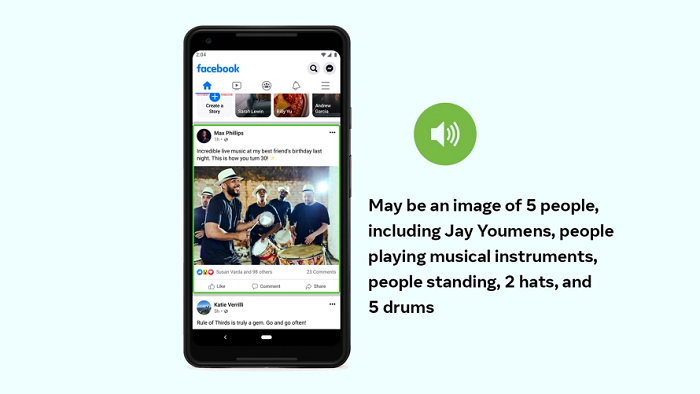

“First and foremost, we’ve expanded the number of concepts that AAT can reliably detect and identify in a photo by more than 10x, which in turn means fewer photos without a description. Descriptions are also more detailed, with the ability to identify activities, landmarks, types of animals, and so forth – for example, ‘May be a selfie of 2 people, outdoors, the Leaning Tower of Pisa.’”

That gives Facebook more capacity to provide detailed descriptions of objects within images, including not only what they are, but also where they’re placed within the frame.

“So instead of describing the contents of a photo as ‘May be an image of 5 people,’ we can specify that there are two people in the center of the photo and three others scattered toward the fringes, implying that the two in the center are the focus. Or, instead of simply describing a lovely landscape with ‘May be a house and a mountain,’ we can highlight that the mountain is the primary object in a scene based on how large it appears in comparison with the house at its base.”
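To make the idea concrete, here is a minimal Python sketch of how position and relative size could feed into a hedged description. It is purely illustrative and is not Facebook's implementation: the `Detection` fields, the left/center/right thirds, and the "largest object first" ordering are all assumptions made for this example.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Detection:
    """One detected concept. All fields are hypothetical, not Facebook's schema."""
    label: str
    x_center: float  # horizontal center of the bounding box, 0.0 (left) to 1.0 (right)
    area: float      # fraction of the frame covered by the bounding box, 0.0 to 1.0


def horizontal_position(x_center: float) -> str:
    """Bucket a normalized x coordinate into left / center / right thirds."""
    if x_center < 1 / 3:
        return "left"
    if x_center > 2 / 3:
        return "right"
    return "center"


def describe(detections: list[Detection]) -> str:
    """Build a hedged description that lists the largest object first."""
    if not detections:
        return "No description available"
    # Assumption: the object covering the most area is treated as the primary subject.
    ordered = sorted(detections, key=lambda d: d.area, reverse=True)
    parts = [f"{d.label} ({horizontal_position(d.x_center)})" for d in ordered]
    return "May be an image of " + ", ".join(parts)


print(describe([
    Detection("a house", x_center=0.5, area=0.05),
    Detection("a mountain", x_center=0.45, area=0.60),
]))
# prints: May be an image of a mountain (center), a house (center)
```

The "May be" prefix mirrors the hedged wording in Facebook's examples. Sorting by area is only one simple way to approximate which object is the focus of the scene.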

As noted, Facebook has been working to evolve its visual recognition tools for years, across both still images and video content. Indeed, back in 2017, Facebook shared an overview of its video ID tools, which are not yet available, but which will further boost the platform’s capacity both to cater to vision-impaired users and to gather more data insights about what’s in posted content, what users are watching, what they’re engaging with, and more.

For its latest upgrade of AAT, Facebook actually utilized Instagram images and hashtags to map content, which further underlines the potential of the process for data collection.

That could have implications beyond assisting differently abled users. For example, Facebook could look to help advertisers reach users who are interested in coffee by targeting those who’ve posted images of coffee cups or cafes regularly. That could also help to further amplify your messaging because those users are likely to post your offer as well – so you could reach these users with a discount offer, with an increased likelihood of them also sharing that with their followers and friends.

To be clear, Facebook is not yet offering these new image recognition insights as ad targeting options. But the capacity is there, and it could facilitate new search and research options to maximize your audience response in future.

Facebook says that the new system is also more culturally and demographically inclusive, due to Facebook using a broader dataset of content, through the translation of hashtags in many languages:

“For example, it can identify weddings around the world based (in part) on traditional apparel instead of labeling only photos featuring white wedding dresses.”

The new system can also provide more detail for those who want it. For example, users will be initially presented with a basic description of each image, but they’ll have the option to choose more specific insights, using the expanded data listings.

Again, the expanded implications here are significant – and while the main focus is on providing more access to Facebook’s platforms for all users, the extended data options could also be hugely valuable in a range of ways.

The new AAT system is now in operation, and users with screen readers can access the new data within Facebook’s apps.