Despite Everything, Facebook Remains a Prominent Facilitator of Election Misinformation

Over the last four years, Facebook has implemented a range of measures to stamp out misinformation, tackle election interference and keep its users accurately informed, even as various groups have sought to use the company’s massive network to influence public opinion and sway election results in their own favor.

Facebook’s increased push came after revelations of mass misinformation and influence operations during the 2016 US election campaign, including the high-profile Cambridge Analytica scandal and Russia’s Internet Research Agency running thousands of ads to spark division among US voters. How effective either of these operations actually was is impossible to say, but both did happen, and you would imagine that they had at least some effect.

Facebook’s various measures seem to have negated much of the foreign influence and manipulation that was present in 2016 – yet, despite this, several new insights show that Facebook is still facilitating the spread of misinformation, with President Donald Trump’s claims of election fraud, in particular, gaining increased traction via The Social Network.

Last week, Facebook published a post in which it sought to refute claims that its algorithm disproportionately amplifies controversial right-wing content, which sparks engagement and interaction, and therefore, seemingly, gains reach.

Facebook published the post in response to these lists, published daily by New York Times writer Kevin Roose.

The top-performing link posts by U.S. Facebook pages in the last 24 hours are from:

1. Dan Bongino

2. FOX 29

3. Donald J. Trump

4. Donald J. Trump

5. Fox News

6. Dan Bongino

7. CNN

8. Dios Es Bueno

9. Breitbart

10. Fox News

Facebook’s Top 10 (@FacebooksTop10), November 17, 2020

The lists, which are based on engagement data accessed via Facebook’s own analytics platform CrowdTangle, appear to show that content from extreme right-wing publishers performs better on Facebook, which often means that questionable, if not demonstrably false, information is getting big reach and engagement across The Social Network.

But hang on, Facebook said, that’s not the whole story:

“Most of the content people see [on Facebook], even in an election season, is not about politics. In fact, based on our analysis, political content makes up about 6% of what you see on Facebook. This includes posts from friends or from Pages (which are public profiles created by businesses, brands, celebrities, media outlets, causes and the like).”

So while the numbers posted by Roose may show that right-wing news content gets a lot of engagement, that’s only a fraction of what users see overall.

“Ranking top Page posts by reactions, comments, etc. doesn’t paint a full picture of what people actually see on Facebook.”

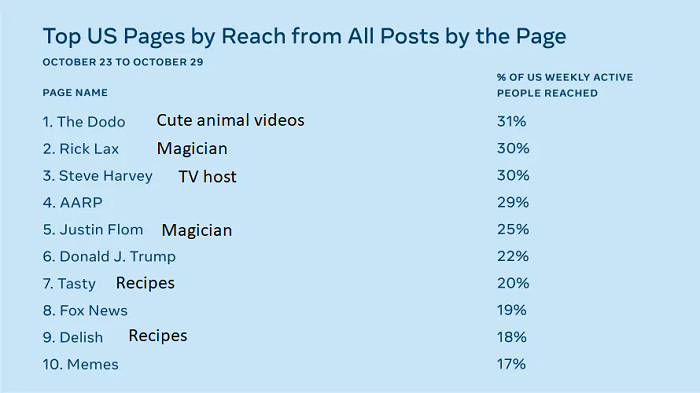

To demonstrate this, Facebook shared this listing of what content actually gets the most reach on Facebook – i.e. posts from these Pages appeared in the most user News Feed for the week in question:

I added the black text descriptions for context. As you can see, most of the content people see is not political, which, Facebook says, shows that political updates are not as prevalent as Roose’s listings may suggest.

But then again, this listing kind of proves Roose’s point – of all of the top 10 Pages from the week above, based on reach to US active users, the two political Pages with the most presence are:

- Donald J. Trump

- Fox News

So while people do see a lot of other content, you could equally argue that recipe videos are probably not going to influence how people vote. That would suggest that Facebook, even by its own explanation, is helping to boost more extreme political views.

And that then leads into the next concern.

This week, BuzzFeed News reported that although Facebook has added warning labels to Trump’s posts that criticize the US election and suggest widespread fraud in the voting process, those labels have had little impact on social sharing.

BuzzFeed shared this quote from an internal Facebook discussion board:

“We have evidence that applying these [labels] to posts decreases their reshares by ~8%. However given that Trump has SO many shares on any given post, the decrease is not going to change shares by orders of magnitude.”

As you can see, the above post was still widely shared and commented on. But Facebook may also be contributing directly to that. As noted, Facebook’s algorithm looks to boost posts that see more engagement, in order to keep people active and on-platform for longer. That means that posts which generate a lot of comments and discussion tend to see higher reach.

Facebook’s system even pushes that directly – as shown in this example shared by The Wall Street Journal’s Deepa Seetharaman:

So rather than limiting the spread of these claims, Facebook is actively promoting them to users, in order to spark engagement. That stands in significant contrast to Twitter, which last week reported that it had seen a 29% decrease in Quote Tweets as a result of its own warning labels.

The data here shows that Facebook is not only not seeing any major impact as a result of its deterrence measures, but that its own systems, intentionally or not, are even counteracting such efforts.

Why? Because as many have noted, Facebook values engagement above all else in most cases. It seems, in this instance, its internal measures to boost interaction may be inadvertently going against its other operations.

So what comes next? Should Facebook come under more scrutiny, and be forced to review its processes in order to stop the spread of misinformation?

That, at least in part, is the topic of the latest Senate Judiciary hearing into possible reforms to Section 230. Within the context of this examination, Facebook and Twitter have faced questions over the influence of their platforms, and how their systems incentivize engagement.

Those hearings could eventually lead to reform, one way or another, and with former President Barack Obama this week suggesting that social platforms should face regulation, there could be more movement coming on this front.

As Obama explained to The Atlantic:

“I don’t hold the tech companies entirely responsible [for the rise of populist politics], because this predates social media. It was already there. But social media has turbocharged it. I know most of these folks. I’ve talked to them about it. The degree to which these companies are insisting that they are more like a phone company than they are like The Atlantic, I do not think is tenable. They are making editorial choices, whether they’ve buried them in algorithms or not. The First Amendment doesn’t require private companies to provide a platform for any view that is out there.”

With Obama’s former VP Joe Biden set to take the reins in January, we could see a significant shift in the approach to regulating social platforms, which could limit Facebook’s capacity to facilitate such content.

But then again, even if new rules are enacted, there will always be borderline cases, and as we’ve seen with the sudden rise of Parler, there will also be alternate platforms that will cater to more controversial views.

Maybe, then, this is a consequence of a more open media landscape – with fewer gatekeepers to limit its spread, misinformation will become increasingly difficult to contain.

Free speech advocates will hail this as a benefit, while others may not be so sure. Either way, with the 2020 election discussion still playing out, we’re likely to see more examples of this before the platforms are truly pushed to act.