SOCIAL

Facebook is Taking Legal Action Against a Company Using Cloaking to Re-Direct Ads

Facebook has filed a new lawsuit over violation of its terms and conditions, this time taking aim at a company called ‘LeadCloak’ and its use of ad ‘cloaking’ to re-direct user actions.

As explained by Facebook:

“Cloaking is a malicious technique that impairs ad review systems by concealing the nature of the website linked to an ad. When ads are cloaked, a company’s ad review system may see a website showing an innocuous product such as a sweater, but a user will see a different website, promoting deceptive products and services which, in many cases, are not allowed.”
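To make the mismatch described above concrete, here is a minimal sketch of how a cloaking check could work in principle: compare the page content shown to a reviewer with the content shown to a regular user, and flag the ad if the two barely overlap. This is not Facebook's actual review system. The function names, the word-overlap (Jaccard) measure, and the 0.5 threshold are all assumptions chosen for illustration.

```python
import re


def word_set(html):
    """Strip tags and return the set of lowercase words in the page text."""
    text = re.sub(r"<[^>]+>", " ", html)
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard_similarity(page_a, page_b):
    """Share of words the two pages have in common (1.0 = same vocabulary)."""
    words_a, words_b = word_set(page_a), word_set(page_b)
    if not words_a and not words_b:
        return 1.0
    return len(words_a & words_b) / len(words_a | words_b)


def looks_cloaked(reviewer_html, user_html, threshold=0.5):
    """Hypothetical check: flag when reviewer and user versions diverge.

    The threshold is an illustrative assumption, not a known production value.
    """
    return jaccard_similarity(reviewer_html, user_html) < threshold


# Hypothetical example pages: what the reviewer sees vs. what the user sees.
reviewer_view = "<h1>Cozy wool sweater</h1><p>Free shipping on all orders.</p>"
user_view = "<h1>Miracle diet pills</h1><p>Lose 20 pounds in a week!</p>"

print(looks_cloaked(reviewer_view, reviewer_view))  # same page both times
print(looks_cloaked(reviewer_view, user_view))      # two unrelated pages
```

In practice, cloaking tools try to detect and evade exactly this kind of comparison, which is part of why Facebook is pursuing legal action as well as technical enforcement.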

Facebook’s Integrity Team Lead Rob Leathern provided this video overview of how cloaking works for more context:

Among various violations, Facebook says that LeadCloak’s software has been used to conceal websites featuring scams related to COVID-19, cryptocurrency, pharmaceuticals, diet pills, and fake news pages. Some of these cloaked websites also included images of celebrities.

It’s the latest in Facebook’s increasing legal action against companies that violate its terms – over the past year, Facebook has initiated legal proceedings against:

- Companies that sell fake followers and likes, an area where Facebook has stepped up enforcement since New York’s Attorney General ruled last February that selling fake social media followers and likes is illegal

- Two different app developers over ‘click injection fraud’, which simulates clicks in order to extract ad revenue

- Two companies over the creation of malware, and tricking Facebook users into installing it in order to steal personal information

- An organization that had registered various domain names which, Facebook claims, were intended to deceive people by pretending to be affiliated with Facebook apps, via scams like emails that ask users to log in to correct an error

These types of scams have been problematic for a long time, but Facebook is now taking up official, legal recourse to stop them, which could help to establish precedents that Facebook can then refer to in future proceedings.

Essentially, Facebook’s taking a harder stance against such scams. After the controversy of Cambridge Analytica, Facebook’s not taking any more chances, and if it can extract bigger penalties for such violations, it can also use those as a warning to others who may be looking to attempt the same.

In the past, scammers could get away with platform bans, but increasingly, Facebook’s looking to take things further – which should, hopefully, act as a deterrent as well as a case-by-case improvement.

Such proceedings can take time, but it’ll be interesting to see what results Facebook sees in each case, and how they relate to future efforts to combat the same.

In addition, Facebook says that it’s also looking to work with other digital platforms to share learnings, and address the same issues within the broader industry.

SOCIAL

Snapchat Explores New Messaging Retention Feature: A Game-Changer or Risky Move?

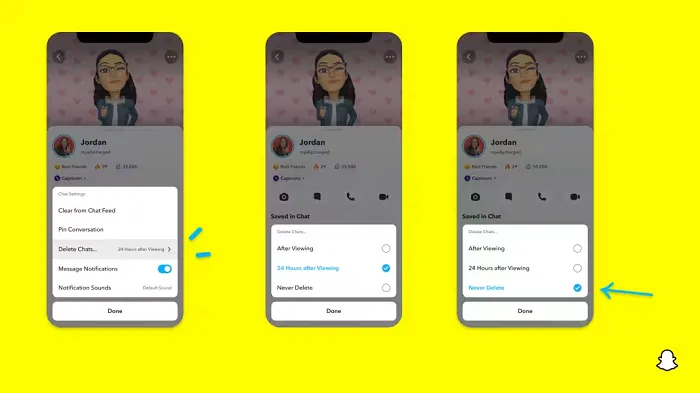

In a recent announcement, Snapchat revealed a groundbreaking update that challenges its traditional design ethos. The platform is experimenting with an option that allows users to defy the 24-hour auto-delete rule, a feature synonymous with Snapchat’s ephemeral messaging model.

The proposed change aims to introduce a “Never delete” option in messaging retention settings, aligning Snapchat more closely with conventional messaging apps. While this move may blur Snapchat’s distinctive selling point, Snap appears convinced of its necessity.

According to Snap, the decision stems from user feedback and a commitment to innovation based on user needs. The company aims to provide greater flexibility and control over conversations, catering to the preferences of its community.

Currently undergoing trials in select markets, the new feature empowers users to adjust retention settings on a conversation-by-conversation basis. Flexibility remains paramount, with participants able to modify settings within chats and receive in-chat notifications to ensure transparency.

Snapchat underscores that the default auto-delete feature will persist, reinforcing its design philosophy centered on ephemerality. However, with the app gaining traction as a primary messaging platform, the option offers users a means to preserve longer chat histories.

The update marks a pivotal moment for Snapchat, renowned for its disappearing message premise, especially popular among younger demographics. Retaining this focus has been pivotal to Snapchat’s identity, but the shift suggests a broader strategy aimed at diversifying its user base.

This strategy may appeal particularly to older demographics, potentially extending Snapchat’s relevance as users age. By emulating features of conventional messaging platforms, Snapchat seeks to enhance its appeal and broaden its reach.

Yet, the introduction of message retention poses questions about Snapchat’s uniqueness. While addressing user demands, the risk of diluting Snapchat’s distinctiveness looms large.

As Snapchat ventures into uncharted territory, the outcome of this experiment remains uncertain. Will message retention propel Snapchat to new heights, or will it compromise the platform’s uniqueness?

Only time will tell.

SOCIAL

Catering to a specific audience boosts your business, says accountant turned coach

While it is tempting to try to appeal to a broad audience, the founder of alcohol-free coaching service Just the Tonic, Sandra Parker, believes the best thing you can do for your business is focus on your niche. Here’s how she did just that.

When running a business, reaching out to as many clients as possible can be tempting. But it also risks making your marketing “too generic,” warns Sandra Parker, the founder of Just The Tonic Coaching.

“From the very start of my business, I knew exactly who I could help and who I couldn’t,” Parker told My Biggest Lessons.

Parker struggled with alcohol dependence as a young professional. Today, her business targets high-achieving individuals who face challenges similar to those she had early in her career.

“I understand their frustrations, I understand their fears, and I understand their coping mechanisms and the stories they’re telling themselves,” Parker said. “Because of that, I’m able to market very effectively, to speak in a language that they understand, and am able to reach them.”

“I believe that it’s really important that you know exactly who your customer or your client is, and you target them, and you resist the temptation to make your marketing too generic to try and reach everyone,” she explained.

“If you speak specifically to your target clients, you will reach them, and I believe that’s the way that you’re going to be more successful.”

Watch the video for more of Sandra Parker’s biggest lessons.

SOCIAL

Instagram Tests Live-Stream Games to Enhance Engagement

Instagram’s testing out some new options to help spice up your live-streams in the app, with some live broadcasters now able to select a game that they can play with viewers in-stream.

As you can see in these example screens, posted by Ahmed Ghanem, some creators now have the option to play either “This or That”, a question and answer prompt that you can share with your viewers, or “Trivia”, to generate more engagement within your IG live-streams.

That could be a simple way to spark more conversation and interaction, which could then lead into further engagement opportunities from your live audience.

Meta’s been exploring more ways to make live-streaming a bigger consideration for IG creators, with a view to live-streams potentially catching on with more users.

That includes the gradual expansion of its “Stars” live-stream donation program, giving more creators in more regions a means to accept donations from live-stream viewers, while back in December, Instagram also added some new options to make it easier to go live using third-party tools via desktop PCs.

Live streaming has been a major shift in China, where shopping live-streams, in particular, have led to massive opportunities for streaming platforms. They haven’t caught on in the same way in Western regions, but as TikTok and YouTube look to push live-stream adoption, there is still a chance that they will become a much bigger element in future.

Which is why IG is also trying to stay in touch, and add more ways for its creators to engage via streams. Live-stream games are another element within this, which could make live-streaming a better community-building, and potentially sales-driving, option.

We’ve asked Instagram for more information on this test, and we’ll update this post if/when we hear back.
