
Facebook Outlines New Machine Learning Process to Improve the Accuracy of Community Standards Enforcement

Facebook is always working to improve its detection and enforcement efforts in order to remove content that breaks its rules, and keep users safe from abuse, misinformation, scams, etc.

And its systems have significantly improved in this respect, as Facebook explains:

“Online services have made great strides in leveraging machine-learned models to fight abuse at scale. For example, 99.5% of takedowns on fake Facebook accounts are proactively detected before users report them.”

There are still significant limitations in its processes, though, mostly due to the finite capacity of human reviewers to assess and pass judgment on such instances. Machine learning tools can identify a growing number of issues, but human input is still required to confirm whether many of those identified cases are correct, because computer systems often miss the complex nuance of language.

But now, Facebook has a new system to assist in this respect:

“CLARA (Confidence of Labels and Raters), is a system built and deployed at Facebook to estimate the uncertainty in human-generated decisions. […] CLARA is used at Facebook to obtain more accurate decisions overall while reducing operational resource use.”

The system essentially augments human decision-making by adding a machine learning layer on top, which assesses each individual rater’s capacity to make the right call on content, based on their past accuracy.

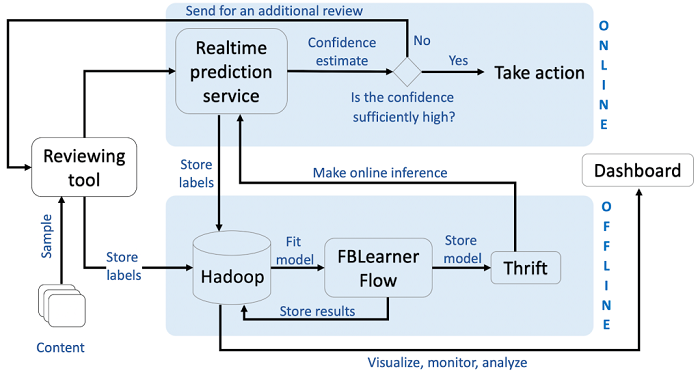

The CLARA element sits in the ‘Realtime Prediction Service’ section of this flow chart. It assesses the result of each incident and cross-checks the human ruling against what the machine model would have predicted, while also referencing both against each reviewer’s past results for each type of report.
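To make that cross-checking idea more concrete, here is a minimal sketch of one way a model prediction and accuracy-weighted human votes could be combined into a single confidence score. It is not Facebook's implementation: the function name `posterior_violating`, the `model_score` prior, and the per-rater accuracy figures are all hypothetical, and the sketch assumes raters err independently and are equally accurate on violating and benign content.

```python
from math import prod


def posterior_violating(model_score, votes):
    """Estimate the probability that a piece of content is violating.

    model_score: the ML model's predicted probability of a violation,
        used here as the prior (an assumption of this sketch).
    votes: list of (says_violating, rater_accuracy) pairs, where
        rater_accuracy is the rater's historical accuracy, strictly
        between 0 and 1 (hypothetical values).
    """
    # Likelihood of the observed votes if the content truly violates.
    like_violating = prod(acc if vote else 1.0 - acc for vote, acc in votes)
    # Likelihood of the same votes if the content is actually benign.
    like_benign = prod(1.0 - acc if vote else acc for vote, acc in votes)

    # Bayes' rule: weigh each hypothesis by the model's prior.
    numerator = model_score * like_violating
    return numerator / (numerator + (1.0 - model_score) * like_benign)


# One historically reliable rater flags the content; two weaker raters do not.
votes = [(True, 0.95), (False, 0.6), (False, 0.6)]
confidence = posterior_violating(0.5, votes)
print(f"P(violating) = {confidence:.3f}")
```

In this toy case a simple majority vote would clear the content, while the accuracy-weighted estimate leans towards a violation because the dissenting rater has the stronger track record.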

That system, which has now been deployed at Facebook, has resulted in a significant improvement in efficiency, ensuring more accurate results in enforcement.

“Compared to a random sampling baseline, CLARA provides a better trade-off curve, enabling an efficient usage of labeling resources. In a production deployment, we found that CLARA can save up to 20% of total reviews compared to majority vote.”
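As a rough illustration of where savings over a fixed majority vote could come from, the sketch below requests reviews one at a time and stops as soon as the running confidence passes a threshold. This is an assumed stopping rule for illustration only, not the procedure described by Facebook; `review_until_confident`, the `threshold` value, and the rater accuracies are hypothetical, and raters are again assumed to err independently.

```python
def review_until_confident(model_score, votes, threshold=0.95):
    """Consume human votes in order until the decision is confident enough.

    model_score: the ML model's predicted probability of a violation.
    votes: iterable of (says_violating, rater_accuracy) pairs, with
        accuracies strictly between 0 and 1 (hypothetical values).
    Returns (is_violating, confidence, reviews_used).
    """
    p = model_score  # running probability that the content violates
    used = 0
    for says_violating, acc in votes:
        # Stop requesting reviews once either outcome is confident enough.
        if max(p, 1.0 - p) >= threshold:
            break
        like_violating = acc if says_violating else 1.0 - acc
        like_benign = 1.0 - acc if says_violating else acc
        # Bayesian update of the running probability with this vote.
        p = p * like_violating / (p * like_violating + (1.0 - p) * like_benign)
        used += 1
    return p >= 0.5, max(p, 1.0 - p), used


# A fixed majority vote would always spend all three reviews on this item.
decision, confidence, used = review_until_confident(
    0.9, [(True, 0.9), (True, 0.9), (True, 0.9)]
)
print(decision, round(confidence, 3), used)
```

Here the model is already fairly sure, so a single agreeing review from an accurate rater is enough to cross the threshold and the remaining two reviews are never requested.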

That efficiency gain is important right now, because Facebook has been forced to reduce its human moderation capacity due to COVID-19 lockdowns in different regions. By improving its systems for accurately detecting violations through automated means, Facebook can concentrate its resources on the key areas of concern, maximizing the manpower that it has available.

Of course, there are still issues with Facebook’s systems. Just this week, reports emerged that Facebook is looking at a new way to use share velocity signals in order to better guide human moderation efforts, and stop misinformation, in particular, from reaching massive audiences on the platform. That comes after a recent COVID-19 conspiracy video was viewed some 20 million times on Facebook, despite violating the platform’s rules, before, finally, Facebook’s moderators moved to take it down.

Improved human moderation wouldn’t have helped in this respect, so there are still other areas of concern for Facebook to address. But the smarter it can be about utilizing the resources it has available, the more Facebook can focus its efforts on the key areas of concern, in order to detect and remove violating content before it can reach big audiences.

You can read more about Facebook’s new CLARA reinforcement process here.