SOCIAL

Instagram’s Testing a New, Full-Screen Main Feed of Feed Posts, Stories and Reels Content

It’s been on the horizon for a while, given the evolving usage trends in the app. And now, it looks to be a step closer to reality, with Instagram testing a new, fully-integrated home feed that would do away with the top Stories bar and present everything in an immersive, full-screen, swipeable UI.

As you can see in this example, posted by app researcher Alessandro Paluzzi, the experimental Instagram feed would include regular Feed posts, Stories and Reels all within a single flow.

Stories would be presented with a frame bar at the bottom of the display, indicating that you can swipe left to see the other frames, while videos have a progress bar instead.

It’s a more intuitive, and very logical, way to present Instagram content, one that would also align with evolving, TikTok-led usage trends. The update would also enable algorithmic improvements based on your response to each specific post, as opposed to the current format, which presents each content type in a different way and often shows more than one post on screen at a time.

Which is where TikTok has been able to gain its most significant advantage. Because all TikTok clips are displayed one at a time, in full-screen, everything you do while viewing a post can be used as a measure of your response to that specific content. If you tap ‘Like’ on a clip, watch it all the way through, let it play twice, or swipe back to it again, every response is specific to that video. That gives TikTok an edge in determining which elements of each clip interest you, which it can then align with your profile to improve your feed recommendations.

That’s why TikTok’s feed is so addictive. And while Instagram Reels are presented in the same way, Instagram hasn’t yet been able to crack the algorithm as effectively as TikTok has with its more immersive, more addictive ‘For You’ content stream.

This new presentation style could help to change that, and would be a big step in moving into line with the broader TikTok trend, which shows no sign of slowing as yet. And given that Reels is now the largest contributor to engagement growth on Instagram, and users spend more time with Stories than they do with their main feed, it makes perfect sense.

Again, I’ve been predicting that this would happen for the last two years – and really, the only surprise here is that it’s taken IG this long to actually move to live testing of the format.

Which, it’s important to note, hasn’t begun just yet. This is a back-end prototype at present, and it may never see the light of day. But it probably will, and given the state of its development, as shown here, I’d expect to see it soon, giving users a whole new way to engage with all of Instagram’s different content formats, while also aligning with the platform’s stated push on video content.

Indeed, back in December, Instagram chief Adam Mosseri said that video would be a key focus for IG in 2022.

“We’re going to double-down on our focus on video and consolidate all of our video formats around Reels”

This seems like the ultimate next step on this front, and another re-positioning in its face-off against TikTok, in order to mitigate TikTok’s rising dominance in the space.

SOCIAL

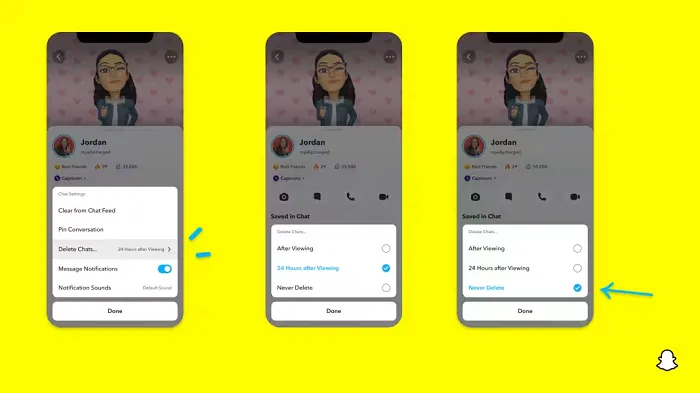

Snapchat Explores New Messaging Retention Feature: A Game-Changer or Risky Move?

In a recent announcement, Snapchat revealed a groundbreaking update that challenges its traditional design ethos. The platform is experimenting with an option that allows users to defy the 24-hour auto-delete rule, a feature synonymous with Snapchat’s ephemeral messaging model.

The proposed change aims to introduce a “Never delete” option in messaging retention settings, aligning Snapchat more closely with conventional messaging apps. While this move may blur Snapchat’s distinctive selling point, Snap appears convinced of its necessity.

According to Snap, the decision stems from user feedback and a commitment to innovation based on user needs. The company aims to provide greater flexibility and control over conversations, catering to the preferences of its community.

Currently undergoing trials in select markets, the new feature empowers users to adjust retention settings on a conversation-by-conversation basis. Flexibility remains paramount, with participants able to modify settings within chats and receive in-chat notifications to ensure transparency.

Snapchat underscores that the default auto-delete feature will persist, reinforcing its design philosophy centered on ephemerality. However, with the app gaining traction as a primary messaging platform, the option offers users a means to preserve longer chat histories.

The update marks a pivotal moment for Snapchat, renowned for its disappearing-message premise, which is especially popular among younger demographics. Retaining this focus has been central to Snapchat’s identity, but the shift suggests a broader strategy aimed at diversifying its user base.

This strategy may appeal particularly to older demographics, potentially extending Snapchat’s relevance as users age. By emulating features of conventional messaging platforms, Snapchat seeks to enhance its appeal and broaden its reach.

Yet, the introduction of message retention poses questions about Snapchat’s uniqueness. While addressing user demands, the risk of diluting Snapchat’s distinctiveness looms large.

As Snapchat ventures into uncharted territory, the outcome of this experiment remains uncertain. Will message retention propel Snapchat to new heights, or will it compromise the platform’s uniqueness?

Only time will tell.

SOCIAL

Catering to a specific audience boosts your business, says accountant turned coach

While it is tempting to try to appeal to a broad audience, the founder of alcohol-free coaching service Just the Tonic, Sandra Parker, believes the best thing you can do for your business is focus on your niche. Here’s how she did just that.

When running a business, reaching out to as many clients as possible can be tempting. But it also risks making your marketing “too generic,” warns Sandra Parker, the founder of Just The Tonic Coaching.

“From the very start of my business, I knew exactly who I could help and who I couldn’t,” Parker told My Biggest Lessons.

Parker struggled with alcohol dependence as a young professional. Today, her business targets high-achieving individuals who face challenges similar to those she had early in her career.

“I understand their frustrations, I understand their fears, and I understand their coping mechanisms and the stories they’re telling themselves,” Parker said. “Because of that, I’m able to market very effectively, to speak in a language that they understand, and to reach them.”

“I believe that it’s really important that you know exactly who your customer or your client is, and you target them, and you resist the temptation to make your marketing too generic to try and reach everyone,” she explained.

“If you speak specifically to your target clients, you will reach them, and I believe that’s the way that you’re going to be more successful.”

Watch the video for more of Sandra Parker’s biggest lessons.

SOCIAL

Instagram Tests Live-Stream Games to Enhance Engagement

Instagram’s testing out some new options to help spice up your live-streams in the app, with some live broadcasters now able to select a game that they can play with viewers in-stream.

As you can see in these example screens, posted by Ahmed Ghanem, some creators now have the option to play either “This or That”, a question and answer prompt that you can share with your viewers, or “Trivia”, to generate more engagement within your IG live-streams.

That could be a simple way to spark more conversation and interaction, which could then lead into further engagement opportunities from your live audience.

Meta’s been exploring more ways to make live-streaming a bigger consideration for IG creators, with a view to live-streams potentially catching on with more users.

That includes the gradual expansion of its “Stars” live-stream donation program, giving more creators in more regions a means to accept donations from live-stream viewers, while back in December, Instagram also added some new options to make it easier to go live using third-party tools via desktop PCs.

Live-streaming has driven a major shift in China, where shopping live-streams, in particular, have led to massive opportunities for streaming platforms. They haven’t caught on in the same way in Western regions, but as TikTok and YouTube look to push live-stream adoption, there is still a chance that they will become a much bigger element in future.

Which is why IG is also trying to keep pace, and add more ways for its creators to engage via streams. Live-stream games are another element of this push, and could make streams a better community-building, and potentially sales-driving, option.

We’ve asked Instagram for more information on this test, and we’ll update this post if/when we hear back.
