SOCIAL

Internal Research from Facebook Shows that Re-Shares Can Significantly Amplify Misinformation

What if Facebook removed post shares entirely, as a means to limit the spread of misinformation in its apps? What impact would that have on Facebook engagement and interaction?

The question follows new insights from Facebook’s internal research, released as part of the broader ‘Facebook Files’ leak, which show that post shares play a key role in amplifying misinformation and spreading harm among the Facebook community.

As reported by Alex Kantrowitz in his newsletter Big Technology:

“The report noted that people are four times more likely to see misinformation when they encounter a post via a share of a share – kind of like a retweet of a retweet – compared to a typical photo or link on Facebook. Add a few more shares to the chain, and people are five to ten times more likely to see misinformation. It gets worse in certain countries. In India, people who encounter “deep reshares,” as the researchers call them, are twenty times more likely to see misinformation.”

So the problem isn’t direct shares, as such, but re-amplified shares, which are more likely to carry the kinds of controversial, divisive, shocking or surprising reports that gain viral traction in the app. Content that generates an emotional response sees more share activity, so it makes sense that the more radical the claim, the more re-shares it’ll likely see, particularly as users look to either refute or reiterate their personal stance on issues via third-party reports.

And there’s more:

“The study found that 38% of all [views] of link posts with misinformation take place after two reshares. For photos, the numbers increase – 65% of views of photo misinformation take place after two reshares. Facebook Pages, meanwhile, don’t rely on deep reshares for distribution. About 20% of page content is viewed at a reshare depth of two or higher.”

So again, the data shows that spicier, more controversial claims and posts gain significant viral traction through continued sharing, as users amplify and re-amplify these posts throughout Facebook’s network, often without adding their own thoughts or opinions.

So what if Facebook eliminated shares entirely, and forced people to either create their own posts to share content, or to comment on the original post? That would slow the rapid amplification that currently comes from simply tapping a button.

Interestingly, Facebook has made changes on this front, potentially linked to this research. Last year, Facebook-owned (now Meta-owned) WhatsApp implemented new limits on message forwarding to stop the spread of misinformation through message chains, with sharing restricted to 5x per message.

Which, WhatsApp says, has been effective:

“Since putting into place the new limit, globally, there has been a 70% reduction in the number of highly forwarded messages sent on WhatsApp. This change is helping keep WhatsApp a place for personal and private conversations.”

Which is a positive outcome, and shows that there is likely value to such limits. But the newly revealed research looked at Facebook specifically, and thus far, Facebook hasn’t done anything to change the sharing process within its main app, the core focus of concern in this report.

The company’s lack of action on this front now forms part of Facebook whistleblower Frances Haugen’s legal push against the company, with Haugen’s lawyer calling for Facebook to be removed from the App Store if it fails to implement limits on re-shares.

Facebook hasn’t responded to these new claims as yet, but it is interesting to note this research in the context of other Facebook experiments, which seemingly both support and contradict the core focus of the claims.

In August 2018, Facebook actually did experiment with removing the Share button from posts, replacing it with a ‘Message’ prompt instead.

That seemed to be inspired by the increased discussion of content within messaging streams, as opposed to in the Facebook app – but given the timing of the experiment, in relation to the study, it seems now that Facebook was looking to see what impact the removal of sharing could have on in-app engagement.

On another front, however, Facebook’s actually tested expanded sharing, with a new option spotted in testing that enables users to share a post into multiple Facebook groups at once.

That’s seemingly focused on direct post sharing, as opposed to re-shares, which were the focus of its 2019 study. But even so, making it easier to amplify content, including potentially dangerous or harmful posts, seems to run counter to the findings outlined in the report.

Again, we don’t have full oversight, because Facebook hasn’t commented on the reports, but it does seem like there could be benefit to removing post shares entirely as an option, as a means to limit the rapid re-circulation of harmful claims.

But then again, maybe that just hurts Facebook engagement too much – maybe, through these various experiments, Facebook found that people engaged less, and spent less time in the app, which is why it abandoned the idea.

This is the core question that Haugen raises in her criticism of the platform: that Facebook, at least in perception, is hesitant to act on elements that potentially cause harm if doing so could also hurt its business interests.

Which, at Facebook’s scale and influence, is an important consideration, and one which we need more transparency on.

Facebook claims that it conducts such research with the distinct intent of improving its systems, as CEO Mark Zuckerberg explains:

“If we wanted to ignore research, why would we create an industry-leading research program to understand these important issues in the first place? If we didn’t care about fighting harmful content, then why would we employ so many more people dedicated to this than any other company in our space – even ones larger than us? If we wanted to hide our results, why would we have established an industry-leading standard for transparency and reporting on what we’re doing?”

Which makes sense, but it doesn’t explain whether business considerations factor into its subsequent decisions when its research detects potential harm.

That’s the crux of the issue. Facebook’s influence is clear, and its significance as a connection and information distribution channel is evident. But what plays into its decisions about what to take action on, and what to leave, as it assesses such concerns?

There’s evidence to suggest that Facebook has avoided pushing too hard on such issues, even when its own data highlights problems, as seemingly shown in this case. And while Facebook should have a right to reply, and its day in court to respond to Haugen’s accusations, this is what we really need answers on, particularly as the company looks to make even more immersive, more all-encompassing connection tools for the future.

SOCIAL

Snapchat Explores New Messaging Retention Feature: A Game-Changer or Risky Move?

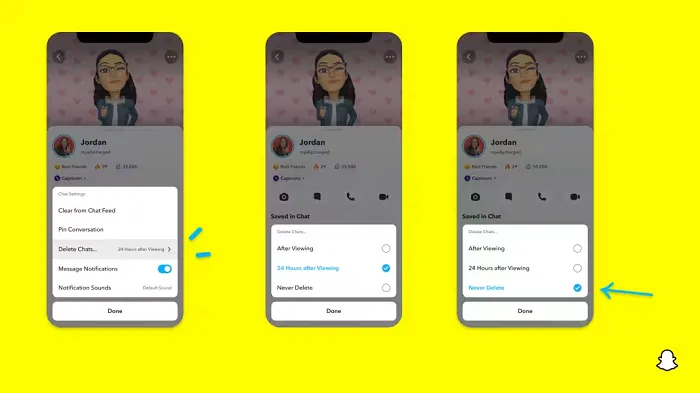

In a recent announcement, Snapchat revealed a groundbreaking update that challenges its traditional design ethos. The platform is experimenting with an option that allows users to defy the 24-hour auto-delete rule, a feature synonymous with Snapchat’s ephemeral messaging model.

The proposed change aims to introduce a “Never delete” option in messaging retention settings, aligning Snapchat more closely with conventional messaging apps. While this move may blur Snapchat’s distinctive selling point, Snap appears convinced of its necessity.

According to Snap, the decision stems from user feedback and a commitment to innovation based on user needs. The company aims to provide greater flexibility and control over conversations, catering to the preferences of its community.

Currently undergoing trials in select markets, the new feature empowers users to adjust retention settings on a conversation-by-conversation basis. Flexibility remains paramount, with participants able to modify settings within chats and receive in-chat notifications to ensure transparency.

Snapchat underscores that the default auto-delete feature will persist, reinforcing its design philosophy centered on ephemerality. However, with the app gaining traction as a primary messaging platform, the option offers users a means to preserve longer chat histories.

The update marks a pivotal moment for Snapchat, renowned for its disappearing message premise, which is especially popular among younger demographics. Retaining this focus has been central to Snapchat’s identity, but the shift suggests a broader strategy aimed at diversifying its user base.

This strategy may appeal particularly to older demographics, potentially extending Snapchat’s relevance as users age. By emulating features of conventional messaging platforms, Snapchat seeks to enhance its appeal and broaden its reach.

Yet, the introduction of message retention poses questions about Snapchat’s uniqueness. While addressing user demands, the risk of diluting Snapchat’s distinctiveness looms large.

As Snapchat ventures into uncharted territory, the outcome of this experiment remains uncertain. Will message retention propel Snapchat to new heights, or will it compromise the platform’s uniqueness?

Only time will tell.

SOCIAL

Catering to specific audience boosts your business, says accountant turned coach

While it is tempting to try to appeal to a broad audience, the founder of alcohol-free coaching service Just the Tonic, Sandra Parker, believes the best thing you can do for your business is focus on your niche. Here’s how she did just that.

When running a business, reaching out to as many clients as possible can be tempting. But it also risks making your marketing “too generic,” warns Sandra Parker, the founder of Just The Tonic Coaching.

“From the very start of my business, I knew exactly who I could help and who I couldn’t,” Parker told My Biggest Lessons.

Parker struggled with alcohol dependence as a young professional. Today, her business targets high-achieving individuals who face challenges similar to those she had early in her career.

“I understand their frustrations, I understand their fears, and I understand their coping mechanisms and the stories they’re telling themselves,” Parker said. “Because of that, I’m able to market very effectively, to speak in a language that they understand, and am able to reach them.”

“I believe that it’s really important that you know exactly who your customer or your client is, and you target them, and you resist the temptation to make your marketing too generic to try and reach everyone,” she explained.

“If you speak specifically to your target clients, you will reach them, and I believe that’s the way that you’re going to be more successful.”

Watch the video for more of Sandra Parker’s biggest lessons.

SOCIAL

Instagram Tests Live-Stream Games to Enhance Engagement

Instagram’s testing out some new options to help spice up your live-streams in the app, with some live broadcasters now able to select a game that they can play with viewers in-stream.

As you can see in these example screens, posted by Ahmed Ghanem, some creators now have the option to play either “This or That”, a question and answer prompt that you can share with your viewers, or “Trivia”, to generate more engagement within your IG live-streams.

That could be a simple way to spark more conversation and interaction, which could then lead into further engagement opportunities from your live audience.

Meta’s been exploring more ways to make live-streaming a bigger consideration for IG creators, with a view to live-streams potentially catching on with more users.

That includes the gradual expansion of its “Stars” live-stream donation program, giving more creators in more regions a means to accept donations from live-stream viewers, while back in December, Instagram also added some new options to make it easier to go live using third-party tools via desktop PCs.

Live streaming has been a major shift in China, where shopping live-streams, in particular, have led to massive opportunities for streaming platforms. They haven’t caught on in the same way in Western regions, but as TikTok and YouTube look to push live-stream adoption, there is still a chance that they will become a much bigger element in future.

Which is why IG is also trying to stay in touch, and add more ways for its creators to engage via streams. Live-stream games are another element of this, which could make live-streaming a better community-building, and potentially sales-driving, option.

We’ve asked Instagram for more information on this test, and we’ll update this post if/when we hear back.
