Report Finds X’s Community Notes Moderation Fails To Address Harmful Misinformation

This week’s ruling in Colorado that former President Donald Trump cannot run for office again has reignited accusations that free speech is being suppressed, and that U.S. voters are being restricted from having their say on who they want as their president.

That’s because, despite various criminal charges and other investigations, Trump remains the leading Republican candidate for the 2024 election. If Trump is deemed ineligible to run, it could throw the party into chaos. As such, free speech advocates want less suppression and more capacity to say whatever they like, so that the people can then decide who and what they believe in the upcoming presidential race.

But that only works if the person doing the talking is honest, informed, and not looking to mislead voters with misinformation. Trump, intentionally or not, has been guilty of misleading voters in the past, and now we’re seeing the same trends play out on Elon Musk’s X platform, where his easing of the rules around what people can say in the app is facilitating the spread of harmful lies and untruths.

This week, ProPublica published a joint investigation, conducted in partnership with Columbia University’s Tow Center for Digital Journalism, which explored the current state of misinformation on X in relation to the war in Israel.

As per ProPublica:

“The [study] looked at over 200 distinct claims that independent fact-checks determined to be misleading, and searched for posts by verified accounts that perpetuated them, identifying 2,000 total tweets. The tweets had collectively been viewed half a billion times.”

So Musk’s overhaul of the platform’s verification system, which theoretically lends more credibility to claims made by these accounts and expands their reach, could be helping to perpetuate these false reports.

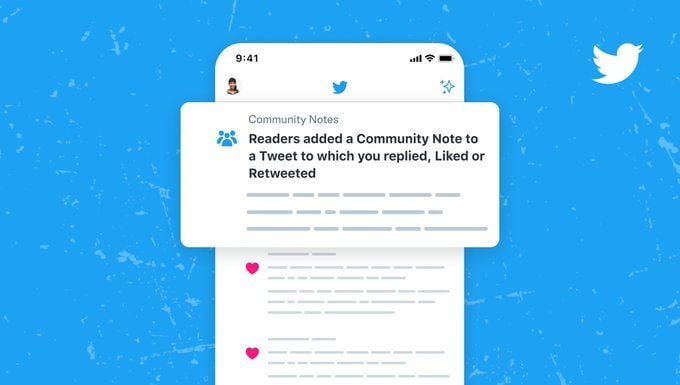

What’s more, Community Notes, Musk’s grand solution to social platform moderation, is not functioning as intended:

“We also found that the Community Notes system, which has been touted by Musk as a way to improve information accuracy on the platform, hasn’t scaled sufficiently. About 80% of the 2,000 debunked posts we reviewed had no Community Note. Of the 200 debunked claims, more than 80 were never clarified with a note.”

Which is no real surprise, considering that Musk himself has raised questions as to the reliability of Community Notes, even while hailing it as the best solution to moderation issues.

Interesting. This Note is being gamed by state actors. Will be helpful in figuring who they are.

Thanks for jumping in the honey pot, guys lmao!

— Elon Musk (@elonmusk) December 10, 2023

Interestingly, Musk has raised doubts about the validity of Community Notes several times when they’ve countered his own viewpoints.

But even so, X is pushing ahead with making Community Notes its key guardrail against misinformation. Musk’s belief is that the people should decide what’s true and what’s not, and that this system, as opposed to independent fact checks, should be the ultimate arbiter.

X’s approach could soon see it land in hot water in the EU, with the EU Commission launching a full investigation into the spread of misinformation in the app, and its potential over-reliance on crowd-sourced fact-checking.

But Musk, and his many supporters, are adamant that they should be able to share whatever they like in the app, on any topic, and that alternative facts and misinformation are best debunked by allowing them to be shared, then debating each claim on its merits.

Which is music to the ears of foreign influence groups, who rely on that sort of ambiguity to maximize the reach of their messaging. By enabling these groups to plant seeds of doubt, Musk is inadvertently facilitating the spread of destabilizing misinformation, which is designed specifically to divide voters and sow confusion.

And as we head towards the next U.S. election, that’s going to get much worse, and Community Notes, based on the available evidence, is simply not going to cut it. That will likely, eventually, force Elon and Co. to either make their own calls on moderation, or see X come under more scrutiny from regulators.

The end result will see Musk portrayed as a martyr by free speech activists, but the truth is that X’s systems are allowing the spread of damaging false reports, which could influence election outcomes.

And we’ve already seen how that plays out, after Russian activist groups infiltrated U.S. political debates back in 2016. Maybe, given the outcome, Republican voters are okay with that, but it does feel like we’re headed for another crisis along similar lines next year.

Will anything be done to address this ahead of the election, or will we be reviewing it in retrospect once again?

This is just one of many reports that have found that X’s current approach is not adequate, yet Musk seems determined that he’s right, and that more speech, on all topics, should be allowed in the app. Retrospective review of COVID measures has only strengthened the resolve of free speech advocates on this front. Yet that could enable foreign influence groups, which Musk himself admits are active within the Community Notes system, to sway responses in their favor.

It seems like a risky proposition, and the ultimate “winner” may not be who Musk’s supporters expect.