
YouTube takes aim at teens bingeing on body image videos

A clinician says guardrails when it comes to watching videos about ‘ideal’ bodies or fitness levels can help protect the mental health of young people using online platforms such as YouTube – Copyright AFP Lionel BONAVENTURE

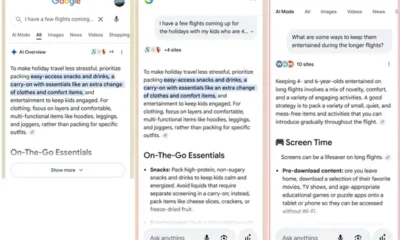

YouTube on Thursday said it tweaked its recommendation system in the United States to prevent teens from bingeing on videos idealizing certain body types.

The move comes about a week after dozens of US states accused Facebook and Instagram owner Meta of profiting “from children’s pain,” damaging their mental health and misleading people about the safety of its platforms.

YouTube’s video recommendation engine has been targeted over time by critics who contend it can lead young viewers to dark or disturbing content.

Google-run YouTube has responded by ramping up safety measures and parental controls on the globally popular platform.

Working with an advisory committee, YouTube identified “categories of content that may be innocuous as a single video, but could be problematic for some teens if viewed in repetition,” YouTube director of youth and kids product James Beser said in a blog post Thursday.

Categories noted included “content that compares physical features and idealizes some types over others, idealizes specific fitness levels or body weights, or displays social aggression in the form of non-contact fights and intimidation.”

YouTube now limits repeated recommendations of such videos to teens in the United States and will extend the change to other countries over the coming year, according to Beser.

“Teens are more likely than adults to form negative beliefs about themselves when seeing repeated messages about ideal standards in content they consume online,” Beser said.

“These insights led us to develop additional safeguards for content recommendations for teens, while still allowing them to explore the topics they love.”

YouTube community guidelines already ban content involving eating disorders, hate speech, and harassment.

“A higher frequency of content that idealizes unhealthy standards or behaviors can emphasize potentially problematic messages – and those messages can impact how some teens see themselves,” Youth and Family Advisory Committee member Allison Briscoe-Smith, a clinician, said in the blog post.

“Guardrails can help teens maintain healthy patterns as they naturally compare themselves to others and size up how they want to show up in the world.”

YouTube use has been growing, as has the platform’s advertising revenue, according to earnings reports by Google-parent Alphabet.

US Surgeon General Vivek Murthy earlier this year urged action to make sure social media environments are not hurting young users.

“We are in the middle of a national youth mental health crisis, and I am concerned that social media is an important driver of that crisis — one that we must urgently address,” Murthy said in an issued advisory.

A few states have passed laws barring social media platforms from allowing minors to use them without parental permission.

Meta last week said it was “disappointed” by the suit filed against it and that the states should be working with the array of social media companies to create age-appropriate industry standards.