
Meta Outlines its Latest Image Recognition Advances, Which Could Facilitate its Metaverse Vision

Meta’s working towards the next stage of generative AI, which could eventually enable the creation of immersive VR environments via simple directions and prompts.

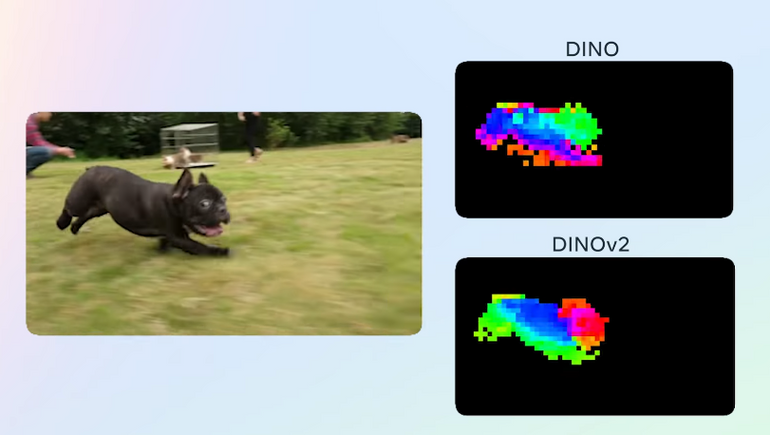

Its latest development on this front is its updated DINO image recognition model, which is now able to better identify individual objects within image and video frames, based on self-supervised learning, as opposed to requiring human annotation for each element.

Announced by Mark Zuckerberg this morning — today we’re releasing DINOv2, the first method for training computer vision models that uses self-supervised learning to achieve results matching or exceeding industry standards.

More on this new work ➡️ https://t.co/h5exzLJsFt

— Meta AI (@MetaAI) April 17, 2023

As you can see in this example, DINOv2 is able to understand the context of visual inputs and separate out individual elements. That will better enable Meta to build new models that have an advanced understanding of not only what an item might look like, but also where it should be placed within a setting.

Meta published the first version of its DINO system back in 2021, which was a significant advance in what’s possible via image recognition. The new version builds upon this, and could have a range of potential use cases.
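For a sense of how training without human labels can work, the original DINO research described a "self-distillation" setup: a student network learns to match the output of a slowly updated teacher network when each sees a different crop of the same image. The Python sketch below illustrates that objective in simplified form. It is not Meta's code, and the function names, toy numbers, and default temperature and momentum values are illustrative assumptions.

```python
import math


def softmax(logits, temperature):
    """Temperature-scaled softmax, computed in a numerically stable way."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def log_softmax(logits, temperature):
    """Log of the temperature-scaled softmax (avoids taking log of zero)."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    log_total = peak + math.log(sum(math.exp(s - peak) for s in scaled))
    return [s - log_total for s in scaled]


def self_distillation_loss(student_logits, teacher_logits, center,
                           student_temp=0.1, teacher_temp=0.04):
    """Cross-entropy between teacher and student outputs for two views.

    The teacher output is centered and sharpened with a low temperature.
    No human-written label appears anywhere in the loss.
    """
    centered = [t - c for t, c in zip(teacher_logits, center)]
    teacher_probs = softmax(centered, teacher_temp)
    student_log_probs = log_softmax(student_logits, student_temp)
    return -sum(p * lp for p, lp in zip(teacher_probs, student_log_probs))


def ema_update(teacher_weights, student_weights, momentum=0.996):
    """Move the teacher weights slowly toward the student weights."""
    return [momentum * t + (1.0 - momentum) * s
            for t, s in zip(teacher_weights, student_weights)]


# Toy outputs standing in for two crops of the same image (made-up numbers).
teacher_out = [2.0, 0.5, 0.1]
center = [0.0, 0.0, 0.0]

loss_agree = self_distillation_loss([2.0, 0.5, 0.1], teacher_out, center)
loss_disagree = self_distillation_loss([0.1, 0.5, 2.0], teacher_out, center)

print("loss when student agrees with teacher:", loss_agree)
print("loss when student disagrees with teacher:", loss_disagree)
```

The loss is small when the student's output for one crop matches the teacher's output for another, and large when they disagree, so the only training signal is consistency between views of the same image rather than a caption or tag.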

“In recent years, image-text pre-training has been the standard approach for many computer vision tasks. But because the method relies on handwritten captions to learn the semantic content of an image, it ignores important information that typically isn’t explicitly mentioned in those text descriptions. For instance, a caption of a picture of a chair in a vast purple room might read ‘single oak chair’. Yet, the caption misses important information about the background, such as where the chair is spatially located in the purple room.”

DINOv2 is able to build in more of this context, without requiring manual intervention, which could have specific value for VR development.

It could also facilitate more immediately accessible features, like improved digital backgrounds in video chats, or product tagging within video content. And it could enable all-new types of AR and visual tools that could lead to more immersive Facebook functions.

“Going forward, the team plans to integrate this model, which can function as a building block, in a larger, more complex AI system that could interact with large language models. A visual backbone providing rich information on images will allow complex AI systems to reason on images in a deeper way than describing them with a single text sentence. Models trained with text supervision are ultimately limited by the image captions. With DINOv2, there is no such built-in limitation.”

That, as noted, could also enable the development of AI-generated VR worlds, so that you’d eventually be able to speak entire, interactive virtual environments into existence.

That’s a long way off, and Meta’s hesitant to make too many references to the metaverse at this stage. But that’s where this technology could truly come into its own, via AI systems that can understand more about what’s in a scene, and where, contextually, things should be placed.

DINOv2 is another step in that direction – and while many have cooled on the prospects for Meta’s metaverse vision, it could still become the next big thing, once Meta’s ready to share more of its plans.

It’ll likely be more cautious, given the negative coverage Meta has seen thus far. But it is coming, so don’t be surprised when Meta eventually wins the generative AI race with a totally new, totally different experience.

You can read more about DINOv2 here.