SEO

Reciprocal Links: Do They Help Or Hurt Your SEO?

Early in the 2000s, reciprocal link building was a popular method to increase your search traffic and boost your backlink profile.

Also referred to as “traded” or “exchanged” links, reciprocal links were something of an SEO hack – even though they were technically against Google’s guidelines.

These days, Google still says “no” to excessive reciprocal links: its guidelines list “excessive link exchanges” as a type of “link scheme.”

But the web today is still full of them.

What gives?

The way reciprocal links appear on sites – and the way they occur – is different from 20 years ago.

Today, reciprocal links are a natural byproduct of owning a website.

They happen when you develop relationships with other sites through authentic outreach. They also happen when you link to a source without expecting anything in return, and that site discovers your link and organically links back.

An insightful link-building study by Ahrefs also shows how common reciprocal links still are on the web.

This graph shows that only 26.4% of the authority domains used in the study are not using reciprocal links:

That means 73.6% of the domains are using them.

So, sure, reciprocal links are common, but do they help or hurt your SEO?

Intentions matter. Let me explain.

What Are Reciprocal Links?

A link exchange happens when two brands agree to trade links to boost SEO and site authority, essentially saying, “You link to me, and I’ll link to you.”

In essence, a reciprocal link is a quid pro quo or a “You scratch my back, I’ll scratch yours” situation.

Does this sound shady?

Maybe.

Is it shady?

It could be. That all depends on how – and how often – you use reciprocal links on your site.

Earlier in this article, we linked to an Ahrefs study. That link sets us both up for a helpful, naturally occurring reciprocal link situation. Whether Ahrefs chooses to reciprocate by linking back to this article is entirely up to them.

If they do, that’s exactly how a natural reciprocal link is born.

Now, let’s take a look at the other end of the spectrum.

You might get emails or come across websites with shady link exchange offers. They’re usually pretty easy to spot:

- They offer an exchange or deal that will boost your SEO or help you rank in Google.

- They mention link networks or say the words “link exchange.”

- They talk about offering you multiple links or links on multiple sites.

Are Reciprocal Links Good For SEO?

If you want to grow your authority and rankings (and reduce the risk of penalties from search engines), the key is to focus on less risky strategies and tactics.

Above all else, your link-building methods should enhance your customers’ experience on your site.

Rather than focusing on SERP rankings and your website’s link profile, focus on providing something of value to your readers and customers by producing high-quality content.

Including some external links on your site can be helpful to SEO, but they aren’t the driving force behind your site’s ranking.

How To Use Reciprocal Links To Help Your SEO

Linking to quality sites that are relevant to your content enhances your readers’ overall experience on your website.

Content is king, and consistently delivering original and valuable information to your readers will earn your site a spot on the throne.

When you link to high-value content, you can establish your site as a trusted source of information.

In this case, if the other site reciprocates the link, consider it a bonus – the content matters first.

If you’re going to request reciprocation, check the site’s SEO metrics to ensure that you’re exchanging links with a high-authority website.

When reciprocal links occur naturally between authority sites, both sites may benefit.

Here are a few things to consider before you pursue a link exchange:

- Could the external site potentially improve your site’s traffic?

- Does the site produce content and share information related to your niche?

- Is the brand or business a direct competitor? (The answer to this one should be no!)

4 Ways Links Can Hurt Your SEO

There are some benefits to naturally occurring reciprocal links, but when you don’t use common sense, exchanging links can harm your site’s authority and rankings.

Here are four ways that links might actually hurt your SEO:

1. Site Penalization (Manual Action)

Simply put, excessive reciprocal links are against Google’s Webmaster Guidelines (since renamed Google Search Essentials).

Screenshot from developers.google.com, August 2022

If your site is abusing backlinks – if you’re trying to manipulate search results by exchanging links – your website runs a high risk of being penalized by Google.

2. Decrease In Site Authority & Rankings

If you’re linking to external sites that aren’t relevant to your content, your page might experience a drop in site authority or SERP rankings.

Before linking, ensure that the content is relevant and check the site’s domain authority.

In some cases, it’s OK to link back to low-authority sites, but excessively linking to these sites will not improve your own website’s authority.

3. Boosting SEO For Direct Competition

When you link to sites that target the same keywords and phrases as your website, your chances of having that link reciprocated are low.

As a result, you’re only boosting your competition’s SEO, not your own.

Link exchanges or reciprocated links should be between sites with similar content and themes and not between directly competing sites.

4. Loss Of Trust

You never want to lose the trust of search engines. But reciprocal links can cause this to happen in two ways:

- Your site has a ridiculously high number of one-to-one links.

- Your link’s anchor text is consistently suspicious or unrelated to your content.
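To make the “one-to-one links” signal concrete, here is a minimal sketch of a self-audit. It assumes you already have (linking site, linked site) pairs from your own crawl or a backlink export. For each site, it reports what share of its outbound links point to sites that link back. Google does not publish a formula or threshold for this. The function name and sample domains are hypothetical, and this is not how Google evaluates links.

```python
from collections import defaultdict


def reciprocal_share(links):
    """Return {site: fraction of its outbound links that are reciprocated}.

    `links` is an iterable of (source_site, target_site) pairs.
    Hypothetical audit helper; not Google's method.
    """
    outbound = defaultdict(set)
    for source, target in links:
        if source != target:  # ignore links from a site to itself
            outbound[source].add(target)

    shares = {}
    for site, targets in outbound.items():
        # A link is reciprocated if the target also links back to this site.
        # .get() avoids adding new keys to the defaultdict while iterating.
        reciprocated = sum(1 for t in targets if site in outbound.get(t, ()))
        shares[site] = reciprocated / len(targets)
    return shares


links = [
    ("site-a.example", "site-b.example"),
    ("site-b.example", "site-a.example"),
    ("site-a.example", "site-c.example"),
    ("site-c.example", "site-d.example"),
]
print(reciprocal_share(links))
```

In this toy data, half of site-a.example’s links are reciprocated, and all of site-b.example’s are. A high share alone isn’t proof of a scheme, because natural relationships produce reciprocal links too.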

Developing Relationships And Providing Value Are Key For Reciprocal Links

Don’t seek out reciprocal links for the sole purpose of getting more site traffic or building your backlink profile.

It won’t work, and you might get a Google penalty.

Instead, understand that aiming to be useful and valuable with your on-site linking will naturally lead to reciprocal links.

Linking to relevant, trusted resources is an excellent way to build authority and develop relationships with brands in your niche.

You’ll also give your readers immense value by pointing them to sites with trusted, relevant, useful content. That builds trust with your audience, which nurtures them and pulls them further into your brand ecosystem.

And that’s exactly what you want.

Featured Image: Paulo Bobita/Search Engine Journal


Measuring Content Impact Across The Customer Journey

Understanding the impact of your content at every touchpoint of the customer journey is essential – but that’s easier said than done. From attracting potential leads to nurturing them into loyal customers, there are many touchpoints to look into.

So how do you identify and take advantage of these opportunities for growth?

Watch this on-demand webinar and learn a comprehensive approach for measuring the value of your content initiatives, so you can optimize resource allocation for maximum impact.

You’ll learn:

- Fresh methods for measuring your content’s impact.

- Fascinating insights using first-touch attribution, and how it differs from the usual last-touch perspective.

- Ways to persuade decision-makers to invest in more content by showcasing its value convincingly.

With Bill Franklin and Oliver Tani of DAC Group, we unravel the nuances of attribution modeling, emphasizing the significance of layering first-touch and last-touch attribution within your measurement strategy.
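The difference between the two attribution views is easiest to see on a toy dataset. The minimal sketch below assumes each customer journey is recorded as an ordered list of content touchpoints ending in a conversion. The touchpoint names are made up. This is a simplified single-touch model, not the webinar’s or DAC Group’s methodology.

```python
from collections import Counter


def attribute(journeys, model):
    """Give each journey's conversion credit to a single touchpoint.

    journeys: list of touchpoint lists in chronological order.
    model: "first" credits the first touch, "last" credits the final touch.
    """
    if model not in ("first", "last"):
        raise ValueError("model must be 'first' or 'last'")
    credit = Counter()
    for touches in journeys:
        if not touches:
            continue  # skip journeys with no recorded touchpoints
        credit[touches[0] if model == "first" else touches[-1]] += 1
    return credit


journeys = [
    ["blog post", "webinar", "pricing page"],
    ["blog post", "pricing page"],
    ["case study", "pricing page"],
]
# Layer both views side by side rather than relying on only one
for model in ("first", "last"):
    print(model, dict(attribute(journeys, model)))
```

Under last-touch, the pricing page takes all three conversions. Under first-touch, the blog post earns two of them. Reading both views together surfaces top-of-funnel content that last-touch alone would miss.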

Check out these insights to help you craft compelling content tailored to each stage, using an approach rooted in first-hand experience to ensure your content resonates.

Whether you’re a seasoned marketer or new to content measurement, this webinar promises valuable insights and actionable tactics to elevate your SEO game and optimize your content initiatives for success.

View the slides below or check out the full webinar for all the details.


How to Find and Use Competitor Keywords

Competitor keywords are the keywords your rivals rank for in Google’s search results. They may rank organically or pay for Google Ads to rank in the paid results.

Knowing your competitors’ keywords is the easiest form of keyword research. If your competitors rank for or target particular keywords, it might be worth it for you to target them, too.

There is no way to see your competitors’ keywords without a tool like Ahrefs, which has a database of keywords and the sites that rank for them. As far as we know, Ahrefs has the biggest database of these keywords.

How to find all the keywords your competitor ranks for

- Go to Ahrefs’ Site Explorer

- Enter your competitor’s domain

- Go to the Organic keywords report

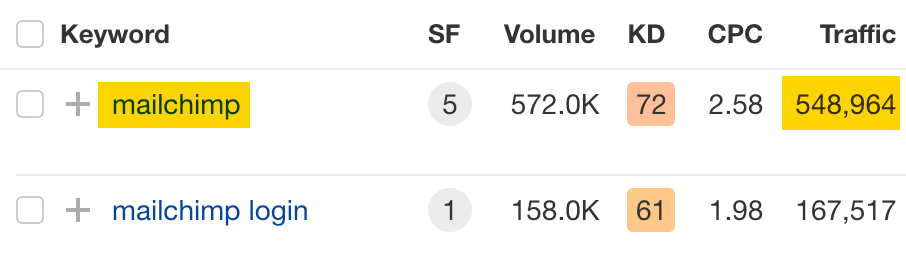

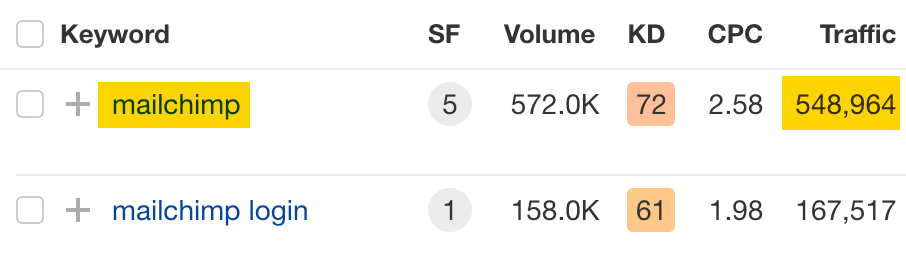

The report is sorted by traffic to show you the keywords sending your competitor the most visits. For example, Mailchimp gets most of its organic traffic from the keyword “mailchimp.”

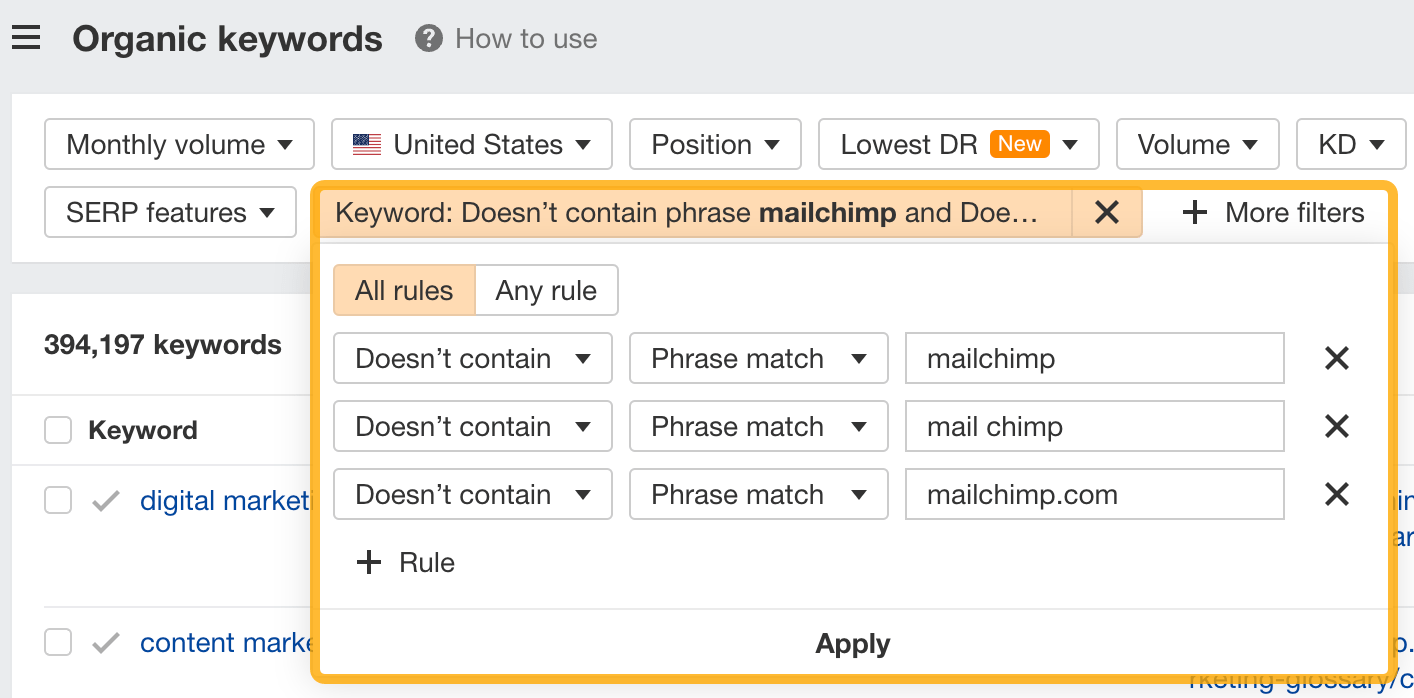

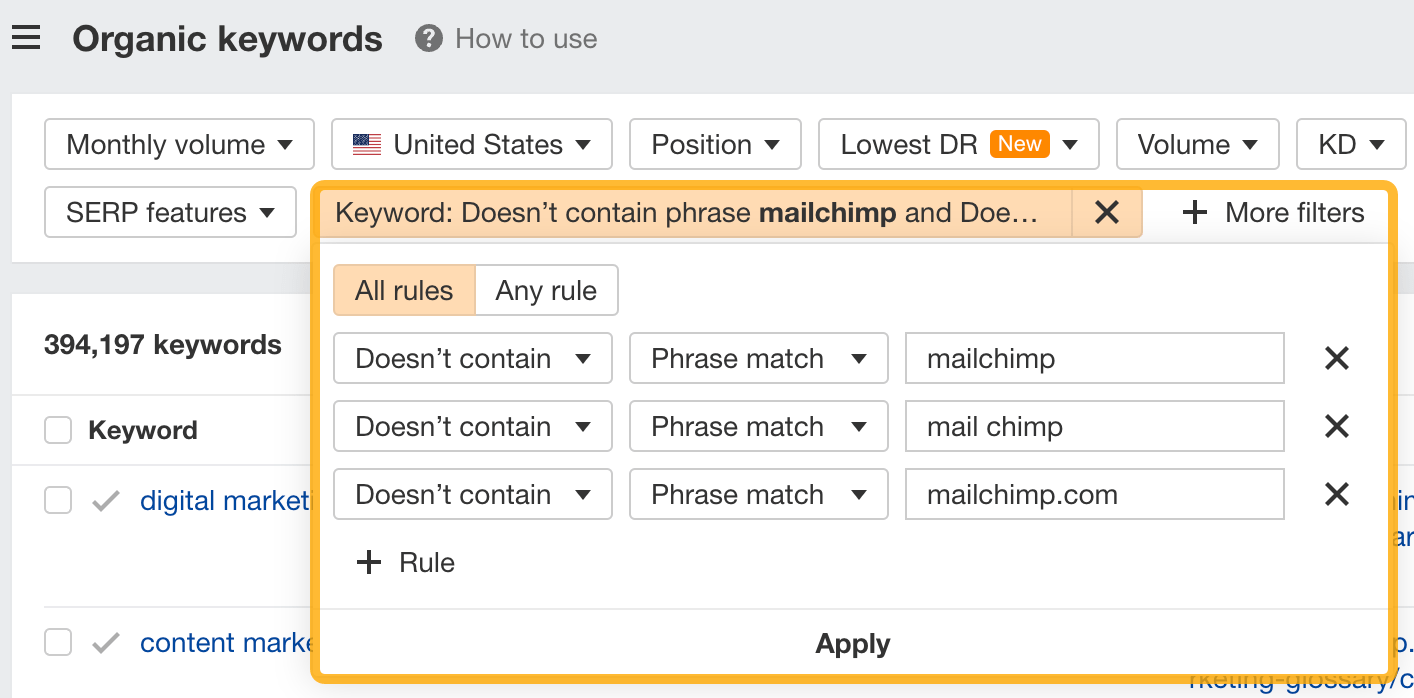

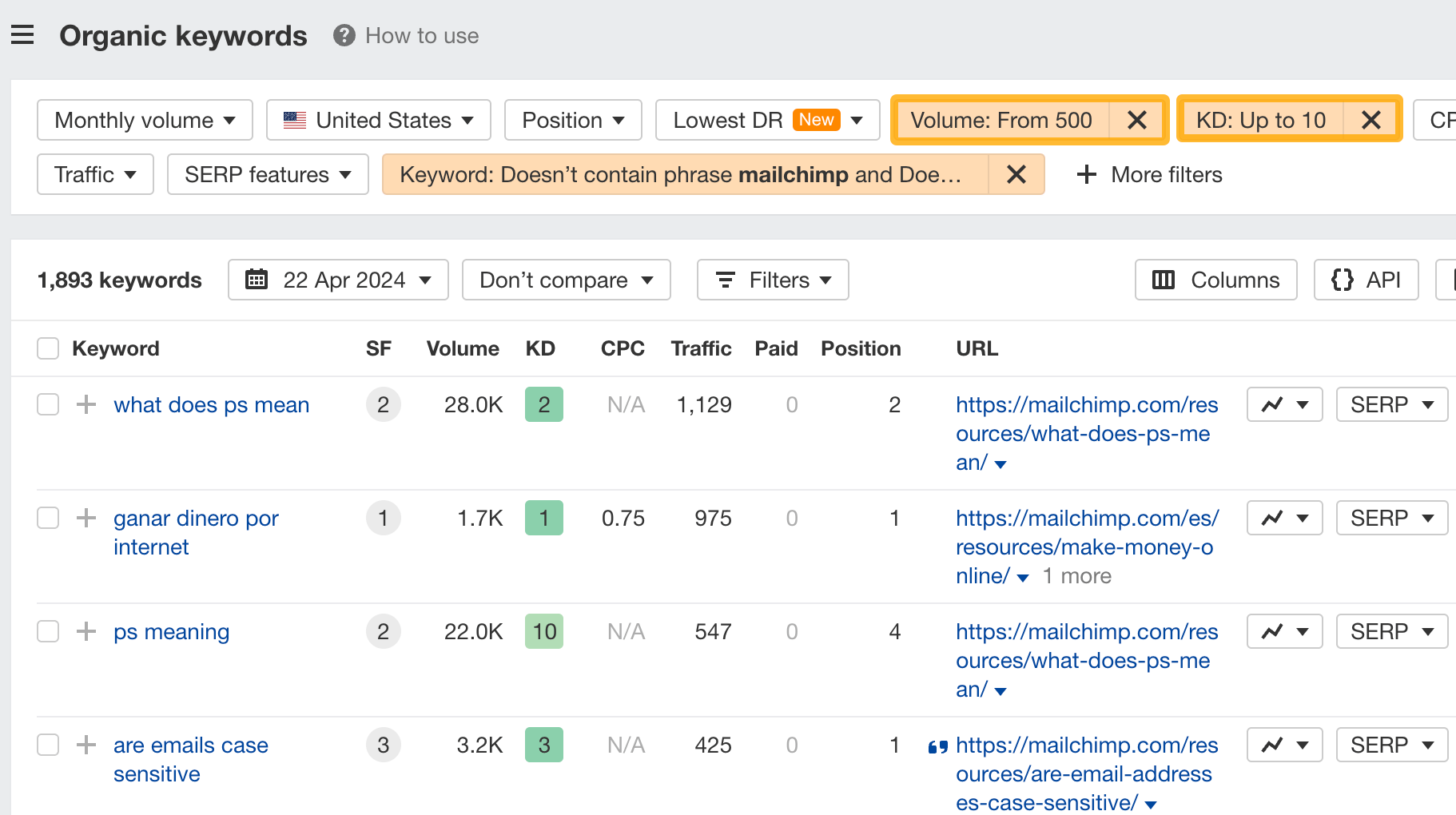

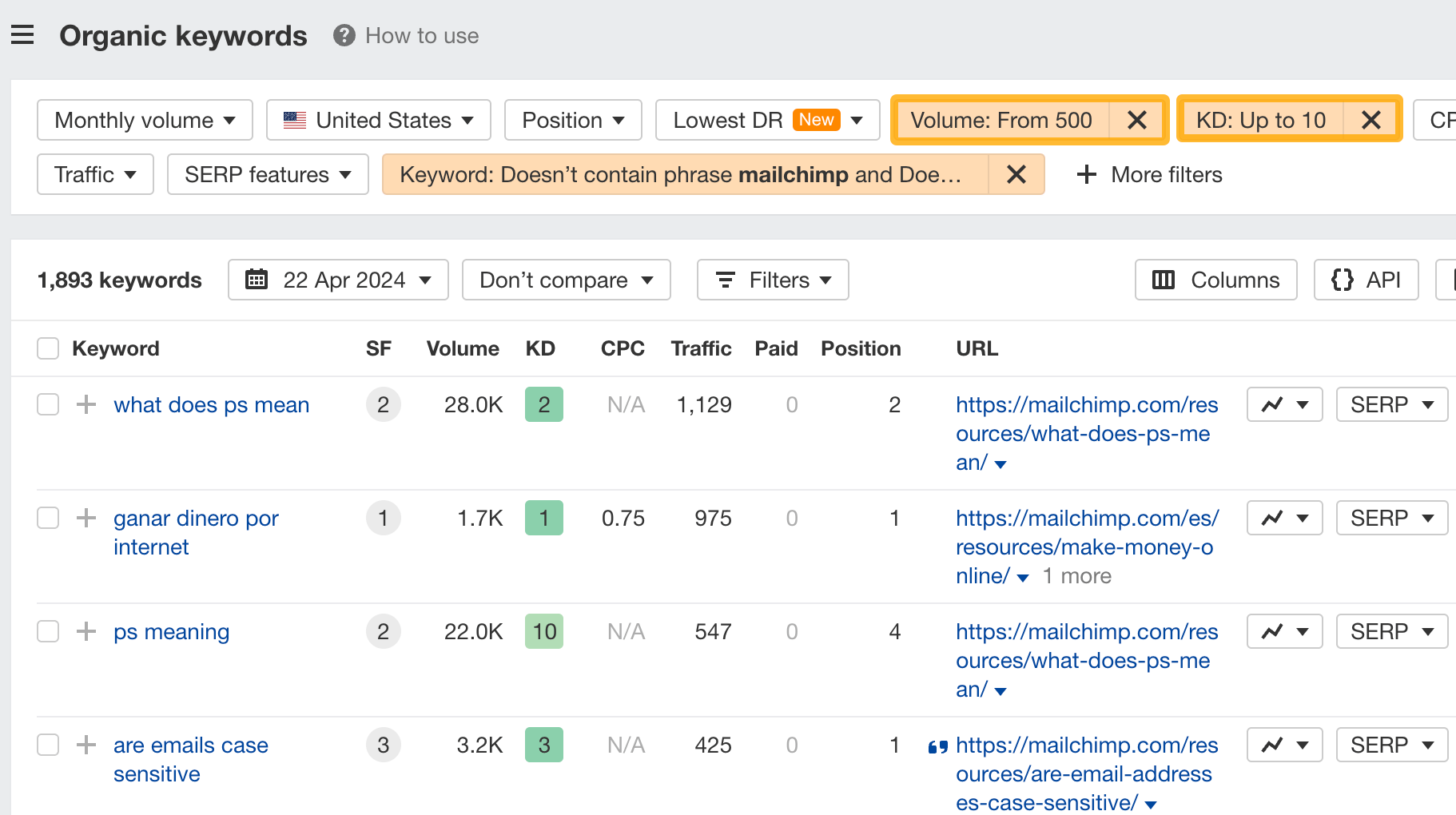

Since you’re unlikely to rank for your competitor’s brand, you might want to exclude branded keywords from the report. You can do this by adding a Keyword > Doesn’t contain filter. In this example, we’ll filter out keywords containing “mailchimp” or any potential misspellings:

If you’re a new brand competing with one that’s established, you might also want to look for popular low-difficulty keywords. You can do this by setting the Volume filter to a minimum of 500 and the KD filter to a maximum of 10.
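If you export the Organic keywords report, you can reproduce the same two filters on the file. The minimal sketch below assumes each exported row has already been parsed into a keyword, its search volume, and its KD score. The `KeywordRow` type, the sample rows, and their numbers are hypothetical; they are not Ahrefs’ export format or real data.

```python
from dataclasses import dataclass


@dataclass
class KeywordRow:
    keyword: str
    volume: int  # monthly search volume from the export
    kd: int      # Keyword Difficulty score from the export


def non_branded_opportunities(rows, brand_terms, min_volume=500, max_kd=10):
    """Drop keywords containing any brand term, then keep popular,
    low-difficulty ones (volume >= min_volume and KD <= max_kd)."""
    terms = [t.lower() for t in brand_terms]
    kept = [
        row for row in rows
        if not any(t in row.keyword.lower() for t in terms)  # "Doesn't contain"
        and row.volume >= min_volume
        and row.kd <= max_kd
    ]
    # Highest-volume opportunities first
    return sorted(kept, key=lambda row: row.volume, reverse=True)


rows = [
    KeywordRow("mailchimp login", 90000, 40),
    KeywordRow("mail chimp", 5000, 30),
    KeywordRow("email newsletter ideas", 1200, 8),
    KeywordRow("how to write a newsletter", 600, 12),
    KeywordRow("newsletter names", 300, 2),
]
# The brand name plus possible misspellings
brand_terms = ["mailchimp", "mail chimp", "malchimp"]
for row in non_branded_opportunities(rows, brand_terms):
    print(row.keyword, row.volume, row.kd)
```

With these sample rows, the filters remove both branded keywords and the high-KD and low-volume keywords. Only “email newsletter ideas” remains.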

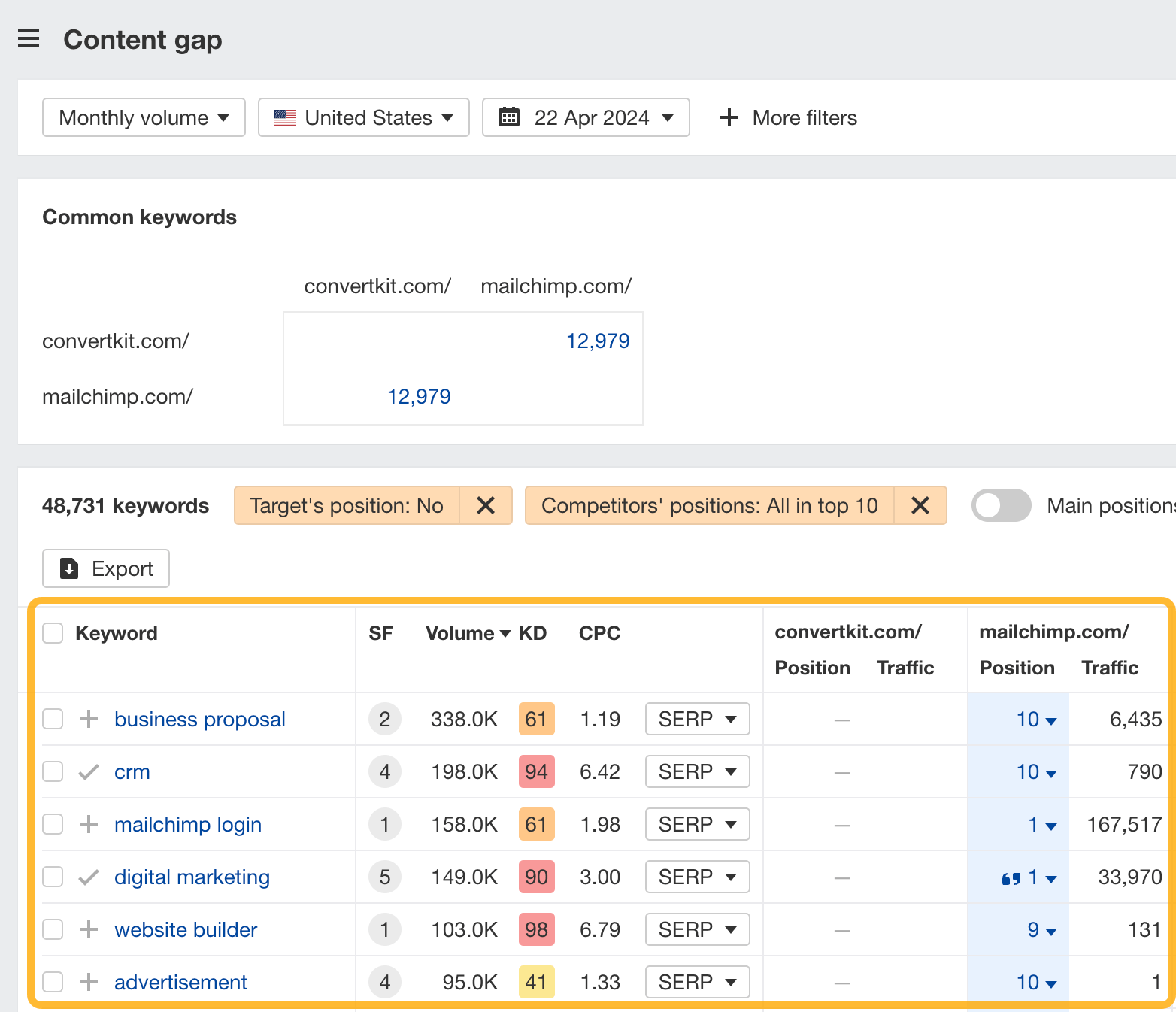

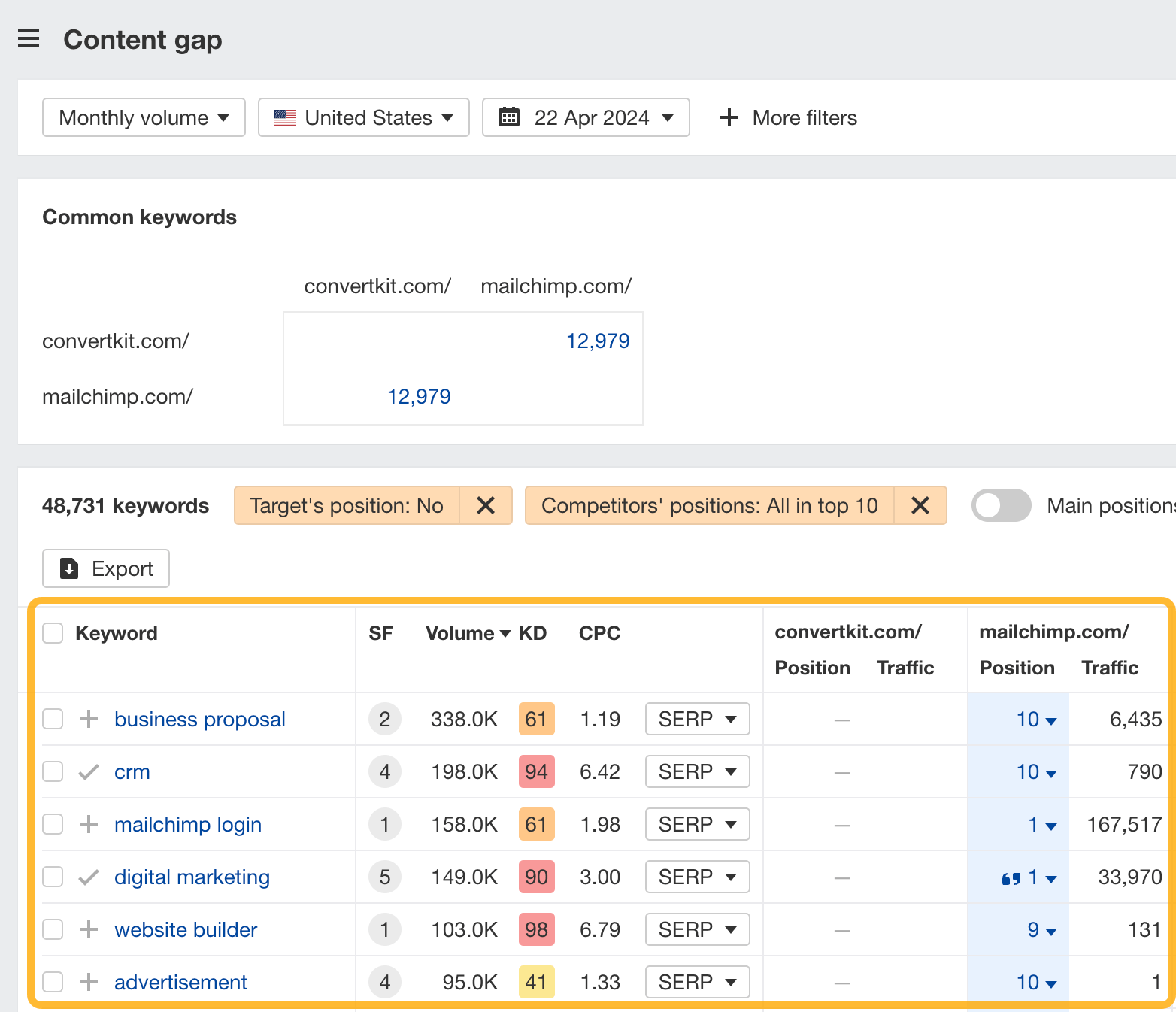

How to find keywords your competitor ranks for, but you don’t

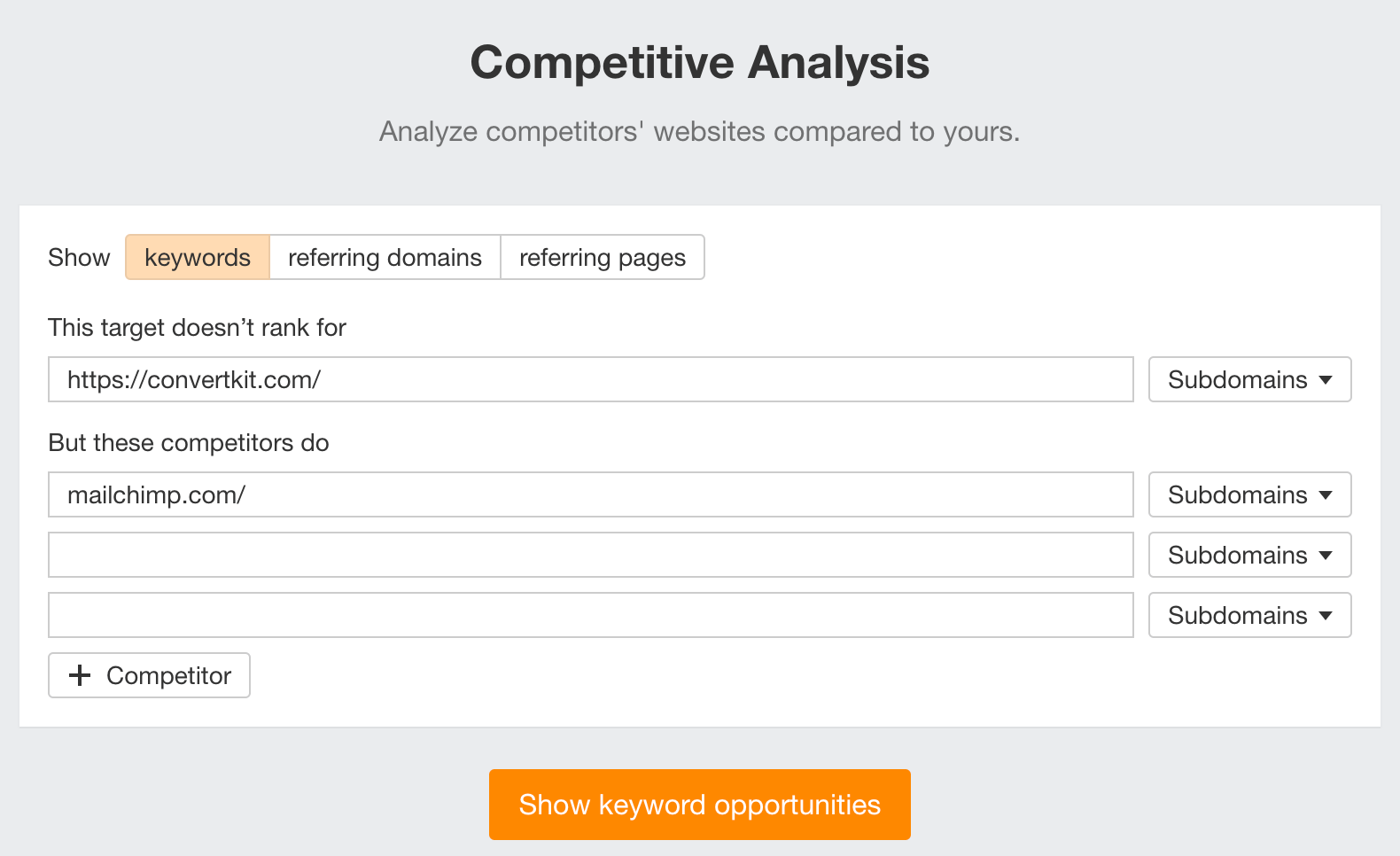

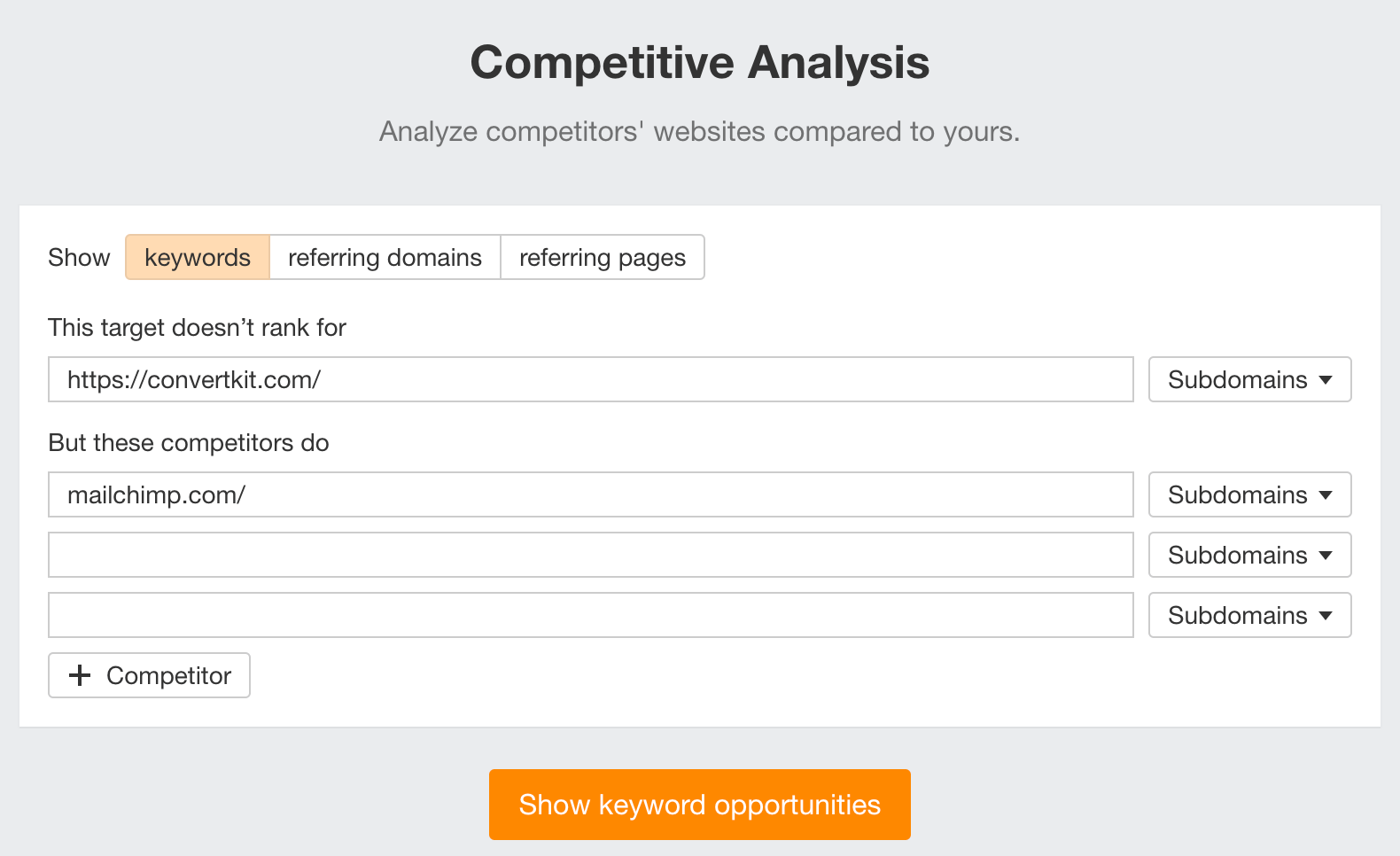

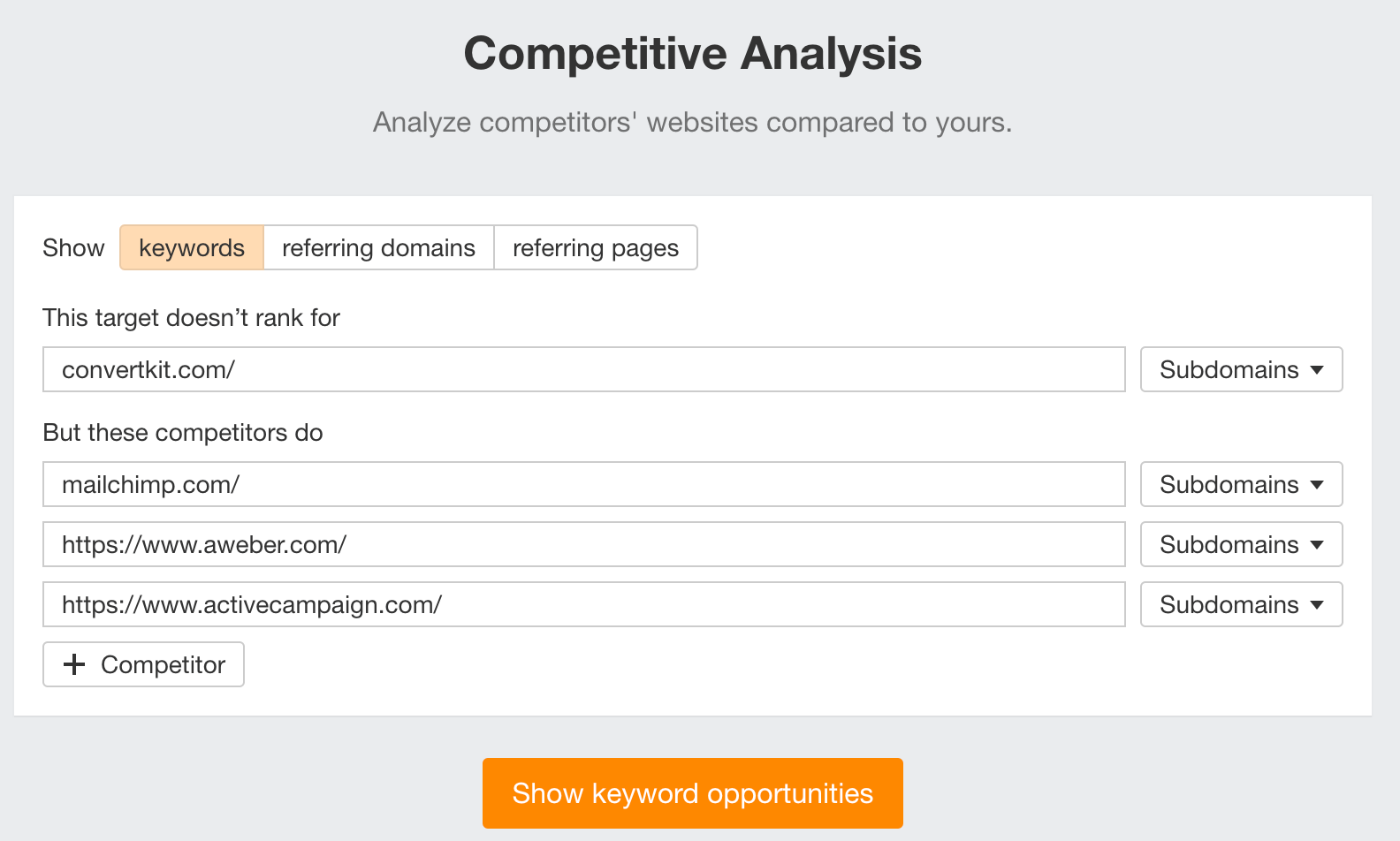

- Go to Competitive Analysis

- Enter your domain in the This target doesn’t rank for section

- Enter your competitor’s domain in the But these competitors do section

Hit “Show keyword opportunities,” and you’ll see all the keywords your competitor ranks for, but you don’t.
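Conceptually, this report is a set difference: the competitor’s keywords minus yours. The minimal sketch below assumes you have plain keyword lists for both domains. It normalizes only case and surrounding whitespace, and the real report may match keywords differently. The sample keywords are placeholders.

```python
def keyword_gap(your_keywords, competitor_keywords):
    """Return keywords the competitor ranks for but you don't.

    Comparison ignores case and surrounding whitespace; the real report
    may match keywords differently.
    """
    def normalize(keywords):
        return {k.strip().lower() for k in keywords}

    return sorted(normalize(competitor_keywords) - normalize(your_keywords))


yours = ["email marketing", "newsletter templates"]
theirs = ["Email Marketing", "press release template",
          "newsletter templates", "what is digital marketing"]
print(keyword_gap(yours, theirs))
```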

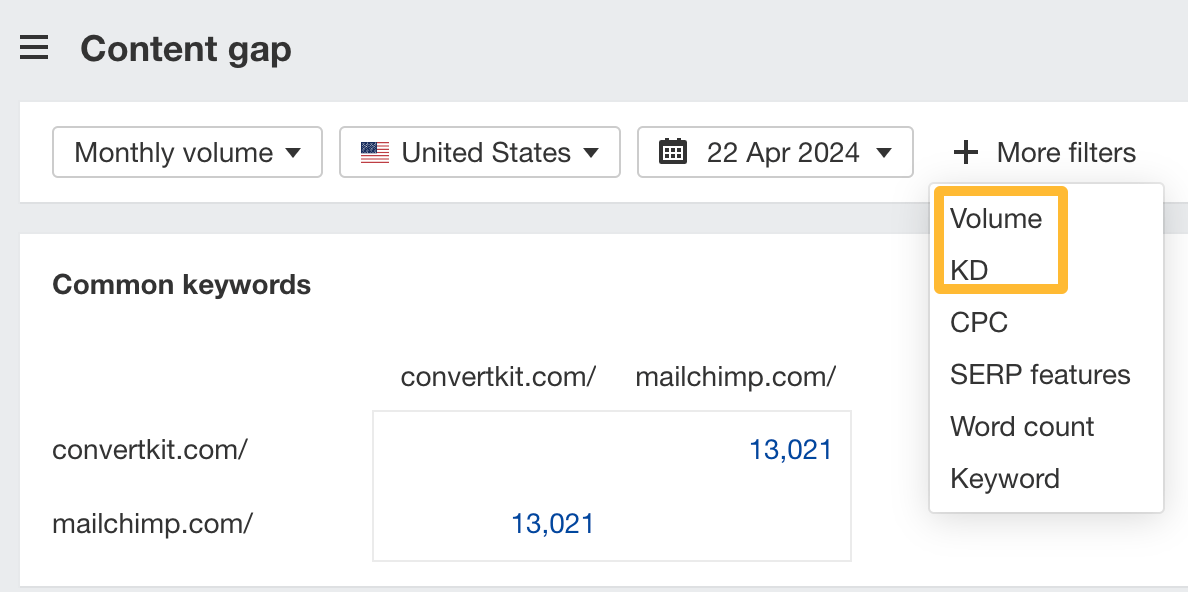

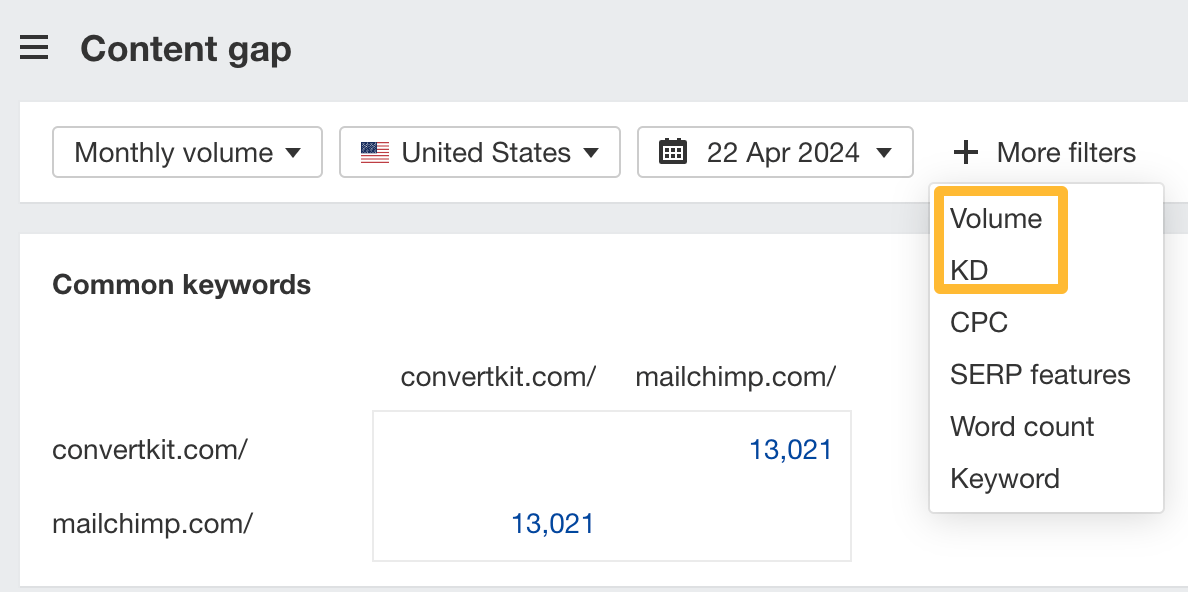

You can also add a Volume and KD filter to find popular, low-difficulty keywords in this report.

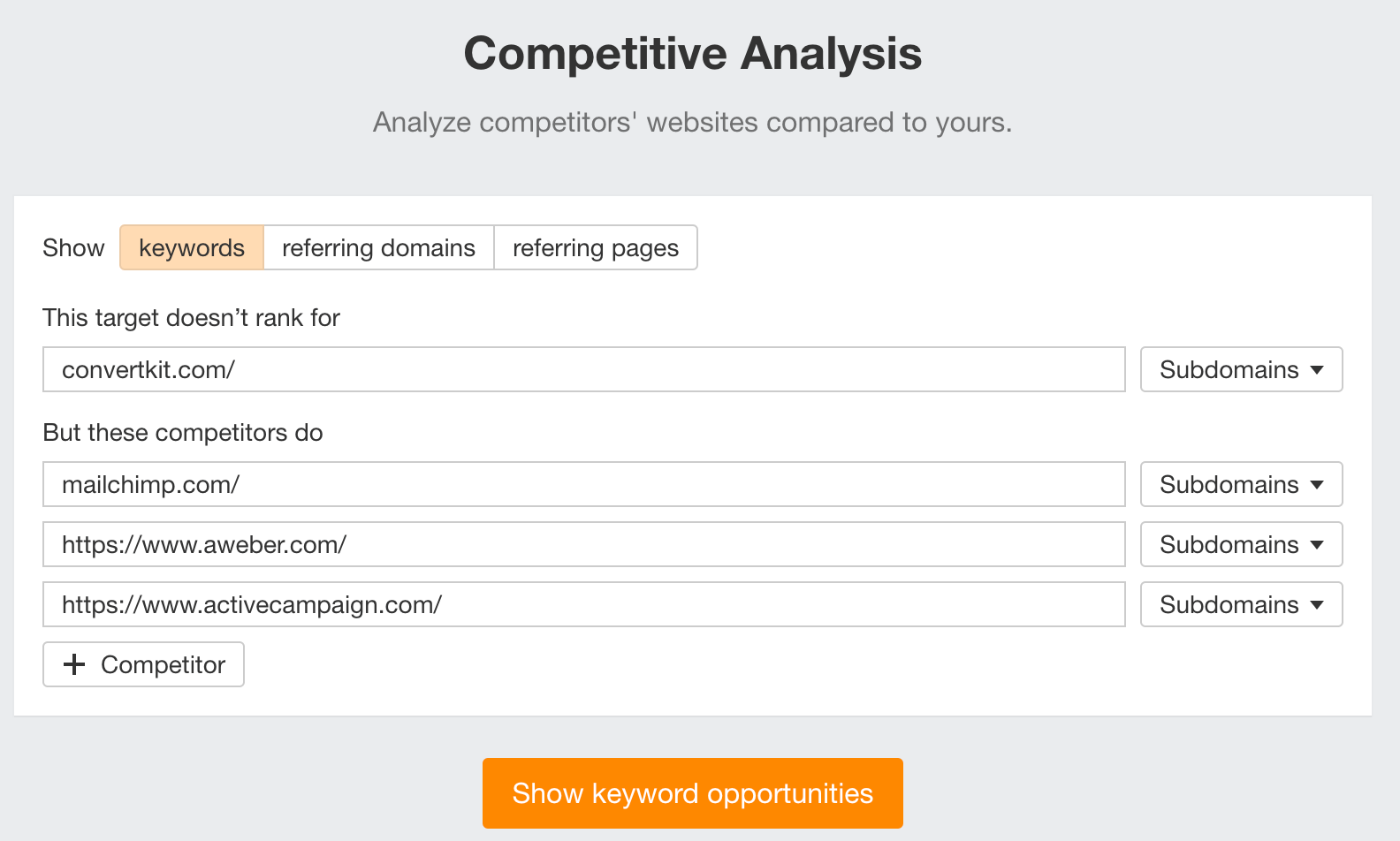

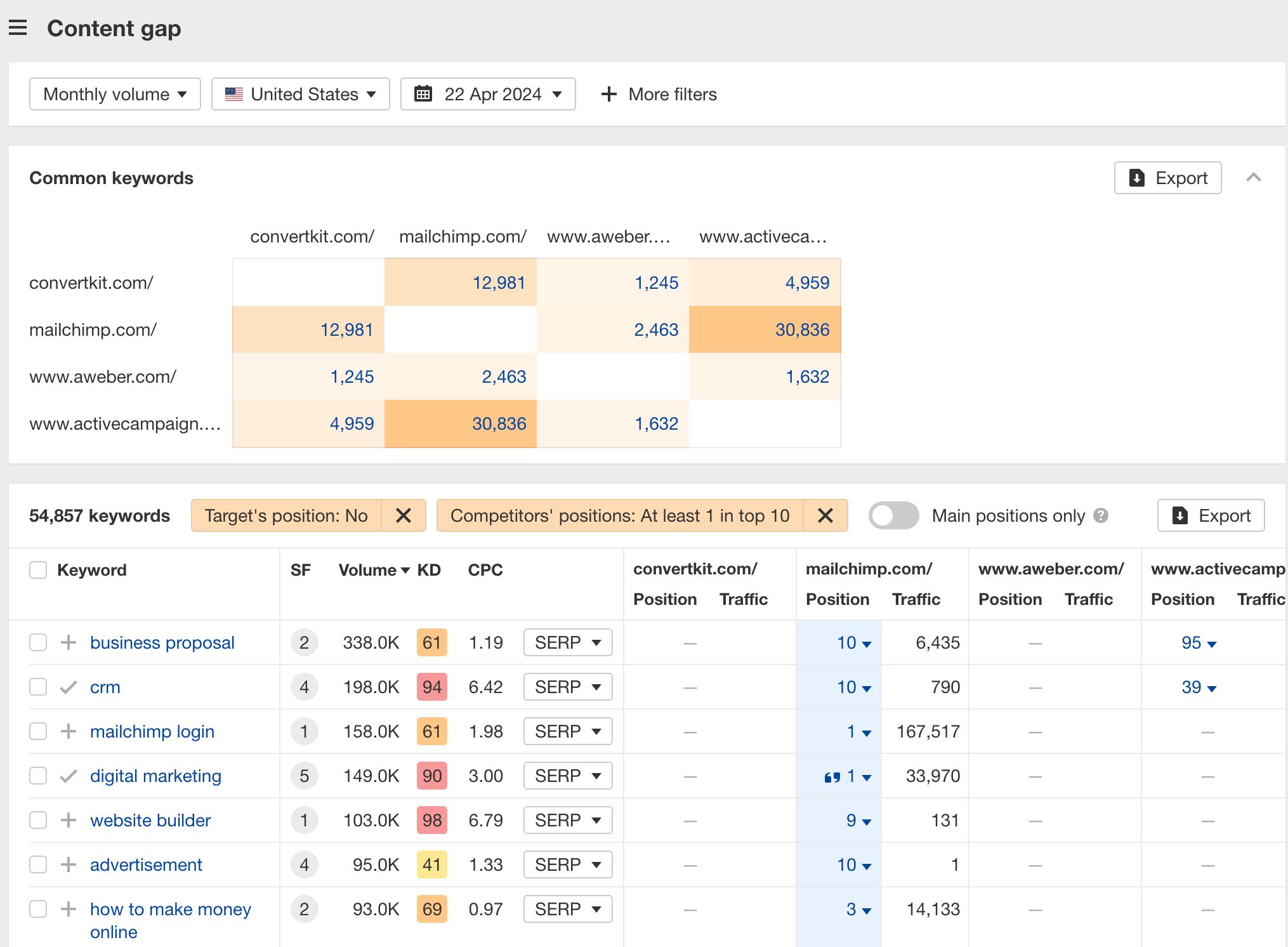

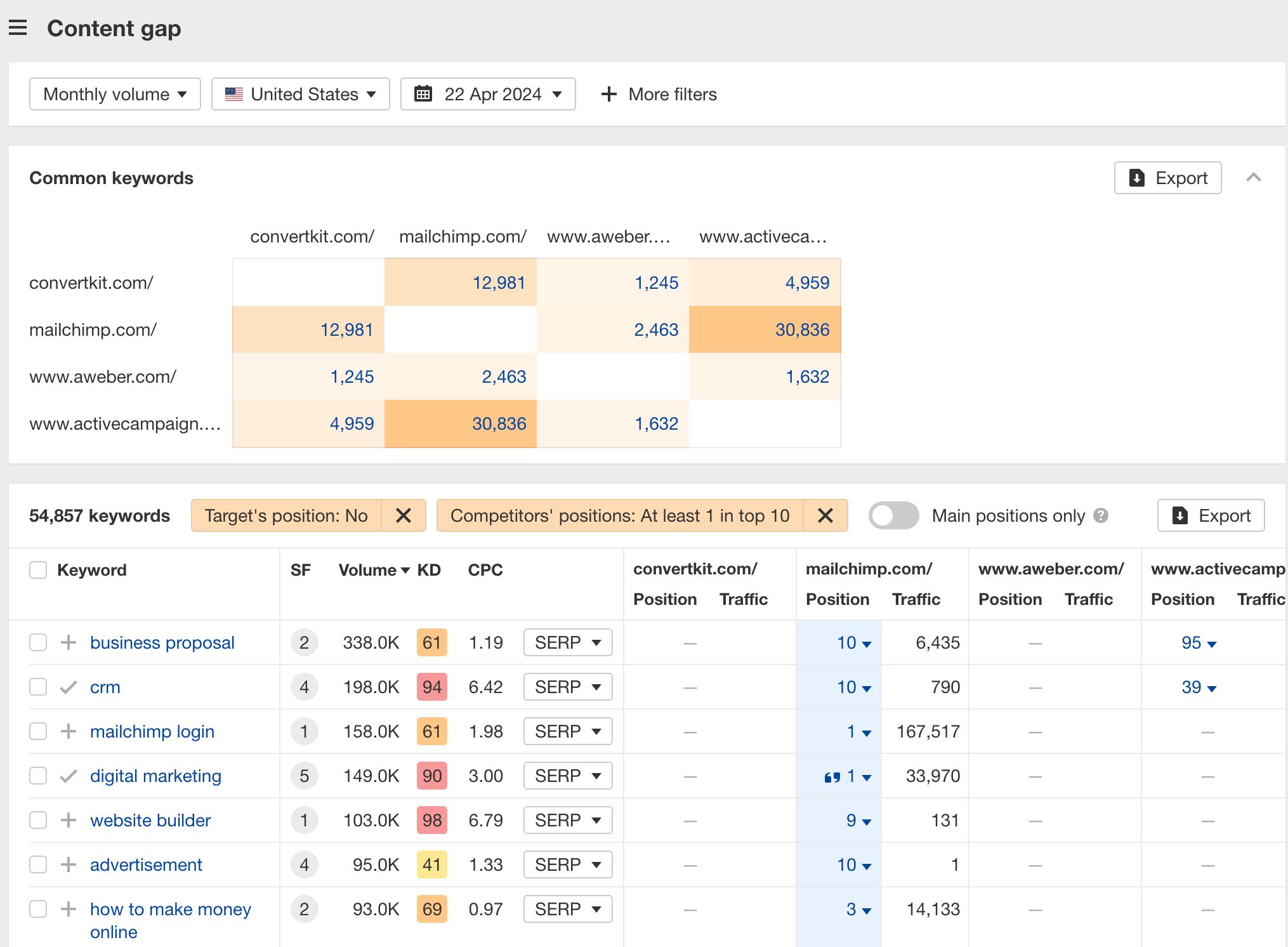

How to find keywords multiple competitors rank for, but you don’t

- Go to Competitive Analysis

- Enter your domain in the This target doesn’t rank for section

- Enter the domains of multiple competitors in the But these competitors do section

You’ll see all the keywords that at least one of these competitors ranks for, but you don’t.

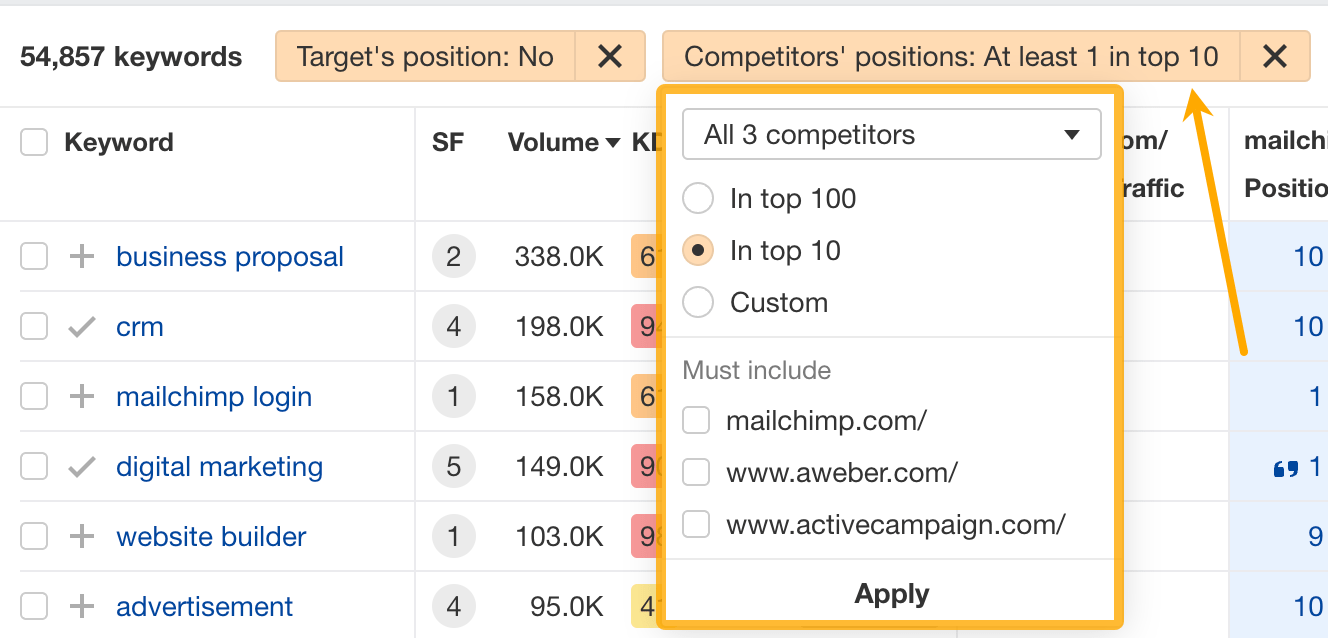

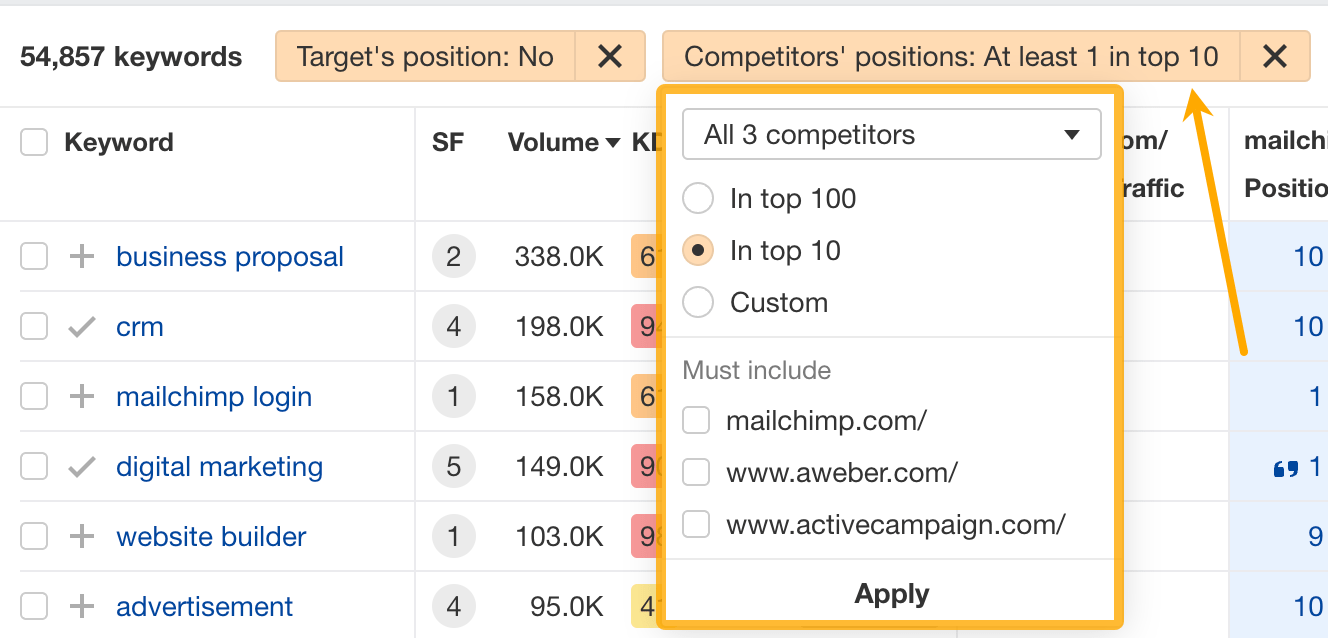

You can also narrow the list down to keywords that all competitors rank for. Click on the Competitors’ positions filter and choose All 3 competitors:

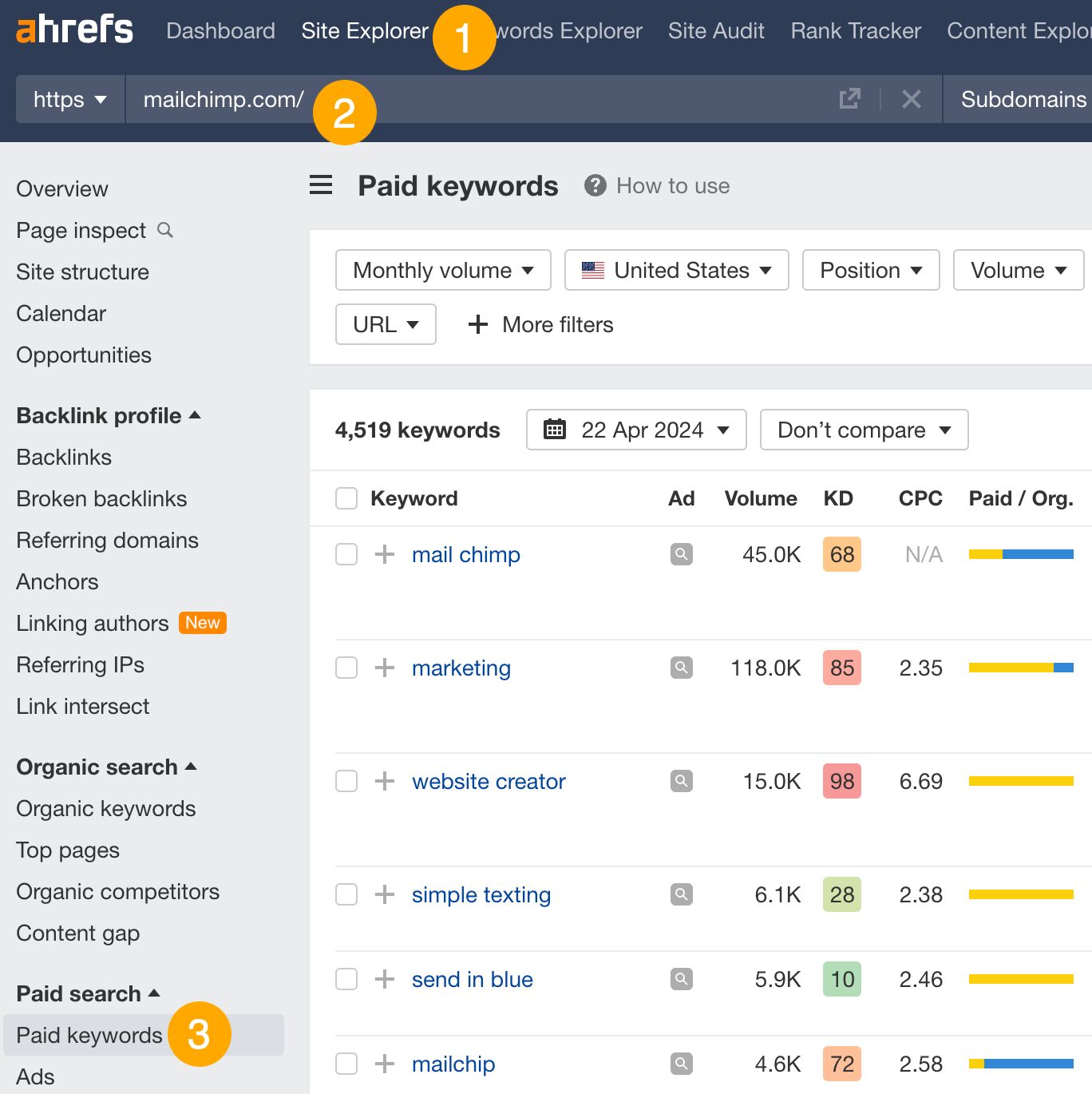

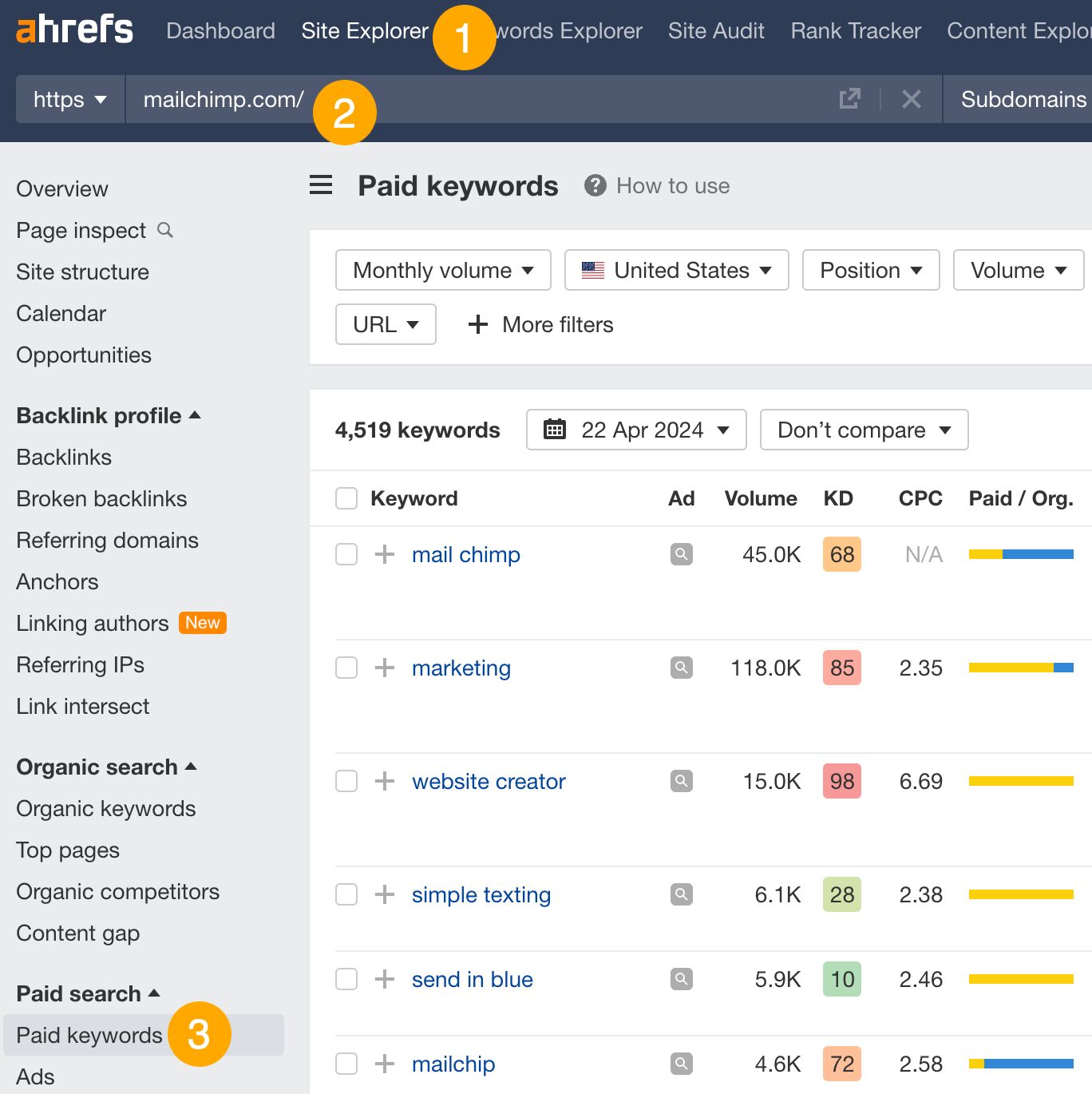
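The two multi-competitor views differ only in how the competitors’ keyword lists are combined before your own keywords are subtracted. “At least one competitor” is a set union, and “all competitors” is a set intersection. The minimal sketch below assumes plain keyword lists per domain. The domains and keywords are placeholders.

```python
def multi_competitor_gap(your_keywords, competitors, require_all=False):
    """Keywords competitors rank for that you don't.

    competitors: dict of competitor domain -> iterable of keywords.
    require_all=False keeps keywords at least one competitor ranks for (union);
    require_all=True keeps only keywords every competitor ranks for (intersection).
    """
    yours = {k.lower() for k in your_keywords}
    keyword_sets = [{k.lower() for k in kws} for kws in competitors.values()]
    if not keyword_sets:
        return []
    combine = set.intersection if require_all else set.union
    return sorted(combine(*keyword_sets) - yours)


yours = ["email marketing"]
competitors = {
    "competitor-a.example": ["email marketing", "newsletter ideas", "drip campaign"],
    "competitor-b.example": ["newsletter ideas", "landing page builder"],
    "competitor-c.example": ["newsletter ideas", "drip campaign"],
}
print(multi_competitor_gap(yours, competitors))
print(multi_competitor_gap(yours, competitors, require_all=True))
```

Here the union view returns three keywords. Only “newsletter ideas” survives when every competitor must rank for it.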

How to find your competitors’ paid keywords

- Go to Ahrefs’ Site Explorer

- Enter your competitor’s domain

- Go to the Paid keywords report

This report shows you the keywords your competitors are targeting via Google Ads.

Since your competitor is paying for traffic from these keywords, it may indicate that they’re profitable for them—and could be for you, too.

How to use competitor keywords

You know what keywords your competitors are ranking for or bidding on. But what do you do with them? There are basically three options.

1. Create pages to target these keywords

You can only rank for keywords if you have content about them. So, the most straightforward thing you can do for competitors’ keywords you want to rank for is to create pages to target them.

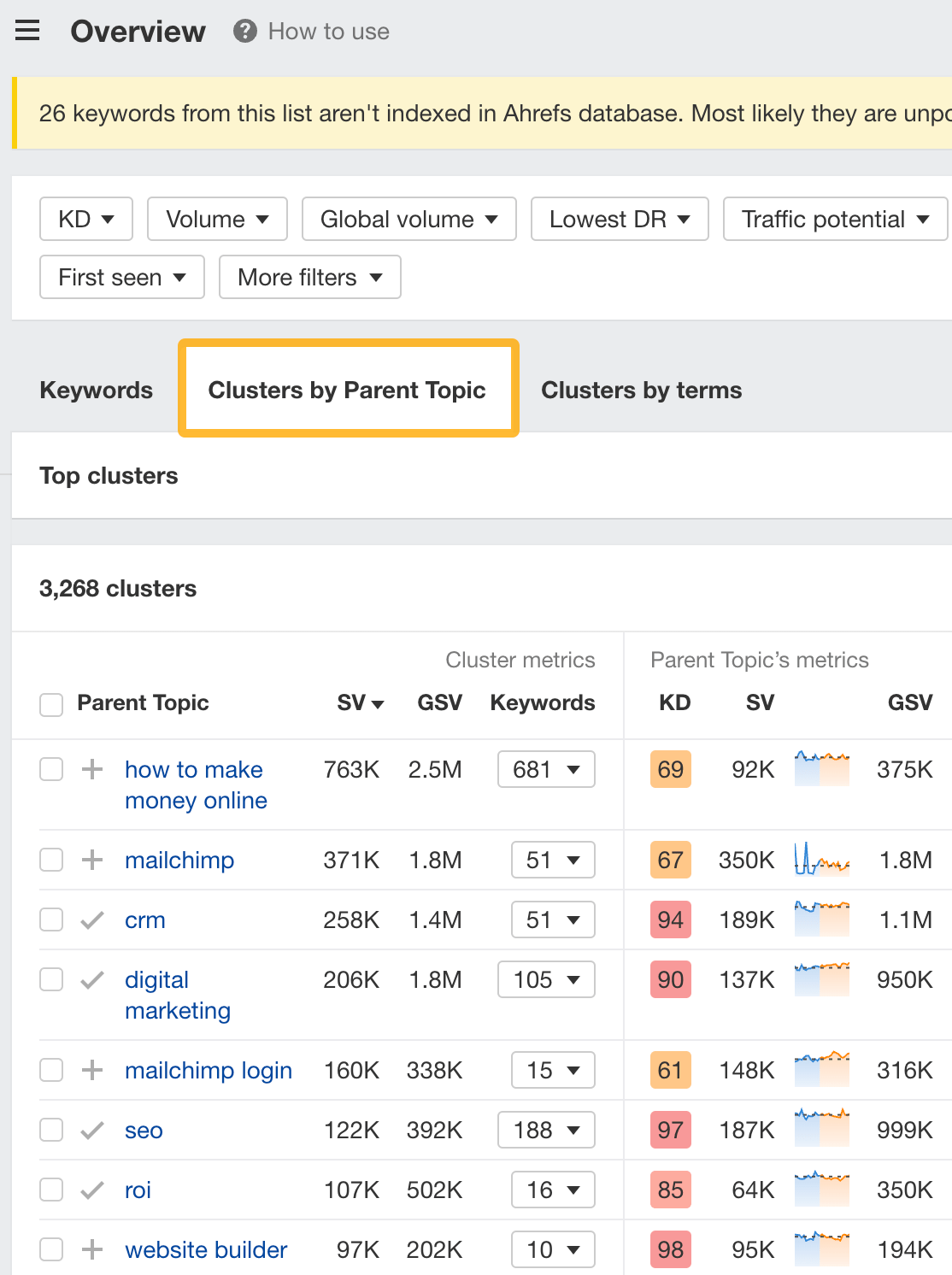

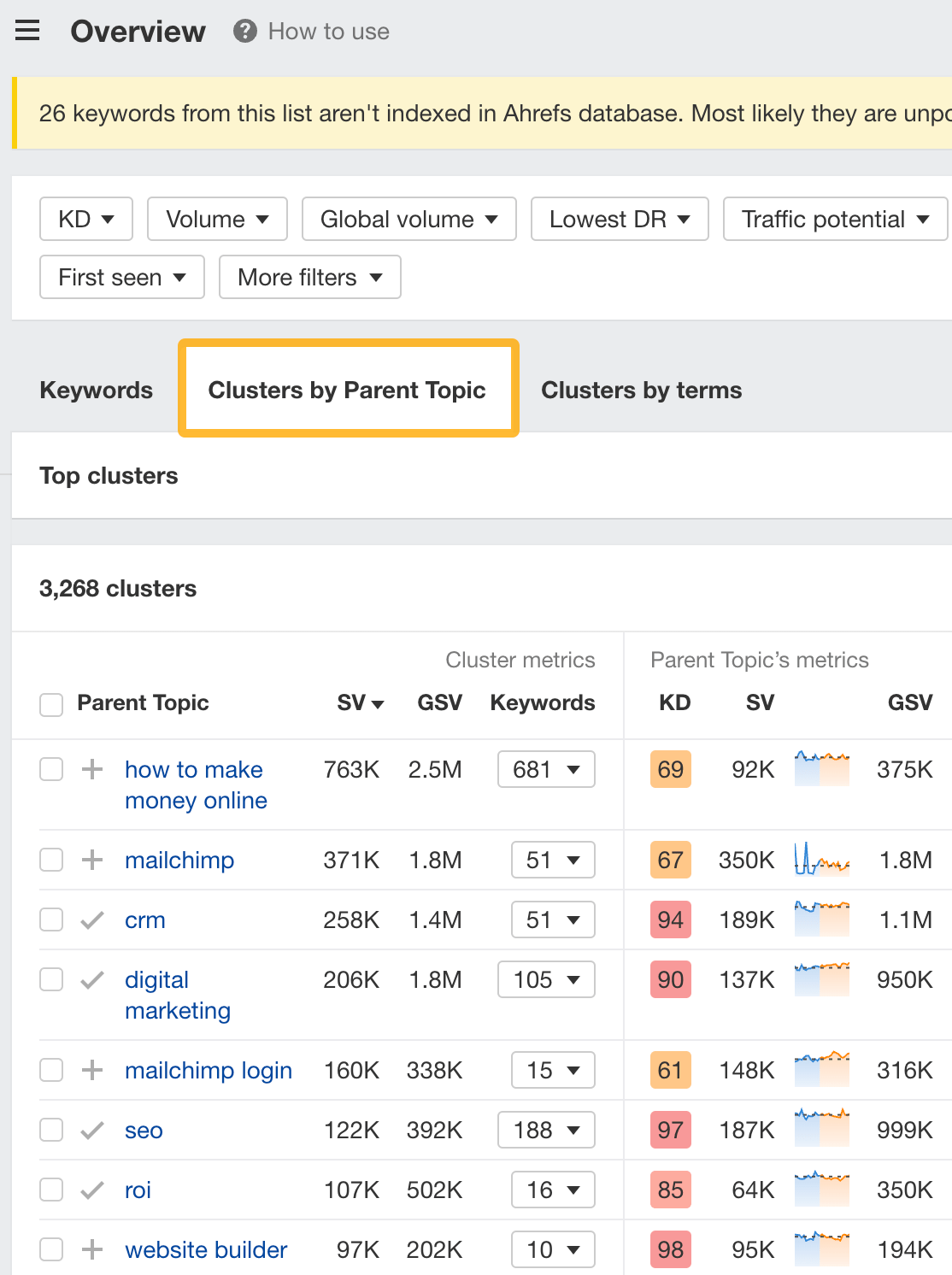

However, before you do this, it’s worth clustering your competitor’s keywords by Parent Topic. This will group keywords that mean the same or similar things so you can target them all with one page.

Here’s how to do that:

- Export your competitor’s keywords, either from the Organic Keywords or Content Gap report

- Paste them into Keywords Explorer

- Click the “Clusters by Parent Topic” tab

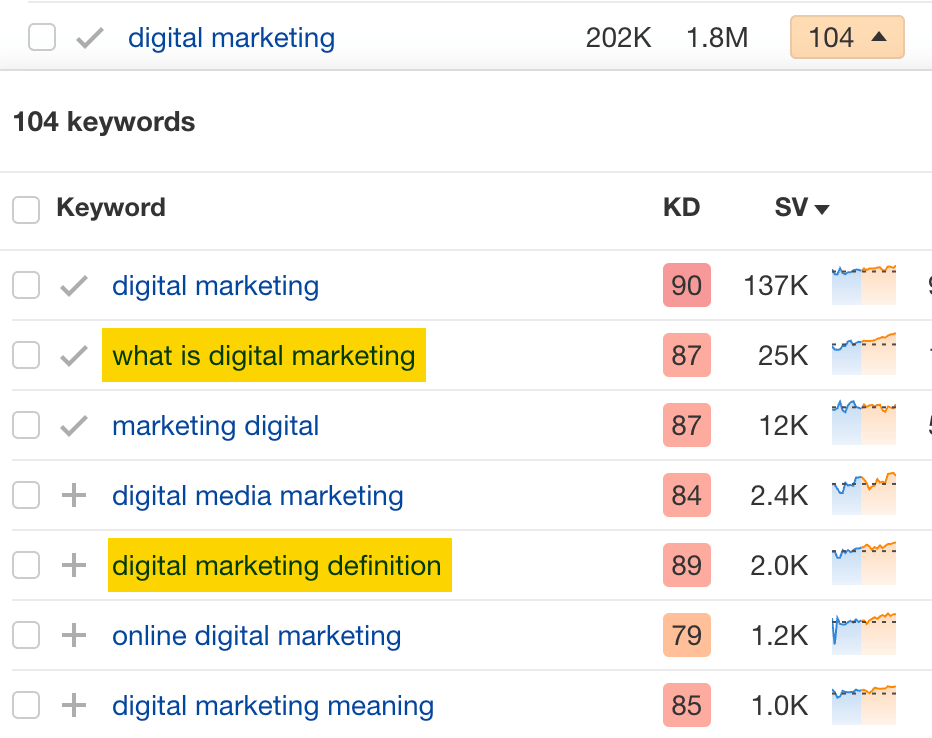

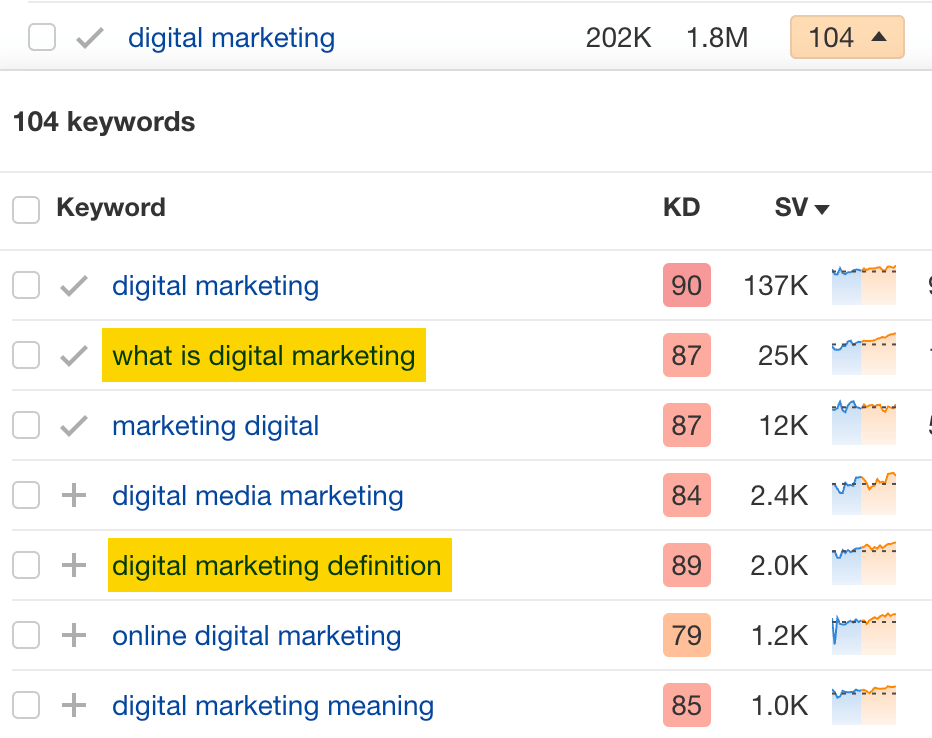

For example, Mailchimp ranks for keywords like “what is digital marketing” and “digital marketing definition.” These and many others get clustered under the Parent Topic of “digital marketing” because people searching for them are all looking for the same thing: a definition of digital marketing. You only need to create one page to potentially rank for all these keywords.
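Keywords Explorer assigns the Parent Topic for you. Once that column is in an export, planning one page per cluster is a simple grouping step. The minimal sketch below assumes rows of (keyword, Parent Topic, search volume). The keyword-to-topic pairings follow the examples in this article, but the volumes are made-up placeholders.

```python
from collections import defaultdict


def cluster_by_parent_topic(rows):
    """Group (keyword, parent_topic, volume) rows by Parent Topic.

    Returns (parent_topic, [(keyword, volume), ...]) pairs, with the
    clusters that have the most combined search volume first.
    """
    clusters = defaultdict(list)
    for keyword, parent_topic, volume in rows:
        clusters[parent_topic].append((keyword, volume))
    return sorted(
        clusters.items(),
        key=lambda item: sum(volume for _, volume in item[1]),
        reverse=True,
    )


rows = [
    ("what is digital marketing", "digital marketing", 3000),
    ("digital marketing definition", "digital marketing", 800),
    ("press release example", "press release template", 1000),
    ("press release format", "press release template", 600),
]
for topic, keywords in cluster_by_parent_topic(rows):
    print(topic, [kw for kw, _ in keywords])
```

Each printed cluster is a candidate for a single page.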

2. Optimize existing content by filling subtopics

You don’t always need to create new content to rank for competitors’ keywords. Sometimes, you can optimize the content you already have to rank for them.

How do you know which keywords you can do this for? Try this:

- Export your competitor’s keywords

- Paste them into Keywords Explorer

- Click the “Clusters by Parent Topic” tab

- Look for Parent Topics you already have content about

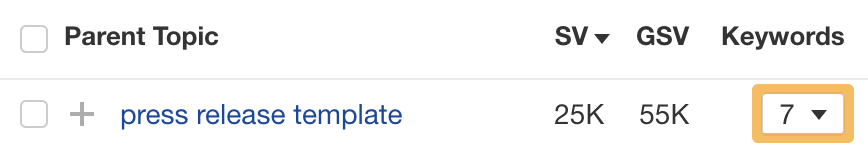

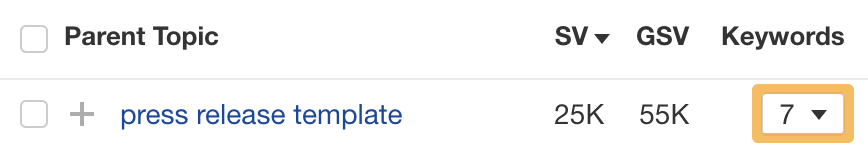

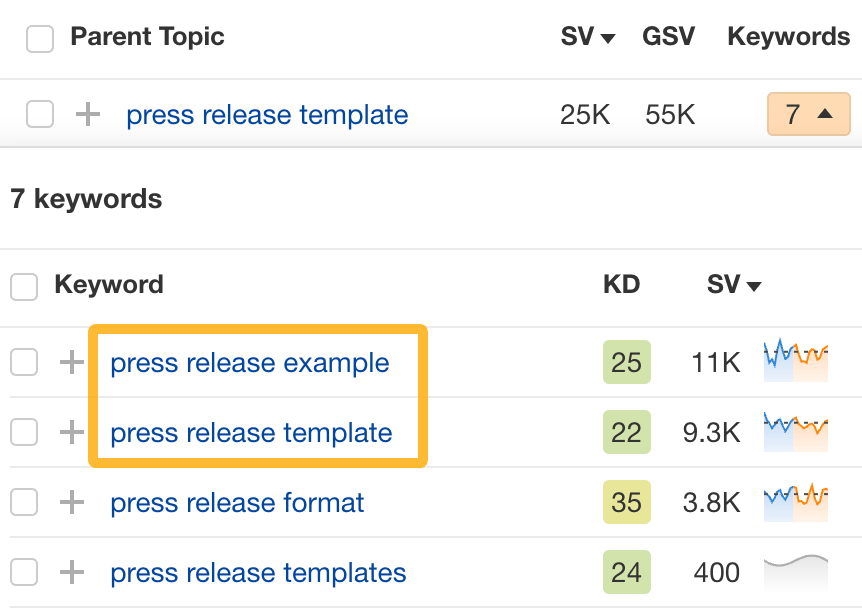

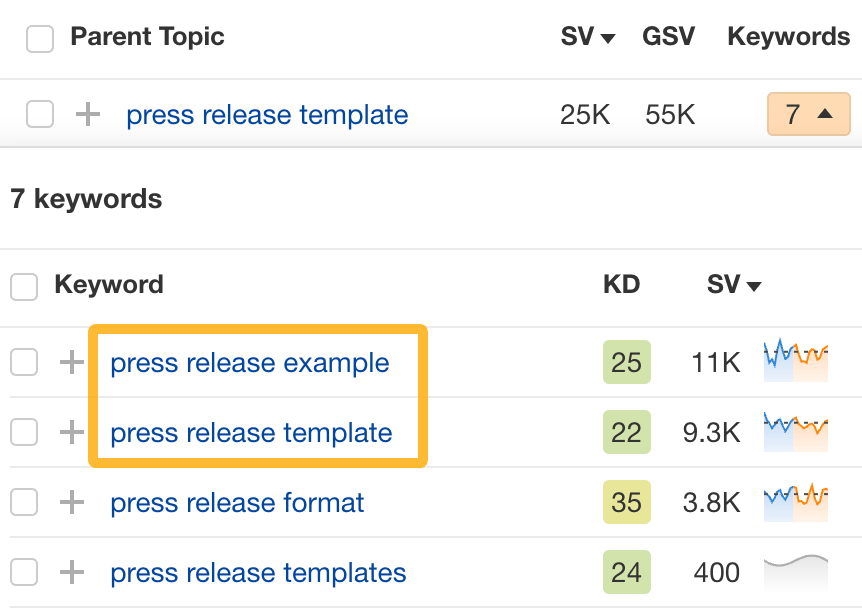

For example, if we analyze our competitor, we can see that seven keywords they rank for fall under the Parent Topic of “press release template.”

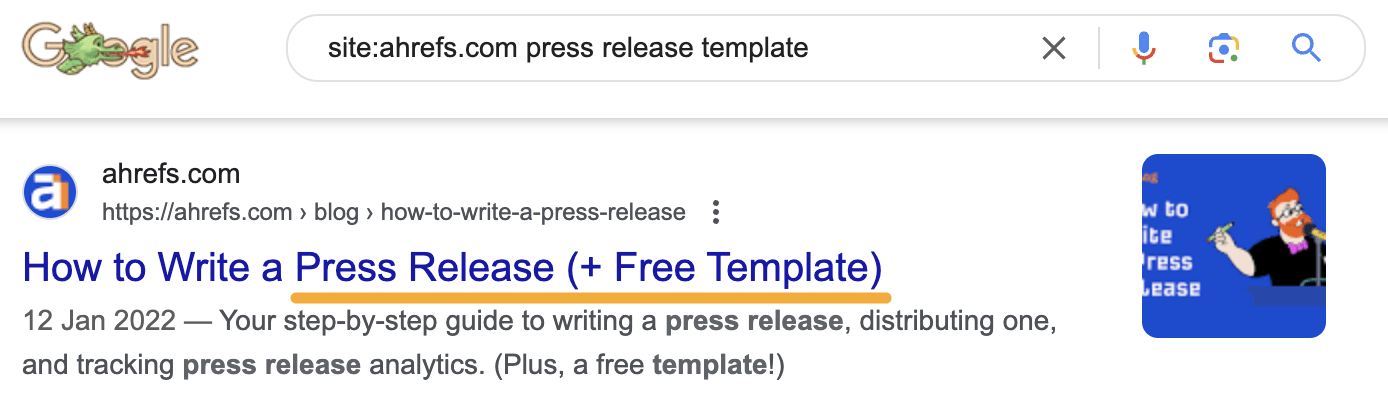

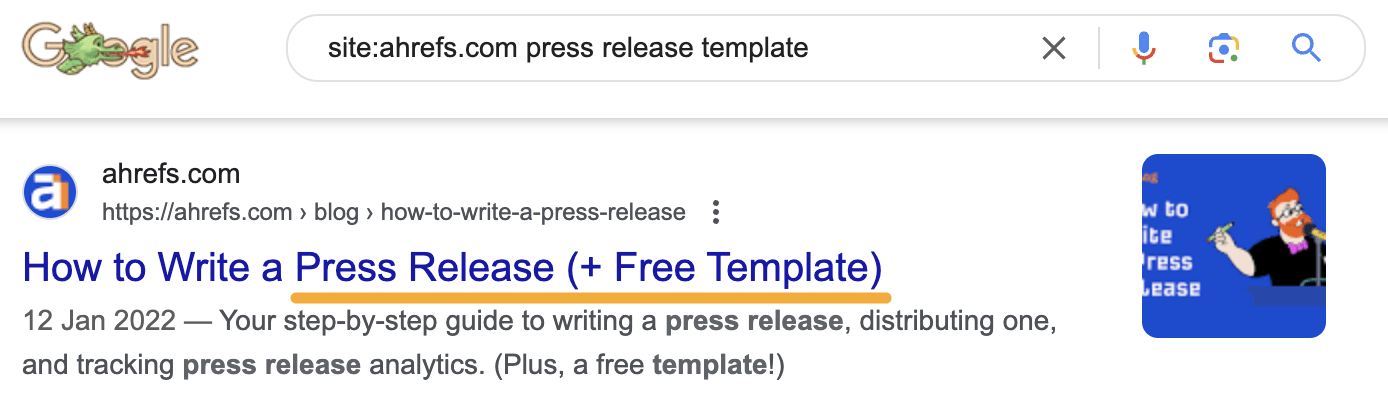

If we search our site, we see that we already have a page about this topic.

If we click the caret and check the keywords in the cluster, we see keywords like “press release example” and “press release format.”

To rank for the keywords in the cluster, we can probably optimize the page we already have by adding sections about the subtopics of “press release examples” and “press release format.”
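The “look for Parent Topics you already have content about” step is essentially a match between cluster names and the topics your pages already target. The minimal sketch below assumes you keep a simple list of those topics, which is a hypothetical input. Exact name matching is a simplification, and you would still read the page to decide which subtopics it is missing.

```python
def optimization_candidates(clusters, covered_topics):
    """Return clusters whose Parent Topic you already have a page about.

    clusters: dict of parent topic -> list of keywords in that cluster.
    covered_topics: topics your existing pages target (hypothetical list).
    The returned keywords are candidate subtopics to add to those pages.
    """
    covered = {t.lower() for t in covered_topics}
    return {
        topic: sorted(keywords)
        for topic, keywords in clusters.items()
        if topic.lower() in covered
    }


clusters = {
    "press release template": ["press release format", "press release example"],
    "digital marketing": ["what is digital marketing"],
}
print(optimization_candidates(clusters, ["press release template", "email marketing"]))
```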

3. Target these keywords with Google Ads

Paid keywords are the simplest—look through the report and see if there are any relevant keywords you might want to target, too.

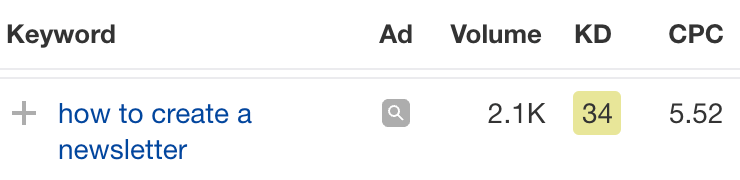

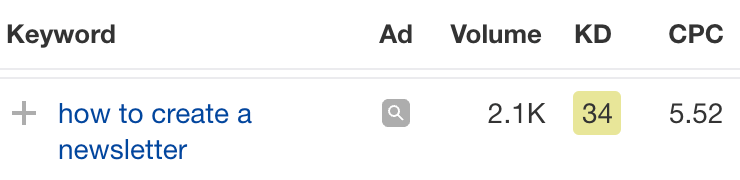

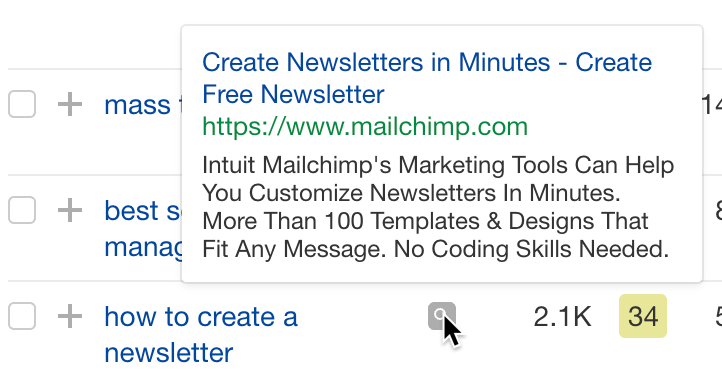

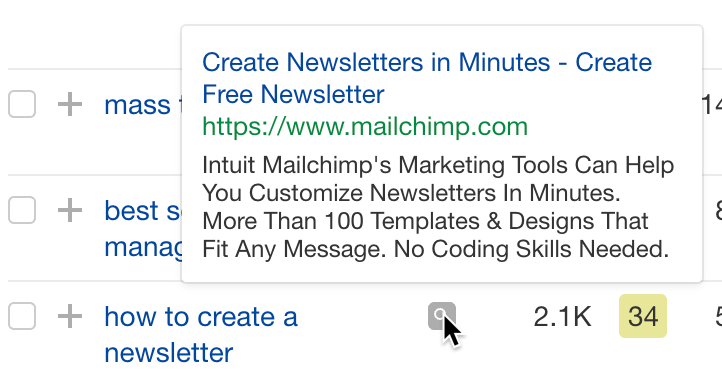

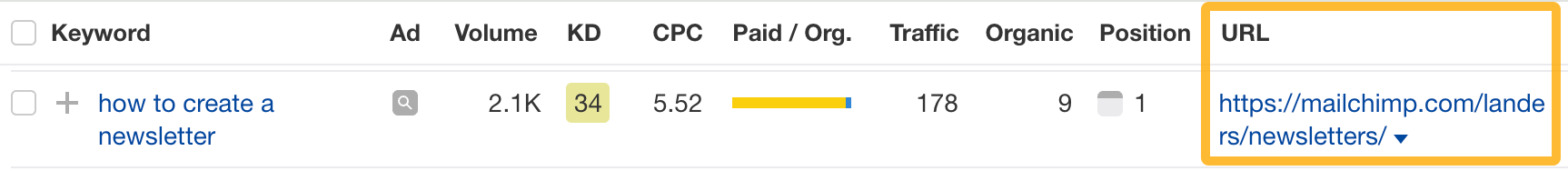

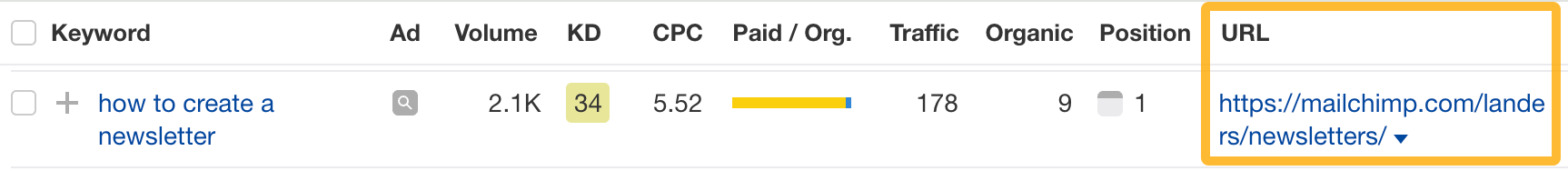

For example, Mailchimp is bidding on the keyword “how to create a newsletter.”

If you’re ConvertKit, you may also want to target this keyword since it’s relevant.

If you decide to target the same keyword via Google Ads, you can hover over the magnifying glass to see the ads your competitor is using.

You can also see the landing page your competitor directs ad traffic to under the URL column.



Google Confirms Links Are Not That Important

Google’s Gary Illyes confirmed at a recent search marketing conference that Google needs very few links, adding to the growing body of evidence that publishers need to focus on other factors. Gary later tweeted confirmation that he did indeed say those words.

Background Of Links For Ranking

In the late 1990s, links were discovered to be a good signal that search engines could use to validate how authoritative a website is. Soon after, Google discovered that anchor text could provide semantic signals about what a webpage was about.

One of the most important research papers was Authoritative Sources in a Hyperlinked Environment by Jon M. Kleinberg, published around 1998 (link to research paper at the end of the article). The paper’s starting point was that there were too many web pages and no objective way to filter search results for quality in order to rank web pages for a subjective idea of relevance.

The author of the research paper discovered that links could be used as an objective filter for authoritativeness.

Kleinberg wrote:

“To provide effective search methods under these conditions, one needs a way to filter, from among a huge collection of relevant pages, a small set of the most ‘authoritative’ or ‘definitive’ ones.”
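Kleinberg’s paper turns this idea into the HITS algorithm. A good “hub” links to many good “authorities,” and a good authority is linked from many good hubs, so the two scores are computed together by repeated updates. Below is a minimal sketch of that core iteration. The fixed iteration count and the toy graph are assumptions for illustration. The paper’s step of first building a query-focused subgraph is omitted.

```python
def hits(graph, iterations=50):
    """Hub and authority scores in the style of Kleinberg's HITS.

    graph: dict mapping each page to the list of pages it links to.
    Returns (hubs, authorities), each scaled to unit Euclidean length.
    """
    pages = set(graph) | {t for targets in graph.values() for t in targets}

    def normalize(scores):
        # Rescale so squared scores sum to 1 (guarding against all-zero input)
        norm = sum(v * v for v in scores.values()) ** 0.5 or 1.0
        return {p: v / norm for p, v in scores.items()}

    hubs = {p: 1.0 for p in pages}
    auths = {}
    for _ in range(iterations):
        # Authority update: sum of hub scores of the pages linking in
        auths = {p: 0.0 for p in pages}
        for source, targets in graph.items():
            for target in targets:
                auths[target] += hubs[source]
        auths = normalize(auths)
        # Hub update: sum of authority scores of the pages linked to
        hubs = normalize({p: sum(auths[t] for t in graph.get(p, [])) for p in pages})
    return hubs, auths


graph = {
    "hub-1": ["page-a", "page-b"],
    "hub-2": ["page-a", "page-b"],
    "hub-3": ["page-a"],
}
hubs, auths = hits(graph)
for page in sorted(auths, key=auths.get, reverse=True):
    print(page, round(auths[page], 3))
```

page-a is linked from all three hubs, so it ends up with the highest authority score. hub-1 and hub-2 link to both strong pages, so they outrank hub-3 as hubs.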

This is the most influential research paper on links because it kick-started more research into using links not only as an authority metric but also as a subjective metric for relevance.

Objective is something factual. Subjective is something that’s closer to an opinion. The founders of Google discovered how to use the subjective opinions of the Internet as a relevance metric for what to rank in the search results.

What Larry Page and Sergey Brin discovered and shared in their research paper (The Anatomy of a Large-Scale Hypertextual Web Search Engine – link at end of this article) was that it was possible to harness the power of anchor text to determine the subjective opinion of relevance from actual humans. It was essentially crowdsourcing the opinions of millions of websites, expressed through the link structure between webpages.
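The anchor-text idea can be shown with a toy index. The words in a link are credited to the page the link points to, not the page it appears on, so a page can be matched on words other people use to describe it. The minimal sketch below assumes a list of (linking page, target page, anchor text) triples and ranks targets by how many distinct pages use a word. The URLs are placeholders, and Google’s actual system combined anchor text with PageRank and many other signals.

```python
import re
from collections import defaultdict


def build_anchor_index(links):
    """Index anchor-text words under the page each link points to.

    links: iterable of (source_url, target_url, anchor_text) triples.
    index[word][target] is the set of distinct pages using that word
    in links to that target.
    """
    index = defaultdict(lambda: defaultdict(set))
    for source, target, anchor in links:
        for word in re.findall(r"[a-z0-9]+", anchor.lower()):
            index[word][target].add(source)
    return index


def search(index, word):
    """Rank target pages by how many distinct pages link to them with `word`."""
    targets = index.get(word.lower(), {})
    return sorted(targets, key=lambda t: len(targets[t]), reverse=True)


links = [
    ("blog-a.example/post", "shop.example/boots", "hiking boots"),
    ("blog-b.example/review", "shop.example/boots", "great hiking boots"),
    ("forum.example/thread", "outlet.example/boots", "cheap boots"),
]
index = build_anchor_index(links)
print(search(index, "boots"))
```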

What Did Gary Illyes Say About Links In 2024?

At a recent search conference in Bulgaria, Google’s Gary Illyes made a comment about how Google doesn’t really need that many links and how Google has made links less important.

Patrick Stox tweeted about what he heard at the search conference:

“‘We need very few links to rank pages… Over the years we’ve made links less important.’ @methode #serpconf2024”

Google’s Gary Illyes tweeted a confirmation of that statement:

“I shouldn’t have said that… I definitely shouldn’t have said that”

Why Links Matter Less

When Google first used links for ranking purposes, anchor text was absolutely non-spammy, which is why it was so useful. Hyperlinks were primarily used as a way to send traffic from one website to another.

But by 2004 or 2005, Google was using statistical analysis to detect manipulated links. Around 2004, “powered-by” links in website footers stopped passing anchor text value. By 2006, links close to the word “advertising” stopped passing link value, and links from directories stopped passing ranking value. Then in 2012, Google deployed a massive link algorithm called Penguin that destroyed the rankings of likely millions of websites, many of which were using guest posting.

The link signal eventually became so degraded that in 2019 Google decided to treat nofollow as a hint and selectively use nofollow links for ranking purposes. Google’s Gary Illyes confirmed that the change to nofollow was made because of the state of the link signal.

Google Explicitly Confirms That Links Matter Less

In 2023, Google’s Gary Illyes said at PubCon Austin that links were not even in the top three ranking factors. Then in March 2024, coinciding with the March 2024 Core Algorithm Update, Google updated its spam policies documentation to downplay the importance of links for ranking purposes.

The documentation previously said:

“Google uses links as an important factor in determining the relevancy of web pages.”

The updated documentation removed the word “important.”

Links are now listed as just another factor:

“Google uses links as a factor in determining the relevancy of web pages.”

At the beginning of April, Google’s John Mueller advised that there are more useful SEO activities to engage in than links.

Mueller explained:

“There are more important things for websites nowadays, and over-focusing on links will often result in you wasting your time doing things that don’t make your website better overall”

Finally, Gary Illyes explicitly said that Google needs very few links to rank webpages and confirmed it.

I shouldn’t have said that… I definitely shouldn’t have said that

— Gary 鯨理/경리 Illyes (so official, trust me) (@methode) April 19, 2024

Why Google Doesn’t Need Links

The reason Google doesn’t need many links is likely the extent of the AI and natural language understanding that Google uses in its algorithms. Google must be highly confident in its algorithm to be able to say explicitly that it doesn’t need many links.

Back when Google implemented nofollow into the algorithm, many link builders who sold comment spam links continued to claim, falsely, that comment spam still worked. As someone who started link building at the very beginning of modern SEO (I was the moderator of the link building forum at the #1 SEO forum of that time), I can say with confidence that links stopped playing much of a role in rankings several years ago, which is why I stopped link building about five or six years ago.

Read the research papers

Authoritative Sources in a Hyperlinked Environment – Jon M. Kleinberg (PDF)

The Anatomy of a Large-Scale Hypertextual Web Search Engine – Sergey Brin and Lawrence Page

Featured Image by Shutterstock/RYO Alexandre
