SEO

How Language Model For Dialogue Applications Works

Google creating a language model isn’t something new; in fact, Google LaMDA joins the likes of BERT and MUM as a way for machines to better understand user intent.

Google has researched language-based models for several years with the hope of training a model that could essentially hold an insightful and logical conversation on any topic.

So far, Google LaMDA appears to be the closest to reaching this milestone.

What Is Google LaMDA?

LaMDA, which stands for Language Model for Dialogue Applications, was created to enable software to engage in more fluid and natural conversation.

LaMDA is based on the same transformer architecture as other language models such as BERT and GPT-3.

However, due to its training, LaMDA can understand nuanced questions and conversations covering several different topics.

With other models, because of the open-ended nature of conversations, you could end up speaking about something completely different, despite initially focusing on a single topic.

This behavior can easily confuse most conversational models and chatbots.

At Google I/O 2021, we saw that LaMDA was built to overcome these issues.

The demonstration showed how the model could naturally carry on a conversation about a randomly chosen topic.

Despite the stream of loosely associated questions, the conversation remained on track, which was amazing to see.

How Does LaMDA work?

LaMDA was built on Transformer, a neural network architecture for natural language understanding that Google Research invented and open-sourced in 2017.

The model is trained to find patterns in sentences, correlations between the different words used in those sentences, and even predict the word that is likely to come next.

It does this by studying datasets consisting of dialogue rather than just individual words.
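To make "predict the word that is likely to come next" concrete, here is a deliberately tiny sketch. It is not how LaMDA works internally (LaMDA is a large Transformer, not a word-count table); it simply counts which word follows which in a small, made-up dialogue corpus and predicts the most frequent follower. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    for line in corpus:
        words = line.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Hypothetical toy dialogue corpus, invented for illustration.
corpus = [
    "how are you today",
    "how are you doing",
    "how are things at work",
]
model = train_bigrams(corpus)
print(predict_next(model, "are"))  # "you" follows "are" twice, "things" only once
```

A real language model learns these patterns from context far longer than a single previous word, but the goal of scoring likely continuations is the same.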

While a conversational AI system is similar to chatbot software, there are some key differences between the two.

For example, chatbots are trained on limited, specific datasets and can only hold a limited conversation based on the data and the exact questions they are trained on.

On the other hand, because LaMDA is trained on multiple different datasets, it can have open-ended conversations.

During the training process, it picks up on the nuances of open-ended dialogue and adapts.

It can answer questions on many different topics, depending on the flow of the conversation.

Therefore, it enables conversations that are closer to human interaction than chatbots can typically provide.

How Is LaMDA Trained?

Google explained that LaMDA has a two-stage training process: pre-training and fine-tuning.

In total, the largest model has 137 billion parameters and is pre-trained on 1.56 trillion words.

Pre-training

For the pre-training stage, the team at Google created a dataset of 1.56T words from public dialogue data and other public web documents.

This dataset is then tokenized (split into smaller units, such as whole words and word fragments, called tokens) into 2.81T tokens, on which the model is initially trained.

During pre-training, the model uses general and scalable parallelization to learn to predict the next part of the conversation based on the previous tokens it has seen.
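A quick back-of-the-envelope check with the two dataset figures above shows the pre-training data averages about 1.8 tokens per word, which is what you would expect when many words are split into more than one token.

```python
# Reported size of LaMDA's pre-training dataset.
words = 1.56e12   # 1.56 trillion words
tokens = 2.81e12  # 2.81 trillion tokens after tokenization

tokens_per_word = tokens / words
print(f"{tokens_per_word:.2f} tokens per word")  # prints "1.80 tokens per word"
```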

Fine-tuning

LaMDA is trained to perform generation and classification tasks during the fine-tuning phase.

Essentially, the LaMDA generator, which predicts the next part of the dialogue, generates several relevant responses based on the back-and-forth conversation.

The LaMDA classifiers will then predict safety and quality scores for each possible response.

Any response with a low safety score is filtered out before the top-scored response is selected to continue the conversation.

The scores cover safety, sensibleness, specificity, and interestingness.

The goal is to ensure the most relevant, high-quality, and ultimately safest response is provided.
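The generate, filter, then rank flow described above can be sketched in a few lines. This is a simplified illustration, not Google's code: the candidate texts, the 0.0 to 1.0 score scale, the 0.8 safety threshold, and the single combined quality score are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Candidate:
    text: str
    safety: float   # hypothetical safety-classifier score, 0.0 to 1.0
    quality: float  # hypothetical combined quality score, 0.0 to 1.0

def pick_response(candidates: List[Candidate], safety_threshold: float = 0.8) -> Optional[Candidate]:
    """Filter out low-safety candidates, then return the highest-quality one left."""
    safe = [c for c in candidates if c.safety >= safety_threshold]
    if not safe:
        return None  # nothing passed the safety filter
    return max(safe, key=lambda c: c.quality)

# Made-up candidates the generator might have produced for one turn.
candidates = [
    Candidate("Pluto is a dwarf planet in the Kuiper belt.", safety=0.95, quality=0.72),
    Candidate("I don't know.", safety=0.99, quality=0.10),
    Candidate("A catchy but unsafe reply", safety=0.30, quality=0.90),
]

best = pick_response(candidates)
print(best.text if best else "no safe response")
```

Filtering before ranking means an unsafe reply can never win on quality alone, which matches the order described above.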

LaMDA Key Objectives And Metrics

Three main objectives for the model have been defined to guide the model’s training.

These are quality, safety, and groundedness.

Quality

This is based on three human rater dimensions:

- Sensibleness

- Specificity

- Interestingness

The quality score is used to ensure a response makes sense in the context it is used, that it is specific to the question asked, and is considered insightful enough to create better dialogue.

Safety

To ensure safety, the model follows the standards of responsible AI. A set of safety objectives is used to capture and review the model’s behavior.

This is intended to ensure the output does not include unintended responses and to avoid bias.

Groundedness

Groundedness is defined as the percentage of responses containing claims about the external world that can be supported by authoritative external sources, out of all responses that contain such claims.

This is used to ensure that responses are as “factually accurate as possible, allowing users to judge the validity of a response based on the reliability of its source.”
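In the LaMDA research, groundedness is measured as the share of responses containing claims about the external world that can be supported by authoritative external sources, out of all responses that make such claims. A minimal sketch of that calculation, assuming each response has already been labeled (the `makes_claim` and `supported` labels and the sample data are hypothetical; producing those labels is the hard part):

```python
from typing import Dict, List, Optional

def groundedness(responses: List[Dict[str, bool]]) -> Optional[float]:
    """Percentage of claim-making responses whose claims are supported by a source.

    Responses that make no claim about the external world are excluded from
    the denominator. Returns None if no response makes such a claim.
    """
    with_claims = [r for r in responses if r["makes_claim"]]
    if not with_claims:
        return None
    supported = sum(1 for r in with_claims if r["supported"])
    return 100 * supported / len(with_claims)

# Hypothetical labeled sample: three responses make claims, two are supported.
sample = [
    {"makes_claim": True, "supported": True},
    {"makes_claim": True, "supported": True},
    {"makes_claim": True, "supported": False},
    {"makes_claim": False, "supported": False},  # small talk, no external claim
]
print(f"{groundedness(sample):.1f}%")  # 66.7%
```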

Evaluation

Through an ongoing process of quantifying progress, responses from the pre-trained model, the fine-tuned model, and human raters are reviewed against the aforementioned quality, safety, and groundedness metrics.

So far, they have been able to conclude that:

- Quality metrics improve with the number of parameters.

- Safety improves with fine-tuning.

- Groundedness improves as the model size increases.

Image from Google AI Blog, March 2022

How Will LaMDA Be Used?

LaMDA is still a work in progress with no finalized release date, but it is expected to be used in the future to improve customer experience and enable chatbots to provide more human-like conversation.

In addition, using LaMDA to navigate search within Google’s search engine is a genuine possibility.

LaMDA Implications For SEO

By focusing on language and conversational models, Google offers insight into its vision for the future of search and highlights a shift in how its products are set to develop.

This ultimately means there may well be a shift in search behavior and the way users search for products or information.

Google is constantly working on improving the understanding of users’ search intent to ensure they receive the most useful and relevant results in SERPs.

The LaMDA model will likely be a key tool for understanding the questions searchers may be asking.

This all further highlights the need to ensure content is optimized for humans rather than search engines.

Making sure content is conversational and written with your target audience in mind means that even as Google advances, content can continue to perform well.

It’s also key to regularly refresh evergreen content to ensure it evolves with time and remains relevant.

In a paper titled Rethinking Search: Making Experts out of Dilettantes, research engineers from Google shared how they envisage AI advancements such as LaMDA will further enhance “search as a conversation with experts.”

They shared an example around the search question, “What are the health benefits and risks of red wine?”

Currently, Google displays an answer box with a bulleted list in response to this question.

However, they suggest that in the future, a response may well be a paragraph explaining the benefits and risks of red wine, with links to the source information.

Therefore, ensuring content is backed up by expert sources will be more important than ever should Google LaMDA generate search results in the future.

Overcoming Challenges

As with any AI model, there are challenges to address.

The two main challenges engineers face with Google LaMDA are safety and groundedness.

Safety – Avoiding Bias

Because answers can be pulled from anywhere on the web, there is a possibility that the output will amplify bias, reflecting the notions that are shared online.

It is important that responsibility comes first with Google LaMDA to ensure it is not generating unpredictable or harmful results.

To help overcome this, Google has open-sourced resources that researchers can use to analyze models and the data they are trained on.

This enables diverse groups to participate in creating the datasets used to train the model, help identify existing bias, and minimize the sharing of harmful or misleading information.

Factual Grounding

It isn’t easy to validate the reliability of answers that AI models produce, as sources are collected from all over the web.

To overcome this challenge, the team enables the model to consult with multiple external sources, including information retrieval systems and even a calculator, to provide accurate results.

The Groundedness metric shared earlier also measures how well responses are grounded in known sources. These sources are shared to allow users to validate the results given and to help prevent the spread of misinformation.

What’s Next For Google LaMDA?

Google is clear that there are benefits and risks to open-ended dialogue models such as LaMDA and is committed to improving safety and groundedness to ensure a more reliable and unbiased experience.

Training LaMDA models on different data, including images or videos, is another thing we may see in the future.

This opens up the ability to navigate even more on the web, using conversational prompts.

Google’s CEO Sundar Pichai said of LaMDA, “We believe LaMDA’s conversation capabilities have the potential to make information and computing radically more accessible and easier to use.”

While a rollout date hasn’t yet been confirmed, models such as LaMDA will very likely play a central role in Google’s future.


Featured Image: Andrey Suslov/Shutterstock


How To Write ChatGPT Prompts To Get The Best Results

ChatGPT is a game changer in the field of SEO. This powerful language model can generate human-like content, making it an invaluable tool for SEO professionals.

However, the prompts you provide largely determine the quality of the output.

To unlock the full potential of ChatGPT and create content that resonates with your audience and search engines, writing effective prompts is crucial.

In this comprehensive guide, we’ll explore the art of writing prompts for ChatGPT, covering everything from basic techniques to advanced strategies for layering prompts and generating high-quality, SEO-friendly content.

Writing Prompts For ChatGPT

What Is A ChatGPT Prompt?

A ChatGPT prompt is an instruction or discussion topic a user provides for the ChatGPT AI model to respond to.

The prompt can be a question, statement, or any other stimulus to spark creativity, reflection, or engagement.

Users can use the prompt to generate ideas, share their thoughts, or start a conversation.

ChatGPT prompts are designed to be open-ended and can be customized based on the user’s preferences and interests.

How To Write Prompts For ChatGPT

Start by giving ChatGPT a writing prompt, such as, “Write a short story about a person who discovers they have a superpower.”

ChatGPT will then generate a response based on your prompt. Depending on the prompt’s complexity and the level of detail you requested, the answer may be a few sentences or several paragraphs long.

Use the ChatGPT-generated response as a starting point for your writing. You can take the ideas and concepts presented in the answer and expand upon them, adding your own unique spin to the story.

If you want to generate additional ideas, try asking ChatGPT follow-up questions related to your original prompt.

For example, you could ask, “What challenges might the person face in exploring their newfound superpower?” Or, “How might the person’s relationships with others be affected by their superpower?”

Remember that ChatGPT’s answers are generated by artificial intelligence and may not always be perfect or exactly what you want.

However, they can still be a great source of inspiration and help you start writing.

Must-Have GPT Assistant

I recommend installing the WebBrowser Assistant created by the OpenAI team. This tool allows you to add relevant Bing results to your ChatGPT prompts.

This assistant adds the top web results to your ChatGPT prompts for more accurate and up-to-date conversations.

It is very easy to install in only two clicks: just click Start Chat.

For example, if I ask, “Who is Vincent Terrasi?,” ChatGPT has no answer.

With WebBrowser Assistant, a new prompt is created with the top Bing results, and now ChatGPT knows who Vincent Terrasi is.

Screenshot from ChatGPT, March 2023

You can test other GPT assistants available in the GPTs search engine if you want to use Google results.

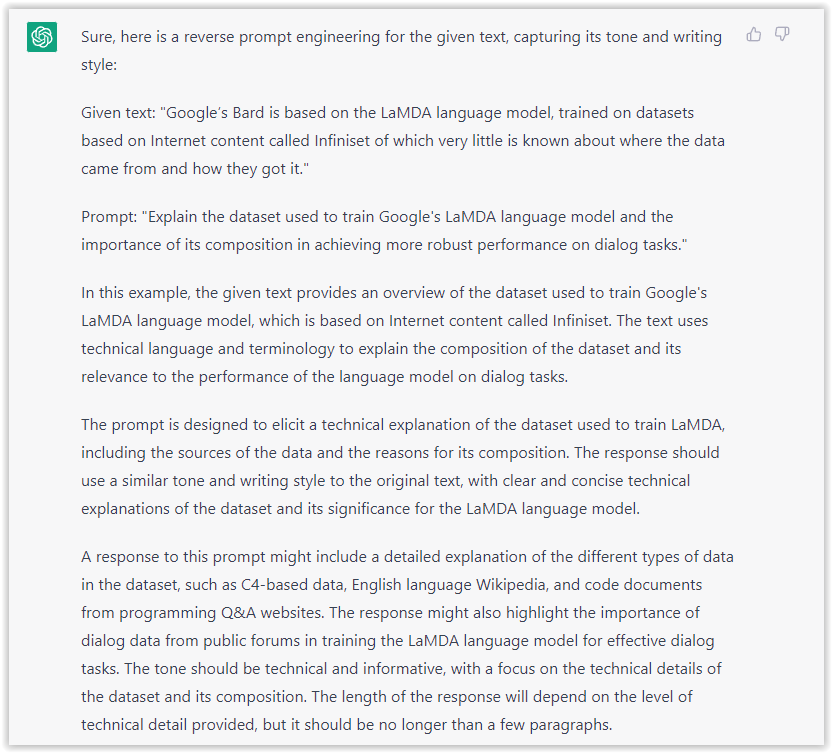

Master Reverse Prompt Engineering

ChatGPT can be an excellent tool for reverse engineering prompts because it generates natural and engaging responses to any given input.

By analyzing the prompts generated by ChatGPT, it is possible to gain insight into how different inputs shape the model’s output.

One key benefit of using ChatGPT to reverse engineer prompts is that you can ask the model to explain why it framed a prompt the way it did.

This makes it easier to trace the reasoning behind each response, even though the model’s internal decision-making is not directly visible.

Once you’ve done this a few times for different types of content, you’ll gain insight into crafting more effective prompts.

Prepare Your ChatGPT For Generating Prompts

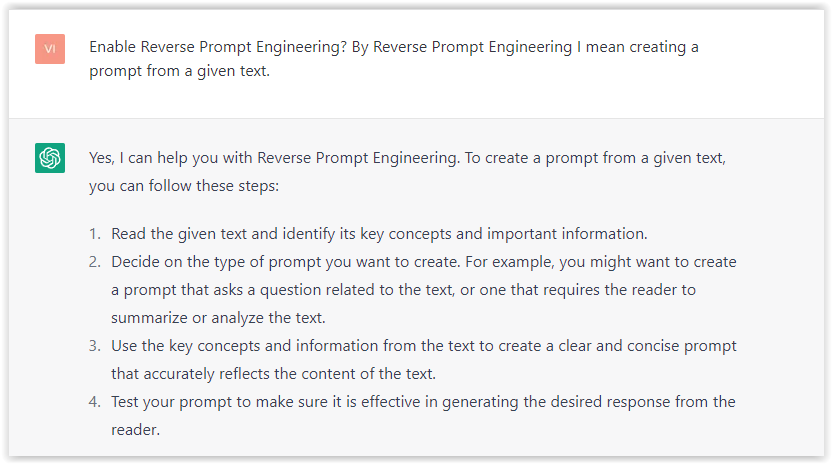

First, activate reverse prompt engineering.

- Type the following prompt: “Enable Reverse Prompt Engineering? By Reverse Prompt Engineering I mean creating a prompt from a given text.”

Screenshot from ChatGPT, March 2023

ChatGPT is now ready to generate your prompt. You can test the product description in a new chatbot session and evaluate the generated prompt.

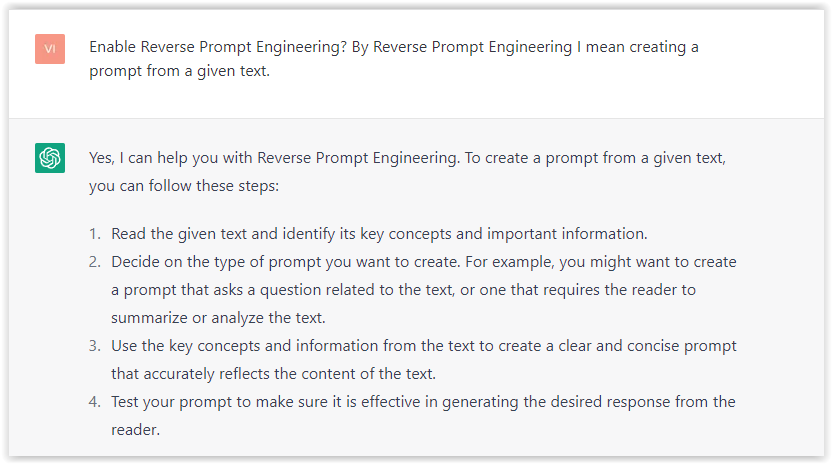

- Type: “Create a very technical reverse prompt engineering template for a product description about iPhone 11.”

Screenshot from ChatGPT, March 2023

The result is amazing. You can test with a full text that you want to reproduce. Here is an example of a prompt for selling a Kindle on Amazon.

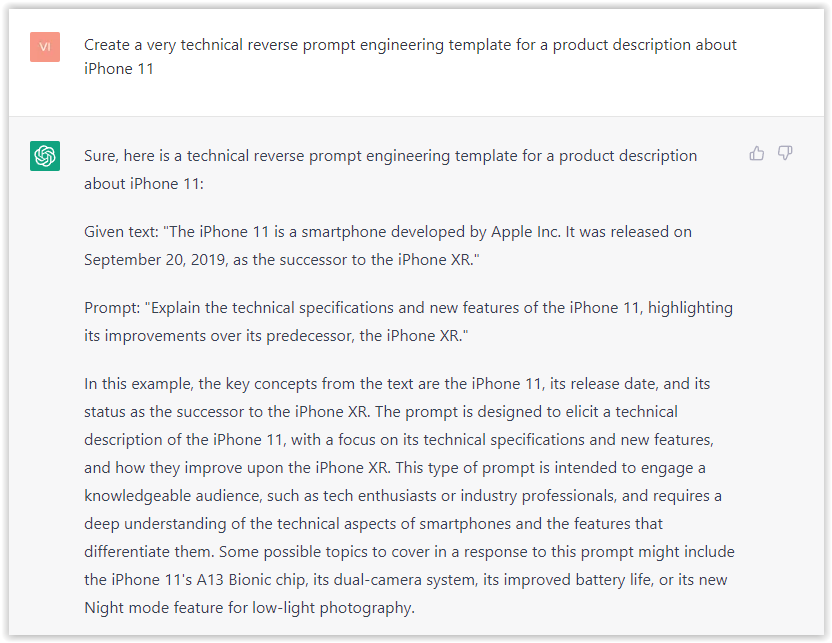

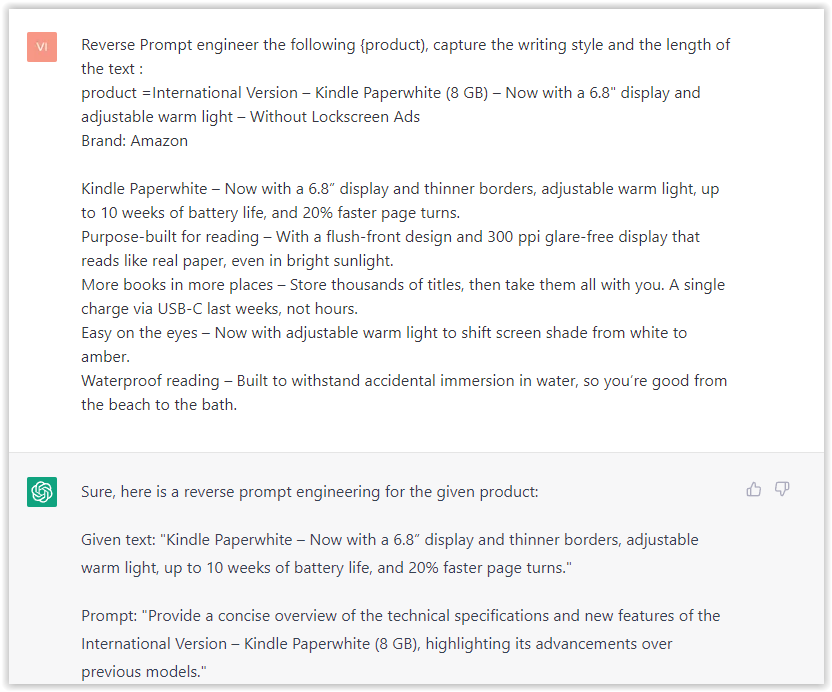

- Type: “Reverse Prompt engineer the following {product}, capture the writing style and the length of the text:

product =”

Screenshot from ChatGPT, March 2023

I tested it on an SEJ blog post. Enjoy the analysis – it is excellent.

- Type: “Reverse Prompt engineer the following {text}, capture the tone and writing style of the {text} to include in the prompt :

text = all text coming from https://www.searchenginejournal.com/google-bard-training-data/478941/”

Screenshot from ChatGPT, March 2023

But be careful not to use ChatGPT to generate your texts. It is just a personal assistant.
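If you reuse the two reverse prompt engineering prompts above often, you can keep them as templates and fill in the text before pasting them into ChatGPT. A minimal Python sketch; the template constants and the `build_prompt` helper are hypothetical names, and the doubled braces keep the literal `{product}` and `{text}` labels in the final prompt.

```python
# The two reverse prompt engineering prompts, stored as reusable templates.
# Doubled braces ({{product}}) survive str.format() as a literal {product}.
PRODUCT_TEMPLATE = (
    "Reverse Prompt engineer the following {{product}}, capture the writing "
    "style and the length of the text:\n\nproduct = {product}"
)
TEXT_TEMPLATE = (
    "Reverse Prompt engineer the following {{text}}, capture the tone and "
    "writing style of the {{text}} to include in the prompt:\n\ntext = {text}"
)

def build_prompt(template: str, **values: str) -> str:
    """Fill a prompt template with the text you want reverse engineered."""
    return template.format(**values)

prompt = build_prompt(PRODUCT_TEMPLATE, product="<paste the product description here>")
print(prompt)
```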

Go Deeper

Prompts and examples for SEO:

- Keyword research and content ideas prompt: “Provide a list of 20 long-tail keyword ideas related to ‘local SEO strategies’ along with brief content topic descriptions for each keyword.”

- Optimizing content for featured snippets prompt: “Write a 40-50 word paragraph optimized for the query ‘what is the featured snippet in Google search’ that could potentially earn the featured snippet.”

- Creating meta descriptions prompt: “Draft a compelling meta description for the following blog post title: ‘10 Technical SEO Factors You Can’t Ignore in 2024’.”
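ChatGPT does not always hit a requested length exactly, so it is worth checking the output against the 40 to 50 word target in the featured snippet prompt before using it. A minimal sketch that counts whitespace-separated words (a simplifying assumption; other tools may count hyphenated words or numbers differently):

```python
def word_count(text: str) -> int:
    """Count words as whitespace-separated chunks."""
    return len(text.split())

def fits_snippet_length(text: str, low: int = 40, high: int = 50) -> bool:
    """True if the paragraph is within the requested word range (inclusive)."""
    return low <= word_count(text) <= high

draft = "Replace this with the paragraph ChatGPT returned."
print(word_count(draft), fits_snippet_length(draft))  # 7 False
```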

Important Considerations:

- Always Fact-Check: While ChatGPT can be a helpful tool, it’s crucial to remember that it may generate inaccurate or fabricated information. Always verify any facts, statistics, or quotes generated by ChatGPT before incorporating them into your content.

- Maintain Control and Creativity: Use ChatGPT as a tool to assist your writing, not replace it. Don’t rely on it to do your thinking or create content from scratch. Your unique perspective and creativity are essential for producing high-quality, engaging content.

- Iteration is Key: Refine and revise the outputs generated by ChatGPT to ensure they align with your voice, style, and intended message.

Additional Prompts for Rewording and SEO:

- Rewrite this sentence to be more concise and impactful.

- Suggest alternative phrasing for this section to improve clarity.

- Identify opportunities to incorporate relevant internal and external links.

- Analyze the keyword density and suggest improvements for better SEO.
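For the keyword density prompt, it helps to be able to check the number yourself. Keyword density is commonly calculated as the number of times a keyword appears divided by the total number of words, times 100. The sketch below uses that convention and counts case-insensitive whole-phrase matches; other tools may define it differently (for example, by counting every word of a multi-word phrase).

```python
import re

def keyword_density(text: str, keyword: str) -> float:
    """Occurrences of `keyword` per 100 words of `text` (case-insensitive)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    phrase = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    # Slide a window the length of the phrase across the text and count exact matches.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)
    return 100 * hits / len(words)

text = "Local SEO tips for local businesses: local SEO matters."
print(f"{keyword_density(text, 'local SEO'):.1f}%")  # 22.2%
```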

Remember, while ChatGPT can be a valuable tool, it’s essential to use it responsibly and maintain control over your content creation process.

Experiment And Refine Your Prompting Techniques

Writing effective prompts for ChatGPT is an essential skill for any SEO professional who wants to harness the power of AI-generated content.

Hopefully, the insights and examples shared in this article can inspire you and help guide you to crafting stronger prompts that yield high-quality content.

Remember to experiment with layering prompts, iterating on the output, and continually refining your prompting techniques.

This will help you stay ahead of the curve in the ever-changing world of SEO.


Featured Image: Tapati Rinchumrus/Shutterstock


Measuring Content Impact Across The Customer Journey

Understanding the impact of your content at every touchpoint of the customer journey is essential – but that’s easier said than done. From attracting potential leads to nurturing them into loyal customers, there are many touchpoints to look into.

So how do you identify and take advantage of these opportunities for growth?

Watch this on-demand webinar and learn a comprehensive approach for measuring the value of your content initiatives, so you can optimize resource allocation for maximum impact.

You’ll learn:

- Fresh methods for measuring your content’s impact.

- Fascinating insights using first-touch attribution, and how it differs from the usual last-touch perspective.

- Ways to persuade decision-makers to invest in more content by showcasing its value convincingly.

With Bill Franklin and Oliver Tani of DAC Group, we unravel the nuances of attribution modeling, emphasizing the significance of layering first-touch and last-touch attribution within your measurement strategy.

Check out these insights to help you craft compelling content tailored to each stage, using an approach rooted in first-hand experience to ensure your content resonates.

Whether you’re a seasoned marketer or new to content measurement, this webinar promises valuable insights and actionable tactics to elevate your SEO game and optimize your content initiatives for success.

View the slides below or check out the full webinar for all the details.


How to Find and Use Competitor Keywords

Competitor keywords are the keywords your rivals rank for in Google’s search results. They may rank organically or pay for Google Ads to rank in the paid results.

Knowing your competitors’ keywords is the easiest form of keyword research. If your competitors rank for or target particular keywords, it might be worth it for you to target them, too.

There is no way to see your competitors’ keywords without a tool like Ahrefs, which has a database of keywords and the sites that rank for them. As far as we know, Ahrefs has the biggest database of these keywords.

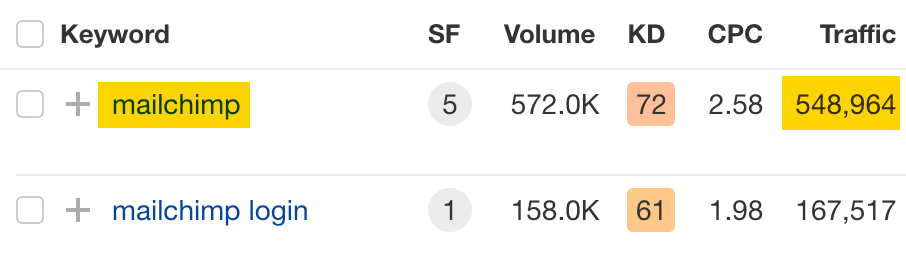

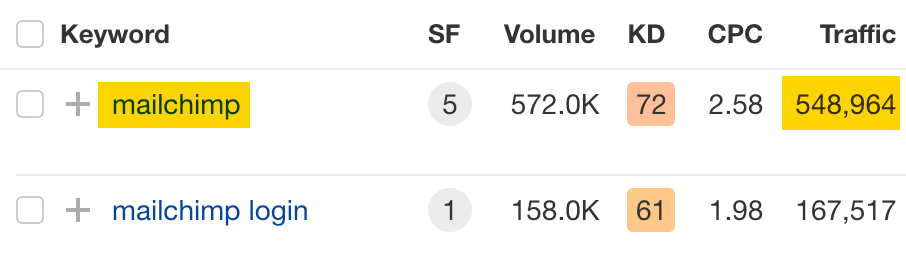

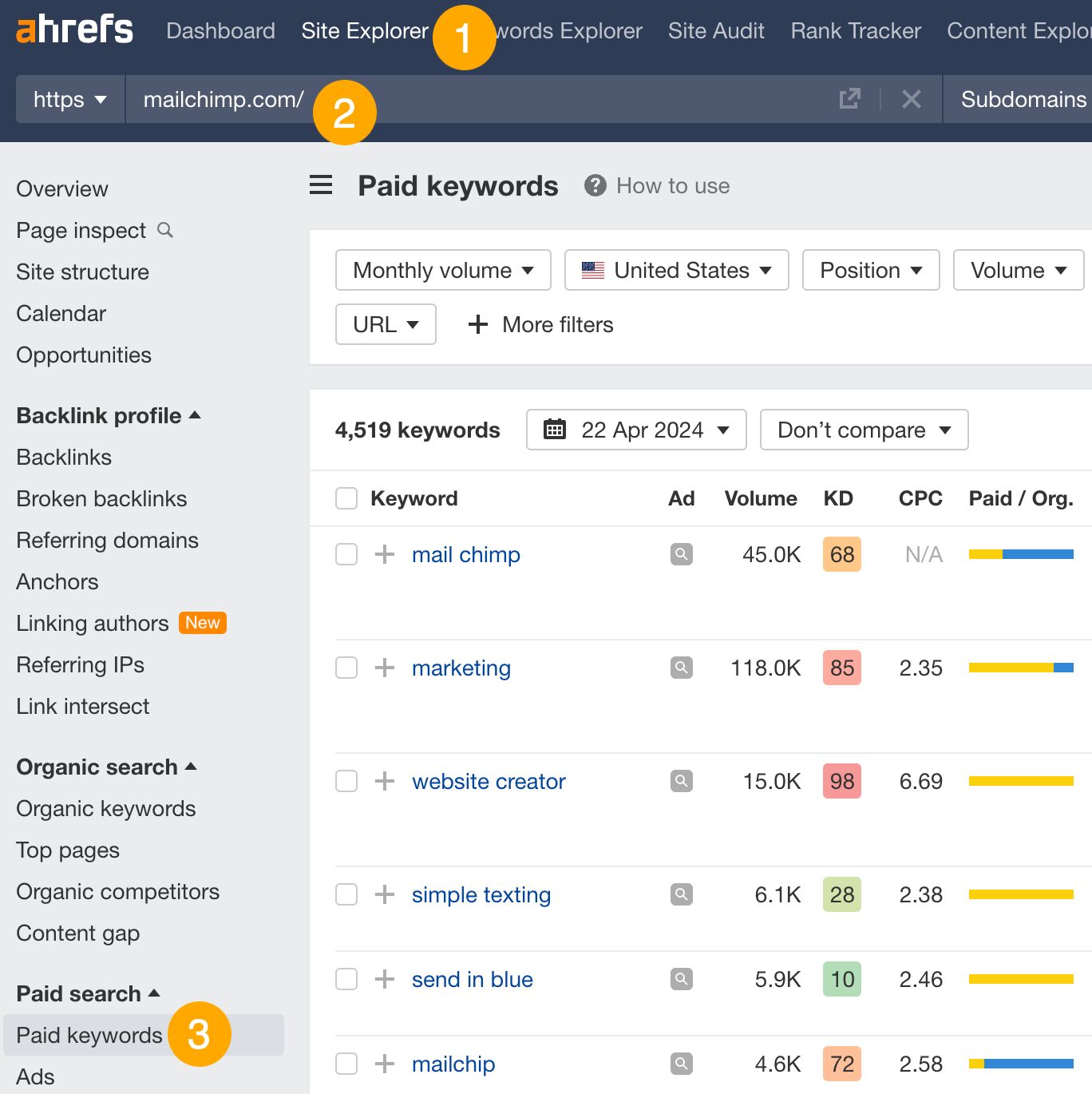

How to find all the keywords your competitor ranks for

- Go to Ahrefs’ Site Explorer

- Enter your competitor’s domain

- Go to the Organic keywords report

The report is sorted by traffic to show you the keywords sending your competitor the most visits. For example, Mailchimp gets most of its organic traffic from the keyword “mailchimp.”

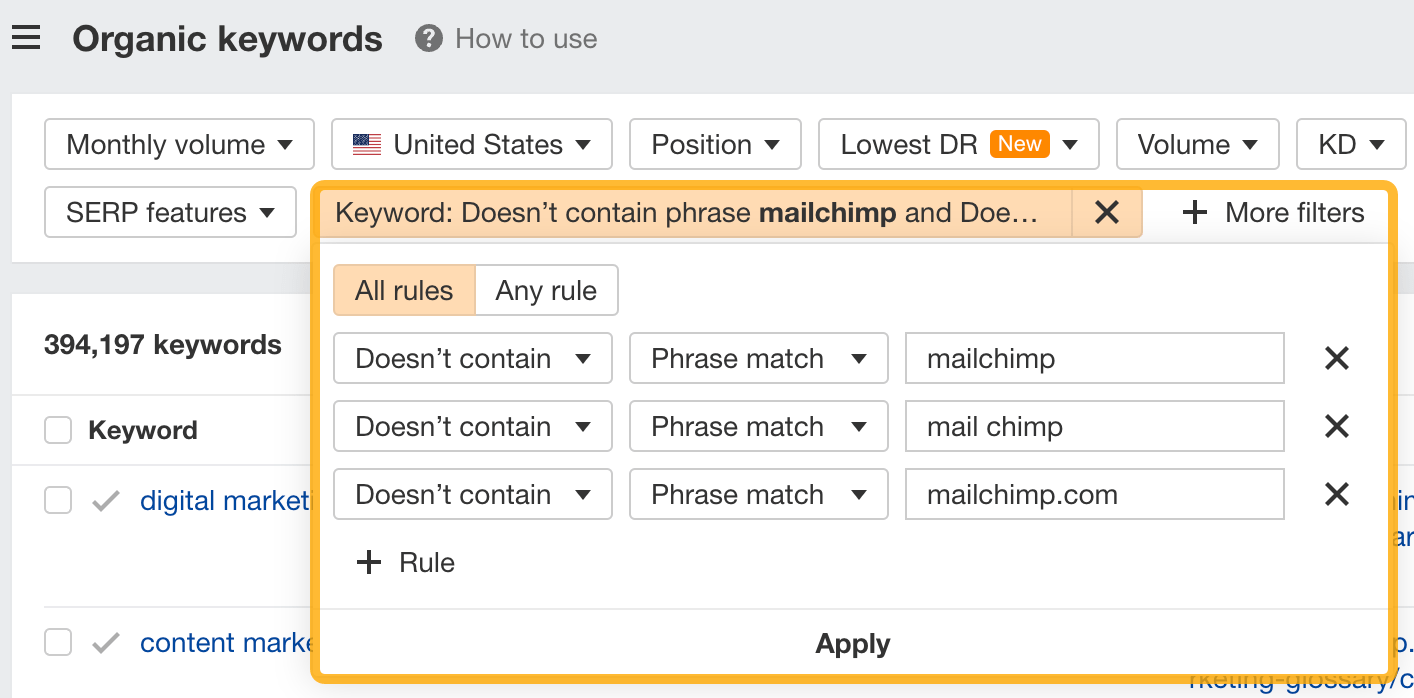

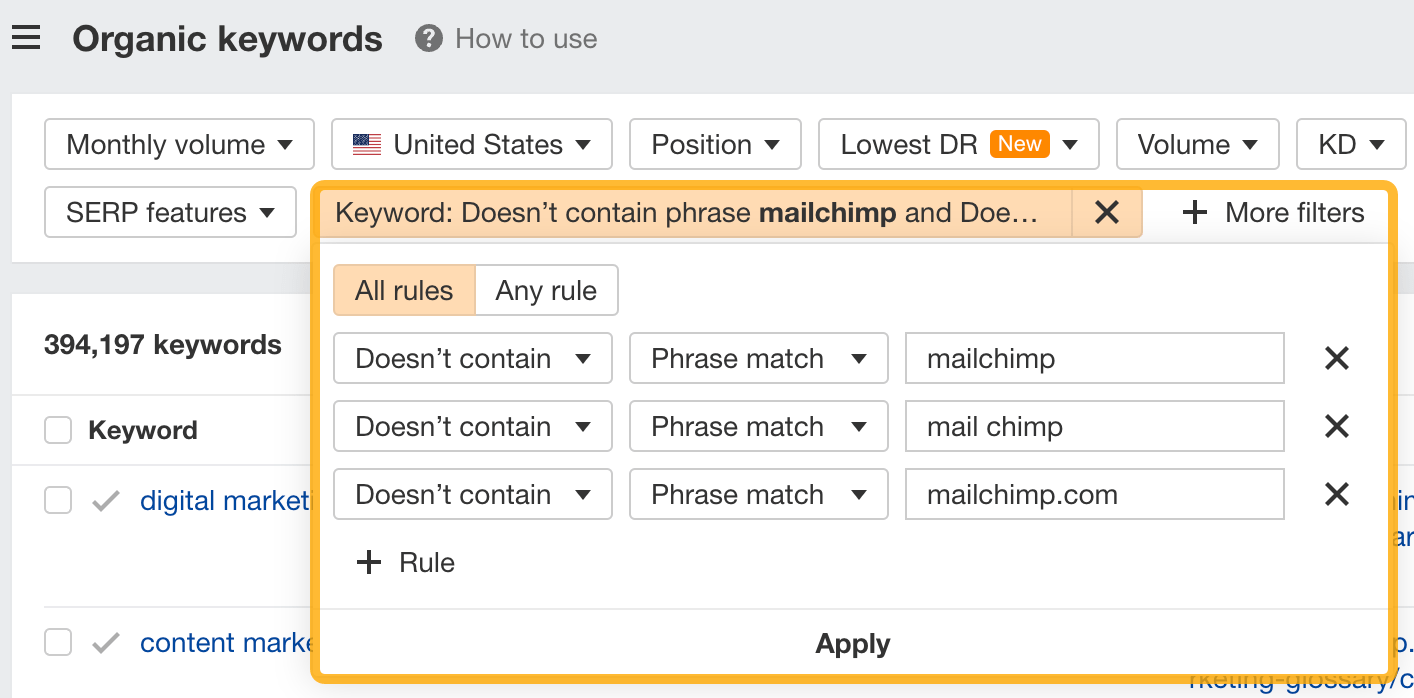

Since you’re unlikely to rank for your competitor’s brand, you might want to exclude branded keywords from the report. You can do this by adding a Keyword > Doesn’t contain filter. In this example, we’ll filter out keywords containing “mailchimp” or any potential misspellings:
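If you export the report, the same "Doesn’t contain" logic can be applied to the exported list. This sketch assumes the export has been loaded as a list of rows with a `keyword` field; the field name, the sample rows, and the misspellings are hypothetical, not Ahrefs’ actual export format.

```python
from typing import Dict, List

def exclude_branded(rows: List[Dict], brand_terms: List[str]) -> List[Dict]:
    """Keep only rows whose keyword contains none of the brand terms."""
    terms = [t.lower() for t in brand_terms]
    return [r for r in rows if not any(t in r["keyword"].lower() for t in terms)]

# Hypothetical brand name plus a couple of plausible misspellings.
brand_terms = ["mailchimp", "mail chimp", "mailchip"]

# Made-up rows standing in for an exported keyword report.
rows = [
    {"keyword": "mailchimp login"},
    {"keyword": "email marketing"},
    {"keyword": "Mail Chimp pricing"},
    {"keyword": "newsletter ideas"},
]
print([r["keyword"] for r in exclude_branded(rows, brand_terms)])
# ['email marketing', 'newsletter ideas']
```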

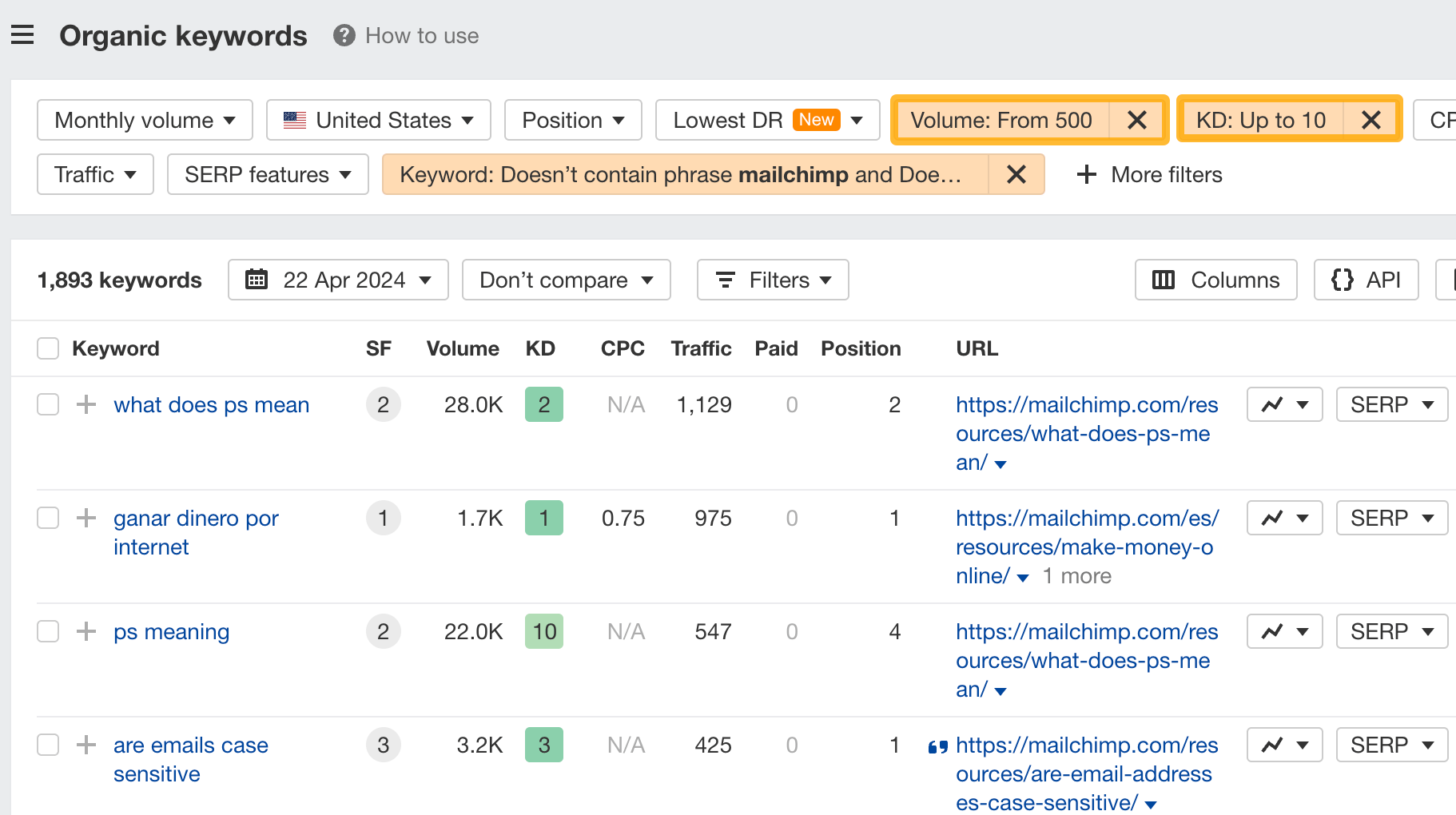

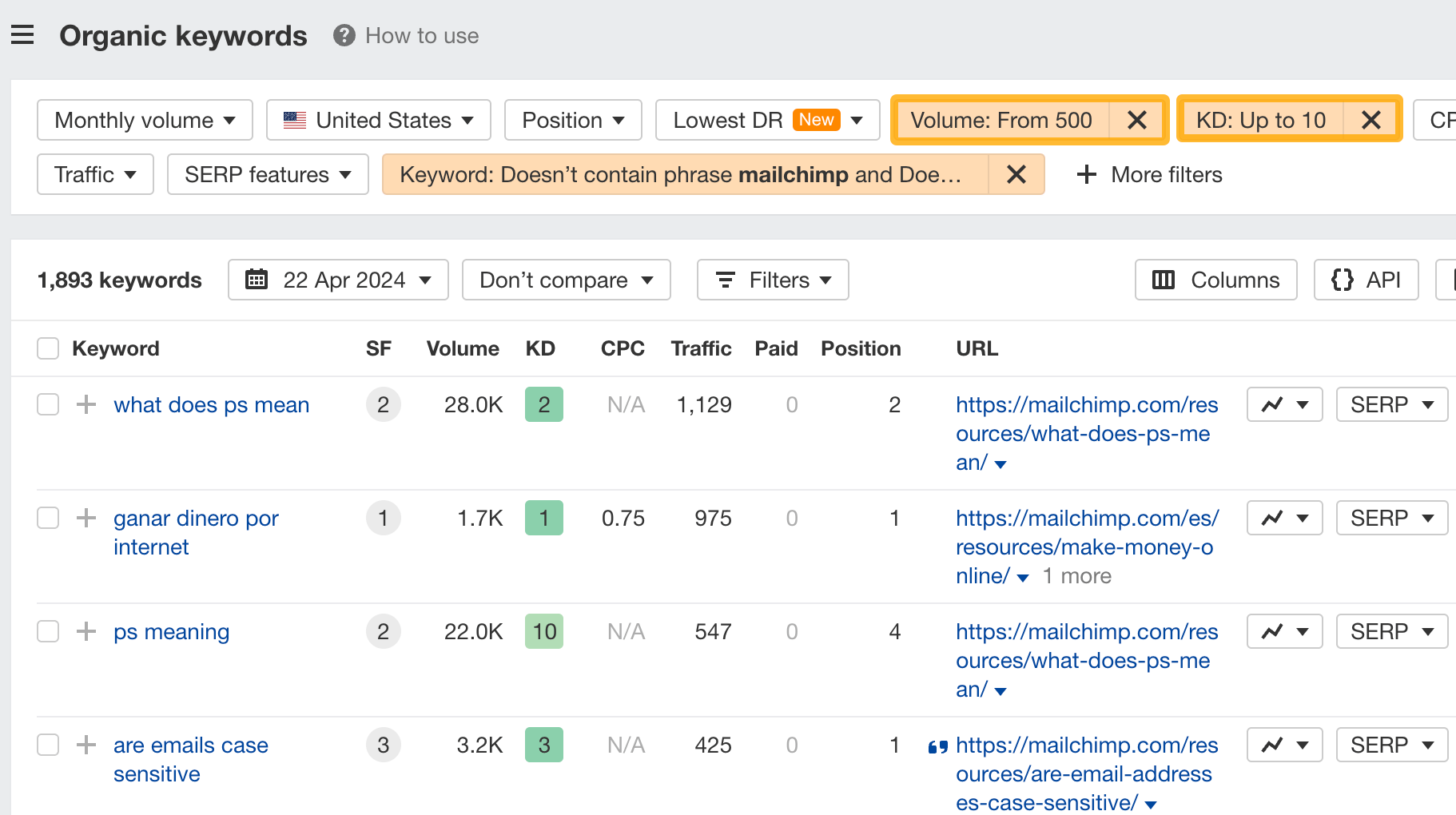

If you’re a new brand competing with one that’s established, you might also want to look for popular low-difficulty keywords. You can do this by setting the Volume filter to a minimum of 500 and the KD filter to a maximum of 10.
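The Volume and KD filters work the same way on an exported list. A minimal sketch using the thresholds above (minimum volume of 500, maximum KD of 10); the `volume` and `kd` field names and the sample rows are hypothetical.

```python
from typing import Dict, List

def low_difficulty(rows: List[Dict], min_volume: int = 500, max_kd: int = 10) -> List[Dict]:
    """Popular, low-difficulty keywords, highest search volume first."""
    picks = [r for r in rows if r["volume"] >= min_volume and r["kd"] <= max_kd]
    return sorted(picks, key=lambda r: r["volume"], reverse=True)

# Made-up rows standing in for an exported keyword report.
rows = [
    {"keyword": "email marketing", "volume": 30000, "kd": 85},
    {"keyword": "newsletter ideas", "volume": 1500, "kd": 8},
    {"keyword": "welcome email examples", "volume": 900, "kd": 5},
    {"keyword": "obscure email term", "volume": 40, "kd": 2},
]
print([r["keyword"] for r in low_difficulty(rows)])
# ['newsletter ideas', 'welcome email examples']
```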

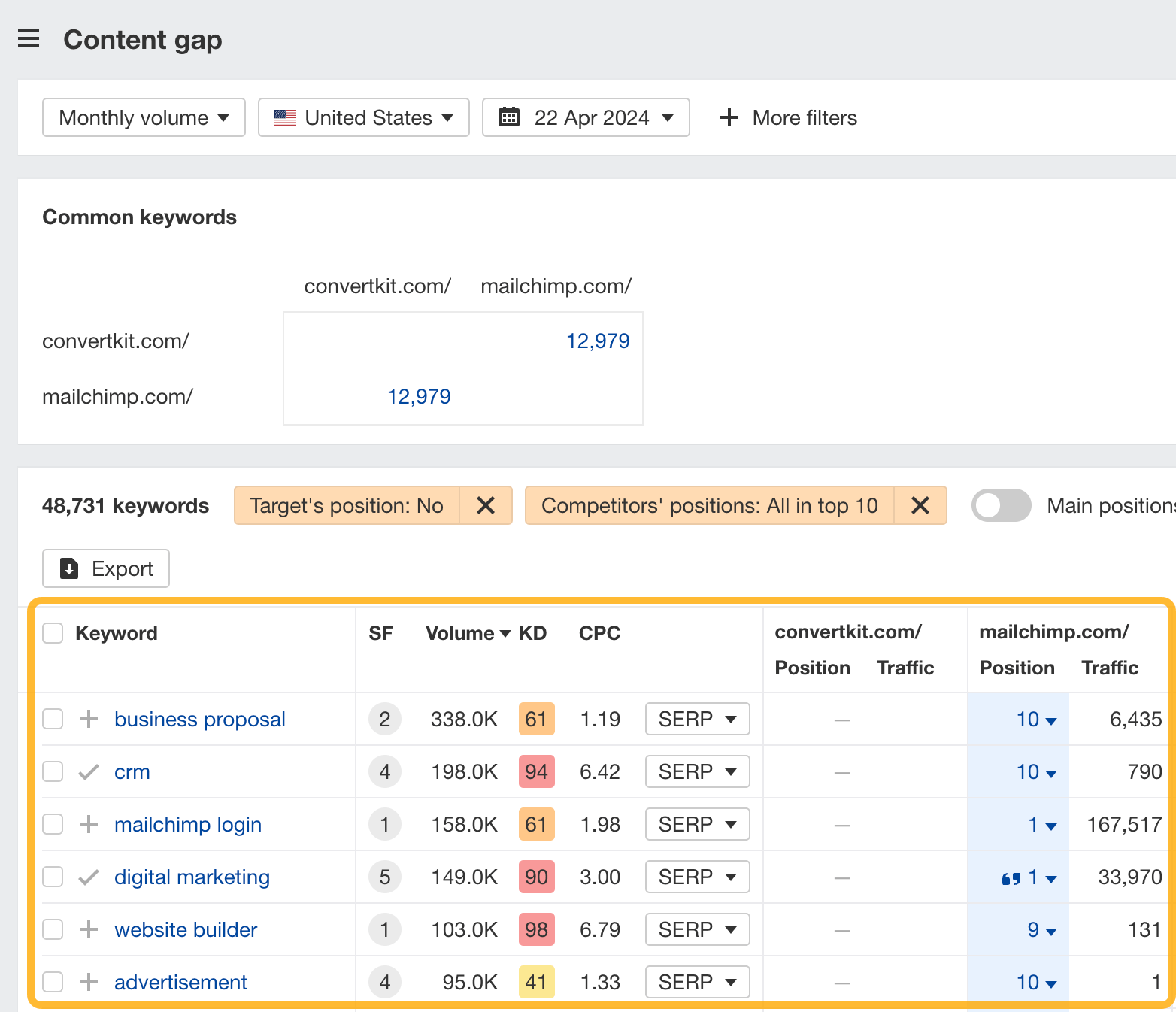

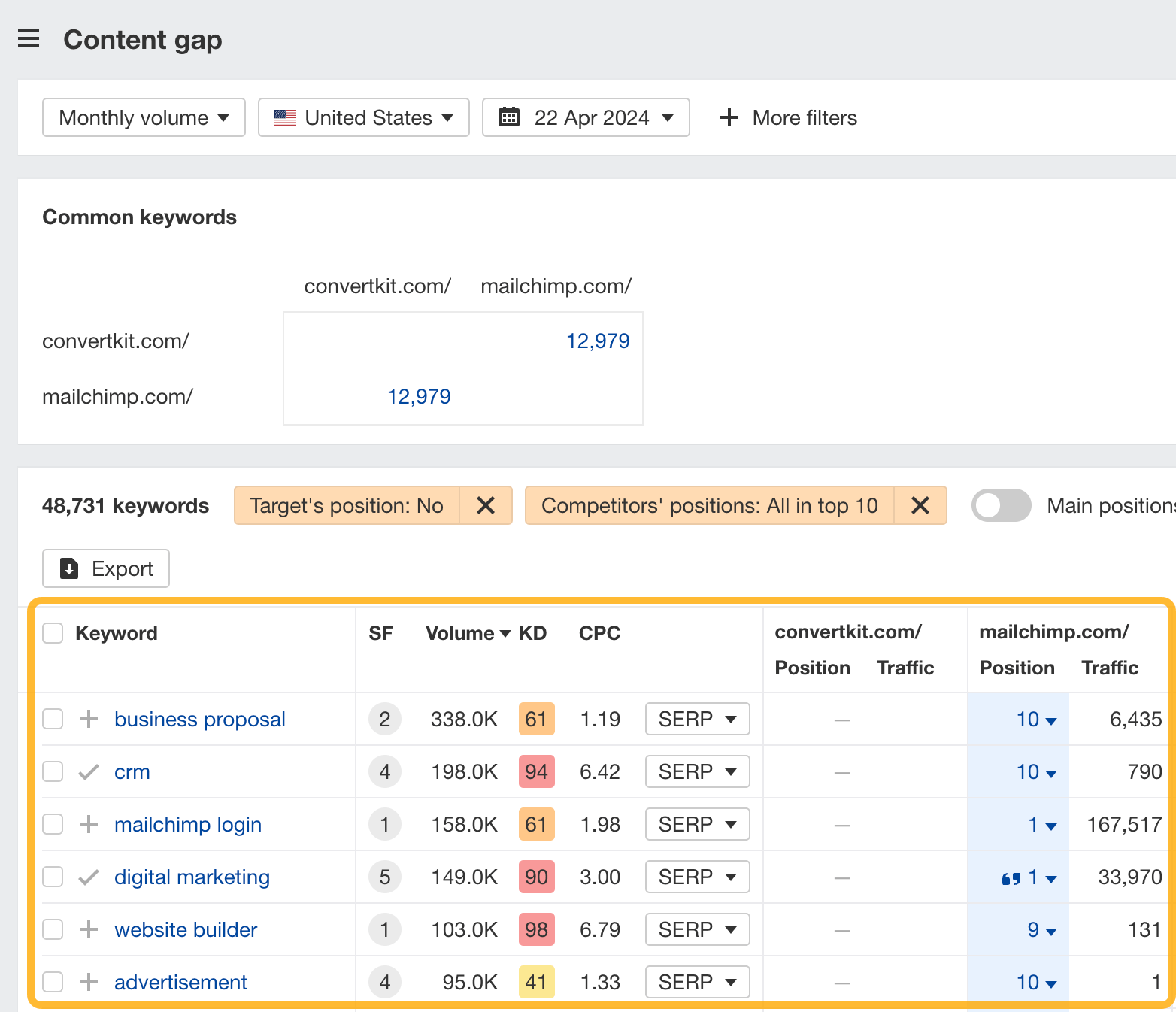

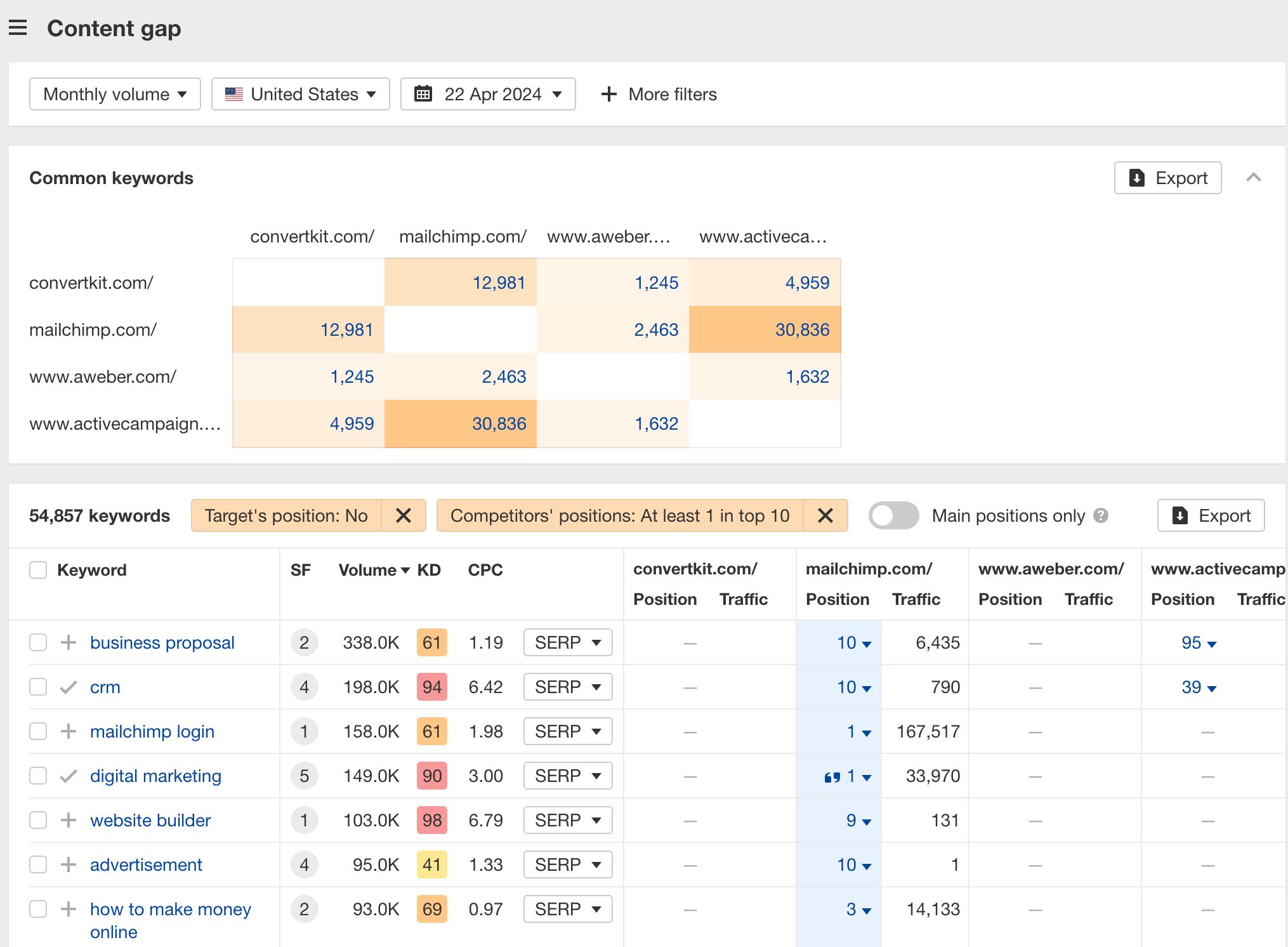

How to find keywords your competitor ranks for, but you don’t

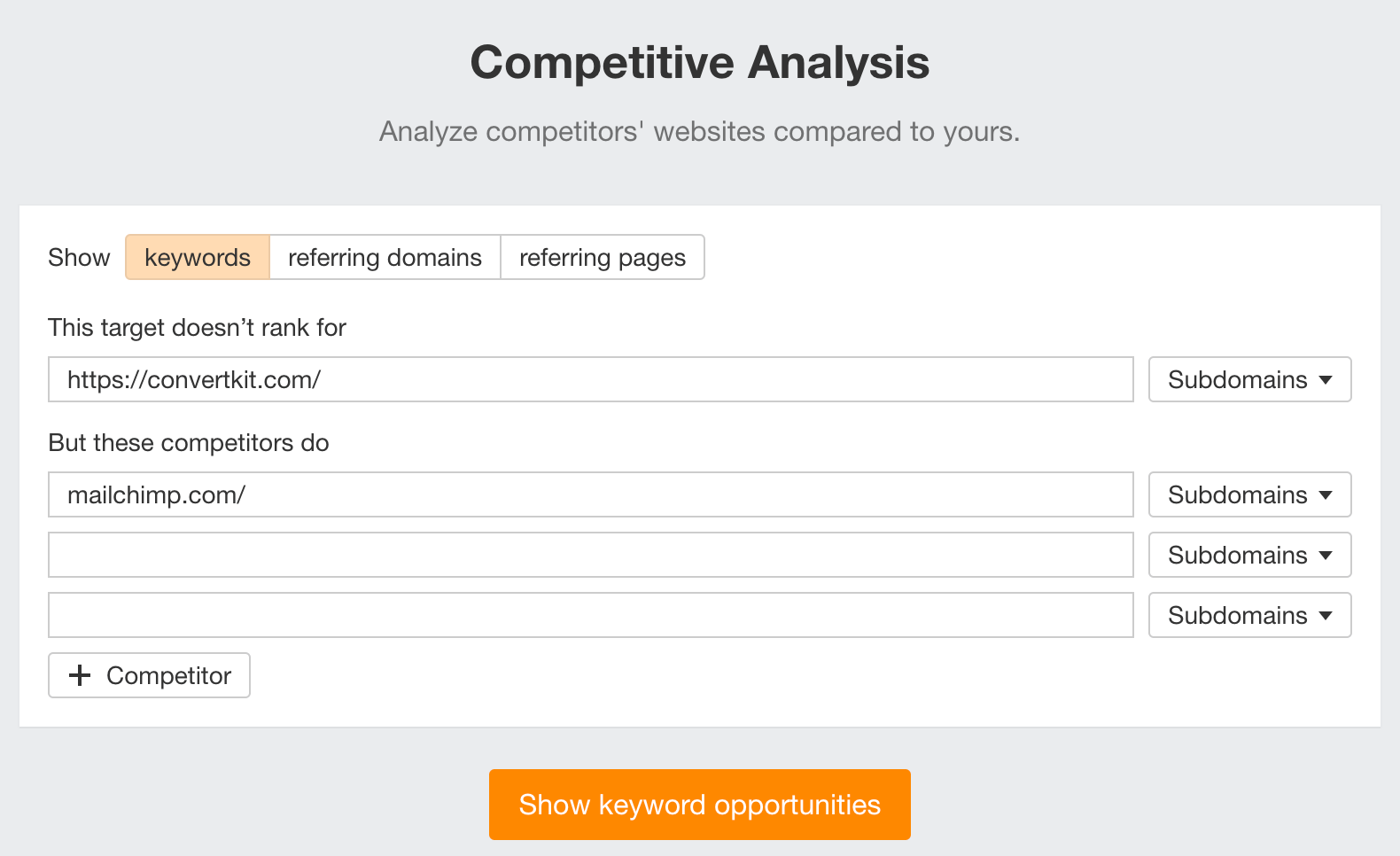

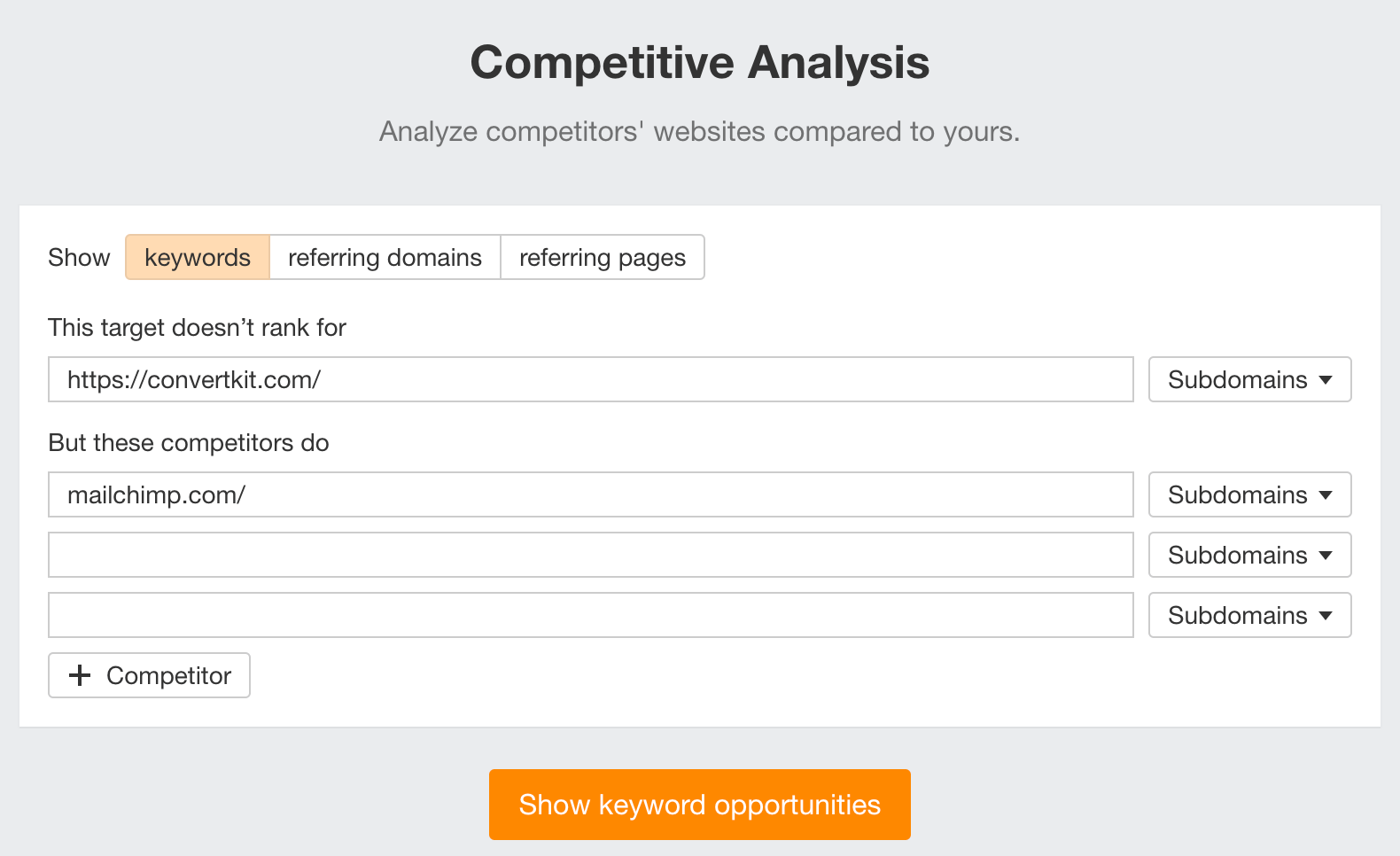

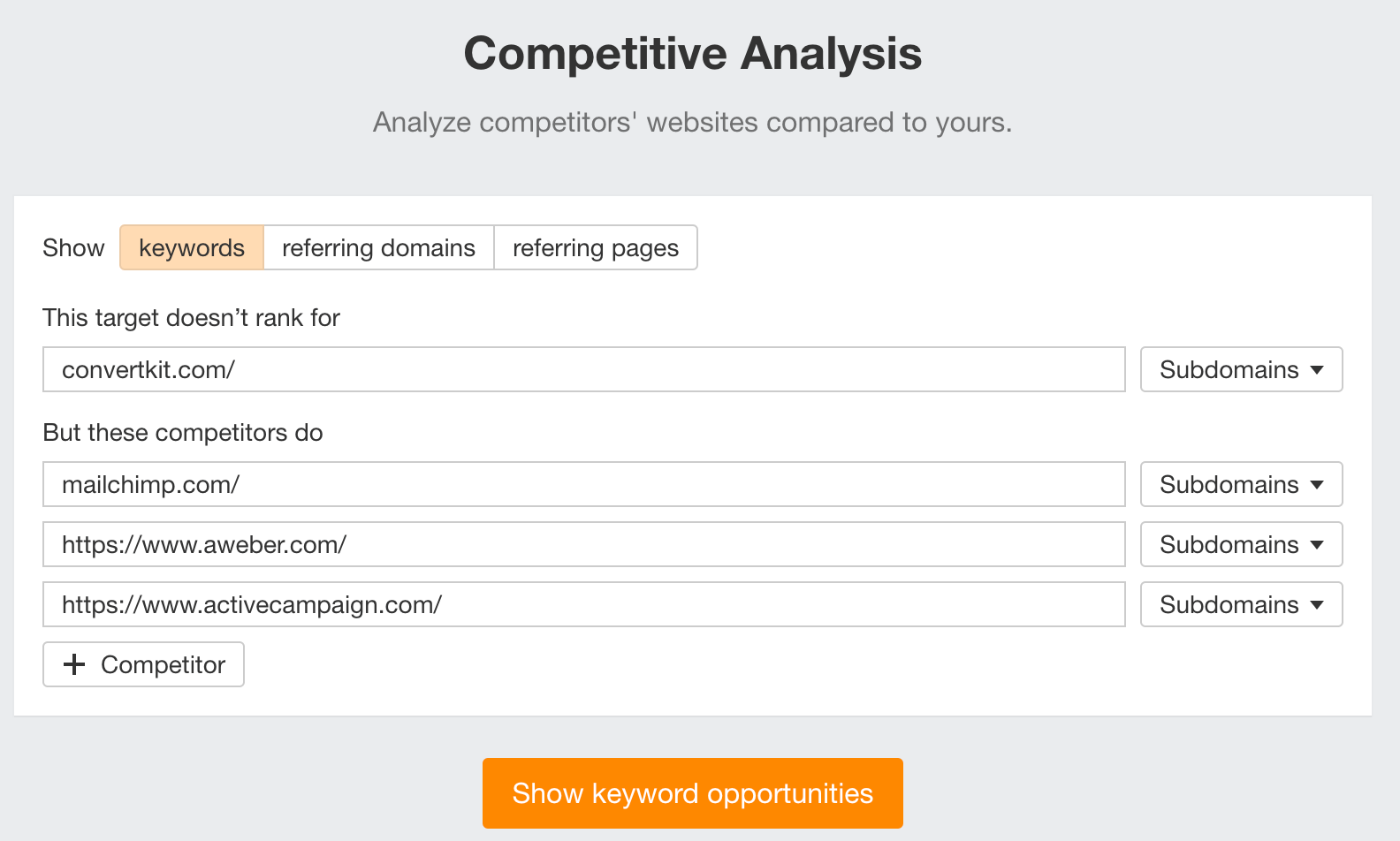

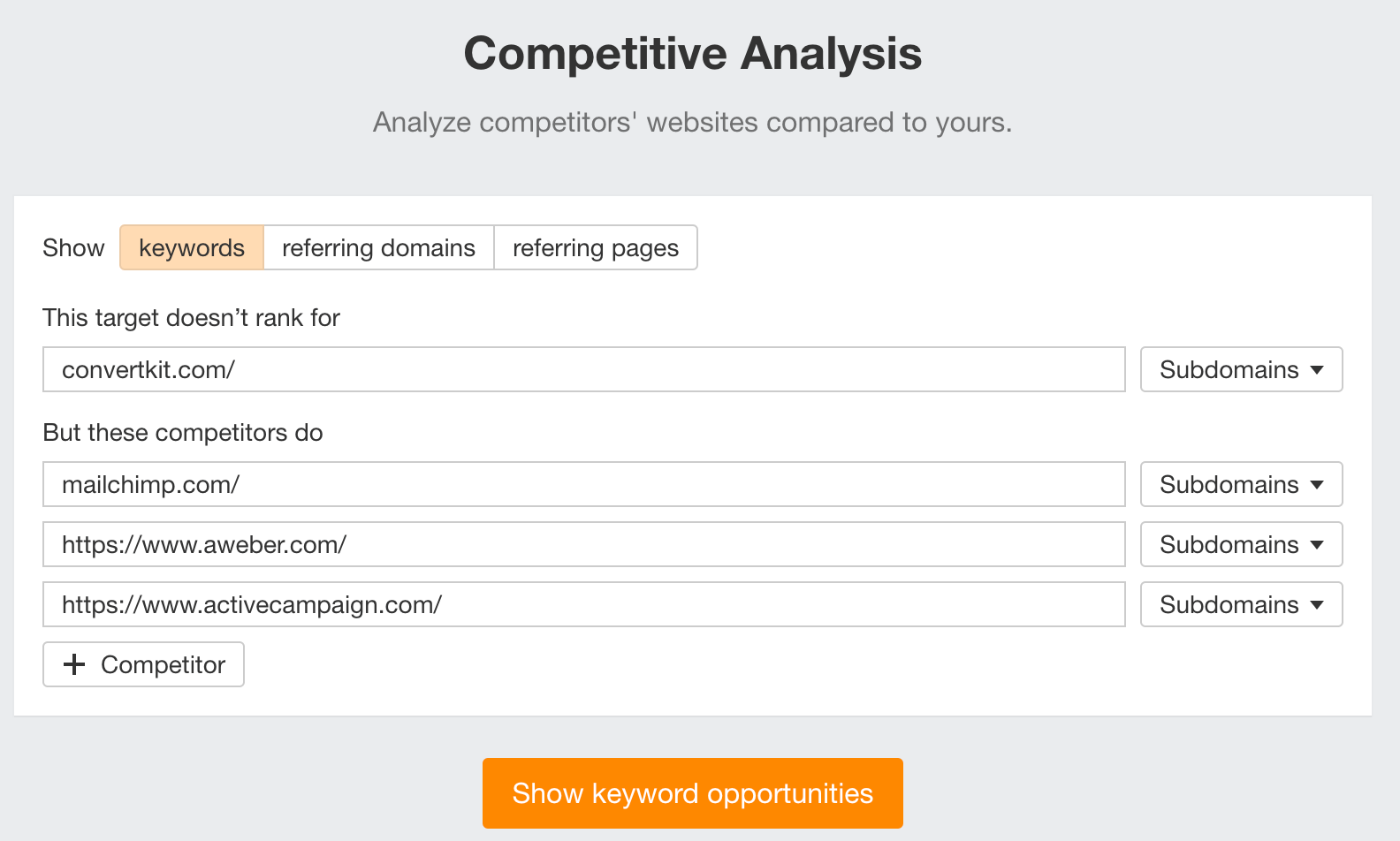

- Go to Competitive Analysis

- Enter your domain in the This target doesn’t rank for section

- Enter your competitor’s domain in the But these competitors do section

Hit “Show keyword opportunities,” and you’ll see all the keywords your competitor ranks for, but you don’t.
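Conceptually, this report is a set difference: everything in your competitor’s keyword set that is not in yours. A minimal sketch on two plain keyword lists (hypothetical sample data), ignoring rank positions, volume, and any other metrics:

```python
from typing import Iterable, List

def keyword_gap(yours: Iterable[str], competitor: Iterable[str]) -> List[str]:
    """Keywords the competitor ranks for that you don't (case-insensitive, sorted)."""
    mine = {k.strip().lower() for k in yours}
    theirs = {k.strip().lower() for k in competitor}
    return sorted(theirs - mine)

yours = ["email marketing", "newsletter ideas"]
competitor = ["Email Marketing", "press release template", "newsletter ideas", "digital marketing"]
print(keyword_gap(yours, competitor))
# ['digital marketing', 'press release template']
```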

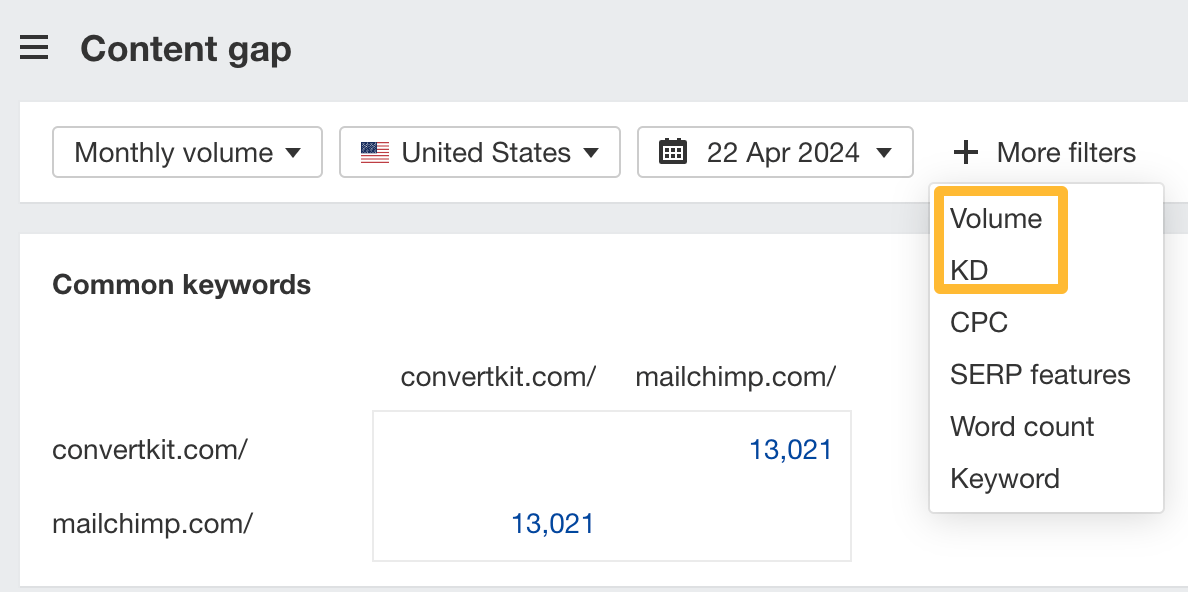

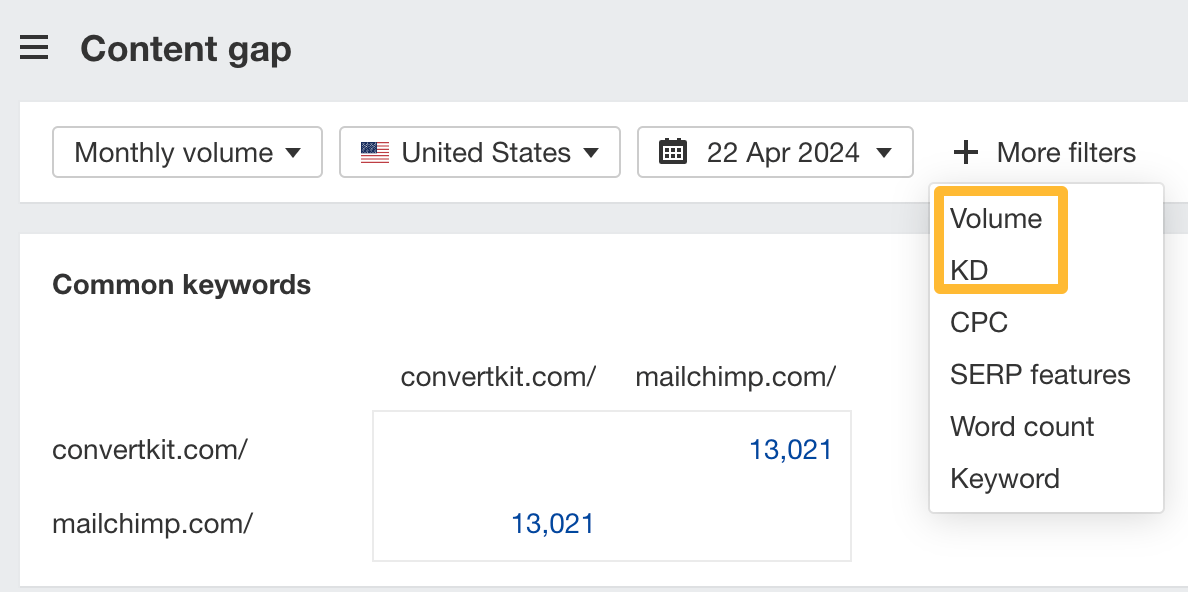

You can also add a Volume and KD filter to find popular, low-difficulty keywords in this report.

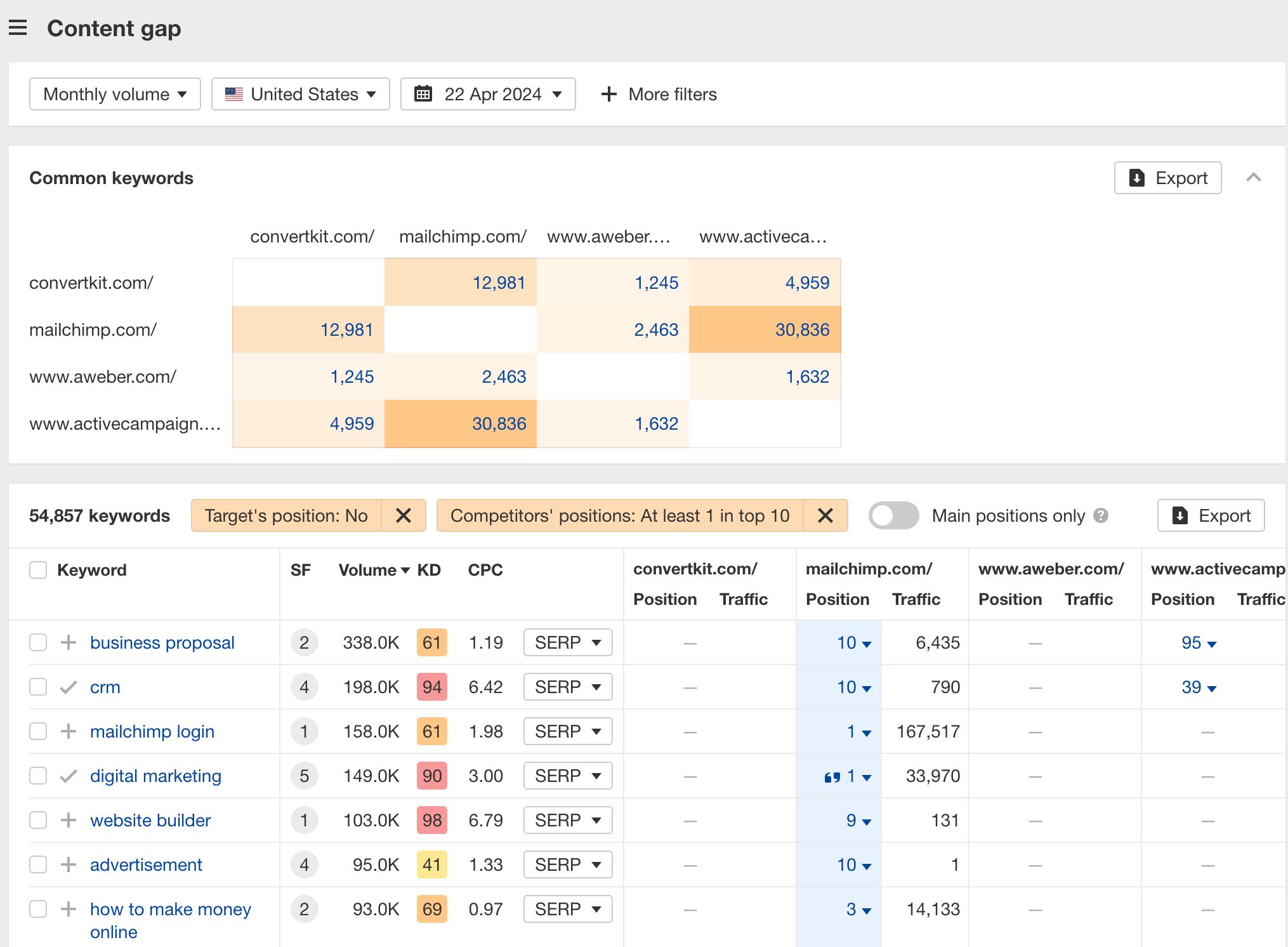

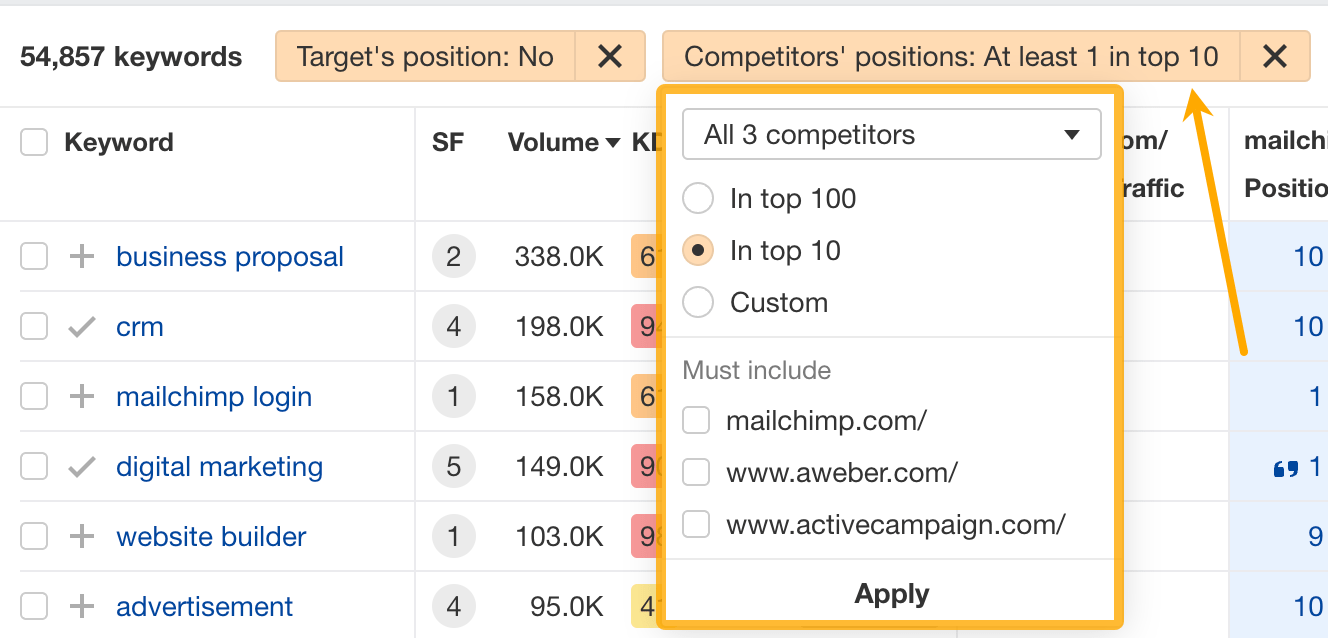

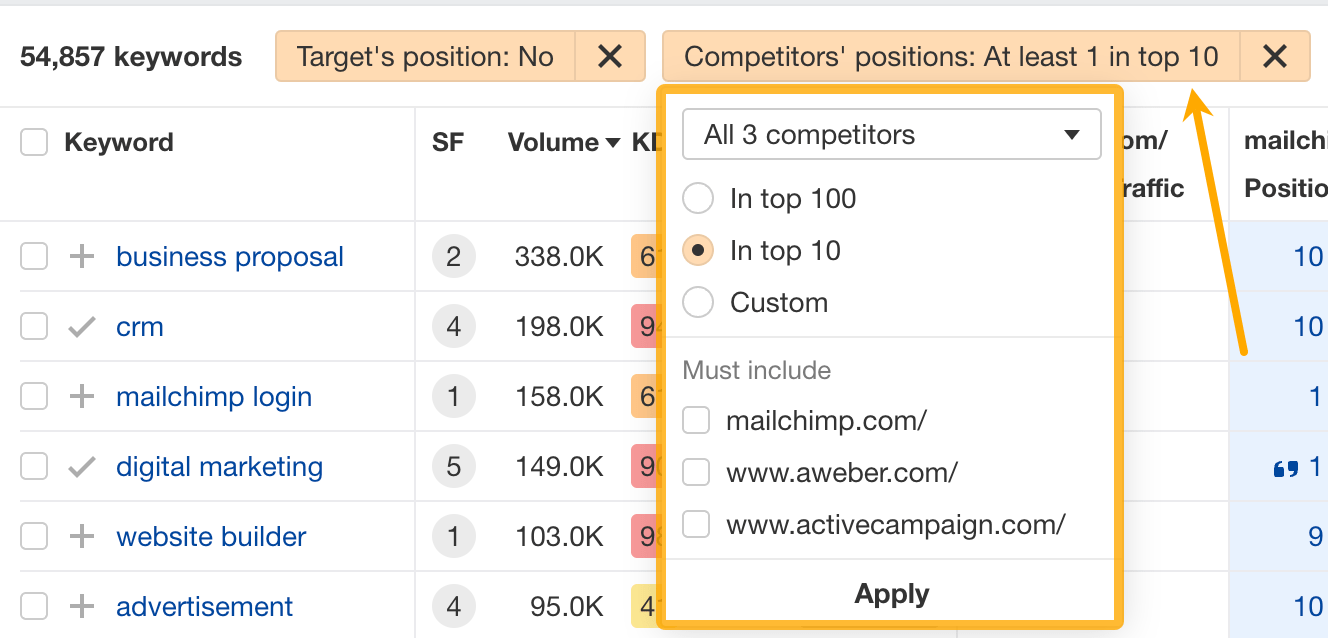

How to find keywords multiple competitors rank for, but you don’t

- Go to Competitive Analysis

- Enter your domain in the This target doesn’t rank for section

- Enter the domains of multiple competitors in the But these competitors do section

You’ll see all the keywords that at least one of these competitors ranks for, but you don’t.

You can also narrow the list down to keywords that all competitors rank for. Click on the Competitors’ positions filter and choose All 3 competitors:

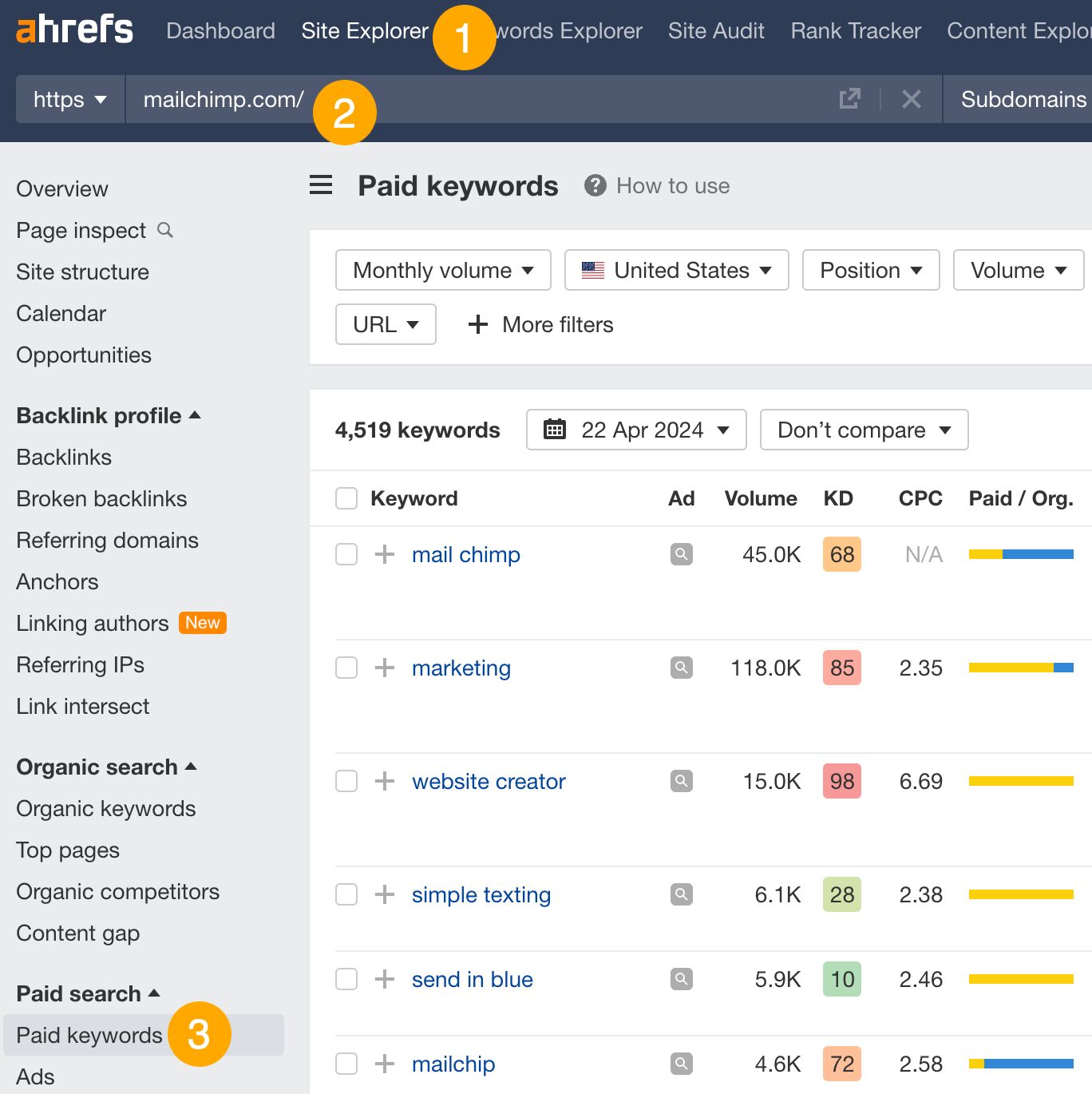
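With several competitors, the two options map onto a union (keywords at least one competitor ranks for) and an intersection (keywords all of them rank for), minus your own keywords. A minimal sketch with hypothetical keyword lists:

```python
from typing import List

def multi_competitor_gap(yours: List[str], competitors: List[List[str]], require_all: bool = False) -> List[str]:
    """Keywords competitors rank for but you don't.

    require_all=False: at least one competitor ranks (union).
    require_all=True:  every competitor ranks (intersection).
    """
    mine = {k.lower() for k in yours}
    sets = [{k.lower() for k in c} for c in competitors]
    if not sets:
        return []
    combined = set.intersection(*sets) if require_all else set.union(*sets)
    return sorted(combined - mine)

yours = ["newsletter ideas"]
competitors = [
    ["email marketing", "newsletter ideas", "landing page builder"],
    ["email marketing", "crm software"],
    ["email marketing", "landing page builder"],
]
print(multi_competitor_gap(yours, competitors))
# ['crm software', 'email marketing', 'landing page builder']
print(multi_competitor_gap(yours, competitors, require_all=True))
# ['email marketing']
```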

How to find your competitor’s paid keywords

- Go to Ahrefs’ Site Explorer

- Enter your competitor’s domain

- Go to the Paid keywords report

This report shows you the keywords your competitors are targeting via Google Ads.

Since your competitor is paying for traffic from these keywords, it may indicate that they’re profitable for them—and could be for you, too.

How to use competitor keywords

You know what keywords your competitors are ranking for or bidding on. But what do you do with them? There are basically three options.

1. Create pages to target these keywords

You can only rank for keywords if you have content about them. So, the most straightforward thing you can do for competitors’ keywords you want to rank for is to create pages to target them.

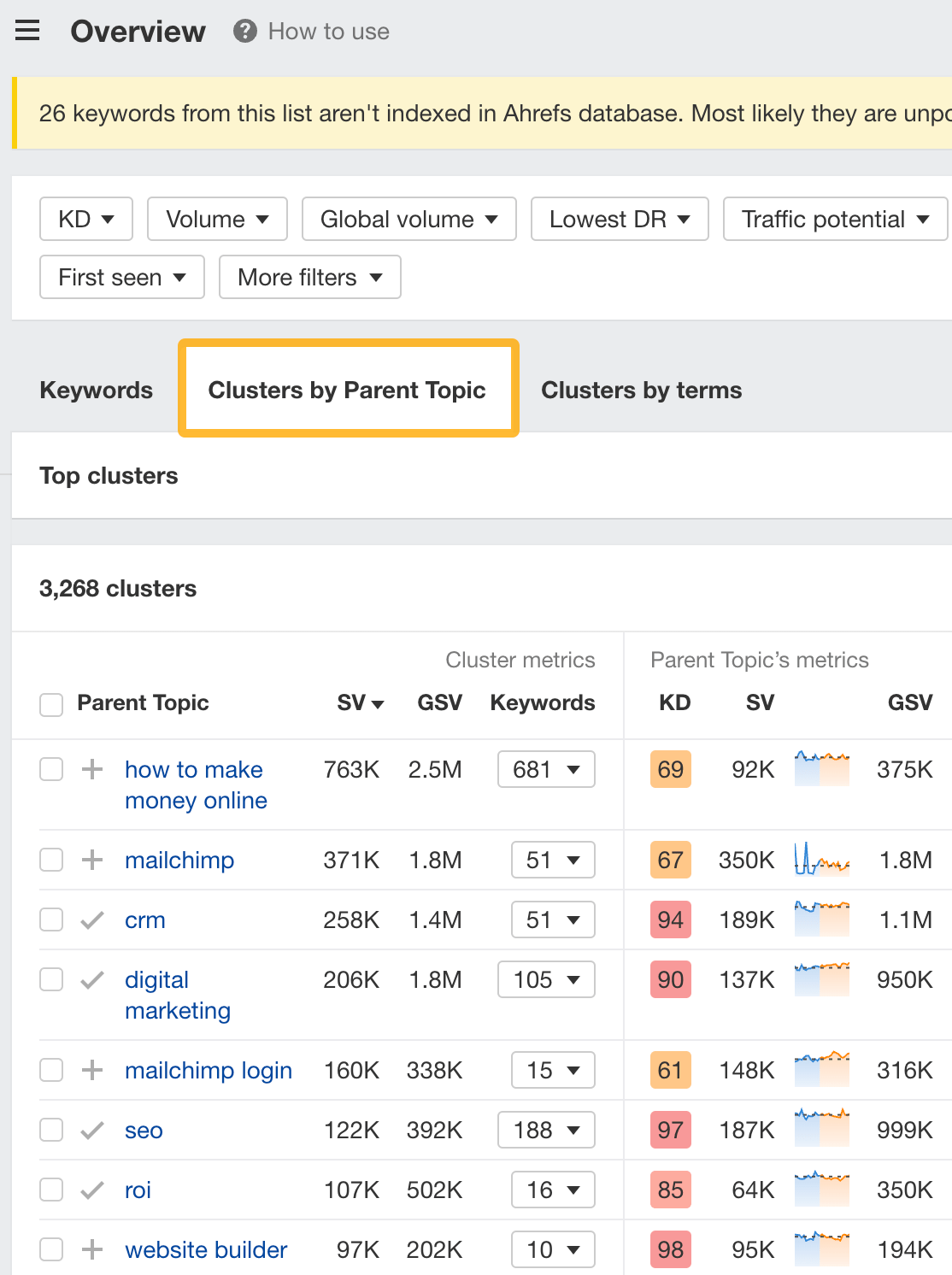

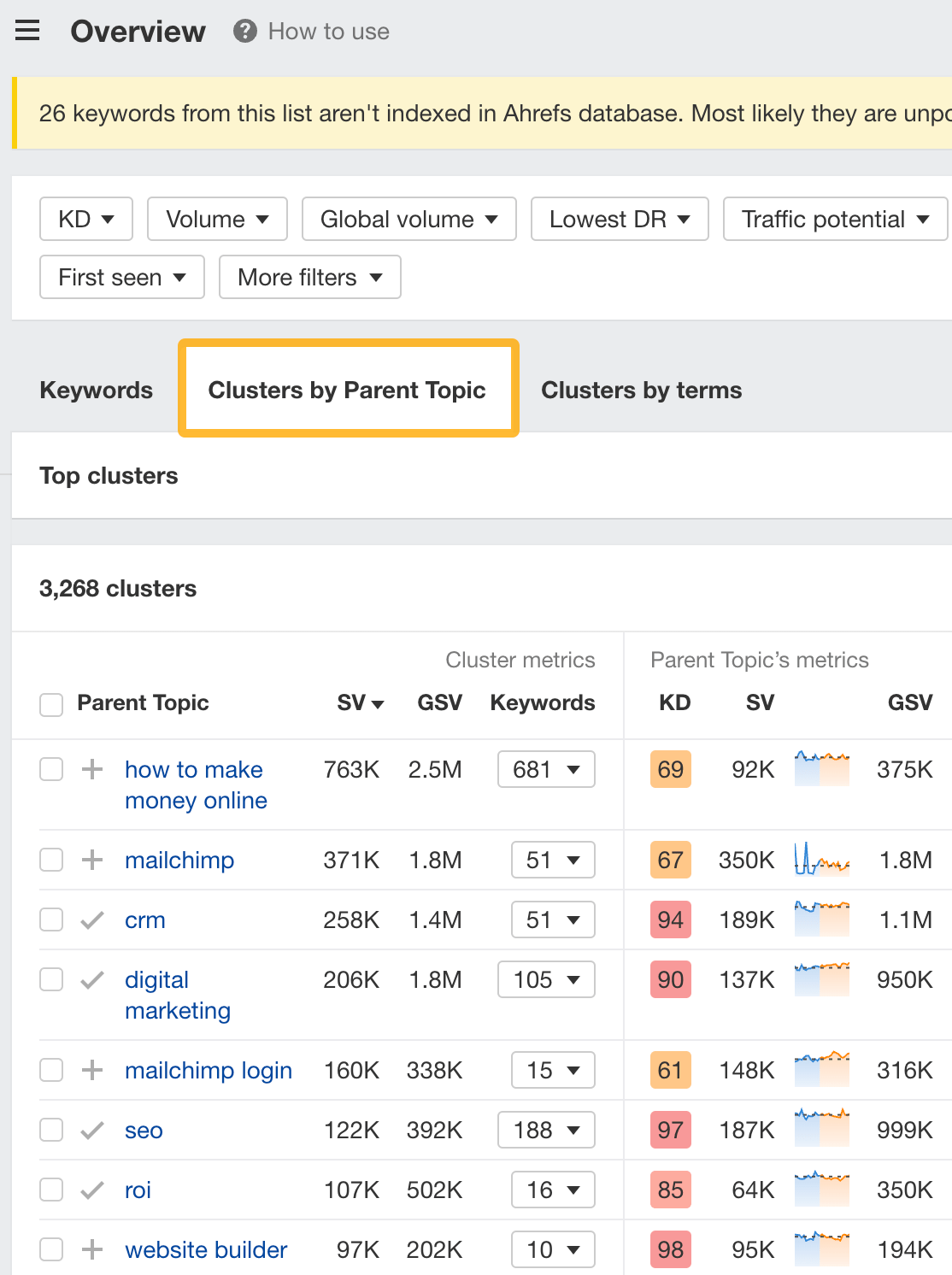

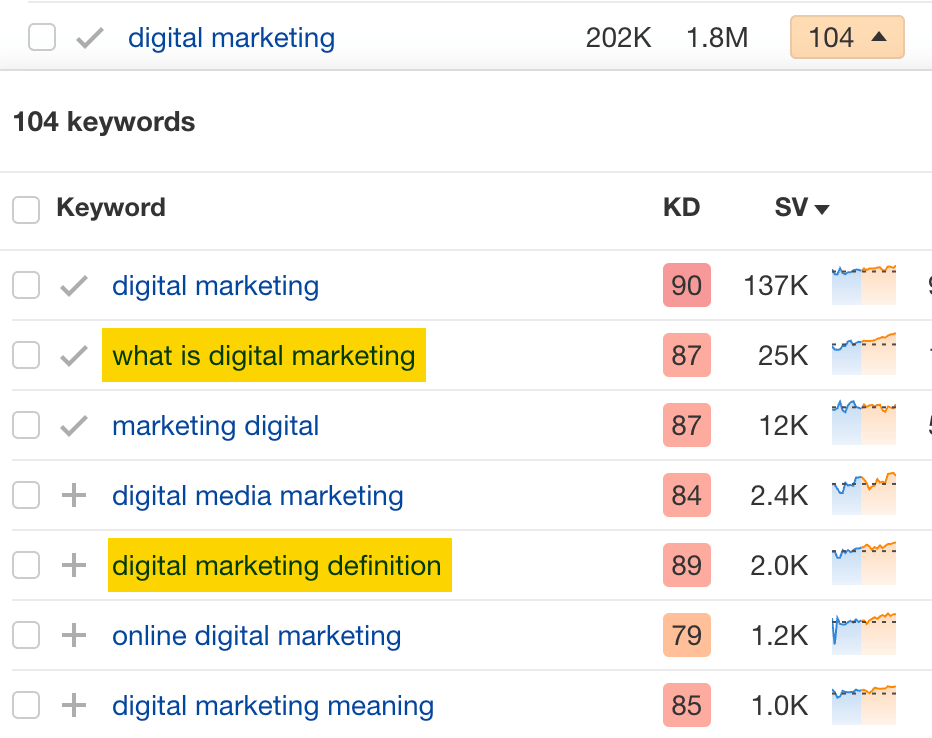

However, before you do this, it’s worth clustering your competitor’s keywords by Parent Topic. This will group keywords that mean the same or similar things so you can target them all with one page.

Here’s how to do that:

- Export your competitor’s keywords, either from the Organic Keywords or Content Gap report

- Paste them into Keywords Explorer

- Click the “Clusters by Parent Topic” tab

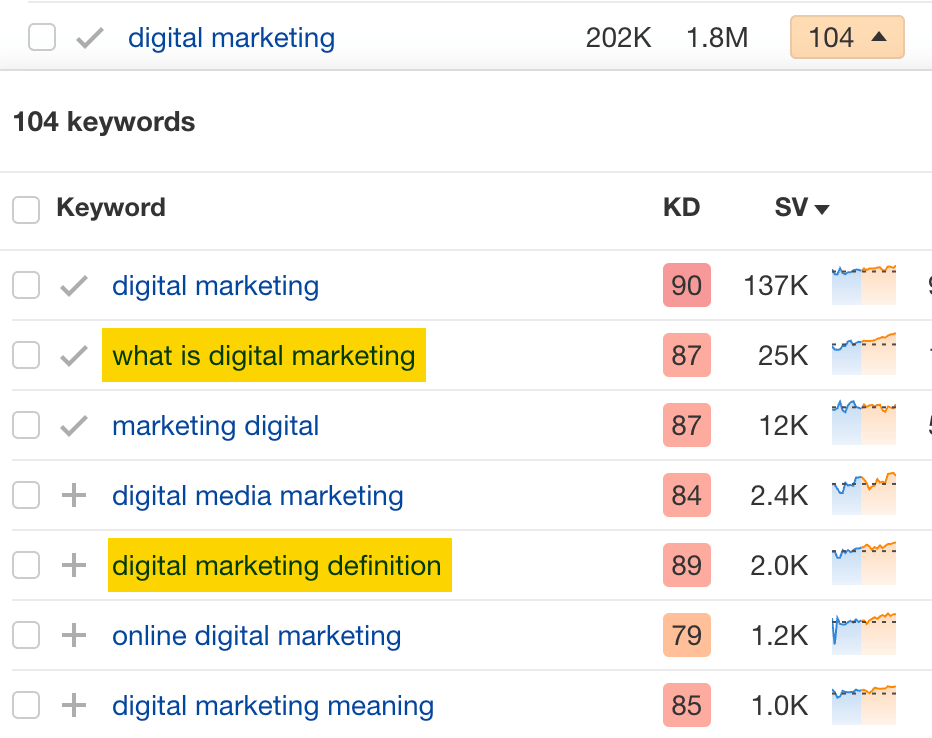

For example, Mailchimp ranks for keywords like “what is digital marketing” and “digital marketing definition.” These and many others get clustered under the Parent Topic of “digital marketing” because people searching for them are all looking for the same thing: a definition of digital marketing. You only need to create one page to potentially rank for all these keywords.
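Ahrefs calculates the Parent Topic for you; once it is in your export, the clustering step is a simple group-by. This sketch assumes each exported row carries a `parent_topic` field (a hypothetical name) and uses made-up rows based on the examples in this article.

```python
from collections import defaultdict
from typing import Dict, List

def cluster_by_parent_topic(rows: List[Dict[str, str]]) -> Dict[str, List[str]]:
    """Group keywords that share a Parent Topic; each group can target one page."""
    clusters: Dict[str, List[str]] = defaultdict(list)
    for row in rows:
        clusters[row["parent_topic"]].append(row["keyword"])
    return dict(clusters)

# Made-up export rows; the parent_topic values are illustrative only.
rows = [
    {"keyword": "what is digital marketing", "parent_topic": "digital marketing"},
    {"keyword": "digital marketing definition", "parent_topic": "digital marketing"},
    {"keyword": "press release format", "parent_topic": "press release template"},
]
for topic, keywords in cluster_by_parent_topic(rows).items():
    print(f"{topic}: {len(keywords)} keyword(s)")
```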

2. Optimize existing content by filling subtopics

You don’t always need to create new content to rank for competitors’ keywords. Sometimes, you can optimize the content you already have to rank for them.

How do you know which keywords you can do this for? Try this:

- Export your competitor’s keywords

- Paste them into Keywords Explorer

- Click the “Clusters by Parent Topic” tab

- Look for Parent Topics you already have content about

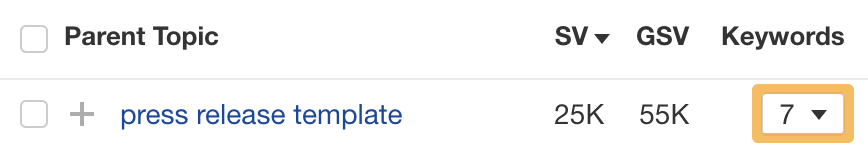

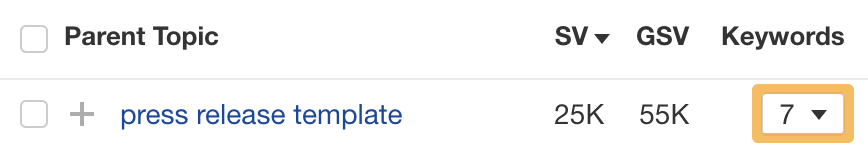

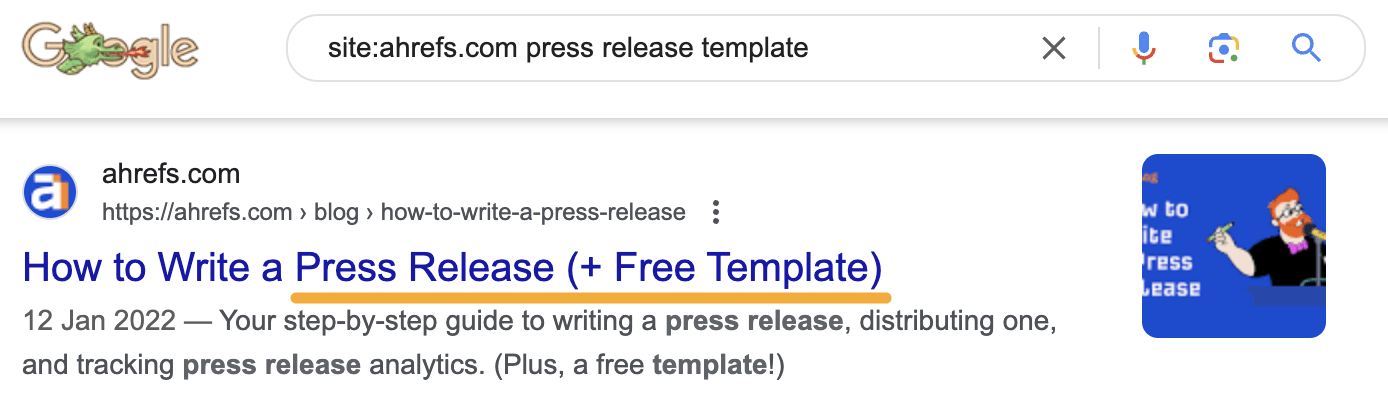

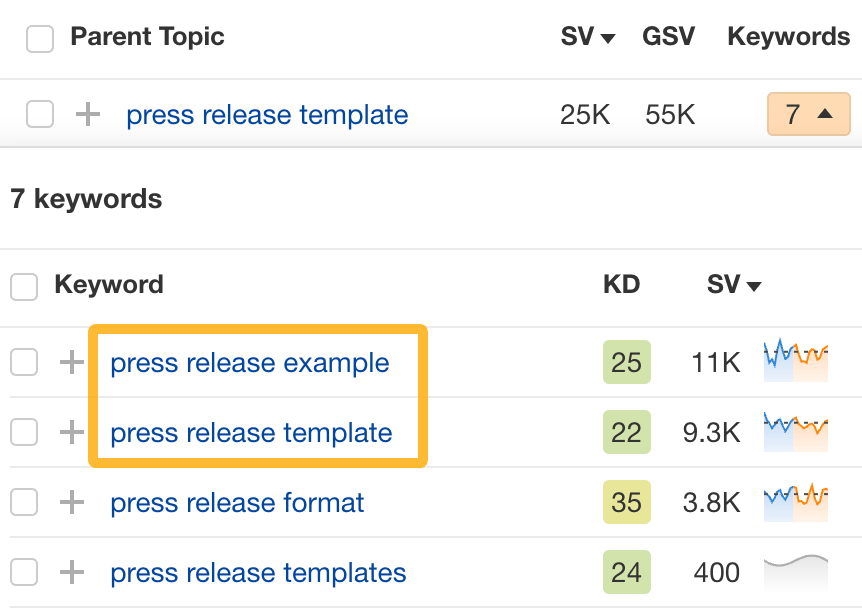

For example, if we analyze our competitor, we can see that seven keywords they rank for fall under the Parent Topic of “press release template.”

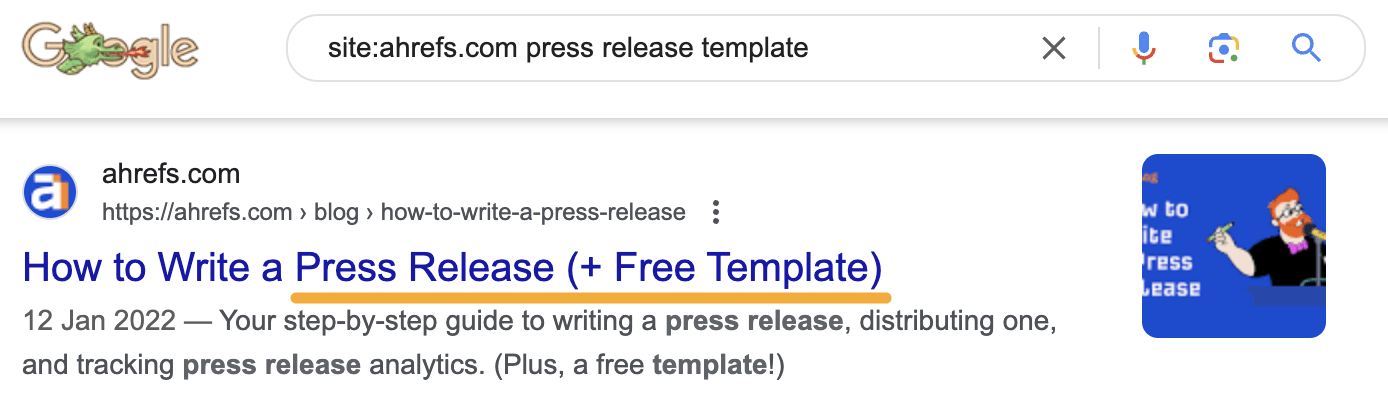

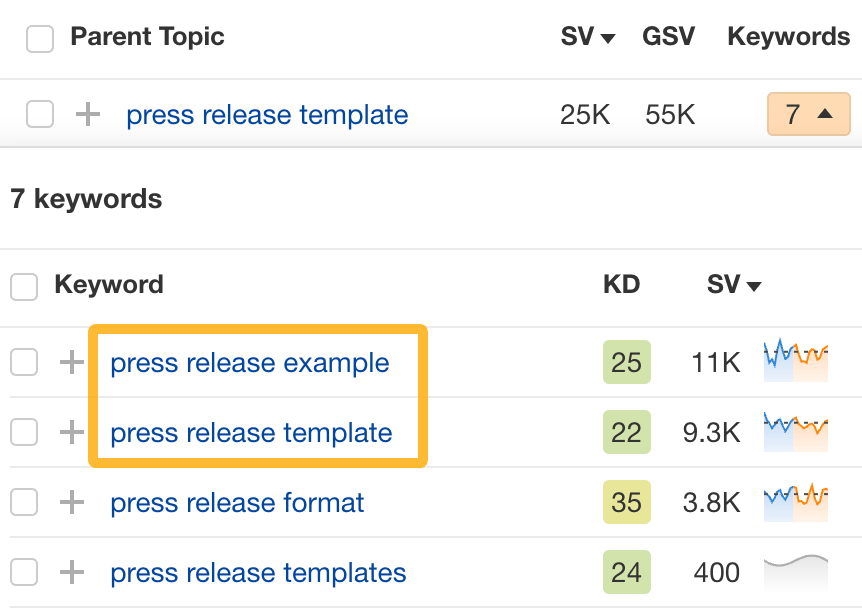

If we search our site, we see that we already have a page about this topic.

If we click the caret and check the keywords in the cluster, we see keywords like “press release example” and “press release format.”

To rank for the keywords in the cluster, we can probably optimize the page we already have by adding sections about the subtopics of “press release examples” and “press release format.”
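The "look for Parent Topics you already have content about" step amounts to checking each cluster against the topics your site already covers. A minimal sketch, assuming you keep a hypothetical list of topics your existing pages target: clusters that match are candidates for optimizing an existing page, and the rest are candidates for new pages.

```python
from typing import Dict, Iterable, List, Tuple

Clusters = Dict[str, List[str]]

def split_clusters(clusters: Clusters, covered_topics: Iterable[str]) -> Tuple[Clusters, Clusters]:
    """Split clusters into (optimize an existing page, create a new page)."""
    covered = {t.lower() for t in covered_topics}
    optimize = {t: kws for t, kws in clusters.items() if t.lower() in covered}
    create = {t: kws for t, kws in clusters.items() if t.lower() not in covered}
    return optimize, create

# Made-up clusters; the press release keywords echo the example above.
clusters = {
    "press release template": ["press release example", "press release format"],
    "digital marketing": ["what is digital marketing", "digital marketing definition"],
}
optimize, create = split_clusters(clusters, covered_topics=["Press Release Template"])
print("Optimize:", list(optimize))  # Optimize: ['press release template']
print("Create:", list(create))      # Create: ['digital marketing']
```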

3. Target these keywords with Google Ads

Paid keywords are the simplest—look through the report and see if there are any relevant keywords you might want to target, too.

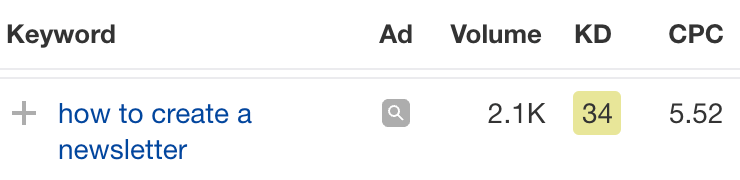

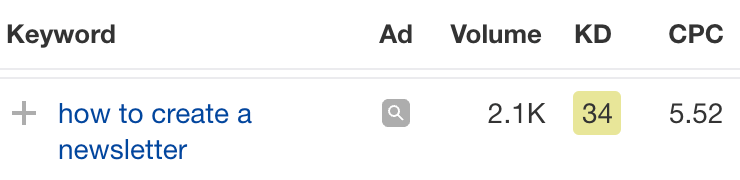

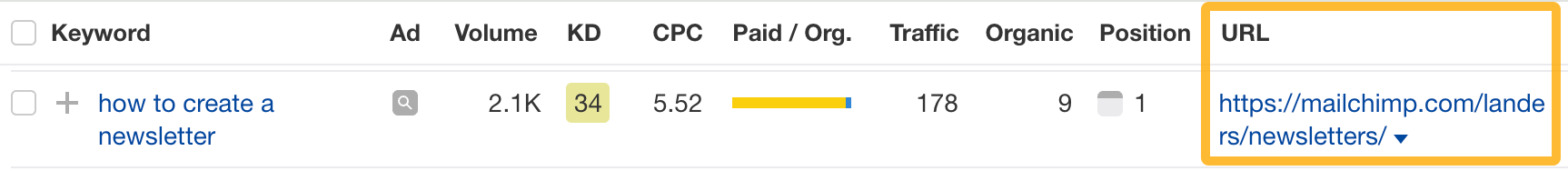

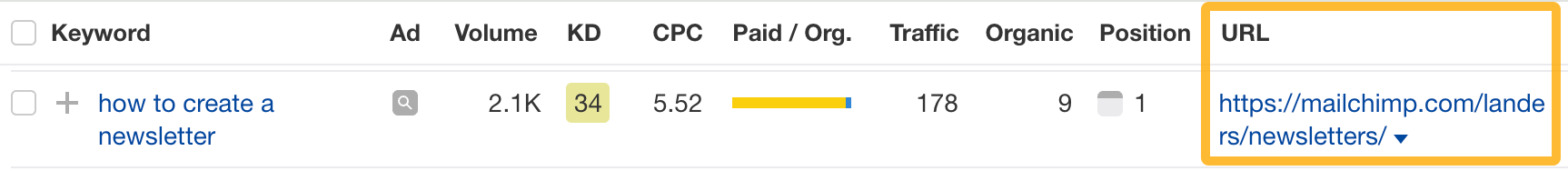

For example, Mailchimp is bidding for the keyword “how to create a newsletter.”

If you’re ConvertKit, you may also want to target this keyword since it’s relevant.

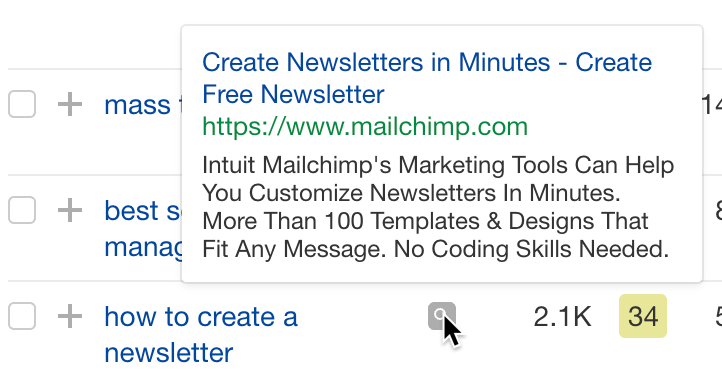

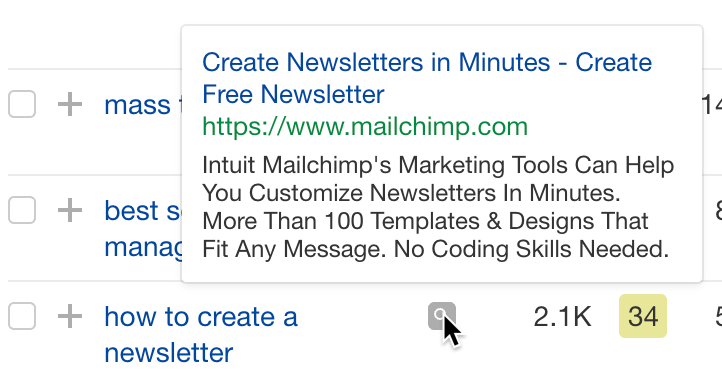

If you decide to target the same keyword via Google Ads, you can hover over the magnifying glass to see the ads your competitor is using.

You can also see the landing page your competitor directs ad traffic to under the URL column.
