SEO

How To Target Multiple Cities Without Hurting Your SEO

Can you imagine the hassle of finding an electrician if every Google search returned global SEO results?

How many pages of search results would you have to comb through to find a beautician in your neighborhood?

On the other hand, think of how inefficient your digital marketing strategy would be if your local business had to compete with every competitor worldwide for clicks.

Luckily, Google has delivered a solution for this issue through local SEO.

By allowing you to target just the customers in your area, it’s a quick and easy way to give information about your business to the people who are most likely to patronize it.

But what if you have multiple locations in multiple cities?

Is it possible to rank for keywords that target multiple cities without hurting your local SEO?

Of course, it is.

But before you go running off to tweak your site for local searches, there’s one caveat: If you do it wrong, it can actually hurt you. So, it’s important to ensure you do it correctly.

It’s a bit more complex than regular old search engine optimization, but never fear – we’re here to guide you through the process.

Follow the instructions below, and you’ll rank in searches of all your locales before you know it. Ready to get started?

Why Is Local SEO Important?

If local SEO can potentially “hurt” you, why do it at all? Here are two good reasons:

Local SEO Attracts Foot Traffic

Imagine you’re out of town for a cousin’s wedding.

On the night before the big day, you’re in your hotel room when you crave a cheese pizza.

You pick up your phone and Google … what?

“Pizza?”

I don’t think so.

No, you’re probably going to Google a location-specific keyword, like [best pizza in Louisville].

When you get the results, you don’t say, “good to know,” and then head off to sleep.

No. Instead, you take the action that drove the search in the first place. In other words, you pick up your phone and order the pizza.

Or you get up, take a taxi, and dine out at that spectacular pizzeria.

And you’re not the only one doing this.

In fact, every month, searchers visit 1.5 billion locations related to their searches.

And you’re not a one-in-a-million searcher, either.

Nearly 46% of Google searches have local intent.

That’s huge!

So, the next time you’re thinking of skipping local SEO, think again.

It could actually be your ticket to getting that random out-on-vacation dude to check out your pizza place. (Or beauty salon. Or hardware store – you get the point.)

Local SEO Ranks You Higher On Google

We’re all well-informed on the SEO KPIs you should track to rank on Google.

Two of these are:

- Clicks to your site.

- Keyword ranking increases.

With local SEO, you hit both of these birds with one stone. Note that local rankings depend on the searcher’s distance from the business and on how relevant the business is to the search.

City Pages: Good Or Bad For SEO?

Long ago, in the dark ages of SEO, city pages were used to stuff in local keywords to gain higher rankings on Google.

For example, you’d create a page and write content on flower delivery.

Then, you’d copy your content onto several different pages, each one with a different city in the keyword.

So, a page for [flower delivery in Louisville], [flower delivery in Newark], and [flower delivery in Shelbyville], each with the exact same content.

As tends to be the case, it didn’t take long for Google to notice this spammy tactic.

When it rolled out its Panda Update, it made sure to flag and penalize sites doing it.

So, duplicated city pages can hurt your SEO and get your site penalized.

This brings us to…

How Do I Optimize My Business For Multiple Locations On Google?

1. Use Google Business Profile

Remember, Google’s mission is to organize and deliver the most relevant and reliable information available to online searchers.

Its goal is to give people exactly what they’re looking for.

This means if it can verify your business, you’ll have a higher chance of ranking on the SERPs.

Enter Google Business Profile.

When you register on Google Business Profile, you’re confirming to Google exactly what you offer and where you’re located.

In turn, Google will be confident about sharing your content with searchers.

The good news is Google Business Profile is free and easy to use.

Simply create an account, claim your business, and fill in as much information as possible about it.

Photos and customer reviews (plus replying to reviews) can also help you optimize your Google Business Profile account.

2. Get Into Google’s Local Map Pack

Ever do a local search and get three featured suggestions from Google?

You know, like this.

Yes, these businesses are super lucky.

Chances are that searchers will pick one of them and look no further for their plumbing needs. Tough luck, everyone else.

Of course, this makes it extremely valuable to be one of the three listed in the Local Map Pack. And with the right techniques, you can be.

Here are three things you can do to increase your chances of making it to one of the three coveted slots:

Sign Up For Google Business Profile

As discussed in the previous point, Google prioritizes sites it has verified.

Give Google All Your Details

Provide Google with all your information, including your company’s name, address, phone number, and operating hours.

Photos and other media work splendidly, too. And remember, everyone loves images.

Leverage Your Reviews

The better your reviews, the higher your chances of being featured on Google’s Local Map Pack.

3. Build Your Internal Linking Structure

Did you know that tweaking your internal linking structure will help boost your SEO?

Sure, external links pointing to your site are great.

But you don’t control them. And getting them takes a bit of work. If you can’t get them yet, internal linking will help you:

- Improve your website navigation.

- Show Google which of your site’s pages is most important.

- Improve your website’s architecture.

All these will help you rank higher on Google and increase your chances of discovery by someone doing a local search.
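To see which pages your internal links currently favor, you can count inbound internal links per page. The sketch below is only an illustration: the `site_links` mapping and the `example.com` domain are made-up sample data, and in practice you would fill the mapping from your own crawl.

```python
from collections import Counter
from urllib.parse import urljoin, urlparse


def inbound_link_counts(site_links: dict[str, list[str]], domain: str) -> Counter:
    """Count how many internal links point at each path on `domain`."""
    counts: Counter = Counter()
    for page, hrefs in site_links.items():
        for href in hrefs:
            target = urljoin(page, href)  # resolve relative links
            parsed = urlparse(target)
            # Ignore external links and links from a page to itself.
            if parsed.netloc == domain and target != page:
                counts[parsed.path or "/"] += 1
    return counts


# Hypothetical crawl result: page URL -> links found on that page.
site_links = {
    "https://example.com/": [
        "/locations/louisville",
        "/locations/newark",
        "https://other.example.org/",
    ],
    "https://example.com/locations/louisville": ["/", "/locations/newark"],
    "https://example.com/locations/newark": ["/"],
}

counts = inbound_link_counts(site_links, "example.com")
for path, total in counts.most_common():
    print(path, total)
```

Pages that matter to you but sit at the bottom of this list are candidates for more internal links.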

4. Build Your NAP Citations

NAP stands for name, address, and phone number.

More generally, it refers to your business information as it appears online.

The first place you want your NAP on is your website.

A good rule of thumb is to put this information at the bottom of your homepage, which is where visitors expect to find it.

Screenshot from allweekplumbing.com, November 2022

It’s also great to list your business information on online data aggregators.

These aggregators provide data to top sites like TripAdvisor, Yelp, and Microsoft Bing.

Here are some of the big ones you shouldn’t miss.

Listing your website on all the top aggregators sounds tedious, but it’s worthwhile if you want to get a feature like this.

Screenshot from Trip Advisor, November 2022

Important note: Make sure that your NAPs are consistent throughout the web.

One mistake can seriously hurt your chances of getting featured on Google’s Local Map Pack or on sites like Yelp and TripAdvisor.
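If you track your listings in a spreadsheet or script, a quick consistency check might look like the sketch below. The normalization rules and the sample listings are assumptions for illustration only; real aggregators may format data differently, and this check deliberately treats "St" and "Street" as a mismatch so you can review it by hand.

```python
import re


def normalize_nap(name: str, address: str, phone: str) -> tuple[str, str, str]:
    """Reduce a NAP record to a comparable form (illustrative rules only)."""
    def clean(text: str) -> str:
        # Lowercase, drop punctuation, and collapse whitespace.
        text = re.sub(r"[^\w\s]", "", text.lower())
        return re.sub(r"\s+", " ", text).strip()

    digits = re.sub(r"\D", "", phone)  # keep only the digits of the phone number
    return clean(name), clean(address), digits


def find_mismatches(listings: dict[str, tuple[str, str, str]]) -> list[str]:
    """Return the sources whose NAP differs from the first listing."""
    normalized = {src: normalize_nap(*nap) for src, nap in listings.items()}
    if not normalized:
        return []
    reference = next(iter(normalized.values()))
    return [src for src, nap in normalized.items() if nap != reference]


# Hypothetical listings for one location.
listings = {
    "website": ("Example Plumbing Co.", "12 Main St., Louisville, KY", "(502) 555-0100"),
    "directory_a": ("Example Plumbing Co", "12 Main St, Louisville, KY", "502-555-0100"),
    "directory_b": ("Example Plumbing Co.", "12 Main Street, Louisville, KY", "502-555-0100"),
}

print(find_mismatches(listings))
```

The first listing is treated as the reference, so put the version from your own website first.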

5. Use Schema Markup

Sometimes called structured data or simply schema, schema markup on your website can significantly affect your local SEO results.

But if you’re not a developer, it can look intimidating.

Don’t worry – it’s not as difficult to use as you might think.

A collaboration between Google, Yahoo, Yandex, and Microsoft, Schema.org was founded in 2011 to establish a common vocabulary between search engines.

While it can be used to improve the appearance of your search result, help you appear for relevant queries, and increase visitor time spent on a page, Google has been very clear that it does not impact search rankings.

So, why are we talking about it here? Because it does improve the chances of your content being used for rich results, making you more eye-catching and improving click-through rates.

On top of that, Schema.org provides several business types and properties relevant to local SEO, allowing you to select the category that fits your business.

By selecting Schema.org/Bakery for your cupcake shop, you’re helping search engines better understand the topic of your website.

After you’ve selected the right category, you need to select the sub-properties to ensure validation. This includes the business name, hours, the area served, etc.

The Schema.org/areaServed on the local landing page should always match the service areas set up in a Google Business Profile, AND your local landing page should mention those same towns in its on-page content.

For a full list of required and recommended schema properties and information on validating your structured data, read this article. You can also find more information about adding Schema markup to WordPress using a plugin.
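To make the idea concrete, here is a minimal sketch that builds JSON-LD markup for a local landing page. The business details are invented, and the helper name `local_business_jsonld` is hypothetical; only the Schema.org type and property names (`Bakery`, `PostalAddress`, `areaServed`, and so on) come from the vocabulary itself. Treat it as a starting point and validate the output before publishing.

```python
import json


def local_business_jsonld(name, telephone, street, city, region,
                          postal_code, areas_served, business_type="Bakery"):
    """Build a JSON-LD <script> tag for one location (illustrative only)."""
    data = {
        "@context": "https://schema.org",
        "@type": business_type,
        "name": name,
        "telephone": telephone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
        },
        # Keep this list in sync with the service areas in Google Business Profile.
        "areaServed": list(areas_served),
    }
    # Escape "</" so a value can never close the script tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


tag = local_business_jsonld(
    name="Example Cupcake Shop",  # made-up business
    telephone="+1-502-555-0100",
    street="12 Main St",
    city="Louisville",
    region="KY",
    postal_code="40202",
    areas_served=["Louisville", "Shelbyville"],
)
print(tag)
```

The printed tag goes in the HTML of the matching local landing page, one tag per location.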

6. Optimize Your Site For Mobile

If you wake up in the middle of the night to find your bathroom flooding with water from an exploded faucet, do you:

- Run to your laptop and do a local search for the best emergency plumber.

- Grab your phone and type “emergency plumber” into your Chrome app.

If it’s 3 a.m., chances are you chose the second option.

But here’s the thing.

People don’t only choose their smartphones over their computers at 3 a.m.

They do it all the time.

Almost 59% of all website traffic comes from a mobile device.

Screenshot from Statista, November 2022

As usual, Google noticed and moved to mobile-first indexing.

All this means your site has to be optimized for mobile if you want to rank well on Google, especially for local SEO.

Here are six tips on making your website mobile-friendly:

- Make sure your website is responsive and fits nicely into different screen sizes.

- Don’t make your buttons too small.

- Prioritize large fonts.

- Forget about pop-ups and text blockers.

- Put your important information front and center.

- If you’re using WordPress, choose mobile-friendly themes.
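The first tip is the easiest to spot-check with a script. A responsive page normally declares a viewport meta tag, so the rough sketch below looks for one in a page’s HTML. It is only a first-pass signal under that assumption, not a full mobile-friendliness test, and the sample HTML strings are made up.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Record the content of the first-seen <meta name="viewport"> tag."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        if tag == "meta":
            attr = dict(attrs)
            if (attr.get("name") or "").lower() == "viewport":
                self.viewport = attr.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """Return True if the page sets width=device-width in its viewport tag."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    return "width=device-width" in finder.viewport.replace(" ", "")


responsive_page = (
    '<html><head><meta name="viewport" '
    'content="width=device-width, initial-scale=1"></head><body></body></html>'
)
fixed_page = "<html><head><title>Old layout</title></head><body></body></html>"

print(has_responsive_viewport(responsive_page))
print(has_responsive_viewport(fixed_page))
```

A page that fails this check is worth opening on a real phone before anything else.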

Bonus Tip: Make Your Most Important City Pages Unique

If you want to name all the cities in a region you serve, just list them on the page. In most cases, you don’t need an individual page for each city to rank.

If you do create a page per city, write original content for each area or city to make the pages different.

In other words, it’s up to you.

You can simply list all the cities you serve on one page.

Or you can go ahead and create individual pages for each city.

When you take this step, make sure each page’s content is unique.

And no, I don’t mean simply changing the word “hand-wrestling” to “arm-wrestling.”

You need to do extra research on your targeted location, then go ahead and write specific and helpful information for readers in the area.

For example:

- If you’re a plumber, talk about the problem of hard water in the area.

- If you’re a florist, explain how you grow your plants in the local climate.

- If you’re into real estate, talk about communities in the area.

Here’s an excellent example from 7th State Builders.

Adding information about a city or town is also a great way to build your client’s confidence.

A OnePoll survey conducted on behalf of CG Roxane found 67% of people trust local businesses. By showing that you understand the situations and issues in a locale, you signal that you’re local, even if you have multiple locations spread throughout the country.

They’ll see how much you know their area and trust you to solve their area-specific problems.
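If you already have several city pages, a rough way to spot the copy-and-swap-the-city pattern is to compare their text pairwise. The sketch below uses Python’s standard `difflib` for this. The 0.8 threshold and the sample page texts are assumptions chosen for illustration, not a rule Google publishes.

```python
from difflib import SequenceMatcher
from itertools import combinations


def similarity(a: str, b: str) -> float:
    """Word-level similarity between two texts, from 0.0 to 1.0."""
    return SequenceMatcher(None, a.lower().split(), b.lower().split()).ratio()


def flag_near_duplicates(pages: dict[str, str],
                         threshold: float = 0.8) -> list[tuple[str, str, float]]:
    """Return page pairs whose text similarity meets the threshold."""
    flagged = []
    for (name_a, text_a), (name_b, text_b) in combinations(pages.items(), 2):
        score = similarity(text_a, text_b)
        if score >= threshold:
            flagged.append((name_a, name_b, round(score, 2)))
    return flagged


# Made-up page copy: two pages differ only by city name, one is written for its area.
pages = {
    "louisville": "We offer same-day flower delivery in Louisville with fresh bouquets for every occasion.",
    "newark": "We offer same-day flower delivery in Newark with fresh bouquets for every occasion.",
    "shelbyville": "Shelbyville summers are humid, so our growers favor heat-tolerant zinnias and deliver them before noon.",
}

for name_a, name_b, score in flag_near_duplicates(pages):
    print(name_a, name_b, score)
```

Any pair this flags is a candidate for a rewrite with genuinely local detail.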

Important note: Ensure this information appears on every version of your website, including the mobile one.

With Google’s mobile-first index in place, you don’t want to fall in the rankings simply because you failed to optimize for mobile.

5 Tools To Scale Your Local SEO

What if you have 100s of locations? How do you manage listings for them?

Here are some tools to help you scale your local SEO efforts.

Ready To Target Local SEO?

Hopefully, I’ve made it very clear by this point – local SEO is important. And just because you’re running multiple locations in different cities doesn’t mean you can’t put it to work for you.

How you go about that is up to you. Do you want to create one landing page for each location? Or do you want to list all your locations on the same page?

Whatever you choose, be aware of the power of local SEO in attracting customers to your neighborhood.

Just make sure you’re doing it correctly. If, after reading this piece, you’re still unsure what steps to take, just imagine yourself as a customer.

What kind of information would you be looking for?

What would convince you that a business is perfect for your needs?

There’s a good chance location will be one of the driving factors, and the best way to take advantage of that is with local SEO.


Featured Image: New Africa/Shutterstock

SEO

brightonSEO Live Blog

Hello everyone. It’s April again, so I’m back in Brighton for another two days of sun, sea, and SEO! Being the introvert I am, my idea of fun isn’t hanging around our booth all day explaining we’ve run out of t-shirts (seriously, you need to be fast if you want swag!). So I decided to do something useful and live-blog the event instead.

Follow below for talk takeaways and (very) mildly humorous commentary.

SEO

Google Further Postpones Third-Party Cookie Deprecation In Chrome

Google has again delayed its plan to phase out third-party cookies in the Chrome web browser. The latest postponement comes after ongoing challenges in reconciling feedback from industry stakeholders and regulators.

The announcement was made in Google and the UK’s Competition and Markets Authority (CMA) joint quarterly report on the Privacy Sandbox initiative, scheduled for release on April 26.

Chrome’s Third-Party Cookie Phaseout Pushed To 2025

Google states it “will not complete third-party cookie deprecation during the second half of Q4” this year as planned.

Instead, the tech giant aims to begin deprecating third-party cookies in Chrome “starting early next year,” assuming an agreement can be reached with the CMA and the UK’s Information Commissioner’s Office (ICO).

The statement reads:

“We recognize that there are ongoing challenges related to reconciling divergent feedback from the industry, regulators and developers, and will continue to engage closely with the entire ecosystem. It’s also critical that the CMA has sufficient time to review all evidence, including results from industry tests, which the CMA has asked market participants to provide by the end of June.”

Continued Engagement With Regulators

Google reiterated its commitment to “engaging closely with the CMA and ICO” throughout the process and hopes to conclude discussions this year.

This marks the third delay to Google’s plan to deprecate third-party cookies, which initially targeted a Q3 2023 phaseout before being pushed back to late 2024.

The postponements reflect the challenges in transitioning away from cross-site user tracking while balancing privacy and advertiser interests.

Transition Period & Impact

In January, Chrome began restricting third-party cookie access for 1% of users globally. This percentage was expected to gradually increase until 100% of users were covered by Q3 2024.

However, the latest delay gives websites and services more time to migrate away from third-party cookie dependencies through Google’s limited “deprecation trials” program.

The trials offer temporary cookie access extensions until December 27, 2024, for non-advertising use cases that can demonstrate direct user impact and functional breakage.

While easing the transition, the trials have strict eligibility rules. Advertising-related services are ineligible, and origins matching known ad-related domains are rejected.

Google states the program aims to address functional issues rather than relieve general data collection inconveniences.

Publisher & Advertiser Implications

The repeated delays highlight the potential disruption for digital publishers and advertisers relying on third-party cookie tracking.

Industry groups have raised concerns that restricting cross-site tracking could push websites toward more opaque, privacy-invasive practices.

However, privacy advocates view the phaseout as crucial in preventing covert user profiling across the web.

With the latest postponement, all parties have more time to prepare for the eventual loss of third-party cookies and adopt Google’s proposed Privacy Sandbox APIs as replacements.

Featured Image: Novikov Aleksey/Shutterstock

SEO

How To Write ChatGPT Prompts To Get The Best Results

ChatGPT is a game changer in the field of SEO. This powerful language model can generate human-like content, making it an invaluable tool for SEO professionals.

However, the prompts you provide largely determine the quality of the output.

To unlock the full potential of ChatGPT and create content that resonates with your audience and search engines, writing effective prompts is crucial.

In this comprehensive guide, we’ll explore the art of writing prompts for ChatGPT, covering everything from basic techniques to advanced strategies for layering prompts and generating high-quality, SEO-friendly content.

Writing Prompts For ChatGPT

What Is A ChatGPT Prompt?

A ChatGPT prompt is an instruction or discussion topic a user provides for the ChatGPT AI model to respond to.

The prompt can be a question, statement, or any other stimulus to spark creativity, reflection, or engagement.

Users can use the prompt to generate ideas, share their thoughts, or start a conversation.

ChatGPT prompts are designed to be open-ended and can be customized based on the user’s preferences and interests.

How To Write Prompts For ChatGPT

Start by giving ChatGPT a writing prompt, such as, “Write a short story about a person who discovers they have a superpower.”

ChatGPT will then generate a response based on your prompt. Depending on the prompt’s complexity and the level of detail you requested, the answer may be a few sentences or several paragraphs long.

Use the ChatGPT-generated response as a starting point for your writing. You can take the ideas and concepts presented in the answer and expand upon them, adding your own unique spin to the story.

If you want to generate additional ideas, try asking ChatGPT follow-up questions related to your original prompt.

For example, you could ask, “What challenges might the person face in exploring their newfound superpower?” Or, “How might the person’s relationships with others be affected by their superpower?”

Remember that ChatGPT’s answers are generated by artificial intelligence and may not always be perfect or exactly what you want.

However, they can still be a great source of inspiration and help you start writing.

Must-Have GPTs Assistant

I recommend installing the WebBrowser Assistant created by the OpenAI Team. This tool allows you to add relevant Bing results to your ChatGPT prompts.

This assistant adds the first web results to your ChatGPT prompts for more accurate and up-to-date conversations.

It is very easy to install in only two clicks. (Click on Start Chat.)

For example, if I ask, “Who is Vincent Terrasi?,” ChatGPT has no answer.

With WebBrowser Assistant, the assistant creates a new prompt with the first Bing results, and now ChatGPT knows who Vincent Terrasi is.

Screenshot from ChatGPT, March 2023

You can test other GPT assistants available in the GPTs search engine if you want to use Google results.

Master Reverse Prompt Engineering

ChatGPT can be an excellent tool for reverse engineering prompts because it generates natural and engaging responses to any given input.

By analyzing the prompts generated by ChatGPT, it is possible to gain insight into the model’s underlying thought processes and decision-making strategies.

One key benefit of using ChatGPT to reverse engineer prompts is that the model is highly transparent in its decision-making.

This means that the reasoning and logic behind each response can be traced, making it easier to understand how the model arrives at its conclusions.

Once you’ve done this a few times for different types of content, you’ll gain insight into crafting more effective prompts.

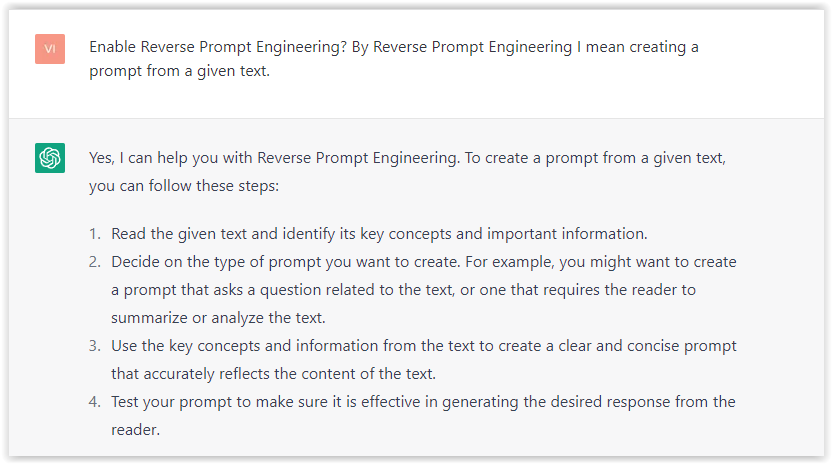

Prepare Your ChatGPT For Generating Prompts

First, activate the reverse prompt engineering.

- Type the following prompt: “Enable Reverse Prompt Engineering? By Reverse Prompt Engineering I mean creating a prompt from a given text.”

Screenshot from ChatGPT, March 2023

ChatGPT is now ready to generate your prompt. You can test the product description in a new chatbot session and evaluate the generated prompt.

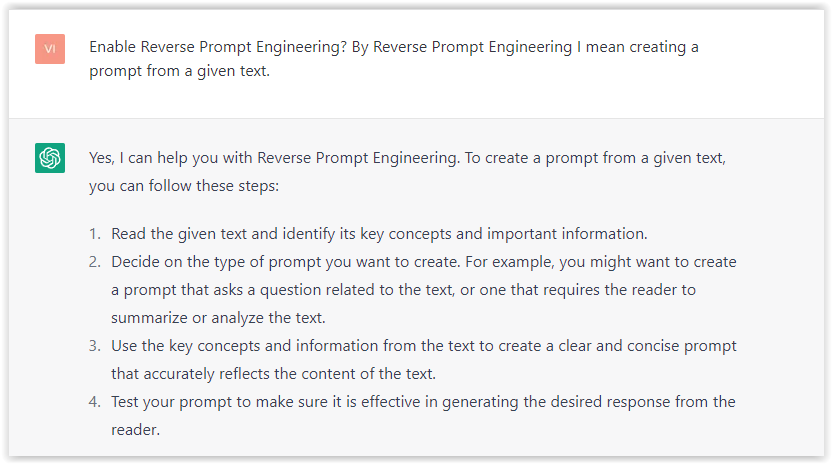

- Type: “Create a very technical reverse prompt engineering template for a product description about iPhone 11.”

Screenshot from ChatGPT, March 2023

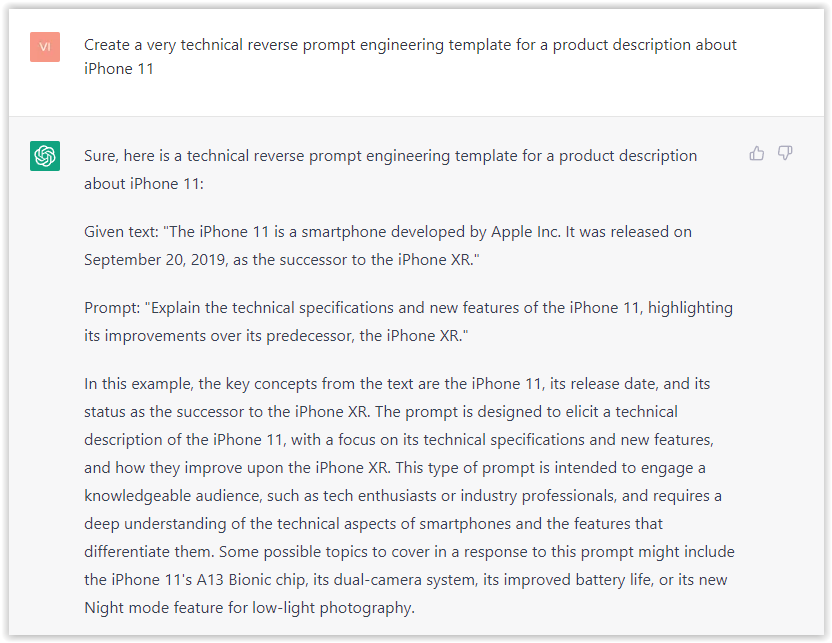

The result is amazing. You can test with a full text that you want to reproduce. Here is an example of a prompt for selling a Kindle on Amazon.

- Type: “Reverse Prompt engineer the following {product}, capture the writing style and the length of the text:

product =”

Screenshot from ChatGPT, March 2023

I tested it on an SEJ blog post. Enjoy the analysis – it is excellent.

- Type: “Reverse Prompt engineer the following {text}, capture the tone and writing style of the {text} to include in the prompt :

text = all text coming from https://www.searchenginejournal.com/google-bard-training-data/478941/”

Screenshot from ChatGPT, March 2023

But be careful not to use ChatGPT to generate your texts. It is just a personal assistant.
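If you reuse the reverse prompt template often, you can build it with a small helper instead of retyping it. The sketch below only assembles the prompt string in the pattern shown above. The function name `reverse_prompt` and its parameters are hypothetical, and it does not call ChatGPT; you still paste the result into a chat session yourself.

```python
def reverse_prompt(text: str,
                   capture: tuple[str, ...] = ("tone", "writing style")) -> str:
    """Assemble a reverse prompt engineering request for a given text."""
    traits = " and ".join(capture)
    return (
        # {text} is kept as a literal placeholder, as in the template above.
        "Reverse Prompt engineer the following {text}, "
        f"capture the {traits} of the {{text}} to include in the prompt:\n\n"
        f"text = {text}"
    )


sample = "Fresh cupcakes baked every morning in downtown Louisville."
print(reverse_prompt(sample))
print()
print(reverse_prompt(sample, capture=("writing style", "length")))
```

Changing `capture` lets you switch between the tone-focused and length-focused variants without rewriting the whole prompt.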

Go Deeper

Prompts and examples for SEO:

- Keyword research and content ideas prompt: “Provide a list of 20 long-tail keyword ideas related to ‘local SEO strategies’ along with brief content topic descriptions for each keyword.”

- Optimizing content for featured snippets prompt: “Write a 40-50 word paragraph optimized for the query ‘what is the featured snippet in Google search’ that could potentially earn the featured snippet.”

- Creating meta descriptions prompt: “Draft a compelling meta description for the following blog post title: ‘10 Technical SEO Factors You Can’t Ignore in 2024’.”

Important Considerations:

- Always Fact-Check: While ChatGPT can be a helpful tool, it’s crucial to remember that it may generate inaccurate or fabricated information. Always verify any facts, statistics, or quotes generated by ChatGPT before incorporating them into your content.

- Maintain Control and Creativity: Use ChatGPT as a tool to assist your writing, not replace it. Don’t rely on it to do your thinking or create content from scratch. Your unique perspective and creativity are essential for producing high-quality, engaging content.

- Iteration is Key: Refine and revise the outputs generated by ChatGPT to ensure they align with your voice, style, and intended message.

Additional Prompts for Rewording and SEO:

- Rewrite this sentence to be more concise and impactful.

- Suggest alternative phrasing for this section to improve clarity.

- Identify opportunities to incorporate relevant internal and external links.

- Analyze the keyword density and suggest improvements for better SEO.

Remember, while ChatGPT can be a valuable tool, it’s essential to use it responsibly and maintain control over your content creation process.

Experiment And Refine Your Prompting Techniques

Writing effective prompts for ChatGPT is an essential skill for any SEO professional who wants to harness the power of AI-generated content.

Hopefully, the insights and examples shared in this article can inspire you and help guide you to crafting stronger prompts that yield high-quality content.

Remember to experiment with layering prompts, iterating on the output, and continually refining your prompting techniques.

This will help you stay ahead of the curve in the ever-changing world of SEO.


Featured Image: Tapati Rinchumrus/Shutterstock
