Pro-Tech SEO Checklist For Agencies

This post was sponsored by JetOctopus. The opinions expressed in this article are the sponsor’s own.

When you’re taking on large-scale projects or working with extensive websites with hundreds to thousands of pages, you must leverage advanced technical SEO techniques.

Large websites come with challenges such as vast site architectures, dynamic content, and higher-stakes competition to maintain rankings.

Leveling up your team’s technical SEO chops can help you establish a stronger value proposition, ensuring your clients gain that extra initial edge and choose to continue growing with your agency.

With this in mind, here’s a concise checklist covering the most important nuances of advanced technical SEO that can lead your clients to breakthrough performance in the SERPs.

1. Advanced Indexing And Crawl Control

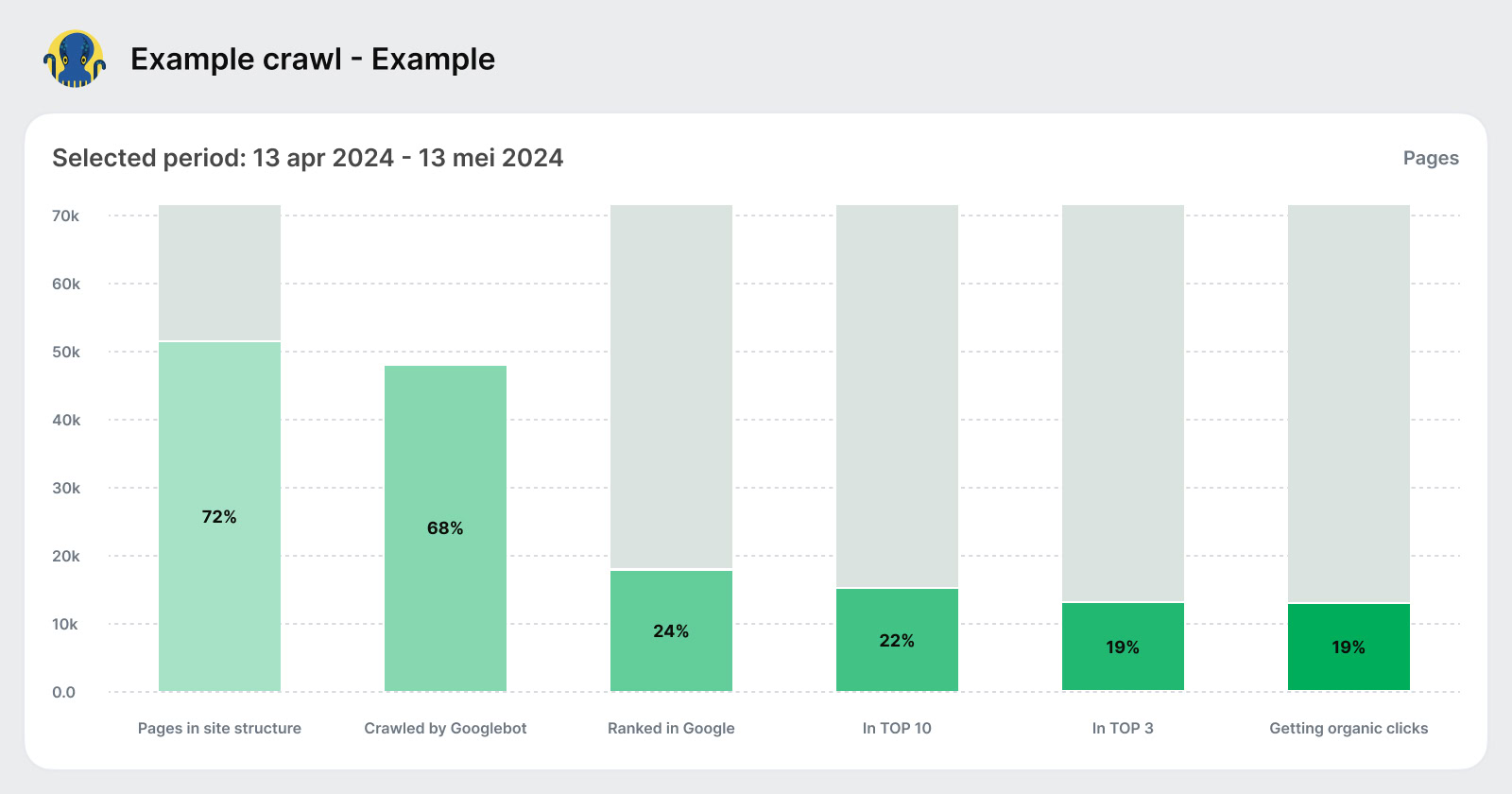

Optimizing search engine crawl and indexation is foundational for effective technical SEO. Managing your crawl budget effectively begins with log file analysis—a technique that offers direct insights into how search engines interact with your clients’ websites.

A log file analysis helps with:

- Managing Crawl Budget: Ensures Googlebot crawls and indexes your most valuable pages. Log file analysis shows how many pages are crawled daily and whether important sections are missed.

- Identifying Non-Crawled Pages: Reveals pages Googlebot misses due to issues like slow loading times, poor internal linking, or unappealing content, giving you clear insights into necessary improvements.

- Understanding Googlebot Behavior: Shows what Googlebot actually crawls each day. Spikes in crawl activity may signal technical issues on the website, such as auto-generated thin or low-quality pages.

For this, integrating your SEO log analyzer data with GSC crawl data provides a complete view of site functionality and search engine interactions, enhancing your ability to guide crawler behavior.
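
As a rough illustration of the log file analysis described above, here is a minimal Python sketch that counts Googlebot requests per day and per top-level site section. It assumes logs in the common "combined" access-log format and matches on the user-agent string only; the sample lines, IP addresses, and paths are made up for the example.

```python
import re
from collections import Counter

# Assumption: logs use the common "combined" access-log layout.
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[(?P<day>[^:]+):[^\]]+\] '
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_hits(lines):
    """Count Googlebot requests per (day, top-level site section)."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        # User-agent strings can be spoofed; this sketch does not verify
        # the requester (for example via reverse DNS).
        if not match or "Googlebot" not in match["agent"]:
            continue
        # "/catalog/shoes?page=2" -> "/catalog"
        first_segment = match["path"].lstrip("/").split("/", 1)[0]
        section = "/" + first_segment.split("?", 1)[0]
        hits[(match["day"], section)] += 1
    return hits

# Made-up sample lines for illustration only.
SAMPLE = [
    '66.249.66.1 - - [10/Jan/2025:06:12:01 +0000] "GET /catalog/shoes?page=2 HTTP/1.1" '
    '200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Jan/2025:06:12:09 +0000] "GET /catalog/bags HTTP/1.1" '
    '200 4810 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Jan/2025:06:13:44 +0000] "GET /blog/post HTTP/1.1" '
    '200 9100 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

for (day, section), count in sorted(googlebot_hits(SAMPLE).items()):
    print(day, section, count)
```

Sections that never show up in the counts, or days with sudden jumps, are the places to look first when comparing against GSC crawl data.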

Next, structure robots.txt to keep search engines out of admin areas or low-value add-ons while ensuring they can access and index primary content. Alternatively, use the X-Robots-Tag, an HTTP response header, to control indexing at a more granular level than robots.txt. It is particularly useful for non-HTML files like images or PDFs, where robots meta tags can’t be used.
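
To make both controls concrete, the sketch below checks a hypothetical robots.txt ruleset with Python's standard-library parser and picks an X-Robots-Tag header for PDF files. The rules, the example.com URLs, and the helper names are assumptions for illustration; the standard-library parser may also differ from Googlebot's own rule matching in edge cases.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of admin and cart pages only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""

def is_crawlable(url, user_agent="Googlebot"):
    """Check a URL against the ruleset above."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)

def x_robots_headers(path):
    """Return extra response headers for files that cannot carry a robots meta tag."""
    if path.lower().endswith(".pdf"):
        return {"X-Robots-Tag": "noindex"}
    return {}

print(is_crawlable("https://example.com/products/shoes"))
print(is_crawlable("https://example.com/admin/login"))
print(x_robots_headers("/files/price-list.pdf"))
```

Running a check like this against a list of priority URLs before deploying a robots.txt change is a cheap way to catch an accidental block.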

For large websites, the approach to sitemaps differs from what you may be used to. There is little point in putting millions of URLs into sitemaps and expecting Googlebot to crawl them all. Instead, generate sitemaps daily that contain only new products, categories, and pages. This helps Googlebot find new content and makes your sitemaps more efficient. For instance, DOM.RIA, a Ukrainian real estate marketplace, implemented a strategy that included creating mini-sitemaps for each city directory to improve indexing. This approach significantly increased Googlebot visits (by over 200% for key pages), leading to enhanced content visibility and click-through rates from the SERPs.
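
A daily "fresh content" sitemap could be generated along these lines. This is only a sketch: the page list, the URLs, and the one-day window are assumptions, and a real site would pull this data from its own database or CMS.

```python
from datetime import date, timedelta
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_fresh_sitemap(pages, today, max_age_days=1):
    """Return sitemap XML listing only pages modified within max_age_days."""
    cutoff = today - timedelta(days=max_age_days)
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        if last_modified < cutoff:
            continue  # older pages stay out of the daily sitemap
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = last_modified.isoformat()
    return ET.tostring(urlset, encoding="unicode")

# Hypothetical pages: (URL, last modification date).
PAGES = [
    ("https://example.com/kyiv/new-listing", date(2025, 1, 10)),
    ("https://example.com/kyiv/old-listing", date(2024, 6, 1)),
]

print(build_fresh_sitemap(PAGES, today=date(2025, 1, 10)))
```

The same function can be called once per city or category to produce mini-sitemaps, with each call receiving only that section's pages.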

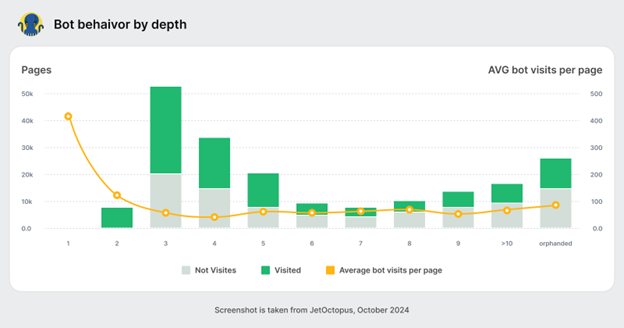

2. Site Architecture And Navigation

An intuitive site structure aids both users and search engine crawlers in navigating the site efficiently, enhancing overall SEO performance.

Specifically, a flat site architecture minimizes the number of clicks required to reach any page on your site, making it easier for search engines to crawl and index your content. It enhances site crawling efficiency by reducing the depth of important content. This improves the visibility of more pages in search engine indexes.

So, organize (or restructure) content with a shallow hierarchy, as this facilitates quicker access and better link equity distribution across your site.
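
One way to check how flat a site really is: compute each page's click depth from the homepage with a breadth-first search over the internal link graph. The sketch below assumes you already have that graph (for example, from a crawler export); the URLs and the depth threshold of 2 are illustrative only.

```python
from collections import deque

def click_depths(links, home="/"):
    """Return the minimum number of clicks from home to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical internal link graph: page -> pages it links to.
LINKS = {
    "/": ["/shoes", "/bags"],
    "/shoes": ["/shoes/running"],
    "/shoes/running": ["/shoes/running/model-x"],
}

depths = click_depths(LINKS)
deep_pages = [page for page, depth in depths.items() if depth > 2]
print(deep_pages)
```

Pages that land in `deep_pages`, or that are missing from `depths` entirely, are candidates for extra internal links from shallower pages.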

For enterprise eCommerce clients, in particular, ensure proper handling of dynamic parameters in URLs. Use the rel="canonical" link element to point search engines to the original page, so that parameterized URLs do not end up indexed as duplicates.

Similarly, product variations (such as color and size) can create multiple URLs with similar content. It depends on the particular case, but the general rule is to apply the canonical tag so that all variations point back to the preferred URL version of the product page for indexing. If Google ignores the canonical tag on a significant number of such pages and indexes the non-canonical versions anyway, consider reviewing the canonicalization approach on the website.
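
Here is a small sketch of how a canonical URL might be derived from a parameterized one. The list of parameters to strip is an assumption and will differ per site (some sites, for instance, want color variants indexed separately); the shop.example.com URLs are placeholders.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these parameters never change the main content of a page.
NON_CANONICAL_PARAMS = {
    "utm_source", "utm_medium", "utm_campaign", "sort", "color", "size",
}

def canonical_url(url):
    """Drop non-canonical query parameters and any fragment from a URL."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in NON_CANONICAL_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

def canonical_link_tag(url):
    """Build the link element to place in the page's head."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}">'

print(canonical_link_tag(
    "https://shop.example.com/p/sneaker?color=red&size=42&utm_source=mail"
))
```

Every variant URL of the sneaker page then emits the same tag, which is the behavior the general rule above calls for.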

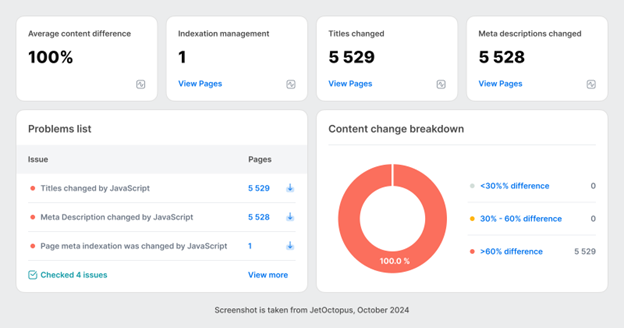

3. JavaScript SEO

As you know, JavaScript (JS) is crucial in modern web development, enhancing site interactivity and functionality but introducing unique SEO challenges. Even if you’re not directly involved in development, ensuring effective JavaScript SEO is important.

The foremost consideration in this regard is critical rendering path optimization — wait, what’s that?

The critical rendering path refers to the sequence of steps the browser must take to convert HTML, CSS, and JavaScript into a rendered web page. Optimizing this path is crucial for improving the speed at which a page becomes visible to users.

Here’s how to do it:

- Reduce the number and size of the resources required to display initial content.

- Minify JavaScript files to reduce their load time.

- Prioritize loading of above-the-fold content to speed up page render times.

If you’re dealing with Single Page Applications (SPAs), which rely on JavaScript for dynamic content loading, then you might need to fix:

- Indexing Issues: Since content is loaded dynamically, search engines might see a blank page. Implement Server-Side Rendering (SSR) to ensure content is visible to search engines upon page load.

- Navigation Problems: Traditional link-based navigation is often absent in SPAs, affecting how search engines understand site structure. Use the HTML5 History API to maintain traditional navigation functionality and improve crawlability.

Dynamic rendering is another technique useful for JavaScript-heavy sites, serving static HTML versions to search engines while presenting interactive versions to users.

Whichever approach you use, check that the browser console shows no errors, which confirms the page is fully rendered with all necessary content. Also, verify that pages load quickly, ideally within a couple of seconds, to prevent user frustration (nobody likes a prolonged loading spinner) and reduce bounce rates.

Employ tools like GSC and Lighthouse to test and monitor your site’s rendering and web vitals performance. Regularly check that the rendered content matches what users see to ensure consistency in what search engines index.
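
A simple parity check can flag content that exists only after JavaScript runs. The sketch below compares the visible words in the raw server response with those in the rendered DOM. It assumes you have captured both HTML strings yourself (for example, from a plain HTTP fetch and from a headless browser); the two sample documents are made up.

```python
from html.parser import HTMLParser

class _TextExtractor(HTMLParser):
    """Collect lowercase words from text nodes, skipping script and style."""

    def __init__(self):
        super().__init__()
        self.words = set()
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.words.update(data.lower().split())

def visible_words(html):
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.words

def words_missing_from_raw(raw_html, rendered_html):
    """Words that appear only after rendering, i.e. that depend on JavaScript."""
    return visible_words(rendered_html) - visible_words(raw_html)

# Made-up example: an SPA shell versus its rendered output.
RAW = '<html><body><div id="app"></div><script>render()</script></body></html>'
RENDERED = '<html><body><div id="app"><h1>Trail Shoes</h1></div></body></html>'

print(sorted(words_missing_from_raw(RAW, RENDERED)))
```

A large set of missing words on a key template suggests that the page depends heavily on client-side rendering and is worth testing in GSC's URL inspection.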

4. Optimizing For Seasonal Trends

In the retail eCommerce space, seasonal trends influence consumer behavior and, consequently, search queries.

So, for these projects, you must routinely adapt your SEO strategies to stay on par with any product line updates.

Seasonal product variations—such as holiday-specific items or summer/winter editions—require special attention to ensure they are visible at the right times:

- Timely Content Updates: Update product descriptions, meta tags, and content with seasonal keywords well before the season begins.

- Seasonal Landing Pages: Create and optimize dedicated landing pages for seasonal products, ensuring they link appropriately to main product categories.

- Ongoing Keyword Research: Continually perform keyword research to capture evolving consumer interests and optimize new product categories accordingly.

- Technical SEO: Regularly check for crawl errors, ensure fast load times, and confirm that new pages are mobile-friendly and accessible.

On the flip side, managing discontinued products or outdated pages is just as crucial in maintaining site quality and retaining SEO value:

- Evaluate Page Value: Conduct regular content audits to assess whether a page still holds value. If a page hasn’t received any traffic or a bot hit in the last half-year, it might not be worth keeping.

- 301 Redirects: Use 301 redirects to transfer SEO value from outdated pages to relevant existing content.

- Prune Content: Remove or consolidate underperforming content to focus authority on more impactful pages, enhancing site structure and UX.

- Informative Out-of-Stock Pages: Keep pages for seasonally unavailable products informative, providing availability dates or links to related products.
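
The audit rules above can be turned into a first-pass triage script. The following sketch is one possible reading of them, not a fixed policy: the 182-day window stands in for "the last half-year", a page counts as active if it had either a visit or a bot hit in that window, and the function name and action labels are invented for the example.

```python
from datetime import date, timedelta

def audit_page(last_visit, last_bot_hit, today, replacement_url=None,
               stale_after_days=182):
    """Suggest an action for a discontinued or outdated page.

    last_visit and last_bot_hit are dates, or None if never recorded.
    """
    cutoff = today - timedelta(days=stale_after_days)
    recent = [d for d in (last_visit, last_bot_hit) if d is not None and d >= cutoff]
    if recent:
        return "keep"
    if replacement_url:
        # Assumption: a relevant replacement page exists to receive the redirect.
        return f"301 -> {replacement_url}"
    return "review for pruning"

today = date(2025, 1, 10)
print(audit_page(date(2025, 1, 2), None, today))
print(audit_page(date(2024, 1, 1), date(2024, 2, 1), today, "/shoes"))
print(audit_page(None, None, today))
```

Treat the output as a shortlist for manual review; seasonal pages in particular may look stale in the off-season and still deserve to stay.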

Put simply, optimizing for seasonal trends means preparing for high-traffic periods and effectively managing the transition periods. This supports sustained SEO performance and a streamlined site experience for your clients.

5. Structured Data And Schema Implementation

Structured data via schema.org markup is a powerful tool to enhance a site’s SERP visibility and boost CTR through rich snippets.

Advanced schema markup goes beyond basic implementation, allowing you to present more detailed and specific information in SERPs. Consider these schema markups in your next client campaign:

- Nested Schema: Utilize nested schema objects to provide more detailed information. For example, a Product schema can include nested Offer and Review schemas to display prices and reviews in search results.

- Event Schema: For clients promoting events, implementing an Event schema with nested attributes like startDate, endDate, location, and offers can help in displaying rich snippets that show event details directly in SERPs.

- FAQ and How-To Pages: Implement FAQPage and HowTo schemas on relevant pages to provide direct answers in search results.

- Ratings, Reviews, and Prices: Implement the AggregateRating and Review schema on product pages to display star ratings and reviews. Use the Offer schema to specify pricing information, making the listings more attractive to potential buyers.

- Availability Status: Use the ItemAvailability schema to display stock status, which can increase the urgency and likelihood of a purchase from SERPs.

- Blog Enhancements: For content-heavy sites, use Article schema with properties like headline, author, and datePublished to enhance the display of blog articles.
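
As an example of nested schema, the sketch below builds JSON-LD for a Product with a nested Offer (price and availability) and AggregateRating. The function name and the sample values are placeholders; the schema.org types and properties used are Product, Offer, AggregateRating, price, priceCurrency, availability, ratingValue, and reviewCount.

```python
import json

def product_json_ld(name, price, currency, in_stock, rating, review_count):
    """Return a script tag with Product markup, including nested Offer and rating."""
    availability = "InStock" if in_stock else "OutOfStock"
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": f"https://schema.org/{availability}",
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    # Escape "</" so text such as a product name cannot close the script tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">' + payload + "</script>"

# Placeholder values for illustration.
print(product_json_ld("Trail Shoe", 89.9, "USD", True, 4.6, 128))
```

Generating the markup from the same data that renders the page helps keep prices, stock status, and ratings in the snippet consistent with what users see.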

Use the Schema Markup Validator (the successor to Google’s retired Structured Data Testing Tool) to test your pages’ structured data and identify any errors or warnings in your schema implementation. Also, use Google’s Rich Results Test to get feedback on how your page may appear in SERPs with the implemented structured data.

Conclusion

Given their long SEO history and legacy, enterprise-level websites require deeper analysis from several perspectives.

We hope this mini checklist serves as a starting point for your team to take a fresh look at your new and existing clients’ websites and helps you deliver great SEO results.

Image Credits

Featured Image: Image by JetOctopus. Used with permission.

In-Post Images: Image by JetOctopus. Used with permission.
