
Screaming Frog SEO Spider Version 20.0: AI-Powered Features

For SEO experts, our toolkit is crucial: it’s how we quickly and effectively assess how well our websites are performing. Using the best tools can put you way ahead of other SEOs. One example (and one tool I’ve personally been using for years) is Screaming Frog. It’s a powerful, straightforward, and insightful website crawler that’s indispensable for finding technical issues on your website.

And the good news is that it keeps getting better. Screaming Frog has just released version 20.0, the 20th major version of its SEO Spider, which includes new features based on feedback from SEO professionals.

Here are the main updates:

- Custom JavaScript Snippets

- Mobile Usability

- N-grams Analysis

- Aggregated Anchor Text

- Carbon Footprint & Rating

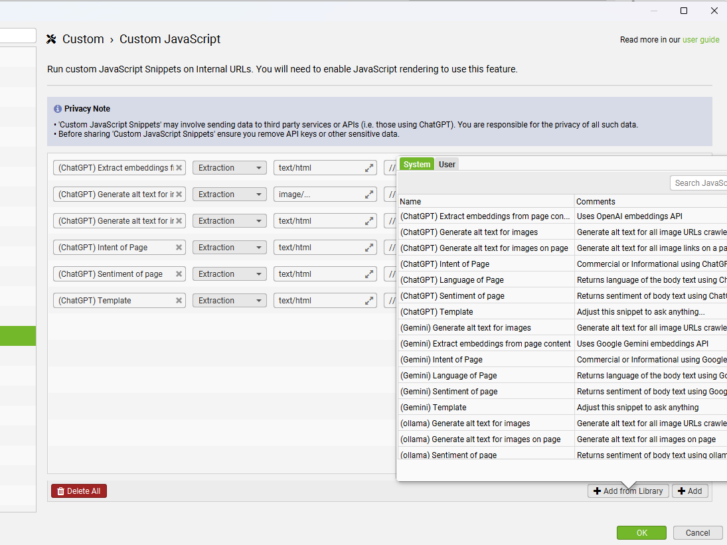

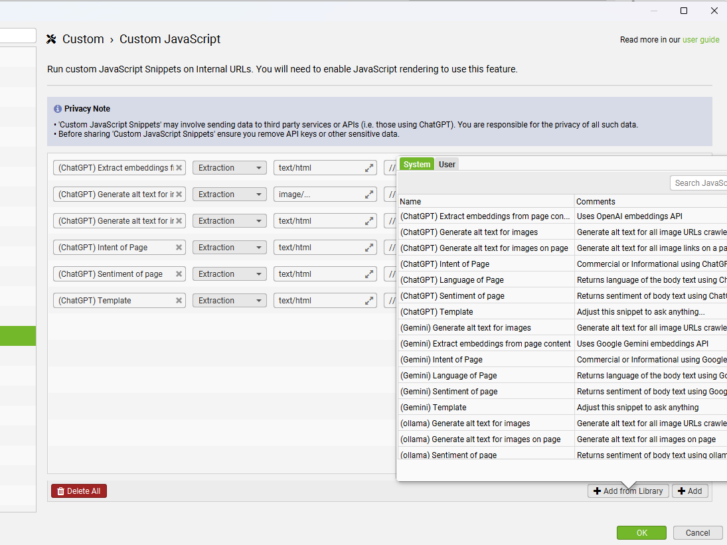

Custom JavaScript Snippets

One of the standout features in this release is the ability to execute custom JavaScript snippets during a crawl. Snippets can extract and manipulate page data or communicate with external APIs, which makes the crawler considerably more flexible.

Use Cases:

- Data Extraction and Manipulation: Gather specific data points or modify the DOM to suit your needs.

- API Communication: Integrate with APIs like OpenAI’s ChatGPT from within the SEO Spider.

Setting Up Custom JS Snippets:

- Navigate to `Config > Custom > Custom JavaScript`.

- Click ‘Add’ to create a new snippet or ‘Add from Library’ to select from preset snippets.

- Set the rendering mode to JavaScript via `Config > Spider > Rendering`.

Crawl with ChatGPT:

- Leverage the `(ChatGPT) Template` snippet, add your OpenAI API key and tailor the prompt to your needs.

- Follow Screaming Frog’s tutorial, ‘How To Crawl With ChatGPT’, for more detailed guidance.

Sharing Your Snippets:

- Export/import snippet libraries as JSON files to share with colleagues.

- Remember to remove sensitive data such as API keys before sharing.
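If you export libraries often, a small script can catch keys you might otherwise overlook. The sketch below is a minimal, hypothetical helper. The names `scrub_keys` and `scrub_library` were chosen for illustration. It assumes only that the export is valid JSON and makes no assumption about Screaming Frog’s actual schema. It redacts any string that looks like an OpenAI-style key, and the `sk-` prefix pattern is itself an assumption. Always review the result by hand before sharing.

```python
import json
import re

# Assumption: OpenAI-style secret keys start with "sk-" followed by
# letters, digits, underscores or hyphens. Adjust for other providers.
KEY_PATTERN = re.compile(r"sk-[A-Za-z0-9_\-]{8,}")


def scrub_keys(value):
    """Recursively redact key-like substrings in any JSON-compatible value."""
    if isinstance(value, str):
        return KEY_PATTERN.sub("[REDACTED]", value)
    if isinstance(value, list):
        return [scrub_keys(item) for item in value]
    if isinstance(value, dict):
        return {key: scrub_keys(item) for key, item in value.items()}
    return value  # numbers, booleans and None are left unchanged


def scrub_library(json_text):
    """Parse an exported snippet library, scrub it, and return new JSON text."""
    return json.dumps(scrub_keys(json.loads(json_text)), indent=2)


if __name__ == "__main__":
    # Hypothetical export structure, used only for this demo.
    sample = '{"snippets": [{"name": "ChatGPT", "code": "const key = \\"sk-abc123XYZ456\\";"}]}'
    print(scrub_library(sample))
```

Walking every value, rather than looking for a specific field name, keeps the helper independent of whatever structure the real export uses.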

Adding Custom JavaScript Snippets to Screaming Frog SEO Spider version 20.0 significantly increases the tool’s flexibility and power. Whether you’re generating dynamic content, interacting with external APIs, or performing complex page manipulations, snippets open up a wide range of possibilities.

Mobile Usability

With an ever-increasing number of users browsing on mobile devices, a seamless mobile experience is essential. Google’s mobile-first indexing means mobile usability directly affects both your site’s rankings and its user experience. Version 20.0 introduces extensive mobile usability audits through its Lighthouse integration.

Mobile Usability Features:

- New Mobile Tab: This tab includes filters for common mobile usability issues such as viewport settings, tap target sizes, content sizing, and more.

- Granular Issue Details: Detailed data on mobile usability issues can be explored in the ‘Lighthouse Details’ tab.

- Bulk Export Capability: Export comprehensive mobile usability reports via `Reports > Mobile`.

Setup:

- Connect to the PageSpeed Insights (PSI) API through `Config > API Access > PSI`, or run Lighthouse locally.

Example Use Cases:

- Identify pages where content does not fit within the viewport.

- Flag and correct small tap targets and illegible font sizes.
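Here is a rough, stand-alone sketch of what a viewport check involves. It follows the spirit of Lighthouse’s viewport audit, which looks for a `<meta name="viewport">` tag that sets `width` or `initial-scale`. The sketch uses only Python’s standard-library `html.parser`. `ViewportChecker` and `has_mobile_viewport` are hypothetical names, and this is not how Screaming Frog or Lighthouse actually implement the audit.

```python
from html.parser import HTMLParser


class ViewportChecker(HTMLParser):
    """Collects the content of every <meta name="viewport"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.viewports = []

    def handle_starttag(self, tag, attrs):
        # Boolean attributes arrive with a value of None; treat them as "".
        attributes = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attributes.get("name", "").lower() == "viewport":
            self.viewports.append(attributes.get("content", ""))


def has_mobile_viewport(html):
    """True if any viewport meta tag sets width or initial-scale."""
    checker = ViewportChecker()
    checker.feed(html)
    checker.close()
    for content in checker.viewports:
        # Split "width=device-width, initial-scale=1" into its directive names.
        keys = {part.split("=")[0].strip().lower() for part in content.split(",")}
        if "width" in keys or "initial-scale" in keys:
            return True
    return False


if __name__ == "__main__":
    good = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
    bad = "<head><title>No viewport</title></head>"
    print(has_mobile_viewport(good), has_mobile_viewport(bad))  # True False
```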

With these new features, Screaming Frog SEO Spider version 20.0 streamlines the process of auditing mobile usability, making it more efficient and comprehensive. By integrating with Google Lighthouse, both via the PSI API and local runs, the tool provides extensive insights into the mobile performance of your website. Addressing these issues not only enhances user experience but also improves your site’s SEO performance.

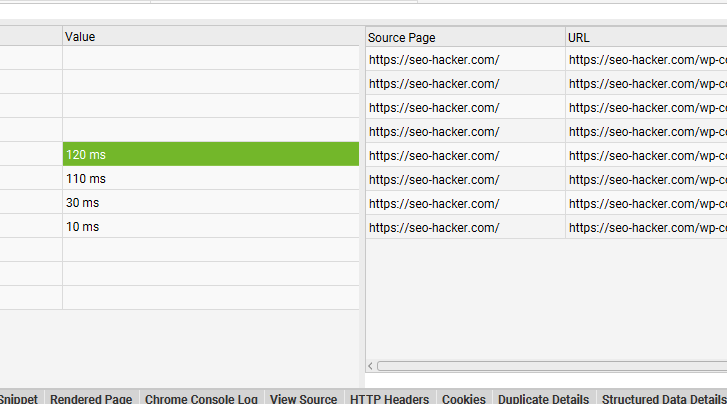

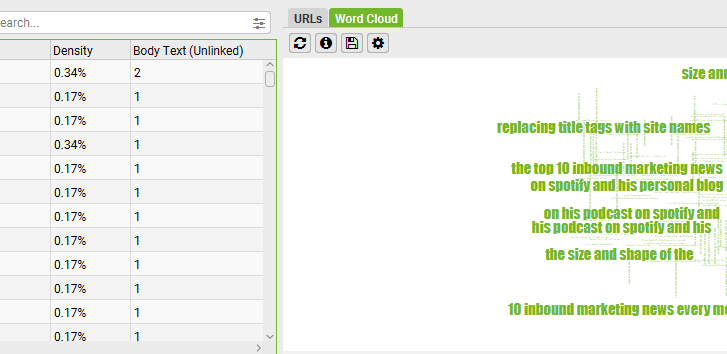

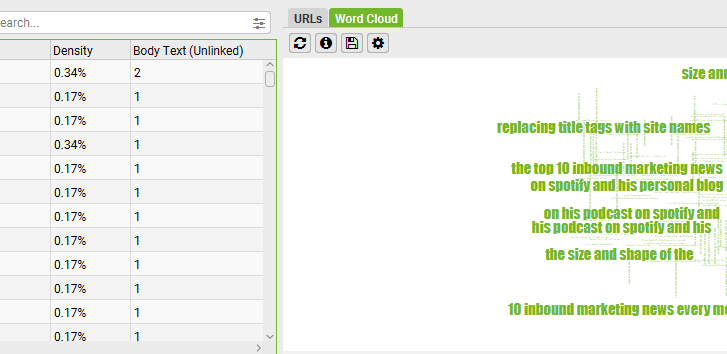

N-grams Analysis

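N-grams analysis is a powerful new feature that lets you analyze phrase frequency across web pages. An n-gram is a sequence of n consecutive words, so “technical SEO” is a 2-gram (bigram) and “technical SEO audit” is a 3-gram. Knowing which phrases appear, and how often, can greatly enhance on-page SEO efforts and internal linking strategies.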
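Phrase frequency comes down to sliding a window of n words across each page’s text and counting the phrases it produces. The following is a minimal sketch of that idea. It assumes page text has already been extracted into a `{url: text}` dict, and it uses a deliberately naive tokeniser. Screaming Frog’s own tokenisation and stop-word handling may differ.

```python
import re
from collections import Counter


def ngrams(words, n):
    """Return every run of n consecutive words as a space-joined phrase."""
    return [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]


def phrase_frequency(pages, n=2):
    """Count n-gram occurrences across a dict of {url: page_text}."""
    totals = Counter()
    for text in pages.values():
        # Naive tokeniser (an assumption): lowercase runs of letters, digits, apostrophes.
        words = re.findall(r"[a-z0-9']+", text.lower())
        totals.update(ngrams(words, n))
    return totals


if __name__ == "__main__":
    pages = {
        "/a": "Technical SEO audit checklist for technical SEO teams",
        "/b": "Run a technical SEO audit with a crawler",
    }
    for phrase, count in phrase_frequency(pages, n=2).most_common(3):
        print(count, phrase)
```

With the sample pages, “technical seo” is counted three times and “seo audit” twice. That is the kind of signal you would compare against the phrases you actually want a page to rank for.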

Setting Up N-grams:

- Activate HTML storage by enabling ‘Store HTML’ or ‘Store Rendered HTML’ under `Config > Spider > Extraction`.

- View the N-grams in the lower N-grams tab.

Example Use Cases:

- Improving Keyword Usage: Adjust content based on the frequency of targeted N-grams.

- Optimizing Internal Links: Use N-grams to identify unlinked keywords and create new internal links.

Internal Linking Opportunities:

The N-grams feature offers a practical way to discover internal linking opportunities. Pages that mention a target phrase without linking to the relevant page are candidates for new links, which can strengthen both your SEO and your site navigation.
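A page that mentions a target phrase but does not yet link to the page you want to rank for it is a candidate for a new internal link. The sketch below illustrates that rule. It assumes you have already put each page’s text and outlinks into plain Python dicts. Those data shapes and the `link_opportunities` name are hypothetical, not a Screaming Frog export format.

```python
import re


def link_opportunities(page_text, outlinks, phrase, target_url):
    """List pages that mention `phrase` but do not yet link to `target_url`.

    page_text: {url: visible text}
    outlinks:  {url: set of URLs that page links to}
    """
    # Whole-phrase, case-insensitive match; \b stops "seo" matching "seoul".
    pattern = re.compile(r"\b" + re.escape(phrase) + r"\b", re.IGNORECASE)
    candidates = []
    for url, text in page_text.items():
        if url == target_url:
            continue  # a page should not link to itself
        if pattern.search(text) and target_url not in outlinks.get(url, set()):
            candidates.append(url)
    return sorted(candidates)


if __name__ == "__main__":
    text = {
        "/blog/crawl-budget": "Start with a technical SEO audit before anything else.",
        "/blog/site-speed": "A technical SEO audit often uncovers slow pages.",
        "/services/technical-seo-audit": "Our technical SEO audit service.",
    }
    links = {"/blog/site-speed": {"/services/technical-seo-audit"}}
    print(link_opportunities(text, links, "technical SEO audit", "/services/technical-seo-audit"))
```

Only `/blog/crawl-budget` is returned here. `/blog/site-speed` already links to the target, and the target page itself is skipped.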

The introduction of N-grams analysis in Screaming Frog SEO Spider version 20 provides a tool for deep content analysis and optimization. By understanding the frequency and distribution of phrases within your content, you can significantly improve your on-page SEO and internal linking strategies.

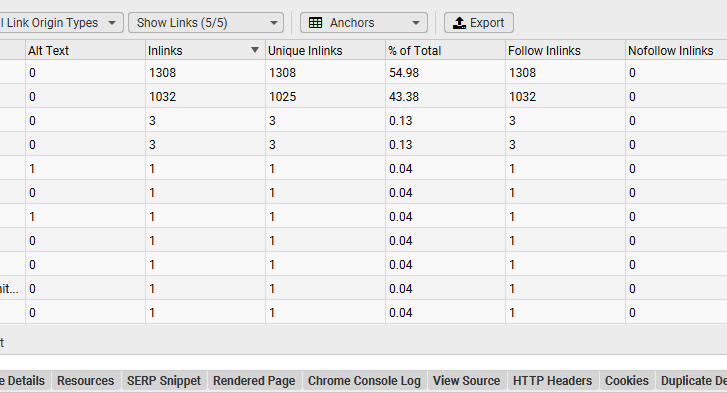

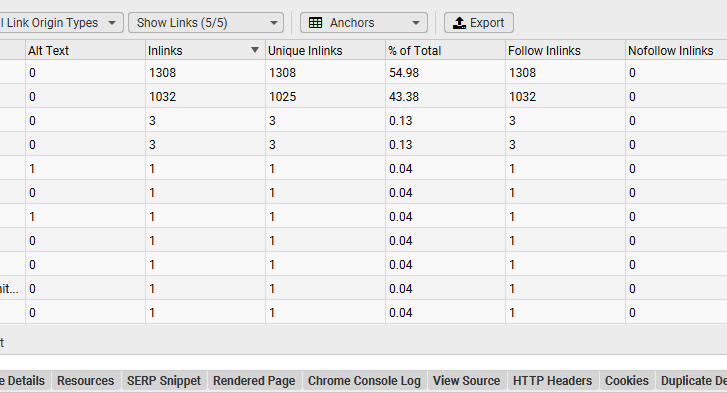

Aggregated Anchor Text

Effective anchor text management is essential for internal linking and overall SEO performance. The aggregated anchor text feature in version 20.0 provides clear insights into how anchor texts are used across your site.

Using Aggregated Anchor Text:

- Navigate to the ‘Inlinks’ or ‘Outlinks’ tab.

- Utilize the new ‘Anchors’ filters to see aggregated views of anchor text usage.
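Conceptually, aggregating anchor text means grouping links by target URL and counting how often each anchor is used. Here is a minimal sketch of that grouping. It assumes links are available as hypothetical `(source, target, anchor)` tuples rather than the tool’s real export columns. The case and whitespace normalisation is a choice made for this example, not necessarily how the SEO Spider groups anchors.

```python
from collections import Counter, defaultdict


def aggregate_anchors(links):
    """Group (source, target, anchor) rows into {target: Counter(anchor -> count)}."""
    by_target = defaultdict(Counter)
    for _source, target, anchor in links:
        # This sketch's choice: collapse whitespace and lowercase before counting.
        key = " ".join(anchor.split()).lower()
        by_target[target][key] += 1
    return by_target


if __name__ == "__main__":
    rows = [
        ("/a", "/pricing", "Pricing"),
        ("/b", "/pricing", "pricing"),
        ("/c", "/pricing", "See plans"),
        ("/d", "/blog", "Read the blog"),
    ]
    for target, anchors in aggregate_anchors(rows).items():
        print(target, anchors.most_common())
```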

Practical Benefits:

- Anchor Text Diversity: Ensure a natural distribution of anchor texts to avoid over-optimization.

- Descriptive Linking: Replace generic texts like “click here” with keyword-rich alternatives.
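Both checks can be approximated from a single target URL’s anchor counts. The first is the share of links that use the most common anchor. The second is which anchors are generic. In this sketch the generic-phrase list and the 60% threshold are arbitrary placeholders chosen for illustration, not values used by Screaming Frog or Google.

```python
from collections import Counter

# Placeholder values for illustration only; adjust to your own site.
GENERIC_ANCHORS = {"click here", "read more", "here", "learn more", "this page"}
MAX_TOP_SHARE = 0.6


def audit_anchors(anchor_counts):
    """Return (top_anchor_share, generic_anchors) for one target URL's anchors."""
    total = sum(anchor_counts.values())
    if total == 0:
        return 0.0, []
    _top_anchor, top_count = anchor_counts.most_common(1)[0]
    generic = sorted(a for a in anchor_counts if a.strip().lower() in GENERIC_ANCHORS)
    return top_count / total, generic


if __name__ == "__main__":
    counts = Counter({"pricing plans": 7, "click here": 2, "see plans": 1})
    share, generic = audit_anchors(counts)
    if share > MAX_TOP_SHARE:
        print(f"Top anchor covers {share:.0%} of links; consider more varied anchors")
    if generic:
        print("Replace generic anchors:", generic)
```

With the sample counts, the top anchor accounts for 7 of 10 links. The sketch therefore reports 70% and lists “click here” as a generic anchor to replace.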

The aggregated anchor text feature provides powerful insights into your internal link structure and optimization opportunities. This feature is essential if you are looking to enhance your site’s internal linking strategy for better keyword relevance, user experience, and search engine performance.

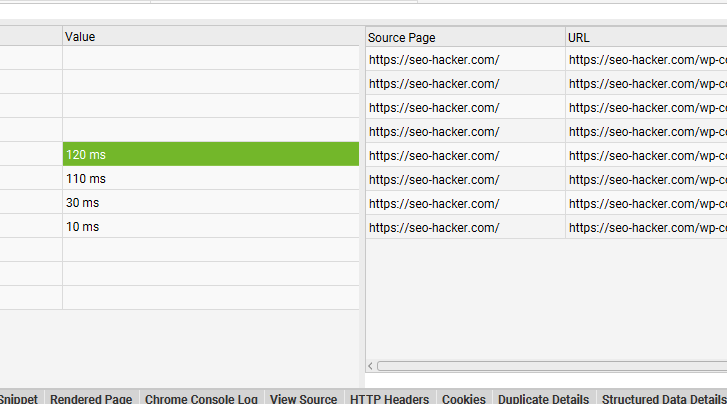

Carbon Footprint & Rating

Aligning with digital sustainability trends, Screaming Frog SEO Spider version 20.0 includes features to measure and optimize your website’s carbon footprint.

Key Features:

- Automatic CO2 Calculation: The SEO Spider now calculates carbon emissions for each page using the CO2.js library.

- Carbon Rating: Each URL receives a rating based on its emissions, derived from the Sustainable Web Design Model.

- High Carbon Rating Identification: Pages with high emissions are flagged in the ‘Validation’ tab.

Practical Applications:

- Resource Optimization: Identify and optimize high-emission resources.

- Sustainable Practices: Implement changes such as compressing images, reducing script sizes, and using green hosting solutions.
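Bytes-based models such as the Sustainable Web Design Model start, broadly, from data transferred. They convert it to energy using an energy intensity (kWh per GB), then to emissions using a grid carbon intensity (grams of CO2e per kWh). The sketch below shows only that simplified relationship and uses it to rank a page’s heaviest resources. It is not the Sustainable Web Design Model or CO2.js, which account for further factors. Both intensity constants and the resource URLs are hypothetical placeholders, so replace the constants with figures from a source you trust.

```python
# Placeholder intensities for illustration only; substitute published values.
KWH_PER_GB = 0.5          # hypothetical energy per gigabyte transferred
GRAMS_CO2_PER_KWH = 400   # hypothetical grid carbon intensity
BYTES_PER_GB = 1_000_000_000


def estimate_grams_co2(transfer_bytes):
    """Simplified estimate: bytes -> GB -> kWh -> grams of CO2e."""
    return transfer_bytes / BYTES_PER_GB * KWH_PER_GB * GRAMS_CO2_PER_KWH


def heaviest_resources(resources, top=3):
    """Rank {resource_url: transfer_bytes} by estimated emissions, highest first."""
    ranked = sorted(resources.items(), key=lambda item: item[1], reverse=True)
    return [(url, estimate_grams_co2(size)) for url, size in ranked[:top]]


if __name__ == "__main__":
    page_resources = {  # hypothetical resources and sizes
        "/img/hero.png": 2_400_000,
        "/js/app.js": 850_000,
        "/css/site.css": 60_000,
        "/fonts/brand.woff2": 45_000,
    }
    for url, grams in heaviest_resources(page_resources):
        print(f"{url}: ~{grams:.3f} g CO2e (placeholder constants)")
```

Because the estimate is proportional to bytes under this simplification, the ranking depends only on transfer size. Compressing the top entries is where savings would show up first.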

The integration of carbon footprint calculations in Screaming Frog SEO Spider signifies a growing recognition of digital sustainability. As more businesses adopt these practices, we can collectively reduce the environmental impact of the web while driving performance and user satisfaction.

Other Updates

In addition to major features, version 20.0 includes numerous smaller updates and bug fixes that enhance functionality and user experience.

Rich Result Validation Enhancements:

- Google Rich Result validation errors are now reported separately from Schema.org validation errors.

- New filters and columns provide detailed insights into rich result triggers and errors.

Enhanced File Types and Filters:

- Internal and external filters now include new file types such as Media, Fonts, and XML.

Website Archiving:

- A new option to archive entire websites during a crawl is available under `Config > Spider > Rendering > JS`.

Viewport and Screenshot Configuration:

- Customize viewport and screenshot sizes to fit different audit needs.

API Auto Connect:

- Configured APIs can connect automatically on start-up, making setup more seamless.

Resource Over 15MB Filter:

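- A new validation filter flags resources over 15MB. This matters because Googlebot only considers the first 15MB of an HTML or supported text-based file for indexing, and oversized files also hurt performance.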

Page Text Export:

- Export all visible page text through the new `Bulk Export > Web > All Page Text` option.

Lighthouse Details Tab:

- The ‘PageSpeed Details’ tab has been renamed ‘Lighthouse Details’ to reflect its expanded role.

HTML Content Type Configuration:

- An ‘Assume Pages are HTML’ option helps accurately classify pages without explicit content types.

Bug Fixes and Performance Improvements:

- Numerous small updates and fixes enhance stability and reliability.

Screaming Frog SEO Spider version 20.0 is a comprehensive update packed with innovative features and enhancements that cater to the evolving needs of SEO professionals like us. From advanced data extraction capabilities with Custom JavaScript Snippets to environmental sustainability with Carbon Footprint and Rating, this release sets a new benchmark in SEO auditing tools.

Key Takeaway

Add Screaming Frog to your toolbox, or update to version 20.0, to explore its rich array of new features for optimizing your website’s SEO, usability, and sustainability. It’s a no-fuss tool with tons of features that will help you stay ahead of your competitors and ensure your websites perform optimally in terms of user experience and search engine visibility.
