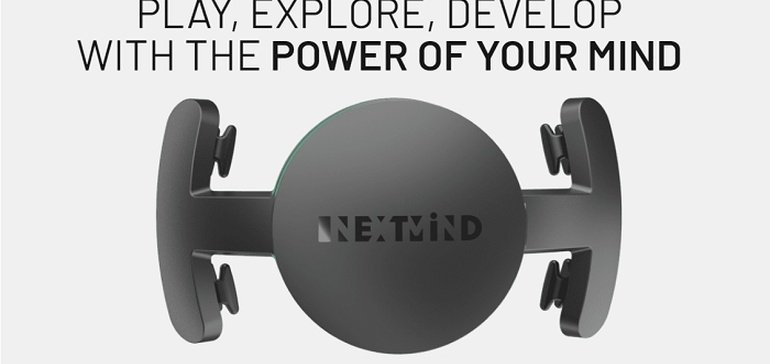

Snapchat Acquires Brain-Reading Tech ‘NextMind’ for the Next Stage of Digital Interaction

I don’t know about you, but any time I read things like “non-invasive brain computer interface”, I cringe just a little bit.

But that, apparently, is the direction we’re headed as companies look to the next stage of digital interaction, with Snapchat announcing today that it has acquired Paris-based neurotech company NextMind to help drive its long-term augmented reality research efforts.

As explained by Snap:

“Before joining Snap, NextMind developed non-invasive brain computer interface (BCI) technology in order to enable easier hands-free interaction using electronic devices, including computers and AR/VR wearables and headsets. This technology monitors neural activity to understand your intent when interacting with a computing interface, allowing you to push a virtual button simply by focusing on it.”

[Instinctively rubs at the side of my head]

Snap does clarify that NextMind’s tech ‘does not “read” thoughts or send any signals towards the brain’. So there is that – but still, the idea that your brain will eventually, subconsciously, control your digital experience, and that big tech companies will have a literal direct line into your head, does seem somewhat concerning.

Meta is developing similar technology, and has been working on brain-to-screen interaction since at least 2017, though it discontinued its full digital mind-reading project last year in order to focus on wrist-based devices “powered by electromyography” instead.

That could be the key to navigating more advanced AR and VR environments in a more natural and intuitive way, and Meta acquired CTRL-Labs back in 2019 to further develop this element.

As explained by Meta’s head of VR Andrew Bosworth at the time:

“The vision for this work is a wristband that lets people control their devices as a natural extension of movement. Here’s how it’ll work: You have neurons in your spinal cord that send electrical signals to your hand muscles telling them to move in specific ways such as to click a mouse or press a button. The wristband will decode those signals and translate them into a digital signal your device can understand, empowering you with control over your digital life.”

NextMind is essentially working toward the same goal, though rather than reading nerve signals at the wrist, it picks up electrical activity directly from your visual cortex, via electrode sensors on your head.

Yeah, I don’t know, I’m not 100% comfortable with that just yet.

But it may well be the future, and given that there are no keyboards in the virtual realm, and that tools can now detect your brain impulses to facilitate such interaction, it does make sense.

It just feels a little creepy. Your body’s natural instinct is to protect the brain at all costs, so it’s going to be a hard sell for the tech platforms to make.

There’s also the question of longer-term impact – if we no longer need to move to make things happen, will that take us another step closer to the people on the spaceship in WALL-E, who just sit in their automatic chairs with a screen in front of their faces all day?

Of course, these are considerations that will be addressed over time, and we have to establish the core functionality before we move on to the implications.

I think – is that how it’s supposed to work?

In any event, it does increasingly seem like you’re going to be letting tech companies into your brain, just a little bit, as we move towards the next stage of digital controls and interactive processes.

Welcome to the future.
