Tech companies are laying off their ethics and safety teams.

Mark Zuckerberg, chief executive officer of Meta Platforms Inc., left, arrives at federal court in San Jose, California, US, on Tuesday, Dec. 20, 2022.

David Paul Morris | Bloomberg | Getty Images

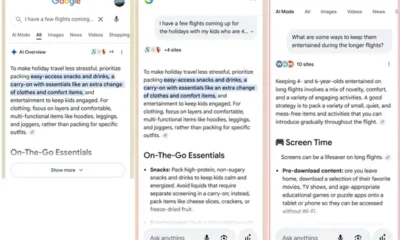

Toward the end of 2022, engineers on Meta’s team combating misinformation were ready to debut a key fact-checking tool that had taken half a year to build. The company needed all the reputational help it could get after a string of crises had badly damaged the credibility of Facebook and Instagram and given regulators additional ammunition to bear down on the platforms.

The new product would let third-party fact-checkers like The Associated Press and Reuters, as well as credible experts, add comments at the top of questionable articles on Facebook as a way to verify their trustworthiness.

But CEO Mark Zuckerberg’s commitment to make 2023 the “year of efficiency” spelled the end of the ambitious effort, according to three people familiar with the matter who asked not to be named due to confidentiality agreements.

Over multiple rounds of layoffs, Meta announced plans to eliminate roughly 21,000 jobs, a mass downsizing that had an outsized effect on the company’s trust and safety work. The fact-checking tool, which had initial buy-in from executives and was still in a testing phase early this year, was completely dissolved, the sources said.

A Meta spokesperson did not respond to questions related to job cuts in specific areas and said in an emailed statement that “we remain focused on advancing our industry-leading integrity efforts and continue to invest in teams and technologies to protect our community.”

Across the tech industry, as companies tighten their belts and impose hefty layoffs to address macroeconomic pressures and slowing revenue growth, wide swaths of people tasked with protecting the internet's most populous playgrounds are being shown the exits. The cuts come at a time of increased cyberbullying, which has been linked to higher rates of adolescent self-harm, and as the spread of misinformation and violent content collides with the exploding use of artificial intelligence.

In their most recent earnings calls, tech executives highlighted their commitment to “do more with less,” boosting productivity with fewer resources. Meta, Alphabet, Amazon and Microsoft have all cut thousands of jobs after staffing up rapidly before and during the Covid pandemic. Microsoft CEO Satya Nadella recently said his company would suspend salary increases for full-time employees.

The slashing of teams tasked with trust and safety and AI ethics is a sign of how far companies are willing to go to meet Wall Street demands for efficiency, even with the 2024 U.S. election season — and the online chaos that’s expected to ensue — just months away from kickoff. AI ethics and trust and safety are different departments within tech companies but are aligned on goals related to limiting real-life harm that can stem from use of their companies’ products and services.

“Abuse actors are usually ahead of the game; it’s cat and mouse,” said Arjun Narayan, who previously served as a trust and safety lead at Google and TikTok parent ByteDance, and is now head of trust and safety at news aggregator app Smart News. “You’re always playing catch-up.”

For now, tech companies seem to view both trust and safety and AI ethics as cost centers.

Twitter effectively disbanded its ethical AI team in November and laid off all but one of its members, along with 15% of its trust and safety department, according to reports. In February, Google cut about one-third of a unit that aims to protect society from misinformation, radicalization, toxicity and censorship. Meta reportedly ended the contracts of about 200 content moderators in early January. It also laid off at least 16 members of Instagram’s well-being group and more than 100 positions related to trust, integrity and responsibility, according to documents filed with the U.S. Department of Labor.

In March, Amazon downsized its responsible AI team and Microsoft laid off its entire ethics and society team – the second of two layoff rounds that reportedly took the team from 30 members to zero. Amazon didn’t respond to a request for comment, and Microsoft pointed to a blog post regarding its job cuts.

At Amazon’s game streaming unit Twitch, staffers learned of their fate in March from an ill-timed internal post from Amazon CEO Andy Jassy.

Jassy’s announcement that 9,000 jobs would be cut companywide included 400 employees at Twitch. Of those, about 50 were part of the team responsible for monitoring abusive, illegal or harmful behavior, according to people familiar with the matter who spoke on the condition of anonymity because the details were private.

The trust and safety team, or T&S as it’s known internally, was losing about 15% of its staff just as content moderation was seemingly more important than ever.

In an email to employees, Twitch CEO Dan Clancy didn’t call out the T&S department specifically, but he confirmed the broader cuts among his staffers, who had just learned about the layoffs from Jassy’s post on a message board.

“I’m disappointed to share the news this way before we’re able to communicate directly to those who will be impacted,” Clancy wrote in the email, which was viewed by CNBC.

‘Hard to win back consumer trust’

A current member of Twitch’s T&S team said the remaining employees in the unit are feeling “whiplash” and worry about a potential second round of layoffs. The person said the cuts caused a big hit to institutional knowledge, adding that there was a significant reduction in Twitch’s law enforcement response team, which deals with physical threats, violence, terrorism groups and self-harm.

A Twitch spokesperson did not provide a comment for this story, instead directing CNBC to a blog post from March announcing the layoffs. The post didn’t include any mention of trust and safety or content moderation.

Narayan of Smart News said that with a lack of investment in safety at the major platforms, companies lose their ability to scale in a way that keeps pace with malicious activity. As more problematic content spreads, there’s an “erosion of trust,” he said.

“In the long run, it’s really hard to win back consumer trust,” Narayan added.

While layoffs at Meta and Amazon followed demands from investors and a dramatic slump in ad revenue and share prices, Twitter’s cuts resulted from a change in ownership.

Almost immediately after Elon Musk closed his $44 billion purchase of Twitter in October, he began eliminating thousands of jobs. That included all but one member of the company’s 17-person AI ethics team, according to Rumman Chowdhury, who served as director of Twitter’s machine learning ethics, transparency and accountability team. The last remaining person ended up quitting.

The team members learned of their status when their laptops were turned off remotely, Chowdhury said. Hours later, they received email notifications.

“I had just recently gotten head count to build out my AI red team, so these would be the people who would adversarially hack our models from an ethical perspective and try to do that work,” Chowdhury told CNBC. She added, “It really just felt like the rug was pulled as my team was getting into our stride.”

Part of that stride involved working on “algorithmic amplification monitoring,” Chowdhury said, or tracking elections and political parties to see if “content was being amplified in a way that it shouldn’t.”

Chowdhury referenced an initiative in July 2021, when Twitter’s AI ethics team led what was billed as the industry’s first-ever algorithmic bias bounty competition. The company invited outsiders to audit the platform for bias, and made the results public.

Chowdhury said she worries that now Musk “is actively seeking to undo all the work we have done.”

“There is no internal accountability,” she said. “We served two of the product teams to make sure that what’s happening behind the scenes was serving the people on the platform equitably.”

Twitter did not provide a comment for this story.

Advertisers are pulling back in places where they see increased reputational risk.

According to Sensor Tower, six of the top 10 categories of U.S. advertisers on Twitter spent much less in the first quarter of this year compared with a year earlier, with that group collectively slashing its spending by 53%. The site has recently come under fire for allowing the spread of violent images and videos.

The rapid rise in popularity of chatbots is only complicating matters. The types of AI models created by OpenAI, the company behind ChatGPT, and others make it easier to populate fake accounts with content. Researchers from the Allen Institute for AI, Princeton University and Georgia Tech ran tests on ChatGPT's application programming interface (API) and found up to a sixfold increase in toxicity, depending on which type of functional identity, such as a customer service agent or virtual assistant, a company assigned to the chatbot.

Regulators are paying close attention to AI’s growing influence and the simultaneous downsizing of groups dedicated to AI ethics and trust and safety. Michael Atleson, an attorney at the Federal Trade Commission’s division of advertising practices, called out the paradox in a blog post earlier this month.

“Given these many concerns about the use of new AI tools, it’s perhaps not the best time for firms building or deploying them to remove or fire personnel devoted to ethics and responsibility for AI and engineering,” Atleson wrote. “If the FTC comes calling and you want to convince us that you adequately assessed risks and mitigated harms, these reductions might not be a good look.”

Meta as a bellwether

For years, as the tech industry was enjoying an extended bull market and the top internet platforms were flush with cash, Meta was viewed by many experts as a leader in prioritizing ethics and safety.

The company spent years hiring trust and safety workers, including many with academic backgrounds in the social sciences, to help avoid a repeat of the 2016 presidential election cycle, when disinformation campaigns, often operated by foreign actors, ran rampant on Facebook. The embarrassment culminated in the 2018 Cambridge Analytica scandal, which exposed how a third party was illicitly using personal data from Facebook.

But following a brutal 2022 for Meta’s ad business — and its stock price — Zuckerberg went into cutting mode, winning plaudits along the way from investors who had complained of the company’s bloat.

Beyond the fact-checking project, the layoffs hit researchers, engineers, user design experts and others who worked on issues pertaining to societal concerns. The company’s dedicated team focused on combating misinformation suffered numerous losses, four former Meta employees said.

Prior to Meta’s first round of layoffs in November, the company had already taken steps to consolidate members of its integrity team into a single unit. In September, Meta merged its central integrity team, which handles social matters, with its business integrity group tasked with addressing ads and business-related issues like spam and fake accounts, ex-employees said.

In the ensuing months, as broader cuts swept across the company, former trust and safety employees described working under the fear of looming layoffs and for managers who sometimes failed to see how their work affected Meta’s bottom line.

For example, work that required fewer resources, such as improving spam filters, could get clearance over long-term safety projects that would entail policy changes, such as initiatives involving misinformation. Employees felt incentivized to take on more manageable tasks because they could show results in their six-month performance reviews, ex-staffers said.

Ravi Iyer, a former Meta project manager who left the company before the layoffs, said that the cuts across content moderation are less bothersome than the fact that many of the people he knows who lost their jobs were performing critical roles on design and policy changes.

“I don’t think we should reflexively think that having fewer trust and safety workers means platforms will necessarily be worse,” said Iyer, who’s now the managing director of the Psychology of Technology Institute at University of Southern California’s Neely Center. “However, many of the people I’ve seen laid off are amongst the most thoughtful in rethinking the fundamental designs of these platforms, and if platforms are not going to invest in reconsidering design choices that have been proven to be harmful — then yes, we should all be worried.”

A Meta spokesperson previously downplayed the significance of the job cuts in the misinformation unit, tweeting that the “team has been integrated into the broader content integrity team, which is substantially larger and focused on integrity work across the company.”

Still, sources familiar with the matter said that following the layoffs, the company has fewer people working on misinformation issues.

For those who’ve gained expertise in AI ethics, trust and safety and related content moderation, the employment picture looks grim.

Newly unemployed workers in those fields from across the social media landscape told CNBC that there aren't many job openings in their area of specialization as companies continue to trim costs. One former Meta employee said that after they interviewed for trust and safety roles at Microsoft and Google, those positions were suddenly axed.

An ex-Meta staffer said the company’s retreat from trust and safety is likely to filter down to smaller peers and startups that appear to be “following Meta in terms of their layoff strategy.”

Chowdhury, Twitter’s former AI ethics lead, said these types of jobs are a natural place for cuts because “they’re not seen as driving profit in product.”

“My perspective is that it’s completely the wrong framing,” she said. “But it’s hard to demonstrate value when your value is that you’re not being sued or someone is not being harmed. We don’t have a shiny widget or a fancy model at the end of what we do; what we have is a community that’s safe and protected. That is a long-term financial benefit, but in the quarter over quarter, it’s really hard to measure what that means.”

At Twitch, the T&S team included people who knew where to look to spot dangerous activity, according to a former employee in the group. That’s particularly important in gaming, which is “its own unique beast,” the person said.

Now, there are fewer people checking in on the “dark, scary places” where offenders hide and abusive activity gets groomed, the ex-employee added.

More importantly, nobody knows how bad it can get.