The new app is called watchGPT, and as I’ve already hinted, it gives you access to ChatGPT from your Apple Watch. Now the $10,000 question (or, more accurately, the $3.99 question, since that is the app’s one-time cost) is why having ChatGPT on your wrist is remotely necessary, so let’s dive into what exactly the app can do.

NEWS

Twitter Announces Birdwatch – Volunteer Program Against Misinformation via @martinibuster

Twitter announced a new anti-misinformation initiative. The plan is to use a transparent community-based approach to identifying misinformation. This is a pilot program restricted to the United States.

Twitter Birdwatch

Birdwatch is a system where contributors create “notes” about misinformation found on tweets. The notes are meant to provide context.

At first, the notes will not be visible on the tweets themselves. Instead, they will live on a separate site on a Twitter subdomain (birdwatch.twitter.com), which currently redirects to twitter.com/i/birdwatch.

The goal is to eventually show the notes on the tweets that are judged to contain misinformation.

That way Twitter community members can be made aware of the low quality of a tweet.

Community Approach

What Twitter is proposing is a passive form of content moderation.

Many forums and social media sites (including Twitter) have a way for members to report a post when it is problematic in some way. Typical reasons for reporting a post can be spam, bullying, or misinformation.

This kind of reporting is a passive form of community moderation, and it is the approach Twitter is taking.

Community-driven moderation is a more proactive step because members, usually called moderators, can delete or edit a problematic post.

Birdwatch’s approach is limited to creating notes about a problematic post. Contributors will not be able to actually remove a bad post.

According to Twitter:

“Birdwatch allows people to identify information in Tweets they believe is misleading and write notes that provide informative context. We believe this approach has the potential to respond quickly when misleading information spreads, adding context that people trust and find valuable.

Eventually we aim to make notes visible directly on Tweets for the global Twitter audience, when there is consensus from a broad and diverse set of contributors.”


Example of How Birdwatch Works

Birdwatch has three components:

- Notes

- Ratings

- Birdwatch site

Birdwatch Notes

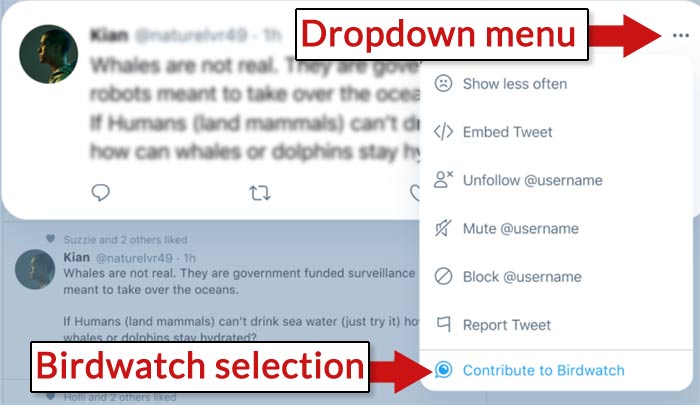

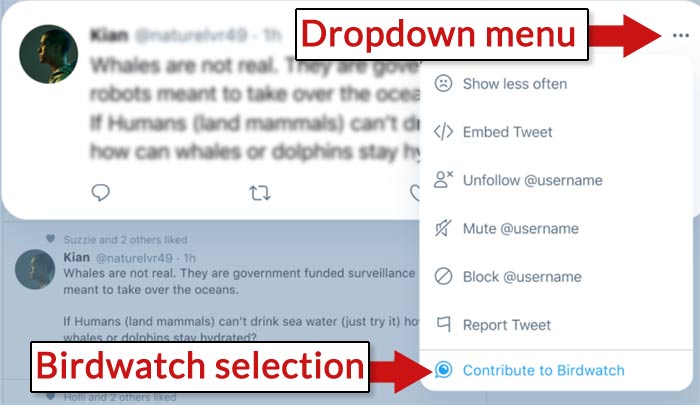

Birdwatch contributors attach notes to tweets they judge to be problematic. Writing a note begins with clicking the three-dot menu on a tweet and selecting the Notes option.

After that, writing a note involves answering multiple-choice questions and adding feedback in a text area.


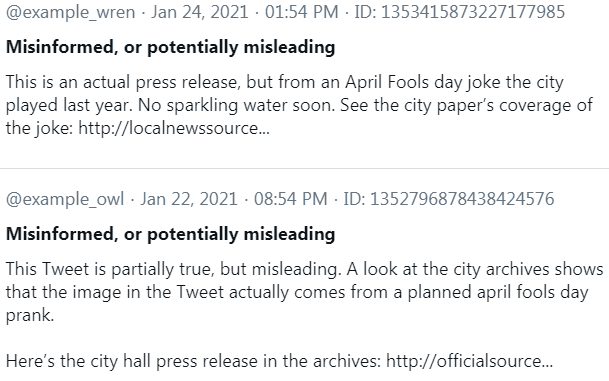

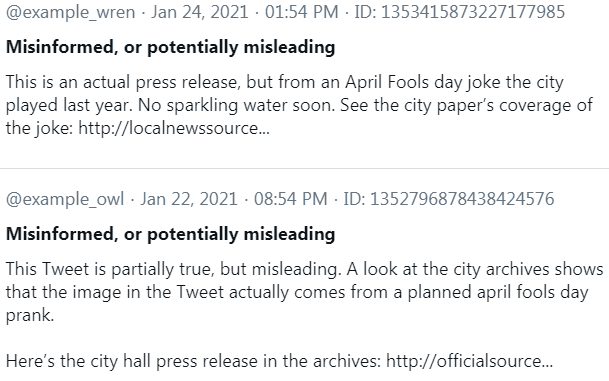

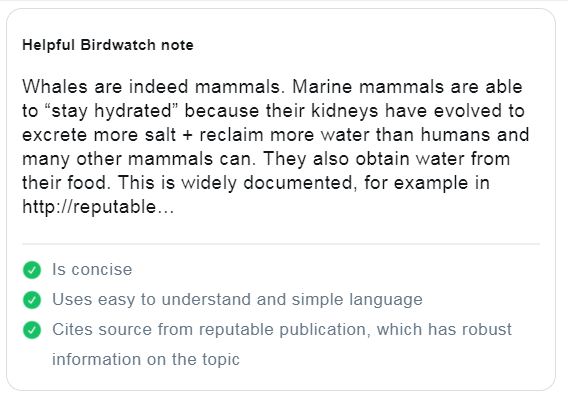

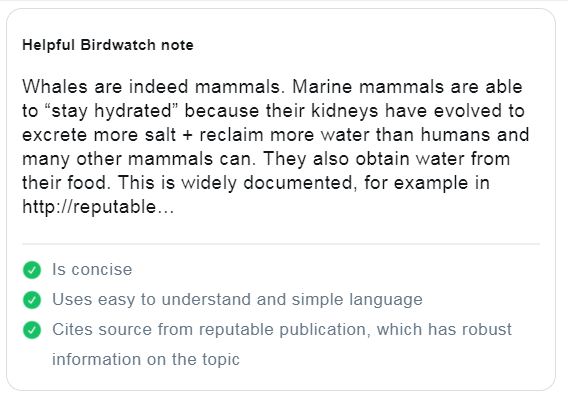

Example of Twitter Birdwatch Notes

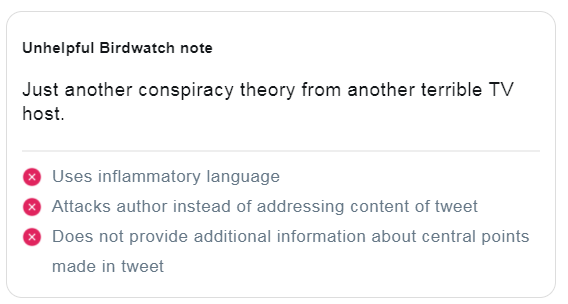

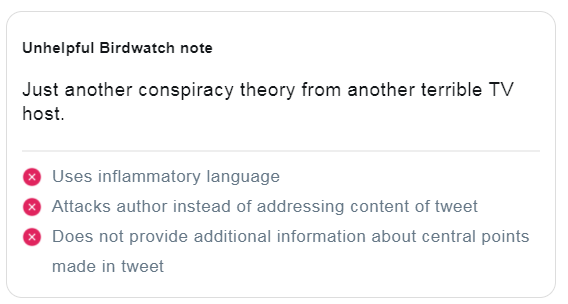

Here is an example of a helpful Birdwatch note:

This is an example of an unhelpful Birdwatch note:

Note Ranking

The next component of Birdwatch is community rating of one another’s notes. Members upvote or downvote notes so that the best-rated notes can be selected as the most accurate.


This is a way for the community to self-moderate notes so that only the best and non-manipulative notes make it to the top.
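The article does not say how Birdwatch turns individual ratings into a ranking. As a purely hypothetical illustration of the upvote/downvote idea, the sketch below ranks notes by their share of “helpful” ratings and skips notes with too few ratings to judge. The `Note` class, the `rank_notes` function, and the `min_ratings` threshold are invented for this example; this is not Birdwatch’s actual scoring method.

```python
from dataclasses import dataclass

# Hypothetical scoring scheme for illustration only; not Birdwatch's real algorithm.

@dataclass
class Note:
    note_id: str
    helpful: int      # number of "helpful" ratings the note received
    not_helpful: int  # number of "not helpful" ratings the note received


def rank_notes(notes, min_ratings=5):
    """Return notes ordered from highest to lowest share of helpful ratings.

    Notes with fewer than `min_ratings` total ratings are left out, so a
    handful of early votes cannot push a note to the top.
    """
    eligible = [n for n in notes if n.helpful + n.not_helpful >= min_ratings]
    return sorted(
        eligible,
        key=lambda n: n.helpful / (n.helpful + n.not_helpful),
        reverse=True,
    )


notes = [
    Note("a", helpful=8, not_helpful=2),
    Note("b", helpful=3, not_helpful=0),  # only 3 ratings, so it is not ranked yet
    Note("c", helpful=4, not_helpful=6),
]
print([n.note_id for n in rank_notes(notes)])  # ['a', 'c']
```

A plain helpful-share ranking like this is easy for a coordinated group to sway, which is exactly the kind of manipulation and simple-majority dominance that Twitter says it will need to address during the pilot.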

Transparency of Twitter Birdwatch

The Birdwatch system is transparent. That means all the data is available to be downloaded and viewed by anyone from the Birdwatch Download page.
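The article does not describe the format of the downloadable data. Assuming an export is a tab-separated text file with a header row, a few lines of Python are enough to tally notes by any column. The `count_by_column` function, the sample rows, and the column names (`noteId`, `tweetId`, `classification`) are illustrative assumptions, not a documented Birdwatch schema.

```python
import csv
import io
from collections import Counter


def count_by_column(tsv_text, column):
    """Count rows of tab-separated text (with a header row) by one column's value."""
    reader = csv.DictReader(io.StringIO(tsv_text), delimiter="\t")
    return Counter(row[column] for row in reader)


# Hypothetical three-row sample; column names are assumptions, not a real schema.
sample = (
    "noteId\ttweetId\tclassification\n"
    "1\t100\tMISINFORMED_OR_POTENTIALLY_MISLEADING\n"
    "2\t100\tNOT_MISLEADING\n"
    "3\t200\tMISINFORMED_OR_POTENTIALLY_MISLEADING\n"
)
print(count_by_column(sample, "classification"))
print(count_by_column(sample, "tweetId"))  # how many notes each tweet received
```

To use it on a real download, read the file’s text first (for example with `open(path, encoding="utf-8").read()`) and pass a column name that actually appears in that file’s header.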

Try It and See Approach

Twitter acknowledges that the pilot program is a work in progress and that issues such as malicious attempts to manipulate the program will have to be dealt with as they arise.

Twitter did not, however, offer a plan for dealing with community manipulation.

This is their statement:

“We know there are a number of challenges toward building a community-driven system like this — from making it resistant to manipulation attempts to ensuring it isn’t dominated by a simple majority or biased based on its distribution of contributors. We’ll be focused on these things throughout the pilot.”


This seems like an ad hoc approach: dealing with problems as they arise rather than anticipating them and having a plan in place.

Community-driven Fight Against Misinformation

I have almost twenty years of experience moderating and running forums. In my experience, community administrators identify trustworthy members and make them moderators, allowing the volunteer members to help run the community themselves.

In a healthy community, moderators do not function like police enforcing rules. In a well-run community, moderators are more like servants who help the community function better.

What Twitter is proposing falls short of true moderation. It’s more of an attempt to provide trustworthy feedback on problematic posts that contain misinformation.

Citations

Read the official announcement:

Introducing Birdwatch, a Community-based Approach to Misinformation


Sign up for Birdwatch

http://twitter.github.io/birdwatch/join

Facebook Faces Yet Another Outage: Platform Encounters Technical Issues Again

Updated: It seems that today’s issues with Facebook haven’t affected as many users as last time. A smaller group of people appears to be impacted this time around, which is a relief compared to the larger incident before. Nevertheless, it’s still frustrating for those affected, and hopefully the Facebook team will resolve the issues soon.

Facebook had problems again today (March 20, 2024). According to Downdetector, a website that tracks when other websites are down, many people had trouble using Facebook.

This isn’t the first time Facebook has had issues. Just a little while ago, there was another problem that stopped people from using the site. Today, when people tried to use Facebook, it didn’t work like it should. People couldn’t see their friends’ posts, and sometimes the website wouldn’t even load.

Downdetector, which watches out for problems on websites, showed that lots of people were having trouble with Facebook. People from all over the world said they couldn’t use the site, and they were not happy about it.

When websites like Facebook have problems, it affects a lot of people. It’s not just about not being able to see posts or chat with friends. It can also impact businesses that use Facebook to reach customers.

Because Messenger and Instagram are also owned by Meta, Facebook’s parent company, the problems also meant that people had trouble using these apps. That made the situation even more frustrating for the many users who rely on these apps to stay connected with others.

This latest problem makes one thing obvious: the internet is always changing, and even big websites like Facebook can have problems. While people wait for Facebook to fix the issue, the outage shows how easily things can go wrong online. It’s a good reminder to have backup plans for staying connected, just in case something like this happens again.


We asked ChatGPT to predict Google (GOOG) stock’s price for 2030

Investors who have invested in Alphabet Inc. (NASDAQ: GOOG) stock have reaped significant benefits from the company’s robust financial performance over the last five years. Google’s dominance in the online advertising market has been a key driver of the company’s consistent revenue growth and impressive profit margins.

In addition, Google has expanded its operations into related fields such as cloud computing and artificial intelligence. These areas show great promise as future growth drivers, making them increasingly attractive to investors. Notably, Alphabet’s stock price has been rising due to investor interest in the company’s recent initiatives in the fast-developing field of artificial intelligence (AI), such as adding generative AI features to Gmail and Google Docs.

However, predicting the future stock price of a corporation like Google involves many factors. With this in mind, Finbold turned to the artificial intelligence tool ChatGPT to suggest a likely price range for GOOG stock by 2030. Although the tool was unable to give a definitive price range, it did note the following:

“Over the long term, Google has a track record of strong financial performance and has shown an ability to adapt to changing market conditions. As such, it’s reasonable to expect that Google’s stock price may continue to appreciate over time.”

GOOG stock price prediction

When estimating a future price range, it is essential to consider a variety of sources beyond the AI chat tool, including deep learning algorithms and stock market experts.

Finbold also collected forecasts from CoinPriceForecast, a finance prediction tool that uses machine self-learning technology, for Google’s stock price at the end of 2030, to compare with ChatGPT’s response.

According to the most recent long-term estimate, which Finbold obtained on March 20, Google’s stock price will rise above $200 in 2030 and reach $247 by the end of that year, which would represent a 141% gain from today’s price.
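The 141% figure can be checked against the current share price of $102.46 that the article cites for Google stock. This minimal sketch computes percentage gain as (target / current - 1) × 100; `percent_gain` is a helper name chosen for illustration.

```python
def percent_gain(current, target):
    """Return the percentage change from `current` to `target`."""
    return (target / current - 1) * 100


current_price = 102.46  # current GOOG price cited in the article
print(round(percent_gain(current_price, 247)))  # 141
```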

For a more near-term time frame, most Wall Street analysts rate Google a ‘strong buy.’ Of the 48 analysts, 36 recommend a ‘strong buy’ and seven a ‘buy’; the remaining five rate it a ‘hold.’
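A quick tally confirms that the three rating groups add up to the 48 analysts and shows how large the ‘strong buy’ majority is. The dictionary below simply restates the article’s counts.

```python
# Analyst ratings for GOOG as reported in the article
ratings = {"strong buy": 36, "buy": 7, "hold": 5}

total = sum(ratings.values())
print(total)  # 48

# Share of analysts behind each rating, to one decimal place
for label, count in ratings.items():
    print(f"{label}: {count / total:.1%}")
```

This prints 75.0% for ‘strong buy’, 14.6% for ‘buy’, and 10.4% for ‘hold’.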

The average price projection for Alphabet stock over the last three months has been $125.32, a 22.31% upside from the stock’s current price of $102.46. The maximum price forecast for the next year is $160, which would represent a gain of 56.16%.
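Both upside figures follow from the same percentage-change formula applied to the current price of $102.46. This is a minimal, self-contained check; `percent_gain` is an illustrative helper name.

```python
def percent_gain(current, target):
    """Return the percentage change from `current` to `target`."""
    return (target / current - 1) * 100


current_price = 102.46  # current GOOG price cited in the article
targets = [("average projection", 125.32), ("maximum forecast", 160.00)]

for label, target in targets:
    print(f"{label}: {percent_gain(current_price, target):.2f}%")
```

This prints 22.31% and 56.16%, matching the figures above.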

While the outlook for Google stock may be positive, it’s important to keep in mind that some potential challenges and risks could affect its performance, including competition from ChatGPT itself.

Disclaimer: The content on this site should not be considered investment advice. Investing is speculative. When investing, your capital is at risk.


This Apple Watch app brings ChatGPT to your wrist — here’s why you want it

ChatGPT feels like it is everywhere at the moment; the AI-powered tool is rapidly starting to feel like internet-connected home devices, where you are left wondering if your flower pot really needed Bluetooth. However, after hearing about a new Apple Watch app that brings ChatGPT to your favorite wrist computer, I’m actually convinced this one is worth checking out.
