SEO

Can You Work Faster & Smarter?

AI for SEO is at a tipping point where the technology used by big corporations is increasingly within reach for smaller businesses.

The increasing use of this new technology is permanently changing the practice of SEO today.

But is it right for your business? These are the surprising facts.

What Is AI For SEO?

AI, or artificial intelligence, is already a part of our daily lives. Anyone who uses Alexa or Google Maps is using AI software to make their lives better in some way.

Popular writing assistant Grammarly is an AI software that illustrates the power of AI to improve performance.

It takes a so-so piece of content and makes it better by fixing grammar and spelling mistakes and catching repetitive use of words.

AI for SEO works similarly to improve performance and, to a certain degree, democratizes SEO by putting scale and sophisticated data analysis within reach of everybody.

How Can AI Be Used In SEO?

Mainstream AI SEO platforms automate data analysis, providing high-level views that identify patterns and trends that are not otherwise visible.

Mark Traphagen of seoClarity describes why AI SEO automation is essential:

“A decade ago, the best SEOs were great excel jockeys, downloading and correlating data from different sources and parts of the SEO lifecycle, all by hand.

If SEOs were doing that today, they’d be left in the dust.

By the time humans can process – results have changed, algorithms updated, SERPs shifted, etc.

And that’s not to mention the access and depth of data available in this decade, fast-paced changes in search engine algorithms, varying ranking factors that are different for every query, intent-based results that change seasonally, and the immense complexity of modern enterprise websites.

These realities have made utilizing AI now essential at the enterprise level.”

AI In Onsite Optimization

AI SEO automation platform WordLift helps publishers automate structured data, internal linking, and other on-page-related factors.

Andrea Volpini, CEO of WordLift, comments:

“WordLift automatically ingests the latest version of the schema vocabulary to support all possible entity types.

We can reuse this data to build internal links, render context cards on web pages, and recommend similar content.

Much like Google, a publisher can use this network of entities to let the readers discover related content.

WordLift enables many SEO workflows as the knowledge graph of the website gets built.

Some use WordLift’s NLP to manage internal links to their important pages; others use the data in the knowledge graph to instruct the internal search engine or to fine-tune a language model for content generation.

By automating structured data, publishing entities, and adding internal links, it’s not uncommon to see substantial growth in organic traffic for content creators.”
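The idea of using shared entities to build internal links can be sketched in a few lines of Python. This is a minimal illustration, not WordLift's actual method: the function name `suggest_internal_links`, the `min_shared` threshold, and the sample pages and entities are all hypothetical, and the entity sets are assumed to have been extracted already by some NLP step.

```python
from itertools import combinations


def suggest_internal_links(page_entities, min_shared=2):
    """Suggest page pairs to interlink based on the entities they share.

    page_entities: dict mapping a URL path to the set of entities found on it.
    Returns a list of (page_a, page_b, shared_entities), strongest overlap first.
    """
    suggestions = []
    # Compare every pair of pages once (keys are unique, so sorting is safe).
    for (a, ents_a), (b, ents_b) in combinations(sorted(page_entities.items()), 2):
        shared = ents_a & ents_b
        if len(shared) >= min_shared:
            suggestions.append((a, b, sorted(shared)))
    # Pages with more entities in common are stronger link candidates.
    suggestions.sort(key=lambda s: len(s[2]), reverse=True)
    return suggestions


# Hypothetical pages and entities, for illustration only.
pages = {
    "/guides/structured-data": {"schema.org", "JSON-LD", "knowledge graph"},
    "/guides/internal-links": {"internal linking", "knowledge graph", "anchor text"},
    "/blog/entity-seo": {"schema.org", "knowledge graph", "JSON-LD", "internal linking"},
}

for a, b, shared in suggest_internal_links(pages):
    print(f"{a} <-> {b}: {', '.join(shared)}")
```

A real platform would likely weigh entities by importance rather than count them equally, but the overlap logic gives a feel for how a knowledge graph can drive link suggestions.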

AI For SEO At Scale

AI for SEO can be applied to a wide range of activities to minimize repetitive tasks and improve productivity.

A partial list includes:

- Content planning.

- Content analysis.

- Data analysis.

- Creation of local knowledge graphs.

- Automated creation of Schema structured data.

- Optimization of interlinking.

- Page-by-page content optimization.

- Automatically optimized meta descriptions.

- Programmatic title elements.

- Optimized headings at scale.
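Two items from the list above, Schema structured data and programmatic title elements, lend themselves to a short sketch. The Python below is only an illustration under stated assumptions: the helper names `article_jsonld` and `title_element`, the 60-character title limit, and the sample values are hypothetical choices, not rules from any search engine or tool.

```python
import json


def article_jsonld(headline, author, date_published, url):
    """Build schema.org Article markup as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    return json.dumps(data, indent=2)


def title_element(product, category, brand, max_len=60):
    """Assemble a title from page data, dropping the category if too long.

    max_len is an assumed working limit, not an official one.
    """
    title = f"{product} | {category} | {brand}"
    if len(title) > max_len:
        title = f"{product} | {brand}"
    return title[:max_len]


# Hypothetical values, for illustration only.
print(article_jsonld(
    "How AI Changes SEO Workflows",
    "Jane Doe",
    "2024-04-24",
    "https://www.example.com/ai-seo",
))
print(title_element("Trail Running Shoes", "Footwear", "Example Store"))
```

The JSON-LD string would typically be placed in a `<script type="application/ld+json">` tag on the page. At scale, the same functions would run over a product or article database rather than hand-typed values.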

AI In Content Creation

Content creation consists of multiple subjective choices. What one writer feels is relevant to a topic might be different from what users think it is.

A writer may assume that a topic is about Topic X. The search engine may identify that users prefer content about X, Y, and Z. Consequently, the content may experience poor search performance.

AI content tools help content developers form tighter relationships between content and what users are looking for by providing an objective profile of what a given piece of content is about.

AI tools allow search marketers to work with content in a way that is light years ahead of the decades-old practice of first identifying high-traffic keywords and then building content around them.
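The gap between "Topic X" and "X, Y, and Z" can be illustrated with a very simple coverage check. This is a rough sketch, not how any commercial AI content tool works: real tools rely on language models rather than substring matching, and the function name `topic_coverage`, the draft text, and the expected topics are all made up for the example.

```python
import re


def topic_coverage(text, expected_topics):
    """Split expected topics into those mentioned in the text and those missing."""
    # Lowercase and collapse whitespace so line breaks do not hide a match.
    normalized = re.sub(r"\s+", " ", text.lower())
    covered = [t for t in expected_topics if t.lower() in normalized]
    missing = [t for t in expected_topics if t.lower() not in normalized]
    return covered, missing


# Hypothetical draft and topic list, for illustration only.
draft = """Local SEO starts with a complete Google Business Profile.
Keep your name, address, and phone number consistent everywhere."""
expected = ["Google Business Profile", "customer reviews", "local citations"]

covered, missing = topic_coverage(draft, expected)
print("Covered:", covered)
print("Missing:", missing)
```

Even this crude version shows the value of an objective checklist: the writer sees which expected subtopics the draft never touches.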

AI In Content Optimization

Search engines understand search queries and content better by identifying what users mean and what webpages are about.

Today’s AI content tools do the same for SEO across the entire content development workflow.

There’s more to this as well.

In 2018 Google developed what they referred to as the Topic Layer, which helps it understand the content and how the topics and subtopics relate to each other.

Google described it like this:

“So we’ve taken our existing Knowledge Graph—which understands connections between people, places, things and facts about them—and added a new layer, called the Topic Layer, engineered to deeply understand a topic space and how interests can develop over time as familiarity and expertise grow.

The Topic Layer is built by analyzing all the content that exists on the web for a given topic and develops hundreds and thousands of subtopics.

For these subtopics, we can identify the most relevant articles and videos—the ones that have shown themselves to be evergreen and continually useful, as well as fresh content on the topic.

We then look at patterns to understand how these subtopics relate to each other, so we can more intelligently surface the type of content you might want to explore next.”

AI content tools help search marketers align their activities with the reality of how search engines work.

AI In Keyword Research

Beyond that, they introduce content workflow efficiency by enabling the entire process to scale, reducing the time between research and publishing content online.

Mark Traphagen of seoClarity emphasized that AI tools take over the tedious parts of SEO.

Mark explained:

“seoClarity long ago moved from being a data provider to leveraging AI in every part of the SEO lifecycle to move clients quickly from data to insights to execution.

We use:

AI in surfacing insights and recommendations from different data sources (rankings -> SERP opportunities -> technical issues)

AI in delivering the most accurate data possible in search demand, keyword difficulty, and topic intent — all in real-time and trended views

AI in content optimization and analysis

AI-assisted automation in instant execution of SEO enables changes at massive scale.

The future of AI in SEO isn’t AI “doing SEO” for us, but rather AI taking over the most time-consuming tasks freeing SEOs to be directors implementing the best-informed actions at scale at unheard of speeds.”

A key value of using AI for SEO is increasing productivity and efficiency while also increasing expertise, authoritativeness, and content relevance.

Jeff Coyle of MarketMuse says AI’s benefits include justifying how much is budgeted for content and showing what value it brings to the bottom line.

Jeff commented:

“When more of the content strategy you budget for turns into a success, it becomes immediately apparent that using AI to predict content budget needs and drive efficiency rates is the most important thing one can invest in for a content organization.

For operations, human resource efficiency is the top priority. Where do you have humans performing manual tasks for research, planning, prioritizing, briefing, writing, editing, production, and optimization? How much time is lost, and how many feedback or rework loops exist?

Data-driven, predictive, defendable content creation and optimization plans that yield single sources of truth in the form of content briefs and project plans are the foundation of a team focused on using technology to improve human resource efficiencies.

For optimization, picking the content to update, understanding how to update it and whether it needs to be parlayed with creation, repurposing, and transformation are the critical advantages of using AI for content analysis.

Knowing if a page is high quality, exhibits expertise, appeals to the right target intent, and is integrated into the site correctly gives a team the best chance to succeed.”

Drawbacks And Ethical Considerations

Publishing content that is entirely created by AI can backfire because Google’s spam policies target autogenerated content that adds no value.

Google’s spam guidelines warn that publishing autogenerated content may result in a manual action, removing the content from Google’s search results.

The guidelines explain:

“To be eligible to appear in Google web search results (web pages, images, videos, news content, or other material that Google finds from across the web), content shouldn’t violate Google Search’s overall policies or the spam policies listed on this page.

…Spammy automatically generated (or “auto-generated”) content is content that’s been generated programmatically without producing anything original or adding sufficient value; instead, it’s been generated for the primary purpose of manipulating search rankings and not helping users.”

There’s no ban on publishing autogenerated content and no law against it. Google even suggests ways to exclude that kind of content from Google’s search engine if you choose to use that kind of content.

But using automatically generated content is not viable if the goal is to rank well in Google’s search engine.

Can Google Identify AI-Generated Content?

Yes, Google and other search engines can likely identify content that is entirely generated by AI.

Human-written and AI-generated content each tend to show distinctive word-use patterns. Statistical analysis of those patterns can indicate which content was likely created by AI.
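To give a sense of what "word-use statistics" means in practice, here is a small Python sketch that profiles a text. It is not an AI detector and does not reflect how Google or any other search engine analyzes content; the function name `word_use_profile` and the chosen measures (word frequencies and type-token ratio) are assumptions picked for illustration.

```python
import re
from collections import Counter


def word_use_profile(text, top_n=5):
    """Summarize word-use statistics for a piece of text."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(words)
    total = len(words)
    return {
        "total_words": total,
        # Share of distinct words: lower values mean more repetition.
        "type_token_ratio": len(counts) / total if total else 0.0,
        # Most frequent words with their relative frequency.
        "top_words": [(w, c / total) for w, c in counts.most_common(top_n)],
    }


sample = "the cat and the dog and the bird"
print(word_use_profile(sample, top_n=3))
```

Actual detection systems would compare far richer signals across large amounts of text, but they build on the same principle of measuring how words are distributed.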

The Future of Tools Is Now

Many AI-based tools are available that are appropriate for different levels of users.

Not every business needs to scale its SEO for hundreds of thousands of products.

But even a small to medium online business can benefit from the streamlined and efficient workflow that an AI-based content tool offers.


Featured image by Shutterstock/Master1305

SEO

brightonSEO Live Blog

Hello everyone. It’s April again, so I’m back in Brighton for another two days of sun, sea, and SEO! Being the introvert I am, my idea of fun isn’t hanging around our booth all day explaining we’ve run out of t-shirts (seriously, you need to be fast if you want swag!). So I decided to do something useful and live-blog the event instead.

Follow below for talk takeaways and (very) mildly humorous commentary.

SEO

Google Further Postpones Third-Party Cookie Deprecation In Chrome

Google has again delayed its plan to phase out third-party cookies in the Chrome web browser. The latest postponement comes after ongoing challenges in reconciling feedback from industry stakeholders and regulators.

The announcement was made in Google and the UK’s Competition and Markets Authority (CMA) joint quarterly report on the Privacy Sandbox initiative, scheduled for release on April 26.

Chrome’s Third-Party Cookie Phaseout Pushed To 2025

Google states it “will not complete third-party cookie deprecation during the second half of Q4” this year as planned.

Instead, the tech giant aims to begin deprecating third-party cookies in Chrome “starting early next year,” assuming an agreement can be reached with the CMA and the UK’s Information Commissioner’s Office (ICO).

The statement reads:

“We recognize that there are ongoing challenges related to reconciling divergent feedback from the industry, regulators and developers, and will continue to engage closely with the entire ecosystem. It’s also critical that the CMA has sufficient time to review all evidence, including results from industry tests, which the CMA has asked market participants to provide by the end of June.”

Continued Engagement With Regulators

Google reiterated its commitment to “engaging closely with the CMA and ICO” throughout the process and hopes to conclude discussions this year.

This marks the third delay to Google’s plan to deprecate third-party cookies. The company initially aimed for a Q3 2023 phaseout before pushing it back to late 2024.

The postponements reflect the challenges in transitioning away from cross-site user tracking while balancing privacy and advertiser interests.

Transition Period & Impact

In January, Chrome began restricting third-party cookie access for 1% of users globally. This percentage was expected to gradually increase until 100% of users were covered by Q3 2024.

However, the latest delay gives websites and services more time to migrate away from third-party cookie dependencies through Google’s limited “deprecation trials” program.

The trials offer temporary cookie access extensions until December 27, 2024, for non-advertising use cases that can demonstrate direct user impact and functional breakage.

While easing the transition, the trials have strict eligibility rules. Advertising-related services are ineligible, and origins matching known ad-related domains are rejected.

Google states the program aims to address functional issues rather than relieve general data collection inconveniences.
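For sites starting to audit their own cookie dependencies, one simple first step is to check which cookies are declared for cross-site use. The Python sketch below flags cookies set with `SameSite=None`, the attribute that allows a cookie to be sent in cross-site requests. It is a rough starting point only: the function name `cross_site_cookies` and the sample headers are hypothetical, and a full audit would also need to look at cookies set by third-party scripts and embeds.

```python
from http.cookies import SimpleCookie


def cross_site_cookies(set_cookie_headers):
    """Return the names of cookies declared with SameSite=None."""
    flagged = []
    for header in set_cookie_headers:
        jar = SimpleCookie()
        jar.load(header)  # parse one Set-Cookie header value
        for name, morsel in jar.items():
            if morsel["samesite"].lower() == "none":
                flagged.append(name)
    return flagged


# Hypothetical Set-Cookie header values, for illustration only.
headers = [
    "session=abc123; Path=/; Secure; HttpOnly; SameSite=Lax",
    "ad_id=xyz789; Path=/; Secure; SameSite=None",
]
print(cross_site_cookies(headers))  # prints ['ad_id']
```

Cookies that turn up in this list are the ones most likely to be affected when third-party cookie restrictions expand.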

Publisher & Advertiser Implications

The repeated delays highlight the potential disruption for digital publishers and advertisers relying on third-party cookie tracking.

Industry groups have raised concerns that restricting cross-site tracking could push websites toward more opaque, privacy-invasive practices.

However, privacy advocates view the phaseout as crucial in preventing covert user profiling across the web.

With the latest postponement, all parties have more time to prepare for the eventual loss of third-party cookies and adopt Google’s proposed Privacy Sandbox APIs as replacements.

Featured Image: Novikov Aleksey/Shutterstock

SEO

How To Write ChatGPT Prompts To Get The Best Results

ChatGPT is a game changer in the field of SEO. This powerful language model can generate human-like content, making it an invaluable tool for SEO professionals.

However, the prompts you provide largely determine the quality of the output.

To unlock the full potential of ChatGPT and create content that resonates with your audience and search engines, writing effective prompts is crucial.

In this comprehensive guide, we’ll explore the art of writing prompts for ChatGPT, covering everything from basic techniques to advanced strategies for layering prompts and generating high-quality, SEO-friendly content.

Writing Prompts For ChatGPT

What Is A ChatGPT Prompt?

A ChatGPT prompt is an instruction or discussion topic a user provides for the ChatGPT AI model to respond to.

The prompt can be a question, statement, or any other stimulus to spark creativity, reflection, or engagement.

Users can use the prompt to generate ideas, share their thoughts, or start a conversation.

ChatGPT prompts are designed to be open-ended and can be customized based on the user’s preferences and interests.

How To Write Prompts For ChatGPT

Start by giving ChatGPT a writing prompt, such as, “Write a short story about a person who discovers they have a superpower.”

ChatGPT will then generate a response based on your prompt. Depending on the prompt’s complexity and the level of detail you requested, the answer may be a few sentences or several paragraphs long.

Use the ChatGPT-generated response as a starting point for your writing. You can take the ideas and concepts presented in the answer and expand upon them, adding your own unique spin to the story.

If you want to generate additional ideas, try asking ChatGPT follow-up questions related to your original prompt.

For example, you could ask, “What challenges might the person face in exploring their newfound superpower?” Or, “How might the person’s relationships with others be affected by their superpower?”

Remember that ChatGPT’s answers are generated by artificial intelligence and may not always be perfect or exactly what you want.

However, they can still be a great source of inspiration and help you start writing.
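If you work with a chat model programmatically rather than in the ChatGPT window, the prompt-then-follow-up pattern described above can be kept as a running list of messages. The sketch below only builds that list and does not call any API. The `role`/`content` dictionary layout is assumed here as a common chat-message convention, and the helper names and the placeholder reply are hypothetical.

```python
def start_conversation(prompt):
    """Begin a conversation with the initial writing prompt."""
    return [{"role": "user", "content": prompt}]


def add_follow_up(messages, assistant_reply, follow_up):
    """Record the model's reply, then layer a follow-up question on top."""
    return messages + [
        {"role": "assistant", "content": assistant_reply},
        {"role": "user", "content": follow_up},
    ]


conversation = start_conversation(
    "Write a short story about a person who discovers they have a superpower."
)
conversation = add_follow_up(
    conversation,
    "(the story generated by the model would go here)",  # placeholder reply
    "What challenges might the person face in exploring their newfound superpower?",
)

for message in conversation:
    print(f"{message['role']}: {message['content']}")
```

Keeping the earlier turns in the list is what lets each follow-up build on the previous answer instead of starting from scratch.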

Must-Have GPTs Assistant

I recommend installing the WebBrowser Assistant created by the OpenAI Team. This tool allows you to add relevant Bing results to your ChatGPT prompts.

This assistant adds the first web results to your ChatGPT prompts for more accurate and up-to-date conversations.

It is very easy to install in only two clicks. (Click on Start Chat.)

For example, if I ask, “Who is Vincent Terrasi?,” ChatGPT has no answer.

With WebBrowser Assistant, the assistant creates a new prompt with the first Bing results, and now ChatGPT knows who Vincent Terrasi is.

Screenshot from ChatGPT, March 2023

You can test other GPT assistants available in the GPTs search engine if you want to use Google results.

Master Reverse Prompt Engineering

ChatGPT can be an excellent tool for reverse engineering prompts because it generates natural and engaging responses to any given input.

By analyzing the prompts generated by ChatGPT, it is possible to gain insight into the model’s underlying thought processes and decision-making strategies.

One key benefit of using ChatGPT to reverse engineer prompts is that the model is highly transparent in its decision-making.

This means that the reasoning and logic behind each response can be traced, making it easier to understand how the model arrives at its conclusions.

Once you’ve done this a few times for different types of content, you’ll gain insight into crafting more effective prompts.

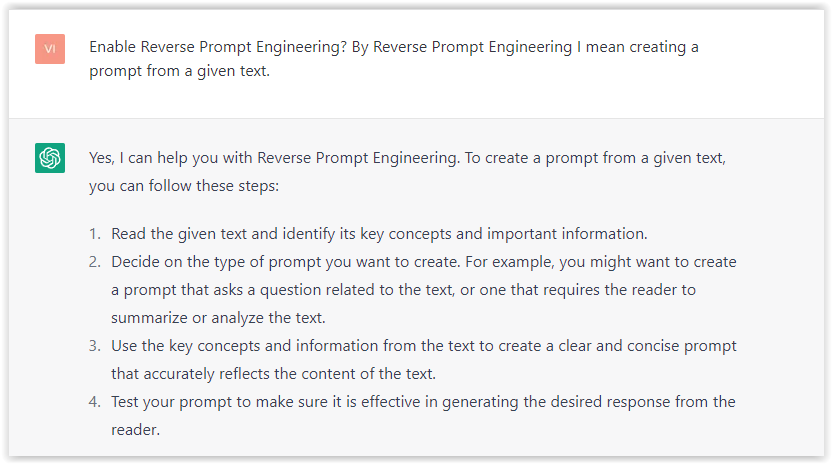

Prepare Your ChatGPT For Generating Prompts

First, activate the reverse prompt engineering.

- Type the following prompt: “Enable Reverse Prompt Engineering? By Reverse Prompt Engineering I mean creating a prompt from a given text.”

Screenshot from ChatGPT, March 2023

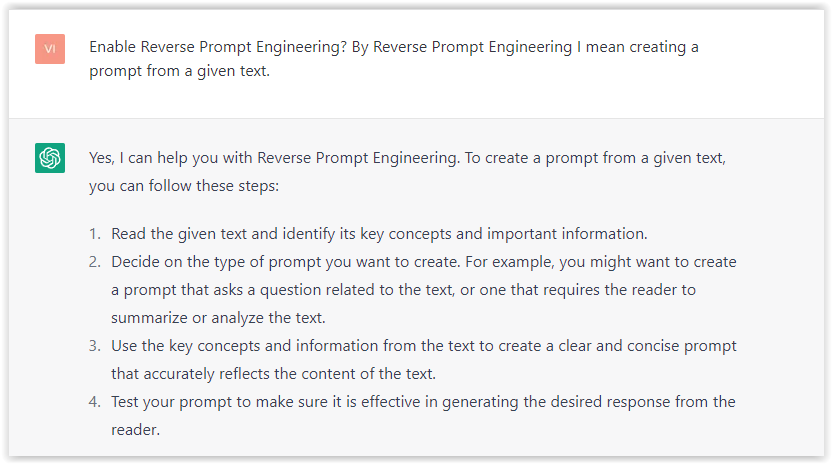

ChatGPT is now ready to generate your prompt. You can test the product description in a new chatbot session and evaluate the generated prompt.

- Type: “Create a very technical reverse prompt engineering template for a product description about iPhone 11.”

Screenshot from ChatGPT, March 2023

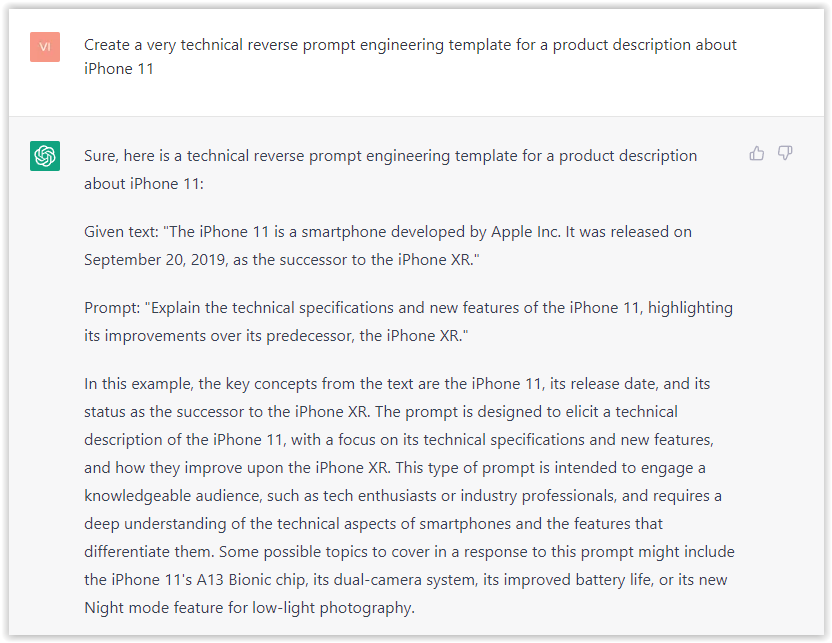

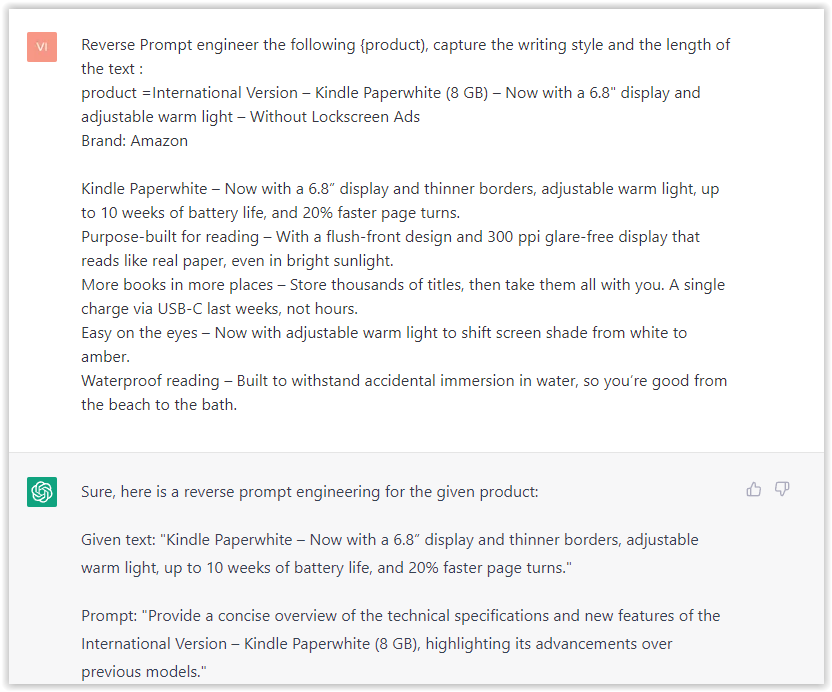

The result is amazing. You can test with a full text that you want to reproduce. Here is an example of a prompt for selling a Kindle on Amazon.

- Type: “Reverse Prompt engineer the following {product}, capture the writing style and the length of the text :

product =”

Screenshot from ChatGPT, March 2023

I tested it on an SEJ blog post. Enjoy the analysis – it is excellent.

- Type: “Reverse Prompt engineer the following {text}, capture the tone and writing style of the {text} to include in the prompt :

text = all text coming from https://www.searchenginejournal.com/google-bard-training-data/478941/”

Screenshot from ChatGPT, March 2023

But be careful not to use ChatGPT to generate your texts. It is just a personal assistant.
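If you reverse engineer prompts often, the template above can be filled in with a small helper instead of being retyped each time. This Python sketch is one possible way to do it: the function name `reverse_prompt`, its `capture` parameter, and the sample text are hypothetical, and the output is simply a string to paste into ChatGPT.

```python
def reverse_prompt(text, capture=("tone", "writing style")):
    """Fill in the reverse prompt engineering template for a given text."""
    traits = " and ".join(capture)
    return (
        # "{text}" is kept literally, as in the template typed into ChatGPT.
        "Reverse Prompt engineer the following {text}, "
        f"capture the {traits} of the {{text}} to include in the prompt :\n\n"
        f"text = {text}"
    )


# Hypothetical sample text, for illustration only.
print(reverse_prompt("Our new e-reader is light, glare-free, and lasts for weeks."))
```

Changing `capture` to something like `("writing style", "length")` adapts the same template to the product description use case.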

Go Deeper

Prompts and examples for SEO:

- Keyword research and content ideas prompt: “Provide a list of 20 long-tail keyword ideas related to ‘local SEO strategies’ along with brief content topic descriptions for each keyword.”

- Optimizing content for featured snippets prompt: “Write a 40-50 word paragraph optimized for the query ‘what is the featured snippet in Google search’ that could potentially earn the featured snippet.”

- Creating meta descriptions prompt: “Draft a compelling meta description for the following blog post title: ‘10 Technical SEO Factors You Can’t Ignore in 2024’.”
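The three prompts above follow a fixed pattern with a few changing details, so they can be stored as reusable templates. The Python below is a minimal sketch of that idea: the dictionary `SEO_PROMPTS`, the function `build_prompt`, and the placeholder names (`count`, `topic`, `query`, `title`) are hypothetical, and the wording is taken from the examples in this article.

```python
# Prompt templates based on the examples above; placeholders are in braces.
SEO_PROMPTS = {
    "keywords": (
        "Provide a list of {count} long-tail keyword ideas related to "
        "'{topic}' along with brief content topic descriptions for each keyword."
    ),
    "snippet": (
        "Write a 40-50 word paragraph optimized for the query '{query}' "
        "that could potentially earn the featured snippet."
    ),
    "meta_description": (
        "Draft a compelling meta description for the following "
        "blog post title: '{title}'."
    ),
}


def build_prompt(kind, **fields):
    """Fill the chosen template with the given values."""
    return SEO_PROMPTS[kind].format(**fields)


print(build_prompt("keywords", count=20, topic="local SEO strategies"))
print(build_prompt("snippet", query="what is the featured snippet in Google search"))
```

Storing prompts this way makes it easier to reuse the versions that worked and to run them across many topics or titles.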

Important Considerations:

- Always Fact-Check: While ChatGPT can be a helpful tool, it’s crucial to remember that it may generate inaccurate or fabricated information. Always verify any facts, statistics, or quotes generated by ChatGPT before incorporating them into your content.

- Maintain Control and Creativity: Use ChatGPT as a tool to assist your writing, not replace it. Don’t rely on it to do your thinking or create content from scratch. Your unique perspective and creativity are essential for producing high-quality, engaging content.

- Iteration is Key: Refine and revise the outputs generated by ChatGPT to ensure they align with your voice, style, and intended message.

Additional Prompts for Rewording and SEO:

- Rewrite this sentence to be more concise and impactful.

- Suggest alternative phrasing for this section to improve clarity.

- Identify opportunities to incorporate relevant internal and external links.

- Analyze the keyword density and suggest improvements for better SEO.

Remember, while ChatGPT can be a valuable tool, it’s essential to use it responsibly and maintain control over your content creation process.

Experiment And Refine Your Prompting Techniques

Writing effective prompts for ChatGPT is an essential skill for any SEO professional who wants to harness the power of AI-generated content.

Hopefully, the insights and examples shared in this article can inspire you and help guide you to crafting stronger prompts that yield high-quality content.

Remember to experiment with layering prompts, iterating on the output, and continually refining your prompting techniques.

This will help you stay ahead of the curve in the ever-changing world of SEO.


Featured Image: Tapati Rinchumrus/Shutterstock
