Meta Outlines its Approach to Brand and User Safety with New Mini-Site

It may not be intended that way, but Meta’s latest “media responsibility” push feels like a shot at Elon Musk and the revised approach that X is taking to content moderation in its app.

Today, Meta outlined its new Media Responsibility framework, a set of guiding principles that it’s applying to its own moderation and ad placement guidelines in order to provide more protection and safety for all users of its apps.

As explained by Meta:

“The advertising industry has come together to embrace media responsibility, but there isn’t an industry-wide definition of it just yet. At Meta, we define it as the commitment of the entire marketing industry to contribute to a better world through a more accountable, equitable and sustainable advertising ecosystem.”

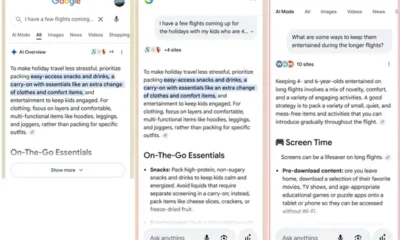

As part of this, Meta has launched a new mini-site, where it outlines its “four pillars of media responsibility”.

Those pillars are:

- Safety and expression – Ensuring everybody has a voice, while protecting users from harm

- Diversity, equity and inclusion – Ensuring that opportunity exists for all, and that everybody feels valued, respected, and supported

- Privacy and transparency – Building products with privacy “at their very core” and ensuring transparency in media placement and measurement

- Sustainability – Protecting the planet, and having a positive impact

The mini-site includes more in-depth overviews of each element, along with explainers on how, exactly, Meta is looking to enact each one within its platforms.

Meta says that the aim of the mini-site is to enable ad partners and users “to hold us accountable, and see who we’re working with”, in order to provide more assurance and transparency around its various processes.

And yes, it does feel a little like Meta’s taking aim at Elon and Co. here.

The new X team is increasingly putting its trust in crowd-sourced moderation, via Community Notes, which appends user-originated fact-checks to posts that include questionable claims in the app.

But that process is flawed, in that it requires “ideological consensus” before a Note is displayed in the app. And given the disagreement on certain divisive topics, that agreement is never going to be reached, leaving many misleading claims active and unchallenged in the app.

Musk, however, believes that “citizen journalism” is more accurate than the mainstream media, which, in his view at least, means that Community Notes are more reflective of the actual truth, even if some of what they allow through may be considered misinformation.

As a result, on COVID, the war in Israel, U.S. politics, and basically every other divisive topic, at least some misinformation is now filtering through on X, because Community Notes contributors cannot reach agreement on the core facts.

This is part of the reason why so many advertisers are staying away from the app. Musk himself also continues to spread misleading or false reports and amplify harmful profiles, further eroding trust in X’s capacity to manage information flow.

Some, of course, will view this as the right approach, as it enables users to counter what they see as false media narratives. But Meta is employing a different strategy, drawing on its years of experience to limit the spread of harmful content in various ways.

The new mini-site lays out these approaches in detail, which could help to provide more transparency and accountability in the process.

It’s an interesting overview either way, providing more insight into Meta’s various strategies and initiatives.

You can check out Meta’s media responsibility mini-site here.