
Microsoft Unveils Predictive Targeting, AI-Based Advertising Tool

Microsoft announces the launch of Predictive Targeting, an artificial intelligence-powered advertising tool.

The technology relies on machine learning to help advertisers reach new, receptive audiences and drive higher conversion rates.

Finding Hidden Audiences

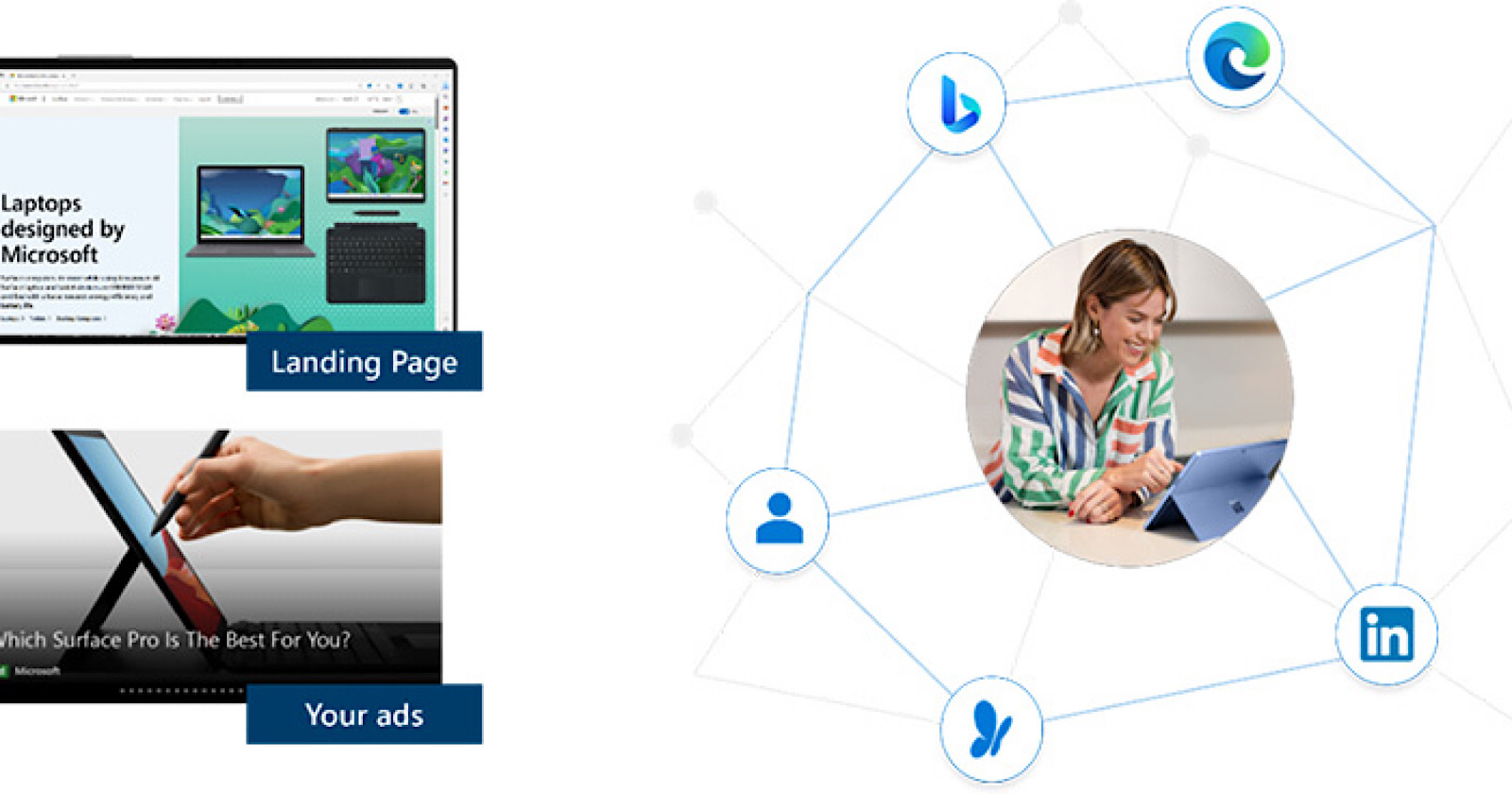

Predictive Targeting analyzes signals from advertisers’ existing ads and landing pages and Microsoft’s audience data to identify potential new audiences.

The tool automatically targets ads to the audiences most likely to convert without requiring advertisers to build an audience-targeting strategy manually.

Saving Time & Increasing Efficiency

Microsoft claims Predictive Targeting can increase advertisers’ conversion rates by an average of 46 percent while streamlining the ad targeting process.

Advertisers no longer have to spend time researching to determine their target audiences and can rely on Microsoft’s algorithms to find the most promising prospects.

The tool aims to help advertisers maximize their return on investment and gain greater efficiency in their ad campaigns.

Flexibility For Different Needs

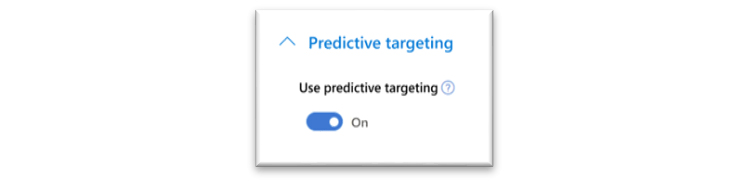

Predictive Targeting can be used independently or in combination with advertisers’ existing audience targeting strategies.

When used alone, it provides a comprehensive solution for discovering and reaching relevant audiences.

When layered on top of existing strategies, it helps advertisers expand their reach and find new potential customers outside of their defined target audiences.

This flexibility allows advertisers to tailor the tool to suit their specific needs.

Potential Drawbacks

Before switching to a new targeting solution, it’s essential to consider potential drawbacks.

By relying on Microsoft’s algorithms to determine target audiences, advertisers give up some control over who sees their ads.

The AI may target audiences advertisers did not anticipate or intend to reach.

This could result in wasted ad spend or damage to the brand if the wrong audiences are exposed to the ads.

Advertisers may want to use other targeting and measurement tools in addition to Predictive Targeting to avoid complete reliance on Microsoft.

Getting Started

Predictive Targeting will now be the default targeting method for Audience Ads.

Advertisers simply have to activate the tool, and Microsoft’s algorithms will determine the optimal audiences for their ads.

Screenshot from: about.ads.microsoft.com, June 2023.

Advertisers can also disable Predictive Targeting and define their audiences as needed.

Microsoft recommends that advertisers use compelling ad copy aligned with their target customers, automated bidding, expanded reach across ad groups, and campaign performance monitoring.

The company expects Predictive Targeting to help propel advertisers’ Audience Ads campaigns forward and usher in the future of targeted advertising.

Featured image: Screenshot from about.ads.microsoft.com, June 2023.

Source: Microsoft
