MARKETING

SEO Recap: PageRank – Moz

The author’s views are entirely his or her own (excluding the unlikely event of hypnosis) and may not always reflect the views of Moz.

Have you ever wondered how Moz employees learn internally? Well, here’s your chance to get a sneak peek into never seen before, internal webinar footage with Tom Capper! Learning is important at Moz, and the sharing of information amongst employees is crucial in making sure we stay true to our core values. Knowledge sharing allows us to stay transparent, work together more easily, find better ways of doing things, and create even better tools and experiences for our customers.

Tom started these sessions when everyone was working remotely in 2020. It allowed us to come together again in a special, collaborative way. So, today, we give to you all the gift of learning! In this exclusive webinar, Tom Capper takes us through the crucial topic of PageRank.

Video Transcription

This is actually a topic that I used to put poor, innocent, new recruits through, particularly if they came from a non-marketing background. Even though this is considered by a lot of people to be an advanced topic, I think it actually makes sense for people who want to learn about SEO to learn it first because it’s foundational. And if you think about a lot of other technical SEO and link building topics from this perspective, they make a lot more sense and are simpler, and you kind of figure out the answers yourself rather than needing to read 10,000-word blog posts and patents and this kind of thing.

Anyway, hold that thought, because it’s 1998. I am 6 years old, and this is a glorious state-of-the-art video game, and internet browsing that I do in my computer club at school looks a bit like this. I actually didn’t use Yahoo!. I used Excite, which in hindsight was a mistake, but in my defense I was 6.

The one thing you’ll notice about this as a starting point for a journey on the internet, compared to something like Google or whatever you use today, maybe even something that’s built into your browser these days, is that there are a lot of links on this page, and mostly they are links to pages that themselves have lots of links. It’s kind of like a taxonomy directory system. And this is important because if a lot of people browse the web using links, and links are primarily a navigational thing, then we can get some insights out of looking at links.

They’re a sort of proxy for popularity. If we assume that everyone starts their journey on the internet on Yahoo! in 1998, then the pages that are linked to from Yahoo! are going to get a lot of traffic. They are, by definition, popular, and the pages that those pages link to will also still get quite a lot and so on and so forth. And through this, we could build up some kind of picture of what websites are popular. And popularity is important because if you show popular websites to users in search results, then they will be more trustworthy and credible and likely to be good and this kind of thing.

This is massive oversimplification, bear with me, but this is kind of why Google won. Google recognized this fact, and they came up with an innovation called PageRank, which made their search engine better than other people’s search engines, and which every other search engine subsequently went on to imitate.

However, is anything I said just now relevant 23 years later? We definitely do not primarily navigate the web with links anymore. We use these things called search engines, which Google might know something about. But also we use newsfeeds, which are kind of dynamic and uncrawlable, and all sorts of other patterns that aren’t based on static HTML links. Links are probably not the majority even of how we navigate our way around the web, except maybe within websites. And Google has better data on popularity anyway. Like Google runs a mobile operating system. They run ISPs. They run a browser. They run YouTube. There are lots of ways for Google to figure out what is and isn’t popular without building some arcane link graph.

However, be that true or not, there still is a core methodology that underpins how Google works on a foundational level. In 1998, it was the case that PageRank was all of how Google worked really. It was just PageRank plus relevance. These days, there’s a lot of nuance and layers on top, and even PageRank itself probably isn’t even called that and probably has changed and been refined and tweaked around the edges. And it might be that PageRank is not used as a proxy for popularity anymore, but maybe as a proxy for trust or something like that and it has a slightly different role in the algorithm.

But the point is we still know purely through empirical evidence that changing how many and what pages link to a page has a big impact on organic performance. So we still know that something like this is happening. And the way that Google talks about how links work and their algorithms still reflects a broadly PageRank-based understanding as do developments in SEO directives and hreflang and rel and this kind of thing. It still all speaks to a PageRank-based ecosystem, if not a PageRank-only ecosystem.

Also, I’m calling it PageRank because that’s what Google calls it, but some other things you should be aware of that SEOs use, link equity I think is a good one to use because it kind of explains what you’re talking about in a useful way. Link flow, it’s not bad, but link flow is alluding to a different metaphor that you’ve probably seen before, where you think of links as being sent through big pipes of liquids that then pour in different amounts into different pages. It’s a different metaphor to the popularity one, and as a result it has some different implications if it’s overstretched, so use some caution. And then linking strength, I don’t really know what metaphor this is trying to do. It doesn’t seem as bad as link juice, at least fine, I guess.

More importantly, how does it work? And I don’t know if anyone here hates maths. If you do, I’m sorry, but there’s going to be maths.

So the foundation of all this is: imagine that the whole internet is represented in this diagram, and that A, in the red box here, is a web page. There’s only one web page, which means this is 1970 something, I guess. What is the probability that a random browser is on this page? We can probably say it’s one or something like that. If you want to have some other take on that, it kind of doesn’t matter because it’s all just going to be based on whatever number that is. From that though, we can sort of try to infer some other things.

So whatever probability you thought that was, and let’s say we thought that if there’s one page on the internet, everyone is on it, what’s the probability a random browser is on the one page, A, links to? So say that we’ve pictured the whole internet here. A is a page that links to another page which links nowhere. And we started by saying that everyone was on this page. Well, what’s the probability now, after a cycle, that everyone will be on this page? Well, we go with the assumption that there’s an 85% chance, and the 85% number comes from Google’s original 1998 white paper. There’s an 85% chance that they go onto this one page in their cycle, and a 15% chance that they do one of these non-browser-based activities. And the reason why we assume that there’s a chance on every cycle that people exit to do non-browser-based activities, it’s because otherwise we get some kind of infinite cycle later on. We don’t need to worry about that. But yeah, the point is that if you assume that people never leave their computers and that they just browse through links endlessly, then you end up assuming eventually that every page has infinite traffic, which is not the case.

That’s the starting point where we have this really simple internet, we have a page with a link on it, and a page without a link on it and that’s it. Something to bear in mind with these systems is, obviously, web pages don’t have just one link on them, and web pages with no links on them, like the one on the right, are virtually unheard of. This gets really complex really fast. If we tried to make a diagram just of two pages on the Moz website, it would not fit on the screen. So we’re working with really simplified versions here, but it doesn’t matter because the principles are extensible.

So what if the page on the left actually linked to two pages, not one? What is the probability now that we’re on one of those two pages? We’re taking that 85% chance that they move on at all without exiting, because the house caught fire, they went for a bike ride or whatever, and we’re now dividing that by two. So we’re saying 42.5% chance that they were on this page, 42.5% chance they were on this page, and then nothing else happens because there are no more links in the world. That’s fine.
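As a rough illustration of the arithmetic in this step, here is a minimal Python sketch. The names `DAMPING` and `share_per_link` are made up for this example and are not from the talk; the only figure taken from the talk is the 85% chance that a browser follows a link at all. It also assumes every link on a page is equally likely to be clicked.

```python
# Assumption: 0.85 is the chance a browser follows a link rather than leaving,
# as quoted in the talk. The function name is hypothetical.
DAMPING = 0.85


def share_per_link(source_strength: float, outbound_links: int) -> float:
    """Strength passed to each linked page, split evenly across all links."""
    if outbound_links < 1:
        raise ValueError("a page needs at least one outbound link to pass strength")
    return source_strength * DAMPING / outbound_links


one_link = share_per_link(1.0, 1)   # page A links to a single page
two_links = share_per_link(1.0, 2)  # page A links to two pages
print(one_link, two_links)
```

With a starting strength of 1, the single-link case passes on 85% and the two-link case passes on half of that to each page, matching the 42.5% figure above.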

What about this page? So if this page now links to one more, how does this page’s strength relate to page A? So this one was 0.85/2, and this one is 0.85 times that number. So note that we are diluting as we go along because we’ve applied that 15% deterioration on every step. This is useful and interesting to us because we can imagine a model in which page A, on the left, is our homepage and the page on the right is some page we want to rank, and we’re diluting with every step that we have to jump to get there. And this is crawl depth, which is a metric that is exposed by Moz Pro and most other technical SEO tools. This is why crawl depth is something that people are interested in. Part of it is discovery, which we won’t get into today, but part of it is also this dilution factor.

And then if this page actually linked to three, then again, each of these pages is only one-third as strong as when it only linked to one. So it’s being split up and diluted the further down we go.
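The dilution over several hops can be sketched the same way. This is only an illustration under stated assumptions: `strength_along_path` is a hypothetical helper, every link on a page is treated as equally likely to be clicked, and the example path (a page with two links, then one link, then three links) is one reading of the diagram described in the talk.

```python
# Assumption: 0.85 is the per-step chance of following a link, as in the talk.
DAMPING = 0.85


def strength_along_path(outbound_link_counts, start_strength=1.0):
    """Strength reaching the end of a path.

    Each entry is the number of links on the page at that hop; the strength is
    multiplied by DAMPING and split evenly across those links at every hop.
    """
    strength = start_strength
    for links in outbound_link_counts:
        strength = strength * DAMPING / links
    return strength


depth_one = strength_along_path([2])          # 0.85 / 2
depth_two = strength_along_path([2, 1])       # 0.85 times the previous value
depth_three = strength_along_path([2, 1, 3])  # split three ways at the last hop
print(depth_one, depth_two, depth_three)
```

Each extra hop multiplies by 0.85 again, and each extra link on a page divides what every target receives.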

So that all got very complicated very quick on a very simple, fictional website. Don’t panic. The lessons we want to take away from this are quite simple, even though the math becomes very arcane very quickly.

So the first lesson we want to take is that each additional level of link depth dilutes value. So we talked about the reasons for that, but obviously it has implications for site structure. It also has implications in some other things, some other common technical SEO issues that I’ll cover in a bit.

So if I link to a page indirectly, that is less effective than linking to a page directly, even in a world where every page only has one link on it, which is obviously an ideal scenario.

The other takeaway we can have is that more links means each link is less valuable. So with every additional link you add to your homepage, you’re reducing the effectiveness of the links that were already there. So this is very important because if you look on a lot of sites right now, you’ll find 600 link mega navs at the top of the page and the same at the bottom of the page and all this kind of thing. And that can be an okay choice. I’m not saying that’s always wrong, but it is a choice and it has dramatic implications.

Some of the biggest changes in SEO performance I’ve ever seen on websites came from cutting back the number of links on the homepage by a factor of 10. If you change a homepage so that it goes from linking to 600 pages to linking to the less than 100 that you actually want to rank, that will almost always have a massive difference, a massive impact, more so than external link building could ever dream of because you’re not going to get that 10 times difference through external link building, unless it’s a startup or something.
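To put rough numbers on that factor of 10, here is a small Python sketch. It assumes the simple even-split model from earlier in the talk, and the figure of 60 links is a hypothetical stand-in for "less than 100"; `share_per_homepage_link` is a made-up name.

```python
# Assumption: even split across links with an 85% chance of following any link.
DAMPING = 0.85


def share_per_homepage_link(link_count: int) -> float:
    """Strength each homepage link passes on when the homepage starts at 1."""
    return DAMPING / link_count


before = share_per_homepage_link(600)  # mega nav with 600 links
after = share_per_homepage_link(60)    # hypothetical trimmed homepage
improvement = after / before
print(before, after, improvement)
```

Under these assumptions, each remaining link passes on about ten times as much as before, without a single new external link.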

Some real-world scenarios. I want to talk about basically some things that SEO tools often flag, that we’re all familiar with talking about as SEO issues or optimizations or whatever, but often we don’t think about why and we definitely don’t think of them as being things that hark back quite so deep into Google’s history.

So a redirect is a link. The fictional idea of a page with one link on it is a redirect, because a redirect is just a page that links to exactly one other page. So in this scenario, the page on the left could have linked directly to the page on the top right, but because it didn’t, we’ve got this 0.85 squared here, which is 0.7225. The only thing you need to know about that is that it’s a smaller number than 0.85. Because we didn’t link directly, and instead went through this page here that redirected, which doesn’t feel like a link but is a link in this ecosystem, we’ve just arbitrarily decided to dilute the page at the end of the cycle. And this is, obviously, particularly important when we think about chain redirects, which is another thing that’s often flagged by the SEO tools.
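The cost of a redirect chain can be sketched as below. This is an illustration only: it treats every redirect as one more single-link hop at the same 0.85 factor, which is the simplified model used in the talk rather than a statement of how Google scores redirects today. `strength_after_redirects` is a hypothetical name.

```python
# Assumption: each redirect behaves like a page with exactly one link,
# so it costs one more factor of 0.85.
DAMPING = 0.85


def strength_after_redirects(redirect_hops: int, source_strength: float = 1.0) -> float:
    """Strength reaching the final URL when the link passes through redirects."""
    # One factor for the link itself, plus one per redirect in the chain.
    return source_strength * DAMPING ** (1 + redirect_hops)


direct = strength_after_redirects(0)        # link straight to the final URL
one_redirect = strength_after_redirects(1)  # 0.85 squared
chain_of_three = strength_after_redirects(3)
print(direct, one_redirect, chain_of_three)
```

Linking straight to the final URL keeps the full 0.85, while each redirect in the chain gives up another 15% of whatever is left.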

But when you look in an issue report in something like Moz Pro and it gives you a list of redirects as if they’re issues, that can be confusing because a redirect is something we’re also told is a good thing. Like if we have a URL that’s no longer in use, it should redirect. But the reason that issue is being flagged is we shouldn’t still be linking to the URL that redirects. We should be linking directly to the thing at the end of the chain. And this is why. It’s because of this arbitrary dilution that we’re inserting into our own website, which is basically just a dead weight loss. In reality, pages do tend to link back to each other, so this will be a big, complex web with cycles in it. I think this is where the flow metaphor comes from, because people can imagine a series of buckets that drip round into each other but leak a little bit at every step, so you get less and less water unless there’s some external source. If you imagine these are looping back around, then inserting redirects is just dead weight loss. We’ve drilled a hole in the bottom of a bucket.

So, yeah, better is a direct link. Worse is a 302, although that’s a controversial subject, who knows. Google sometimes claim that they treat 302s as 301s these days. Let’s not get into that.

Canonicals are very similar from a PageRank perspective. A canonical is actually a much later addition to search engines, but it is basically equivalent to a 301 redirect. So imagine I have a website that sells live badgers, for some reason, in different colors. I might have two different URL variants for my badger e-com page filtered to brown, one of which you access by going to badgers?colour=brown. And I’ve decided that the one without any parameters is the canonical version, literally and figuratively speaking. If the homepage links to it via the parameter page, which then has a canonical tag pointing at the correct version, then I’ve arbitrarily weakened the correct version versus what I could have done, which would be the direct link through. Interestingly, if we do have this direct link through, note that the parameter page now has no strength at all. It now has no inbound links, and also it probably wouldn’t get flagged as an error in the tool because the tool wouldn’t find it.

You’ll notice I put a tilde before the number zero. We’ll come to that.

PageRank sculpting is another thing that I think is interesting because people still try to do it even though it’s not worked for a really long time. So this is an imaginary scenario that is not imaginary at all. It’s really common, Moz probably has this exact scenario, where your homepage links to some pages you care about and also some pages you don’t really care about, certainly from an SEO perspective, such as your privacy policy. Kind of sucks because, in this extreme example here, having a privacy policy has just randomly halved the strength of a page you care about. No one wants that.

So what people used to do was use a link level nofollow. It worked at the time, and by at the time, I mean like 2002 or something. But people still try this on new websites today. The idea was that effectively the link level nofollow removed this link, so it was as if your homepage only linked to one page. Great, everyone is a winner.

Side note I talked about before. So no page actually has zero PageRank. A page with no links in the PageRank model has the PageRank one over the number of pages on the internet. That’s the seeding probability that before everything starts going and cycles round and figures out what the stable equilibrium PageRank is, they assume that there’s an equal chance you’re on any page on the internet. One divided by the number of pages on the internet is a very small number, so we can think of it as zero.
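The seeding and cycling described here can be sketched with a small iterative calculation. This is the common textbook formulation of PageRank, offered as an assumption rather than as what Google actually runs. The toy site, the function name `pagerank`, and the choice to spread the strength of link-less pages evenly across all pages are all choices made for this sketch.

```python
# Assumption: textbook PageRank with a 0.85 damping factor; not Google's
# production algorithm. All page names below are hypothetical.
DAMPING = 0.85


def pagerank(links, iterations=50):
    """links maps every page to the list of pages it links to."""
    pages = list(links)
    n = len(pages)
    # Seeding: an equal chance of being on any page before the cycles start.
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Pages with no links hand their strength back evenly to all pages.
        dangling = sum(rank[p] for p in pages if not links[p])
        new_rank = {}
        for page in pages:
            inbound = sum(
                rank[src] / len(links[src]) for src in pages if page in links[src]
            )
            new_rank[page] = (1 - DAMPING) / n + DAMPING * (inbound + dangling / n)
        rank = new_rank
    return rank


toy_web = {
    "home": ["products", "privacy"],
    "products": ["home"],
    "privacy": ["home"],
    "orphan": [],  # no inbound links and no outbound links
}
ranks = pagerank(toy_web)
print(ranks)
```

In this toy web the orphan page ends up with the smallest score, but it is still above zero, which is the point of the tilde. On an internet-sized graph that floor would be vanishingly small.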

This was changed. The link level nofollow hack stopped working a very, very long time ago, such that if you use a link level nofollow now (and by the way, this is also true if you use robots.txt to do this), the second link is still counted when we divide by two. We are still halving, as if there’s an equal chance that you go to either of these pages. This page still gets that reduction because it was one of two links, but this page at the bottom now has no strength at all because it was only linked through a nofollow. So if you do this now, it’s a worst of both worlds scenario. And you might say, “Oh, I don’t actually care whether my privacy policy has zero strength,” whatever. But you do care because your privacy policy probably links through the top nav to every other page on your website. So you’re still doing yourself a disservice.
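A minimal sketch of the difference between the old and current behavior, as the talk describes them. The function `link_shares`, its flag, and the page names are hypothetical, and the even split between links is the simplified model used so far.

```python
# Assumption: even split across links with a 0.85 damping factor.
DAMPING = 0.85


def link_shares(links, nofollow_still_counted=True):
    """links maps each target page to True (followed) or False (nofollow).

    Returns the strength each target receives from a source page of strength 1.
    """
    followed = [target for target, is_followed in links.items() if is_followed]
    # Old behavior: nofollowed links vanish from the split.
    # Current behavior: they still count in the split but receive nothing.
    divisor = len(links) if nofollow_still_counted else len(followed)
    return {
        target: (DAMPING / divisor if is_followed else 0.0)
        for target, is_followed in links.items()
    }


homepage_links = {"products": True, "privacy": False}
old_behavior = link_shares(homepage_links, nofollow_still_counted=False)
current_behavior = link_shares(homepage_links, nofollow_still_counted=True)
print(old_behavior, current_behavior)
```

Under the old behavior the products page would receive the full 0.85. Under the current one it is still halved, and the privacy page receives nothing.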

Second side note, I said link level nofollow, meaning nofollow in the HTML as an attribute of a link. There is also page level nofollow, which I struggle to think of a single good use case for. Basically, a page level nofollow means we are going to treat every single link on this page as nofollow. So we’re just going to create a PageRank dead-end. This is a strange thing to do. Sometimes people use robots.txt, which basically does the same thing. If I block this page with robots.txt, that’s the same in terms of the PageRank consequences, except there are other good reasons to do that, like I might not want Google to ever see this, or I might want to prevent a massive waste of Google’s crawlers’ time so that they spend more time crawling the rest of my site or something like this. There are reasons to use robots.txt. Page level nofollow is we’re going to create that dead-end, but also we’re going to waste Google’s time crawling it anyway.

Some of the extreme scenarios I just talked about, particularly the one with the privacy policy, changed a lot for the better for everyone in 2004 with something called reasonable surfer, which you occasionally still hear people talking about now, but mostly implicitly. And it is probably actually an under-discussed or underheld in mind topic.

So these days, and by these days, I mean for the last 17 years, if one of these links was that massive call to action and another one of these links was in the footer, like a privacy policy link often is, then Google will apply some sense and say the chance people click on this one . . . Google was trying to figure out probabilities here, remember. So we’ll split this. This 0.9 and 0.1 still have to add up to 1, but we’ll split them in a more reasonable fashion. Yeah, they were doing that a long time ago. They’ve probably got very, very good at it by now.
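The weighted split can be sketched as follows. The 0.9 and 0.1 click weights are the illustrative figures from the talk. The function name `weighted_link_shares`, the link labels, and the assumption that the 0.85 factor still applies on top of the split are choices made for this sketch. How Google actually estimates click likelihood is not described in the talk.

```python
# Assumption: the 0.85 damping factor still applies; only the split changes.
DAMPING = 0.85


def weighted_link_shares(click_weights, source_strength=1.0):
    """Split a page's strength across links in proportion to click likelihood."""
    total = sum(click_weights.values())  # normalise so the shares add up to 1
    return {
        target: source_strength * DAMPING * weight / total
        for target, weight in click_weights.items()
    }


shares = weighted_link_shares({"call_to_action": 0.9, "privacy_footer": 0.1})
print(shares)
```

Compared with the even split, where each link would get 0.425, the prominent call to action keeps most of the strength and the footer link only takes a small slice.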

Noindex is an interesting one because, traditionally, you would think that has nothing to do with PageRank. So, yeah, a noindex tag just means this should never show up in search results, this page at the bottom, which is fine. There are some valid reasons to do that. Maybe you’re worried that it will show up for the wrong query that something else on your site is trying to show up for, or maybe it contains sensitive information or something like this. Okay, fine. However, when you put a noindex tag on something, Google eventually stops crawling it. Everyone sort of intuitively knew all the pieces of this puzzle, but Google only acknowledged that this behavior is what happens a couple of years ago.

So Google eventually stops crawling it, and when Google stops crawling it, it stops passing PageRank. So noindex follow used to be quite a good thing, or we thought quite a good thing, to do for a page like an HTML sitemap page or something like that. An HTML sitemap page clearly isn’t something you want to show up in search results, because it’s kind of crap and a poor reflection on your site and not a good UX and this kind of thing. But it is a good way to pass equity through to a bunch of deep pages, or so we thought. It turns out probably not. In the long run, it was equivalent to that worst case scenario, page level nofollow, that we talked about earlier. And again, this is probably why noindex is flagged as an error in tools like Moz Pro, although often it’s not well explained or understood.

My pet theory on how links work is that, at this stage, they’re no longer a popularity proxy because there’s better ways of doing that. But they are a brand proxy for a frequently cited brand. Citation and link are often used synonymously in this industry, so that kind of makes sense. However, once you actually start ranking in the top 5 or 10, my experience is that links become less and less relevant the more and more competitive a position you’re in because Google has increasingly better data to figure out whether people want to click on you or not. This is some data from 2009, contrasting ranking correlations in positions 6 to 10, versus positions 1 to 5. Basically, both brand and link become less relevant, or the easily measured versions become less relevant, which again is kind of exploring that theory that the higher up you rank, the more bespoke and user signal-based it might become.

This is some older data, where I basically looked at to what extent you can use Domain Authority to predict rankings, which is this blue bar, to what extent you could use branded search volume to predict rankings, which is this green bar, and to what extent you could use a model containing them both to predict rankings, which is not really any better than just using branded search volume. This is obviously simplified and flawed data, but this is some evidence towards the hypothesis that links are used as a brand proxy.


Streamlining Processes for Increased Efficiency and Results

How can businesses succeed nowadays when technology rules? With competition getting tougher and customers changing their preferences often, it’s a challenge. But using marketing automation can help make things easier and get better results. And in the future, it’s going to be even more important for all kinds of businesses.

So, let’s discuss how businesses can leverage marketing automation to stay ahead and thrive.

Benefits of marketing automation to boost your efforts

First, let’s explore the benefits of marketing automation to supercharge your efforts:

Marketing automation simplifies repetitive tasks, saving time and effort.

With automated workflows, processes become more efficient, leading to better productivity. For instance, automation not only streamlines tasks like email campaigns but also optimizes website speed, ensuring a seamless user experience. A faster website not only enhances customer satisfaction but also positively impacts search engine rankings, driving more organic traffic and ultimately boosting conversions.

Automation allows for precise targeting, reaching the right audience with personalized messages.

A great example of an automated workflow is the Pipedrive & WhatsApp integration, in which an automated welcome message pops up on a potential customer’s WhatsApp within seconds of them expressing interest in your business.

Increases ROI

By optimizing campaigns and reducing manual labor, automation can significantly improve return on investment.

Leveraging automation enables businesses to scale their marketing efforts effectively, driving growth and success. Additionally, incorporating lead scoring into automated marketing processes can streamline the identification of high-potential prospects, further optimizing resource allocation and maximizing conversion rates.

Harnessing the power of marketing automation can revolutionize your marketing strategy, leading to increased efficiency, higher returns, and sustainable growth in today’s competitive market. So, why wait? Start automating your marketing efforts today and propel your business to new heights, especially if you have just learned how to create an online business.

How marketing automation can simplify operations and increase efficiency

Understanding the Change

Marketing automation has evolved significantly over time, from basic email marketing campaigns to sophisticated platforms that can manage entire marketing strategies. This progress has been fueled by advances in technology, particularly artificial intelligence (AI) and machine learning, making automation smarter and more adaptable.

One of the main reasons for this shift is the vast amount of data available to marketers today. From understanding customer demographics to analyzing behavior, the sheer volume of data is staggering. Marketing automation platforms use this data to create highly personalized and targeted campaigns, allowing businesses to connect with their audience on a deeper level.

The Emergence of AI-Powered Automation

In the future, AI-powered automation will play an even bigger role in marketing strategies. AI algorithms can analyze huge amounts of data in real-time, helping marketers identify trends, predict consumer behavior, and optimize campaigns as they go. This agility and responsiveness are crucial in today’s fast-moving digital world, where opportunities come and go in the blink of an eye. For example, we’re witnessing the rise of AI-based tools from AI website builders, to AI logo generators and even more, showing that we’re competing with time and efficiency.

Combining AI-powered automation with WordPress management services streamlines marketing efforts, enabling quick adaptation to changing trends and efficient management of online presence.

Moreover, AI can take care of routine tasks like content creation, scheduling, and testing, giving marketers more time to focus on strategic activities. By automating these repetitive tasks, businesses can work more efficiently, leading to better outcomes. AI can create social media ads tailored to specific demographics and preferences, ensuring that the content resonates with the target audience. With the help of an AI ad maker tool, businesses can efficiently produce high-quality advertisements that drive engagement and conversions across various social media platforms.

Personalization on a Large Scale

Personalization has always been important in marketing, and automation is making it possible on a larger scale. By using AI and machine learning, marketers can create tailored experiences for each customer based on their preferences, behaviors, and past interactions with the brand.

This level of personalization not only boosts customer satisfaction but also increases engagement and loyalty. When consumers feel understood and valued, they are more likely to become loyal customers and brand advocates. As automation technology continues to evolve, we can expect personalization to become even more advanced, enabling businesses to forge deeper connections with their audience. If your company has tiny homes for sale in California, for example, personalized experiences will help each customer find their perfect fit, fostering lasting connections.

Integration Across Channels

Another trend shaping the future of marketing automation is the integration of multiple channels into a cohesive strategy. Today’s consumers interact with brands across various touchpoints, from social media and email to websites and mobile apps. Marketing automation platforms that can seamlessly integrate these channels and deliver consistent messaging will have a competitive edge. When creating a comparison website, for instance, it’s important to ensure that the platform effectively aggregates data from diverse sources and presents it in a user-friendly manner, empowering consumers to make informed decisions.

Omni-channel integration not only improves the customer experience but also provides marketers with a comprehensive view of the customer journey. By tracking interactions across channels, businesses can gain valuable insights into how consumers engage with their brand, allowing them to refine their marketing strategies for maximum impact. Lastly, integrating SEO services into omni-channel strategies boosts visibility and helps businesses better understand and engage with their customers across different platforms.

The Human Element

While automation offers many benefits, it’s crucial not to overlook the human aspect of marketing. Despite advances in AI and machine learning, there are still elements of marketing that require human creativity, empathy, and strategic thinking.

Successful marketing automation strikes a balance between technology and human expertise. By using automation to handle routine tasks and data analysis, marketers can focus on what they do best – storytelling, building relationships, and driving innovation.

Conclusion

The future of marketing automation looks promising, offering improved efficiency and results for businesses of all sizes.

As AI continues to advance and consumer expectations change, automation will play an increasingly vital role in keeping businesses competitive.

By embracing automation technologies, marketers can simplify processes, deliver more personalized experiences, and ultimately, achieve their business goals more effectively than ever before.


Will Google Buy HubSpot? | Content Marketing Institute

Google + HubSpot. Is it a thing?

This week, a flurry of news came down about Google’s consideration of purchasing HubSpot.

The prospect dismayed some. It delighted others.

But is it likely? Is it even possible? What would it mean for marketers? What does the consideration even mean for marketers?

Well, we asked CMI’s chief strategy advisor, Robert Rose, for his take. Watch this video or read on:

Why Alphabet may want HubSpot

Alphabet, the parent company of Google, apparently is contemplating the acquisition of inbound marketing giant HubSpot.

The potential price could be in the range of $30 billion to $40 billion. That would make it Alphabet’s largest acquisition by far. The current deal holding that title happened in 2011 when it acquired Motorola Mobility for more than $12 billion. It later sold it to Lenovo for less than $3 billion.

If the HubSpot deal happens, it would not be in character with what the classic evil villain has been doing for the past 20 years.

At first glance, you might think the deal would make no sense. Why would Google want to spend three times as much as it’s ever spent to get into the inbound marketing — the CRM and marketing automation business?

At a second glance, it makes a ton of sense.

I don’t know if you’ve noticed, but I and others at CMI spend a lot of time discussing privacy, owned media, and the deprecation of the third-party cookie. I just talked about it two weeks ago. It’s really happening.

All that oxygen being sucked out of the ad tech space presents a compelling case that Alphabet should diversify from third-party data and classic surveillance-based marketing.

Yes, this potential acquisition is about data. HubSpot would give Alphabet the keys to the kingdom of 205,000 business customers — and their customers’ data that almost certainly numbers in the tens of millions. Alphabet would also gain access to the content, marketing, and sales information those customers consumed.

Conversely, the deal would provide an immediate tip of the spear for HubSpot clients to create more targeted programs in the Alphabet ecosystem and upload their data to drive even more personalized experiences on their own properties and connect them to the Google Workspace infrastructure.

When you add in the idea of Gemini, you can start to see how Google might monetize its generative AI tool beyond figuring out how to use it on ads on search results pages.

What acquisition could mean for HubSpot customers

I may be stretching here, but imagine this world. As a Hubspoogle customer, you can access an interface that prioritizes your owned media data (e.g., your website, your e-commerce catalog, your blog) when Google’s Gemini answers a question.

Recent reports also say Google may put up a paywall around the new premium features of its artificial intelligence-powered Search Generative Experience. Imagine this as the new gating for marketing. In other words, users can subscribe to Google’s AI for free, but Hubspoogle customers can access that data and use it to create targeted offers.

The acquisition of HubSpot would immediately make Google Workspace a more robust competitor to Microsoft 365 Office for small- and medium-sized businesses, as they would receive the added capability of inbound marketing.

In the world of rented land where Google is the landlord, though, the government will take notice of the acquisition. There is a but, and it’s a big but, I cannot lie (yes, I just did that): whether this acquisition dance can happen without running afoul of regulators.

Some analysts say it should be no problem. Others say, “Yeah, it wouldn’t go.” Either way, would anybody touch it in an election year? That’s a whole other story.

What marketers should realize

So, what’s my takeaway?

It’s a remote chance that Google will jump on this hard, but stranger things have happened. It would be an exciting disruption in the market.

The sure bet is this. The acquisition conversation — as if you needed more data points — says getting good at owned media to attract and build audiences and using that first-party data to provide better communication and collaboration with your customers are a must.

It’s just a matter of time until Google makes a move. They might just be testing the waters now, but they will move here. But no matter what they do, if you have your customer data house in order, you’ll be primed for success.

Cover image by Joseph Kalinowski/Content Marketing Institute

5 Psychological Tactics to Write Better Emails

Welcome to Creator Columns, where we bring expert HubSpot Creator voices to the Blogs that inspire and help you grow better.

I’ve tested 100s of psychological tactics on my email subscribers. In this blog, I reveal the five tactics that actually work.

You’ll learn about the email tactic that got one marketer a job at the White House.

You’ll learn how I doubled my 5-star reviews with one email, and why one strange email from Barack Obama broke all records for donations.

Imagine writing an email that’s so effective it lands you a job at the White House.

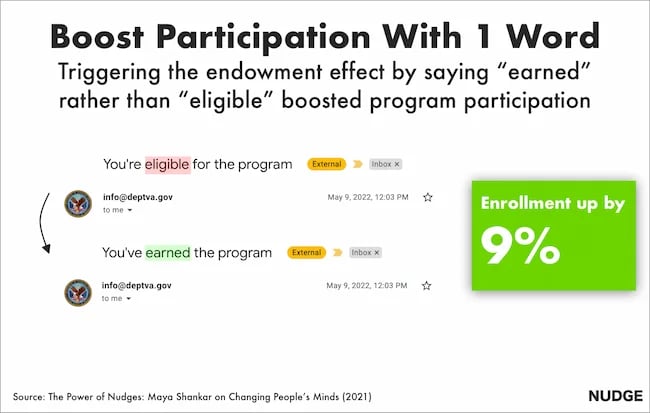

Well, that’s what happened to Maya Shankar, a PhD cognitive neuroscientist. In 2014, the Department of Veterans Affairs asked her to help increase signups in their veteran benefit scheme.

Maya had a plan. She was well aware of a cognitive bias that affects us all—the endowment effect. This bias suggests that people value items higher if they own them. So, she changed the subject line in the Veterans’ enrollment email.

Previously it read:

- Veterans, you’re eligible for the benefit program. Sign up today.

She tweaked one word, changing it to:

- Veterans, you’ve earned the benefits program. Sign up today.

This tiny tweak had a big impact. The number of veterans enrolling in the program went up by 9%. And Maya landed a job working at the White House.

Inspired by these psychological tweaks to emails, I started to run my own tests.

Alongside my podcast Nudge, I’ve run 100s of email tests on my 1,000s of newsletter subscribers.

Here are the five best tactics I’ve uncovered.

1. Show readers what they’re missing.

Behavioral scientists Daniel Kahneman (who later won a Nobel Prize) and Amos Tversky uncovered a principle called loss aversion.

Loss aversion means that losses feel more painful than equivalent gains feel good. In real-world terms, losing $10 feels worse than gaining $10 feels good. And I wondered if this simple nudge could help increase the number of my podcast listeners.

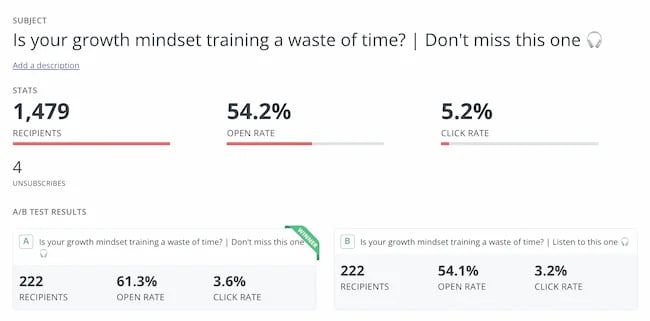

For my test, I tweaked the subject line of the email announcing an episode. The control read:

“Listen to this one”

In the loss aversion variant it read:

“Don’t miss this one”

It’s a very subtle use of loss aversion. Rather than asking someone to listen, I’m saying they shouldn’t miss out. And it worked. It increased the open rate by 13.3% and the click rate by 12.5%. Plus, it was a small change that cost me nothing at all.

2. People follow the crowd.

In general, humans like to follow the masses. When picking a dish, we’ll often opt for the most popular. When choosing a movie to watch, we tend to pick the box office hit. It’s a well-known psychological bias called social proof.

I’ve always wondered if it works for emails. So, I set up an A/B experiment with two subject lines. Both promoted my show, but one contained social proof.

The control read: New Nudge: Why Brands Should Flaunt Their Flaws

The social proof variant read: New Nudge: Why Brands Should Flaunt Their Flaws (100,000 Downloads)

I hoped that by highlighting the episode’s high number of downloads, I’d encourage more people to listen. Fortunately, it worked.

The open rate went from 22% to 28% for the social proof version, and the click rate (the number of people actually listening to the episode) doubled.
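
When reading results like these, it helps to separate the absolute change (in percentage points) from the relative lift. The short sketch below does that arithmetic for the 22% and 28% open rates reported above; the function name `lift` is purely illustrative and not part of any email tool.

```python
def lift(control_rate: float, variant_rate: float) -> tuple[float, float]:
    """Return (absolute lift in percentage points, relative lift in percent)."""
    difference = variant_rate - control_rate
    absolute_points = difference * 100
    relative_percent = difference / control_rate * 100
    return absolute_points, relative_percent


# Open rates reported for the social proof test: 22% (control) vs. 28% (variant).
abs_pts, rel_pct = lift(0.22, 0.28)
print(f"{abs_pts:.1f} percentage points, {rel_pct:.1f}% relative lift")
```

A six-point rise on a 22% base works out to a relative lift of roughly 27%, which is why the same result can sound small or large depending on how it is reported.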

3. Praise loyal subscribers.

The consistency principle suggests that people are likely to stick to behaviors they’ve previously taken. A retired taxi driver won’t swap his car for a bike. A hairdresser won’t change to a cheap shampoo. We like to stay consistent with our past behaviors.

I decided to test this in an email.

For my test, I attempted to encourage my subscribers to leave a review for my podcast. I sent emails to 400 subscribers who had been following the show for a year.

The control read: “Could you leave a review for Nudge?”

The consistency variant read: “You’ve been following Nudge for 12 months, could you leave a review?”

My hypothesis was simple. If I remind people that they’ve consistently supported the show, they’ll be more likely to leave a review.

It worked.

The open rate on the consistency version of the email was 7% higher.

But more importantly, the click rate (the number of people who actually left a review) was almost 2x higher for the consistency version. Merely telling people they’d been a fan for a while doubled my reviews.

4. Showcase scarcity.

We prefer scarce resources. Taylor Swift gigs sell out in seconds not just because she’s popular, but because her tickets are hard to come by.

Swifties aren’t the first to experience this. Back in 1975, three researchers proved how powerful scarcity is. For the study, the researchers occupied a cafe. On alternating weeks they’d make one small change in the cafe.

On some weeks they’d ensure the cookie jar was full.

On other weeks they’d ensure the cookie jar only contained two cookies (never more or less).

In other words, sometimes the cookies looked abundantly available. Sometimes they looked like they were almost out.

This changed behavior. Customers who saw the two-cookie jar bought 43% more cookies than those who saw the full jar.

It sounds too good to be true, so I tested it for myself.

I sent an email to 260 subscribers offering free access to my Science of Marketing course for one day only.

In the control, the subject line read: “Free access to the Science of Marketing course”

For the scarcity variant it read: “Only Today: Get free access to the Science of Marketing Course | Only one enrol per person.”

130 people received the first email, 130 received the second. And the result was almost as good as the cookie finding. The scarcity version had a 15.1% higher open rate.
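
With only 130 recipients per variant, it is worth checking whether a difference like this could be down to chance. Below is a minimal sketch of a two-proportion z-test using only the Python standard library. The article gives the sample sizes but not the raw open counts, so the counts of 40 and 46 opens are hypothetical, chosen only to give a relative lift of about 15%; the function name is also an assumption made for illustration.

```python
import math


def two_proportion_z_test(opens_a: int, n_a: int, opens_b: int, n_b: int) -> tuple[float, float]:
    """Return (z statistic, two-sided p-value) for the difference in open rates."""
    rate_a = opens_a / n_a
    rate_b = opens_b / n_b
    # Pooled open rate under the null hypothesis that both variants perform the same.
    pooled = (opens_a + opens_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("Open rates of exactly 0% or 100% cannot be tested this way.")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 40 of 130 opened the control, 46 of 130 opened the variant.
z, p_value = two_proportion_z_test(40, 130, 46, 130)
print(f"z = {z:.2f}, p = {p_value:.2f}")
```

With these made-up counts, the p-value lands well above the conventional 0.05 threshold, which suggests a lift of this size on a list this small is best treated as a promising hint and retested on a larger audience.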

5. Spark curiosity.

All of the email tips I’ve shared have only been tested on my relatively small audience. So, I thought I’d end with a tip that was tested on the masses.

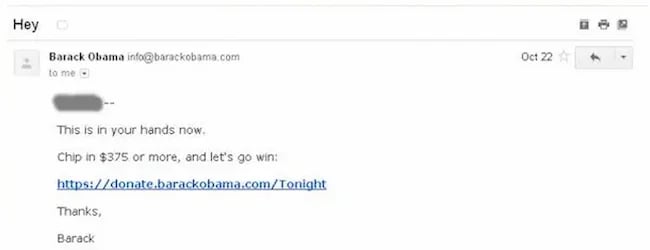

Back in 2012, Barack Obama and his campaign team sent hundreds of emails to raise funds for his campaign.

Of the $690 million he raised, most came from direct email appeals. But there was one email, according to ABC News, that was far more effective than the rest. And it was an odd one.

The email that drew in the most cash had a strange subject line. It simply said “Hey.”

The actual email asked the reader to donate, sharing all the expected reasons, but the subject line was different.

It sparked curiosity. It got people wondering: Is Obama saying “Hey” just to me?

Readers were curious and couldn’t help but open the email. According to ABC it was “the most effective pitch of all.”

Because more people opened, it raised more money than any other email. The bias Obama used here is the curiosity gap. We’re more likely to act on something when our curiosity is piqued.

Loss aversion, social proof, consistency, scarcity and curiosity—all these nudges have helped me improve my emails. And I reckon they’ll work for you.

It’s not guaranteed, of course. Many might fail. But running some simple A/B tests for your emails is cost-free, so why not try it out?

This blog is part of Phill Agnew’s Marketing Cheat Sheet series where he reveals the scientifically proven tips to help you improve your marketing. To learn more, listen to his podcast Nudge, a proud member of the HubSpot Podcast Network.
