The new app is called watchGPT and, as I’ve already hinted, it gives you access to ChatGPT from your Apple Watch. Now the $10,000 question (or, more accurately, the $3.99 question, since that is the app’s one-time cost) is why having ChatGPT on your wrist is remotely necessary, so let’s dive into what exactly the app can do.

NEWS

Google Update November 2019

There is substantial evidence that an unannounced Google update is underway. This update has been affecting sites across a wide range of niches. Most of the feedback is negative, although there are winners mixed in, including some in the spam community.

Impact Felt Across Many Industries

Forensic SEO expert Alan Bleiweiss (@AlanBleiweiss) noted in a tweet that this update affected sites in many different industries. He tweeted:

“I monitor 47 sites

- Mental Health Knowledge base & Directory – up 20%

- Travel booking – up 14%

- Travel booking – up 25%

- Recipes – up 12%

- B to C eCom – up 20%

- Tech news – down 20%

- Skin care affiliate – down 48%

- Alternative health – not impacted

- Other Recipe sites – not impacted”

Note: The double listing for travel sites is not a typo. It’s a reference to two travel sites.

Recipe Bloggers Report Update Effects

Google does not target specific niches. However, recipe bloggers form a highly organized community, so when something big happens, the community’s voice is amplified.

That is why the recipe blogger community was quick to notice this update. As of earlier today, a growing list of 47 recipe blogs had reported losses from this update.

Casey Markee of MediaWyse (@MediaWyse), who specializes in food blog SEO, said this about those 47 sites that were suffering from Google’s November 2019 update:

“All of them, big sites, small sites, medium sites, all are showing like 30%+ drops. I know it’s tough on bloggers to see drops and think “OMG I need to make some dramatic changes.”

But that’s like throwing darts at a board, blindfolded, in the dark, while underwater. Definitely need to WAIT for more data and until this “update” (or whatever it is) has fully rolled-out.”

I agree, it’s best to wait to hear what Google says about this update. I took a quick look at two of the recipe sites that lost traffic, and both of them had high keyword densities.

For example, I extracted the heading outlines (H1, H2) of both sites, and both were hitting their target keywords hard in every single heading. A keyword density report on two sample pages revealed that the target keywords made up as much as 5% of all the words on the page.
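The article does not say which tool produced that density report. As an assumption, the minimal Python sketch below uses one common definition: the number of words belonging to occurrences of the target phrase, divided by the total number of words on the page. The function name and the simple tokenizer are hypothetical, and real SEO tools may tokenize and count differently.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Share of words in `text` that belong to occurrences of `keyword`.

    Assumed definition (hypothetical, not any specific tool's):
    density = occurrences * words_in_keyword / total_words.
    """
    # Naive tokenizer: lowercase runs of letters, digits, and apostrophes.
    words = re.findall(r"[a-z0-9']+", text.lower())
    kw = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not kw:
        return 0.0
    n = len(kw)
    # Slide a window of n words over the page and count exact phrase matches.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == kw)
    return hits * n / len(words)


if __name__ == "__main__":
    # Hypothetical 200-word page that repeats a two-word phrase five times.
    page = "banana bread " * 5 + "filler " * 190
    print(f"{keyword_density(page, 'banana bread'):.1%}")
```

Under this definition, the hypothetical page scores 5 × 2 / 200 = 0.05, or 5%, the same level the report found on the sample pages.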

Travel Bloggers

Leslie Harvey of Trips with Tykes (@TripsWithTykes) tweeted:

“Seeing it reported heavily in the travel blog world too – dozens of high traffic travel blogs are significantly down from anecdotes I’m hearing.”

Many First Time Google Update Casualties

A curious aspect of this update is that many publishers report they had never been hit by a previous update and are surprised to have finally been hit by the November 2019 Google update. As a representative example, one publisher tweeted:

“I’m down 25% organic in the last two days. I’ve seen positive impacts from every previous algo update.”

Jim @ UncoveringPA (@UncoveringPA) tweeted:

“Definitely frustrating. First time I’ve ever gotten a big hit from a Google update. Down 20-25%. For my local site, it seems mostly that more official sites got boosted over mine, even though mine has better and more in depth content.”

Facebook-Hat Observations

Members of the private SEO Signals Lab Facebook group reported similar experiences.

For example, one member stated that his seven-year-old site, which had been in the top three for years, suddenly dropped to pages two and three.

Perhaps of interest, or maybe not, this member reported having used content analysis software to analyze the SERPs and understand what kinds of words rank for his queries. Whether or not that’s a clue, he is one of many people who report never having suffered from a previous Google update and who are now having a difficult time with this one.

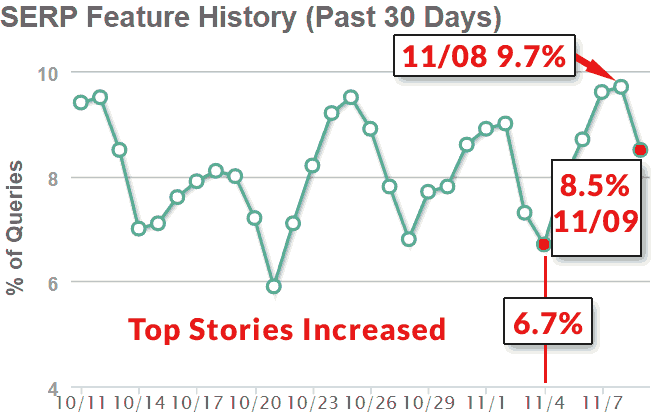

Another Facebook member reported a 20% change in traffic but with little change in keyword positions. That kind of result is sometimes caused by an increase in People Also Ask, Featured Snippets, Carousels, Top Stories and other “helpful” Google features that tend to push organic results down.

According to Moz’s MozCast, the biggest changes have been in Top Stories, with a spike that began on November 5, 2019, and peaked on Thursday, November 7, when evidence of an update was beginning to surge.

Could that be causing some of the traffic declines? It’s hard to say; these are the first days of the update, and information is still trickling in.

In general, more people in the SEO Signals Lab group are reporting ranking losses than increases. On Twitter, too, far more people are reporting losses than gains.

Gray Hat Update Experience

Members of the self-described “gray hat” Proper SEO Facebook group are generally reporting positive results. This group is focused on Private Blog Network (PBN) links. The group’s admin posted an AccuRanker graph showing that all his keywords were on a steep upward trajectory, with one member saying his graph resembled a hockey stick.

Another member remarked that his sales were up about 30% week over week, with Friday tracking at a 70% improvement.

Several members noted huge gains in Google Local results.

Overall, the gray hat responses are more positive than negative.

Black Hat Update Reaction

Over at the Black Hat World forums, members are discussing dramatic changes to their rankings. Some are reporting losses as high as 40%.

A few, however, are reporting improvements from Google’s November update.

According to one member:

“There must be something going on, I’ve seen 2 people on amazon affiliate forum stating that their traffic took a huge nose dive. Mine is up 30% yesterday and today.”

In another discussion, a black hat member commented:

“Just today I saw massive improvements finally… “

No-Hat SEO Observations on Google Update

Over on the WebmasterWorld forums, members started noticing changes on Wednesday, November 6. One member noted on Thursday that they hadn’t seen any conversions in 24 hours. Another member noted:

“I’m seeing serps filled with malware and sneaky redirects from .cf .tk .ml .gq .ga domains.

Google can’t tell apart anymore a legitimate site and a spam site. This is serious. The anti-spam team lost the battle.”

That observation seems to affirm the positive reports seen in black hat and gray hat communities.

Member Paperchaser said:

“Entertainment industry here, I see lot of movement on my end, lot of sites losing their ranking heavily and couple hours later everything gets back to where they were.”

Fishing Hat SEO Reaction to Update

Someone asked me if I’m a white hat SEO. My response was that I’m more of a camo fishing hat type of SEO. In other words, I color within the lines while doing what needs to be done to get results.

That means understanding what Google is trying to accomplish and working within those parameters. It’s not about “tricking” Google. It’s about knowing the lines and coloring within those lines.

It’s also about being critical of SEO information that is unsupported by any kind of Google research or patents. For example, for the past year there has been a line of thought that it’s important to add author bios to websites. That’s been debunked by Googlers like John Mueller.

Many responses to recent updates have proven false because they were based on poor sources of information or poor reasoning. Examples of today’s poor advice include:

- The advice to build an authors page

- The advice to display expert accreditation on the site

- The advice to improve E-A-T (Google has confirmed there is no E-A-T ranking factor)

All of that advice has been debunked by Gary Illyes and John Mueller.

That poor advice came from using Google’s Search Quality Raters Guidelines as a way to understand Google’s algorithm.

The Search Quality Raters Guidelines can be used to set goals for what a website can be and from those goals one can create strategies to achieve those high standards.

But that document was never a road map of Google’s algorithm. It was a road map for how to rate a website, nothing more. Those are two different things, which is why the document is useless for trying to understand why a site is no longer ranking: there are no ranking secrets in the Search Quality Raters Guidelines.

Google Does Not Target Individual Niches

Google rarely targets an industry. In the past, some SEOs promoted the idea that Google was targeting medical sites. They were wrong, and now we are stuck with the ineptly named Medic Update to remind the SEO industry that Google does not target specific industries.

Google is Not Targeting Recipe Blogs

Anyone who says that Google is targeting the recipe blogs is wrong. This update has affected publishers from all niches. It’s a broad update that affects a wide range of site topics.

Takeaway About Google’s November Update

The takeaway, then, is to wait until we hear from Google.

Most of the past updates have focused on relevance through understanding user queries better, understanding what web pages are about, and understanding the link signal better.

It could be that Google is rolling out a combination of changes related to content, queries and backlinks. That’s a safe bet, but at this point we simply don’t know. To say that Google is targeting specific kinds of sites or types of content is to skate on thin ice.

Facebook Faces Yet Another Outage: Platform Encounters Technical Issues Again

Updated: It seems that today’s issues with Facebook haven’t affected as many users as the last outage. A smaller group of people appears to be impacted this time around, which is a relief compared to the larger incident before. Nevertheless, it’s still frustrating for those affected, and hopefully the Facebook team will resolve the issues soon.

Facebook had another outage today (March 20, 2024). According to Downdetector, a website that tracks when other websites are not working, many people had trouble using Facebook.

This isn’t the first time Facebook has had issues. Just a little while ago, another outage stopped people from using the site. Today, when people tried to use Facebook, it didn’t work as it should. People couldn’t see their friends’ posts, and sometimes the website wouldn’t even load.

Downdetector, which watches out for problems on websites, showed that lots of people were having trouble with Facebook. People from all over the world said they couldn’t use the site, and they were not happy about it.

When websites like Facebook have problems, it affects a lot of people. It’s not just about not being able to see posts or chat with friends. It can also impact businesses that use Facebook to reach customers.

Since Meta, Facebook’s parent company, also owns Messenger and Instagram, the problems with Facebook meant that people had trouble using these apps too. That made the situation even more frustrating for the many users who rely on these apps to stay connected with others.

This latest outage makes one thing obvious: the internet is always changing, and even big websites like Facebook can have problems. As people wait for Facebook to fix the issue, the outage shows how easily things can go wrong online. It’s a good reminder to have backup plans for staying connected online, just in case something like this happens again.

NEWS

We asked ChatGPT what Google (GOOG) stock price will be in 2030

Investors in Alphabet Inc. (NASDAQ: GOOG) stock have reaped significant benefits from the company’s robust financial performance over the last five years. Google’s dominance in the online advertising market has been a key driver of the company’s consistent revenue growth and impressive profit margins.

In addition, Google has expanded its operations into related fields such as cloud computing and artificial intelligence. These areas show great promise as future growth drivers, making Alphabet increasingly attractive to investors. Notably, Alphabet’s stock price has been rising due to investor interest in the company’s recent initiatives in the fast-developing field of artificial intelligence (AI), such as adding generative AI features to Gmail and Google Docs.

However, predicting the future stock price of a corporation like Google involves many factors. With this in mind, Finbold asked the artificial intelligence tool ChatGPT to suggest a likely price range for GOOG stock by 2030. Although the tool was unable to give a definitive price range, it did note the following:

“Over the long term, Google has a track record of strong financial performance and has shown an ability to adapt to changing market conditions. As such, it’s reasonable to expect that Google’s stock price may continue to appreciate over time.”

GOOG stock price prediction

When estimating a future price range, it is essential to consider a variety of sources in addition to an AI chat tool, including deep learning forecasting algorithms and stock market experts.

To compare with ChatGPT’s answer, Finbold collected forecasts for Google’s stock price at the end of 2030 from CoinPriceForecast, a finance prediction tool that uses machine learning.

According to the most recent long-term estimate, which Finbold obtained on March 20, Google’s share price will rise above $200 in 2030 and reach $247 by the end of that year, which would represent a 141% gain from the current price.

Over a nearer-term time frame, most Wall Street analysts rate Google a “strong buy.” Specifically, 36 of the 48 analysts recommend a “strong buy,” seven recommend a “buy,” and the remaining five rate it a “hold.”

The average analyst price target for Alphabet stock over the last three months has been $125.32, a 22.31% upside from its current price. The highest price forecast for the next year is $160, a 56.16% gain from the stock’s current price of $102.46.
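The percentages quoted in this section are simple ratios against the $102.46 share price: upside = target ÷ current price − 1. The minimal Python sketch below reproduces them from the figures in the article. The function name is illustrative rather than from any finance library, and the calculation ignores dividends and the time each forecast takes to play out.

```python
def upside_pct(target: float, current: float) -> float:
    """Percentage change from `current` to `target`, rounded to two decimals."""
    return round((target / current - 1) * 100, 2)


CURRENT_PRICE = 102.46  # GOOG share price quoted in the article

# Targets taken from the article: analyst average, analyst high, 2030 forecast.
TARGETS = [
    ("average analyst target", 125.32),
    ("highest one-year forecast", 160.00),
    ("end-of-2030 forecast", 247.00),
]

if __name__ == "__main__":
    for label, target in TARGETS:
        print(f"{label}: {upside_pct(target, CURRENT_PRICE)}%")
```

The output matches the 22.31%, 56.16% and roughly 141% figures reported above.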

While the outlook for Google stock may be positive, some potential challenges and risks could affect its performance, including competition from ChatGPT itself.

Disclaimer: The content on this site should not be considered investment advice. Investing is speculative. When investing, your capital is at risk.

NEWS

This Apple Watch app brings ChatGPT to your wrist — here’s why you want it

ChatGPT feels like it is everywhere at the moment; the AI-powered tool is rapidly starting to feel like the internet-connected home devices that leave you wondering if your flower pot really needed Bluetooth. However, after hearing about a new Apple Watch app that brings ChatGPT to your favorite wrist computer, I’m actually convinced this one is worth checking out.
