SEO

11 Disadvantages Of ChatGPT Content

ChatGPT produces content that is comprehensive and plausibly accurate.

But researchers, artists, and professors warn of shortcomings that degrade the quality of the content.

In this article, we’ll look at 11 disadvantages of ChatGPT content. Let’s dive in.

1. Phrase Usage Makes It Detectable As Non-Human

Researchers studying how to detect machine-generated content have discovered patterns that make it sound unnatural.

One of these quirks is how AI struggles with idioms.

An idiom is a phrase or saying with a figurative meaning attached to it, for example, “every cloud has a silver lining.”

A lack of idioms within a piece of content can be a signal that the content is machine-generated – and this can be part of a detection algorithm.

This is what the 2022 research paper Adversarial Robustness of Neural-Statistical Features in Detection of Generative Transformers says about this quirk in machine-generated content:

“Complex phrasal features are based on the frequency of specific words and phrases within the analyzed text that occur more frequently in human text.

…Of these complex phrasal features, idiom features retain the most predictive power in detection of current generative models.”

This inability to use idioms contributes to making ChatGPT output sound and read unnaturally.
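As a rough illustration of how an idiom feature could feed a detector, the sketch below counts known idioms per 100 words of text. This is not the method from the cited paper: the idiom list, the function name idiom_density, and the per-100-words scoring are all assumptions made for the example.

```python
import re

# Hypothetical mini-lexicon; a real detector would need a far larger idiom list.
IDIOMS = (
    "every cloud has a silver lining",
    "piece of cake",
    "break the ice",
    "hit the nail on the head",
)


def idiom_density(text: str, idioms=IDIOMS) -> float:
    """Return idiom occurrences per 100 words (0.0 for empty text)."""
    # Lowercase and collapse whitespace so idioms split across lines still match.
    normalized = re.sub(r"\s+", " ", text.lower())
    words = re.findall(r"[a-z']+", normalized)
    if not words:
        return 0.0
    hits = sum(normalized.count(idiom) for idiom in idioms)
    return 100.0 * hits / len(words)
```

A low score on its own proves nothing; under these assumptions it would be one weak signal to combine with others.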

2. ChatGPT Lacks Ability For Expression

An artist commented on how the output of ChatGPT mimics what art is, but lacks the actual qualities of artistic expression.

Expression is the act of communicating thoughts or feelings.

ChatGPT output doesn’t contain expressions, only words.

It cannot produce content that touches people emotionally on the same level as a human can – because it has no actual thoughts or feelings.

Musical artist Nick Cave, in an article posted to his Red Hand Files newsletter, commented on a ChatGPT lyric that was sent to him, which was created in the style of Nick Cave.

He wrote:

“What makes a great song great is not its close resemblance to a recognizable work.

…it is the breathless confrontation with one’s vulnerability, one’s perilousness, one’s smallness, pitted against a sense of sudden shocking discovery; it is the redemptive artistic act that stirs the heart of the listener, where the listener recognizes in the inner workings of the song their own blood, their own struggle, their own suffering.”

Cave called the ChatGPT lyrics a mockery.

This is the ChatGPT lyric that resembles a Nick Cave lyric:

“I’ve got the blood of angels, on my hands

I’ve got the fire of hell, in my eyes

I’m the king of the abyss, I’m the ruler of the dark

I’m the one that they fear, in the shadows they hark”

And this is an actual Nick Cave lyric (Brother, My Cup Is Empty):

“Well I’ve been sliding down on rainbows

I’ve been swinging from the stars

Now this wretch in beggar’s clothing

Bangs his cup across the bars

Look, this cup of mine is empty!

Seems I’ve misplaced my desires

Seems I’m sweeping up the ashes

Of all my former fires”

It’s easy to see that the machine-generated lyric resembles the artist’s lyric, but it doesn’t really communicate anything.

Nick Cave’s lyrics tell a story that resonates with the pathos, desire, shame, and willful deception of the person speaking in the song. It expresses thoughts and feelings.

It’s easy to see why Nick Cave calls it a mockery.

3. ChatGPT Does Not Produce Insights

An article published in The Insider quoted an academic who noted that academic essays generated by ChatGPT lack insights about the topic.

ChatGPT summarizes the topic but does not offer a unique insight into the topic.

Humans create through knowledge, but also through their personal experience and subjective perceptions.

Professor Christopher Bartel of Appalachian State University is quoted by The Insider as saying that, while a ChatGPT essay may exhibit high grammar qualities and sophisticated ideas, it still lacked insight.

Bartel said:

“They are really fluffy. There’s no context, there’s no depth or insight.”

Insight is the hallmark of a well-done essay and it’s something that ChatGPT is not particularly good at.

This lack of insight is something to keep in mind when evaluating machine-generated content.

4. ChatGPT Is Too Wordy

A research paper published in January 2023 discovered patterns in ChatGPT content that make it less suitable for critical applications.

The paper is titled, How Close is ChatGPT to Human Experts? Comparison Corpus, Evaluation, and Detection.

The research showed that humans preferred answers from ChatGPT in more than 50% of questions answered related to finance and psychology.

But ChatGPT failed at answering medical questions because humans preferred direct answers – something the AI didn’t provide.

The researchers wrote:

“…ChatGPT performs poorly in terms of helpfulness for the medical domain in both English and Chinese.

The ChatGPT often gives lengthy answers to medical consulting in our collected dataset, while human experts may directly give straightforward answers or suggestions, which may partly explain why volunteers consider human answers to be more helpful in the medical domain.”

ChatGPT tends to cover a topic from different angles, which makes it inappropriate when the best answer is a direct one.

Marketers using ChatGPT must take note of this because site visitors requiring a direct answer will not be satisfied with a verbose webpage.

And good luck ranking an overly wordy page in Google’s featured snippets, where a succinct and clearly expressed answer that can work well in Google Voice may have a better chance to rank than a long-winded answer.

OpenAI, the makers of ChatGPT, acknowledges that giving verbose answers is a known limitation.

The announcement article by OpenAI states:

“The model is often excessively verbose…”

The ChatGPT bias toward providing long-winded answers is something to be mindful of when using ChatGPT output, as you may encounter situations where shorter and more direct answers are better.

5. ChatGPT Content Is Highly Organized With Clear Logic

ChatGPT has a writing style that is not only verbose but also tends to follow a template that gives the content a unique style that isn’t human.

This inhuman quality is revealed in the differences between how humans and machines answer questions.

The movie Blade Runner has a scene featuring a series of questions designed to reveal whether the subject answering the questions is a human or an android.

These questions were part of a fictional test called the “Voight-Kampff test.”

One of the questions is:

“You’re watching television. Suddenly you realize there’s a wasp crawling on your arm. What do you do?”

A normal human response would be to say something like they would scream, walk outside and swat it, and so on.

But when I posed this question to ChatGPT, it offered a meticulously organized answer that summarized the question and then offered logical multiple possible outcomes – failing to answer the actual question.

Screenshot Of ChatGPT Answering A Voight-Kampff Test Question

The answer is highly organized and logical, giving it a highly unnatural feel, which is undesirable.

6. ChatGPT Is Overly Detailed And Comprehensive

ChatGPT was trained in a way that rewarded the machine when humans were happy with the answer.

The human raters tended to prefer answers that had more details.

But sometimes, such as in a medical context, a direct answer is better than a comprehensive one.

What that means is that the machine needs to be prompted to be less comprehensive and more direct when those qualities are important.

From OpenAI:

“These issues arise from biases in the training data (trainers prefer longer answers that look more comprehensive) and well-known over-optimization issues.”

7. ChatGPT Lies (Hallucinates Facts)

The above-cited research paper, How Close is ChatGPT to Human Experts?, noted that ChatGPT has a tendency to lie.

It reports:

“When answering a question that requires professional knowledge from a particular field, ChatGPT may fabricate facts in order to give an answer…

For example, in legal questions, ChatGPT may invent some non-existent legal provisions to answer the question.

…Additionally, when a user poses a question that has no existing answer, ChatGPT may also fabricate facts in order to provide a response.”

The Futurism website documented instances where machine-generated content published on CNET was wrong and full of “dumb errors.”

CNET should have had an idea this could happen, because OpenAI published a warning about incorrect output:

“ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers.”

CNET claims to have submitted the machine-generated articles to human review prior to publication.

A problem with human review is that ChatGPT content is designed to sound persuasively correct, which may fool a reviewer who is not a topic expert.

8. ChatGPT Is Unnatural Because It’s Not Divergent

The research paper, How Close is ChatGPT to Human Experts? also noted that human communication can have indirect meaning, which requires a shift in topic to understand it.

ChatGPT is too literal, which causes the answers to sometimes miss the mark because the AI overlooks the actual topic.

The researchers wrote:

“ChatGPT’s responses are generally strictly focused on the given question, whereas humans’ are divergent and easily shift to other topics.

In terms of the richness of content, humans are more divergent in different aspects, while ChatGPT prefers focusing on the question itself.

Humans can answer the hidden meaning under the question based on their own common sense and knowledge, but the ChatGPT relies on the literal words of the question at hand…”

Humans are better able to diverge from the literal question, which is important for answering “what about” type questions.

For example, if I ask:

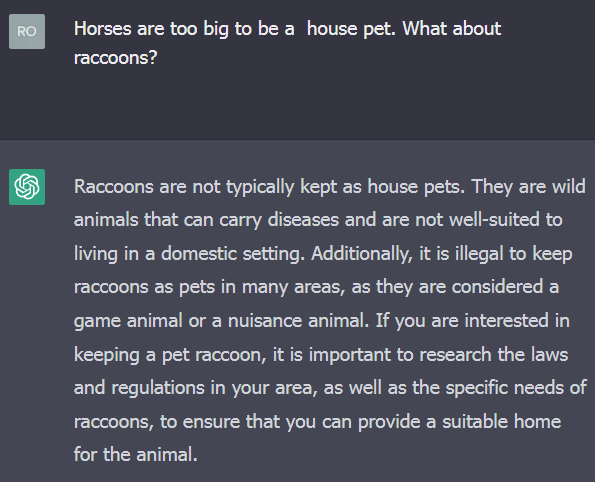

“Horses are too big to be a house pet. What about raccoons?”

The above question is not asking if a raccoon is an appropriate pet. The question is about the size of the animal.

ChatGPT focuses on the appropriateness of the raccoon as a pet instead of focusing on the size.

Screenshot of an Overly Literal ChatGPT Answer

Screenshot from ChatGPT, January 2023

9. ChatGPT Contains A Bias Towards Being Neutral

The output of ChatGPT is generally neutral and informative. It’s a bias in the output that can appear helpful but isn’t always.

The research paper we just discussed noted that neutrality is an unwanted quality when it comes to legal, medical, and technical questions.

Humans tend to pick a side when offering these kinds of opinions.

10. ChatGPT Is Biased To Be Formal

ChatGPT output has a bias that prevents it from loosening up and answering with ordinary expressions. Instead, its answers tend to be formal.

Humans, on the other hand, tend to answer questions with a more colloquial style, using everyday language and slang – the opposite of formal.

ChatGPT doesn’t use abbreviations like GOAT or TL;DR.

The answers also lack instances of irony, metaphors, and humor, which can make ChatGPT content overly formal for some content types.

The researchers write:

“…ChatGPT likes to use conjunctions and adverbs to convey a logical flow of thought, such as “In general”, “on the other hand”, “Firstly,…, Secondly,…, Finally” and so on.”
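To make the formality signal concrete, here is a minimal sketch that measures how often the connectives quoted by the researchers appear in a passage. The function name connective_rate and the per-100-words scoring are assumptions for illustration, not something the paper specifies.

```python
import re

# Connectives taken from the paper excerpt; the scoring rule is an assumption.
CONNECTIVES = ("in general", "on the other hand", "firstly", "secondly", "finally")


def connective_rate(text: str, phrases=CONNECTIVES) -> float:
    """Return connective occurrences per 100 words (0.0 for empty text)."""
    lowered = text.lower()
    words = re.findall(r"[a-z']+", lowered)
    if not words:
        return 0.0
    # Word boundaries keep a phrase from matching inside a longer word.
    hits = sum(
        len(re.findall(r"\b" + re.escape(phrase) + r"\b", lowered))
        for phrase in phrases
    )
    return 100.0 * hits / len(words)
```

An editor could run this on a draft and rewrite passages that score unusually high, assuming a more colloquial tone is the goal.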

11. ChatGPT Is Still In Training

ChatGPT is currently still in the process of training and improving.

OpenAI recommends that all content generated by ChatGPT should be reviewed by a human, listing this as a best practice.

OpenAI suggests keeping humans in the loop:

“Wherever possible, we recommend having a human review outputs before they are used in practice.

This is especially critical in high-stakes domains, and for code generation.

Humans should be aware of the limitations of the system, and have access to any information needed to verify the outputs (for example, if the application summarizes notes, a human should have easy access to the original notes to refer back).”

Unwanted Qualities Of ChatGPT

It’s clear that there are many issues with ChatGPT that make it unfit for unsupervised content generation. It contains biases and fails to create content that feels natural or contains genuine insights.

Further, its inability to feel or author original thoughts makes it a poor choice for generating artistic expressions.

Users should apply detailed prompts in order to generate content that is better than the default content it tends to output.

Lastly, human review of machine-generated content is not always enough, because ChatGPT content is designed to appear correct, even when it’s not.

That means it’s important that human reviewers are subject-matter experts who can discern between correct and incorrect content on a specific topic.

Featured image by Shutterstock/fizkes

SEO

Google Clarifies Vacation Rental Structured Data

Google’s structured data documentation for vacation rentals was recently updated to require more specific data in a change that is more of a clarification than it is a change in requirements. This change was made without any formal announcement or notation in the developer pages changelog.

Vacation Rentals Structured Data

These specific structured data types make vacation rental information eligible for rich results that are specific to these kinds of rentals. However, they are not available to all websites. Vacation rental owners are required to be connected to a Google Technical Account Manager and have access to the Google Hotel Center platform.

VacationRental Structured Data Type Definitions

The primary changes were made to the structured data property type definitions where Google defines what the required and recommended property types are.

The changes to the documentation are in the section governing the Recommended properties and represent a clarification of the recommendations rather than a change in what Google requires.

Address Schema.org property

This is a subtle change but it’s important because it now represents a recommendation that requires more precise data.

This is what was recommended before:

"streetAddress": "1600 Amphitheatre Pkwy."

This is what it now recommends:

"streetAddress": "1600 Amphitheatre Pkwy, Unit 6E"

Address Property Change Description

The most substantial change is to the description of what the “address” property is, becoming more descriptive and precise about what is recommended.

The description before the change:

PostalAddress

Information about the street address of the listing. Include all properties that apply to your country.

The description after the change:

PostalAddress

The full, physical location of the vacation rental.

Provide the street address, city, state or region, and postal code for the vacation rental. If applicable, provide the unit or apartment number.

Note that P.O. boxes or other mailing-only addresses are not considered full, physical addresses.

This is repeated in the section for the address.streetAddress property.

This is what it recommended before:

address.streetAddress Text

The full street address of your vacation listing.

And this is what it recommends now:

address.streetAddress Text

The full street address of your vacation listing, including the unit or apartment number if applicable.
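The clarified recommendation can be sketched as a JSON-LD fragment built in Python. The property names (streetAddress, addressLocality, addressRegion, postalCode, addressCountry) are standard schema.org PostalAddress properties; the helper name vacation_rental_address and the city, state, postal code, and country values are illustrative assumptions. The fragment leaves out the other properties Google's documentation lists for VacationRental.

```python
import json


def vacation_rental_address(street, city, region, postal_code, country):
    """Build a schema.org PostalAddress dict describing a full physical address."""
    return {
        "@type": "PostalAddress",
        # Include the unit or apartment number in streetAddress when applicable.
        "streetAddress": street,
        "addressLocality": city,
        "addressRegion": region,
        "postalCode": postal_code,
        "addressCountry": country,
    }


# Illustrative values only; this is not a complete VacationRental listing.
listing = {
    "@context": "https://schema.org",
    "@type": "VacationRental",
    "address": vacation_rental_address(
        "1600 Amphitheatre Pkwy, Unit 6E", "Mountain View", "CA", "94043", "US"
    ),
}

print(json.dumps(listing, indent=2))
```

Any markup generated this way should be checked against Google's current vacation rental documentation before it is published.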

Clarification And Not A Change

Although these updates don’t represent a change in Google’s guidance, they are nonetheless important because they state more clearly, and with less ambiguity, what is recommended.

Read the updated structured data guidance:

Vacation rental (VacationRental) structured data

Featured Image by Shutterstock/New Africa

SEO

Google On Hyphens In Domain Names

Google’s John Mueller answered a question on Reddit about why people don’t use hyphens in domain names and whether there is a reason for concern that the asker was missing.

Domain Names With Hyphens For SEO

I’ve been working online for 25 years and I remember when using hyphens in domains was something that affiliates did for SEO when Google was still influenced by keywords in the domain, URL, and basically keywords anywhere on the webpage. It wasn’t something that everyone did, it was mainly something that was popular with some affiliate marketers.

Another reason for choosing domain names with keywords in them was that site visitors tended to convert at a higher rate because the keywords essentially prequalified the site visitor. I know from experience how useful two-keyword domains (and one-word domain names) are for conversions, as long as they don’t have hyphens in them.

A consideration that caused hyphenated domain names to fall out of favor is that they have an untrustworthy appearance and that can work against conversion rates because trustworthiness is an important factor for conversions.

Lastly, hyphenated domain names look tacky. Why go with tacky when a brandable domain is easier for building trust and conversions?

Domain Name Question Asked On Reddit

This is the question asked on Reddit:

“Why don’t people use a lot of domains with hyphens? Is there something concerning about it? I understand when you tell it out loud people make miss hyphen in search.”

And this is Mueller’s response:

“It used to be that domain names with a lot of hyphens were considered (by users? or by SEOs assuming users would? it’s been a while) to be less serious – since they could imply that you weren’t able to get the domain name with fewer hyphens. Nowadays there are a lot of top-level-domains so it’s less of a thing.

My main recommendation is to pick something for the long run (assuming that’s what you’re aiming for), and not to be overly keyword focused (because life is too short to box yourself into a corner – make good things, course-correct over time, don’t let a domain-name limit what you do online). The web is full of awkward, keyword-focused short-lived low-effort takes made for SEO — make something truly awesome that people will ask for by name. If that takes a hyphen in the name – go for it.”

Pick A Domain Name That Can Grow

Mueller is right about picking a domain name that won’t lock your site into one topic. When a site grows in popularity, the natural growth path is to expand the range of topics the site covers. But that’s hard to do when the domain is locked into one rigid keyword phrase. That’s one of the downsides of picking a “Best + keyword + reviews” domain, too. Those domains can’t grow bigger, and they look tacky.

That’s why I’ve always recommended brandable domains that are memorable and encourage trust in some way.

Read the post on Reddit:

Read Mueller’s response here.

Featured Image by Shutterstock/Benny Marty

SEO

Reddit Post Ranks On Google In 5 Minutes

Google’s Danny Sullivan disputed the assertions made in a Reddit discussion that Google is showing a preference for Reddit in the search results. But a Redditor’s example proves that it’s possible for a Reddit post to rank in the top ten of the search results within minutes and to actually improve rankings to position #2 a week later.

Discussion About Google Showing Preference To Reddit

A Redditor (gronetwork) complained that Google is sending so many visitors to Reddit that the server is struggling with the load, and shared an example showing that it can take only minutes for a Reddit post to rank in the top ten.

That post was part of a 79-post Reddit thread where many in the r/SEO subreddit were complaining about Google allegedly giving too much preference to Reddit over legit sites.

The person who did the test (gronetwork) wrote:

“…The website is already cracking (server down, double posts, comments not showing) because there are too many visitors.

…It only takes few minutes (you can test it) for a post on Reddit to appear in the top ten results of Google with keywords related to the post’s title… (while I have to wait months for an article on my site to be referenced). Do the math, the whole world is going to spam here. The loop is completed.”

Reddit Post Ranked Within Minutes

Another Redditor asked if they had tested if it takes “a few minutes” to rank in the top ten and gronetwork answered that they had tested it with a post titled, Google SGE Review.

gronetwork posted:

“Yes, I have created for example a post named “Google SGE Review” previously. After less than 5 minutes it was ranked 8th for Google SGE Review (no quotes). Just after Washingtonpost.com, 6 authoritative SEO websites and Google.com’s overview page for SGE (Search Generative Experience). It is ranked third for SGE Review.”

It’s true. That specific post (Google SGE Review) ranks in the top 10. It started out in position 8 and then improved, and it is currently listed just beneath the number one result for the search query “SGE Review”.

Screenshot Of Reddit Post That Ranked Within Minutes

Anecdotes Versus Anecdotes

Okay, the above is just one anecdote. But it’s a heck of an anecdote because it proves that it’s possible for a Reddit post to rank within minutes and get stuck in the top of the search results over other possibly more authoritative websites.

hankschrader79 shared that Reddit posts outrank Toyota Tacoma forums for a phrase related to mods for that truck.

Google’s Danny Sullivan responded to that post and the entire discussion to dispute the claim that Reddit is always prioritized over other forums.

Danny wrote:

“Reddit is not always prioritized over other forums. [super vhs to mac adapter] I did this week, it goes Apple Support Community, MacRumors Forum and further down, there’s Reddit. I also did [kumo cloud not working setup 5ghz] recently (it’s a nightmare) and it was the Netgear community, the SmartThings Community, GreenBuildingAdvisor before Reddit. Related to that was [disable 5g airport] which has Apple Support Community above Reddit. [how to open an 8 track tape] — really, it was the YouTube videos that helped me most, but it’s the Tapeheads community that comes before Reddit.

In your example for [toyota tacoma], I don’t even get Reddit in the top results. I get Toyota, Car & Driver, Wikipedia, Toyota again, three YouTube videos from different creators (not Toyota), Edmunds, a Top Stories unit. No Reddit, which doesn’t really support the notion of always wanting to drive traffic just to Reddit.

If I guess at the more specific query you might have done, maybe [overland mods for toyota tacoma], I get a YouTube video first, then Reddit, then Tacoma World at third — not near the bottom. So yes, Reddit is higher for that query — but it’s not first. It’s also not always first. And sometimes, it’s not even showing at all.”

hankschrader79 conceded that they were generalizing when they wrote that Google always prioritized Reddit. But they also insisted that this didn’t diminish what they described as a fact: that Google’s “prioritization” of forum content has benefitted Reddit more than actual forums.

Why Is The Reddit Post Ranked So High?

It’s possible that Google “tested” that Reddit post in position 8 within minutes and that user interaction signals indicated to Google’s algorithms that users prefer to see that Reddit post. If that’s the case, then it’s not a matter of Google showing preference to Reddit posts. Rather, users are showing the preference and the algorithm is responding to it.

Nevertheless, an argument can be made that user preferences for Reddit can be a manifestation of Familiarity Bias. Familiarity Bias is when people show a preference for things that are familiar to them. If a person is familiar with a brand because of all the advertising they were exposed to, then they may show a bias for that brand’s products over unfamiliar brands.

Users who are familiar with Reddit may choose Reddit because they don’t know the other sites in the search results or because they have a bias that Google ranks spammy and optimized websites and feel safer reading Reddit.

Google may be picking up on those user interaction signals that indicate a preference and satisfaction with the Reddit results but those results may simply be biases and not an indication that Reddit is trustworthy and authoritative.

Is Reddit Benefiting From A Self-Reinforcing Feedback Loop?

It may very well be that Google’s decision to prioritize user generated content started a self-reinforcing pattern that draws users into Reddit through the search results, and because the answers seem plausible, those users start to prefer Reddit results. When they’re exposed to more Reddit posts, their familiarity bias kicks in and they start to show a preference for Reddit. So what could be happening is that the users and Google’s algorithm are creating a self-reinforcing feedback loop.

Is it possible that Google’s decision to show more user generated content has kicked off a cycle where more users are exposed to Reddit which then feeds back into Google’s algorithm which in turn increases Reddit visibility, regardless of lack of expertise and authoritativeness?

Featured Image by Shutterstock/Kues
