
How To Build A Recommender System With TF-IDF And NMF (Python)

Topic clusters and recommender systems can help SEO experts to build a scalable internal linking architecture.

And as we know, internal linking can impact both user experience and search rankings. It’s an area we want to get right.

In this article, we will use Wikipedia data to build topic clusters and recommender systems with Python and the Pandas data analysis tool.

To achieve this, we will use the Scikit-learn library, a free software machine learning library for Python, with two main algorithms:

- TF-IDF: Term frequency-inverse document frequency.

- NMF: Non-negative matrix factorization, a group of algorithms from multivariate analysis and linear algebra that can be used to analyze high-dimensional data.

Specifically, we will:

- Extract all of the links from a Wikipedia article.

- Read text from Wikipedia articles.

- Create a TF-IDF map.

- Split queries into clusters.

- Build a recommender system.

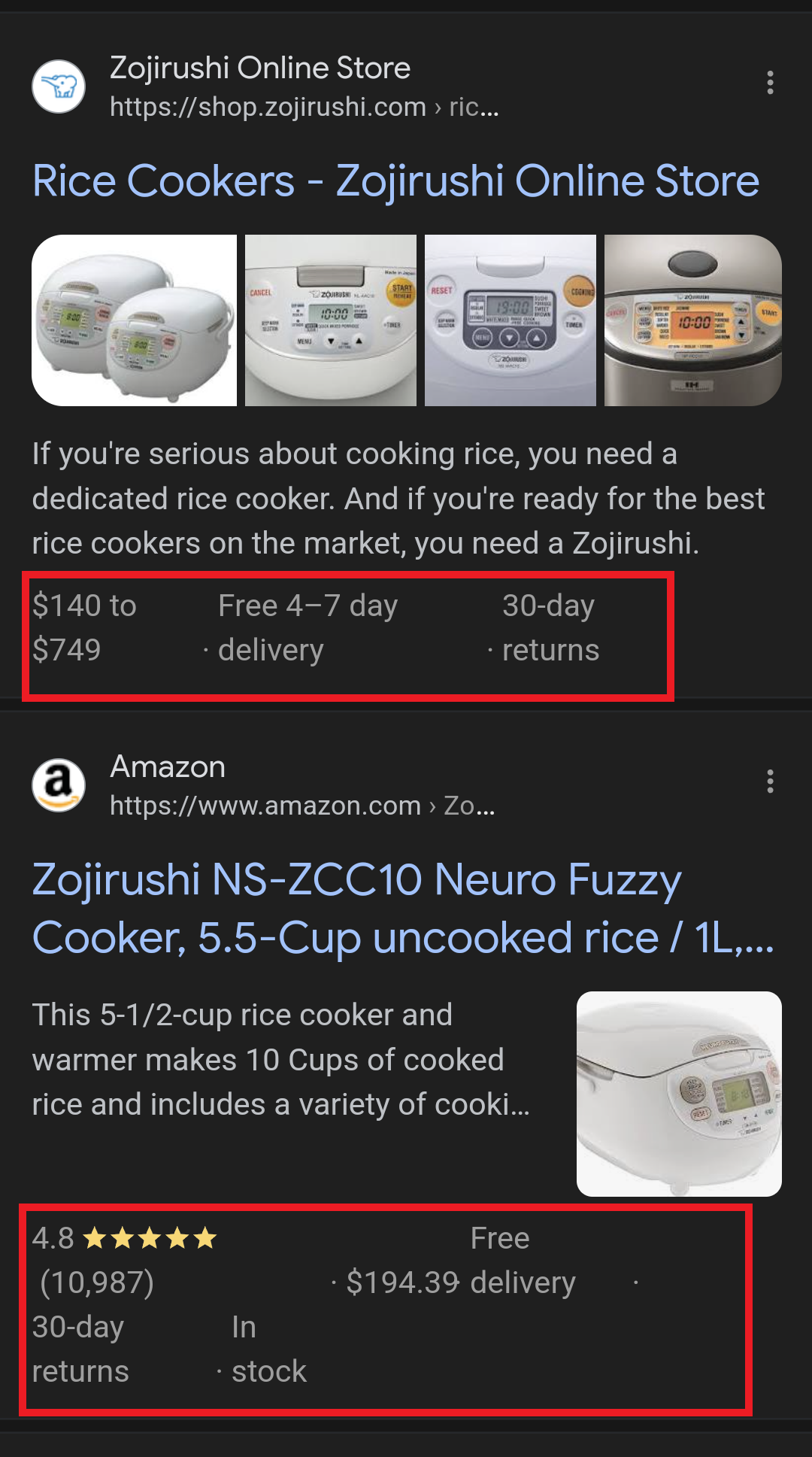

Here is an example of topic clusters that you will be able to build:

Moreover, here’s the overview of the recommender system that you can recreate.

Screenshot from Pandas, February 2022

Ready? Let’s get a few definitions and concepts you’ll want to know out of the way first.

The Difference Between Topic Clusters & Recommender Systems

Topic clusters and recommender systems can be built in different ways.

In this case, the former is grouped by IDF weights and the latter by cosine similarity.

In simple SEO terms:

- Topic clusters can help to create an architecture where all articles are linked to.

- Recommender systems can help to create an architecture where the most relevant pages are linked to.

What Is TF-IDF?

TF-IDF, or term frequency-inverse document frequency, is a figure that expresses how important a given word is to a document, relative to the document collection as a whole.

TF-IDF is calculated by multiplying term frequency and inverse document frequency.

TF-IDF = TF * IDF

- TF: Number of times a word appears in a document/number of words in the document.

- IDF: log(Number of documents / Number of documents that contain the word).

To illustrate this, let’s consider this situation with Machine Learning as a target word:

- Document A contains the target word 10 times out of 100 words.

- In the entire corpus, 30 documents out of 200 documents also contain the target word.

Then, the formula would be:

TF-IDF = (10/100) * log(200/30)
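To make the arithmetic concrete, here is a minimal sketch of the same calculation in Python. It assumes the natural logarithm, which matches the IDF values shown later in this article; the function names `tf` and `idf` are illustrative and do not come from any library.

```python
import math

def tf(term_count: int, total_words: int) -> float:
    """Term frequency: share of the document's words taken up by the term."""
    return term_count / total_words

def idf(total_docs: int, docs_with_term: int) -> float:
    """Inverse document frequency, using the natural logarithm (an assumption)."""
    return math.log(total_docs / docs_with_term)

# Numbers from the example above
tf_value = tf(10, 100)      # 0.1
idf_value = idf(200, 30)    # ln(6.67), roughly 1.897
tf_idf = tf_value * idf_value
print(round(tf_idf, 4))     # 0.1897
```

A word that appears in every document would get an IDF of log(1) = 0, so its TF-IDF would be zero no matter how often it is repeated.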

What TF-IDF Is Not

TF-IDF is not something new. It’s not something that you need to optimize for.

According to John Mueller, it’s an old information retrieval concept that isn’t worth focusing on for SEO.

There is nothing in it that will help you outperform your competitors.

Still, TF-IDF can be useful to SEOs.

Learning how TF-IDF works gives insight into how a computer can interpret human language.

Consequently, one can leverage that understanding to improve the relevancy of the content using similar techniques.

What Is Non-negative Matrix Factorization (NMF)?

Non-negative matrix factorization, or NMF, is a dimension reduction technique often used in unsupervised learning. It approximates a non-negative matrix as the product of two smaller non-negative matrices, so that many features are summarized by a few components.

In this article, NMF will be used to define the number of topics we want all the articles to be grouped under.
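For intuition, the sketch below factorizes a tiny document-term matrix in plain Python. It uses the classic multiplicative update rule, which is one common way to fit NMF and not necessarily what Scikit-learn runs internally (its NMF class defaults to a different solver). The toy matrix and the helper names are made up for illustration.

```python
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols_b = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_b] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def squared_error(v, w, h):
    """Squared reconstruction error between V and the product W x H."""
    wh = matmul(w, h)
    return sum((v[i][j] - wh[i][j]) ** 2
               for i in range(len(v)) for j in range(len(v[0])))

def nmf(v, n_components, n_iter=200, seed=0, eps=1e-9):
    """Factorize V (docs x terms) into W (docs x topics) and H (topics x terms)."""
    rng = random.Random(seed)
    n_rows, n_cols = len(v), len(v[0])
    w = [[rng.random() for _ in range(n_components)] for _ in range(n_rows)]
    h = [[rng.random() for _ in range(n_cols)] for _ in range(n_components)]
    for _ in range(n_iter):
        # Update H: H * (W^T V) / (W^T W H)
        wt = transpose(w)
        num, den = matmul(wt, v), matmul(matmul(wt, w), h)
        h = [[h[k][j] * num[k][j] / (den[k][j] + eps) for j in range(n_cols)]
             for k in range(n_components)]
        # Update W: W * (V H^T) / (W H H^T)
        ht = transpose(h)
        num, den = matmul(v, ht), matmul(w, matmul(h, ht))
        w = [[w[i][k] * num[i][k] / (den[i][k] + eps) for k in range(n_components)]
             for i in range(n_rows)]
    return w, h

# Toy matrix: 4 documents x 4 terms, with two obvious "topics"
V = [
    [1.0, 0.9, 0.0, 0.0],
    [0.8, 1.0, 0.0, 0.1],
    [0.0, 0.0, 1.0, 0.9],
    [0.1, 0.0, 0.9, 1.0],
]
W, H = nmf(V, n_components=2)
print(len(W), len(W[0]))  # 4 2 -> one row per document, one column per topic
print(len(H), len(H[0]))  # 2 4 -> one row per topic, one column per term
```

The number of components is the only thing we choose up front, which is why it doubles as the number of topics later in this article.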

Definition Of Topic Clusters

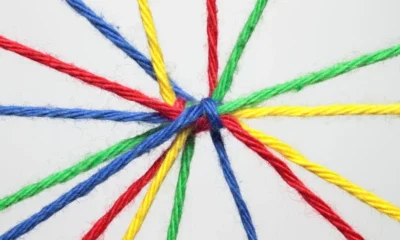

Topic clusters are groupings of related terms that can help you create an architecture where all articles are interlinked or on the receiving end of internal links.

Definition Of Recommender Systems

Recommender systems can help to create an architecture where the most relevant pages are linked to.

Building A Topic Cluster

Topic clusters and recommender systems can be built in different ways.

In this case, topic clusters are grouped by IDF weights and the recommender systems by cosine similarity.

Extract All The Links From A Specific Wikipedia Article

Extracting links on a Wikipedia page is done in two steps.

First, select a specific subject. In this case, we use the Wikipedia article on machine learning.

Second, use the Wikipedia API to find all the internal links on the article.

Here is how to query the Wikipedia API using the Python requests library.

import requests

main_subject="Machine learning"

url="https://en.wikipedia.org/w/api.php"

params = {

'action': 'query',

'format': 'json',

'generator':'links',

'titles': main_subject,

'prop':'pageprops',

'ppprop':'wikibase_item',

'gpllimit':1000,

'redirects':1

}

r = requests.get(url, params=params)

r_json = r.json()

linked_pages = r_json['query']['pages']

page_titles = [p['title'] for p in linked_pages.values()]

The result is a list of all the pages linked from the initial article.


Screenshot from Pandas, February 2022


These links represent each of the entities used for the topic clusters.
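A single request may not return every link, because the MediaWiki API delivers large result sets in batches and signals the next batch with a `continue` object in the response. The sketch below is one hedged way to follow those tokens using only the standard library. The helper names `fetch_json` and `collect_link_titles` and the User-Agent string are assumptions for illustration, not part of the original code.

```python
import json
import urllib.parse
import urllib.request

API_URL = "https://en.wikipedia.org/w/api.php"

def fetch_json(params):
    """Send one GET request to the MediaWiki API and decode the JSON reply."""
    url = API_URL + "?" + urllib.parse.urlencode(params)
    # Hypothetical User-Agent; replace it with one that identifies your project
    request = urllib.request.Request(url, headers={"User-Agent": "topic-cluster-demo/0.1"})
    with urllib.request.urlopen(request) as response:
        return json.load(response)

def collect_link_titles(title, fetch=fetch_json):
    """Follow 'continue' tokens until every linked page title is collected."""
    params = {
        "action": "query",
        "format": "json",
        "generator": "links",
        "titles": title,
        "gpllimit": "max",
        "redirects": 1,
    }
    titles = []
    while True:
        data = fetch(params)
        pages = data.get("query", {}).get("pages", {})
        titles.extend(p["title"] for p in pages.values())
        if "continue" not in data:
            return titles
        # Merge the continuation tokens into the next request
        params = {**params, **data["continue"]}
```

Calling `collect_link_titles("Machine learning")` would then play the role of `page_titles` in the rest of the tutorial. The `fetch` argument is injectable so the loop can be tried offline with canned responses.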

Select A Subset Of Articles

For performance purposes, we will select only the first 200 articles (including the main article on machine learning).

# select first X articles

num_articles = 200

pages = page_titles[:num_articles]

# make sure to keep the main subject on the list

pages += [main_subject]

# make sure there are no duplicates on the list

pages = list(set(pages))
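Note that `set()` does not preserve order, so the position of each page in `pages` can change from run to run. If you prefer a stable order, the variant below is one possible alternative (not the approach used above) that removes duplicates while keeping the first-seen order. The function name `select_pages` is hypothetical.

```python
def select_pages(page_titles, main_subject, num_articles=200):
    """Keep the first num_articles titles plus the main subject, without duplicates."""
    candidates = list(page_titles[:num_articles]) + [main_subject]
    # dict.fromkeys drops duplicates while preserving first-seen order
    return list(dict.fromkeys(candidates))

example = select_pages(
    ["Algorithm", "Biology", "Algorithm", "Data"],
    "Machine learning",
    num_articles=3,
)
print(example)  # ['Algorithm', 'Biology', 'Machine learning']
```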

Read Text From The Wikipedia Articles

Now, we need to extract the content of each article to perform the calculations for the TF-IDF analysis.

To do so, we will fetch the API again for each of the pages stored in the pages variable.

From each response, we will store the text from the page and add it to a list called text_db.

Note that you may need to install tqdm and lxml packages to use them.

import requests

from lxml import html

from tqdm.notebook import tqdm

text_db = []

for page in tqdm(pages):

    response = requests.get(

        'https://en.wikipedia.org/w/api.php',

        params={

            'action': 'parse',

            'page': page,

            'format': 'json',

            'prop':'text',

            'redirects':''

        }

    ).json()

    raw_html = response['parse']['text']['*']

    document = html.document_fromstring(raw_html)

    text=""

    for p in document.xpath('//p'):

        text += p.text_content()

    text_db.append(text)

print('Done')

This query will return a list in which each element represents the text of the corresponding Wikipedia page.

# Print number of articles

print('Number of articles extracted: ', len(text_db))

Output:

Number of articles extracted: 201

As we can see, there are 201 articles.

This is because we added the article on “Machine learning” in addition to the first 200 links from that page.

Furthermore, we can select the first article (index 0) and read the first 300 characters to gain a better understanding.

# read first 300 characters of 1st article

text_db[0][:300]

Output:

'\nBiology is the scientific study of life.[1][2][3] It is a natural science with a broad scope but has several unifying themes that tie it together as a single, coherent field.[1][2][3] For instance, all organisms are made up of cells that process hereditary information encoded in genes, which can '
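The output above still contains citation markers such as [1][2][3]. The tutorial keeps the text as is, but if you want cleaner input for the next step, an optional pre-processing pass could look like the sketch below. The `clean_text` helper is an assumption for illustration and is not part of the original workflow.

```python
import re

CITATION_PATTERN = re.compile(r"\[\d+\]")

def clean_text(text: str) -> str:
    """Remove bracketed citation numbers and collapse runs of whitespace."""
    without_citations = CITATION_PATTERN.sub("", text)
    return re.sub(r"\s+", " ", without_citations).strip()

sample = "\nBiology is the scientific study of life.[1][2][3] It is a natural science."
print(clean_text(sample))
# Biology is the scientific study of life. It is a natural science.
```

It could be applied with `text_db = [clean_text(t) for t in text_db]` before vectorizing.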

Create A TF-IDF Map

In this section, we will rely on pandas and TfidfVectorizer to create a DataFrame that contains the TF-IDF weights of the bi-grams (two consecutive words) of each article.

Here, we are using TfidfVectorizer.

This is the equivalent of using CountVectorizer followed by TfidfTransformer, which you may see in other tutorials.

In addition, we need to remove the “noise”. In the field of Natural Language Processing, words like “the”, “a”, “I”, “we” are called “stopwords”.

In the English language, stopwords have low relevancy for SEOs and are overrepresented in documents.

Hence, using nltk, we will add a list of English stopwords to the TfidfVectorizer class.

import pandas as pd

from sklearn.feature_extraction.text import TfidfVectorizer

from nltk.corpus import stopwords

# Create a list of English stopwords

# (requires a one-time nltk.download('stopwords'))

stop_words = stopwords.words('english')

# Instantiate the class

vec = TfidfVectorizer(

    stop_words=stop_words,

    ngram_range=(2,2), # bigrams

    use_idf=True

)

# Train the model and transform the data

tf_idf = vec.fit_transform(text_db)

# Create a pandas DataFrame

# (get_feature_names() was removed in scikit-learn 1.2; use get_feature_names_out())

df = pd.DataFrame(

    tf_idf.toarray(),

    columns=vec.get_feature_names_out(),

    index=pages

)

# Show the first lines of the DataFrame

df.head()

Screenshot from Pandas, February 2022

In the DataFrame above:

- Rows are the documents.

- Columns are the bi-grams (two consecutive words).

- The values are the TF-IDF weights of each bi-gram in each document.

Screenshot from Pandas, February 2022
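To see where the column names come from, the sketch below builds bi-grams by hand: lowercase the text, split it into words, drop stopwords, then pair consecutive words. It is a simplified illustration of the idea, using a tiny made-up stopword list, and is not a replacement for TfidfVectorizer.

```python
import re

STOP_WORDS = {"the", "a", "is", "of", "and", "to", "in"}  # tiny illustrative list

def bigrams(text, stop_words=STOP_WORDS):
    """Lowercase, tokenize, drop stopwords, then pair consecutive tokens."""
    tokens = re.findall(r"\b\w\w+\b", text.lower())
    kept = [t for t in tokens if t not in stop_words]
    return [f"{first} {second}" for first, second in zip(kept, kept[1:])]

print(bigrams("Machine learning is the study of computer algorithms"))
# ['machine learning', 'learning study', 'study computer', 'computer algorithms']
```

Because stopwords are removed before pairing, a bi-gram such as "learning study" can join two words that were not adjacent in the original sentence.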

Sort The IDF Vectors

Below, we sort the inverse document frequency (IDF) weights in ascending order, so the most common bi-grams appear first.

idf_df = pd.DataFrame(

    vec.idf_,

    index=vec.get_feature_names_out(),

    columns=['idf_weights']

)

idf_df.sort_values(by=['idf_weights']).head(10)

Screenshot from Pandas, February 2022

Specifically, the IDF vectors are calculated from the log of the number of articles divided by the number of articles containing each word.

The greater the IDF, the rarer the bi-gram is across the corpus and the more specific it is to the articles that contain it.

The lower the IDF, the more common it is across all articles.

- 1 mention out of 1 articles = log(1/1) = 0.0

- 1 mention out of 2 articles = log(2/1) = 0.69

- 1 mention out of 10 articles = log(10/1) = 2.30

- 1 mention out of 100 articles = log(100/1) = 4.61
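The values in the list above can be reproduced with a few lines of Python. The sketch also shows the smoothed variant, ln((1 + N) / (1 + df)) + 1, which Scikit-learn's TfidfVectorizer applies by default (`smooth_idf=True`), so the numbers in `vec.idf_` will not match the plain formula exactly. The function names are illustrative.

```python
import math

def plain_idf(n_docs: int, doc_freq: int) -> float:
    """Textbook IDF: ln(N / df)."""
    return math.log(n_docs / doc_freq)

def smoothed_idf(n_docs: int, doc_freq: int) -> float:
    """Smoothed variant: ln((1 + N) / (1 + df)) + 1."""
    return math.log((1 + n_docs) / (1 + doc_freq)) + 1

# One mention out of 1, 2, 10, and 100 articles
for n_docs in (1, 2, 10, 100):
    print(n_docs, round(plain_idf(n_docs, 1), 2), round(smoothed_idf(n_docs, 1), 2))
```

Both versions rank terms the same way: the fewer articles a bi-gram appears in, the higher its weight.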

Split Queries Into Clusters Using NMF

Using the tf_idf matrix, we will split queries into topical clusters.

Each cluster will contain closely related bi-grams.

Firstly, we will use NMF to reduce the dimensionality of the matrix into topics.

Simply put, we will group 201 articles into 25 topics.

from sklearn.decomposition import NMF

from sklearn.preprocessing import normalize

# (optional) Disable FutureWarning of Scikit-learn

from warnings import simplefilter

simplefilter(action='ignore', category=FutureWarning)

# select number of topic clusters

n_topics = 25

# Create an NMF instance

nmf = NMF(n_components=n_topics)

# Fit the model to the tf_idf

nmf_features = nmf.fit_transform(tf_idf)

# normalize the features

norm_features = normalize(nmf_features)

Comparing the shapes, we can see that the number of bigrams (columns) stays the same, but the 201 article rows are replaced by 25 topic rows.

# Compare processed VS unprocessed dataframes

print('Original df: ', df.shape)

print('NMF Processed df: ', nmf.components_.shape)

Secondly, for each of the 25 clusters, we will provide query recommendations.

# Create a DataFrame from the NMF components (one row per topic)

components = pd.DataFrame(

nmf.components_,

columns=[df.columns]

)

clusters = {}

# Show top 25 queries for each cluster

for i in range(len(components)):

    clusters[i] = []

    loop = dict(components.loc[i,:].nlargest(25)).items()

    for k,v in loop:

        clusters[i].append({'q':k[0],'sim_score': v})

Thirdly, we will create a data frame that shows the recommendations.

# Create dataframe using the clustered dictionary

grouping = pd.DataFrame(clusters).T

grouping['topic'] = grouping[0].apply(lambda x: x['q'])

grouping.drop(0, axis=1, inplace=True)

grouping.set_index('topic', inplace=True)

def show_queries(df):

    for col in df.columns:

        df[col] = df[col].apply(lambda x: x['q'])

    return df

# Only display the query in the dataframe

clustered_queries = show_queries(grouping)

clustered_queries.head()

Finally, the result is a DataFrame showing 25 topics along with the top 25 bigrams for each topic.

Screenshot from Pandas, February 2022
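Stripped of the pandas plumbing, the clustering step boils down to picking the highest-weighted bigrams in each topic row. Here is a minimal sketch of that idea on made-up weights; the terms, numbers, and the `top_terms` helper are hypothetical.

```python
import heapq

def top_terms(weights, terms, n=3):
    """Return the n terms with the largest weights in one topic row."""
    best = heapq.nlargest(n, zip(weights, terms))
    return [term for _, term in best]

terms = ["neural network", "deep learning", "decision tree", "random forest"]
components = [
    [0.9, 0.7, 0.1, 0.0],   # topic 0 leans toward neural methods
    [0.0, 0.1, 0.8, 0.6],   # topic 1 leans toward tree-based methods
]
for topic_id, row in enumerate(components):
    print(topic_id, top_terms(row, terms, n=2))
# 0 ['neural network', 'deep learning']
# 1 ['decision tree', 'random forest']
```

In the tutorial, the top-ranked bigram of each row also becomes the topic's label.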

Building A Recommender System

Now, instead of building topic clusters, we will build a recommender system using the same normalized features from the previous step.

The normalized features are stored in the norm_features variable.

# compute cosine similarities of each cluster

data = {}

# create dataframe

norm_df = pd.DataFrame(norm_features, index=pages)

for page in pages:

    # select page recommendations

    recommendations = norm_df.loc[page,:]

    # Compute cosine similarity

    similarities = norm_df.dot(recommendations)

    data[page] = []

    loop = dict(similarities.nlargest(20)).items()

    for k, v in loop:

        if k != page:

            data[page].append({'q':k,'sim_score': v})

What the code above does is:

- Loops through each of the pages selected at the start.

- Selects the corresponding row in the normalized dataframe.

- Computes the cosine similarity between that page and every page in the set.

- Selects the 20 most similar pages, sorted by similarity score, and drops the page itself from the list.

After the execution, we are left with a dictionary of pages containing lists of recommendations sorted by similarity score.

Screenshot from Pandas, February 2022
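The reason a plain dot product works here is that the feature rows were normalized to unit length, and the dot product of two unit vectors is their cosine similarity. The sketch below shows the same logic in plain Python on hypothetical topic weights; the page names, numbers, and the `recommend` helper are made up for illustration.

```python
import math

def normalize(vector):
    """Scale a vector to unit length (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm else list(vector)

def recommend(features, page, top_n=2):
    """Rank the other pages by cosine similarity to the given page."""
    unit = {name: normalize(vec) for name, vec in features.items()}
    target = unit[page]
    scores = {
        name: sum(a * b for a, b in zip(target, vec))
        for name, vec in unit.items()
        if name != page
    }
    return sorted(scores, key=scores.get, reverse=True)[:top_n]

# Hypothetical topic weights for four pages (two topics each)
features = {
    "Machine learning": [0.9, 0.1],
    "Deep learning": [0.8, 0.2],
    "Biology": [0.1, 0.9],
    "Genetics": [0.2, 0.8],
}
print(recommend(features, "Machine learning"))
# ['Deep learning', 'Genetics']
```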

The next step is to convert that dictionary into a DataFrame.

# convert dictionary to dataframe

recommender = pd.DataFrame(data).T

def show_queries(df):

    for col in df.columns:

        df[col] = df[col].apply(lambda x: x['q'])

    return df

show_queries(recommender).head()

The resulting DataFrame shows each parent page as a row, with its recommended pages sorted by similarity across the columns.

Screenshot from Pandas, February 2022

Voilà!

We are done building our own recommender system and topic cluster.

Interesting Contributions From The SEO Community

I am a big fan of Daniel Heredia, who has also played around with TF-IDF by finding relevant words with TF-IDF, TextBlob, and Python.

Python tutorials can be daunting.

A single article may not be enough.

If that is the case, I encourage you to read Koray Tuğberk GÜBÜR’s tutorial, which presents a similar way to use TF-IDF.

Billy Bonaros also came up with a creative application of TF-IDF in Python and showed how to create a TF-IDF keyword research tool.

Conclusion

In the end, I hope you have learned a logic here that can be adapted to any website.

Understanding how topic clusters and recommender systems can help improve a website’s architecture is a valuable skill for any SEO pro wishing to scale their work.

Using Python and Scikit-learn, you have learned how to build your own – and have learned the basics of TF-IDF and of non-negative matrix factorization in the process.

Featured Image: Kateryna Reka/Shutterstock

25 WordPress Alternatives Best For SEO

WordPress powers hundreds of millions of websites, but it is not the only content management system (CMS) option.

There’s a diverse marketplace of publishing platforms for those seeking alternatives. This review provides an overview of 25 leading alternatives to WordPress across key website categories.

We explore user-friendly website builders like Wix, Squarespace, and Weebly, which offer drag-and-drop simplicity. We look at flexible open-source options for developers and tech-savvy users, including Joomla, Drupal, and Hugo.

Ecommerce merchants can choose between hosted platforms like Shopify or open-source solutions like Magento. We also cover blogging-focused options like Ghost and Tumblr, web hosting providers like Bluehost, and community management tools like vBulletin.

For each alternative, we summarize the key features, benefits, and drawbacks to consider. Factors like budget, technical abilities, and website goals are examined to help identify the best fit based on individual needs.

While WordPress powers a large share of sites, there’s no shortage of quality options for creating the perfect online presence for those seeking alternatives.

Why Consider A WordPress Alternative?

There are several reasons why someone might consider a WordPress alternative for their website:

- Specific needs: While WordPress is versatile, some websites may have particular requirements for which other platforms are better suited.

- Ease of use: Some users may find WordPress challenging, especially if they lack technical skills.

- Maintenance and security: As an open-source platform, WordPress requires users to handle updates, backups, and security measures themselves.

- Built-in features: Some alternatives come with built-in features that WordPress requires plugins for.

- Customization: While WordPress offers many customization options, some users may prefer platforms that allow more granular control over the website’s appearance and functionality.

- Simplicity: Other publishing platforms might be a better fit for users who want a simple platform to publish content without dealing with the complexities of managing a website.

How To Choose An Alternative To WordPress

Choosing the right WordPress alternative depends on your specific needs and goals. To help you make an informed decision, consider the following factors:

- Purpose of your website: Determine its primary purpose. Is it a blog, an online store, a portfolio, or a complex business website?

- Budget: Consider your budget for building and maintaining your website. Some alternatives are free, while others require a subscription or a one-time payment.

- Technical skills: Assess your technical skills and those of your team. Some alternatives are designed for users with little coding experience, while others may require more technical knowledge.

- Customization and flexibility: Evaluate how much control you want over your website’s appearance and functionality.

- Scalability: Consider your website’s potential for growth. If you anticipate a significant increase in traffic or content, choose a platform that can scale with your needs.

- Support and community: Look into the level of support and the size of the community surrounding each alternative.

- Hosting: Decide whether you prefer a self-hosted solution or a hosted platform.

- Features: List the features your website requires. Ensure that your alternative offers these features natively or through extensions.

Once you’ve considered these factors, research various WordPress alternatives and compare them based on your requirements.

Read reviews, explore user communities, and, if possible, test out the platforms through free trials or demos.

This will help you better understand how each alternative works and whether it aligns with your needs and expectations.

25 Best WordPress Alternatives

1. Wix

Wix is best suited for individuals, small businesses, and entrepreneurs who want to create a professional-looking website without extensive technical skills or a large budget.

The platform’s user-friendly drag-and-drop interface makes it easy for users to design and customize their websites, offering various templates and design elements suitable for multiple purposes.

As a hosted platform, Wix takes care of technical aspects like server maintenance and security updates, making it ideal for those who don’t want to deal with these issues.

Wix also offers a free plan, allowing users to create a website without cost, although with limitations such as Wix branding and a non-custom domain.

One nuance to remember when using Wix is that once you’ve chosen a template and started building your site, it can be challenging to switch to a different template without redesigning your content.

Key Features:

- Full hosting solution.

- No software to self-install.

- Drag-and-drop visual builder.

- Extensions are available.

- Optimized for mobile.

- Blog module.

- Ecommerce store.

- Social media tools.

Pros:

- All-in-one website builder and platform.

- Free version available.

- Premade designs and templates.

- Free subdomain available for all plans.

- Free custom domain available with paid plans.

- Customer support.

Cons:

- There is no option to retain complete control of your site as you can with WordPress.

- No access to source code.

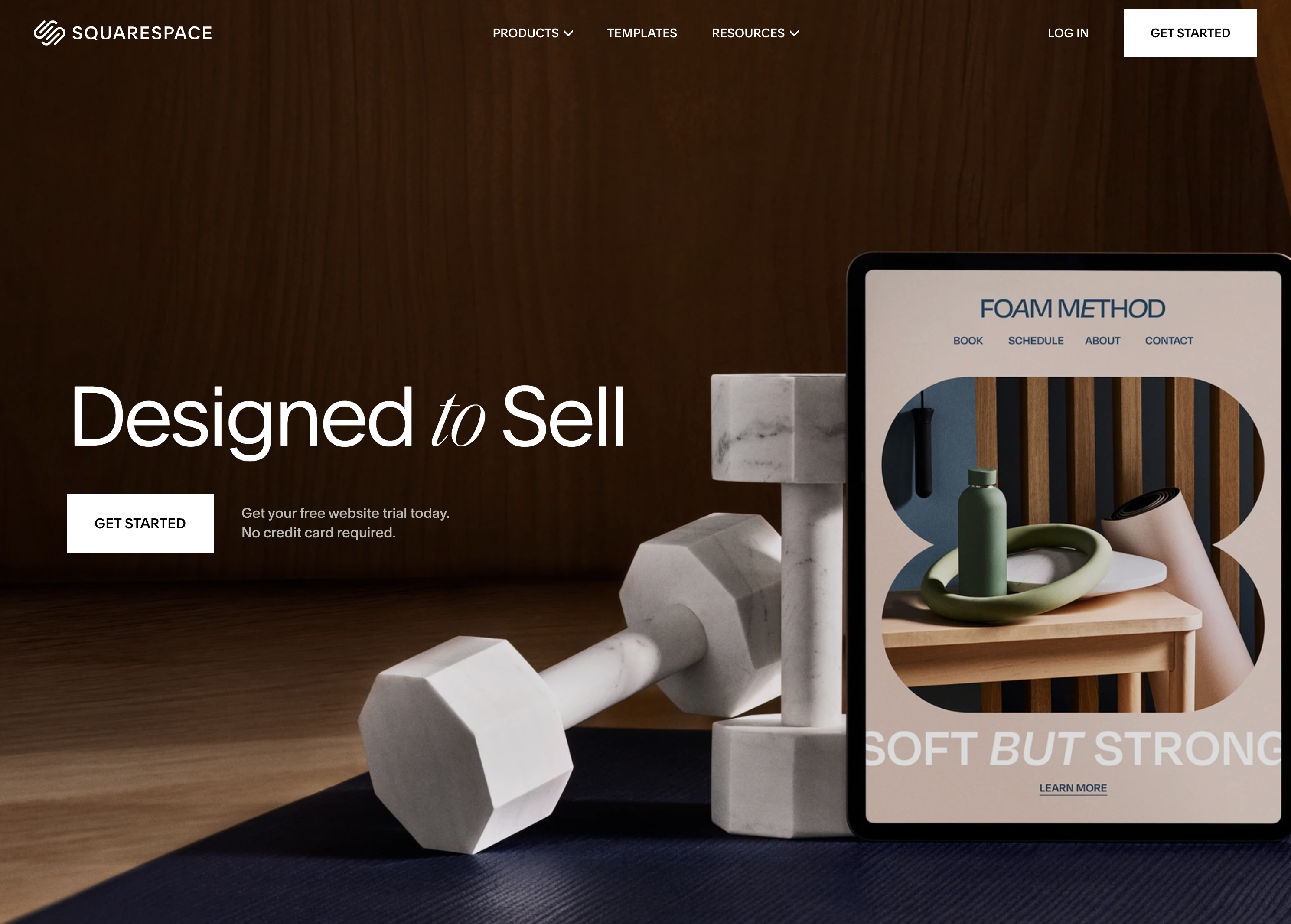

2. Squarespace

Screenshot from: squarespace.com, March 2024.

Squarespace is best suited for creatives, bloggers, and small business owners who want to create visually stunning websites with minimal technical knowledge.

The platform is known for its sleek, modern templates, which showcase content beautifully and are optimized for mobile devices.

As an all-in-one platform, Squarespace includes hosting, domain registration, and various tools for managing your website.

While Squarespace offers a high degree of customization, it may not be as flexible as some alternatives. The platform has a specific structure and set of features, which can be limiting for those who require advanced functionality or integrations.

Nonetheless, for most users, Squarespace’s built-in features and integrations are more than sufficient.

One tip for getting the most out of Squarespace is to use its built-in SEO and marketing tools, such as custom meta descriptions, alt tags, and automatic sitemaps.

Key Features:

- Complete hosting solution (including video).

- No software to self-install.

- Drag-and-drop visual builder.

- Extensions are available.

- Optimized for mobile.

- Blog module.

- Ecommerce store.

- Social media tools.

Pros:

- All-in-one website builder and platform.

- Free trial available.

- Premade designs and templates.

- Free subdomain available for all plans.

- Free custom domain available with an annual subscription.

- Customer support.

Cons:

- There is no option to retain complete control of your site as you can with WordPress.

- No custom coding.

- No access to source code.

- No third-party extensions.

3. Weebly

Screenshot from: weebly.com, March 2024.

Weebly is best suited for individuals, small businesses, and entrepreneurs who want to create a simple, functional website without investing much time or money.

The platform’s drag-and-drop interface and intuitive editor make it beginner-friendly and easy to use, even for those without website-building experience.

However, it may not be as powerful or flexible as other alternatives, with a limited set of features and integrations that can be restrictive for those requiring advanced functionality or custom solutions.

One tip for getting the most out of Weebly is to explore its app center, which offers a range of third-party apps and integrations to extend your website’s functionality, including tools for marketing, social media, and ecommerce.

Key Features:

- Full hosting solution.

- No software to self-install.

- Drag-and-drop visual builder.

- Extensions are available.

- Optimized for mobile.

- Blog module.

- Ecommerce store.

- Social media tools.

Pros:

- All-in-one website builder and platform.

- Free version available.

- Premade designs and templates.

- Free subdomain available.

- Inexpensive premium plans are as low as $6.00 per month.

- Free custom domain available with premium plans.

- Customer support.

Cons:

- No option to retain complete control of your site as you can with WordPress.

- No access to source code.

- The free version restricts you to a maximum of five pages.

4. Google Sites

Screenshot from: workspace.google.com/intl/en_ph/lp/sites/, March 2024.

Google Sites is best suited for individuals, educators, and small businesses who need a simple, easy-to-use platform for creating basic websites or intranets. Its seamless integration with other Google tools, like Google Docs, Sheets, and Drive, makes it an excellent choice for those who already rely heavily on these tools.

Google Sites also offers collaboration features, allowing multiple users to work on the same website simultaneously, making it ideal for team projects or class websites.

However, it’s a relatively basic website builder compared to other alternatives, with limited features and customization options. It may not be the best choice for those needing advanced functionality or design flexibility.

Additionally, it lacks built-in ecommerce features, making it less suitable for online stores.

One tip for getting the most out of Google Sites is leveraging its integration with other Google tools, such as embedding Google Docs, Sheets, or Slides into your web pages or using Google Forms to collect visitor data.

Key Features:

- The creator has complete control over page access and permissions.

- Tools can be accessed anywhere.

- It can be used as a basic project management program.

- Plenty of web development and deployment options.

- Real-time editing.

- Uses website speed optimization tools to minimize loading times.

Pros:

- Fast to get started and easy to use.

- Free to use.

- Integrated with other Google products.

Cons:

- Limited functionality compared to other website builders.

- It may not work with non-Google apps.

- Limited customization options.

- No SEO tools, and you can’t edit metadata.

- It cannot integrate Facebook pixels.

5. Jekyll

Screenshot from: jekyllrb.com, March 2024.

Jekyll is best suited for developers, bloggers, and tech-savvy individuals who prefer a lightweight, flexible website creation platform. It’s particularly popular among the GitHub community, as it can be easily integrated with GitHub Pages for free hosting.

Jekyll requires specific technical knowledge, as users must be comfortable working with the command line and writing code. While Jekyll offers plugins and themes to extend its functionality, users may need to rely on their coding skills to customize their website fully.

One tip for getting the most out of Jekyll is to utilize its built-in blogging features, which offer a simple, intuitive way to create and manage blog posts using Markdown.

Another nuance to remember is that Jekyll generates static pages that may not be the best choice for websites requiring frequent updates or complex functionality.

Key Features:

- No programming is involved.

- SEO is built-in.

- GitHub manages redirects.

- Easy setup of custom domains.

Pros:

- No server maintenance.

- Very fast.

- Secure.

- Free hosting.

- Free SSL certificate.

- Works with GitHub as CMS.

Cons:

- It can’t create contact forms.

- No dynamic content options.

- Posts cannot be scheduled.

- Does not include image manipulation functionality.

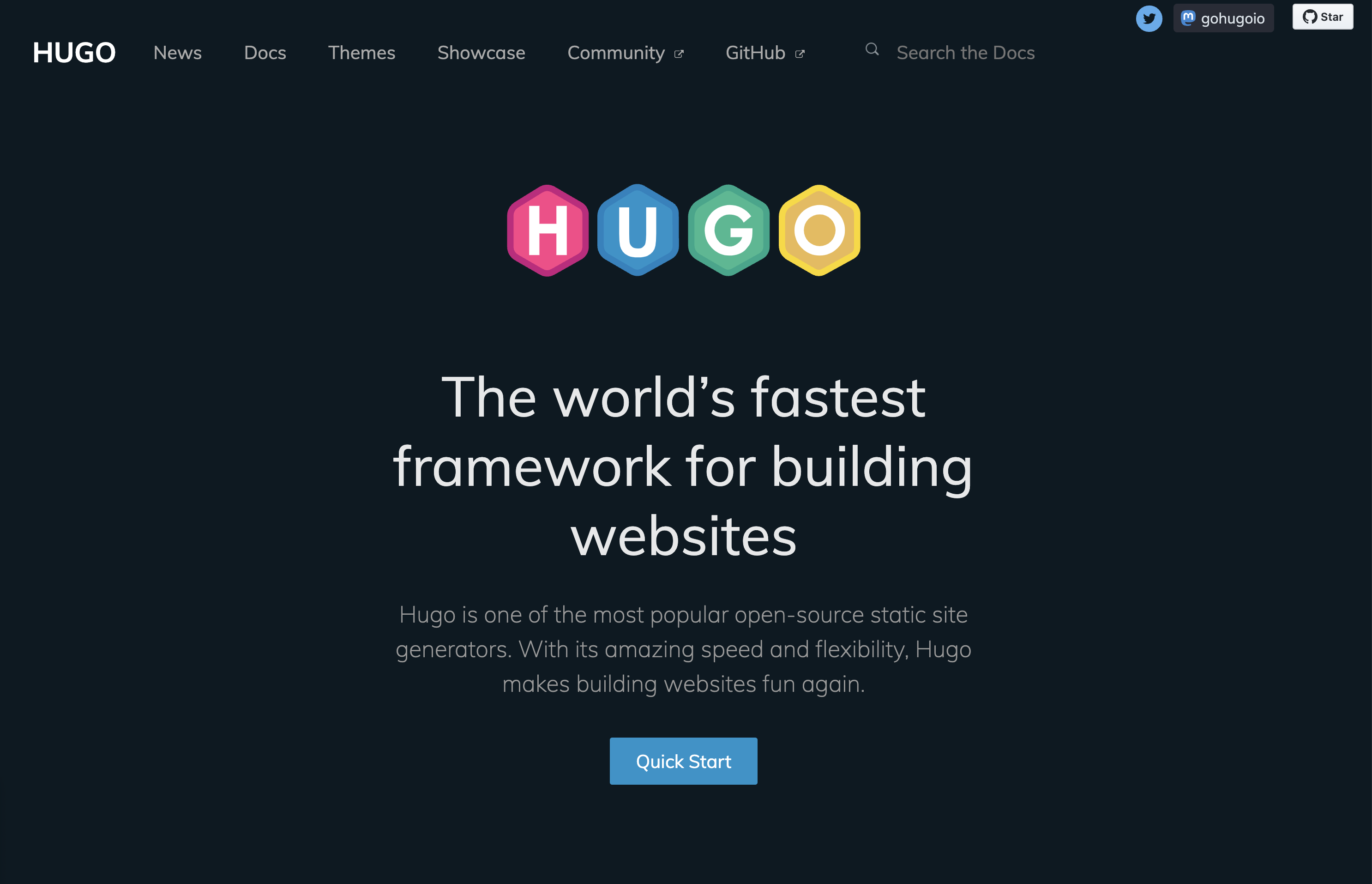

6. Hugo

Screenshot from: gohugo.io, March 2024.

Hugo is best suited for developers, bloggers, and content creators who value speed, flexibility, and simplicity. Its lightning-fast build times and static page generation make it ideal for those who frequently update their site or publish new content regularly.

While Hugo offers themes and templates to help users get started, creating a unique design may require coding skills.

One tip for getting the most out of Hugo is to leverage its built-in shortcodes, which allow users to easily add complex functionality to their web pages without writing extensive code.

Another nuance to remember is that, as a static site generator, Hugo may not be the best choice for websites that require dynamic features like user authentication or real-time data updates.

Key Features:

- Can build most websites in seconds.

- Cross-platform with easy installation.

- Allows you to host your site anywhere.

- Customizable URLs.

- “Minutes to Read” and “WordCount” functionality.

- Integrated Google Analytics and Disqus comment support.

Pros:

- It easily integrates with Google Calendar and other apps.

- Easy to use with responsive customer service.

- Multilingual capabilities are built-in.

- Extendable as needed.

Cons:

- It can’t create one-off tasks.

- It can be confusing upon initial use, particularly in templating syntax.

- No plugins are available.

- Limited text formatting features.

7. Webflow

Screenshot from: webflow.com, March 2024.

Webflow is best suited for freelance designers and small agencies who want complete control over their website’s design without worrying about hosting, security, or performance.

One nuance of Webflow is that extending a site’s functionality is not as straightforward as installing a plugin, as it is in WordPress.

Users must either set up integrations between their Webflow site and other platforms using third-party tools like Zapier, or they can embed custom code blocks on pages to add features.

A key aspect to note about Webflow is its pricing structure. Building a site is completely free, and users only need to purchase a site plan and custom domain when they are ready to launch.

This makes it an attractive option for freelancers and small teams who want to design and prototype sites without upfront costs, paying only when they are ready to go live.

Key Features:

- More than 100 templates to choose from.

- Design is prioritized, with animation, interaction, and parallax scrolling options.

- Offers automatically generated sitemaps and customizable 301 redirects.

- Multiple payment options for ecommerce sites and automatic tax calculation.

Pros:

- Affordable plans range from free to $235 for top-tier ecommerce plans.

- Free starter plan.

- Numerous learning and help resources.

- Good range of templates.

- Good security.

Cons:

- Steep learning curve.

- Integration with social media can be frustrating.

- Advanced capabilities aren’t built-in and require integration.

8. Joomla

Screenshot from: joomla.org, March 2024.

Joomla is best suited for creating social networking, community, and membership sites. With its built-in multilingual support and advanced user and content management options, Joomla enables site owners to manage hundreds of users, create custom post types, and publish content in multiple languages.

One nuance of Joomla is that it has a steeper learning curve compared to more beginner-friendly CMSs like WordPress.

While Joomla aims to combine the power and flexibility of Drupal with the user-friendliness of WordPress, users with some web development experience will be better equipped to understand and take full advantage of Joomla’s built-in features and settings.

Users can choose from over 6,000 extensions available in the official directory to extend a Joomla site’s functionality. However, unlike WordPress plugins that can be installed with just a few clicks, Joomla extensions must be installed via the backend.

This process requires more technical know-how and may be challenging for beginners.

Key Features:

- Almost 6,000 extensions are available.

- Traditional content editing (no drag-and-drop visual editor).

- Optimized for mobile (depending on the template).

- Blog module.

- Ecommerce store.

- Social media tools.

Pros:

- Free, open-source software.

- Premade designs and templates.

- Access to source code.

Cons:

- No free subdomains or custom domains are available.

- No customer support.

- Requires a PHP-enabled server to run.

- Fewer templates and extensions than WordPress.

9. Drupal

Screenshot from: drupal.org, March 2024.

Drupal is best suited for large corporations, government agencies, and universities with dedicated teams of developers. With its extensive customization options and ability to handle large data and heavy traffic, Drupal is ideal for complex, high-performance websites.

One key nuance of Drupal is its steep learning curve. Drupal is designed for developers or users with a strong understanding of HTML, CSS, and PHP.

Customizing a Drupal site involves working with numerous modules and themes, which can be highly configurable but require technical expertise to set up and maintain.

For organizations with the necessary technical resources, Drupal’s flexibility and robustness make it a top choice for building highly secure and customized websites.

Key Features:

- Content management system (CMS).

- Over 47,000 modules are available.

- Traditional content editing (no drag-and-drop visual editor).

- Optimized for mobile (depending on the theme you choose).

- Blog module.

- Ecommerce store.

- Social media tools.

Pros:

- Free, open-source software.

- Premade designs and templates.

- Access to source code.

- Strong security and data encryption.

Cons:

- No free subdomains.

- No customer support.

- Requires a PHP-enabled server to run.

10. DataLife Engine

Screenshot from: dle-news.com, March 2024.

DataLife Engine (DLE) is best suited for media companies, news websites, and blogs that prioritize SEO, security, and performance. The platform’s focus on handling high traffic levels with minimal server load makes it an attractive choice for websites that expect significant numbers of visitors and must ensure a smooth user experience.

DLE’s user-friendly interface and content management features suit organizations with multiple users involved in creating and publishing content.

The platform’s ability to track statistics and automatically filter words in comments can benefit media websites and blogs that need to moderate user-generated content and analyze audience engagement.

However, there are some nuances to consider when using DLE. The limited number of plugins and themes may restrict how much websites can customize their appearance and functionality compared to other CMSs like WordPress.

It’s also important to note that while DLE supports English users, they are considered a secondary focus.

Key Features:

- Content management system (CMS).

- Designed for multiple users.

- SEO-focused.

- Tracks statistics.

- Automatically filters words in comments.

- It supports an unlimited number of categories.

- Low server load.

- Allows plugins.

Pros:

- Stores data using MySQL.

- Excellent user experience.

- Websites load quickly, even on low-end servers.

- Excellent for publishing news and blog posts.

Cons:

- No free version; licenses range from $79 for basic to $199 for unlimited.

- English users are a secondary focus.

- A limited number of plugins and themes.

- The lowest license doesn’t include customer support.

11. Sitefinity

Screenshot from: progress.com/sitefinity-cms/, March 2024.

Progress’ Sitefinity is best suited for organizations that manage multiple websites, brands, or marketing channels from a single platform.

The CMS’s ability to sync assets across pages and sites makes it an attractive choice for companies with a diverse online presence. It streamlines content management and ensures consistency across various touchpoints.

One notable advantage of Sitefinity is its low-cost license compared to other CMS options, which may make it an attractive choice for budget-conscious organizations.

Additionally, the minimal coding required for integration and the flexible deployment time can help businesses reduce development costs and bring their websites to market faster.

However, Sitefinity setup and administration can be challenging. Organizations may need to invest time and resources into training their teams or hiring experienced professionals to ensure a smooth implementation and ongoing management of the platform.

Key Features:

- Manage multiple sites from one location.

- Sync assets across pages and sites.

- It makes personalization simpler.

- Integrated analytics and optimization.

- Four versions: basic, marketing-focused, PaaS, and ecommerce.

- Multilingual capabilities.

Pros:

- Low-cost license compared to other CMS.

- No setup fee.

- Minimal coding is required for integration.

- Flexible deployment time shortens time to market.

- Options for marketing automation.

Cons:

- Free trial, but no free version.

- Setup and administration can be challenging.

- No mobile interface.

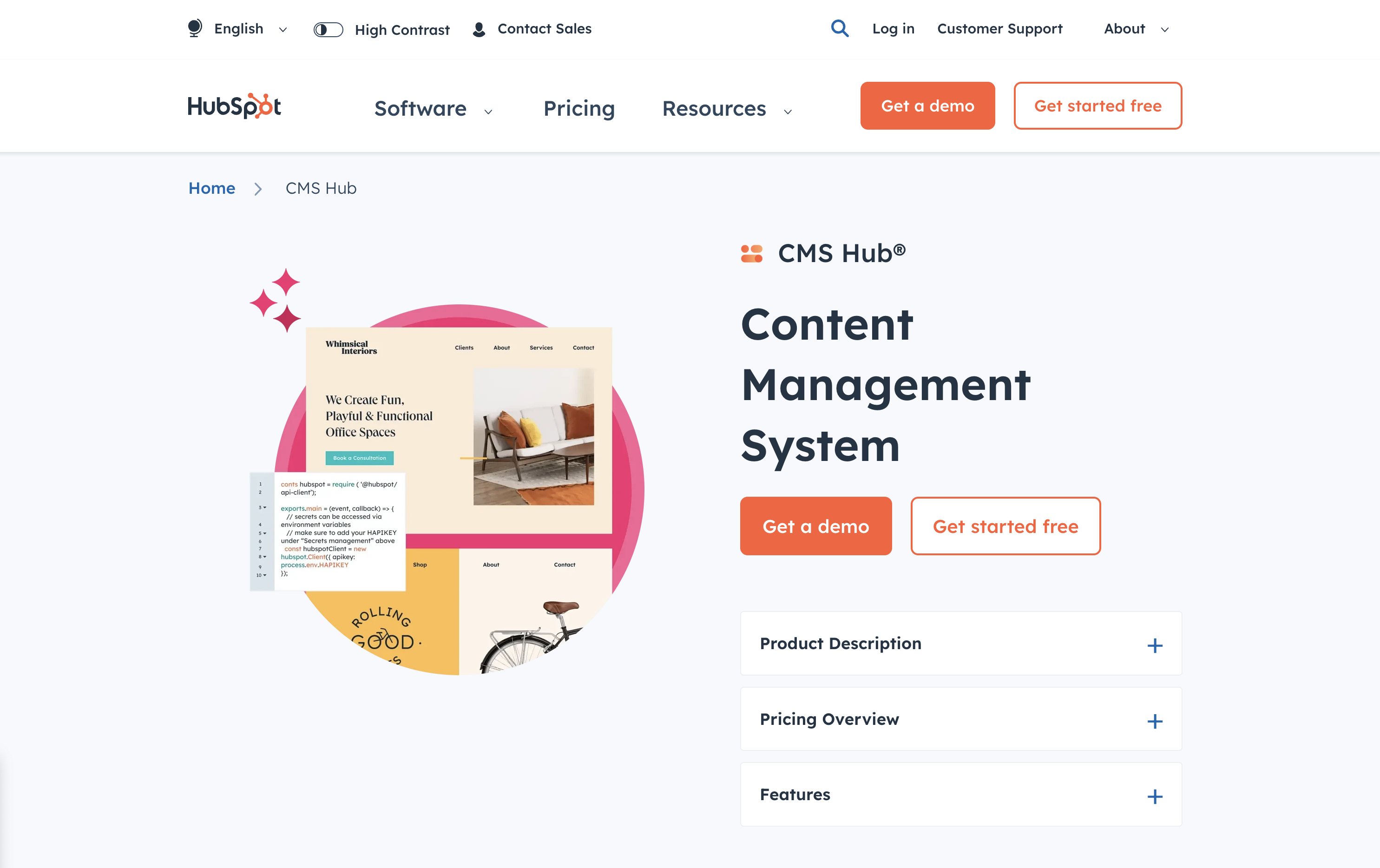

12. CMS Hub

Screenshot from: hubspot.com/products/cms, March 2024.

CMS Hub, previously known as HubSpot CMS, is best suited for businesses that already use HubSpot’s marketing, sales, or service tools and want to integrate their website with their existing HubSpot ecosystem.

It combines the ease of use of a drag-and-drop website builder with the flexibility and performance of a CMS.

CMS Hub seamlessly integrates with HubSpot’s CRM platform, allowing businesses to create personalized content experiences, optimize marketing efforts, and streamline sales processes.

It also focuses on security and performance, with HubSpot handling website hosting, SSL certification, and CDN configuration.

However, while CMS Hub offers customization options, it may not be as flexible as other CMSs like WordPress or Drupal, potentially limiting businesses with particular design or functionality requirements.

Additionally, CMS Hub’s pricing model can be expensive compared to other CMS options, so companies must carefully consider their budget and weigh the benefits of its all-in-one approach.

Key Features:

- Cloud-based.

- Includes SEO recommendations.

- Includes numerous themes and responsive templates.

- Fully integrated CRM.

- Drag-and-drop webpage editor.

- Built-in security.

Pros:

- Adaptive A/B testing helps you identify the best page layout.

- All-in-one publishing tools.

- Built-in SEO tools.

- Supports smart content with personalized rules.

- Mobile pages supported with Google AMP.

Cons:

- Does not support ecommerce.

- No automatic backup and recovery.

13. Contentful

Screenshot from: contentful.com, March 2024.

Contentful is a headless CMS best suited for businesses and developers requiring a flexible, scalable, and customizable content management solution.

It’s particularly well-suited for organizations delivering content across multiple channels, such as websites, mobile apps, and IoT devices.

One of Contentful’s key advantages is its content modeling capabilities. The platform allows users to create custom content models that can be easily adapted to their needs.

When using Contentful, it’s important to remember that it’s a headless CMS that focuses on content management and delivery rather than providing a built-in front end or presentation layer.

Developers must build a front end using their preferred tools and frameworks and then integrate with Contentful’s API to retrieve and display the content.
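As a rough illustration of that integration step, the sketch below builds a request URL for entries and turns a response into minimal HTML. It is a hedged example, not Contentful’s official client: the base URL, the `access_token` and `content_type` query parameters, and the `items`/`fields` response shape are assumptions to confirm against Contentful’s API documentation, and the space ID, token, and `blogPost` content type are placeholders. No network call is made; the payload is hand-written.

```python
from urllib.parse import urlencode

# Assumed base URL of Contentful's Content Delivery API; confirm in the official docs.
CDA_BASE = "https://cdn.contentful.com"


def build_entries_url(space_id, access_token, content_type, environment="master"):
    """Build a URL that requests all entries of one content type (assumed URL layout)."""
    query = urlencode({"access_token": access_token, "content_type": content_type})
    return f"{CDA_BASE}/spaces/{space_id}/environments/{environment}/entries?{query}"


def entry_fields(payload):
    """Return the `fields` object of each item in an entries response."""
    return [item.get("fields", {}) for item in payload.get("items", [])]


# Hand-written sample shaped like an entries response (an assumption, not real data).
sample_payload = {
    "items": [
        {"sys": {"id": "abc"}, "fields": {"title": "Hello", "slug": "hello"}},
    ]
}

# Placeholder identifiers; a real front end would fetch this URL and parse the JSON.
url = build_entries_url("my_space", "my_token", "blogPost")
print(url)

# The "presentation layer" here is a single heading per entry.
for fields in entry_fields(sample_payload):
    print(f"<h1>{fields['title']}</h1>")
```

The point of the sketch is the division of labor: the CMS returns structured fields, and the rendering is entirely the front end’s responsibility.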

Another aspect to consider is the learning curve associated with Contentful. While the platform is designed to be user-friendly, it may take some time for content editors and managers to become familiar with its interface and content modeling concepts.

Features:

- RESTful API gives you complete control over assets, translations, and versions.

- Customizable interface and framework that works across third-party component providers.

- It provides regional autonomy, so pieces in multiple languages and time zones can be published globally.

- Content modeling allows you to structure content by channel.

- Single sign-on and secure access.

Pros:

- Focus on integration simplifies the technology stack.

- User-friendly with a clean interface.

- Free version for up to five users.

- Good scalability.

Cons:

- Expensive for an upgraded version ($489/month).

- Poor internal search tools.

- Modeling content can be tricky.

14. Adobe Experience Manager

Screenshot from: business.adobe.com/products/experience-manager/adobe-experience-manager.html, March 2024

Adobe Experience Manager (AEM) is an enterprise-level CMS best suited for large organizations with complex content management needs and a significant budget. AEM’s target audience includes global brands, financial institutions, healthcare providers, and government agencies.

One of AEM’s key strengths is its tight integration with other Adobe products, such as Analytics, Target, and Creative Cloud.

This integration allows organizations to leverage the full power of Adobe’s digital marketing suite, enabling them to create, manage, and optimize content and experiences across the entire customer journey.

AEM also offers advanced features like intelligent content delivery, AI-powered content tagging, and multi-site management, making it a comprehensive solution for enterprise content management.

The platform’s complexity and extensive feature set can overwhelm smaller organizations or teams with limited resources. Additionally, AEM’s licensing and implementation costs are among the highest in the market, making it a significant investment for any organization.

Key Features:

- Comprehensive marketing platform.

- End-to-end digital document solution.

- Enterprise-level security.

- Analytics included.

- Intelligent search.

- Scalable to your needs.

Pros:

- Streamlines workflows by keeping everything on one platform.

- Individual marketers can handle authoring and publishing.

- Easy authorization of workflow.

- Can handle massive content loads.

- Can manage multiple sites at once.

Cons:

- Steep learning curve.

- Requires different sign-ins to access other areas.

- Doesn’t integrate well with external DAMs.

- Not ideal for communities and forums.

Ecommerce Platforms

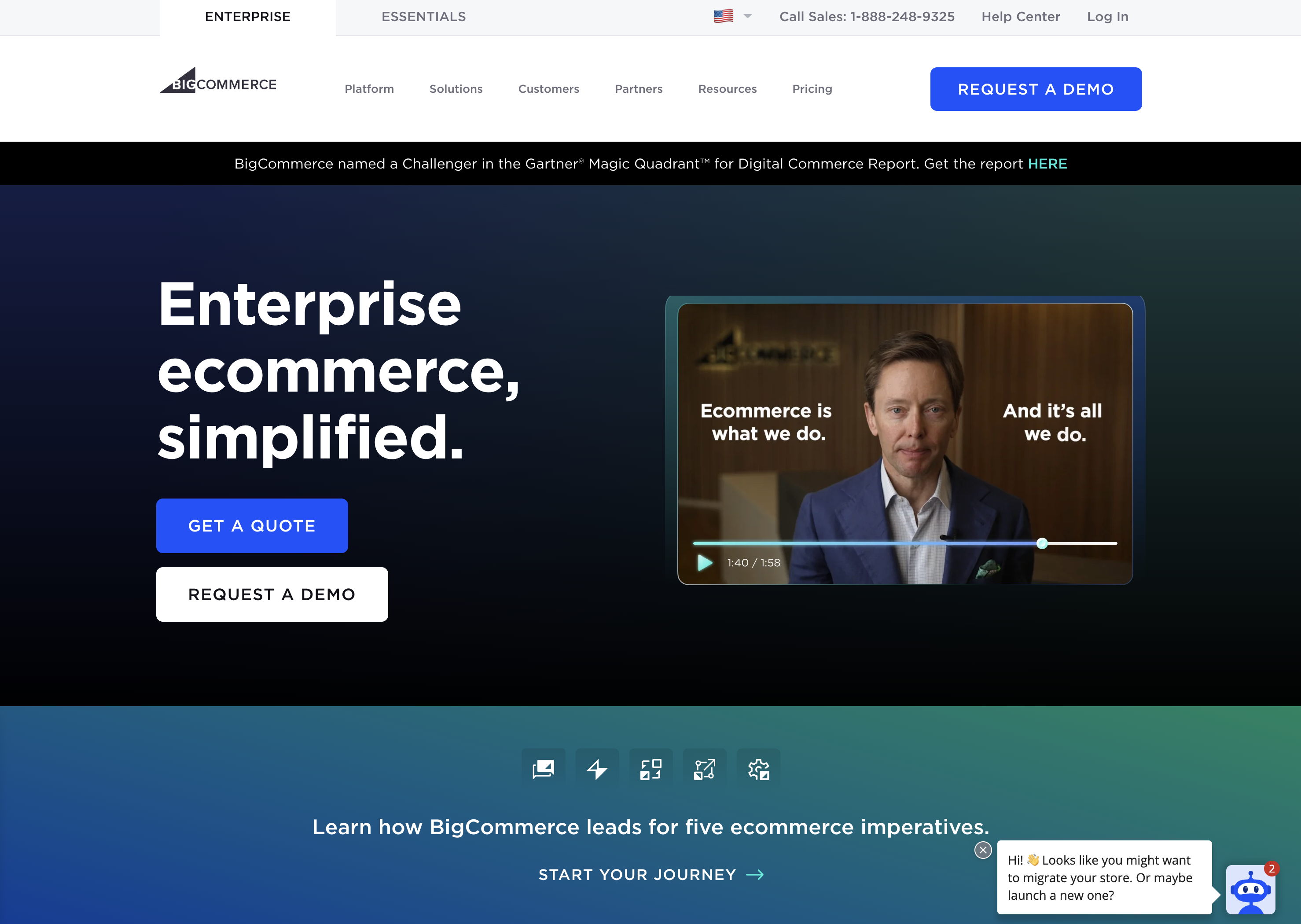

15. BigCommerce

Screenshot from: bigcommerce.com, March 2024.

BigCommerce is a hosted ecommerce platform best suited for businesses of all sizes looking to create and manage an online store. It caters to many users, from small and medium-sized businesses to large enterprises.

One of BigCommerce’s key advantages is its scalability. The platform accommodates businesses as they grow, offering features like unlimited products, file storage, and bandwidth.

BigCommerce also provides a range of advanced ecommerce functionalities, such as multi-channel selling, abandoned cart recovery, and built-in SEO tools, which can help businesses optimize their online sales performance.

When considering BigCommerce, it’s important to remember that while the platform offers a wide range of features, some of the more advanced functionalities may require a higher-tier plan or additional costs.

BigCommerce also enforces certain design limitations on its themes, which may restrict the level of customization available without diving into custom coding.

Key Features:

- Full hosting solution.

- No software to self-install.

- Drag-and-drop visual builder.

- Extensions are available.

- Optimized for mobile.

- Blog module.

- Ecommerce store.

- Social media tools.

Pros:

- High level of customization options.

- Over 100 themes to choose from (including some free).

- No platform commission fees.

- Free subdomain available.

- Customer support.

Cons:

- No free version is available.

- No access to source code.

- Pricing is based on revenue, which isn’t great if you have tight margins.

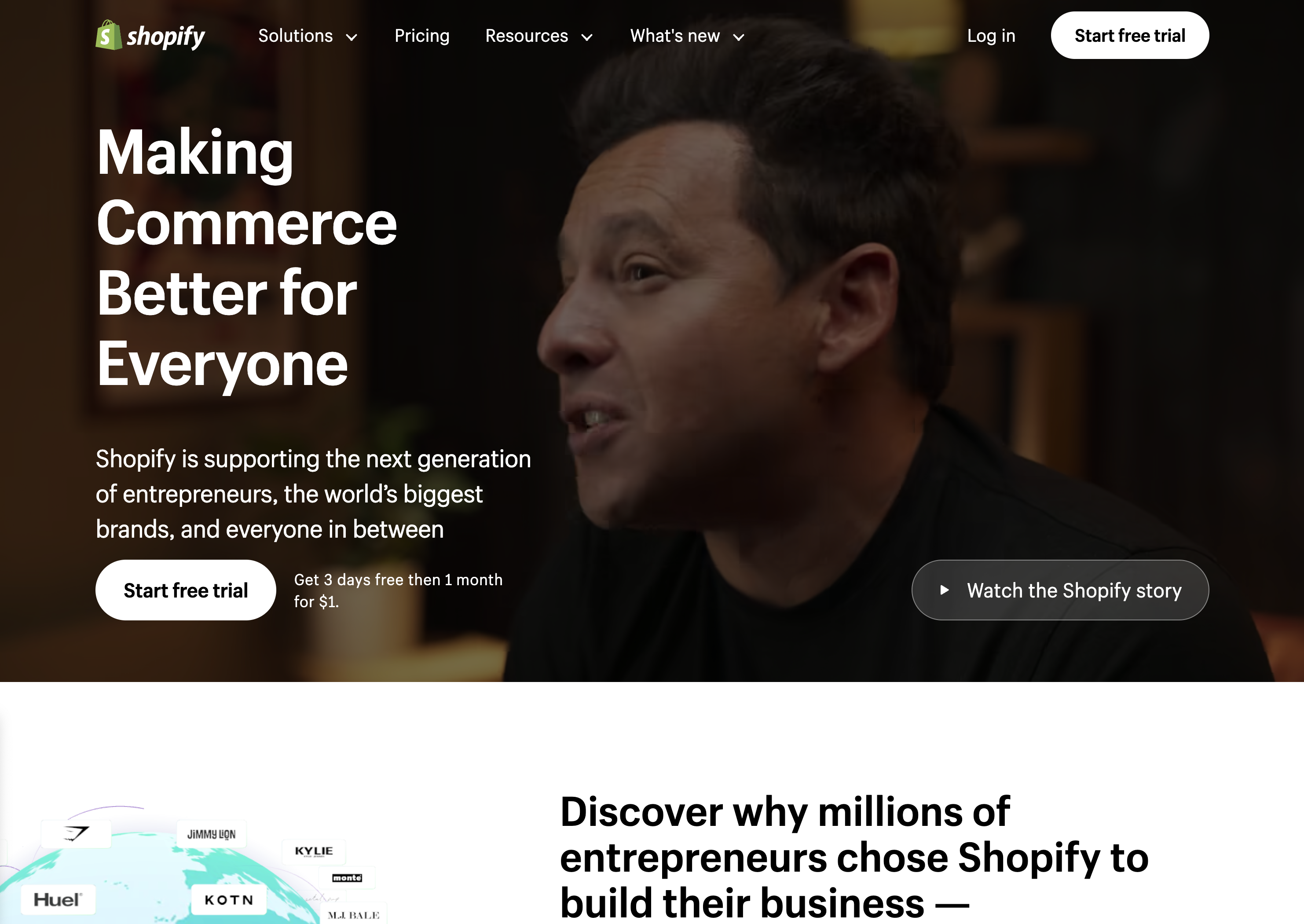

16. Shopify

Screenshot from: shopify.com, March 2024.

Shopify is an ecommerce platform well-suited for entrepreneurs and small business owners who want to establish an online presence quickly without extensive technical expertise.

Shopify’s extensive app marketplace allows businesses to extend its functionality and customize their online store to meet specific needs.

The platform also provides built-in features like inventory management, payment processing, and abandoned cart recovery to help streamline operations and optimize sales performance.

When using Shopify, consider the platform’s transaction fees, which vary depending on the payment gateway. Some advanced design changes may require HTML, CSS, and Liquid knowledge.

Despite these considerations, Shopify remains a top choice for businesses seeking a reliable, scalable, and feature-rich ecommerce platform.

Key Features:

- Full hosting solution.

- No software to self-install.

- Drag-and-drop visual builder.

- Extensions are available.

- Optimized for mobile.

- Blog module.

- Ecommerce store.

- Social media tools.

Pros:

- All-in-one website builder and platform.

- Premade designs and templates.

- Free subdomain available.

- Customer support.

Cons:

- No free version is available.

- No access to source code.

- Platform commission fees.

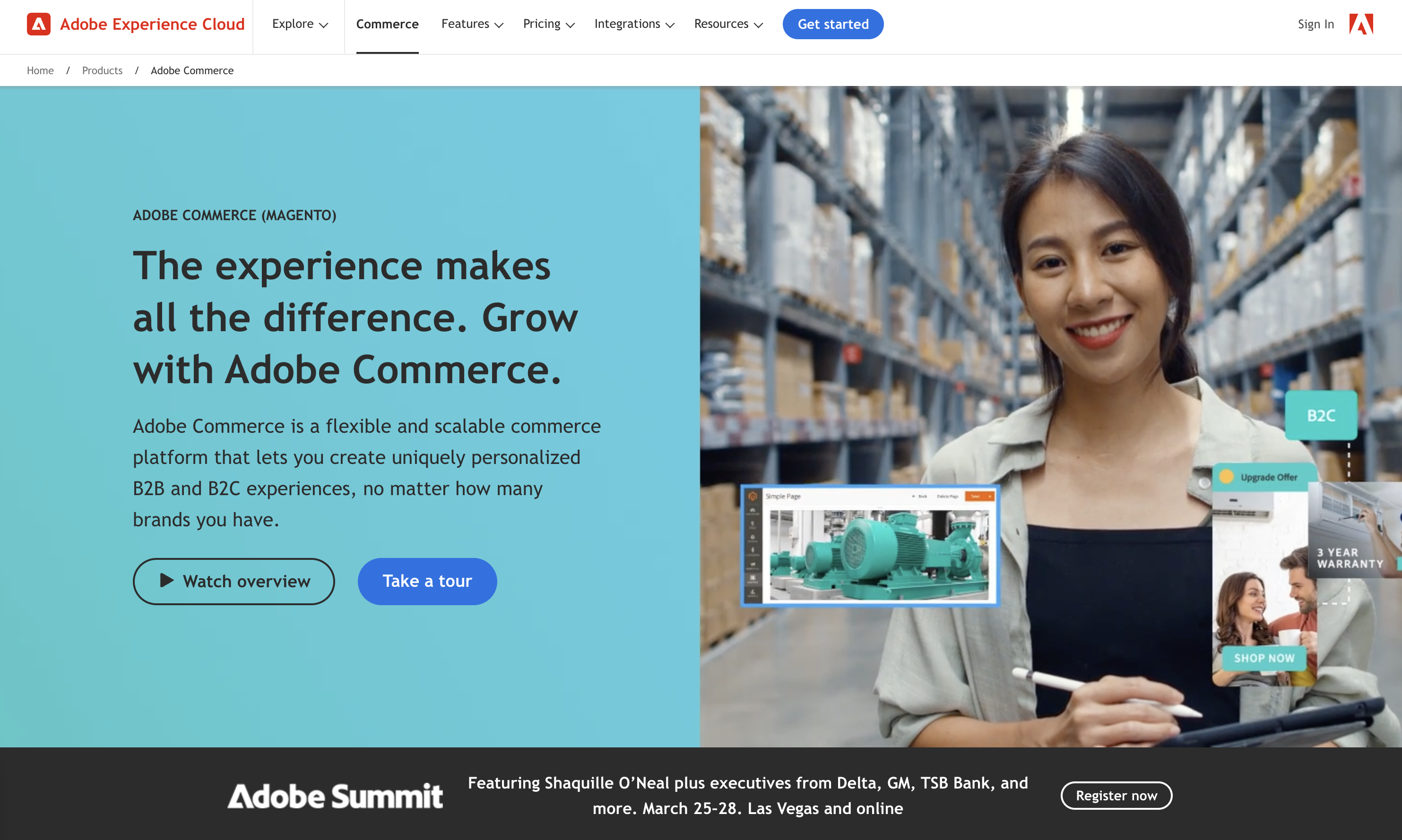

17. Magento

Screenshot from: business.adobe.com/products/magento/magento-commerce.html, March 2024

Magento is an open-source ecommerce platform best suited for medium to large enterprises with complex online selling needs.

Magento’s flexibility and scalability come with a steeper learning curve than other ecommerce platforms. It requires more technical expertise to set up, customize, and maintain, making it less suitable for small businesses or users without web development knowledge.

When using Magento, remember that its powerful features and customization options can impact website performance if not optimized properly.

Choosing a reliable hosting provider and working with experienced Magento developers is crucial for ensuring optimal store performance and security.

Key Features:

- Option to pay for Magento Commerce for a complete hosting platform or download the free, open-source software to install on your web server.

- Drag-and-drop visual builder.

- Extensions are available.

- Optimized for mobile.

- Ecommerce store.

- Social media tools.

Pros:

- All-in-one ecommerce platform or open-source ecommerce software package.

- Free version available.

- Designed for large-scale ecommerce.

- Premade designs and templates.

- Free subdomain available (mainly for setup and testing purposes).

- Customer support (paid version only).

- Access to source code with the downloadable version.

Cons:

- No blog module, although you can add it as an extension.

- Not optimized for web projects or website purposes outside of ecommerce.

- Steep learning curve for inexperienced users.

- A large investment for small-scale ecommerce.

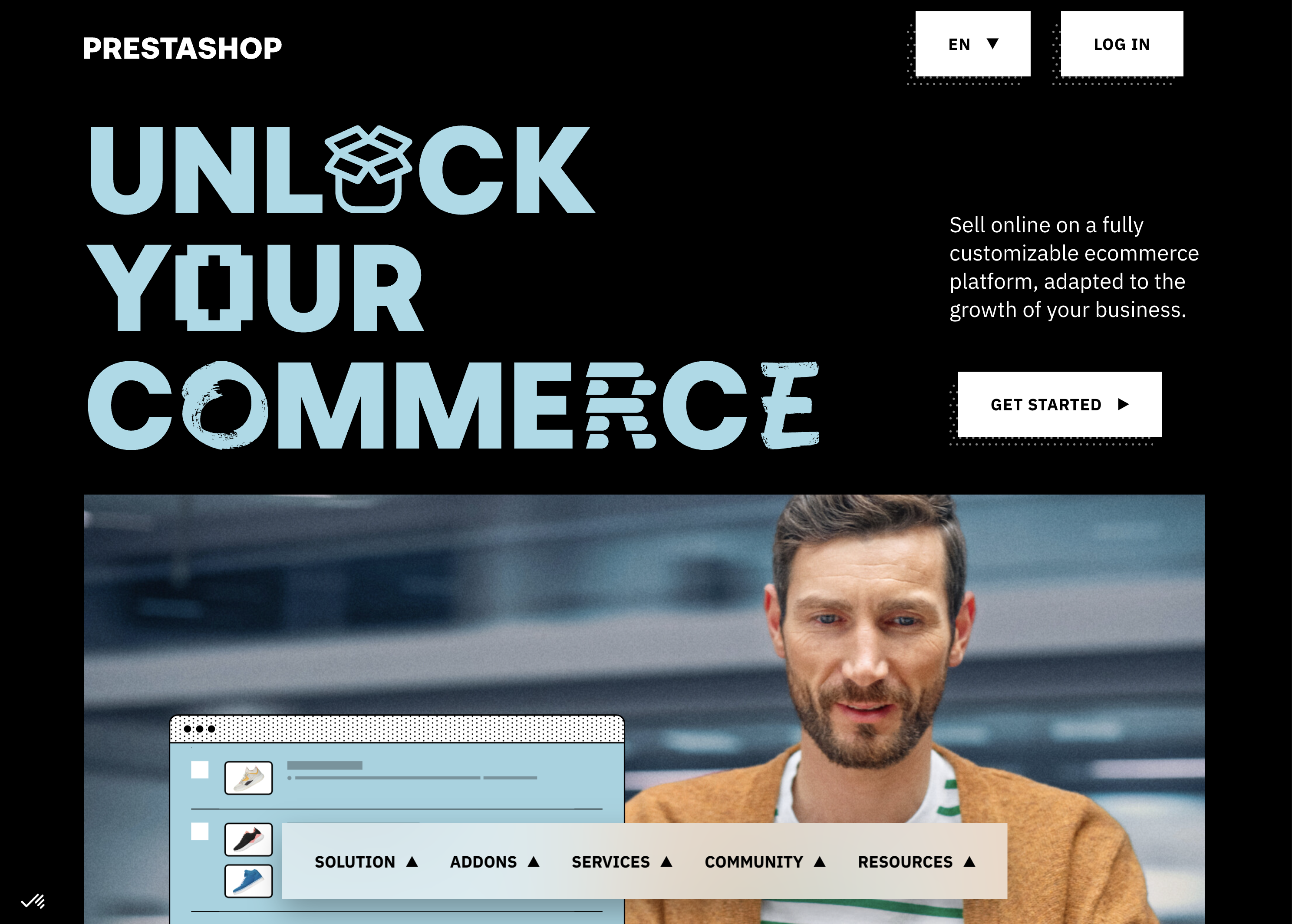

18. PrestaShop

Screenshot from: prestashop.com, March 2024.

PrestaShop is an open-source ecommerce platform best suited for small to medium-sized businesses looking for a cost-effective and customizable solution.

PrestaShop offers a wide range of themes and modules, allowing businesses to customize their online store’s appearance and functionality. The platform also has a strong community of developers and users, providing support and resources for store owners.

While PrestaShop is generally easy to use, some advanced customizations may require coding knowledge. Additionally, the number of installed modules can impact the platform’s performance.

Key Features:

- Customizable to your needs, including themes and features.

- Includes backend tools like payments, shipping, and data.

- Community of translators for multilanguage digital stores.

- Secure payment modules.

- Scalable.

- Includes demographic assistance.

Pros:

- Free version available.

- Open source so that you can customize your site to your needs.

- 5,000+ themes, modules, and services are available with the premium plan.

- Excellent user experience.

Cons:

- Limited scalability.

- No support team.

- Initial setup requires some programming knowledge.

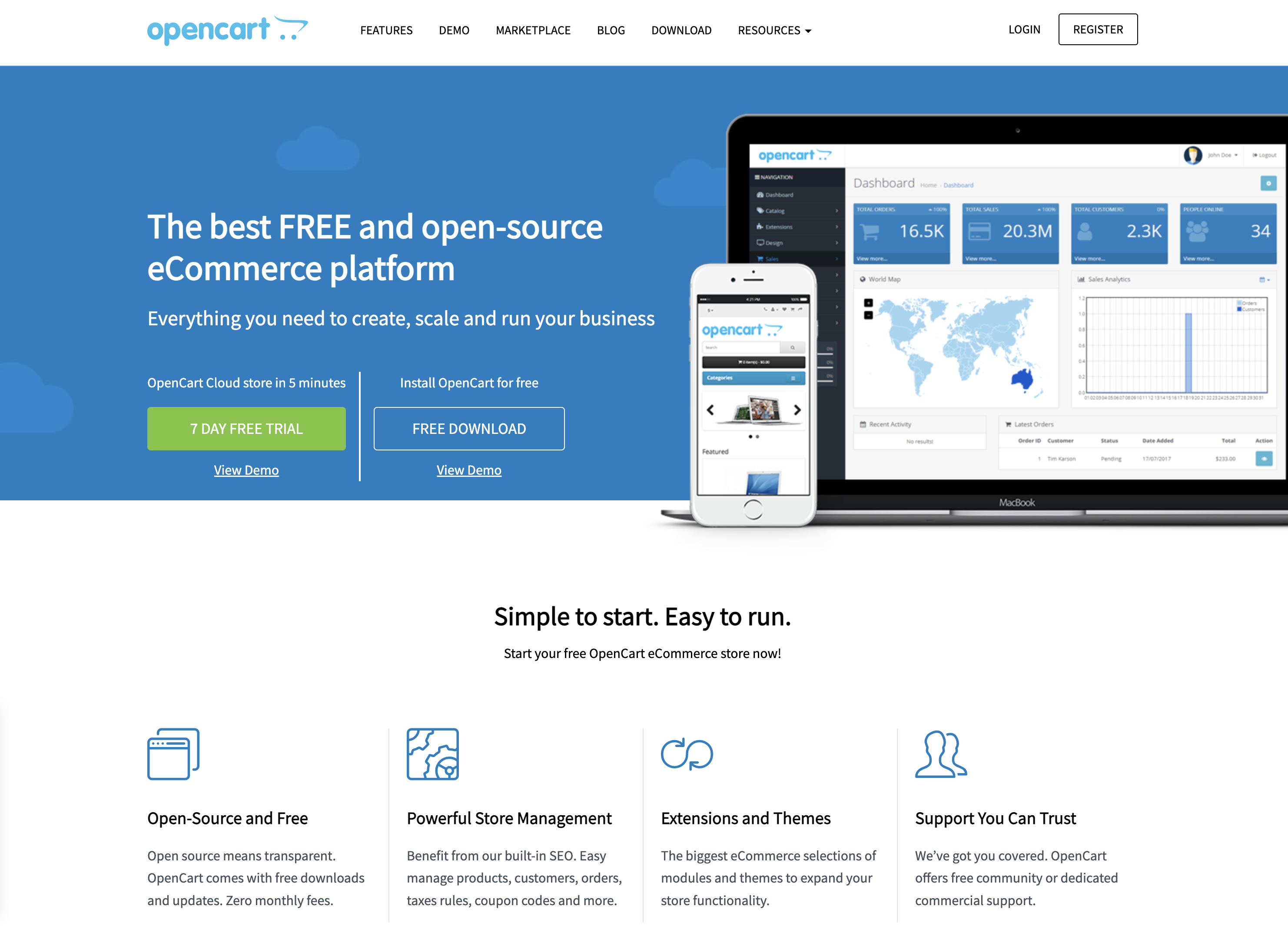

19. OpenCart

Screenshot from: opencart.com, March 2024.

OpenCart is a free, open-source ecommerce platform best suited for small to medium-sized businesses with limited budgets.

OpenCart offers a decent range of features and extensions, allowing businesses to customize their online store. However, its marketplace is smaller than other platforms, which may limit advanced functionality options.

When using OpenCart, be mindful of its performance limitations as the store grows. Optimizing and carefully selecting extensions may be required to maintain a smooth user experience.

Additionally, its simplicity may not be suitable for businesses with complex ecommerce needs.

Features:

- The administrator dashboard gives you information at a glance.

- User management allows you to assign permissions and separate access.

- Allows you to run multiple stores from one dashboard.

- Customizable variables let you include options for sizes, colors, or anything else.

Pros:

- The platform is entirely free, as are many add-ons.

- Extensive metrics and reports are provided.

- Works with your current payment gateway.

- Comes with dedicated technical support.

- Flexible.

Cons:

- Often creates duplicate pages, which can cause SEO problems.

- Not all extensions, modules, plugins, and add-ons work well together.

- Checkout can be slow, particularly if you have numerous plugins.

- Can be difficult to import a list of inventory.

- Requires some degree of technical ability for optimal use.

Blogging Platforms

20. Medium

Screenshot from: medium.com, March 2024.

Medium is a publishing platform best suited for individual bloggers, writers, and content creators who want to share their ideas and stories with a built-in audience.

Medium’s clean and minimalistic interface allows readers to concentrate on the content. The platform also offers a built-in social network, making it easy for writers to connect with readers and other creators.

However, this simplicity comes with limited customization options for branding and design.

When using Medium, it’s important to understand that the platform controls the distribution and monetization of content. While this can lead to increased exposure, it also means less control over the presentation and ownership of your content compared to self-hosted solutions.

Key Features:

- Full hosting solution.

- No software to self-install.

- Optimized for mobile.

- Blog module.

- Limited social media tools.

Pros:

- A community site for blogs.

- Free version available.

- Medium Partner Program to earn revenue.

- Customer support.

Cons:

- No extensions.

- No ecommerce stores.

- No premade designs or themes.

- No free subdomains.

- No third-party extensions.

- No access to source code.

21. Ghost

Screenshot from: ghost.org, March 2024.

Ghost is a lightweight, open-source publishing platform best suited for bloggers, writers, and small publications who value simplicity and performance. It’s designed for users who want a clean, focused writing experience without the complexity of more feature-rich CMS platforms.

Ghost offers a simple, intuitive editor and a minimalistic default theme, allowing users to create and publish content quickly.

The platform also provides built-in SEO features and supports memberships and subscriptions, making it a good choice for content creators looking to monetize their work.

As Ghost primarily focuses on publishing, it may not be the best fit for users who require extensive customization options or advanced functionality beyond blogging.

Key Features:

- You can subscribe through Ghost’s hosting platform or download the free, open-source software to install on your web server.

- Basic drag-and-drop visual builder.

- Extensions are available through integrations with other tools.

- Optimized for mobile.

- Blog module.

- Ecommerce store (subscription only).

- Social media tools.

Pros:

- All-in-one website builder and platform.

- Free version available.

- Premade designs and templates.

- Free subdomain available with the paid version.

- Customer support.

- Access to source code.

Cons:

- Not compatible with all third-party web hosts.

- Highly specialized with limited capabilities beyond blogging.

- Not built to scale up into a business site or complex website.

22. Tumblr

Screenshot from: tumblr.com, March 2024.

Tumblr is a microblogging and social networking platform best suited for younger audiences who enjoy sharing short-form multimedia content.

Tumblr’s emphasis on community and content discovery makes it easy for users to connect with others who share similar interests. The platform’s reblogging feature spreads content quickly, increasing visibility and engagement.

When using Tumblr, it’s important to understand the platform’s unique culture and demographics. Tumblr is known for its diverse, often niche communities, which can be both a strength and a challenge for brands and marketers.

Additionally, while Tumblr offers some customization options, it may not be the best choice for users who require a highly professional or branded online presence.

Key Features:

- Features strong social media functionality.

- Customizable.

- Google Analytics Integration.

- Unlimited storage.

- Ad-free blog themes.

- Free SSL certification.

Pros:

- Free to use; no upgrades are required to access all features.

- Free web hosting.

- User-friendly and easy to set up.

- No storage limits.

- Can post audio, video, images, gifs, and more.

Cons:

- Daily posting limit (250/day).

- Files must be under 10 MB.

- No plugins.

- Safety and security leave something to be desired.

- Unsuited to long-form content.

23. Bluehost

Screenshot from: bluehost.com, March 2024.

Bluehost is a web hosting provider best suited for beginners and small businesses looking for an affordable, easy-to-use hosting solution.

Bluehost’s advantages are its user-friendly interface and one-click installations for popular CMS platforms like WordPress.

This makes it easy for users with limited technical knowledge to set up and manage their websites. Bluehost also provides 24/7 customer support and a free SSL certificate with each hosting plan.

While Bluehost is known for its reliability and performance, it may not be the best choice for websites with high traffic or complex requirements. Some users have reported issues with slow loading speeds and limited storage space on shared hosting plans.

Key Features:

- Domain names can be purchased through Bluehost.

- Versatile hosting options let you choose what works best for you.

- Dedicated servers and virtual private servers are available.

- A variety of plans are available based on your needs.

- Comes with customer service chat options.

Pros:

- The first term is inexpensive.

- Lots of storage and unlimited bandwidth.

- Good uptime.

- Free SSL certificates.

Cons:

- Extra features come with added costs, which can get pricey.

- High renewal rates.

- Speed could be better.

- All servers are U.S.-based.

24. Blogger

Screenshot from: blogger.com, March 2024.

Blogger is a free, beginner-friendly blogging platform best suited for hobbyists, casual bloggers, and those who want to start a blog without investing in a self-hosted solution. It’s ideal for users who prioritize simplicity and ease of use over advanced customization options.

Blogger offers a straightforward, intuitive interface that makes it easy for users to create and publish blog posts.

The platform provides a selection of customizable templates and allows users to add gadgets and widgets to enhance their blog’s functionality. However, compared with other blogging platforms, Blogger’s design and customization options are relatively limited.

Blogger’s simplicity and lack of advanced features may make it unsuitable for professional bloggers or those looking to create a more sophisticated online presence.

Features:

- Clear analytics.

- Included layout/themes.

- Monetization options, including Google Adsense integration.

- Uses Google security.

- Unlimited storage.

Pros:

- Free to use.

- Extremely user-friendly.

- Free SSL security.

- Good uptime.

Cons:

- You don’t own your website.

- Fewer options and control over design.

- Limited support.

- Hard to port to a different platform.

Community Management

25. vBulletin

vBulletin is a proprietary forum software best suited for businesses, organizations, and communities looking to create and manage an online discussion platform.

vBulletin offers many features, including private messaging, user groups, and content management tools, making it a powerful solution for managing large, active communities.

The platform also provides a high level of customization, allowing administrators to tailor the look and feel of their forum to match their brand or website.

One of the primary considerations when using vBulletin is its licensing cost, which can be a significant investment for some users.

Additionally, while vBulletin offers a range of customization options, some technical knowledge may be required to fully optimize and maintain the platform.

Key Features:

- Built-in SEO and security.

- Includes a chat app.

- Easy to get started.

- Built-in applications.

- Optimized for mobile users.

- Blogging functionality.

- Fully customizable.

Pros:

- Frequent patches and bug fixes.

- Customer support.

- Easy to install and get started.

- Designed to host forums.

- Includes templates.

Cons:

- No free option.

- Limited features compared to some other platforms.

- Requires some tech skills to take full advantage of the functionality.

- You can’t customize code in the cloud-based version.

Which One Is Right For You?

With so many options, determining the right alternative to WordPress depends on your specific needs and goals.

For individuals and small businesses seeking an easy-to-use website builder, Wix, Squarespace, or Weebly offer intuitive drag-and-drop interfaces. Those prioritizing simplicity and speed may prefer static site generators like Jekyll or Hugo.

Developers and tech-savvy users who value flexibility and customization can explore headless CMS options like Contentful or more robust open-source platforms like Joomla and Drupal.

Ecommerce merchants must evaluate features like inventory management, payment processing, and scalability when choosing between Shopify, BigCommerce, WooCommerce, and others.

No matter your requirements, there is likely a WordPress alternative that is well-suited to your needs. Thoroughly assessing your website goals, budget, and technical abilities will help you select the right platform to build your ideal online presence.

With some research and planning, you can find the perfect alternative to take your website beyond what WordPress offers.

Featured Image: GaudiLab/Shutterstock

SEO

2024 WordPress Vulnerability Report Shows Errors Sites Keep Making

WordPress security scanner WPScan’s 2024 WordPress vulnerability report calls attention to WordPress vulnerability trends and suggests the kinds of things website publishers (and SEOs) should be looking out for.

Some of the key findings from the report were that just over 20% of vulnerabilities were rated as high or critical level threats, with medium severity threats, at 67% of reported vulnerabilities, making up the majority. Many treat medium level vulnerabilities as if they were low-level threats. That’s a mistake: they are not low level and deserve attention.

The WPScan report advised:

“While severity doesn’t translate directly to the risk of exploitation, it’s an important guideline for website owners to make an educated decision about when to disable or update the extension.”

WordPress Vulnerability Severity Distribution

Critical level vulnerabilities, the highest level of threat, represented only 2.38% of vulnerabilities, which is essentially good news for WordPress publishers. Yet, as mentioned earlier, when combined with the percentage of high level threats (17.68%), the number of concerning vulnerabilities rises to just over 20%.

Here are the percentages by severity ratings:

- Critical 2.38%

- Low 12.83%

- High 17.68%

- Medium 67.12%
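The combined high-plus-critical share can be checked with a few lines of Python. This is only an arithmetic sketch using the percentages listed above; the variable names are illustrative and do not come from the WPScan report.

```python
# Severity shares as listed above (percent of reported vulnerabilities).
severity_share = {
    "Critical": 2.38,
    "Low": 12.83,
    "High": 17.68,
    "Medium": 67.12,
}

# Share of vulnerabilities rated high or critical.
high_or_critical = round(severity_share["High"] + severity_share["Critical"], 2)

# Sanity check: the four shares should add up to roughly 100%
# (small deviations come from rounding in the published figures).
total = round(sum(severity_share.values()), 2)

print(f"High or critical: {high_or_critical}%")
print(f"All severities combined: {total}%")
```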

Authenticated Versus Unauthenticated

Authenticated vulnerabilities are those that require an attacker to first attain user credentials and their accompanying permission levels in order to exploit a particular vulnerability. Exploits that require subscriber-level authentication are the most exploitable of the authenticated exploits and those that require administrator level access present the least risk (although not always a low risk for a variety of reasons).

Unauthenticated attacks are generally the easiest to exploit because anyone can launch an attack without having to first acquire a user credential.

The WPScan vulnerability report found that about 22% of reported vulnerabilities required subscriber level or no authentication at all, representing the most exploitable vulnerabilities. At the other end of the exploitability scale are vulnerabilities requiring admin permission levels, representing a total of 30.71% of reported vulnerabilities.

Permission Levels Required For Exploits

Vulnerabilities requiring administrator level credentials represented the highest percentage of exploits, followed by Cross Site Request Forgery (CSRF) with 24.74% of vulnerabilities. This is interesting because CSRF is an attack that uses social engineering to get a victim to click a link from which the user’s permission levels are acquired. WordPress publishers should be aware of this risk because all it takes is for an admin level user to follow a link, which then enables the hacker to assume admin level privileges on the WordPress website.

The following are the percentages of exploits, ordered by the role necessary to launch an attack.

Ascending Order Of User Roles For Vulnerabilities

- Author 2.19%

- Subscriber 10.4%

- Unauthenticated 12.35%

- Contributor 19.62%

- CSRF 24.74%

- Admin 30.71%
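To see how the low-barrier roles add up, the list above can be grouped and ranked in Python. This is a minimal sketch over the listed percentages only; the dictionary and variable names are hypothetical.

```python
# Share of reported vulnerabilities by the role needed to exploit them (percent).
role_share = {
    "Author": 2.19,
    "Subscriber": 10.4,
    "Unauthenticated": 12.35,
    "Contributor": 19.62,
    "CSRF": 24.74,
    "Admin": 30.71,
}

# Vulnerabilities that need only a subscriber account or no account at all.
lowest_barrier = round(role_share["Subscriber"] + role_share["Unauthenticated"], 2)

# Roles ordered from the largest share of vulnerabilities to the smallest.
ranked = sorted(role_share, key=role_share.get, reverse=True)

print(f"Subscriber or unauthenticated: {lowest_barrier}%")
print("Largest to smallest:", ", ".join(ranked))
```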

Most Common Vulnerability Types Requiring Minimal Authentication

Broken Access Control in the context of WordPress refers to a security failure that can allow an attacker without the necessary permissions to gain access to higher permission levels.

In the section of the report titled “Occurrence vs Vulnerability on Unauthenticated or Subscriber+ reports,” WPScan breaks down the most common vulnerability types among the exploits that are easiest to launch, meaning those that require minimal or no user authentication.

The WPScan threat report noted that Broken Access Control represents a whopping 84.99% followed by SQL injection (20.64%).

The Open Worldwide Application Security Project (OWASP) defines Broken Access Control as:

“Access control, sometimes called authorization, is how a web application grants access to content and functions to some users and not others. These checks are performed after authentication, and govern what ‘authorized’ users are allowed to do.

Access control sounds like a simple problem but is insidiously difficult to implement correctly. A web application’s access control model is closely tied to the content and functions that the site provides. In addition, the users may fall into a number of groups or roles with different abilities or privileges.”

SQL injection, at 20.64%, represents the second most prevalent type of vulnerability, which WPScan referred to as both “high severity and risk” in the context of vulnerabilities requiring minimal authentication levels, because attackers can access and/or tamper with the database, which is the heart of every WordPress website.

These are the percentages:

- Broken Access Control 84.99%

- SQL Injection 20.64%

- Cross-Site Scripting 9.4%

- Unauthenticated Arbitrary File Upload 5.28%

- Sensitive Data Disclosure 4.59%

- Insecure Direct Object Reference (IDOR) 3.67%

- Remote Code Execution 2.52%

- Other 14.45%

Vulnerabilities In The WordPress Core Itself

The overwhelming majority of vulnerability issues were reported in third-party plugins and themes. However, a total of 13 vulnerabilities were reported in the WordPress core itself in 2023. Of those thirteen, only one was rated as a high severity threat, the second-highest level under the Common Vulnerability Scoring System (CVSS), with Critical being the highest.

The WordPress core platform itself is held to the highest standards and benefits from a worldwide community that is vigilant in discovering and patching vulnerabilities.

Website Security Should Be Considered As Technical SEO

Site audits don’t normally cover website security, but in my opinion every responsible audit should at least talk about security headers. As I’ve been saying for years, website security quickly becomes an SEO issue once a website’s rankings start disappearing from the search engine results pages (SERPs) due to being compromised by a vulnerability. That’s why it’s critical to be proactive about website security.

According to the WPScan report, the main points of entry for hacked websites were leaked credentials and weak passwords. Ensuring strong password standards plus two-factor authentication is an important part of every website’s security stance.

Using security headers is another way to help protect against Cross-Site Scripting and other kinds of vulnerabilities.
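As a rough illustration of what an audit could check, the sketch below reports which commonly recommended security headers are missing from a response. The header list is an assumption chosen for illustration, not a list taken from the WPScan report, and `missing_security_headers` is a hypothetical helper name.

```python
# Illustrative list of widely used security headers (an assumption,
# not an exhaustive or report-sourced list).
RECOMMENDED_HEADERS = (
    "Content-Security-Policy",
    "Strict-Transport-Security",
    "X-Content-Type-Options",
    "X-Frame-Options",
    "Referrer-Policy",
)


def missing_security_headers(response_headers):
    """Return the recommended headers absent from a header mapping.

    Header names are compared case-insensitively, as HTTP requires.
    """
    present = {name.lower() for name in response_headers}
    return [h for h in RECOMMENDED_HEADERS if h.lower() not in present]


# Hand-written sample headers, not captured from a real site.
sample = {
    "content-type": "text/html",
    "x-frame-options": "SAMEORIGIN",
    "Strict-Transport-Security": "max-age=31536000",
}
missing = missing_security_headers(sample)
```

The header mapping can come from whichever HTTP client you already use. A missing header is a prompt for a conversation with your developers, not proof of a vulnerability.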

Lastly, a WordPress firewall and website hardening are also useful proactive approaches to website security. I once added a forum to a brand new website I created, and it was under attack within minutes. Believe it or not, virtually every website worldwide is under attack 24 hours a day by bots scanning for vulnerabilities.

Read the WPScan Report:

WPScan 2024 Website Threat Report

Featured Image by Shutterstock/Ljupco Smokovski

SEO

An In-Depth Guide And Best Practices For Mobile SEO

Over the years, search engines have encouraged businesses to improve mobile experience on their websites. More than 60% of web traffic comes from mobile, and in some cases based on the industry, mobile traffic can reach up to 90%.

Since Google has completed its switch to mobile-first indexing, the question is no longer “if” your website should be optimized for mobile, but how well it is adapted to meet these criteria. A new challenge has emerged for SEO professionals with the introduction of Interaction to Next Paint (INP), which replaced First Input Delay (FID) on March 12, 2024.

Thus, understanding mobile SEO’s latest advancements, especially with the shift to INP, is crucial. This guide offers practical steps to optimize your site effectively for today’s mobile-focused SEO requirements.

What Is Mobile SEO And Why Is It Important?

The goal of mobile SEO is to optimize your website to attain better visibility in search engine results specifically tailored for mobile devices.

This form of SEO not only aims to boost search engine rankings, but also prioritizes enhancing mobile user experience through both content and technology.

While, in many ways, mobile SEO and traditional SEO share similar practices, additional steps related to site rendering and content are required to meet the needs of mobile users and the speed requirements of mobile devices.

Does this need to be a priority for your website? How urgent is it?

Consider this: 58% of the world’s web traffic comes from mobile devices.

If you aren’t focused on mobile users, there is a good chance you’re missing out on a tremendous amount of traffic.

Mobile-First Indexing

Additionally, as of 2023, Google has switched its crawlers to a mobile-first indexing priority.

This means that the mobile experience of your site is critical to maintaining efficient indexing, which is the step before ranking algorithms come into play.

Read more: Where We Are Today With Google’s Mobile-First Index

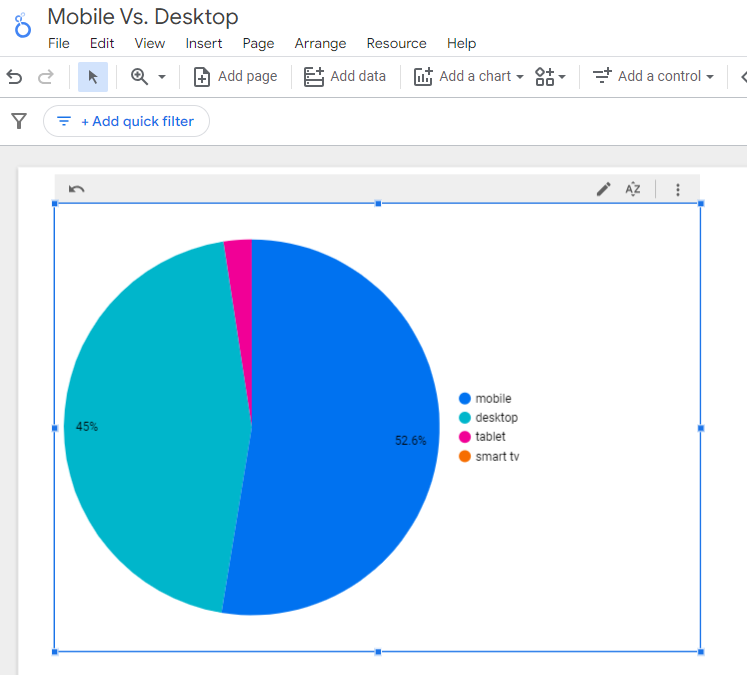

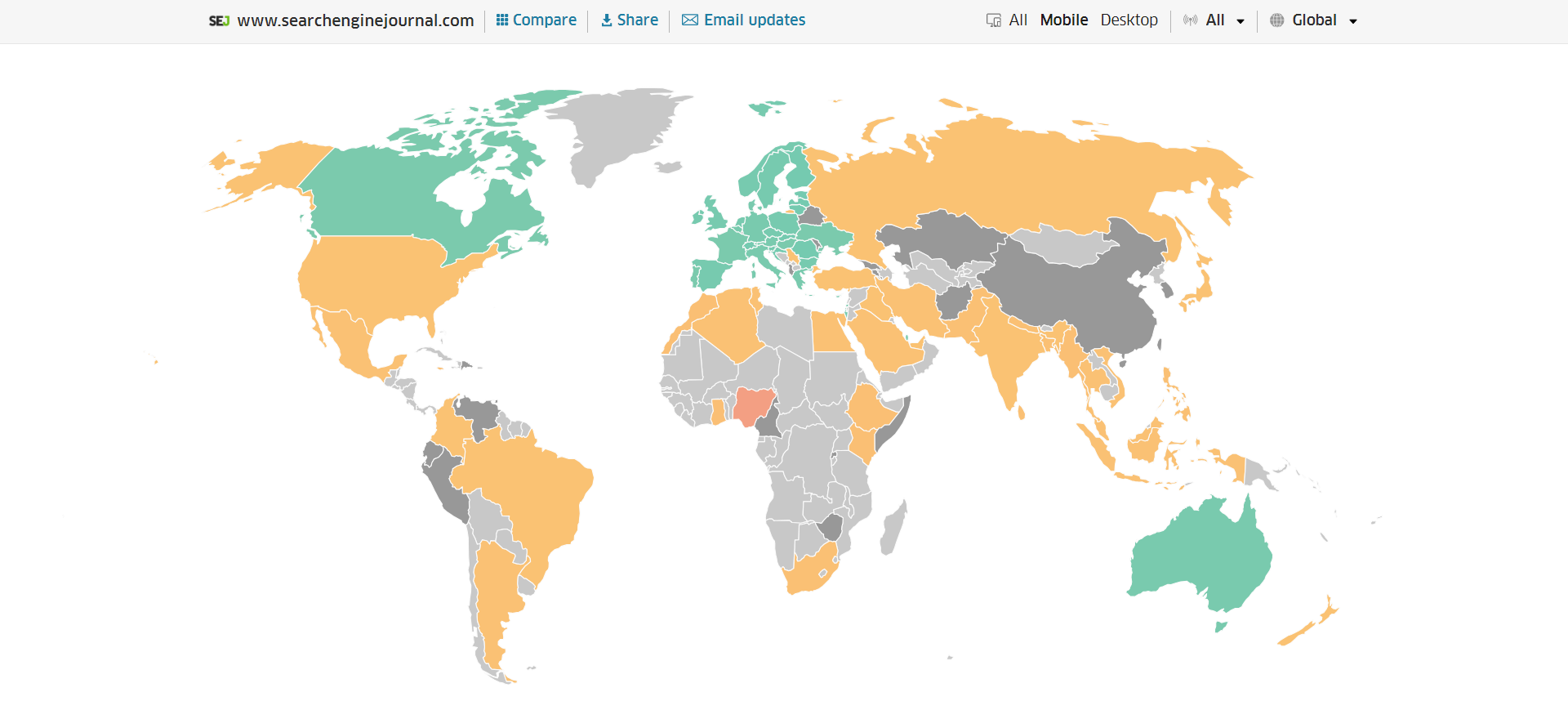

How Much Of Your Traffic Is From Mobile?

How much traffic potential you have with mobile users can depend on various factors, including your industry (B2B sites might attract primarily desktop users, for example) and the search intent your content addresses (users might prefer desktop for larger purchases, for example).

Regardless of your industry and the search intent of your users, the future will demand that you optimize your site experience for mobile devices.

How can you assess your current mix of mobile vs. desktop users?

An easy way to see what percentage of your users is on mobile is to go into Google Analytics 4.

- Click Reports in the left column.

- Click on the Insights icon on the right side of the screen.

- Scroll down to Suggested Questions and click on it.

- Click on Technology.

- Click on Top Device model by Users.

- Then click on Top Device category by Users under Related Results.

- The breakdown of Top Device category will match the date range selected at the top of GA4.

You can also set up a report in Looker Studio.

- Add your site to the Data source.

- Add Device category to the Dimension field.

- Add 30-day active users to the Metric field.

- Click on Chart to select the view that works best for you.

Screenshot from Looker Studio, March 2024

You can add more Dimensions to really dig into the data to see which pages attract which type of users, what the mobile-to-desktop mix is by country, which search engines send the most mobile users, and so much more.
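If you export the device category and active users columns, the same breakdown can be computed in a few lines of Python. This is a minimal sketch: the row format, the `device_share` helper, and the sample numbers are all assumptions made up for illustration, not real GA4 output.

```python
from collections import defaultdict


def device_share(rows):
    """Return each device category's share of active users, in percent.

    rows: iterable of (device_category, active_users) pairs.
    """
    totals = defaultdict(int)
    for category, users in rows:
        totals[category] += users
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {c: round(100 * u / grand_total, 1) for c, u in totals.items()}


# Made-up sample data for illustration only.
sample_rows = [("mobile", 6200), ("desktop", 3300), ("tablet", 500)]
shares = device_share(sample_rows)
# shares == {"mobile": 62.0, "desktop": 33.0, "tablet": 5.0}
```

Because the helper sums repeated categories, you can feed it rows that are split by page or by country and still get the overall mix.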

Read more: Why Mobile And Desktop Rankings Are Different

How To Check If Your Site Is Mobile-Friendly

Now that you know how to build a report on mobile and desktop usage, you need to figure out if your site is optimized for mobile traffic.

While Google removed the mobile-friendly testing tool from Google Search Console in December 2023, there are still a number of useful tools for evaluating your site for mobile users.

Bing still has a mobile-friendly testing tool that will tell you the following:

- Viewport is configured correctly.

- Page content fits device width.

- Text on the page is readable.

- Links and tap targets are sufficiently large and touch-friendly.

- Any other issues detected.

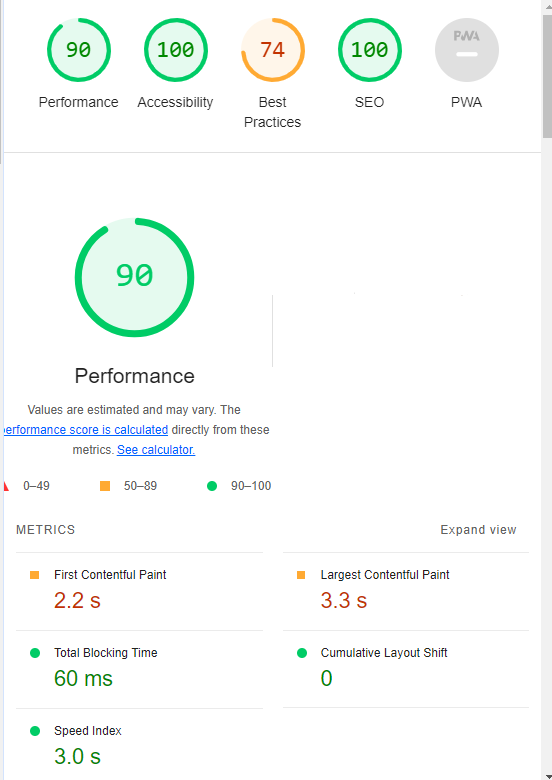
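The first item on that list, the viewport configuration, is easy to spot-check yourself. The sketch below looks for a viewport meta tag that sets `width=device-width`. It only approximates one of the checks above and is not how Bing's tool works; `ViewportFinder` and `has_responsive_viewport` are hypothetical names.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Record the content of the last <meta name="viewport"> tag seen."""

    def __init__(self):
        super().__init__()
        self.content = None

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "meta" and (attr_map.get("name") or "").lower() == "viewport":
            self.content = attr_map.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """Return True if the page declares width=device-width in its viewport."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.content is None:
        return False
    directives = {part.strip().lower() for part in finder.content.split(",")}
    return "width=device-width" in directives


page = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
ok = has_responsive_viewport(page)
```

A page that fails this check is likely to render at desktop width on phones, which is usually the first thing to fix.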

Google’s Lighthouse Chrome extension provides you with an evaluation of your site’s performance across several factors, including load times, accessibility, and SEO.

To use, install the Lighthouse Chrome extension.

- Go to your website in your browser.

- Click on the orange lighthouse icon in your browser’s address bar.

- Click Generate Report.

- A new tab will open and display your scores once the evaluation is complete.

Screenshot from Lighthouse, March 2024

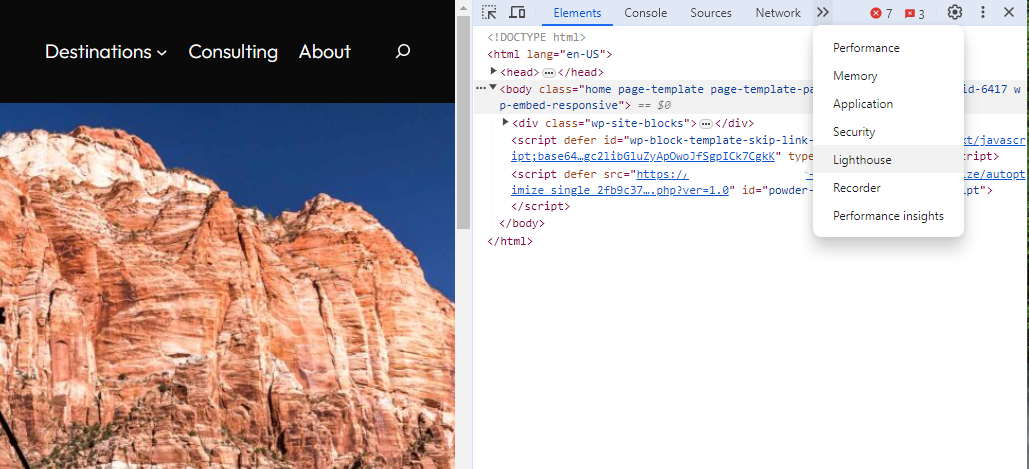

You can also use the Lighthouse report in Developer Tools in Chrome.

- Simply click on the three dots next to the address bar.

- Select “More Tools.”

- Select Developer Tools.

- Click on the Lighthouse tab.

- Choose “Mobile” and click the “Analyze page load” button.

Screenshot from Lighthouse, March 2024

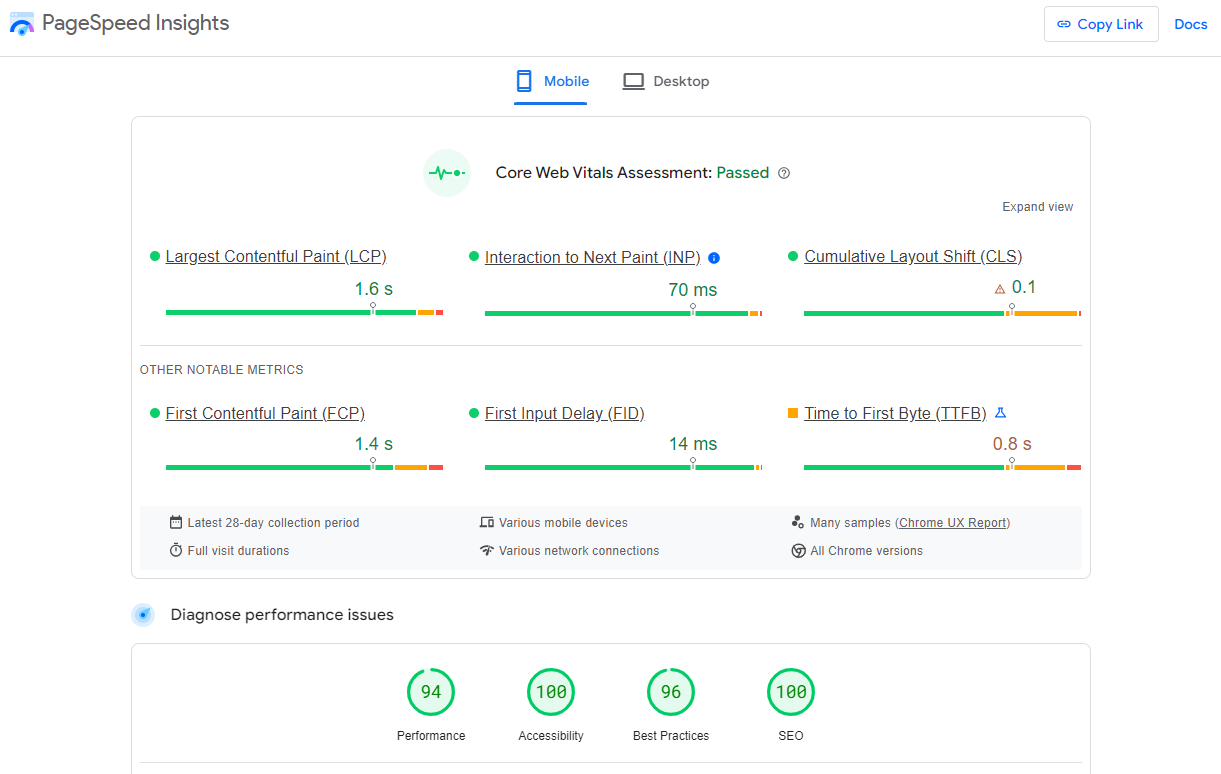

Another option that Google offers is the PageSpeed Insights (PSI) tool. Simply add your URL into the field and click Analyze.

PSI will integrate any Core Web Vitals scores into the resulting view so you can see what your users are experiencing when they come to your site.
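PSI is also available as an API, which helps when you want to check many URLs. The sketch below builds a request URL for the PageSpeed Insights v5 endpoint with the mobile strategy and pulls the field metrics out of a response. The response keys shown (`loadingExperience`, `metrics`, `percentile`, `category`) reflect my understanding of the v5 response format and should be verified against the official documentation. The sample response is hand-written, not real data.

```python
from urllib.parse import urlencode

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(page_url, strategy="mobile"):
    """Build a PSI API request URL for the given page and strategy."""
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})


def summarize_field_data(psi_response):
    """Return {metric_name: (percentile, category)} from a parsed PSI response."""
    metrics = psi_response.get("loadingExperience", {}).get("metrics", {})
    return {
        name: (data.get("percentile"), data.get("category"))
        for name, data in metrics.items()
    }


# Hand-written sample shaped like a PSI response (assumed keys, made-up values).
sample_response = {
    "loadingExperience": {
        "metrics": {
            "INTERACTION_TO_NEXT_PAINT": {"percentile": 180, "category": "FAST"},
        }
    }
}
request_url = build_psi_url("https://example.com/")
summary = summarize_field_data(sample_response)
```

The request URL can be fetched with any HTTP client. Heavier usage typically requires an API key, which is left out of this sketch.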

Screenshot from PageSpeed Insights, March 2024

Other tools, like WebPageTest.org, will graphically display the processes and load times for everything it takes to display your webpages.

With this information, you can see which processes block the loading of your pages, which ones take the longest to load, and how this affects your overall page load times.

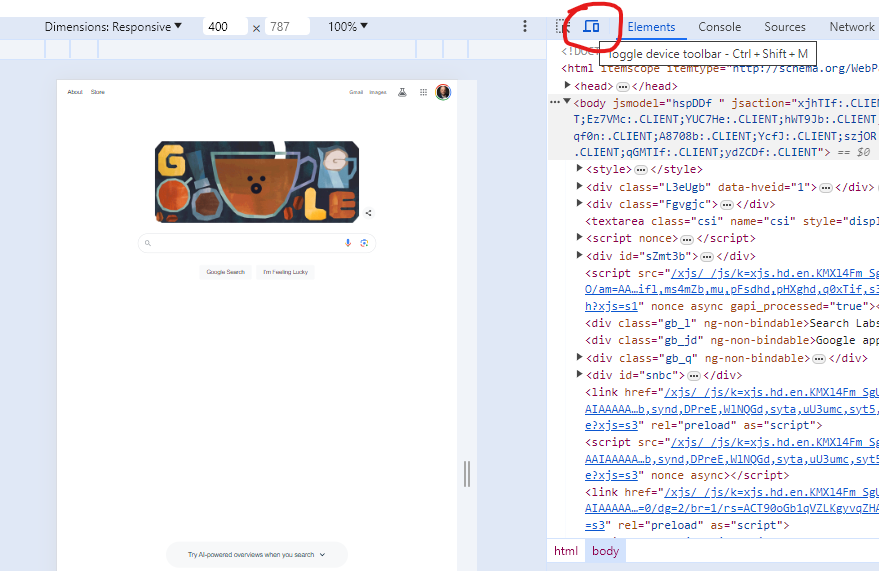

You can also emulate the mobile experience by using Developer Tools in Chrome, which allows you to switch back and forth between a desktop and mobile experience.

Screenshot from Google Chrome Developer Tools, March 2024

Lastly, use your own mobile device to load and navigate your website:

- Does it take forever to load?

- Are you able to navigate your site to find the most important information?

- Is it easy to add something to cart?

- Can you read the text?

Read more: Google PageSpeed Insights Reports: A Technical Guide

How To Optimize Your Site Mobile-First

With all these tools, keep an eye on the Performance and Accessibility scores, as these directly affect mobile users.

Expand each section within the PageSpeed Insights report to see what elements are affecting your score.

These sections can give your developers their marching orders for optimizing the mobile experience.

While mobile speeds for cellular networks have steadily improved around the world (the average speed in the U.S. has jumped to 27.06 Mbps from 11.14 Mbps in just eight years), speed and usability for mobile users are at a premium.

Read more: Top 7 SEO Benefits Of Responsive Web Design

Best Practices For Mobile Optimization

Unlike traditional SEO, which can focus heavily on ensuring that you are using the language of your users as it relates to the intersection of your products/services and their needs, mobile SEO can seem heavy on technical SEO.

While you still need to be focused on matching your content with the needs of the user, mobile search optimization will require the aid of your developers and designers to be fully effective.

Below are several key factors in mobile SEO to keep in mind as you’re optimizing your site.

Site Rendering

How your site responds to different devices is one of the most important elements in mobile SEO.

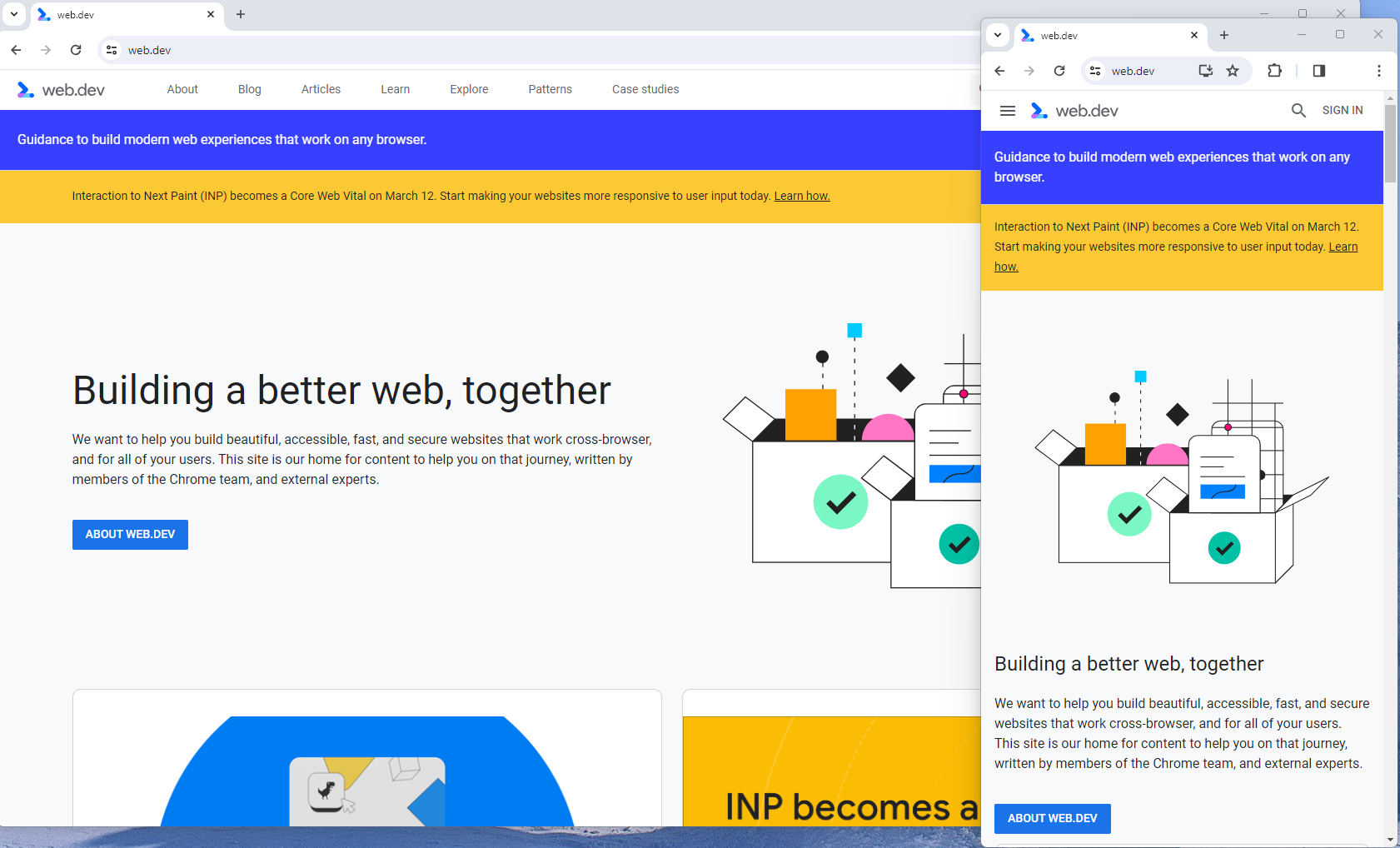

The two most common approaches to this are responsive design and dynamic serving.

Responsive design is the most common of the two options.

Using your site’s cascading style sheets (CSS) and flexible layouts, as well as responsive content delivery networks (CDN) and modern image file types, responsive design allows your site to adjust to a variety of screen sizes, orientations, and resolutions.

With the responsive design, elements on the page adjust in size and location based on the size of the screen.

You can simply resize the window of your desktop browser and see how this works.

Screenshot from web.dev, March 2024

This is the approach that Google recommends.

Adaptive design, also known as dynamic serving, consists of multiple fixed layouts that are dynamically served to the user based on their device.

Sites can have a separate layout for desktop, smartphone, and tablet users. Each design can be modified to remove functionality that may not make sense for certain device types.

This is a less efficient approach, but it does give sites more control over what each device sees.
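To show what “dynamically served” means in practice, here is a minimal sketch of the server-side decision. It picks one of three fixed layouts from the User-Agent string and adds a `Vary: User-Agent` response header, so caches know the response differs by device. The patterns are deliberately crude assumptions (for example, they treat Android tablets as desktop), and `choose_layout` is a hypothetical helper, not part of any framework.

```python
import re

# Crude, illustrative patterns; real device detection is more involved.
TABLET_PATTERN = re.compile(r"iPad|Tablet", re.IGNORECASE)
MOBILE_PATTERN = re.compile(r"Mobi|iPhone", re.IGNORECASE)


def choose_layout(user_agent):
    """Return (layout_name, response_headers) for a User-Agent string."""
    # Tablets are checked first because some tablet UAs also contain "Mobile".
    if TABLET_PATTERN.search(user_agent):
        layout = "tablet"
    elif MOBILE_PATTERN.search(user_agent):
        layout = "smartphone"
    else:
        layout = "desktop"
    # Signal to caches and crawlers that the response varies by device.
    headers = {"Vary": "User-Agent"}
    return layout, headers


layout, headers = choose_layout(
    "Mozilla/5.0 (iPhone; CPU iPhone OS 17_0 like Mac OS X) Mobile/15E148"
)
```

The fragility of matching on User-Agent strings is part of why this approach takes more maintenance than responsive design.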

While these will not be covered here, there are two other options:

- Progressive Web Apps (PWA), which can seamlessly integrate into a mobile app.

- Separate mobile site/URL (which is no longer recommended).

Read more: An Introduction To Rendering For SEO

Interaction to Next Paint (INP)