TECHNOLOGY

The Dark Side of the Internet of Bodies

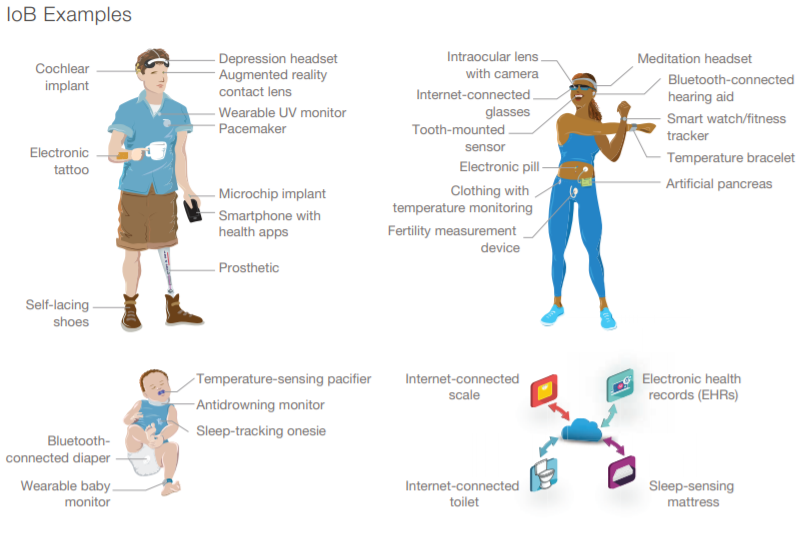

The Internet of Bodies (IoB) refers to devices that are worn on or implanted in the body and connected to the internet.

IoB devices range from popular consumer gadgets such as smartwatches and fitness trackers to medical devices such as pacemakers and insulin pumps. They are designed to improve our health and well-being.

The main purpose of IoB devices is to monitor bodily functions continuously and provide real-time data to healthcare professionals. This can be especially valuable for people with chronic health conditions, enabling early detection of potential problems and more effective treatment. However, it also introduces new security risks: because these devices communicate wirelessly and are often linked to the internet, they can be vulnerable to hacking. Attackers could potentially access sensitive personal information or even take control of a device’s functions, putting the user at risk of harm.

The IoB is part of the wider Internet of Things (IoT), which is becoming more prevalent in our daily lives and now connects everything from our homes to our cars. As the number of connected devices grows, so does the opportunity for malicious actors to exploit them. IoB devices are a particularly concerning target: they are meant to improve our health, but they also give hackers a potential route to both our personal information and our bodily functions.

What Are the Risks Associated with the Internet of Bodies (IoB)?

Because IoB devices are worn on or implanted in the body, they collect vast quantities of personal biometric data. This creates serious risks, including hacking, privacy infringements, and device malfunction.

The risks associated with IoB devices are not just theoretical; security researchers have already demonstrated them on real devices. In 2017, security researchers discovered that an attacker could potentially gain access to a patient’s insulin pump and change the dosage, causing serious harm or even death. Similarly, in 2018, researchers found that it was possible to hack into a pacemaker and change its settings, putting the patient’s life at risk.

These attacks are particularly concerning because they affect some of the most vulnerable members of society: the elderly and people with chronic health conditions. These individuals may lack the knowledge or resources to protect themselves from cyberattacks, and they may also be less able to recover from the physical and emotional effects of a hack.

Some Notable Examples of IoB Hacks

One notable example of an IoB hack occurred in 2017, when a hacker was able to remotely access a patient’s insulin pump and change the dosage. The patient, who had type 1 diabetes, was unaware of the hack and nearly died as a result of the incorrect dosage. The hacker was able to access the insulin pump through a vulnerability in the device’s wireless communication system.

In 2018, researchers at the security firm WhiteScope discovered that it was possible to hack into a pacemaker and change the settings. The researchers were able to gain access to the pacemaker by exploiting a vulnerability in the device’s wireless communication system. Once they had access, they were able to change the pacemaker’s settings and potentially put the patient’s life at risk.

Another example of an IoB hack occurred in 2020, when a group of hackers were able to gain access to a hospital’s network through a vulnerability in a smartwatch worn by a hospital employee. Once they had access to the network, the hackers were able to steal sensitive patient information, including medical records and personal identification numbers.

How to Protect Yourself from the Internet of Bodies

While the risks associated with IoB devices are significant, there are steps that individuals can take to protect themselves from hackers. One important step is to keep the device’s software up to date. Manufacturers often release updates that address known vulnerabilities, so it is important to install these updates as soon as they become available.

Another step that individuals can take is to be cautious when connecting their devices to unfamiliar networks. For example, it is generally not a good idea to connect your device to a public Wi-Fi network, as these networks are often unsecured and can be easily hacked.

Individuals should also be careful when downloading apps or software for their devices, as these can also be used to gain access to the device. It is important to only download apps from reputable sources and to be aware of any suspicious activity on the device.

Conclusion

Both individuals and healthcare professionals should be aware of the risks associated with IoB devices and take steps to protect against hacking, such as keeping software up to date and being cautious when connecting to unfamiliar networks. For individuals, this may also mean consulting a healthcare professional or security expert, or taking a cybersecurity course.

Next-gen chips, Amazon Q, and speedy S3

AWS re:Invent, which began on November 27 and runs until December 1, has brought its usual plethora of announcements: 21 in total at the time of writing.

Perhaps not surprisingly, given the huge potential impact of generative AI – ChatGPT officially turns one year old today – much of the focus of AWS’ announcements has been on AI, including a major partnership inked with NVIDIA across infrastructure, software, and services.

Yet there has been plenty more announced at the Las Vegas jamboree besides. Here, CloudTech rounds up the best of the rest:

Next-generation chips

This was the other major AI-focused announcement at re:Invent: the launch of two new AWS-designed chips, AWS Graviton4 and AWS Trainium2, for a broad range of customer workloads including training and running AI and machine learning (ML) models. Compared with its predecessor, Graviton4 offers up to 30% better compute performance, 50% more cores and 75% more memory bandwidth, while Trainium2 delivers up to four times faster training than the first-generation Trainium and can be deployed in EC2 UltraClusters of up to 100,000 chips.

The EC2 UltraClusters are designed to ‘deliver the highest performance, most energy efficient AI model training infrastructure in the cloud’, as AWS puts it. According to AWS, they will let customers train large language models in ‘a fraction of the time’ while doubling energy efficiency.

As ever, AWS pointed to customers already using these tools. Databricks, Epic and SAP are among the companies cited as using the new AWS-designed chips.

Zero-ETL integrations

AWS announced new Amazon Aurora PostgreSQL, Amazon DynamoDB, and Amazon Relational Database Service (Amazon RDS) for MySQL integrations with Amazon Redshift, AWS’ cloud data warehouse. The zero-ETL integrations – eliminating the need to build ETL (extract, transform, load) data pipelines – make it easier to connect and analyse transactional data from various relational and non-relational databases in Amazon Redshift.

A simple illustration is a hypothetical company that stores transactional data (time of transaction, items bought, where the transaction occurred) in a relational database but uses a separate analytics tool to analyse data held in a non-relational database. To connect it all up, the company would previously have had to build ETL data pipelines, which are a sink of both time and money.

The latest integrations “build on AWS’s zero-ETL foundation… so customers can quickly and easily connect all of their data, no matter where it lives,” the company said.
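To make the contrast concrete, here is a minimal sketch of the kind of hand-built pipeline that zero-ETL is meant to replace. It uses Python’s built-in sqlite3 module, with two in-memory databases standing in for the operational database and the analytics warehouse. The table names, columns, sample rows and function names are all hypothetical, and the sketch says nothing about how Aurora, DynamoDB, RDS or Redshift work internally.

```python
import sqlite3
from collections import defaultdict

# Hypothetical row shape: (timestamp, item, location, amount)
Row = tuple[str, str, str, float]


def extract(source: sqlite3.Connection) -> list[Row]:
    """Extract: read raw transactions from the operational (source) database."""
    return source.execute(
        "SELECT ts, item, location, amount FROM transactions"
    ).fetchall()


def transform(rows: list[Row]) -> list[tuple[str, float]]:
    """Transform: reshape raw rows into an analytics-friendly summary
    (total revenue per location), sorted by location."""
    totals = defaultdict(float)
    for _ts, _item, location, amount in rows:
        totals[location] += amount
    return sorted(totals.items())


def load(warehouse: sqlite3.Connection, summary: list[tuple[str, float]]) -> None:
    """Load: write the summary into the analytics (warehouse) database."""
    warehouse.execute(
        "CREATE TABLE IF NOT EXISTS revenue_by_location "
        "(location TEXT PRIMARY KEY, revenue REAL)"
    )
    # INSERT OR REPLACE keeps re-runs of the pipeline idempotent.
    warehouse.executemany(
        "INSERT OR REPLACE INTO revenue_by_location VALUES (?, ?)", summary
    )
    warehouse.commit()


def run_pipeline(source: sqlite3.Connection, warehouse: sqlite3.Connection) -> None:
    """One full ETL pass: extract -> transform -> load."""
    load(warehouse, transform(extract(source)))


if __name__ == "__main__":
    # Hypothetical operational database with a few sample transactions.
    source = sqlite3.connect(":memory:")
    source.execute(
        "CREATE TABLE transactions (ts TEXT, item TEXT, location TEXT, amount REAL)"
    )
    source.executemany(
        "INSERT INTO transactions VALUES (?, ?, ?, ?)",
        [
            ("2023-11-28T09:15", "coffee", "London", 3.50),
            ("2023-11-28T09:20", "bagel", "London", 2.25),
            ("2023-11-28T10:05", "coffee", "Paris", 3.00),
        ],
    )
    warehouse = sqlite3.connect(":memory:")
    run_pipeline(source, warehouse)
    print(warehouse.execute(
        "SELECT location, revenue FROM revenue_by_location ORDER BY location"
    ).fetchall())  # [('London', 5.75), ('Paris', 3.0)]
```

Every piece here (the extract query, the transformation logic, the warehouse schema) is code that someone has to write, schedule and maintain as the source data changes; that ongoing effort is the burden the zero-ETL integrations aim to remove.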

Amazon S3 Express One Zone

AWS announced the general availability of Amazon S3 Express One Zone, a new storage class purpose-built for customers’ most frequently accessed data. It offers data access speeds up to 10 times faster, and request costs up to 50% lower, than S3 Standard. Companies can also choose to co-locate their Amazon S3 Express One Zone data in the same Availability Zone as their compute resources.

Companies and partners who are using Amazon S3 Express One Zone include ChaosSearch, Cloudera, and Pinterest.

Amazon Q

A new product, and an interesting pivot, again with generative AI at its core. Amazon Q was announced as a ‘new type of generative AI-powered assistant’ which can be tailored to a customer’s business. “Customers can get fast, relevant answers to pressing questions, generate content, and take actions – all informed by a customer’s information repositories, code, and enterprise systems,” AWS added. The service can also assist companies building on AWS, as well as companies using AWS applications for business intelligence, contact centres, and supply chain management.

Customers cited as early adopters include Accenture, BMW and Wunderkind.

Want to learn more about cybersecurity and the cloud from industry leaders? Check out Cyber Security & Cloud Expo taking place in Amsterdam, California, and London. Explore other upcoming enterprise technology events and webinars powered by TechForge here.

HCLTech and Cisco create collaborative hybrid workplaces

Digital comms specialist Cisco and global tech firm HCLTech have teamed up to launch Meeting-Rooms-as-a-Service (MRaaS).

Available on a subscription model, this solution modernises legacy meeting rooms and enables users to join meetings from any meeting solution provider using Webex devices.

The MRaaS solution helps enterprises simplify the design, implementation and maintenance of integrated meeting rooms, enabling seamless collaboration for their globally distributed hybrid workforces.

Rakshit Ghura, senior VP and global head of digital workplace services, HCLTech, said: “MRaaS combines our consulting and managed services expertise with Cisco’s proficiency in Webex devices to change the way employees conceptualise, organise and interact in a collaborative environment for a modern hybrid work model.

“The common vision of our partnership is to elevate the collaboration experience at work and drive productivity through modern meeting rooms.”

Alexandra Zagury, VP of partner managed and as-a-Service Sales at Cisco, said: “Our partnership with HCLTech helps our clients transform their offices through cost-effective managed services that support the ongoing evolution of workspaces.

“As we reimagine the modern office, we are making it easier to support collaboration and productivity among workers, whether they are in the office or elsewhere.”

Cisco’s Webex collaboration devices harness the power of artificial intelligence to offer intuitive, seamless collaboration experiences, enabling meeting rooms with smart features such as meeting zones, intelligent people framing, optimised attendee audio and background noise removal, among others.

Canonical releases low-touch private cloud MicroCloud

Canonical has announced the general availability of MicroCloud, a low-touch, open source cloud solution. MicroCloud is part of Canonical’s growing cloud infrastructure portfolio.

It is purpose-built for scalable clusters and edge deployments for all types of enterprises. It is designed with simplicity, security and automation in mind, minimising the time and effort to both deploy and maintain it. Conveniently, enterprise support for MicroCloud is offered as part of Canonical’s Ubuntu Pro subscription, with several support tiers available, and priced per node.

MicroClouds are optimised for repeatable and reliable remote deployments. A single command initiates the orchestration and clustering of various components with minimal involvement by the user, resulting in a fully functional cloud within minutes. This simplified deployment process significantly reduces the barrier to entry, putting a production-grade cloud at everyone’s fingertips.

Juan Manuel Ventura, head of architectures & technologies at Spindox, said: “Cloud computing is not only about technology, it’s the beating heart of any modern industrial transformation, driving agility and innovation. Our mission is to provide our customers with the most effective ways to innovate and bring value; having a complexity-free cloud infrastructure is one important piece of that puzzle. With MicroCloud, the focus shifts away from struggling with cloud operations to solving real business challenges.”

In addition to seamless deployment, MicroCloud prioritises security and ease of maintenance. All MicroCloud components are built with strict confinement for increased security, with over-the-air transactional updates that preserve data and roll back on errors automatically. Upgrades to newer versions are handled automatically and without downtime, with the mechanisms to hold or schedule them as needed.

With this approach, MicroCloud caters to both on-premises clouds and edge deployments at remote locations, allowing organisations to use the same infrastructure primitives and services wherever they are needed. It is suitable for branch offices, industrial use inside a factory, and distributed locations where the focus is on replicability and unattended operations.
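The automatic rollback described above, which is what makes unattended operation at remote sites practical, follows a general pattern: apply the change to a copy, check that the result is healthy, and keep the old state if anything fails. The Python sketch below illustrates that pattern in miniature. It is a toy built on stated assumptions, not how MicroCloud actually performs updates, and every name in it (`transactional_update`, the node dictionary, the health check) is hypothetical.

```python
import copy
from typing import Callable

State = dict  # hypothetical: the node's state is modelled as a plain dict


def transactional_update(
    current: State,
    apply_update: Callable[[State], None],
    health_check: Callable[[State], bool],
) -> tuple[State, bool]:
    """Apply an update all-or-nothing.

    Returns (new_state, True) on success, or (current, False) if the
    update raises an exception or the result fails its health check.
    """
    # Work on a deep copy so the running state is never touched mid-update.
    candidate = copy.deepcopy(current)
    try:
        apply_update(candidate)
        healthy = health_check(candidate)
    except Exception:
        # Any error during the update counts as a failed update.
        healthy = False
    if healthy:
        return candidate, True
    # Roll back: discard the candidate and keep the untouched original.
    return current, False


if __name__ == "__main__":
    # Hypothetical node state: a version string plus the workloads it hosts.
    node = {"version": "1.0", "workloads": ["vm-a", "vm-b"]}

    def good_update(state: State) -> None:
        state["version"] = "1.1"

    def bad_update(state: State) -> None:
        state["version"] = "1.2"
        state["workloads"].clear()  # simulates an update that loses data

    def workloads_intact(state: State) -> bool:
        return len(state["workloads"]) == 2

    node, applied = transactional_update(node, good_update, workloads_intact)
    print(node["version"], applied)  # 1.1 True
    node, applied = transactional_update(node, bad_update, workloads_intact)
    print(node["version"], applied, node["workloads"])  # 1.1 False ['vm-a', 'vm-b']
```

Because the original state is never modified, a failed update leaves nothing to undo; “rolling back” simply means continuing to run the previous version, which is why no operator needs to be on site.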

Cedric Gegout, VP of product at Canonical, said: “As data becomes more distributed, the infrastructure has to follow. Cloud computing is now distributed, spanning across data centres, far and near edge computing appliances. MicroCloud is our answer to that.

“By packaging known infrastructure primitives in a portable and unattended way, we are delivering a simpler, more prescriptive cloud experience that makes zero-ops a reality for many industries.”

MicroCloud’s lightweight architecture makes it usable on both commodity and high-end hardware, with several ways to further reduce its footprint depending on your workload needs. In addition to the standard Ubuntu Server or Desktop, MicroClouds can be run on Ubuntu Core – a lightweight OS optimised for the edge. With Ubuntu Core, MicroClouds are a perfect solution for far-edge locations with limited computing capabilities. Users can choose to run their workloads using Kubernetes or via system containers. System containers based on LXD behave similarly to traditional VMs but consume fewer resources while providing bare-metal performance.

Combined with Canonical’s Ubuntu Pro + Support subscription, MicroCloud gives users an enterprise-grade open source cloud solution that is fully supported and offers better economics. An Ubuntu Pro subscription provides security maintenance for the broadest collection of open source software available from a single vendor today. It covers more than 30,000 packages with a consistent security maintenance commitment, plus features such as kernel livepatch, systems management at scale, and certified compliance and hardening profiles that ease adoption for enterprises. With per-node pricing and no hidden fees, customers can be confident that their environment is secure and supported without the expensive price tag typically associated with cloud solutions.
