MARKETING

Should you use your data warehouse as your CDP?

The advent of cloud-based data warehouses (DWHs) has brought simpler deployment, greater scale and better performance to a growing set of data-driven use cases. DWHs have become more prevalent in enterprise tech stacks, including martech stacks.

Inevitably, this raises the question: should you employ your existing DWH as a customer data platform (CDP)? After all, when you re-use an existing component in your stack, you can save resources and avoid new risks.

But the story isn’t so simple, and multiple potential design patterns await. Ultimately, there’s a case for and against using your DWH as a CDP. Let’s dig deeper.

DWH as a CDP may not be right for you

There are several inherent problems with using a DWH as a CDP. The first is obvious: not all organizations have a DWH in place. Sometimes, an enterprise DWH team does not have the time or resources to support customer-centered use cases. Other enterprises effectively deploy a CDP as a quasi-data warehouse. (Not all CDPs can do this, but you get the point.)

Let’s say you have most or all your customer data in a DWH. The problem for many, if not most, enterprises is that the data isn’t accessible in a marketer-friendly way. Typically, an enterprise DWH is constructed to support analytics use cases, not activation use cases. This affects how the data is labeled, managed, related and governed internally.

Recall that a DWH is essentially for storage and computing, which means data is stored in database tables with column names as attributes. You then write complex SQL statements to access that data. It’s unrealistic for your marketers to remember table and column names before they can create segments for activation. Or in other words, DWHs typically don’t support marketer self-service as most CDPs do.
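To make the point concrete, here is a minimal sketch of what a "simple" segment can look like when it has to be expressed as SQL. It uses an in-memory SQLite database as a stand-in for a warehouse; the table names, column names, and the `high_value_recent_buyers` helper are all hypothetical and chosen only for illustration.

```python
import sqlite3

# In-memory SQLite stands in for the warehouse.
# All table and column names below are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_customer (cust_id INTEGER PRIMARY KEY, email_addr TEXT, mkt_opt_in INTEGER);
CREATE TABLE fct_order (order_id INTEGER PRIMARY KEY, cust_id INTEGER, order_ts TEXT, order_amt REAL);
INSERT INTO dim_customer VALUES
  (1, 'ana@example.com', 1),
  (2, 'ben@example.com', 1),
  (3, 'cy@example.com', 0);
INSERT INTO fct_order VALUES
  (10, 1, '2024-03-01', 120.0),
  (11, 1, '2024-03-15', 90.0),
  (12, 2, '2024-01-05', 40.0),
  (13, 3, '2024-03-20', 500.0);
""")

# "Opted-in customers who spent at least X since date Y" already needs
# a join, a filter, a grouping and a HAVING clause.
SEGMENT_SQL = """
SELECT c.email_addr
FROM dim_customer AS c
JOIN fct_order AS o ON o.cust_id = c.cust_id
WHERE c.mkt_opt_in = 1
  AND o.order_ts >= :since
GROUP BY c.cust_id, c.email_addr
HAVING SUM(o.order_amt) >= :min_spend
ORDER BY c.email_addr
"""

def high_value_recent_buyers(conn, since, min_spend):
    """Return the email addresses that belong to the segment."""
    rows = conn.execute(SEGMENT_SQL, {"since": since, "min_spend": min_spend})
    return [email for (email,) in rows]

segment = high_value_recent_buyers(conn, "2024-03-01", 100.0)
print(segment)
```

A marketer would need to know that opt-in status lives in `dim_customer.mkt_opt_in` and that spend lives in `fct_order.order_amt` before building even this one audience, which is the self-service gap described above.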

This also touches on a broader structural issue. DWHs aren’t typically designed to support the real-time marketing use cases that many CDPs target. A DWH can perform quick calculations, and you can schedule ingestion and processing to run at frequent intervals, but that is still not real-time. Similarly, with some exceptions, a DWH isn’t built to act on raw data, whereas marketers often want to use raw data (typically events) to trigger certain activations.

Finally, remember that data and the ability to access it don’t maketh a CDP. Most CDPs offer some subset of additional capabilities you won’t find in a DWH, such as:

- Event subsystem with triggering.

- Anonymous identity resolution.

- Marketer-friendly interface for segmentation.

- Segment activation profiles with connectors.

- Potentially testing, personalization and recommendation services.

A DWH alone will not provide these capabilities, so you will need to source these elsewhere. Of course, DWH vendors have sizable partner marketplaces. You can find many alternatives, but they’re not native and will require integration and support effort.

Not surprisingly, then, there’s a lot of chatter about “composable CDPs” and the potential role of a DWH in that context. I’ve argued previously that composability is a spectrum, and you start losing benefits beyond a certain point.

Having issued all these caveats, a DWH can play a role as part of a customer data stack, including:

- Doing away with a CDP by activating directly from the DWH.

- Using the DWH as a quasi-CDP with a reverse ETL platform.

- Coexisting with a CDP.

Let’s look at these three design patterns.

1. Connecting marketing platforms directly to your DWH

This is perhaps the most extreme case and the one I critiqued above. Still, some enterprises have made it work, especially in the pre-CDP era, and platforms like Snowflake, with its broad ecosystem, are trying to solve this.

The idea here is that your engagement platform connects directly to a DWH to push and pull data. Many mature email and marketing automation platforms are natively wired to do this, albeit typically via batch push. Your marketers then use the messaging platform to create segments and send messages to those segments in the case of outbound marketing.

Imagine you had another marketing or engagement platform, such as a personalized website or ecommerce platform. Again, you draw data from the DWH, then employ the web application platform to create another set of segments for more targeted engagement.

Do you see the problem yet? There are two sets of segmentation interfaces already. What happens if you had 10 marketing platforms? 20? You will keep creating segments everywhere, so your omnichannel promise disappears.

Finally, what if you had to add another marketing platform that did not support direct ingestion from a DWH?

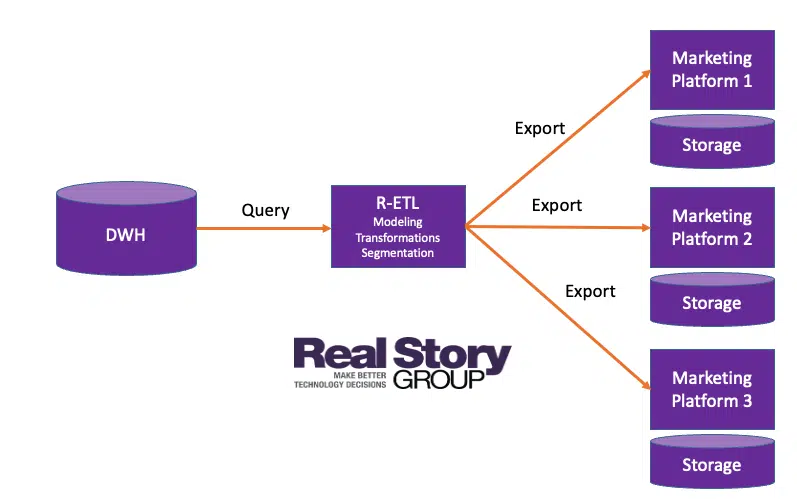

2. Using the DWH as a quasi-CDP with a reverse ETL platform

This approach solves several problems with the first pattern above. Notably, it allows (in theory) a non-DWH specialist to create universal segments virtually atop the DWH and activate multiple platforms. With transformation and a better connector framework, you can apply different label mappings and marketer-friendly data structures to different endpoints.

Here’s how it works. Reverse ETL platforms pull data from the DWH and send it to marketing platforms after any transformation. You can perform multiple transformations and send that data to several destinations simultaneously. You can even automate it and have exports run regularly at a predefined schedule.

But a copy of that data (or a subset of it) still lands in each target platform, so you don’t really have just a single copy of the data. Since the reverse-ETL platform itself does not hold a copy, your segments or audiences are always generated at query time (typically in batches) and then exported to destinations.
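The flow described above can be sketched in a few lines. This is only an illustration under stated assumptions, not how any particular reverse-ETL product works: an in-memory SQLite database stands in for the DWH, and the `customer_360` table, the destination names, the field mappings, and the `run_sync` function are all hypothetical.

```python
import sqlite3

def build_warehouse():
    """Create a tiny stand-in warehouse (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
    CREATE TABLE customer_360 (cust_id INTEGER, email_addr TEXT, ltv_usd REAL, churn_risk REAL);
    INSERT INTO customer_360 VALUES
      (1, 'ana@example.com', 900.0, 0.1),
      (2, 'ben@example.com', 150.0, 0.8),
      (3, 'cy@example.com', 700.0, 0.7);
    """)
    return conn

# Per-destination label mappings: warehouse column -> destination field.
# Destination names and field names are assumptions for this sketch.
FIELD_MAPS = {
    "email_platform": {"email_addr": "email", "ltv_usd": "lifetime_value"},
    "ads_platform": {"email_addr": "contact_email", "churn_risk": "risk_score"},
}

# The segment is defined once, as a query evaluated at sync time.
AT_RISK_HIGH_VALUE = """
SELECT email_addr, ltv_usd, churn_risk
FROM customer_360
WHERE ltv_usd >= 500 AND churn_risk >= 0.5
ORDER BY cust_id
"""

def run_sync(conn, segment_sql, field_maps):
    """Query the warehouse once, then reshape the rows for each destination."""
    cursor = conn.execute(segment_sql)
    columns = [description[0] for description in cursor.description]
    rows = [dict(zip(columns, row)) for row in cursor.fetchall()]
    payloads = {}
    for destination, mapping in field_maps.items():
        payloads[destination] = [
            {target: row[source] for source, target in mapping.items()}
            for row in rows
        ]
    return payloads  # a real tool would now send these to each platform's API

payloads = run_sync(build_warehouse(), AT_RISK_HIGH_VALUE, FIELD_MAPS)
print(payloads)
```

Note that the sketch keeps no state between runs: every sync re-queries the warehouse, and each destination ends up holding its own copy of the exported rows.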

This is not a suitable approach if you want to have real-time triggers or always-on campaigns based on events. Sure, you can automate your exports at high frequency, but that’s not real-time. And as you increase your export frequency, your costs will rise sharply.

Also, while reverse-ETL tools provide a segmentation interface, they tend to be more technical and DataOps-focused rather than MOps-focused. Before declaring this a “business-friendly” solution suitable for marketer self-service, you must test it carefully.

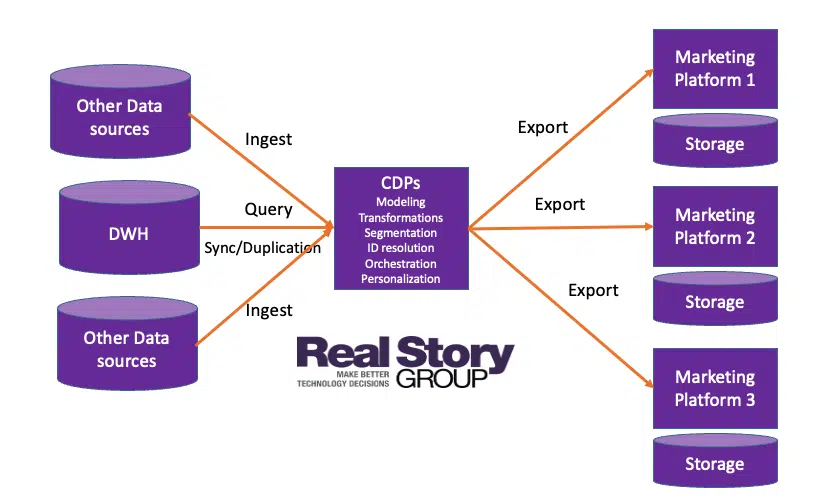

3. DWH co-exists with CDP

Your enterprise DWH serves as a customer data infrastructure layer that supplies data to your CDP (among other endpoints). Many, if not most, CDPs now offer some capabilities to sync from DWH platforms, notably Snowflake.

There are variations in how these CDPs can co-exist with a DWH. Most CDPs sync and duplicate data into their own repository, whereas others (including reverse-ETL vendors) don’t make a copy. Each approach has trade-offs you need to consider before finalizing what works for you.

In general, we tend to see larger enterprises preferring this design pattern, albeit with wide variance around where such critical services as customer identity resolution ultimately reside.


Wrap-up

DWH platforms play increasingly essential roles in martech stacks. However, you continue to have multiple architectural choices about which services you render within your data ecosystem.

I think it’s premature to rule out CDPs in your future. Each pattern has its trade-offs to keep in mind while evaluating your options.


Opinions expressed in this article are those of the guest author and not necessarily MarTech. Staff authors are listed here.

MARKETING

Tinuiti Marketing Analytics Recognized by Forrester

Rapid Media Mix Modeling and Proprietary Tech Transform Brand Performance

Tinuiti, the largest independent full-funnel performance marketing agency, has been included in a recent Forrester Research report titled, “The Marketing Analytics Landscape, Q2 2024.” This report provides a comprehensive overview of marketing analytics markets, use cases, and capabilities. B2C marketing leaders can use this research by Principal Analyst Tina Moffett to understand the intersection of marketing analytics capabilities and use cases to determine the vendor or service provider best positioned for their analytics and insights needs. Moffett describes the top marketing analytics markets as advertising agencies, marketing dashboards and business intelligence tools, marketing measurement and optimization platforms and service providers, and media analytics tools.

As an advertising agency, we believe Tinuiti is uniquely positioned to manage advertising campaigns for brands including buying, targeting, and measurement. Our proprietary measurement technology, Bliss Point by Tinuiti, allows us to measure the optimal level of investment to maximize impact and efficiency. According to the Forrester report, “only 30% of B2C marketing decision-makers say their organization uses marketing or media mix modeling (MMM),” so having a partner that knows, embraces, and utilizes MMM is important. As Tina astutely explains, data-driven agencies have amplified their marketing analytics competencies with data science expertise and proprietary tools, and have tailored their marketing analytics techniques based on industry, business, and data challenges.

Our Rapid Media Mix Modeling sets a new standard in the market with its exceptional speed, precision, and transparency. Our patented tech includes Rapid Media Mix Modeling, Always-on Incrementality, Brand Equity, Creative Insights, and Forecasting – it will get you to your Marketing Bliss Point in each channel, across your entire media mix, and your overall brand performance.

As a marketing leader you may ask yourself:

- How much of our marketing budget should we allocate to driving store traffic versus e-commerce traffic?

- How should we allocate our budget by channel to generate the most traffic and revenue possible?

- How many customers did we acquire in a specific region with our media spend?

- What is the impact of seasonality on our media mix?

- How should we adjust our budget accordingly?

- What is the optimal marketing channel mix to maximize brand awareness?

These are just a few of the questions that Bliss Point by Tinuiti can help you answer.

Learn more about our customer-obsessed, product-enabled, and fully integrated approach and how we’ve helped fuel full-funnel outcomes for the world’s most digital-forward brands like Poppi & Toms.

The Landscape report is available online to Forrester customers or for purchase here.

MARKETING

Ecommerce evolution: Blurring the lines between B2B and B2C

Understanding convergence

B2B and B2C ecommerce are two distinct models of online selling. B2B ecommerce takes place between businesses, such as wholesalers, distributors, and manufacturers. B2C ecommerce refers to transactions in which businesses, such as retailers and consumer brands, sell directly to individual shoppers.

However, in recent years, the boundaries between these two models have started to fade. This is known as the convergence of B2B and B2C ecommerce: the two models are becoming more similar and more integrated.

Source: White Paper: The evolution of the B2B Consumer Buyer (ClientPoint, Jan 2024)

What’s driving this change?

Ever increasing customer expectations

Customers today expect the same level of convenience, speed, and personalization in their B2B transactions as they do in their B2C interactions. B2B buyers are increasingly influenced by their B2C experiences. They want to research, compare, and purchase products online, seamlessly transitioning between devices and channels.

According to Forrester, 68% of buyers prefer to research on their own, online.

Technology and omnichannel strategies

Technology enables B2B and B2C ecommerce platforms to offer more features and functionalities, such as mobile optimization, chatbots, AI, and augmented reality. Omnichannel strategies allow B2B and B2C ecommerce businesses to provide a seamless and consistent customer experience across different touchpoints, such as websites, social media, email, and physical stores.

However, with every great leap forward comes its own set of challenges. The convergence of B2B and B2C markets means increased competition. Businesses now not only have to compete with their traditional rivals, but also with new entrants and disruptors from different sectors. For example, Amazon Business, a B2B ecommerce platform, has become a major threat to many B2B ecommerce businesses, as it offers a wide range of products, low prices, and fast delivery.

“Amazon Business has proven that B2B ecommerce can leverage popular B2C-like functionality,” argues Joe Albrecht, CEO/Managing Partner at Xngage. “With features like Subscribe-and-Save (auto-replenishment), one-click buying, and curated assortments by job role or work location, they make it easy for B2B buyers to go to their website and never leave. Plus, with exceptional customer service and promotional incentives like Amazon Business Prime Days, they have created a reinforcing loyalty loop.

“And yet, according to Barron’s, Amazon Business is only expected to capture 1.5% of the $5.7 trillion addressable business market by 2025. If other B2B companies can truly become digital-first organizations, they can compete and win in this fragmented space, too.”


Increasing complexity

Another challenge is the increased complexity and cost of managing a converging ecommerce business. Businesses have to deal with different customer segments, requirements, and expectations, which may require different strategies, processes, and systems. For instance, B2B ecommerce businesses may have to handle more complex transactions, such as bulk orders, contract negotiations, and invoicing, while B2C ecommerce businesses may have to handle more customer service, returns, and loyalty programs. Moreover, B2B and B2C ecommerce businesses must invest in technology and infrastructure to support their convergence efforts, which may increase their operational and maintenance costs.

How to win

Here are a few ways companies can get ahead of the game:

Adopt B2C-like features in B2B platforms

User-friendly design, easy navigation, product reviews, personalization, recommendations, and ratings can help B2B ecommerce businesses to attract and retain more customers, as well as to increase their conversion and retention rates.

According to McKinsey, ecommerce businesses that offer B2C-like features like personalization can increase their revenues by 15% and reduce their costs by 20%. You can do this through personalization of your website with tools like Product Recommendations that help suggest related products to increase sales.

Focus on personalization and customer experience

B2B and B2C ecommerce businesses need to understand their customers’ needs, preferences, and behaviors, and tailor their offerings and interactions accordingly. Personalization and customer experience can help B2B and B2C ecommerce businesses to increase customer satisfaction, loyalty, and advocacy, as well as to improve their brand reputation and competitive advantage. According to a Salesforce report, 88% of customers say that the experience a company provides is as important as its products or services.

Market based on customer insights

Data and analytics can help B2B and B2C ecommerce businesses to gain insights into their customers, markets, competitors, and performance, and to optimize their strategies and operations accordingly. Data and analytics can also help B2B and B2C ecommerce businesses to identify new opportunities, trends, and innovations, and to anticipate and respond to customer needs and expectations. According to McKinsey, data-driven organizations are 23 times more likely to acquire customers, six times more likely to retain customers, and 19 times more likely to be profitable.

What’s next?

The convergence of B2B and B2C ecommerce is not a temporary phenomenon, but a long-term trend that will continue to shape the future of ecommerce. According to Statista, the global B2B ecommerce market is expected to reach $20.9 trillion by 2027, surpassing the B2C ecommerce market, which is expected to reach $10.5 trillion by 2027. Moreover, the report predicts that the convergence of B2B and B2C ecommerce will create new business models, such as B2B2C, B2A (business to anyone), and C2B (consumer to business).

Therefore, B2B and B2C ecommerce businesses need to prepare for the converging ecommerce landscape, taking advantage of the opportunities it presents and addressing its challenges. Here are some recommendations for B2B and B2C ecommerce businesses to navigate the converging landscape:

- Conduct a thorough analysis of your customers, competitors, and market, and identify the gaps and opportunities for convergence.

- Develop a clear vision and strategy for convergence, and align your goals, objectives, and metrics with it.

- Invest in technology and infrastructure that can support your convergence efforts, such as cloud, mobile, AI, and omnichannel platforms.

- Implement B2C-like features in your B2B platforms, and vice versa, to enhance your customer experience and satisfaction.

- Personalize your offerings and interactions with your customers, and provide them with relevant and valuable content and solutions.

- Leverage data and analytics to optimize your performance and decision making, and to innovate and differentiate your business.

- Collaborate and partner with other B2B and B2C ecommerce businesses, as well as with other stakeholders, such as suppliers, distributors, and customers, to create value and synergy.

- Monitor and evaluate your convergence efforts, and adapt and improve them as needed.

By following these recommendations, B2B and B2C ecommerce businesses can bridge the gap between their models and create a more integrated and seamless ecommerce experience for their customers and themselves.

MARKETING

Streamlining Processes for Increased Efficiency and Results

How can businesses succeed nowadays when technology rules? With competition getting tougher and customers changing their preferences often, it’s a challenge. But using marketing automation can help make things easier and get better results. And in the future, it’s going to be even more important for all kinds of businesses.

So, let’s discuss how businesses can leverage marketing automation to stay ahead and thrive.

Benefits of marketing automation to boost your efforts

First, let’s explore the benefits of marketing automation to supercharge your efforts:

Marketing automation simplifies repetitive tasks, saving time and effort.

With automated workflows, processes become more efficient, leading to better productivity. For instance, automation not only streamlines tasks like email campaigns but also optimizes website speed, ensuring a seamless user experience. A faster website not only enhances customer satisfaction but also positively impacts search engine rankings, driving more organic traffic and ultimately boosting conversions.

Automation allows for precise targeting, reaching the right audience with personalized messages.

A great example of an automated workflow is the Pipedrive and WhatsApp integration, in which an automated welcome message pops up in a potential customer’s WhatsApp within seconds of them expressing interest in your business.

Increases ROI

By optimizing campaigns and reducing manual labor, automation can significantly improve return on investment.

Leveraging automation enables businesses to scale their marketing efforts effectively, driving growth and success. Additionally, incorporating lead scoring into automated marketing processes can streamline the identification of high-potential prospects, further optimizing resource allocation and maximizing conversion rates.
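As a rough illustration of the lead scoring idea, the sketch below adds up points for each tracked action and flags leads that cross a threshold. The event names, weights, and threshold are made-up assumptions for this example; real platforms define and tune their own scoring rules.

```python
# Hypothetical points per tracked action; real weights would be tuned to your funnel.
WEIGHTS = {
    "email_open": 1,
    "pricing_page_view": 5,
    "demo_request": 20,
}
THRESHOLD = 25  # assumed cut-off for a "high-potential" lead

def score_lead(events):
    """Sum the points for a lead's events; unknown events score zero."""
    return sum(WEIGHTS.get(event, 0) for event in events)

def high_potential(leads, threshold=THRESHOLD):
    """Return the names of leads whose score meets the threshold, sorted by name."""
    return sorted(name for name, events in leads.items() if score_lead(events) >= threshold)

leads = {
    "lead_a": ["email_open", "pricing_page_view", "demo_request"],
    "lead_b": ["email_open", "email_open"],
}
print(high_potential(leads))
```

An automation workflow could then route only the flagged leads to sales, which is where the resource savings mentioned above would come from.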

Harnessing the power of marketing automation can revolutionize your marketing strategy, leading to increased efficiency, higher returns, and sustainable growth in today’s competitive market. So, why wait? Start automating your marketing efforts today and propel your business to new heights, especially if you have just learned how to create an online business.

How marketing automation can simplify operations and increase efficiency

Understanding the Change

Marketing automation has evolved significantly over time, from basic email marketing campaigns to sophisticated platforms that can manage entire marketing strategies. This progress has been fueled by advances in technology, particularly artificial intelligence (AI) and machine learning, making automation smarter and more adaptable.

One of the main reasons for this shift is the vast amount of data available to marketers today. From understanding customer demographics to analyzing behavior, the sheer volume of data is staggering. Marketing automation platforms use this data to create highly personalized and targeted campaigns, allowing businesses to connect with their audience on a deeper level.

The Emergence of AI-Powered Automation

In the future, AI-powered automation will play an even bigger role in marketing strategies. AI algorithms can analyze huge amounts of data in real-time, helping marketers identify trends, predict consumer behavior, and optimize campaigns as they go. This agility and responsiveness are crucial in today’s fast-moving digital world, where opportunities come and go in the blink of an eye. For example, we’re witnessing the rise of AI-based tools, from AI website builders to AI logo generators and more, which shows how much businesses now compete on time and efficiency.

Combining AI-powered automation with WordPress management services streamlines marketing efforts, enabling quick adaptation to changing trends and efficient management of online presence.

Moreover, AI can take care of routine tasks like content creation, scheduling, and testing, giving marketers more time to focus on strategic activities. By automating these repetitive tasks, businesses can work more efficiently, leading to better outcomes. AI can create social media ads tailored to specific demographics and preferences, ensuring that the content resonates with the target audience. With the help of an AI ad maker tool, businesses can efficiently produce high-quality advertisements that drive engagement and conversions across various social media platforms.

Personalization on a Large Scale

Personalization has always been important in marketing, and automation is making it possible on a larger scale. By using AI and machine learning, marketers can create tailored experiences for each customer based on their preferences, behaviors, and past interactions with the brand.

This level of personalization not only boosts customer satisfaction but also increases engagement and loyalty. When consumers feel understood and valued, they are more likely to become loyal customers and brand advocates. As automation technology continues to evolve, we can expect personalization to become even more advanced, enabling businesses to forge deeper connections with their audience. If your company has tiny homes for sale in California, for example, personalized experiences can help each customer find their perfect fit, fostering lasting connections.

Integration Across Channels

Another trend shaping the future of marketing automation is the integration of multiple channels into a cohesive strategy. Today’s consumers interact with brands across various touchpoints, from social media and email to websites and mobile apps. Marketing automation platforms that can seamlessly integrate these channels and deliver consistent messaging will have a competitive edge. When creating a comparison website, it’s important to ensure that the platform effectively aggregates data from diverse sources and presents it in a user-friendly manner, empowering consumers to make informed decisions.

Omni-channel integration not only improves the customer experience but also provides marketers with a comprehensive view of the customer journey. By tracking interactions across channels, businesses can gain valuable insights into how consumers engage with their brand, allowing them to refine their marketing strategies for maximum impact. Lastly, integrating SEO services into omni-channel strategies boosts visibility and helps businesses better understand and engage with their customers across different platforms.

The Human Element

While automation offers many benefits, it’s crucial not to overlook the human aspect of marketing. Despite advances in AI and machine learning, there are still elements of marketing that require human creativity, empathy, and strategic thinking.

Successful marketing automation strikes a balance between technology and human expertise. By using automation to handle routine tasks and data analysis, marketers can focus on what they do best – storytelling, building relationships, and driving innovation.

Conclusion

The future of marketing automation looks promising, offering improved efficiency and results for businesses of all sizes.

As AI continues to advance and consumer expectations change, automation will play an increasingly vital role in keeping businesses competitive.

By embracing automation technologies, marketers can simplify processes, deliver more personalized experiences, and ultimately, achieve their business goals more effectively than ever before.
