What social networks have learned since the 2016 election

On the eve of the 2020 U.S. election, tensions are running high.

The good news? 2020 isn’t 2016. Social networks are way better prepared to handle a wide array of complex, dangerous or otherwise ambiguous Election Day scenarios.

The bad news: 2020 is its own beast, one that’s unleashed a nightmare health scenario on a divided nation that’s even more susceptible now to misinformation, hyper-partisanship and dangerous ideas moving from the fringe to the center than it was four years ago.

The U.S. was caught off guard by foreign interference in the 2016 election, but shocking a nation that’s spent the last eight months expecting a convergence of worst-case scenarios won’t be so easy.

Social platforms have braced for the 2020 election in a way they didn’t in 2016. Here’s what they’re worried about and the critical lessons from the last four years that they’ll bring to bear.

Contested election results

President Trump has repeatedly signaled that he won’t accept the results of the election if he loses. It is a shocking threat that could imperil American democracy, but one social platforms have been tracking closely. Trump’s erratic, often rule-bending behavior on social networks in recent months has served as a kind of stress test, allowing those platforms to game out different scenarios for the election.

Facebook and Twitter in particular have laid out detailed plans about what happens if the results of the election aren’t immediately clear or if a candidate refuses to accept official results once they’re tallied.

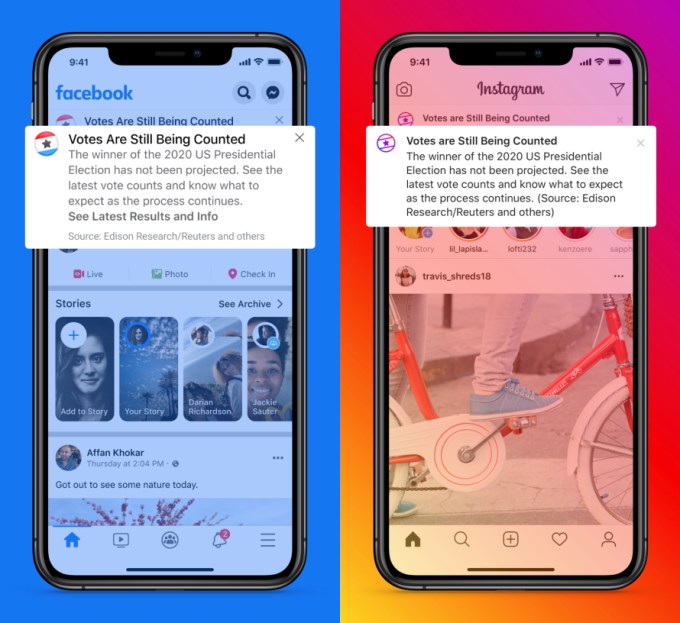

On election night, Facebook will pin a message to the top of both Facebook and Instagram telling users that vote counting is still underway. When authoritative results are in, Facebook will change those messages to reflect the official results. Importantly, U.S. election results might not be clear on election night or for some days afterward, a potential outcome for which Facebook and other social networks are bracing.

Image via Facebook

If a candidate declares victory prematurely, Facebook hasn’t said it will remove those claims, but it will pair them with its message that there’s no official result and vote counting is still underway.

Twitter released its plans for handling election results two months ago, explaining that it will either remove or attach a warning label to premature claims of victory before authoritative election results are in. The company also explicitly stated that it will act against any tweets “inciting unlawful conduct to prevent a peaceful transfer of power or orderly succession,” a shocking rule to have to articulate, but a necessary one in 2020.

On Monday, Twitter elaborated on its policy, saying that it would focus on labeling misleading tweets about the presidential election and other contested races. The company released a sample image of a label it would append, showing a warning stating that “this tweet is sharing inaccurate information.”

Last week, the company also began showing users large misinformation warnings at the top of their feeds. The messages told users that they “might encounter misleading information” about mail-in voting and also cautioned them that election results may not be immediately known.

According to Twitter, users who try to share tweets containing election-related misinformation will see a pop-up pointing them to vetted information and forcing them to click through a warning before sharing. Twitter also says it will act on any “disputed claims” that might cast doubt on voting, including “unverified information about election rigging, ballot tampering, vote tallying, or certification of election results.”

One other major change that many users probably already noticed is Twitter’s decision to disable one-click retweets. Users can still retweet by clicking through a pop-up page, but Twitter made the change to encourage people to quote retweet instead. The effort to slow down the spread of misinformation was striking, and Twitter said it will stay in place through the end of election week, at least.

YouTube didn’t go into similar detail about its decision making, but the company previously said it will put an “informational” label on search results related to the election and below election-related videos. The label warns users that “results may not be final” and points them to the company’s election info hub.

Foreign disinformation

This is one area where social networks have made big strides. After Russian disinformation took root on social platforms four years ago, those companies now coordinate with one another and the government about the threats they’re seeing.

In the aftermath of 2016, Facebook eventually woke up to the idea that its platform could be leveraged to scale social ills like hate and misinformation. Its scorecard is uneven, but its actions against foreign disinformation have been robust, reducing that threat considerably.

A repeat of the same concerns from 2016 is unlikely. Facebook made aggressive efforts to find foreign coordinated disinformation campaigns across its platforms, and it publishes what it finds regularly and with little delay. But in 2020, the biggest concerns are coming from within the country — not without.

Most foreign information operations have been small so far. Last month, Facebook removed a network of fake accounts connected to Iran. The operation failed to generate much traction, but it shows that U.S. adversaries are still interested in trying out the tactic.

Misleading political ads

To address concerns around election misinformation in ads, Facebook opted for a temporary political ad blackout, starting at 12 a.m. PT on November 4 and continuing until the company deems it safe to toggle them back on. Facebook hasn’t accepted any new political ads since October 27 and previously said it won’t accept any ads that delegitimize the results of the election. Google will also pause election-related ads after polls close Tuesday.

Facebook has made a number of big changes to political ads since 2016, when Russia bought Facebook ads to meddle with U.S. politics. Political ads on the platform are subject to more scrutiny and much more transparency now and Facebook’s ad library emerged as an exemplary tool that allows anyone to see what ads have been published, who bought them and how much they spent.

Unlike Facebook, Twitter’s way of dealing with political advertising was cutting it off entirely. The company announced the change a year ago and hasn’t looked back since. TikTok also opted to disallow political ads.

Political violence

Politically motivated violence is a big worry this week in the U.S. — a concern that shows just how tense the situation has grown under four years of Trump. Leading into Tuesday, the president has repeatedly made false claims of voter fraud and encouraged his followers to engage in voter intimidation, a threat Facebook was clued into enough that it made a policy prohibiting “militarized” language around poll watching.

Facebook made a number of other meaningful recent changes, like banning the dangerous pro-Trump conspiracy theory QAnon and militias that use the platform to organize, though those efforts have come very late in the game.

Facebook was widely criticized for its inaction around a Trump post warning “when the looting starts, the shooting starts” during racial justice protests earlier this year, but its recent posture suggests similar posts might be taken more seriously now. We’ll be watching how Facebook handles emerging threats of violence this week.

Its recent decisive moves against extremism are important, but the platform has long incubated groups that use the company’s networking and event tools to come together for potential real-world violence. Even if they aren’t allowed on the platform any longer, many of those groups got organized and then moved their networks onto alternative social networks and private channels. Still, making it more difficult to organize violence on mainstream social networks is a big step in the right direction.

Twitter also addressed the potential threat of election-related violence in advance, noting that it may add warnings or require users to remove any tweets “inciting interference with the election” or encouraging violence.

Platform policy shifts in 2020

Facebook is the biggest online arena where U.S. political life plays out. While a similar number of Americans watch videos on YouTube, Facebook is where they go to duke it out over candidates, share news stories (some legitimate, some not) and generally express themselves politically. It’s a tinderbox in normal times — and 2020 is far from normal.

While Facebook acted against foreign threats quickly after 2016, the company dragged its feet on platform changes that could be perceived as politically motivated — a hesitation that backfired by incubating dangerous extremists and allowing many kinds of misinformation, particularly on the far-right, to survive and thrive.

In spite of Facebook’s lingering misguided political fears, there are reasons to be hopeful that the company might avert election-related catastrophes.

Whether it was inspired by the threat of a contested election, federal antitrust action or a possible Biden presidency, Facebook has signaled a shift to more thoughtful moderation with a flurry of recent policy enforcement decisions. An accompanying flurry of election-focused podcast and television ads suggests Facebook is worried about public perception too — and it should be.

Twitter’s plan for the election has been well-communicated and detailed. In 2020, the company treats its policy decisions with more transparency, communicates them in real time and isn’t afraid to admit to mistakes. The relatively small social network plays an outsized role in publishing political content that’s amplified elsewhere, so the choices it makes are critical for countering misinformation and extremism.

The companies that host and amplify online political conversation have learned some major lessons since 2016 — mostly the hard way. Let’s just hope it was enough to help them guide their roiling platforms through one of the most fraught moments in modern U.S. history.

Facebook Faces Yet Another Outage: Platform Encounters Technical Issues Again

Updated: It seems that today’s issues with Facebook haven’t affected as many users as the last time. A smaller group of people appears to be impacted this time around, which is a relief compared to the larger incident before. Nevertheless, it’s still frustrating for those affected, and hopefully, the issues will be resolved soon by the Facebook team.

Facebook had another problem today (March 20, 2024). According to Downdetector, a website that shows when other websites are not working, many people had trouble using Facebook.

This isn’t the first time Facebook has had issues. Just a little while ago, there was another problem that stopped people from using the site. Today, when people tried to use Facebook, it didn’t work like it should. People couldn’t see their friends’ posts, and sometimes the website wouldn’t even load.

Downdetector, which watches out for problems on websites, showed that lots of people were having trouble with Facebook. People from all over the world said they couldn’t use the site, and they were not happy about it.

When websites like Facebook have problems, it affects a lot of people. It’s not just about not being able to see posts or chat with friends. It can also impact businesses that use Facebook to reach customers.

Since Facebook owns Messenger and Instagram, the problems with Facebook also meant that people had trouble using these apps. It made the situation even more frustrating for many users, who rely on these apps to stay connected with others.

During this recent problem, one thing is obvious: the internet is always changing, and even big websites like Facebook can have problems. While people wait for Facebook to fix the issue, it shows us how easily things online can go wrong. It’s a good reminder that we should have backup plans for staying connected online, just in case something like this happens again.

Christian family goes into hiding after being cleared of blasphemy

LAHORE, Pakistan — A court in Pakistan granted bail to a Christian falsely charged with blasphemy, but he and his family have separated and gone into hiding amid threats to their lives, sources said.

Haroon Shahzad, 45, was released from Sargodha District Jail on Nov. 15, said his attorney, Aneeqa Maria. Shahzad was charged with blasphemy on June 30 after posting Bible verses on Facebook that infuriated Muslims, causing dozens of Christian families in Chak 49 Shumaali, near Sargodha in Punjab Province, to flee their homes.

Lahore High Court Judge Ali Baqir Najfi granted bail on Nov. 6, but the decision and his release on Nov. 15 were not made public until now due to security fears for his life, Maria said.

Shahzad told Morning Star News by telephone from an undisclosed location that the false accusation has changed his family’s lives forever.

“My family has been on the run from the time I was implicated in this false charge and arrested by the police under mob pressure,” Shahzad told Morning Star News. “My eldest daughter had just started her second year in college, but it’s been more than four months now that she hasn’t been able to return to her institution. My other children are also unable to resume their education as my family is compelled to change their location after 15-20 days as a security precaution.”

Though he was not tortured during incarceration, he said, the pain of being away from his family and thinking about their well-being and safety gave him countless sleepless nights.

“All of this is due to the fact that the complainant, Imran Ladhar, has widely shared my photo on social media and declared me liable for death for alleged blasphemy,” he said in a choked voice. “As soon as Ladhar heard about my bail, he and his accomplices started gathering people in the village and incited them against me and my family. He’s trying his best to ensure that we are never able to go back to the village.”

Shahzad has met with his family only once since his release on bail, and they are unable to return to their village in the foreseeable future, he said.

“We are not together,” he told Morning Star News. “They are living at a relative’s house while I’m taking refuge elsewhere. I don’t know when this agonizing situation will come to an end.”

The Christian said the complainant, said to be a member of Islamist extremist party Tehreek-e-Labbaik Pakistan and also allegedly connected with banned terrorist group Lashkar-e-Jhangvi, filed the charge because of a grudge. Shahzad said he and his family had obtained valuable government land and allotted it for construction of a church building, and Ladhar and others had filed multiple cases against the allotment and lost all of them after a four-year legal battle.

“Another probable reason for Ladhar’s jealousy could be that we were financially better off than most Christian families of the village,” he said. “I was running a successful paint business in Sargodha city, but that too has shut down due to this case.”

Regarding the social media post, Shahzad said he had no intention of hurting Muslim sentiments by sharing the biblical verse on his Facebook page.

“I posted the verse a week before Eid Al Adha [Feast of the Sacrifice] but I had no idea that it would be used to target me and my family,” he said. “In fact, when I came to know that Ladhar was provoking the villagers against me, I deleted the post and decided to meet the village elders to explain my position.”

The village elders were already influenced by Ladhar and refused to listen to him, Shahzad said.

“I was left with no option but to flee the village when I heard that Ladhar was amassing a mob to attack me,” he said.

Shahzad pleaded with government authorities for justice, saying he should not be punished for sharing a verse from the Bible that in no way constituted blasphemy.

Similar to other cases

Shahzad’s attorney, Maria, told Morning Star News that events in Shahzad’s case were similar to other blasphemy cases filed against Christians.

“Defective investigation, mala fide on the part of the police and complainant, violent protests against the accused persons and threats to them and their families, forcing their displacement from their ancestral areas, have become hallmarks of all blasphemy allegations in Pakistan,” said Maria, head of The Voice Society, a Christian paralegal organization.

She said that the case filed against Shahzad was a gross violation of Section 196 of the Criminal Procedure Code (CrPC), which states that police cannot register a case under the Section 295-A blasphemy statute against a private citizen without the approval of the provincial government or federal agencies.

Maria added that Shahzad and his family have continued to suffer even though there was no evidence of blasphemy.

“The social stigma attached with a blasphemy accusation will likely have a long-lasting impact on their lives, whereas his accuser, Imran Ladhar, would not have to face any consequence of his false accusation,” she said.

The judge who granted bail noted that Shahzad was charged with blasphemy under Section 295-A, which is a non-cognizable offense, and Section 298, which is bailable. The judge also noted that police had not submitted the forensic report of Shahzad’s cell phone and said evidence was required to prove that the social media post was blasphemous, according to Maria.

Bail was set at 100,000 Pakistani rupees (US $350) and two personal sureties, and the judge ordered police to further investigate, she said.

Shahzad, a paint contractor, on June 29 posted on his Facebook page 1 Cor. 10:18-21 regarding food sacrificed to idols, as Muslims were beginning the four-day festival of Eid al-Adha, which involves slaughtering an animal and sharing the meat.

A Muslim villager took a screenshot of the post, sent it to local social media groups and accused Shahzad of likening Muslims to pagans and disrespecting the Abrahamic tradition of animal sacrifice.

Though Shahzad made no comment in the post, inflammatory or otherwise, the situation became tense after Friday prayers when announcements were made from mosque loudspeakers telling people to gather for a protest, family sources previously told Morning Star News.

Fearing violence as mobs grew in the village, most Christian families fled their homes, leaving everything behind.

In a bid to restore order, the police registered a case against Shahzad under Sections 295-A and 298. Section 295-A relates to “deliberate and malicious acts intended to outrage religious feelings of any class by insulting its religion or religious beliefs” and is punishable with imprisonment of up to 10 years, a fine, or both. Section 298 prescribes up to one year in prison, a fine, or both, for hurting religious sentiments.

Pakistan ranked seventh on Open Doors’ 2023 World Watch List of the most difficult places to be a Christian, up from eighth the previous year.

Morning Star News is the only independent news service focusing exclusively on the persecution of Christians. The nonprofit’s mission is to provide complete, reliable, even-handed news in order to empower those in the free world to help persecuted Christians, and to encourage persecuted Christians by informing them that they are not alone in their suffering.


