Facebook Adds Additional Fact-Checking Resources via ‘Community Reviewers’

Facebook continues its confusing approach to fact checking with a new program that will see the appointment of a team of ‘community reviewers’, a diverse group of non-Facebook employees who will be called upon to check potentially false reports.

As explained by Facebook:

“The program will have community reviewers work as researchers to find information that can contradict the most obvious online hoaxes or corroborate other claims. These community reviewers are not Facebook employees but instead will be hired as contractors through one of our partners. They are not making final decisions themselves. Instead, their findings will be shared with the third-party fact-checkers as additional context as they do their own official review.”

The process will work like this:

First, Facebook’s machine learning process will identify potentially false claims and misinformation in posts, as it does already. When content is tagged as potentially false, Facebook’s system will then send the post in question on to the new team of community reviewers. The community reviewers will be prompted to check the post, and if their own additional research finds that the post is, in fact, incorrect, they’ll be able to attach their findings, which Facebook’s fact-checkers will then be able to refer to in their official assessment.

The process essentially adds a broader spectrum of input into the fact-checking process, which could improve the accuracy of the outcomes, and lessen accusations of bias.

Facebook has partnered with data company YouGov to select its new community review panel, after YouGov conducted research into how to best build such an input group.

As per Facebook:

“YouGov conducted an independent study of community reviewers and Facebook users. They determined that the requirements used to select community reviewers led to a pool that’s representative of the Facebook community in the US and reflects the diverse viewpoints – including political ideology – of Facebook users. They also found the judgments of corroborating claims by community reviewers were consistent with what most people using Facebook would conclude.”

Note the specific mention of political ideology. By enabling more people to provide input into its fact-checking process, Facebook’s working both to improve the relative accuracy of its findings and to lessen accusations that it’s favoring one side of politics over another. Such accusations have become a key sticking point in its various fact-checking and political transparency processes – which brings us to the confusing part of Facebook’s broader fact-checking improvements: its stance against fact-checking political ads.

Honestly, with every improvement, with every expansion of its overall fact-checking program, Facebook’s decision not to fact-check political ads seems less and less logical, or viable as a long-term measure.

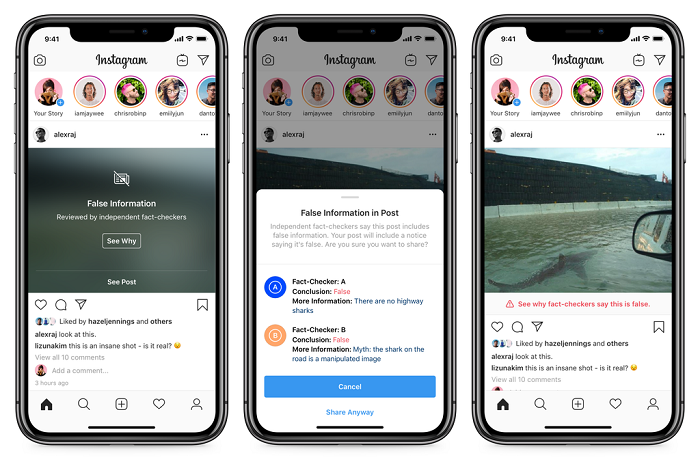

The company continues to invest significant resources into its fact-checking program, underlining its importance – just this week, Facebook expanded its fact-checking program on Instagram.

So it’s clearly important. Facebook clearly acknowledges that it needs to do what it can to limit the spread of misleading information and false claims on its platform, especially when it comes to concerning movements like anti-vaxxers.

Misinformation is a problem – so why allow politicians, a group which has huge influence over public opinion, to share false claims within their ad content?

Facebook has previously noted that it doesn’t want to be the referee on political speech, and that the public has a right to know what’s being said by political leaders.

As per Mark Zuckerberg, in his speech announcing Facebook’s political ads stance:

“Political advertising is more transparent on Facebook than anywhere else — we keep all political and issue ads in an archive so everyone can scrutinize them, and no TV or print does that. We don’t fact-check political ads. We don’t do this to help politicians, but because we think people should be able to see for themselves what politicians are saying. And if content is newsworthy, we also won’t take it down even if it would otherwise conflict with many of our standards.”

But the problem, as noted, is that political leaders carry significant sway. For example, if a recognized political figure were to come out and say that, yes, vaccines are dangerous, that would add serious weight to the anti-vaccine movement, and embolden its supporters to push harder in their campaigning. And under Facebook’s current rules, a political leader could do just that, via Facebook ads.

Does that seem like the right approach? And given Facebook’s broader efforts to stop the spread of misinformation, including this new update, does that not seem out of step with its overall effort?

Confusing. Unclear. But, for what it’s worth, Facebook is adding in a new way to improve the accuracy of its general fact-checking by running content by a more diverse collection of reviewers. So that’s something, I guess.