Twitter and Facebook Announce Over 6,000 Account Removals Related to Political Manipulation

As we head into the holiday break, both Twitter and Facebook have announced a raft of new profile and Page removals as part of each platform’s ongoing investigations into co-ordinated manipulation of their networks for political influence campaigns.

And the scope of these latest removals is significant – the largest action of its type yet reported by Twitter:

- Twitter has removed 5,929 accounts originating from Saudi Arabia, which were part of a larger network of “88,000 accounts engaged in spammy behavior across a wide range of topics”.

- Facebook has removed 39 Facebook accounts, 344 Pages, 13 Groups and 22 Instagram accounts which were part of a domestic-focused network that originated in the country of Georgia

- Facebook has also removed 610 accounts, 89 Facebook Pages, 156 Groups and 72 Instagram accounts that originated in Vietnam and the US. The network focused primarily on the US, with some activity aimed at Vietnam and at Spanish- and Chinese-speaking audiences globally.

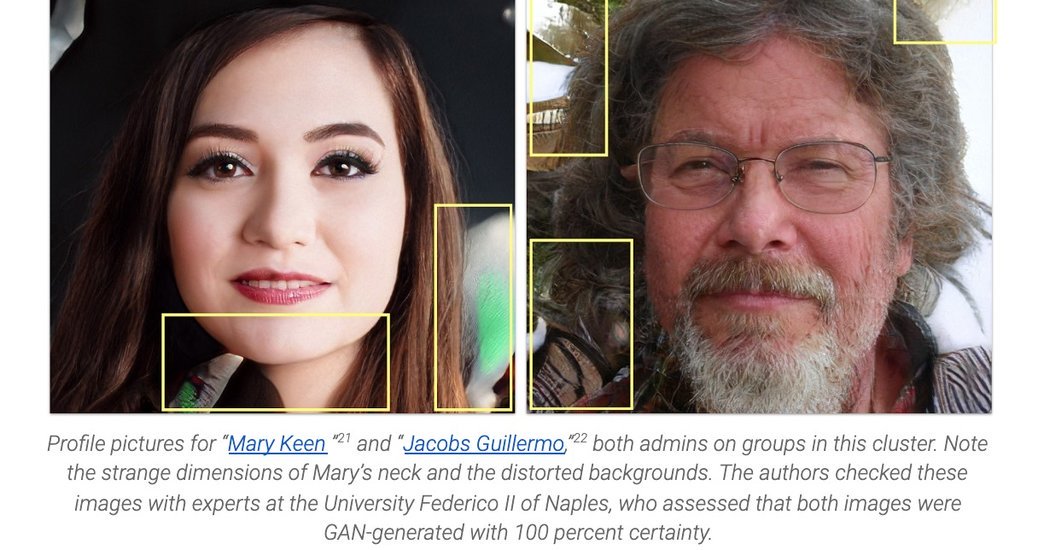

Of specific interest in this case is that, as reported by The New York Times, Facebook found that the Pages and profiles in the latter network, which is linked to Epoch Media Group, utilized “fake profile photos which had been generated with the help of artificial intelligence.”

That’s a particularly concerning development, which could point to the next phase of digital manipulation campaigns.

Twitter’s investigation focused on a Saudi marketing company called Smaat, which runs both political and commercial operations. Twitter says that while Smaat looks like a standard social media management agency on the surface, the company has links to the Saudi royal family, and recruited two Twitter employees “who searched internal databases for information about critics of the Saudi government”.

Smaat-operated profiles have sent over 32 million tweets, and gained millions of followers – and while many of the tweets from these profiles appear innocent, there are propaganda messages mixed in.

10/ Anti-Qatar and anti-Iran messages were common. There were lots of hashtags critical of the prince of Qatar, such as “Tamim, you are a liar like your father” (translated), and “Tamim supports the genocide of the Uighurs” and “From where did you get the yacht, Tamim?”

— Renee DiResta (@noUpside) December 20, 2019

These profiles also regularly asked users to “retweet” or “follow”, which led to smaller sub-groups growing within the networks. Twitter also notes that there was “a substantial amount of automated ‘fluff’ to make it hard to figure out what the accounts were focused on”.

The level of detail here is interesting, and provides some insights into the evolving tactics of such operations. Facebook has also provided specific examples of posts shared by the profiles it’s removed for coordinated inauthentic behavior.

These new account removals add to the thousands of documented account/profile deletions for coordinated manipulation across the two social platforms this year.

Here’s a reminder of the scope of those activities – all from 2019:

- Twitter removed 4,779 accounts and their activity originating from Iran, while Facebook removed more than 800 Pages and 36 Facebook accounts, also linked to Iranian-backed organizations (initial action in January).

- Facebook removed 265 Facebook and Instagram accounts, Pages, Groups and events linked to Israel.

- Facebook removed 500 Facebook accounts, Pages and Groups linked to Russia (initial findings from January), while Twitter removed 4 accounts which were found to be connected with Russia’s Internet Research Agency (which has been linked to manipulation leading into the 2016 US Presidential Election)

- Facebook removed 103 Pages, Groups and accounts on both Facebook and Instagram which had been found to be engaging in coordinated inauthentic behavior as part of a network that originated in Pakistan

- Facebook removed 687 Facebook Pages and accounts which had engaged in coordinated inauthentic behavior in India

- Facebook took action against 420 Pages, Groups and accounts based in the Philippines which engaged in “coordinated inauthentic behavior” on Facebook and Instagram (initial action in January)

- Facebook banned 2,632 Pages, Groups and accounts which were found to be connected to state-backed operations originating from Iran, Russia, Macedonia and Kosovo

- Facebook removed 137 Facebook and Instagram accounts, Pages and Groups which were part of a domestic-focused network in the UK

- Facebook took action against 4 Pages, 26 Facebook accounts, and 1 Group which originated from Romania

- Twitter removed 130 accounts linked to Spain

- Facebook removed 168 Facebook accounts, 28 Pages and 8 Instagram accounts for engaging in “coordinated inauthentic behavior” targeting people in Moldova

- Facebook removed 234 accounts, Pages and Groups from Facebook and Instagram as part of a domestic network in Indonesia

- Twitter removed 33 accounts connected to Venezuela

- Facebook removed 9 Facebook Pages and 6 Facebook accounts for engaging in “coordinated inauthentic behavior” originating from Bangladesh

- Facebook also took increased action in Myanmar, including the removal of at least three co-ordinated misinformation networks.

- Twitter and Facebook both removed networks of accounts sharing misinformation around the Hong Kong protests – Twitter removed 936 accounts, originating from within China, in August.

With the 2020 US Presidential election looming, you can bet that this will remain a key area of focus for both platforms, while, at some stage, there may also be a push for Facebook, in particular, to take stronger action against Pages which share false and misleading content.

At present, Facebook says that:

“Pages that repeatedly publish or share misinformation will see their distribution reduced and their ability to monetize and advertise removed.”

The removal of monetization is significant, but at some stage, Facebook might also need to consider removing these Pages altogether, to push admins to better vet the content they share and/or create and so help stop the spread of false reports. If there’s a risk of losing your Page entirely, as opposed to facing a temporary sanction, that could put more onus on Page managers to be diligent, rather than simply posting whatever comes across their path and aligns with their own biases.

Of course, any measure of this type is more complex in practice, and Facebook doesn’t want to get into overt censorship. But if misinformation is once again a key driver of voter behavior in 2020, you can bet the calls for stronger action will only get louder. And Facebook, in particular, is at the core of how such content is distributed.