MARKETING

SEO in Real Life: Harnessing Visual Search for Optimization Opportunities

The author’s views are entirely his or her own (excluding the unlikely event of hypnosis) and may not always reflect the views of Moz.

The most exciting thing about visual search is that it’s becoming a highly accessible way for users to interpret the real world, in real time, as they see it. Camera phones are no longer just passive recording tools; they are now a primary resource for knowledge and understanding in daily life.

Users are searching with their own unique photos to discover content. This includes interactions with products, brand experiences, stores, and employees, which means that SEO can and should be taken into consideration for a number of real-world situations, including:

Though SEOs have little control over which photos people take, we can optimize our brand presentation to ensure we are easily discoverable by visual search tools. By prioritizing the presence of high-impact visual search elements and coordinating online SEO with offline branding, businesses of all sizes can see results.

What is visual search?

Sometimes referred to as search-what-you-see, visual search, in the context of SEO, is the act of querying a search engine with a photo rather than with text. To surface results, search engines and digital platforms use AI and visual recognition technology to identify elements in the image and supply the user with relevant information.

Though Google’s visual search tools are getting a lot of attention at the moment, Google isn’t the only tech company working on visual search. Pinterest has been at the forefront of this space for many years, and today you can see visual search in action on:

In the last year, Google has spoken extensively about its visual search capabilities, hinging a number of its search improvements on Google Lens and adding more functionality all the time. As a result, Google Lens usage has tripled year on year, with an estimated 8 billion Google Lens searches taking place each month.

Though there are many lessons to be learned from the wide range of visual search tools, which each have their own data sets, for the purpose of this article we will be looking at visual search on Google Lens and Search.

Are visual search and image search SEO the same?

No, visual search optimization is not exactly the same as image search optimization. Image search optimization forms part of the visual search optimization process, but they’re not interchangeable.

Image search SEO

With Image Search, you should prioritize helping images surface when users enter text-based queries. To do this, your images should follow image SEO best practices like:

- Modern file formats

- Alt text

- Alt tags

- Relevant file names

- Schema markup

All of this helps Google return an image search result for a text-based query, but one of the main challenges with this approach is that it requires the user to know which term to enter.
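Schema markup is one of the standard image SEO practices listed above. As a minimal sketch, the Python below builds a schema.org `ImageObject` JSON-LD snippet that could sit next to a product photo on a page. The URLs, names, and license page are hypothetical placeholders, and the choice of properties (`contentUrl`, `name`, `description`, `creator`, `license`) is an assumption; check Google's image metadata documentation for the properties it currently reads.

```python
import json


def image_object_jsonld(content_url: str, name: str, description: str,
                        creator: str, license_url: str) -> str:
    """Build a <script> tag containing schema.org ImageObject JSON-LD.

    Every value passed in below is a hypothetical example, not real site data.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "ImageObject",
        "contentUrl": content_url,     # direct URL of the image file (descriptive file name)
        "name": name,
        "description": description,   # plain-language description, like good alt text
        "creator": {"@type": "Organization", "name": creator},
        "license": license_url,        # page describing how the image may be used
    }
    # json.dumps guarantees valid JSON; indent=2 is only for readability.
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")


if __name__ == "__main__":
    print(image_object_jsonld(
        content_url="https://www.example.com/images/triceratops-toy.jpg",
        name="Triceratops toy",
        description="Four-legged toy dinosaur with three horns on its face",
        creator="Example Toys",
        license_url="https://www.example.com/image-license",
    ))
```

The markup only describes the image for text-based retrieval; as the rest of this section argues, it does not by itself make the image a good visual match.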

For instance, with the query “dinosaur with horns,” an image search will return a few different dinosaur topic filters and lots of different images. To find the best result, I would need to filter and refine the query significantly.

Visual search SEO

With visual search, the image is the query, meaning that I can take a photo of a toy dinosaur with horns, search with Google Lens, then Google refines the query based on what it can see from the image.

When you compare the two search results, the SERP for the visual search is a better match for the initial image query because there are visual cues within the image. So I am only seeing results for a dinosaur with horns, that is quadrupedal, and only has horns on the face, not the frill.

From a user perspective, this is great because I didn’t have to type anything and I got a helpful result. And from Google’s perspective, this is also more efficient because they can assess the photo and decide which element to filter for first in order to get to the best SERP.

The standard image optimizations form part of what Google considers in order to surface relevant results, but if you stop there, you don’t get the full picture.

Which content elements are best interpreted in visual search

Visual search tools identify objects, text, and images, but certain elements are easier to identify than others. When users carry out a visual search, Google taps into multiple data sources to satisfy the query.

The Knowledge Graph, Vision AI, Google Maps, and other sources combine to surface search results, but in particular, Google’s tools have a few priority elements. When these elements are present in a photo, Google can sort, identify, and/or visually match similar content to return results:

- Landmarks are identified visually but are also connected to their physical location on Google Maps, meaning that local businesses or business owners should use imagery to demonstrate their location.

- Logos are interpreted in their entirety, rather than as single letters. So even without any text, Google can understand that the swoop means Nike. This data comes from the logos in knowledge panels, website structured data, Google Business Profile, Google Merchant, and other sources, so they should all align.

- Knowledge Graph Entities (KGEs) are used to tag and categorize images and have a significant impact on which SERP is displayed for a visual search. Google recognizes around 5 billion KGEs, so it is worth considering which ones are most relevant to your brand and ensuring that they are visually represented on your site.

- Text is extracted from images via optical character recognition (OCR), which has some limitations: not all languages are recognized, nor are backwards letters. So if your users regularly search photos of printed menus or other printed text, you should consider the readability of the fonts (or the handwriting on specials boards) you use.

- Faces are interpreted for sentiment, but the number of faces is also taken into account, meaning that businesses that serve large groups of people (like event venues or cultural institutions) would do well to include images that demonstrate this.

| Visual Search Element | Corresponding Online Activity | Priority Verticals |
|---|---|---|
| Landmarks | Website Images, Google Maps, Google Business Profile | Tourism, Restaurants, Cultural Institutions, Local Businesses |
| Logo | Website Images, Website Structured Data, Google Merchant, Google Business Profile, Wikipedia, Knowledge Panel | All |
| Knowledge Graph Entities | Website Images, Image Structured Data, Google Business Profile | Ecommerce, Events, Cultural Institutions |
| Text | Website copy, Google Business Profile | All |
| Faces | Website images, Google Business Profile | Events, Tourism, Cultural Institutions |

How to optimize real world spaces for visual search

Just as standard SEO should be focused on meeting and anticipating customer needs, visual search SEO requires awareness of how customers interact with products and services in real-world spaces. This means SEOs should apply the same attention to user-generated content (UGC) that one would use for keyword research. To that end, I would argue we should also think about consciously applying optimizations to the potential content of these images.

Optimize sponsorship with unobstructed placements

This might seem like a no-brainer, but in busy sponsorship spaces it can sometimes be a challenge. As an example, let’s take this photo from a visit to the Staples Center a few years ago.

Like any sports arena, this is filled to the brim with sponsorship endorsements on the court, the basket, and around the venue.

But when I run a visual search assessment for logos, the only one that can clearly be identified is the Kia logo in the jumbotron.

This isn’t because Kia’s logo is especially distinct or unique, since there is another Kia logo under the basketball hoop. Rather, it’s because the jumbotron placement is clean in terms of composition, with lots of negative space around the logo and fewer identifiable entities in the immediate vicinity.

Within the wider arena, many of the other sponsorship placements are being read as text, including Kia’s logo below the hoop. This has some value for these brands, but since text recognition doesn’t always complete the word, the results can be inconsistent.

So what does any of this have to do with SEO?

Well, Google Image Search now includes results that use visual recognition, independent of text cues. For example, for a Google Image Search with the query “kia staples center,” two of the top five results do not have the word “kia” in the copy, alt text, or alt tags of the web pages they are sourced from. So visual search is impacting rankings here, and with Google Images accounting for roughly 20% of online searches, this can have a significant impact on search visibility.

What steps should you take to SEO your sponsorships?

Whether it’s major league or the local bowling league, in order to get the most benefit from visual search, if you are sponsoring something which is likely to be photographed extensively, you should:

- Ensure that your real life sponsorship placement is in an unobscured location

- Use the same logo in real life that is in your schema, GBP, and knowledge panel

- Get a placement with good lighting and high contrast brand colors

- Don’t rely on “light up” logos or flags that have inconsistent visibility on camera phones
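The second item on that checklist is easier to keep honest if the logo lives in one place. A minimal sketch, assuming a hypothetical brand name, domain, profile URL, and hand-maintained inventory of where each logo version is used: the Python below emits schema.org `Organization` JSON-LD with a `logo` property, plus a small helper that flags any placement (signage, GBP, and so on) whose recorded logo file differs from the one in your schema.

```python
import json


def organization_jsonld(name: str, url: str, logo_url: str, same_as: list[str]) -> str:
    """schema.org Organization JSON-LD; all example values below are hypothetical."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo_url,   # should be the same artwork used on real-world placements
        "sameAs": same_as,  # official profiles that should display the same logo
    }
    return json.dumps(data, indent=2)


def logo_mismatches(reference_logo: str, logos_by_source: dict[str, str]) -> list[str]:
    """Return the sources whose recorded logo file differs from the reference.

    logos_by_source is a hypothetical inventory you maintain by hand, e.g.
    {"arena signage": "logo.png", "Google Business Profile": "old-logo.png"}.
    """
    return sorted(src for src, logo in logos_by_source.items() if logo != reference_logo)


if __name__ == "__main__":
    print(organization_jsonld(
        "Example Sports Co.",
        "https://www.example.com",
        "https://www.example.com/brand/logo.png",
        ["https://www.example.com/official-profile"],
    ))
    print(logo_mismatches("logo.png", {
        "website schema": "logo.png",
        "arena signage": "logo.png",
        "Google Business Profile": "old-logo.png",
    }))  # ['Google Business Profile']
```

Comparing file names is a crude proxy; a real audit would compare the artwork itself, but the point is to give every placement a single reference logo.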

You should also ensure that you’re aligning your real life presence with your digital activity. Include images of the sponsorship display on your website so that you can surface for relevant queries. If you dedicate a blog post to the sponsorship activity that includes relevant images, image search optimizations, and copy, you increase your chances of outranking other content and bringing those clicks to your site.

Optimizing merch & uniforms for search

When creating merchandising and uniforms, visual discoverability for search should be a priority because users can search photos of promotional merch and images with team members in a number of ways and for an indefinite period of time.

Add text and/or logos

For instance, from my own camera roll, I have a few photos that can be categorized via the Google Photos machine-learning-powered image search with the query “nasa.” Two of these photos include the word “NASA” and the others include the logo.

Oddly enough, though, the photo of my Women of NASA LEGO set does not surface for this query. It shows for “lego” but not for “nasa.” Looking closely at the item itself, I can see that neither the NASA logo nor the text has been included in the design of the set.

Adding relevant text and/or logos to this set would have optimized this merchandise for both brands.

Stick to relevant brand colors

And since Google’s visual search AI is also able to discern brand colors, you should also prioritize merchandise that is in keeping with your brand colors. T-shirts and merch that deviate from your core color scheme will be less likely to make Visual Matches when users search via Google Lens.

In the example above, event merchandise created outside of the core brand colors of red, black, and white was much less recognizable than stationery in the typical brand colors.

Focus on in-person brand experiences

Creating experiences with customers in store and at events can be a great way to build brand relationships. It’s possible to leverage these activities for search if you take an SEO-centric approach.

Reduce competition

Let’s consider this image from a promotional experience in Las Vegas for Lyft. As a user, I enjoyed this immensely, so much so that I took a photo.

Though the Viva Lyft Vegas event was created by the rideshare company, in terms of visual search, Pabst are genuinely taking the blue ribbon, as they are the main entity identified in this query. But why?

First, Pabst has claimed their knowledge panel while Lyft has not, meaning that Lyft is less recognizable as a visual entity because it is less defined as an entity.

Second, though it does not have a Google Maps entry, the Las Vegas PBR sign has had landmark-esque treatment since it was installed, with features in The Neon Museum and a UNLV Neon Survey. All of this to say that, in this context, Lyft is being upstaged.

So to create a more SEO-friendly promotional space, Lyft could have laid the groundwork by claiming its knowledge panel and removing competing visual search entities from the viewable space to make sure all eyes were on the brand.

Encourage optimized user-generated content

Sticking to Las Vegas, here is a typical touristy photo of me with friends outside the Excalibur Hotel:

And when I say that it’s typical, that’s not conjecture. A quick visual search reveals many other social media posts and websites with similar images.

This is what I refer to as “that picture.” You know the kind of high-occurrence UGC photos: under the castle at the entrance to Disneyland or even the pink wall at Paul Smith’s on Melrose Ave. These are the photos that everyone takes.

Can you SEO these photos for visual search? Yes, I believe you can in two ways:

- Encourage people to take photos in certain places that you know include, or have designed to include, relevant entities, text, logos, and/or landmarks in the viewline. You can do this by declaring an area a scenic viewpoint or creating a photo-friendly, dare I say “Instagrammable,” area in your store or venue.

- Ensure frequently photographed mobile brand representations (e.g., mascots and/or vehicles) are easily recognizable via visual search. Where applicable, you should also claim their knowledge panels.

Once you’ve taken these steps, create dedicated content on your website with images that can serve as a “visual match” to this high frequency UGC. Include relevant copy and image search optimizations to demonstrate authority and make the most of this visibility.

How does this change SEO?

The notion of bringing visual search considerations to real world spaces may seem initially daunting, but this is also an opportunity for businesses of all sizes to consolidate brand identities in an effective way. Those working in SEO should coordinate efforts with PR, branding, and sponsorship teams to capture visual search traffic for brand wins.


How to Use AI For a More Effective Social Media Strategy, According to Ross Simmonds

Welcome to Creator Columns, where we bring expert HubSpot Creator voices to the Blogs that inspire and help you grow better.

It’s the age of AI, and our job as marketers is to keep up.

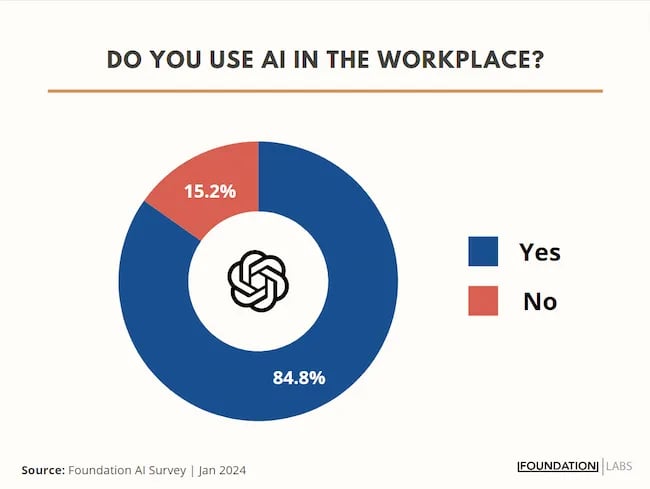

My team at Foundation Marketing recently conducted an AI Marketing study surveying hundreds of marketers, and more than 84% of all leaders, managers, SEO experts, and specialists confirmed that they used AI in the workplace.

If you can overlook the fear-inducing headlines, this technology is making social media marketers more efficient and effective than ever. Translation: AI is good news for social media marketers.

In fact, I predict that the marketers not using AI in their workplace will be using it before the end of this year, and that number will move closer and closer to 100%.

Social media and AI are two of the most revolutionary technologies of the last few decades. Social media has changed the way we live, and AI is changing the way we work.

So, I’m going to condense and share the data, research, tools, and strategies that the Foundation Marketing Team and I have been working on over the last year to help you better wield the collective power of AI and social media.

Let’s jump into it.

What’s the role of AI in social marketing strategy?

In a recent episode of my podcast, Create Like The Greats, we dove into some fascinating findings about the impact of AI on marketers and social media professionals. Take a listen here:

Let’s dive a bit deeper into the benefits of this technology:

Benefits of AI in Social Media Strategy

AI is to social media what a conductor is to an orchestra — it brings everything together with precision and purpose. The applications of AI in a social media strategy are vast, but the virtuosos are few who can wield its potential to its fullest.

AI to Conduct Customer Research

Imagine you’re a modern-day Indiana Jones, not dodging boulders or battling snakes, but rather navigating the vast, wild terrain of consumer preferences, trends, and feedback.

This is where AI thrives.

Using social media data, from posts on X to comments and shares, AI can turn this information into insights about your business and industry. Let’s say, for example, you’re a business that has 2,000 customer reviews on Google, Yelp, or a software review site like Capterra.

Leveraging AI, you can now have all 2,000 of these customer reviews analyzed and summarized into an insightful report in a matter of minutes. You simply need to download them into a doc and then upload it to your favorite Generative Pre-trained Transformer (GPT) to get the insights and data you need.

But that’s not all.

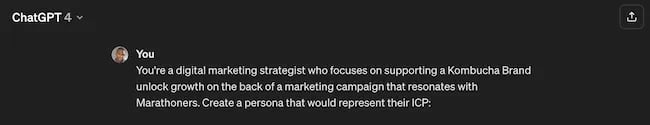

You can also become a prompt engineer and ask ChatGPT to help you better understand your audience. For example, if you’re trying to come up with a persona for people who enjoy marathons but also love kombucha, you could write a prompt like this to ChatGPT:

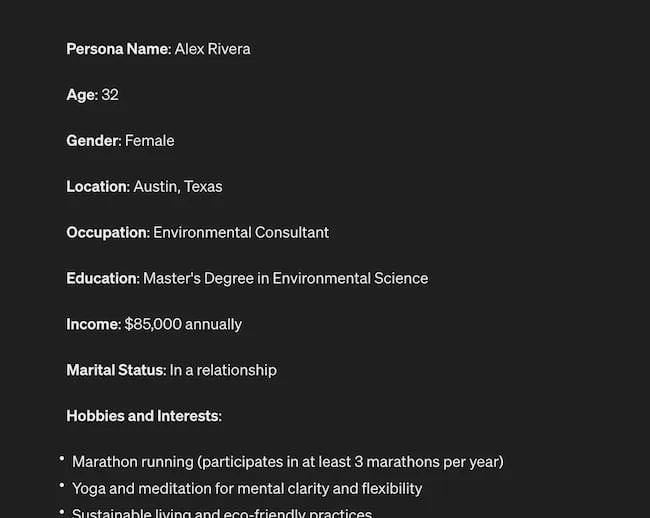

The response that ChatGPT provided back is quite good:

Below this it went even deeper by including a lot of valuable customer research data:

- Demographics

- Psychographics

- Consumer behaviors

- Needs and preferences

And best of all…

It also included marketing recommendations.

The power of AI is unbelievable.

Social Media Content Using AI

AI’s helping hand can be unburdening for the creative spirit.

Instead of marketers having to come up with new copy every single month for posts, AI social caption generators are making it easier than ever to craft catchy status updates in a matter of seconds.

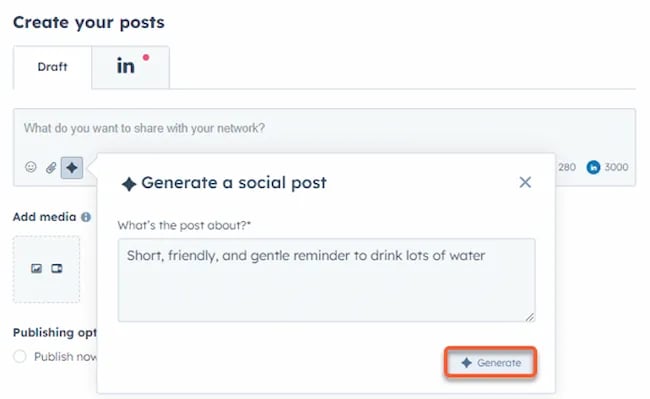

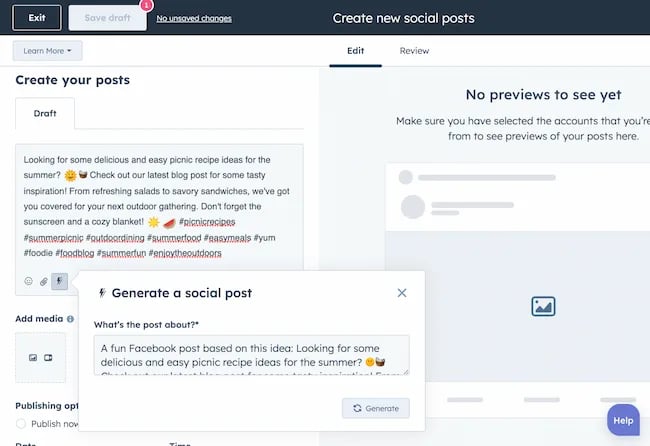

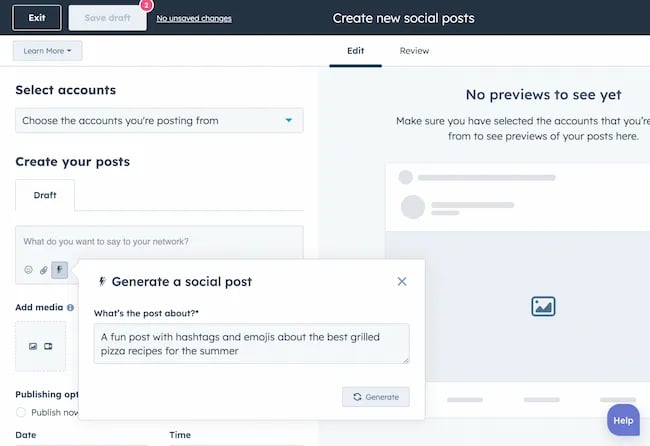

Tools like HubSpot make it as easy as clicking a button and telling the AI tool what you’re looking to create a post about:

The best part of these AI tools is that they’re not limited to one channel.

Your AI social media content assistant can help you with LinkedIn content, X content, Facebook content, and even the captions that support your post on Instagram.

It can also help you navigate hashtags:

With AI social media tools that generate content ideas or even write posts, it’s not about robots replacing humans. It’s about making sure that the human creators on your team are focused on what really matters — adding that irreplaceable human touch.

Enhanced Personalization

You know that feeling when a brand gets you, like, really gets you?

AI makes that possible through targeted content that’s tailored with a level of personalization you’d think was fortune-telling if the data didn’t paint a starker, more rational picture.

What do I mean?

Brands can engage more quickly with AI than ever before. In the early 2000s, a lot of brands spent millions of dollars to create social media listening rooms where they would hire social media managers to find and engage with any conversation happening online.

Thanks to AI, brands now have the ability to do this at scale with far fewer people, all while still delivering quality engagement to the recipient.

Analytics and Insights

Tapping into AI to dissect the data gives you a CSI-like precision to figure out what works, what doesn’t, and what makes your audience tick. It’s the difference between guessing and knowing.

The best part about AI is that it can put almost any expert at your fingertips.

If you run a report surrounding the results of your social media content strategy directly from a site like LinkedIn, AI can review the top posts you’ve shared and give you clear feedback on what type of content is performing, why you should create more of it, and what days of the week your content is performing best.

This type of insight would typically take hours to uncover.

Now …

Thanks to the power of AI, you can upload a spreadsheet filled with rows and columns of data and receive a handful of valuable insights a few minutes later.

Improved Customer Service

Want 24/7 support for your customers?

It’s now possible without a human on the other end.

Chatbots powered by AI are taking the lead on direct messaging experiences for brands on Facebook and other Meta properties to offer round-the-clock assistance.

The fact that AI can be trained on past customer queries and data to inform future queries and problems is a powerful development for social media managers.

Advertising on Social Media with AI

The majority of ad networks have used some variation of AI to manage their bidding systems for years. Now, thanks to AI’s ability to be incorporated into more tools, brands are able to use AI to create better and more interesting ad campaigns than ever before.

Brands can use AI to create images using tools like Midjourney and DALL-E in seconds.

Brands can use AI to create better copy for their social media ads.

Brands can use AI tools to support their bidding strategies.

The power of AI and social media continues to evolve daily, and it’s not exclusively found on the organic side of the coin. Paid media on social platforms is being shaken up by AI just the same.

How to Implement AI into Your Social Media Strategy

Ready to hit “Go” on your AI-powered social media revolution?

Don’t just start the engine and hope for the best. Remember the importance of building a strategy first. In this video, you can learn about some of the most important factors, ranging from (but not limited to) SMART goals to leveraging influencers in your day-to-day work:

The following seven steps are crucial to building a social media strategy:

- Identify Your AI and Social Media Goals

- Validate Your AI-Related Assumptions

- Conduct Persona and Audience Research

- Select the Right Social Channels

- Identify Key Metrics and KPIs

- Choose the Right AI Tools

- Evaluate and Refine Your Social Media and AI Strategy

Keep reading, roll up your sleeves, and follow this roadmap:

1. Identify Your AI and Social Media Goals

If you’re just dipping your toes into the AI sea, start by defining clear objectives.

Is it to boost engagement? Streamline your content creation? Or simply understand your audience better? It’s important that you spend time understanding what you want to achieve.

For example, say you’re a content marketing agency like Foundation and you’re trying to increase your presence on LinkedIn. The specificity of this goal will help you understand the initiatives you want to achieve and determine which AI tools could help you make that happen.

Are there AI tools that will help you create content more efficiently? Are there AI tools that will help you optimize LinkedIn Ads? Are there AI tools that can help with content repurposing? All of these things are possible and having a goal clearly identified will help maximize the impact. Learn more in this Foundation Marketing piece on incorporating AI into your content workflow.

Once you have identified your goals, it’s time to get your team on board and assess what tools are available in the market.


2. Validate Your AI-Related Assumptions

Assumptions are dangerous — especially when it comes to implementing new tech.

Don’t assume AI is going to fix all your problems.

Instead, start with small experiments and track their progress carefully.

3. Conduct Persona and Audience Research

Social media isn’t something that you can just jump into.

You need to understand your audience and ideal customers. AI can help with this, but you’ll need to be familiar with best practices. If you need a primer, this will help:

Once you understand the basics, consider ways in which AI can augment your approach.

4. Select the Right Social Channels

Not every social media channel is the same.

It’s important that you understand which channel is right for you and embrace it.

The way you use AI for X is going to be different from the way you use AI for LinkedIn. On X, you might use AI to help you develop a long-form thread filled with facts and figures. On LinkedIn, however, you might use AI to repurpose a blog post into a carousel PDF. The content that AI can help you create for X is different from the content that works on LinkedIn.

The audiences are different.

The content formats are different.

So operate and create a plan accordingly.


5. Identify Key Metrics and KPIs

What metrics are you trying to influence the most?

Spend time understanding the social media metrics that matter to your business and make sure that they’re prioritized as you think about the ways in which you use AI.

These are a few that matter most:

- Reach: Post reach signifies the count of unique users who viewed your post. How much of your content truly makes its way to users’ feeds?

- Clicks: This refers to the number of clicks on your content or account. Monitoring clicks per campaign is crucial for grasping what sparks curiosity or motivates people to make a purchase.

- Engagement: The total social interactions divided by the number of impressions. This metric reveals how your audience perceives you and how ready they are to engage.
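The reach and engagement definitions above reduce to simple arithmetic. A minimal sketch in Python, assuming you can export a list of viewer IDs per post (a hypothetical export; most platforms report reach as an aggregate number instead) along with interaction and impression counts:

```python
def reach(viewer_ids: list[str]) -> int:
    """Unique users who viewed a post; repeat views by the same user count once."""
    return len(set(viewer_ids))


def engagement_rate(interactions: int, impressions: int) -> float:
    """Total social interactions divided by impressions (0.0 when nothing was shown)."""
    if impressions <= 0:
        return 0.0
    return interactions / impressions


if __name__ == "__main__":
    # Hypothetical post: 5 impressions from 3 distinct users, 2 interactions.
    views = ["ana", "ben", "ana", "cy", "ben"]
    print(reach(views))                     # 3
    print(engagement_rate(2, len(views)))   # 0.4
```

Here every display counts toward impressions (5), while reach counts each person once (3), which is why reach can never exceed impressions.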

Of course, it’s going to depend greatly on your business.

But with this information, you can ensure that your AI social media strategy is rooted in goals.

6. Choose the Right AI Tools

The AI landscape is filled with trash and treasure.

Pick AI tools that are most likely to align with your needs and your level of tech-savviness.

For example, if you’re a blogger creating content about pizza recipes, you can use HubSpot’s AI social caption generator to write the message on your behalf:

The benefit of an AI tool like HubSpot and the caption generator is that what at one point took 30-40 minutes to come up with — you can now have it at your fingertips in seconds. The HubSpot AI caption generator is trained on tons of data around social media content and makes it easy for you to get inspiration or final drafts on what can be used to create great content.

Consider your budget, the learning curve, and what kind of support the tool offers.

7. Evaluate and Refine Your Social Media and AI Strategy

AI isn’t a magic wand; it’s a set of complex tools and technology.

You need to be willing to pivot as things come to fruition.

If you notice that a certain activity is falling flat, consider how AI can support that process.

Did you notice that your engagement isn’t where you want it to be? Consider using an AI tool to assist with crafting more engaging social media posts.

Make AI Work for You — Now and in the Future

AI has the power to revolutionize your social media strategy in ways you may have never thought possible. With its ability to conduct customer research, create personalized content, and so much more, thinking about the future of social media is fascinating.

We’re going through one of the most interesting times in history.

Stay equipped to ride the wave of AI, and make sure you’re embracing the best practices outlined in this piece to get the most out of the technology.


Advertising in local markets: A playbook for success

Many brands, such as those in the home services industry or a local grocery chain, market to specific locations, cities or regions. There are also national brands that want to expand in specific local markets.

Regardless of the company or purpose, advertising on a local scale calls for different tactics than advertising on a national scale. Brands need to connect their messaging directly with the specific communities they serve and tailor their media to their target demographic. Here’s a playbook to help your company succeed when marketing on a local scale.

1. Understand local vs. national campaigns

Local advertising differs from national campaigns in several ways:

- Audience specificity: By zooming in on precise geographic areas, brands can tailor messaging to align with local communities’ customs, preferences and nuances. This precision targeting ensures that your message resonates with the right target audience.

- Budget friendliness: Local advertising is often more accessible for small businesses. Local campaign costs are lower, enabling brands to invest strategically within targeted locales. This budget-friendly nature does not diminish the need for strategic planning; instead, it emphasizes allocating resources wisely to maximize returns. As a result, testing budgets can be allocated across multiple markets to maximize learnings for further market expansion.

- Channel selection: Selecting the correct channels is vital for effective local advertising. Local newspapers, radio stations, digital platforms and community events each offer advantages. The key lies in understanding where your target audience spends time and focusing efforts to ensure optimal engagement.

- Flexibility and agility: Local campaigns can be adjusted more swiftly in response to market feedback or changes, allowing brands to stay relevant and responsive.

Maintaining brand consistency across local touchpoints reinforces brand identity and builds a strong, recognizable brand across markets.

2. Leverage customized audience segmentation

Customized audience segmentation is the process of dividing a market into distinct groups based on specific demographic criteria. This marketing segmentation supports the development of targeted messaging and media plans for local markets.

For example, a coffee chain might cater to two distinct segments: young professionals and retirees. After identifying these segments, the chain can craft messages, offers and media strategies relating to each group’s preferences and lifestyle.

To reach young professionals in downtown areas, the chain might focus on convenience, quality coffee and a vibrant atmosphere that is conducive to work and socializing. Targeted advertising on Facebook, Instagram or Connected TV, along with digital signage near office complexes, could capture the attention of this demographic, emphasizing quick service and premium blends.

Conversely, for retirees in residential areas, the chain could highlight a cozy ambiance, friendly service and promotions such as senior discounts. Advertisements in local print publications, community newsletters, radio stations and events like senior coffee mornings would foster a sense of community and belonging.
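The coffee chain example is rule-based segmentation: each customer is assigned to a group by demographic and location criteria, and each group maps to its own channel mix. A minimal sketch in Python; the age cut-offs, area labels, and sample customers are hypothetical assumptions, while the channel lists come from the example above.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Customer:
    age: int
    area: str  # hypothetical labels: "downtown" or "residential"


# Channel mix per segment, taken from the coffee chain example.
CHANNELS = {
    "young_professionals": ["Facebook", "Instagram", "Connected TV", "digital signage near offices"],
    "retirees": ["local print", "community newsletters", "local radio", "senior coffee mornings"],
    "unassigned": [],
}


def segment(customer: Customer) -> str:
    """Assign one segment using hypothetical age and location cut-offs."""
    if customer.area == "downtown" and 22 <= customer.age <= 40:
        return "young_professionals"
    if customer.area == "residential" and customer.age >= 65:
        return "retirees"
    return "unassigned"


def segment_sizes(customers: list[Customer]) -> Counter:
    """Count customers per segment to size each local media plan."""
    return Counter(segment(c) for c in customers)


if __name__ == "__main__":
    sample = [Customer(29, "downtown"), Customer(71, "residential"),
              Customer(35, "downtown"), Customer(50, "suburb")]
    for name, count in segment_sizes(sample).items():
        print(name, count, CHANNELS[name])
```

Hard-coded rules keep the logic transparent for a two-segment plan; with more segments or richer customer data, a clustering approach would be the usual next step.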

Dig deeper: Niche advertising: 7 actionable tactics for targeted marketing

3. Adapt to local market dynamics

Various factors influence local market dynamics. Brands that navigate changes effectively maintain a strong audience connection and stay ahead in the market. Here’s how consumer sentiment and behavior may evolve within a local market and the corresponding adjustments brands can make.

- Cultural shifts, such as changes in demographics or societal norms, can alter consumer preferences within a local community. For example, a neighborhood experiencing gentrification may see demand rise for specific products or services.
  - Respond by updating your messaging to reflect the evolving cultural landscape, ensuring it resonates with the new demographic profile.

- Economic conditions are crucial. For example, during downturns, consumers often prioritize value and practicality.
  - Highlight affordable options or emphasize the practical benefits of your offerings to ensure messaging aligns with consumers’ financial priorities. The impact is unique to each market, so the marketing message must also be dynamic.

- Seasonal trends impact consumer behavior.
  - Align your promotions and creative content with changing seasons or local events to make your offerings timely and relevant.

- New competitors: The competitive landscape demands vigilance because new entrants or innovative competitor campaigns can shift consumer preferences.
  - Differentiate by focusing on your unique selling propositions, such as quality, customer service or community involvement, to retain consumer interest and loyalty.

4. Apply data and predictive analytics

Data and predictive analytics are indispensable tools for successfully reaching local target markets. These technologies provide consumer behavior insights, enabling you to anticipate market trends and adjust strategies proactively.

- Price optimization: By analyzing consumer demand, competitor pricing and market conditions, data analytics enables you to set prices that attract customers while ensuring profitability.

- Competitor analysis: Through analysis, brands can understand their positioning within the local market landscape and identify opportunities and threats. Predictive analytics offer foresight into competitors’ potential moves, allowing you to strategize effectively to maintain a competitive edge.

- Consumer behavior: Forecasting consumer behavior allows your brand to tailor offerings and marketing messages to meet evolving consumer needs and enhance engagement.

- Marketing effectiveness: Analytics track the success of advertising campaigns, providing insights into which strategies drive conversions and sales. This feedback loop enables continuous optimization of marketing efforts for maximum impact.

- Inventory management: In supply chain management, data analytics predict demand fluctuations, ensuring inventory levels align with market needs. This efficiency prevents stockouts or excess inventory, optimizing operational costs and meeting consumer expectations.

Dig deeper: Why you should add predictive modeling to your marketing mix

5. Counter external market influences

Consider a clothing retailer preparing for a spring collection launch. By analyzing historical weather data and using predictive analytics, the brand forecasts an unseasonably cool start to spring. Anticipating this, the retailer adjusts its campaign to highlight transitional pieces suitable for cooler weather, ensuring relevance despite an unexpected chill.

Simultaneously, predictive models signal an upcoming spike in local media advertising rates due to increased market demand. The retailer responds by reallocating a portion of its advertising budget to digital channels, which offer more flexibility and lower costs than traditional media. This shift lets the brand maintain visibility and engagement without exceeding its budget, mitigating the impact of external forces on its advertising.

6. Build consumer confidence with messaging

Localized messaging and tailored customer service enhance consumer confidence by demonstrating your brand’s understanding of the community. For instance, a grocery store that curates cooking classes featuring local cuisine or sponsors community events shows commitment to local culture and consumer interests.

Similarly, a bookstore highlighting local authors or topics relevant to the community resonates with local customers. Additionally, providing service that addresses local needs, such as bilingual service and local event support, reinforces the brand's values and its responsiveness to the community.

Through these localized approaches, brands can build trust and loyalty, bridging the gap between corporate presence and local relevance.

7. Dominate with local advertising

To dominate local markets, brands must:

- Harness hyper-targeted segmentation and geo-targeted advertising to reach and engage precise audiences.

- Create localized content that reflects community values, engage in community events, optimize campaigns for mobile and track results.

- Fine-tune strategies, outperform competitors and foster lasting relationships with customers.

These strategies will help your message resonate with local consumers, differentiate you in competitive markets and establish you as a major player in your specific area.

Dig deeper: The 5 critical elements for local marketing success

Opinions expressed in this article are those of the guest author and not necessarily those of MarTech.

MARKETING

Battling for Attention in the 2024 Election Year Media Frenzy

As we march closer to the 2024 U.S. presidential election, CMOs and marketing leaders need to prepare for a significant shift in the digital advertising landscape. Election years have always posed unique challenges for advertisers, but the growing dominance of digital media has made the impact more profound than ever before.

In this article, we’ll explore the key factors that will shape the advertising environment in the coming months and provide actionable insights to help you navigate these turbulent waters.

The Digital Battleground

The rise of cord-cutting and the shift towards digital media consumption have fundamentally altered the advertising landscape in recent years. As traditional TV viewership declines, political campaigns have had to adapt their strategies to reach voters where they are spending their time: on digital platforms.

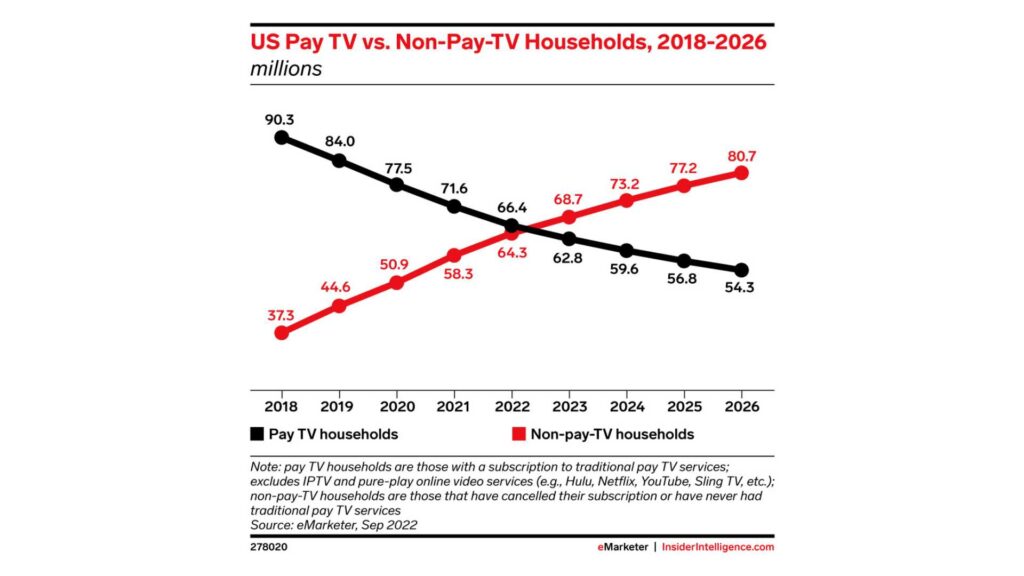

According to a recent report by eMarketer, the number of cord-cutters in the U.S. is expected to reach 65.1 million by the end of 2023, representing a 6.9% increase from 2022. This trend is projected to continue, with the number of cord-cutters reaching 72.2 million by 2025.

Moreover, a survey conducted by Pew Research Center in 2023 found that 62% of U.S. adults do not have a cable or satellite TV subscription, up from 61% in 2022 and 50% in 2019. This data further underscores the accelerating shift away from traditional TV and towards streaming and digital media platforms.

As these trends continue, political advertisers will have no choice but to follow their audiences to digital channels. In the 2022 midterm elections, digital ad spending by political campaigns reached $1.2 billion, a 50% increase from the 2018 midterms. With the 2024 presidential election on the horizon, this figure is expected to grow significantly as campaigns compete for the attention of an increasingly digital-first electorate.

For brands and advertisers, this means that the competition for digital ad space will be fiercer than ever before. As political ad spending continues to migrate to platforms like Meta, YouTube, and connected TV, the cost of advertising will likely surge, making it more challenging for non-political advertisers to reach their target audiences.

To navigate this complex and constantly evolving landscape, CMOs and their teams will need to be proactive, data-driven, and willing to experiment with new strategies and channels. By staying ahead of the curve and adapting to the changing media consumption habits of their audiences, brands can position themselves for success in the face of the electoral advertising onslaught.

Rising Costs and Limited Inventory

As political advertisers flood the digital market, the cost of advertising is expected to skyrocket. CPMs (cost per thousand impressions) will likely experience a steady climb throughout the year, with significant spikes anticipated in May, as college students come home from school and become more engaged in political conversations, and around major campaign events like presidential debates.
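To make the CPM point concrete, the sketch below applies the standard definition (total cost divided by impressions, times 1,000) and shows how many impressions a fixed budget buys as CPM rises. The budget and CPM values are hypothetical placeholders for illustration, not figures from this article or from any platform.

```python
def cpm(cost: float, impressions: int) -> float:
    """Cost per thousand impressions: total cost * 1,000 / impressions."""
    return cost * 1000 / impressions


def impressions_for_budget(budget: float, cpm_rate: float) -> int:
    """Impressions a fixed budget buys at a given CPM, rounded down."""
    return int(budget * 1000 / cpm_rate)


# Hypothetical figures for illustration only; not data from the article.
budget = 10_000.0     # monthly ad budget in dollars (assumed)
baseline_cpm = 8.0    # assumed CPM in a quieter month
spike_cpm = 12.0      # assumed CPM during a debate week

print(cpm(5_000.0, 625_000))                         # 8.0
print(impressions_for_budget(budget, baseline_cpm))  # 1250000
print(impressions_for_budget(budget, spike_cpm))     # 833333
```

At a fixed budget, a 50% CPM increase (from $8 to $12 in this example) cuts reach by about a third, which is why cost spikes around campaign events matter for non-political advertisers.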

For media buyers and their teams, this means that the tried-and-true strategies of years past may no longer be sufficient. Brands will need to be nimble, adaptable, and willing to explore new tactics to stay ahead of the game.

Black Friday and Cyber Monday: A Perfect Storm

The challenges of election year advertising will be particularly acute during the critical holiday shopping season. Black Friday and Cyber Monday, which have historically been goldmines for advertisers, will be more expensive and competitive than ever in 2024, because the lead-up to the holiday shopping season overlaps with the final weeks of the presidential campaign.

To avoid being drowned out by the political noise, brands will need to start planning their holiday campaigns earlier than usual. Building up audiences and crafting compelling creative assets well in advance will be essential to success, as will a willingness to explore alternative channels and tactics. Relying on cold audiences come Q4 will lead to exceptionally high costs that may be detrimental to many businesses.

Navigating the Chaos

While the challenges of election year advertising can seem daunting, there are steps that media buyers and their teams can take to mitigate the impact and even thrive in this environment. Here are a few key strategies to keep in mind:

Start early and plan for contingencies: Begin planning your Q3 and Q4 campaigns as early as possible, with a focus on building up your target audiences and developing a robust library of creative assets.

Be sure to build in contingency budgets to account for potential cost increases, and be prepared to pivot your strategy as the landscape evolves.

Embrace alternative channels: Consider diversifying your media mix to include channels that may be less impacted by political ad spending, such as influencer marketing, podcast advertising, or sponsored content. Investing in owned media channels, like email marketing and mobile apps, can also provide a direct line to your customers without the need to compete for ad space.

Owned channels will be more important than ever. Use the cheaper months leading up to the election to build your email lists and existing customer base so that your Black Friday and Cyber Monday campaigns can leverage your owned channels and warm audiences.

Craft compelling, shareable content: In a crowded and noisy advertising environment, creating content that resonates with your target audience will be more important than ever. Focus on developing authentic, engaging content that aligns with your brand values and speaks directly to your customers’ needs and desires.

By tapping into the power of emotional triggers and social proof, you can create content that not only cuts through the clutter but also inspires organic sharing and amplification.

Reflections

The 2024 election year will undoubtedly bring new challenges and complexities to the world of digital advertising. But by staying informed, adaptable, and strategic in your approach, you can navigate this landscape successfully and even find new opportunities for growth and engagement.

As a media buyer or agency, your role in steering your brand through these uncharted waters will be critical. By starting your planning early, embracing alternative channels and tactics, and focusing on creating authentic, resonant content, you can not only survive but thrive in the face of election year disruptions.

So while the road ahead may be uncertain, one thing is clear: the brands that approach this challenge with creativity, agility, and a steadfast commitment to their customers will be the ones that emerge stronger on the other side.
