Reducing the spread of misinformation on social media: What would a do-over look like?

The news is awash with stories of platforms clamping down on misinformation and the angst involved in banning prominent members. But these are Band-Aids over a deeper issue — namely, that the problem of misinformation is one of our own design. Some of the core elements of how we’ve built social media platforms may inadvertently increase polarization and spread misinformation.

If we could teleport back in time to relaunch social media platforms like Facebook, Twitter and TikTok with the goal of minimizing the spread of misinformation and conspiracy theories from the outset … what would they look like?

This is not an academic exercise. Understanding these root causes can help us develop better prevention measures for current and future platforms.

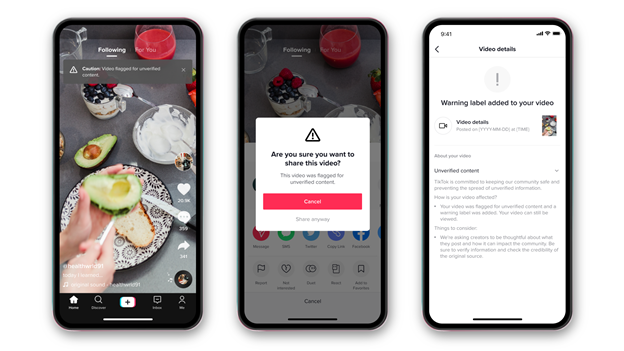

As one of the Valley’s leading behavioral science firms, we’ve helped brands like Google, Lyft and others understand human decision-making as it relates to product design. We recently collaborated with TikTok to design a new series of prompts (launched this week) to help stop the spread of potential misinformation on its platform.

Image Credits: Irrational Labs

The intervention successfully reduced shares of flagged content by 24%. While TikTok is unique amongst platforms, the lessons we learned there have helped shape ideas on what a social media redux could look like.

Create opt-outs

We can take much bigger swings at reducing the views of unsubstantiated content than labels or prompts.

In the experiment we launched together with TikTok, people saw an average of 1.5 flagged videos over a two-week period. Yet in our qualitative research, many users said they were on TikTok for fun; they didn’t want to see any flagged videos whatsoever. In a recent earnings call, Mark Zuckerberg also spoke of Facebook users’ tiring of hyperpartisan content.

We suggest giving people an “opt-out of flagged content” option — remove this content from their feeds entirely. To make this a true choice, this opt-out needs to be prominent, not buried somewhere users must seek it out. We suggest putting it directly in the sign-up flow for new users and adding an in-app prompt for existing users.

Shift the business model

There’s a reason false news spreads six times faster on social media than real news: Information that’s controversial, dramatic or polarizing is far more likely to grab our attention. And when algorithms are designed to maximize engagement and time spent on an app, this kind of content is heavily favored over more thoughtful, deliberative content.

The ad-based business model is at the core of the problem; it’s why making progress on misinformation and polarization is so hard. One internal Facebook team tasked with looking into the issue found that “our algorithms exploit the human brain’s attraction to divisiveness.” But the project and proposed work to address the issues were nixed by senior executives.

Essentially, this is a classic incentives problem. If business metrics that define “success” are no longer dependent on maximizing engagement/time on site, everything will change. Polarizing content will no longer need to be favored and more thoughtful discourse will be able to rise to the surface.

Design for connection

A primary driver of the spread of misinformation is the feeling of being marginalized and alone. Humans are fundamentally social creatures who look to be part of an in-group, and partisan groups frequently provide that sense of acceptance and validation.

We must therefore make it easier for people to find their authentic tribes and communities in other ways (versus those that bond over conspiracy theories).

Mark Zuckerberg says his ultimate goal with Facebook was to connect people. To be fair, in many ways Facebook has done that, at least on a surface level. But we should go deeper. Here are some ways:

We can design for more active one-on-one communication, which has been shown to increase well-being. We can also nudge offline connection. Imagine two friends are chatting on Facebook Messenger or via comments on a post. How about a prompt to meet in person when they live in the same city (post-COVID, of course)? Or if they’re not in the same city, a nudge to hop on a call or video.

In the scenario where they’re not friends and the interaction is more contentious, platforms can play a role in highlighting not only the humanity of the other person, but things one shares in common with the other. Imagine a prompt that showed, as you’re “shouting” online with someone, everything you have in common with that person.

Platforms should also disallow anonymous accounts, or at minimum encourage the use of real names. Clubhouse has good norm-setting on this: In the onboarding flow they say, “We use real names here.” Connection is based on the idea that we’re interacting with a real human. Anonymity obfuscates that.

Finally, help people reset

We should make it easy for people to get out of an algorithmic rabbit hole. YouTube has been under fire for its rabbit holes, but all social media platforms have this challenge. Once you click a video, you’re shown videos like it. This may help sometimes (getting to that perfect “how to” video sometimes requires a search), but for misinformation, this is a death march. One video on flat earth leads to another, as well as other conspiracy theories. We need to help people eject from their algorithmic destiny.

With great power comes great responsibility

More and more people now get their news from social media, and those who do are less likely to be correctly informed about important issues. It’s likely that this trend of relying on social media as an information source will continue.

Social media companies are thus in a unique position of power and have a responsibility to think deeply about the role they play in reducing the spread of misinformation. They should absolutely continue to experiment and run tests with research-informed solutions, as we did together with the TikTok team.

This work isn’t easy. We knew that going in, but we have an even deeper appreciation for this fact after working with the TikTok team. There are many smart, well-intentioned people who want to solve for the greater good. We’re deeply hopeful about our collective opportunity here to think bigger and more creatively about how to reduce misinformation, inspire connection and strengthen our collective humanity all at the same time.

Facebook Faces Yet Another Outage: Platform Encounters Technical Issues Again

Updated: It seems that today’s issues with Facebook haven’t affected as many users as the last time. A smaller group of people appears to be impacted this time around, which is a relief compared to the larger incident before. Nevertheless, it’s still frustrating for those affected, and hopefully the issues will be resolved soon by the Facebook team.

Facebook had another problem today (March 20, 2024). According to Downdetector, a website that shows when other websites are not working, many people had trouble using Facebook.

This isn’t the first time Facebook has had issues. Just a little while ago, there was another problem that stopped people from using the site. Today, when people tried to use Facebook, it didn’t work like it should. People couldn’t see their friends’ posts, and sometimes the website wouldn’t even load.

Downdetector, which watches out for problems on websites, showed that lots of people were having trouble with Facebook. People from all over the world said they couldn’t use the site, and they were not happy about it.

When websites like Facebook have problems, it affects a lot of people. It’s not just about not being able to see posts or chat with friends. It can also impact businesses that use Facebook to reach customers.

Since Facebook owns Messenger and Instagram, the problems with Facebook also meant that people had trouble using these apps. It made the situation even more frustrating for many users, who rely on these apps to stay connected with others.

During this recent problem, one thing is obvious: the internet is always changing, and even big websites like Facebook can have problems. While people wait for Facebook to fix the issue, it shows us how easily things online can go wrong. It’s a good reminder that we should have backup plans for staying connected online, just in case something like this happens again.

Christian family goes in hiding after being cleared of blasphemy

LAHORE, Pakistan — A court in Pakistan granted bail to a Christian falsely charged with blasphemy, but he and his family have separated and gone into hiding amid threats to their lives, sources said.

Haroon Shahzad, 45, was released from Sargodha District Jail on Nov. 15, said his attorney, Aneeqa Maria. Shahzad was charged with blasphemy on June 30 after posting Bible verses on Facebook that infuriated Muslims, causing dozens of Christian families in Chak 49 Shumaali, near Sargodha in Punjab Province, to flee their homes.

Lahore High Court Judge Ali Baqir Najfi granted bail on Nov. 6, but the decision and his release on Nov. 15 were not made public until now due to security fears for his life, Maria said.

Shahzad told Morning Star News by telephone from an undisclosed location that the false accusation has changed his family’s lives forever.

“My family has been on the run from the time I was implicated in this false charge and arrested by the police under mob pressure,” Shahzad told Morning Star News. “My eldest daughter had just started her second year in college, but it’s been more than four months now that she hasn’t been able to return to her institution. My other children are also unable to resume their education as my family is compelled to change their location after 15-20 days as a security precaution.”

Though he was not tortured during incarceration, he said, the pain of being away from his family and thinking about their well-being and safety gave him countless sleepless nights.

“All of this is due to the fact that the complainant, Imran Ladhar, has widely shared my photo on social media and declared me liable for death for alleged blasphemy,” he said in a choked voice. “As soon as Ladhar heard about my bail, he and his accomplices started gathering people in the village and incited them against me and my family. He’s trying his best to ensure that we are never able to go back to the village.”

Shahzad has met with his family only once since his release on bail, and they are unable to return to their village in the foreseeable future, he said.

“We are not together,” he told Morning Star News. “They are living at a relative’s house while I’m taking refuge elsewhere. I don’t know when this agonizing situation will come to an end.”

The Christian said the complainant, said to be a member of Islamist extremist party Tehreek-e-Labbaik Pakistan and also allegedly connected with banned terrorist group Lashkar-e-Jhangvi, filed the charge because of a grudge. Shahzad said he and his family had obtained valuable government land and allotted it for construction of a church building, and Ladhar and others had filed multiple cases against the allotment and lost all of them after a four-year legal battle.

“Another probable reason for Ladhar’s jealousy could be that we were financially better off than most Christian families of the village,” he said. “I was running a successful paint business in Sargodha city, but that too has shut down due to this case.”

Regarding the social media post, Shahzad said he had no intention of hurting Muslim sentiments by sharing the biblical verse on his Facebook page.

“I posted the verse a week before Eid Al Adha [Feast of the Sacrifice] but I had no idea that it would be used to target me and my family,” he said. “In fact, when I came to know that Ladhar was provoking the villagers against me, I deleted the post and decided to meet the village elders to explain my position.”

The village elders were already influenced by Ladhar and refused to listen to him, Shahzad said.

“I was left with no option but to flee the village when I heard that Ladhar was amassing a mob to attack me,” he said.

Shahzad pleaded with government authorities for justice, saying he should not be punished for sharing a verse from the Bible that in no way constituted blasphemy.

Similar to other cases

Shahzad’s attorney, Maria, told Morning Star News that events in Shahzad’s case were similar to other blasphemy cases filed against Christians.

“Defective investigation, mala fide on the part of the police and complainant, violent protests against the accused persons and threats to them and their families, forcing their displacement from their ancestral areas, have become hallmarks of all blasphemy allegations in Pakistan,” said Maria, head of The Voice Society, a Christian paralegal organization.

She said that the case filed against Shahzad was a gross violation of Section 196 of the Criminal Procedure Code (CrPC), which states that police cannot register a case under the Section 295-A blasphemy statute against a private citizen without the approval of the provincial government or federal agencies.

Maria added that Shahzad and his family have continued to suffer even though there was no evidence of blasphemy.

“The social stigma attached with a blasphemy accusation will likely have a long-lasting impact on their lives, whereas his accuser, Imran Ladhar, would not have to face any consequence of his false accusation,” she said.

The judge who granted bail noted that Shahzad was charged with blasphemy under Section 295-A, which is a non-cognizable offense, and Section 298, which is bailable. The judge also noted that police had not submitted the forensic report of Shahzad’s cell phone and said evidence was required to prove that the social media post was blasphemous, according to Maria.

Bail was set at 100,000 Pakistani rupees (US $350) and two personal sureties, and the judge ordered police to further investigate, she said.

Shahzad, a paint contractor, on June 29 posted on his Facebook page 1 Cor. 10:18-21 regarding food sacrificed to idols, as Muslims were beginning the four-day festival of Eid al-Adha, which involves slaughtering an animal and sharing the meat.

A Muslim villager took a screenshot of the post, sent it to local social media groups and accused Shahzad of likening Muslims to pagans and disrespecting the Abrahamic tradition of animal sacrifice.

Though Shahzad made no comment in the post, inflammatory or otherwise, the situation became tense after Friday prayers when announcements were made from mosque loudspeakers telling people to gather for a protest, family sources previously told Morning Star News.

Fearing violence as mobs grew in the village, most Christian families fled their homes, leaving everything behind.

In a bid to restore order, the police registered a case against Shahzad under Sections 295-A and 298. Section 295-A relates to “deliberate and malicious acts intended to outrage religious feelings of any class by insulting its religion or religious beliefs” and is punishable with imprisonment of up to 10 years and fine, or both. Section 298 prescribes up to one year in prison and a fine, or both, for hurting religious sentiments.

Pakistan ranked seventh on Open Doors’ 2023 World Watch List of the most difficult places to be a Christian, up from eighth the previous year.

Morning Star News is the only independent news service focusing exclusively on the persecution of Christians. The nonprofit’s mission is to provide complete, reliable, even-handed news in order to empower those in the free world to help persecuted Christians, and to encourage persecuted Christians by informing them that they are not alone in their suffering.
