
Twitter tests more attention-grabbing misinformation labels

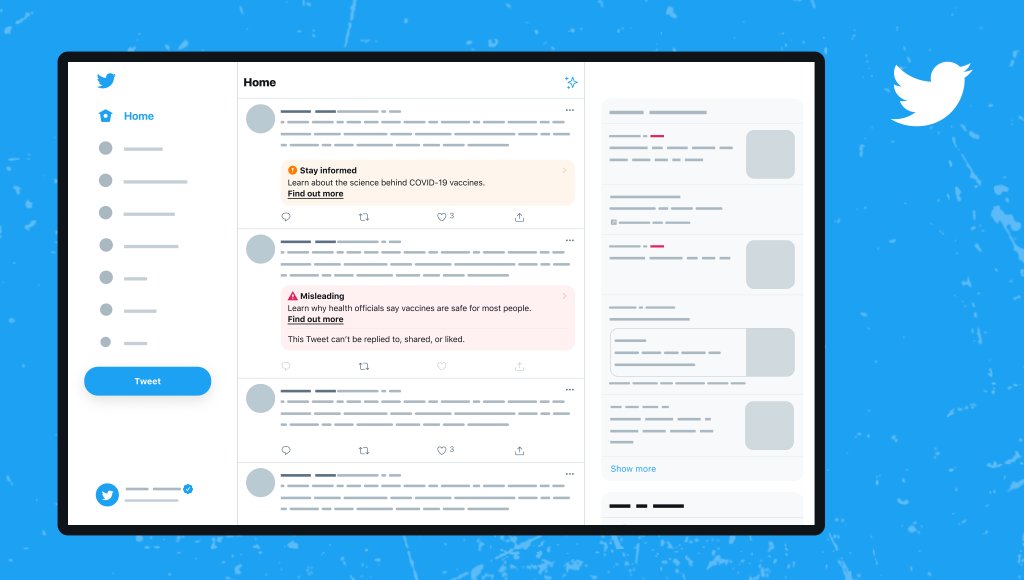

Twitter is considering changes to the way it contextualizes misleading tweets that the company doesn’t believe are dangerous enough to be removed from the platform outright.

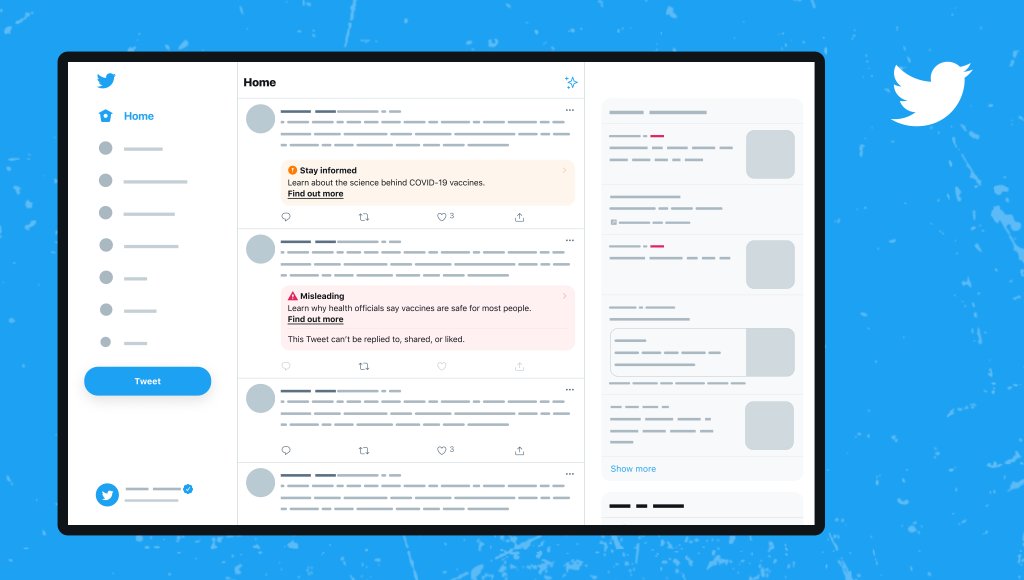

The company announced the test in a tweet Thursday with an image of the new misinformation labels. Within the limited test, those labels will appear with color-coded backgrounds now, making them much more visible in the feed while also giving users a way to quickly parse the information from visual cues. Some users will begin to see the change this week.

Tweets that Twitter deems “misleading” will get a red background with a short explanation and a notice that users can’t reply to, like or share the content. Yellow labels will appear on content that isn’t as actively misleading. In both cases, Twitter has made it clearer that you can click the labels to find verified information about the topic at hand (in this case, the pandemic).

“People who come across the new labels as a part of this limited test should expect a more communicative impact from the labels themselves both through copy, symbols and colors used to distill clear context about not only the label, but the information or content they are engaging with,” a Twitter spokesperson told TechCrunch.

Image Credits: Twitter

Twitter found that even tiny shifts in design could affect how people interacted with labeled tweets. In a test the company ran with a pink variation of the label, users clicked through to the authoritative information that Twitter provided more often, but they also quote-tweeted the content itself more, furthering its spread. Twitter says that it tested many variations on the written copy, colors and symbols that made their way into the new misinformation labels.

The changes come after a long public feedback period that convinced the company that misinformation labels needed to stand out better in a sea of tweets. Facebook’s own misinformation labels have also faced criticism for blending in too easily and failing to create much friction for potentially dangerous information on the platform.

Twitter first created content labels as a way to flag “manipulated media”: photos and videos altered to deliberately mislead people, like the doctored video of Nancy Pelosi that went viral back in 2019. Last May, Twitter expanded its use of labels to address the wave of COVID-19 misinformation that swept over social media early in the pandemic.

A month ago, the company rolled out new labels specific to vaccine misinformation and introduced a strike-based system into its rules. The idea is for Twitter to build a toolkit it can use to respond in a proportional way to misinformation depending on the potential for real-world harm.

“… We know that even within the space of our policies, not all misleading claims are equally harmful,” a Twitter spokesperson said. “For example, telling someone to drink bleach in order to cure COVID is a more immediate and severe harm than sharing a viral image of a shark swimming on a flooded highway and claiming that’s footage from a hurricane. (That’s a real thing that happens every hurricane season.)”

Labels are just one of the content moderation options that Twitter developed over the course of the last couple of years, along with warnings that require a click-through and pop-up messages designed to subtly steer people away from impulsively sharing inflammatory tweets.

When Twitter decides not to remove content outright, it turns to an a la carte menu of potential content enforcement options:

- Apply a label and/or warning message to the Tweet;

- Show a warning to people before they share or like the Tweet;

- Reduce the visibility of the Tweet on Twitter and/or prevent it from being recommended;

- Turn off likes, replies, and Retweets; and/or

- Provide a link to additional explanations or clarifications, such as in a curated landing page or relevant Twitter policies.

In most scenarios, the company will opt for all of the above.

“While there is no single answer to addressing the unique challenges presented by the range of types of misinformation, we believe investing in a multi-prong approach will allow us to be nimble and shift with the constantly changing dynamic of the public conversation,” the spokesperson said.