SEO

Is SEO Dead? A Fresh Look At The Age-Old Search Industry Question

The march of time is inevitable. And every year, some new technology pounds the final nail into the coffin of something older.

Whether the horse and buggy are replaced by the automobile or the slide rule is replaced by the calculator, everything eventually becomes obsolete.

And if you listen to the rumors, this time around, it’s search engine optimization. Rest in peace, SEO: 1997-2022.

There’s just one tiny little problem.

SEO is still alive and kicking. It’s just as relevant today as it has ever been. If anything, it may even be more important.

Today, 53% of all website traffic comes from organic search.

In fact, the first search result in Google averages 26.9% of click-throughs on mobile devices and 32% on desktops.

And what’s helping Google determine which results belong at the top of search engine results pages (SERPs)? SEO, of course.

Need more proof? We have more statistics to back it up.

Thanks to consistent updates to the Google search algorithm, the entire SEO field is undergoing rapid evolution.

Completely ignoring the many small changes the search engine’s algorithm has undergone, we’ve seen several major updates in the last decade. Some of the more important ones are:

- Panda – First put into place in February 2011, Panda was focused on quality and user experience. It was designed to eliminate black hat SEO tactics and web spam.

- Hummingbird – Unveiled in August 2013, Hummingbird made the search engine’s core algorithm faster and more precise in anticipation of the growth of mobile search.

- RankBrain – Rolled out in spring 2015, this update was announced in October of that year. Integrating artificial intelligence (AI) into all queries, RankBrain uses machine learning to provide better answers to ambiguous queries.

- BERT – Initially released in November 2018 and updated in December 2019, this update helps Google understand natural language better.

- Vicinity – Put into place in December 2021, Vicinity was Google’s biggest local search update in five years. It uses proximity as a ranking factor, weighting local businesses more heavily in query results.

Each of these updates changed the way Google works, so each required SEO professionals to rethink their approach and tweak their strategy to ensure they get the results needed. But the need for their services remained.

Now that it’s been established that SEO is not dead, it raises the question: Where did all this death talk come from in the first place?

Most of it is based on unfounded conjecture and wild speculation. The truth is that SEO is in a state of transition, which can be scary.

And that transition is driven by three things:

- Artificial intelligence and machine learning, particularly Google RankBrain.

- Shrinking organic space on SERPs.

- Digital personal assistants and voice search.

The Rise Of Machine Learning

You’ve probably already recognized the impact AI has had on the world.

This exciting new technology has started to appear everywhere, from voice assistants to predictive healthcare to self-driving automobiles.

And it has been a trending topic in SEO for quite a while.

Unfortunately, most of what’s out there is incomplete information gathered from reading patents, analyzing search engine behavior, and flat-out guessing.

And part of the reason it’s so difficult to get a handle on what’s happening in AI concerning search engines is its constant evolution.

However, we will examine two identifiable trends: machine learning and natural language.

Machine learning is just what it sounds like: machines that are learning.

For a more sophisticated definition, it can be described as “a method of data analysis that automates analytical model building… a branch of artificial intelligence based on the idea that systems can learn from data, identify patterns and make decisions with minimal human intervention.”

For SEO purposes, this means gathering and analyzing information on content, user behaviors, citations, and patterns, and then using that information to create new rankings factors that are more likely to answer user queries accurately.

You will want to read this article for a more in-depth explanation of how that will work.

One of the most important factors machine learning uses when determining how to rank websites is our other trend – natural language.

From their earliest days, computers have used unique languages. And because these were very unlike the languages humans use, there was always a disconnect between user intent and what search engines delivered.

However, as technology has grown increasingly more advanced, Google has made great strides in this field.

The most important one for SEO professionals is RankBrain, Google’s machine learning system built upon the rewrite of Google’s core algorithm that we mentioned earlier, Hummingbird.

Nearly a decade ago, Google had the foresight to recognize that mobile devices were the future wave. Anticipating what this would mean for search, Hummingbird focused on understanding conversational speech.

RankBrain builds upon this, moving Google from a search engine that follows the links between concepts to one that sees the concepts they represent.

It moved the search engine away from matching keywords in a query to more precisely identifying user intent and delivering results that more accurately matched the search.

This meant identifying which words were important to the search and disregarding those that were not.

It also developed an understanding of synonyms, so if a webpage matches a query, it may appear in the results, even if it doesn’t include the searched-for keyword.

The biggest impact of RankBrain and machine learning has been on long-tail keywords.

In the past, websites would often jam specific but rarely searched-for keywords into their content. This allowed them to show up in queries for those topics.

RankBrain changed how Google handled these, which meant primarily focusing on long-tail keywords was no longer a good strategy. It also helped eliminate content from spammers who sought rankings for these terms.

Honey, I Shrunk The Organic Search Space

Search engines are big business. No one can deny that.

And since 2016, Google has slowly encroached on organic search results in favor of paid advertising. That was when sponsored ads were removed from the sidebar and put at the top of SERPs.

As a result, organic results were pushed further down the page, or “below the fold,” to borrow an anachronistic idiom.

From Google’s business perspective, this makes sense. The internet has become a huge part of the global economy, which means an ever-increasing number of companies are willing to pay for ad placement.

As a result of this seeming de-prioritization, organic SEO professionals are forced to develop innovative new strategies for not only showing up on the first page but also competing with paid ads.

Changes to local search have also affected SERPs. In its never-ending quest to provide more relevant results to users, Google added a local pack to search results. This is a group of three nearby businesses that appear to satisfy the query. They are listed at the top of the first page of results, along with a map showing their location.

This was good news for local businesses who compete with national brands. For SEO professionals, however, it threw a new wrinkle into their work.

In addition to creating competition for local search results, this also opened the door for, you guessed it, local paid search ads.

And these are not the only things pushing organic results down the page. Depending on the search, your link may also have to compete with:

- Shopping ads.

- Automated extensions.

- Featured snippets.

- Video or image carousels.

- News stories.

Additionally, Google has begun directly answering questions (and suggested related questions and answers). This has given birth to a phenomenon known as “zero-click searches,” which are searches that end on the SERP without a click-through to another site.

In 2020, nearly 65% of all searches received no clicks, which is troubling for anyone who makes their living by generating them.

With this in mind, and as organic results sink lower and lower, it’s easy to see why some SEO professionals are becoming frustrated. But savvy web marketers see these as more than challenges – they see them as opportunities.

For example, if you can’t get your link at the top of a SERP, you can use structured data markup to grab a featured snippet. While this isn’t technically an SEO tactic, it is a way to generate clicks and traffic, which is the ultimate goal.
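To make the structured data idea concrete, here is a minimal Python sketch that builds schema.org FAQPage markup as JSON-LD. The question and answer text are hypothetical, and the helper names are ours. Markup of this kind makes a page eligible for rich SERP features but does not guarantee any of them.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def as_script_tag(data):
    """Wrap the JSON-LD in the script tag that goes in the page's HTML."""
    body = json.dumps(data, indent=2, ensure_ascii=False)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical question and answer, used only for illustration.
example = faq_jsonld([
    ("Is SEO dead?", "No. SEO keeps changing as search engines update their algorithms."),
])
print(as_script_tag(example))
```

The printed script tag can be pasted into a page template. The visible page copy should contain the same question and answer as the markup.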

Use Your Voice

Not long ago, taking a note or making out your grocery list meant locating some paper and writing on it with a pen. Like a caveman.

Thankfully, those days are gone, or at least on their way out, having been replaced by technology.

Whether you’re using Siri to play your favorite song, asking Cortana how much the moon weighs, or having Alexa check the price of Apple stock, much of the internet is now available just by using your voice.

In 2020, 4.2 billion digital personal assistant devices were being used worldwide. And that’s a number expected to double by 2024 as more and more people adopt the Amazon Echo, Sonos One, Google Nest Hub, and the like.

And users don’t even have to own one of these smart speakers to use the power of voice search. 90% of iPhone owners use Siri, and 75% of smartphone owners use Google Assistant.

With the advent of these virtual helpers, we’ve seen a big increase in voice searching. Here are some interesting facts about voice search:

Isn’t technology grand?

It depends on who you are. If you work in SEO (and because you’re reading this, we’re going to assume you do), this creates some problems.

After all, how do you generate clicks to your website if no clicks are involved?

The answer is quite obvious: You need to optimize for voice search.

Voice-controlled devices don’t operate like a manual search, so your SEO content needs to consider this.

The best way to do this, again, is to improve the quality of your information. Your content needs to be the best answer to a person’s question, ensuring it ranks at the very top and gets the verbal click-throughs (is that a term?) you need.

And because people have figured out that more specific queries generate more specific responses, it’s important that your content fills that niche.

In general, specificity seems to be a growing trend in SEO, so it’s no longer enough to just have web copy that says, “t-shirts for sale.”

Instead, you need to drill down to exactly what your target is searching for, e.g., “medium Garfield t-shirts + yellow + long-sleeve.”

What Does All This Mean For SEO?

Now that we’ve looked at the major reasons why pessimists and cynics are falsely proclaiming the demise of SEO, let’s review what we’ve learned along the way:

- Google will never be satisfied with its algorithms. It will always feel there is room to grow and improve its ability to precisely answer a search query. And far from being the death knell for SEO, this ensures its importance moving forward.

- Machine learning, especially regarding natural language, allows Google to better understand the intent behind a search and as a result, present more relevant options. Your content should focus on answering queries instead of just including keywords.

- Long-tail keywords are important for answering specific questions, particularly in featured answer sections, but focusing solely on them is an outdated and ineffective strategy.

- Organic search results lose real estate to paid ads and other features. However, this presents opportunities for clever SEO professionals to shoot to the top via things like local search.

- Zero-click searches constitute nearly two-thirds of all searches, which hurts SEO numbers but allows you to claim a spot of prominence as a featured snippet using structured data.

- The use of voice search and personal digital assistants is on the rise. This calls for rethinking SEO strategies and optimizing content to be found and used by voice search.

Have you noticed a theme running through this entire piece? It’s evolution, survival of the fittest.

To ensure you don’t lose out on important web traffic, you must constantly monitor the SEO situation and adapt to changes, just like you always have.

Your strategy needs to become more sophisticated as new opportunities present themselves. It needs to be ready to pivot quickly.

And above all, you need to remember that your content is still the most important thing.

If you can best answer a query, your site will get the traffic you seek. If it can’t, you need to rework it until it does.

Just remember, like rock and roll, SEO will never die.


Featured Image: sitthiphong/Shutterstock


How To Write ChatGPT Prompts To Get The Best Results

ChatGPT is a game changer in the field of SEO. This powerful language model can generate human-like content, making it an invaluable tool for SEO professionals.

However, the prompts you provide largely determine the quality of the output.

To unlock the full potential of ChatGPT and create content that resonates with your audience and search engines, writing effective prompts is crucial.

In this comprehensive guide, we’ll explore the art of writing prompts for ChatGPT, covering everything from basic techniques to advanced strategies for layering prompts and generating high-quality, SEO-friendly content.

Writing Prompts For ChatGPT

What Is A ChatGPT Prompt?

A ChatGPT prompt is an instruction or discussion topic a user provides for the ChatGPT AI model to respond to.

The prompt can be a question, statement, or any other stimulus to spark creativity, reflection, or engagement.

Users can use the prompt to generate ideas, share their thoughts, or start a conversation.

ChatGPT prompts are designed to be open-ended and can be customized based on the user’s preferences and interests.

How To Write Prompts For ChatGPT

Start by giving ChatGPT a writing prompt, such as, “Write a short story about a person who discovers they have a superpower.”

ChatGPT will then generate a response based on your prompt. Depending on the prompt’s complexity and the level of detail you requested, the answer may be a few sentences or several paragraphs long.

Use the ChatGPT-generated response as a starting point for your writing. You can take the ideas and concepts presented in the answer and expand upon them, adding your own unique spin to the story.

If you want to generate additional ideas, try asking ChatGPT follow-up questions related to your original prompt.

For example, you could ask, “What challenges might the person face in exploring their newfound superpower?” Or, “How might the person’s relationships with others be affected by their superpower?”

Remember that ChatGPT’s answers are generated by artificial intelligence and may not always be perfect or exactly what you want.

However, they can still be a great source of inspiration and help you start writing.

Must-Have GPTs Assistant

I recommend installing the WebBrowser Assistant created by the OpenAI Team. This tool allows you to add relevant Bing results to your ChatGPT prompts.

This assistant adds the first web results to your ChatGPT prompts for more accurate and up-to-date conversations.

It is very easy to install in only two clicks. (Click on Start Chat.)

For example, if I ask, “Who is Vincent Terrasi?” ChatGPT has no answer.

With WebBrowser Assistant, the assistant creates a new prompt with the first Bing results, and now ChatGPT knows who Vincent Terrasi is.

Screenshot from ChatGPT, March 2023

You can test other GPT assistants available in the GPTs search engine if you want to use Google results.

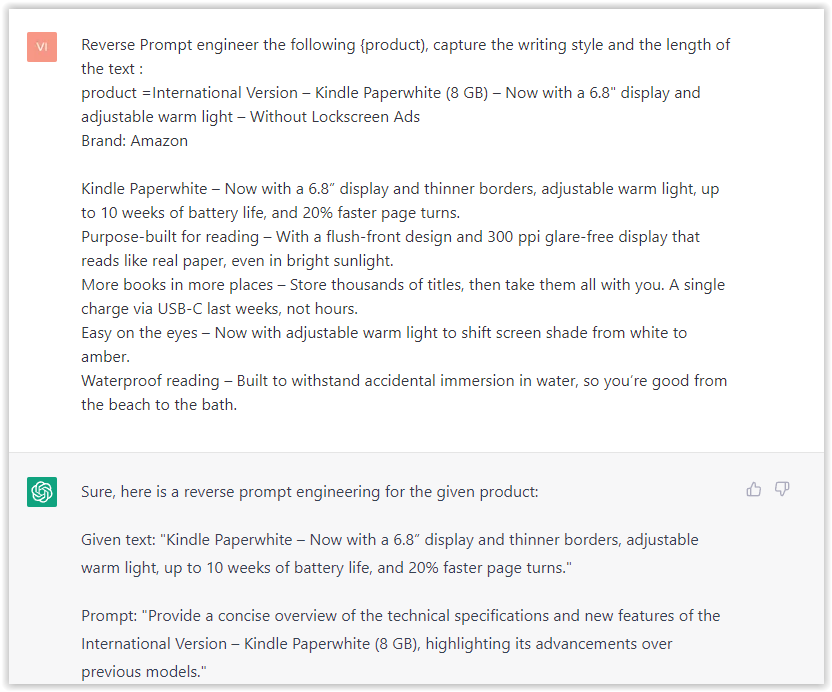

Master Reverse Prompt Engineering

ChatGPT can be an excellent tool for reverse engineering prompts because it generates natural and engaging responses to any given input.

By analyzing the prompts generated by ChatGPT, it is possible to gain insight into the model’s underlying thought processes and decision-making strategies.

One key benefit of using ChatGPT to reverse engineer prompts is that the model is highly transparent in its decision-making.

This means that the reasoning and logic behind each response can be traced, making it easier to understand how the model arrives at its conclusions.

Once you’ve done this a few times for different types of content, you’ll gain insight into crafting more effective prompts.

Prepare Your ChatGPT For Generating Prompts

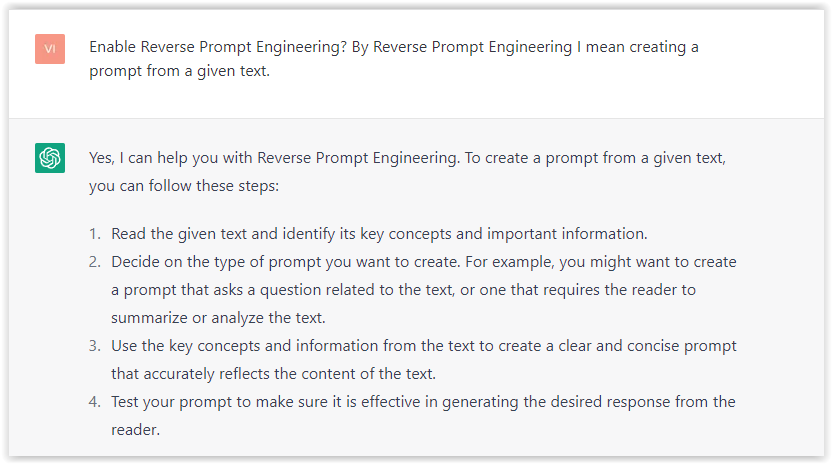

First, activate the reverse prompt engineering.

- Type the following prompt: “Enable Reverse Prompt Engineering? By Reverse Prompt Engineering I mean creating a prompt from a given text.”

Screenshot from ChatGPT, March 2023

ChatGPT is now ready to generate your prompt. You can test the product description in a new chatbot session and evaluate the generated prompt.

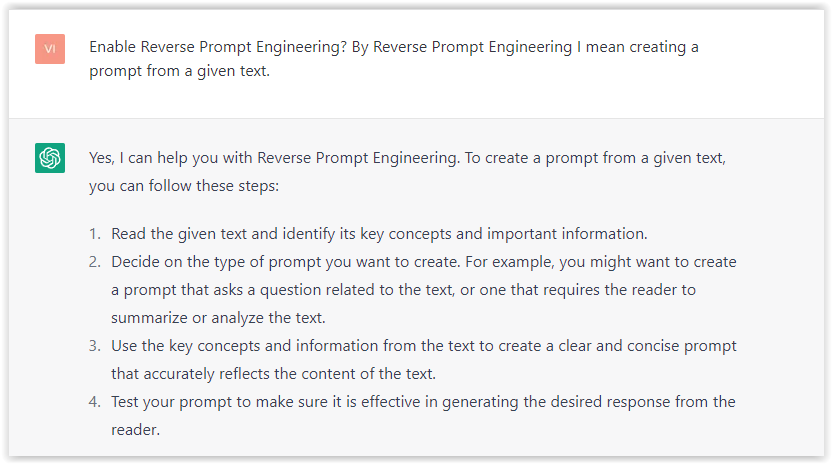

- Type: “Create a very technical reverse prompt engineering template for a product description about iPhone 11.”

Screenshot from ChatGPT, March 2023

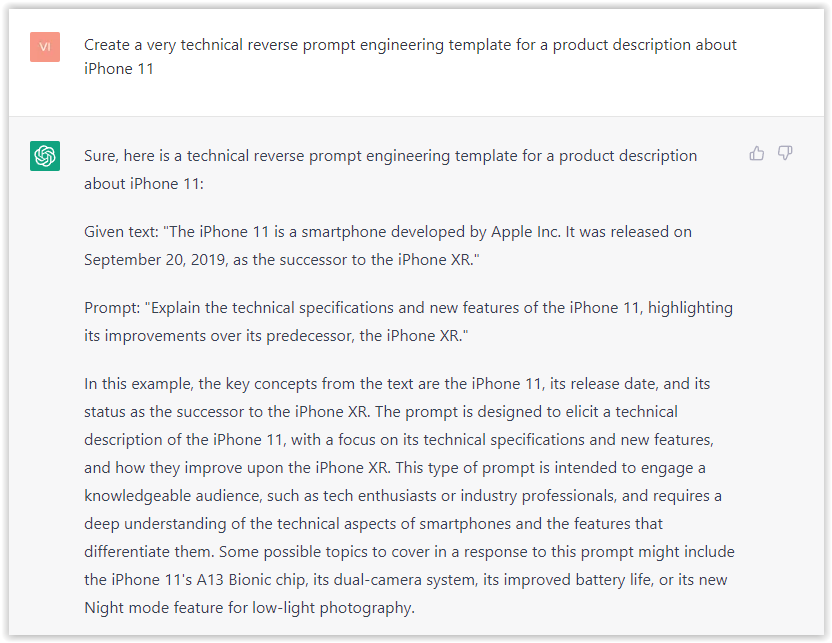

The result is amazing. You can test with a full text that you want to reproduce. Here is an example of a prompt for selling a Kindle on Amazon.

- Type: “Reverse Prompt engineer the following {product}, capture the writing style and the length of the text:

product =”

Screenshot from ChatGPT, March 2023

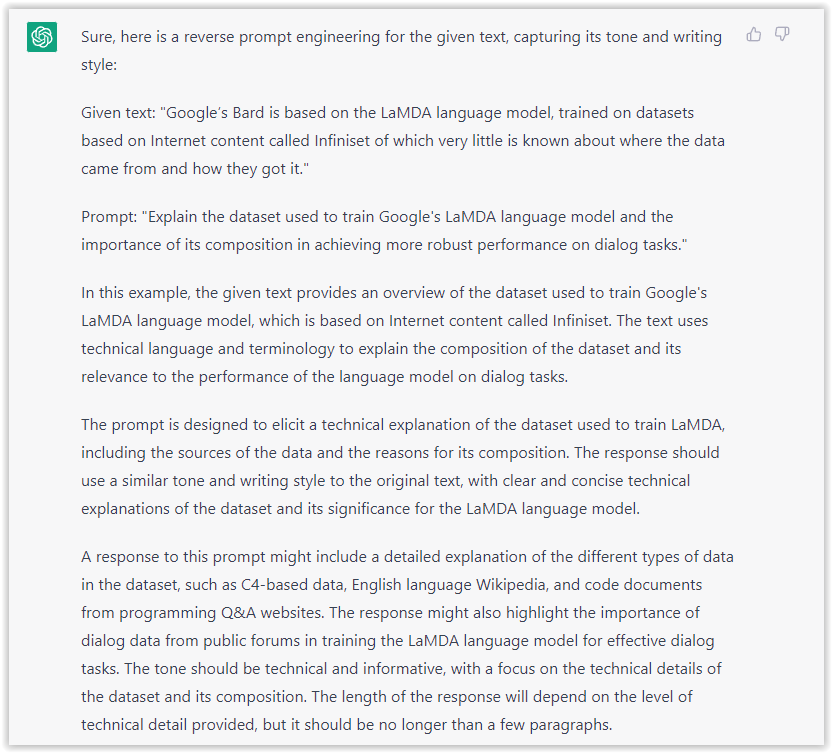

I tested it on an SEJ blog post. Enjoy the analysis – it is excellent.

- Type: “Reverse Prompt engineer the following {text}, capture the tone and writing style of the {text} to include in the prompt :

text = all text coming from https://www.searchenginejournal.com/google-bard-training-data/478941/”

Screenshot from ChatGPT, March 2023

But be careful not to use ChatGPT to generate your texts. It is just a personal assistant.

Go Deeper

Prompts and examples for SEO:

- Keyword research and content ideas prompt: “Provide a list of 20 long-tail keyword ideas related to ‘local SEO strategies’ along with brief content topic descriptions for each keyword.”

- Optimizing content for featured snippets prompt: “Write a 40-50 word paragraph optimized for the query ‘what is the featured snippet in Google search’ that could potentially earn the featured snippet.”

- Creating meta descriptions prompt: “Draft a compelling meta description for the following blog post title: ‘10 Technical SEO Factors You Can’t Ignore in 2024’.”
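If you reuse prompts like these often, it can help to keep them as templates and fill in the variable parts. The following is a small sketch in Python. The template names and placeholders are our own, and it only builds the prompt text. It does not call ChatGPT.

```python
# Prompt templates adapted from the list above; {placeholders} are filled per use.
PROMPT_TEMPLATES = {
    "keyword_ideas": (
        "Provide a list of {count} long-tail keyword ideas related to "
        "'{topic}' along with brief content topic descriptions for each keyword."
    ),
    "featured_snippet": (
        "Write a 40-50 word paragraph optimized for the query '{query}' "
        "that could potentially earn the featured snippet."
    ),
    "meta_description": (
        "Draft a compelling meta description for the following blog post "
        "title: '{title}'."
    ),
}


def build_prompt(name, **values):
    """Fill the named template; raises KeyError if a placeholder is missing."""
    return PROMPT_TEMPLATES[name].format(**values)


prompt = build_prompt("keyword_ideas", count=20, topic="local SEO strategies")
print(prompt)
```

Keeping the wording in one place makes it easier to iterate on a prompt and compare outputs across topics.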

Important Considerations:

- Always Fact-Check: While ChatGPT can be a helpful tool, it’s crucial to remember that it may generate inaccurate or fabricated information. Always verify any facts, statistics, or quotes generated by ChatGPT before incorporating them into your content.

- Maintain Control and Creativity: Use ChatGPT as a tool to assist your writing, not replace it. Don’t rely on it to do your thinking or create content from scratch. Your unique perspective and creativity are essential for producing high-quality, engaging content.

- Iteration is Key: Refine and revise the outputs generated by ChatGPT to ensure they align with your voice, style, and intended message.

Additional Prompts for Rewording and SEO:

- Rewrite this sentence to be more concise and impactful.

- Suggest alternative phrasing for this section to improve clarity.

- Identify opportunities to incorporate relevant internal and external links.

- Analyze the keyword density and suggest improvements for better SEO.

Remember, while ChatGPT can be a valuable tool, it’s essential to use it responsibly and maintain control over your content creation process.

Experiment And Refine Your Prompting Techniques

Writing effective prompts for ChatGPT is an essential skill for any SEO professional who wants to harness the power of AI-generated content.

Hopefully, the insights and examples shared in this article can inspire you and help guide you to crafting stronger prompts that yield high-quality content.

Remember to experiment with layering prompts, iterating on the output, and continually refining your prompting techniques.

This will help you stay ahead of the curve in the ever-changing world of SEO.


Featured Image: Tapati Rinchumrus/Shutterstock


Measuring Content Impact Across The Customer Journey

Understanding the impact of your content at every touchpoint of the customer journey is essential – but that’s easier said than done. From attracting potential leads to nurturing them into loyal customers, there are many touchpoints to look into.

So how do you identify and take advantage of these opportunities for growth?

Watch this on-demand webinar and learn a comprehensive approach for measuring the value of your content initiatives, so you can optimize resource allocation for maximum impact.

You’ll learn:

- Fresh methods for measuring your content’s impact.

- Fascinating insights using first-touch attribution, and how it differs from the usual last-touch perspective.

- Ways to persuade decision-makers to invest in more content by showcasing its value convincingly.

With Bill Franklin and Oliver Tani of DAC Group, we unravel the nuances of attribution modeling, emphasizing the significance of layering first-touch and last-touch attribution within your measurement strategy.
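As a rough illustration of how the two perspectives differ, here is a minimal Python sketch of single-touch attribution. The journeys, channel names, and values are made up, and this is not the method presented in the webinar. It only shows how the same conversions get credited to different channels under each model.

```python
from collections import defaultdict

# Hypothetical converting journeys: ordered channel touchpoints plus the
# conversion value. The channel names and values are made up for illustration.
journeys = [
    (["blog post", "email", "paid search"], 100.0),
    (["blog post", "webinar"], 50.0),
    (["paid search"], 30.0),
]


def attribute(journeys, model):
    """Credit each conversion to one touchpoint: 'first' or 'last'."""
    if model not in ("first", "last"):
        raise ValueError("model must be 'first' or 'last'")
    credit = defaultdict(float)
    for touchpoints, value in journeys:
        if not touchpoints:
            continue  # nothing to credit
        channel = touchpoints[0] if model == "first" else touchpoints[-1]
        credit[channel] += value
    return dict(credit)


first_touch = attribute(journeys, "first")
last_touch = attribute(journeys, "last")
print(first_touch)
print(last_touch)
```

In this toy data, the blog post earns most of the credit under first-touch and none under last-touch, which is why looking at both views side by side can change how content is valued.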

Check out these insights to help you craft compelling content tailored to each stage, using an approach rooted in first-hand experience to ensure your content resonates.

Whether you’re a seasoned marketer or new to content measurement, this webinar promises valuable insights and actionable tactics to elevate your SEO game and optimize your content initiatives for success.

View the slides below or check out the full webinar for all the details.


How to Find and Use Competitor Keywords

Competitor keywords are the keywords your rivals rank for in Google’s search results. They may rank organically or pay for Google Ads to rank in the paid results.

Knowing your competitors’ keywords is the easiest form of keyword research. If your competitors rank for or target particular keywords, it might be worth it for you to target them, too.

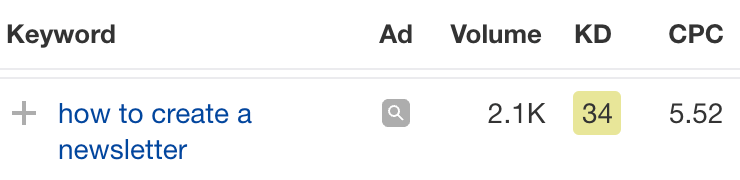

There is no way to see your competitors’ keywords without a tool like Ahrefs, which has a database of keywords and the sites that rank for them. As far as we know, Ahrefs has the biggest database of these keywords.

How to find all the keywords your competitor ranks for

- Go to Ahrefs’ Site Explorer

- Enter your competitor’s domain

- Go to the Organic keywords report

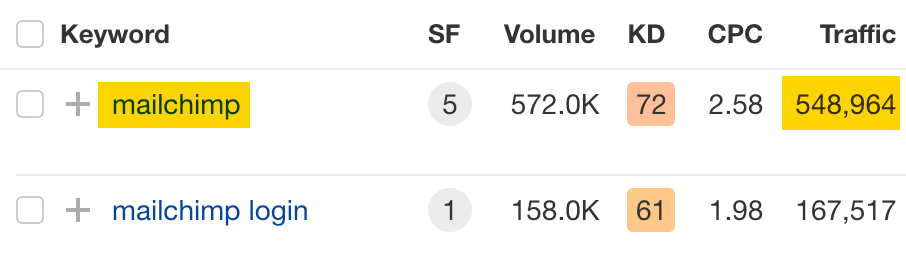

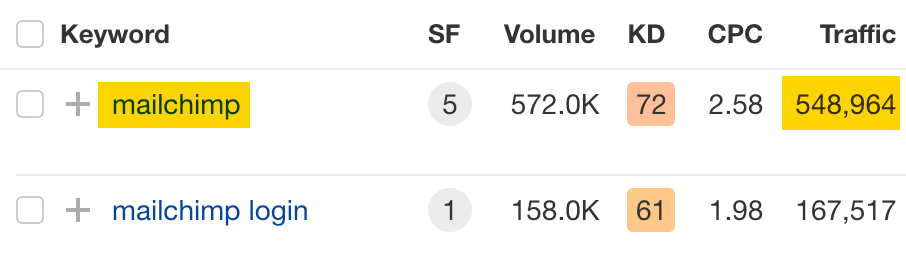

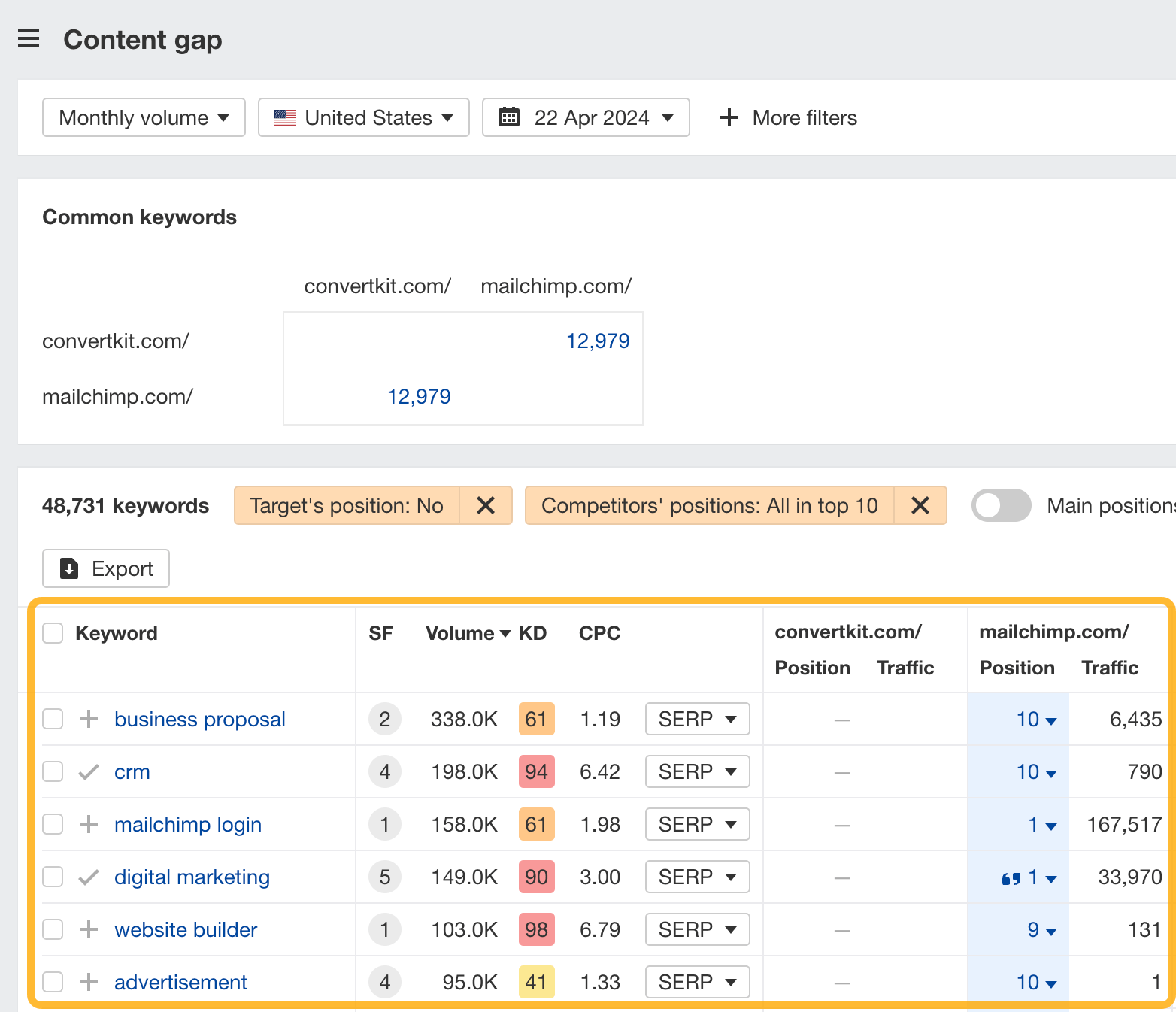

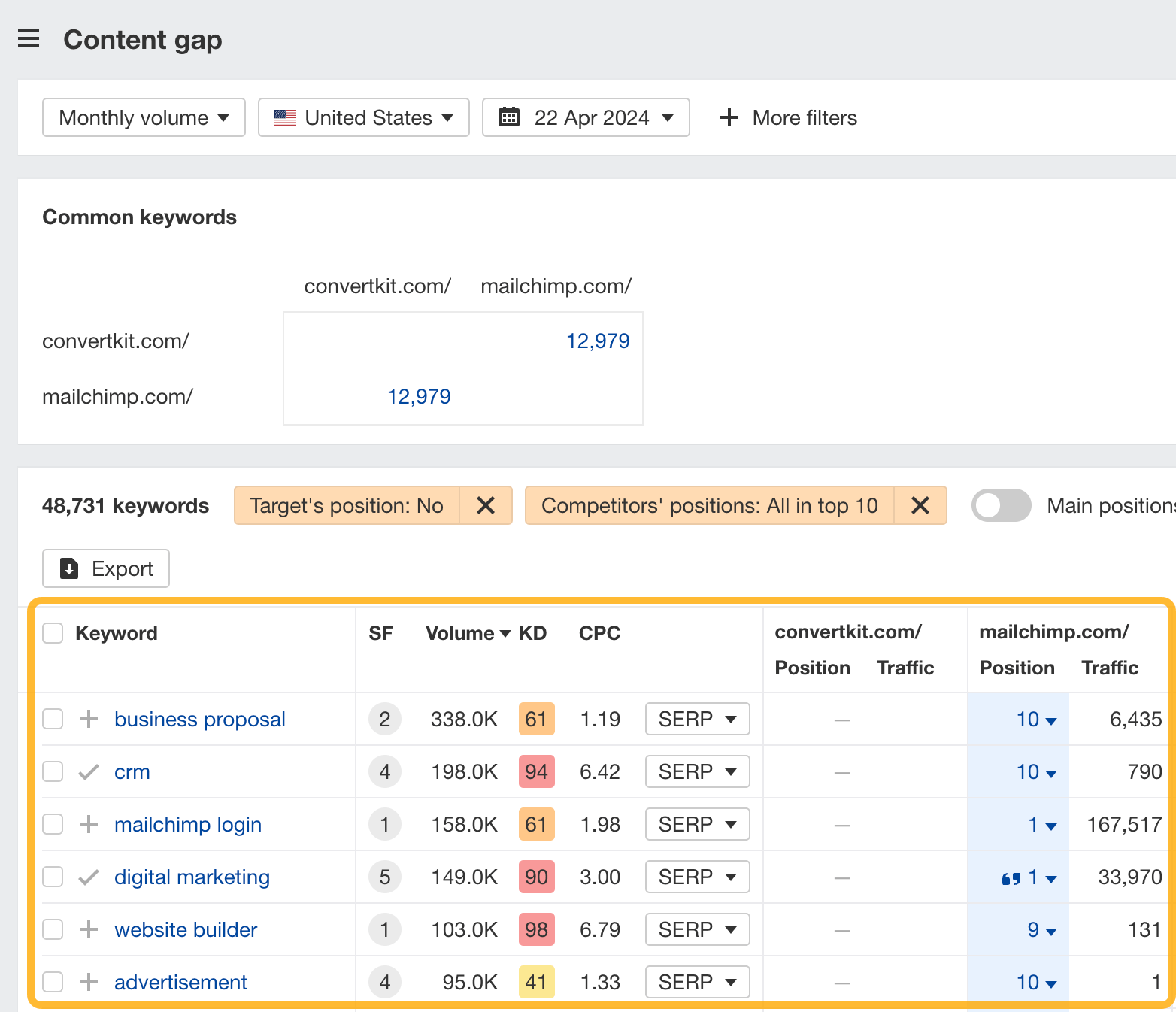

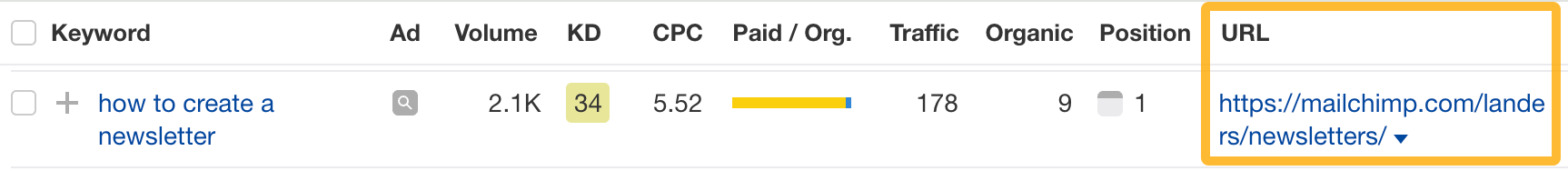

The report is sorted by traffic to show you the keywords sending your competitor the most visits. For example, Mailchimp gets most of its organic traffic from the keyword “mailchimp.”

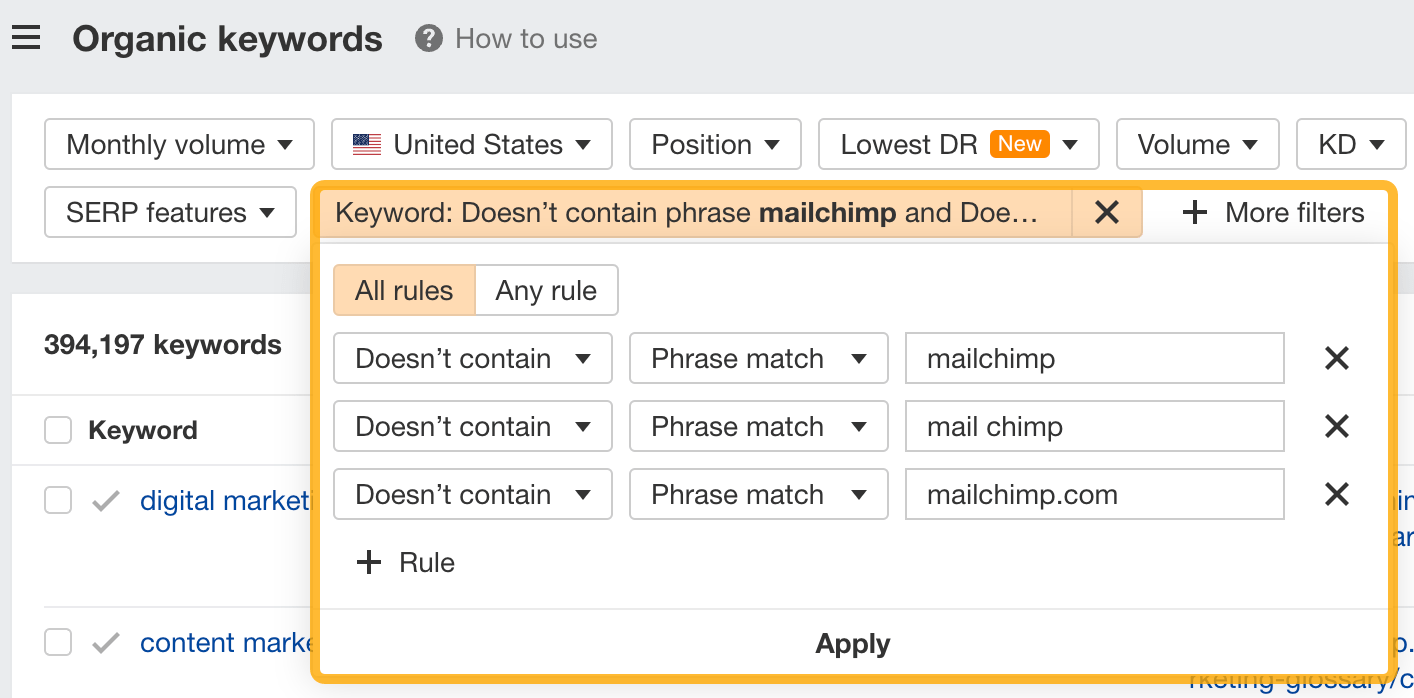

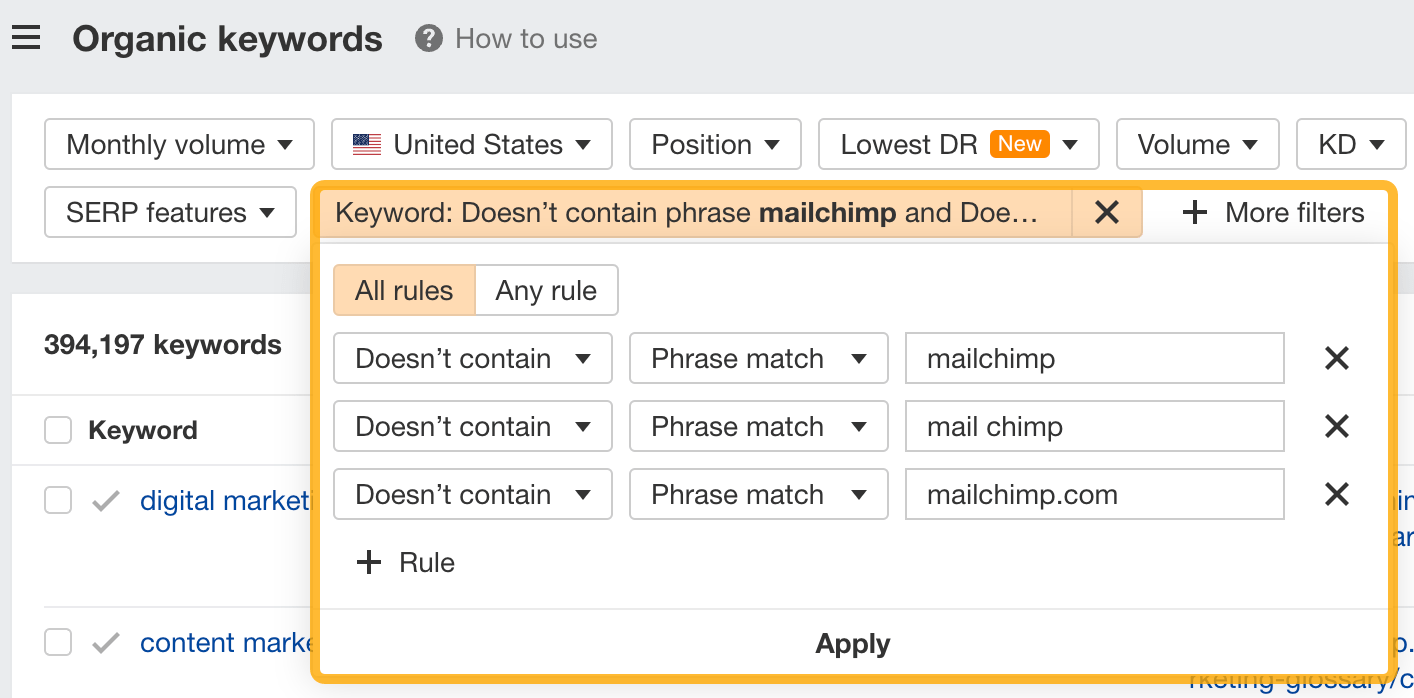

Since you’re unlikely to rank for your competitor’s brand, you might want to exclude branded keywords from the report. You can do this by adding a Keyword > Doesn’t contain filter. In this example, we’ll filter out keywords containing “mailchimp” or any potential misspellings:

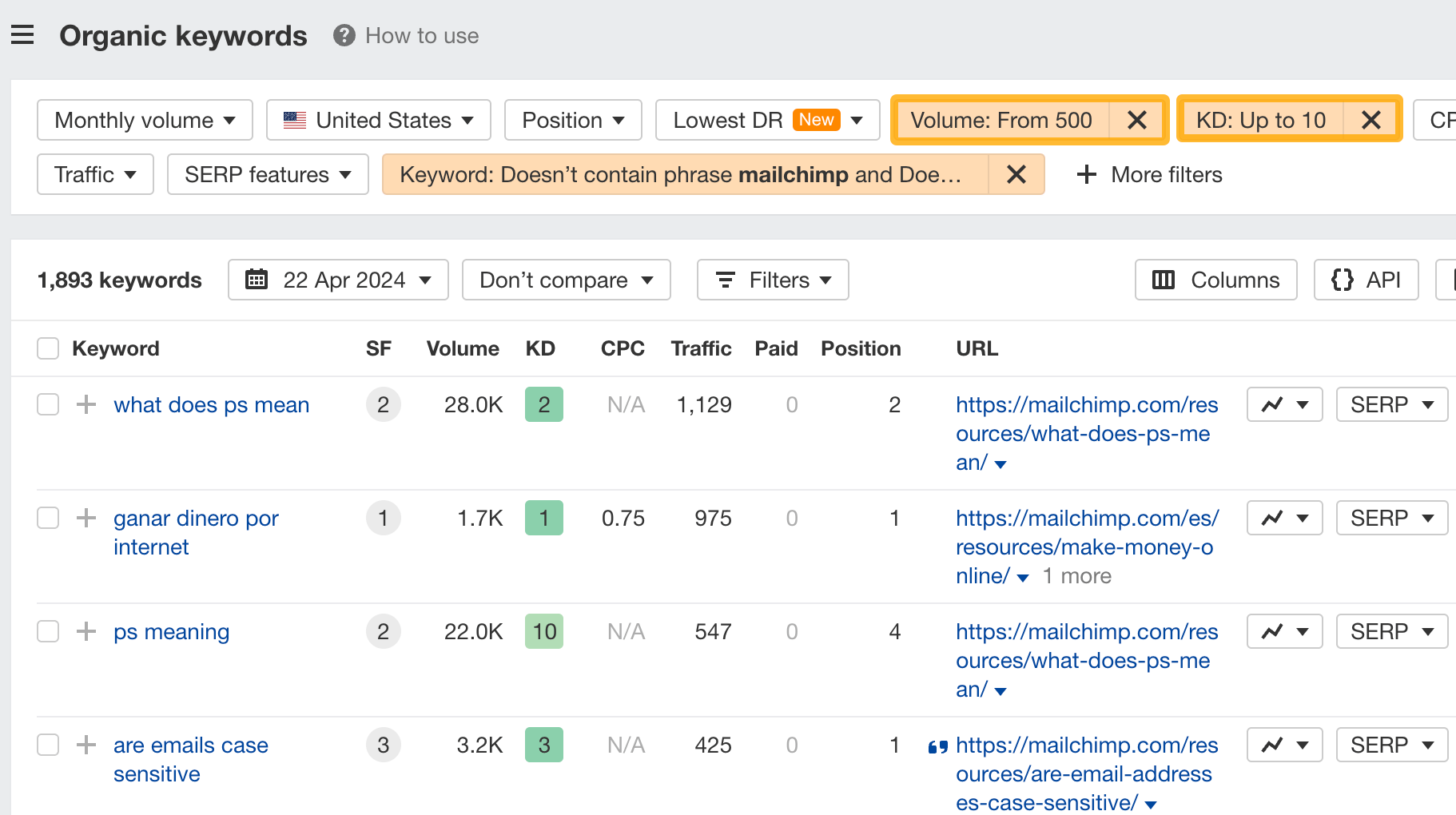

If you’re a new brand competing with one that’s established, you might also want to look for popular low-difficulty keywords. You can do this by setting the Volume filter to a minimum of 500 and the KD filter to a maximum of 10.

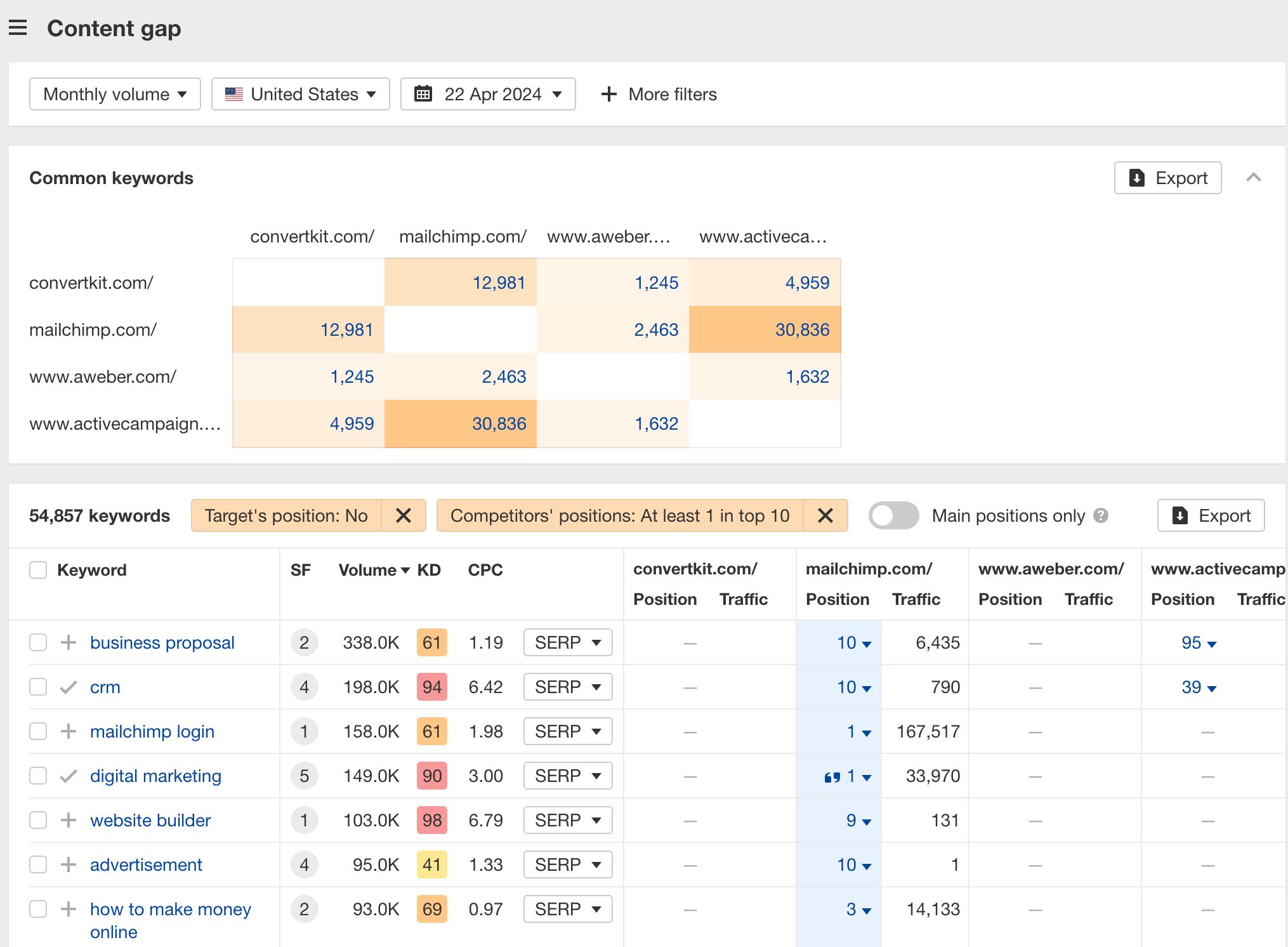
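If you export the report and prefer to filter offline, the same two filters can be sketched in a few lines of Python. The rows and column names below (keyword, volume, kd) are hypothetical and are not a documented export format, so adjust them to whatever your export contains.

```python
# Hypothetical rows, shaped like a keyword export. The column names
# (keyword, volume, kd) are assumptions, not a documented export format.
rows = [
    {"keyword": "mailchimp", "volume": 500000, "kd": 60},
    {"keyword": "mail chimp login", "volume": 9000, "kd": 5},
    {"keyword": "email subject line ideas", "volume": 1200, "kd": 8},
    {"keyword": "what is digital marketing", "volume": 40000, "kd": 85},
    {"keyword": "newsletter name ideas", "volume": 300, "kd": 2},
]

BRAND_TERMS = ("mailchimp", "mail chimp")  # brand name plus a likely variant


def is_branded(keyword, brand_terms=BRAND_TERMS):
    """True if the keyword contains any brand term, ignoring case."""
    lowered = keyword.lower()
    return any(term in lowered for term in brand_terms)


def low_difficulty(rows, min_volume=500, max_kd=10):
    """Keep non-branded keywords with volume >= min_volume and KD <= max_kd."""
    return [
        row for row in rows
        if not is_branded(row["keyword"])
        and row["volume"] >= min_volume
        and row["kd"] <= max_kd
    ]


picks = low_difficulty(rows)
print([row["keyword"] for row in picks])
```

The thresholds mirror the ones suggested above (volume of at least 500, KD of at most 10) and are passed as parameters so they are easy to loosen.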

How to find keywords your competitor ranks for, but you don’t

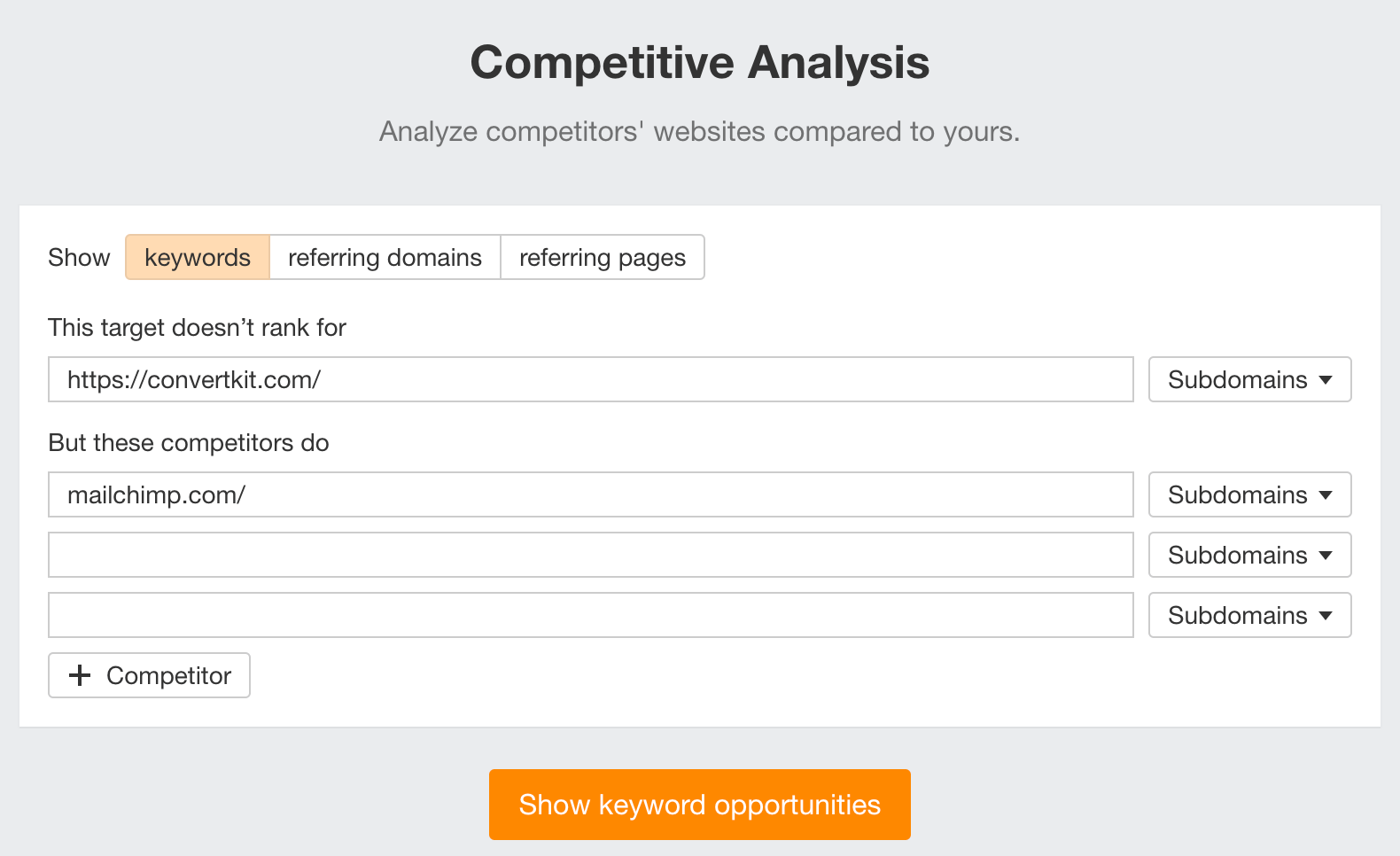

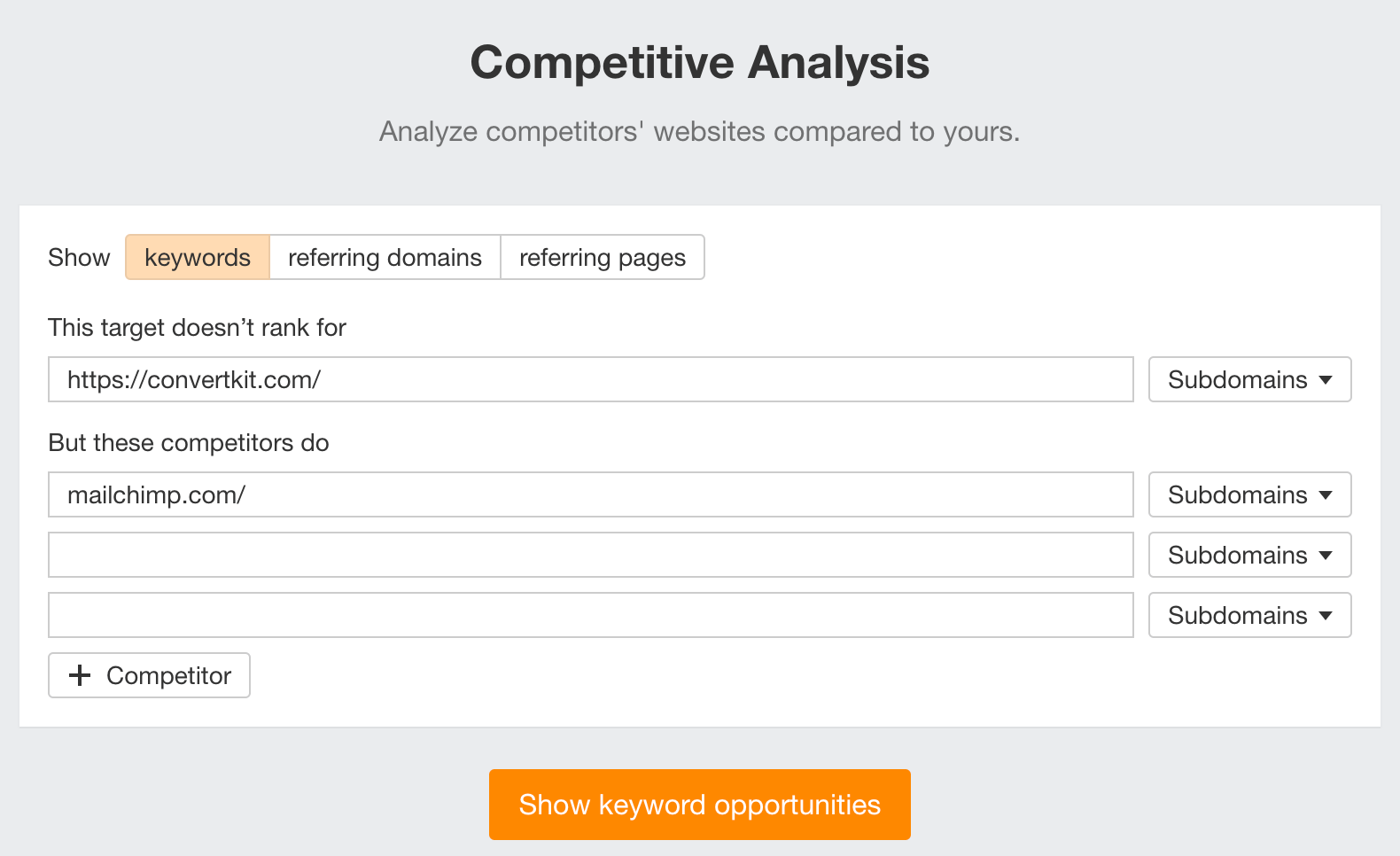

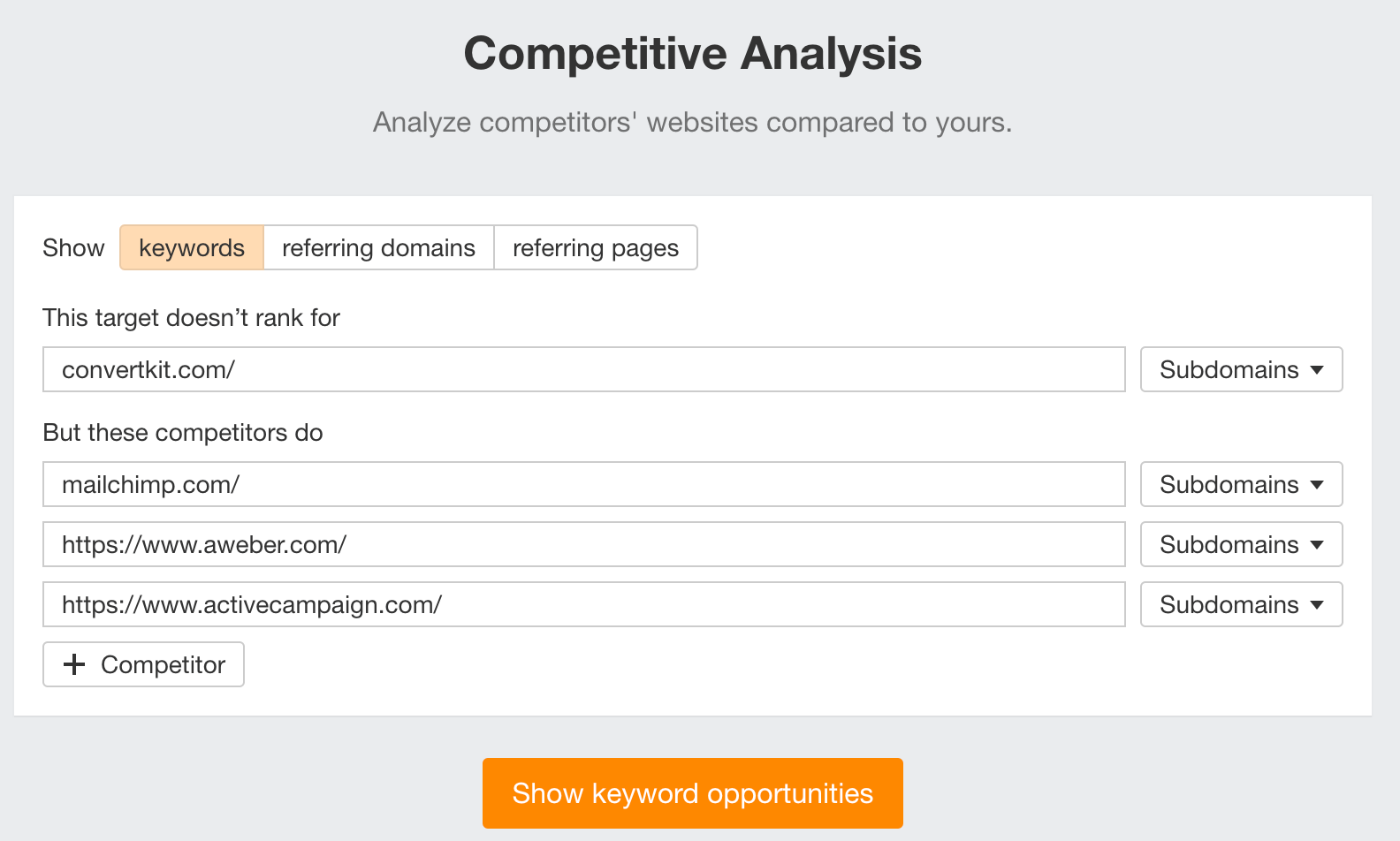

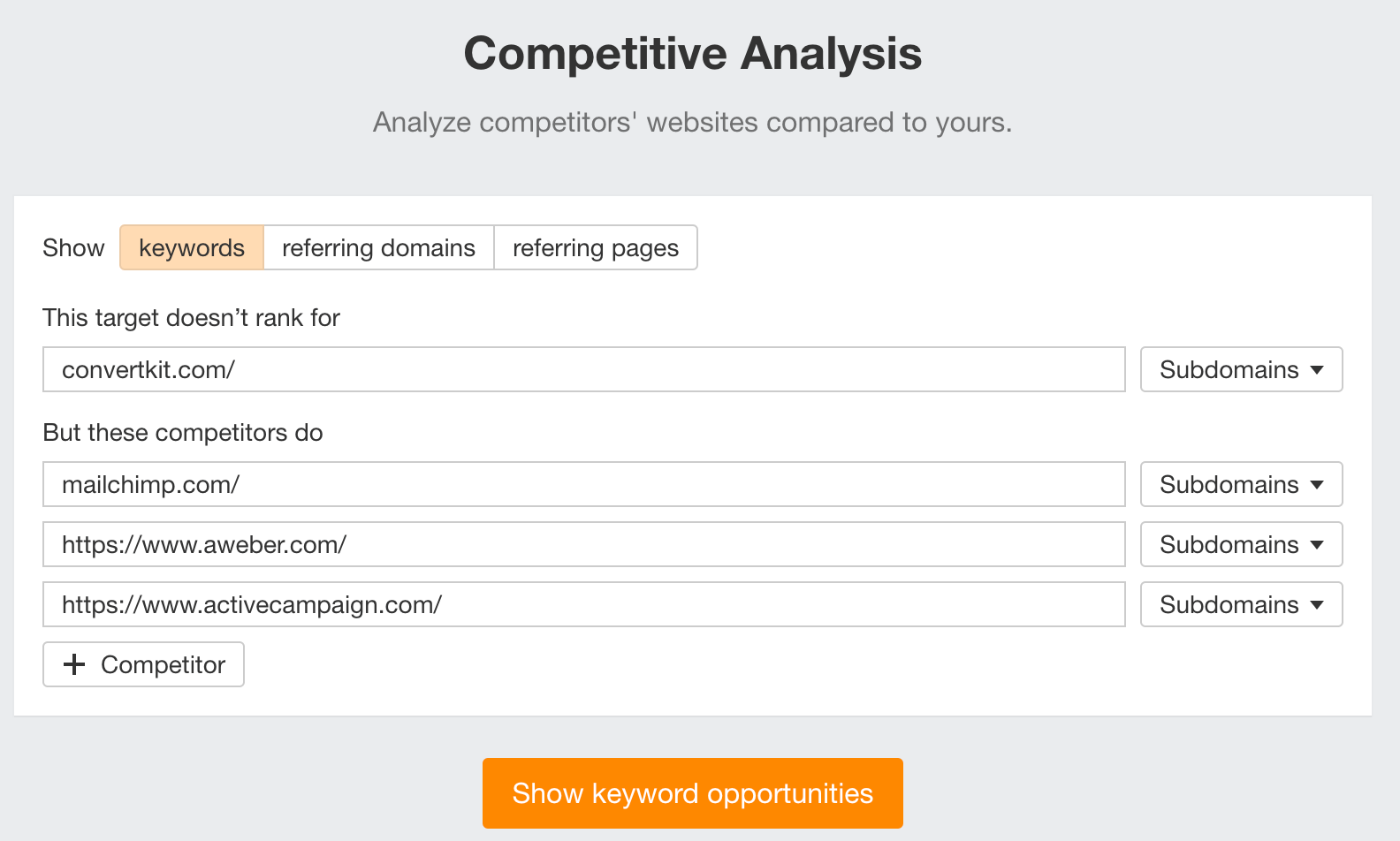

- Go to Competitive Analysis

- Enter your domain in the This target doesn’t rank for section

- Enter your competitor’s domain in the But these competitors do section

Hit “Show keyword opportunities,” and you’ll see all the keywords your competitor ranks for, but you don’t.

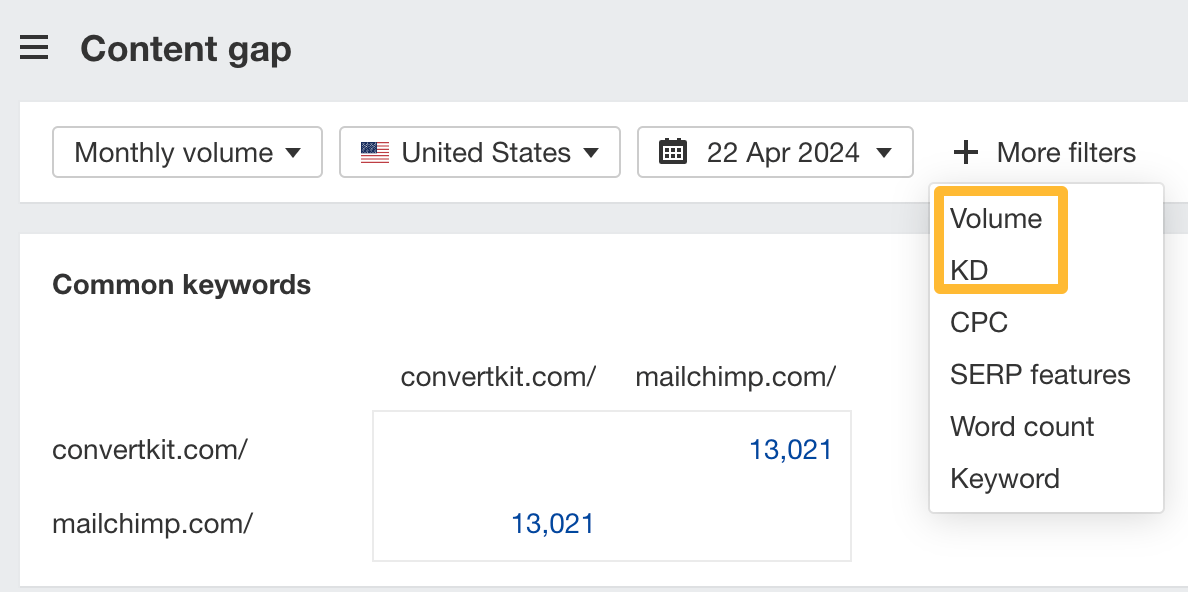

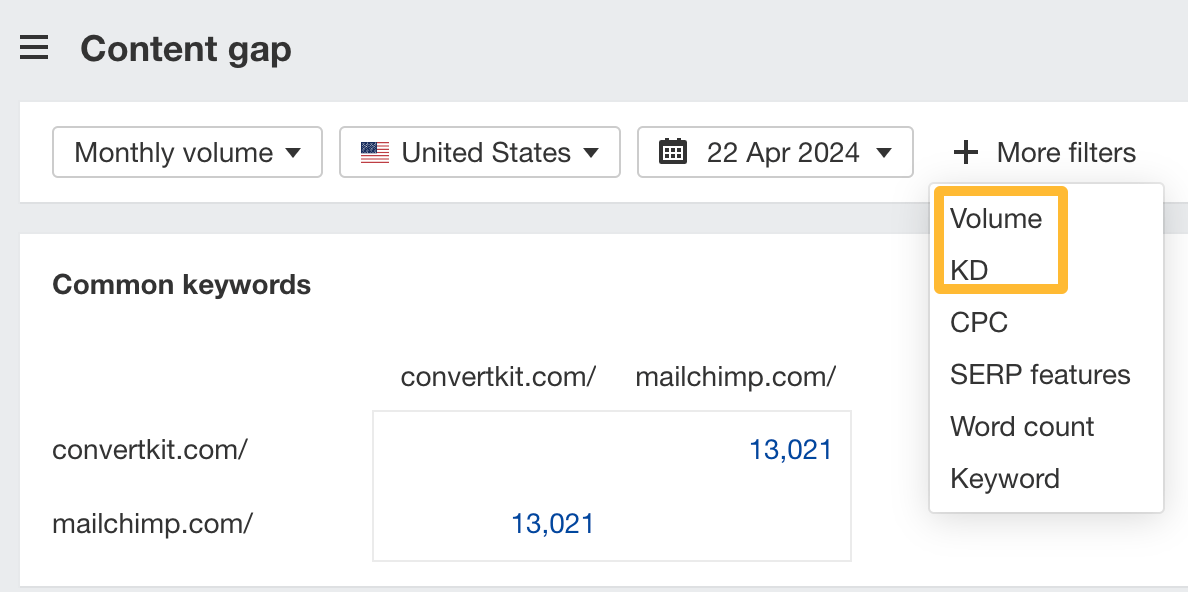

You can also add a Volume and KD filter to find popular, low-difficulty keywords in this report.

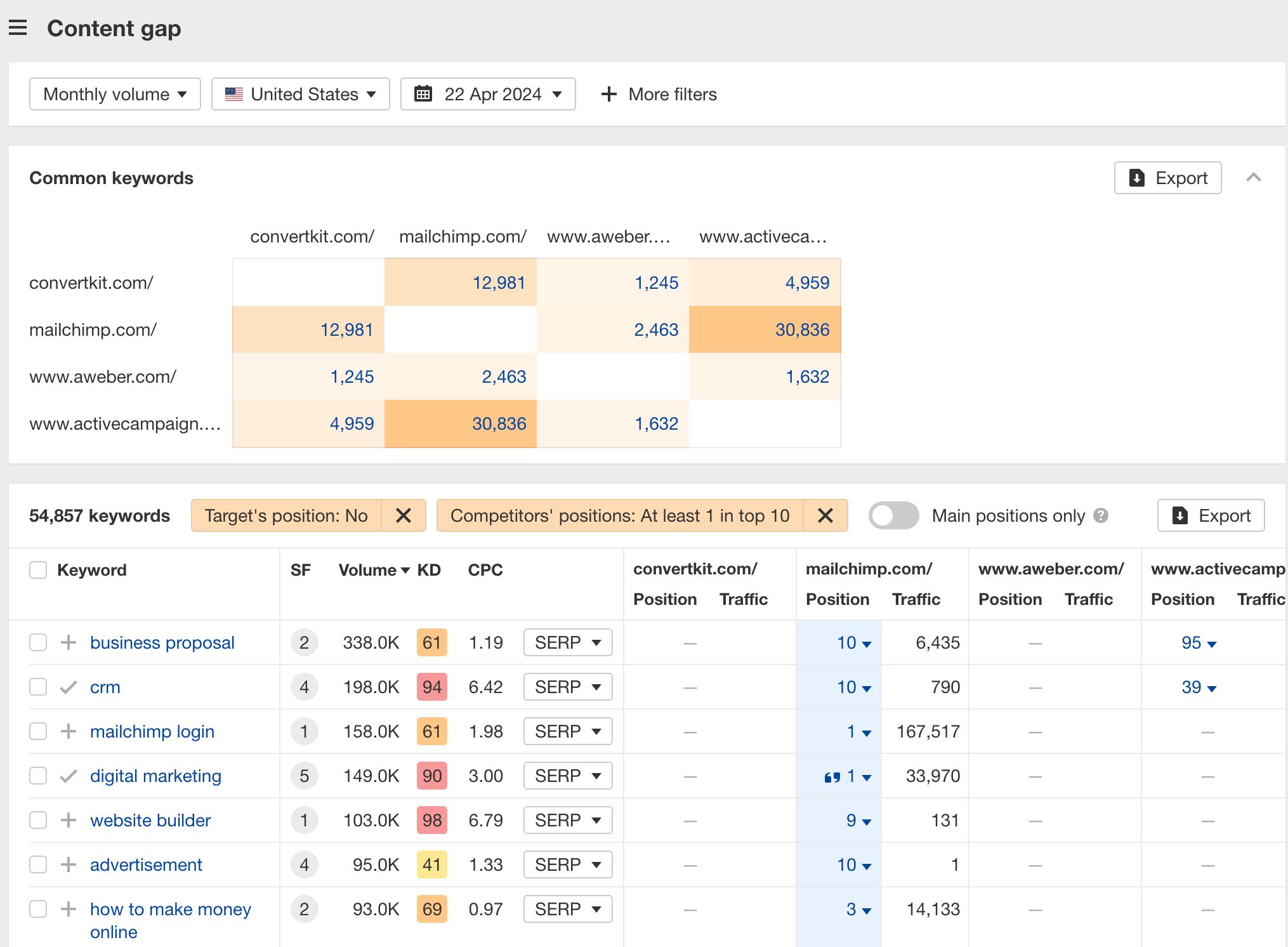

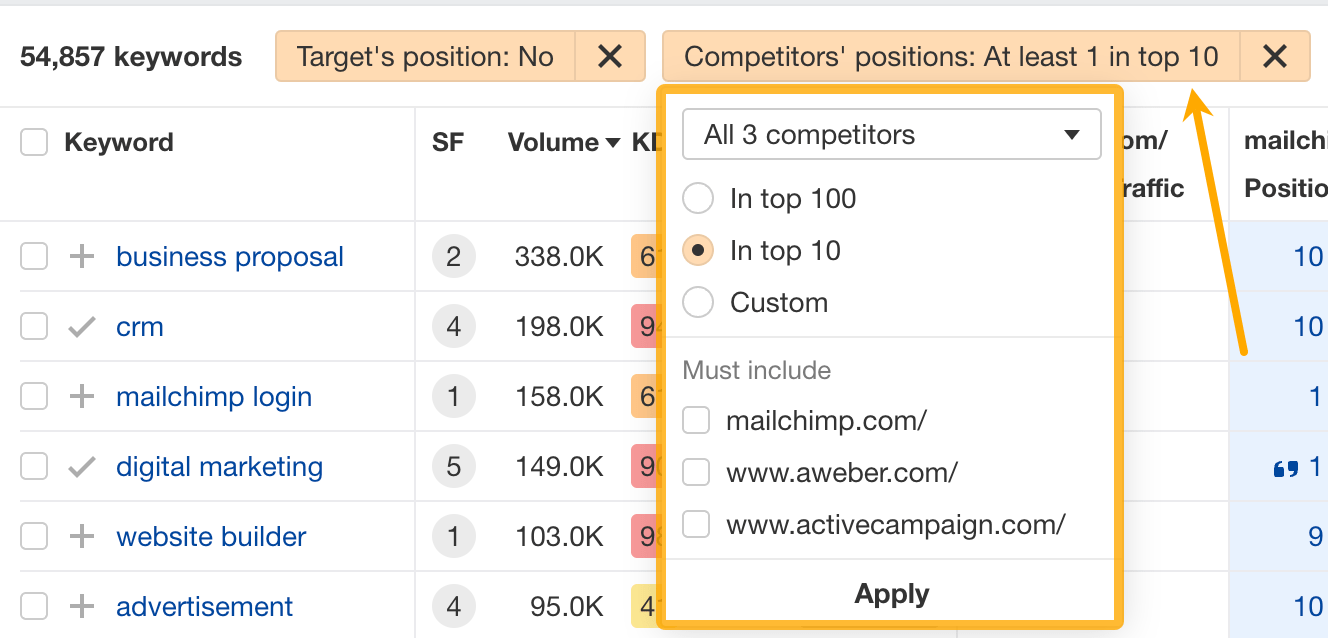

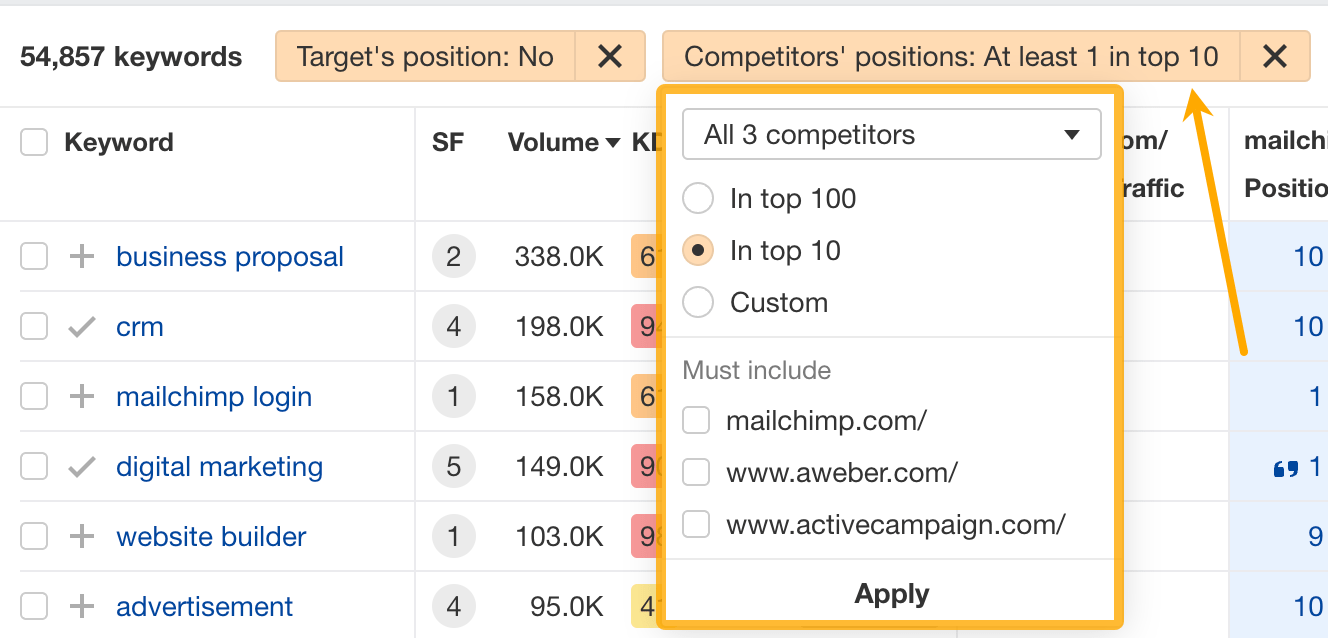

How to find keywords multiple competitors rank for, but you don’t

- Go to Competitive Analysis

- Enter your domain in the This target doesn’t rank for section

- Enter the domains of multiple competitors in the But these competitors do section

You’ll see all the keywords that at least one of these competitors ranks for, but you don’t.

You can also narrow the list down to keywords that all competitors rank for. Click on the Competitors’ positions filter and choose All 3 competitors:

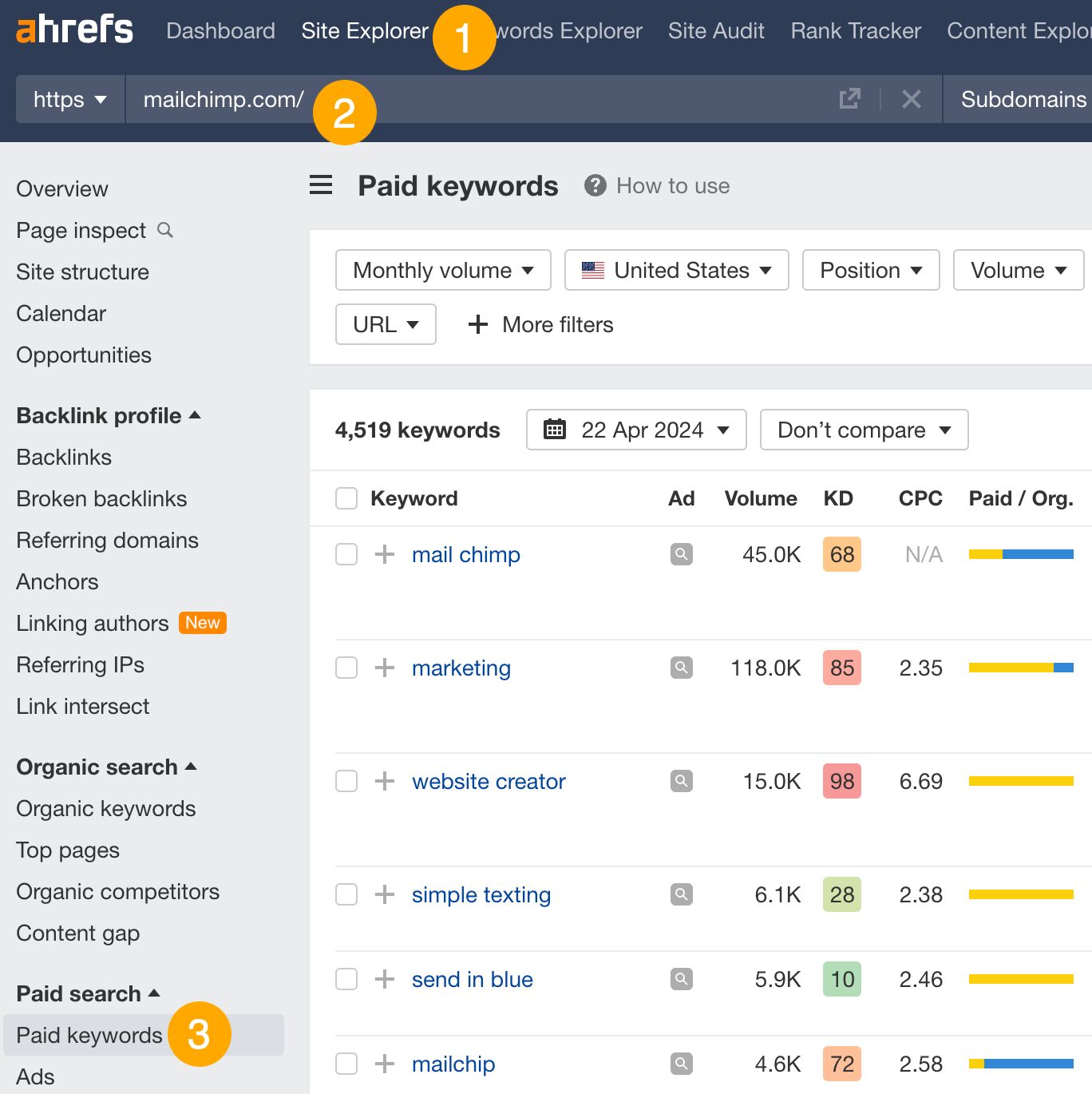

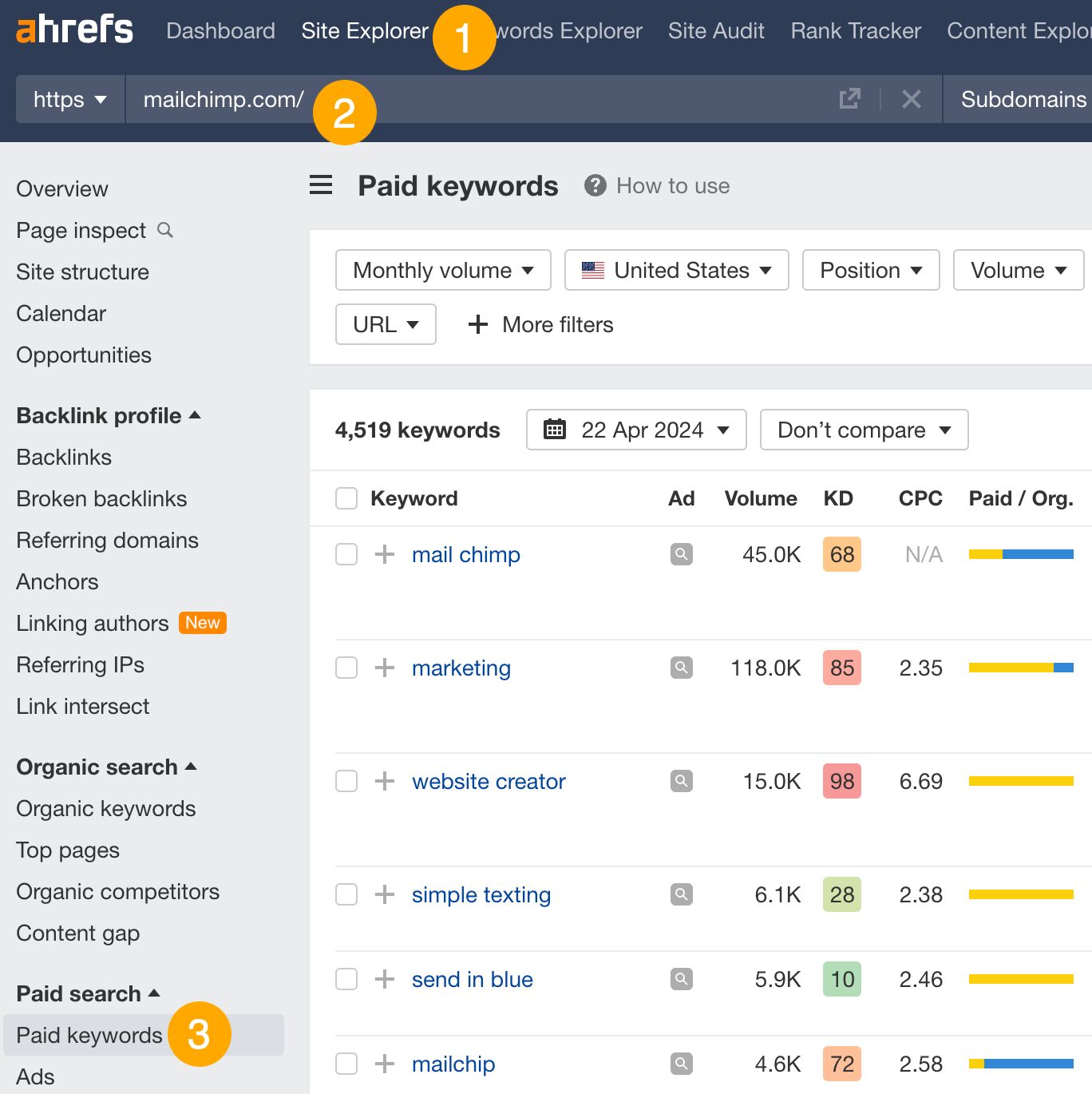
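Conceptually, both views are set operations on keyword lists. The following Python sketch uses made-up domains and keywords to show the difference between "at least one competitor ranks" and "all competitors rank". It is not how Ahrefs computes the report.

```python
# Hypothetical keyword sets per domain; real data would come from a tool export.
yours = {"email marketing", "newsletter ideas"}
competitors = {
    "competitor-a.example": {"email marketing", "drip campaign", "landing page"},
    "competitor-b.example": {"drip campaign", "landing page", "crm software"},
    "competitor-c.example": {"landing page", "newsletter ideas", "drip campaign"},
}


def gap_any(yours, competitors):
    """Keywords at least one competitor ranks for, but you don't."""
    return set().union(*competitors.values()) - yours


def gap_all(yours, competitors):
    """Keywords every competitor ranks for, but you don't."""
    if not competitors:
        return set()
    return set.intersection(*competitors.values()) - yours


print(sorted(gap_any(yours, competitors)))
print(sorted(gap_all(yours, competitors)))
```

The "all competitors" list is always a subset of the "at least one" list, so it is a quick way to shortlist the keywords most central to your niche.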

How to find keywords your competitor is bidding on

- Go to Ahrefs’ Site Explorer

- Enter your competitor’s domain

- Go to the Paid keywords report

This report shows you the keywords your competitors are targeting via Google Ads.

Since your competitor is paying for traffic from these keywords, it may indicate that they’re profitable for them—and could be for you, too.

You know what keywords your competitors are ranking for or bidding on. But what do you do with them? There are basically three options.

1. Create pages to target these keywords

You can only rank for keywords if you have content about them. So, the most straightforward thing you can do for competitors’ keywords you want to rank for is to create pages to target them.

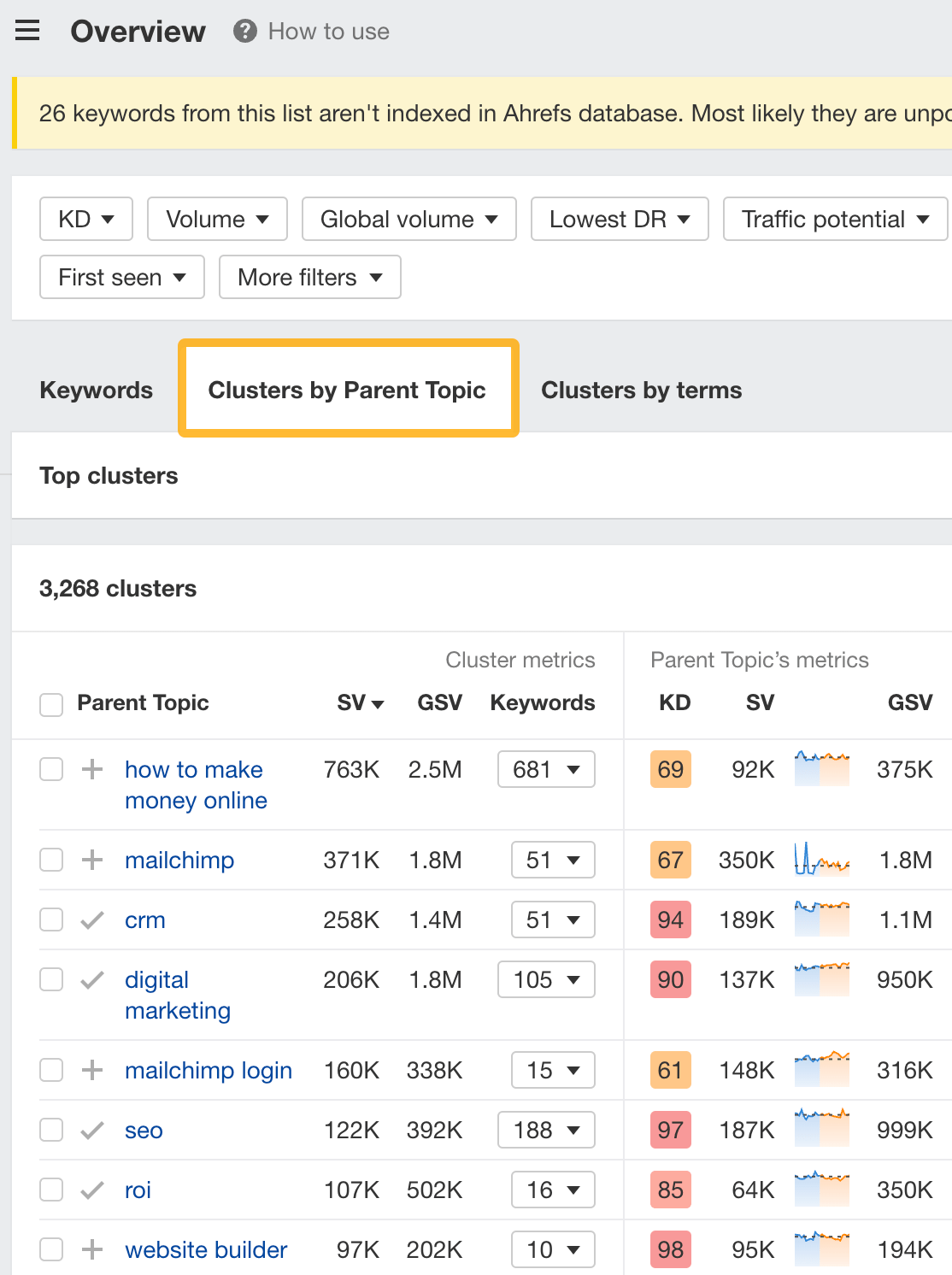

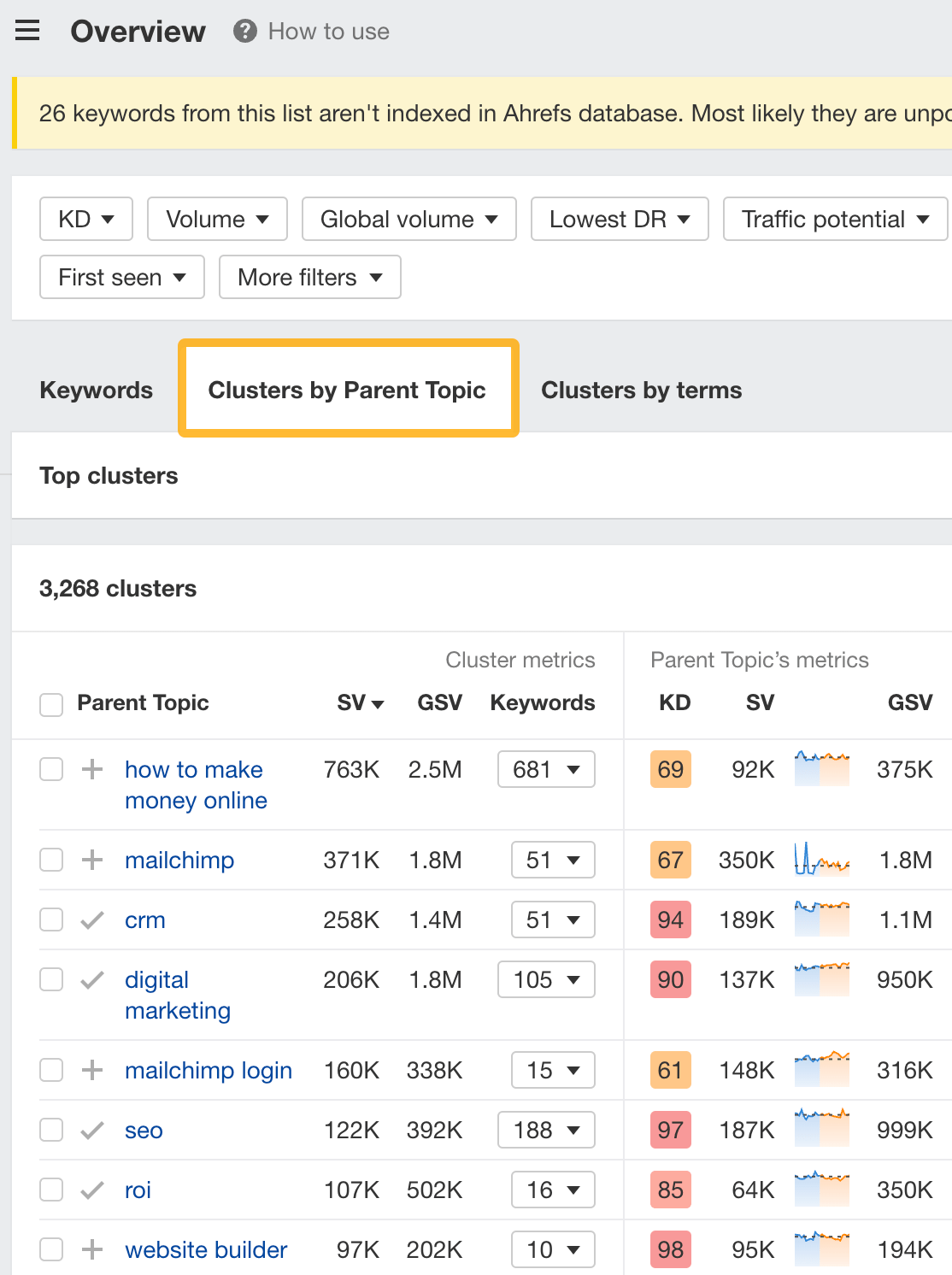

However, before you do this, it’s worth clustering your competitor’s keywords by Parent Topic. This will group keywords that mean the same or similar things so you can target them all with one page.

Here’s how to do that:

- Export your competitor’s keywords, either from the Organic Keywords or Content Gap report

- Paste them into Keywords Explorer

- Click the “Clusters by Parent Topic” tab

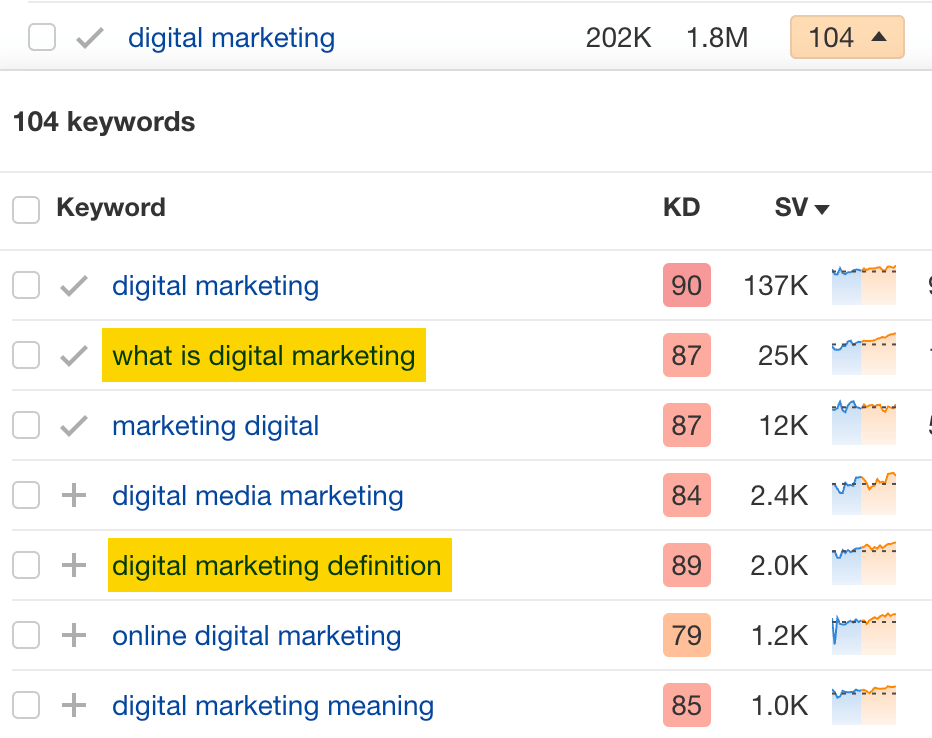

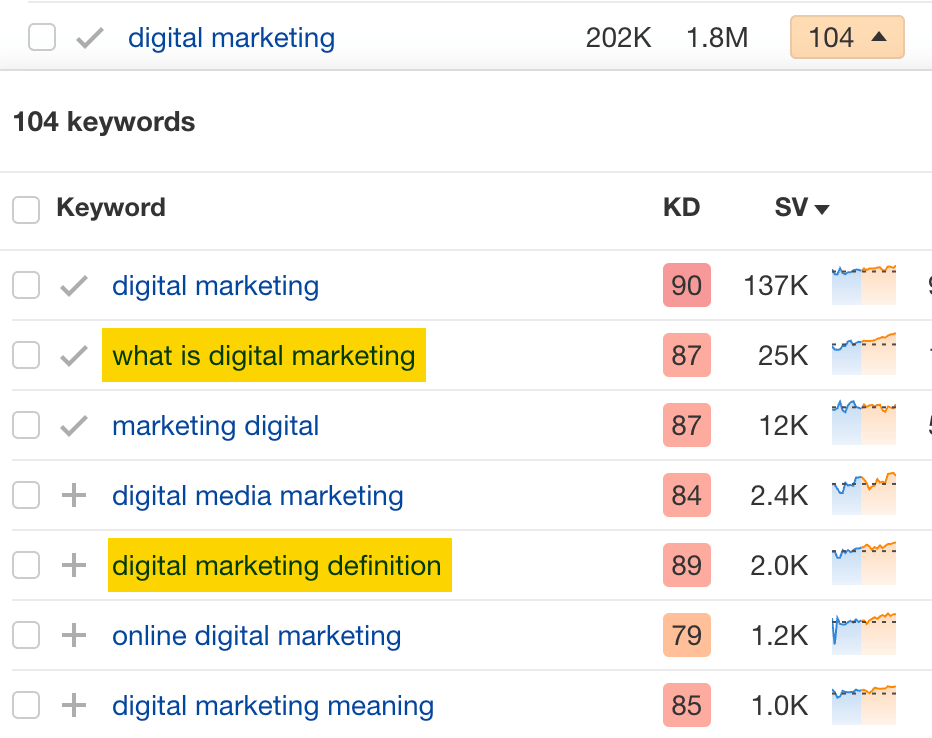

For example, Mailchimp ranks for keywords like “what is digital marketing” and “digital marketing definition.” These and many others get clustered under the Parent Topic of “digital marketing” because people searching for them are all looking for the same thing: a definition of digital marketing. You only need to create one page to potentially rank for all these keywords.
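If you are working from an export instead of the tool’s interface, the grouping step itself is simple. This Python sketch assumes each exported row already carries a parent topic label (the parent_topic column name is hypothetical). It only groups keywords and does not work out the topics.

```python
from collections import defaultdict

# Hypothetical export rows. The parent_topic column is assumed to come from
# the keyword tool; this code only groups rows, it does not compute topics.
rows = [
    {"keyword": "what is digital marketing", "parent_topic": "digital marketing"},
    {"keyword": "digital marketing definition", "parent_topic": "digital marketing"},
    {"keyword": "press release example", "parent_topic": "press release template"},
]


def cluster_by_parent_topic(rows):
    """Group keywords under their parent topic, one candidate page per topic."""
    clusters = defaultdict(list)
    for row in rows:
        clusters[row["parent_topic"]].append(row["keyword"])
    return dict(clusters)


clusters = cluster_by_parent_topic(rows)
for topic, keywords in clusters.items():
    print(f"{topic}: {len(keywords)} keyword(s)")
```

Each key in the result is a candidate page, and its list holds the keywords that page could target.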

2. Optimize existing content by filling subtopics

You don’t always need to create new content to rank for competitors’ keywords. Sometimes, you can optimize the content you already have to rank for them.

How do you know which keywords you can do this for? Try this:

- Export your competitor’s keywords

- Paste them into Keywords Explorer

- Click the “Clusters by Parent Topic” tab

- Look for Parent Topics you already have content about

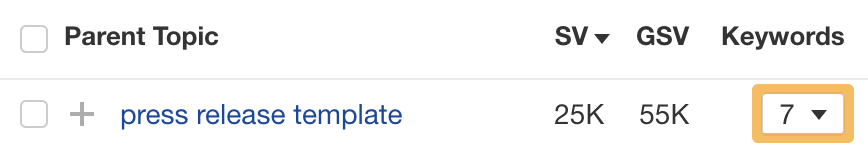

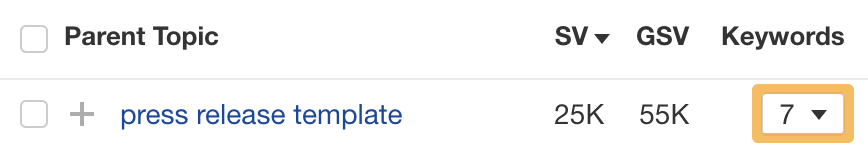

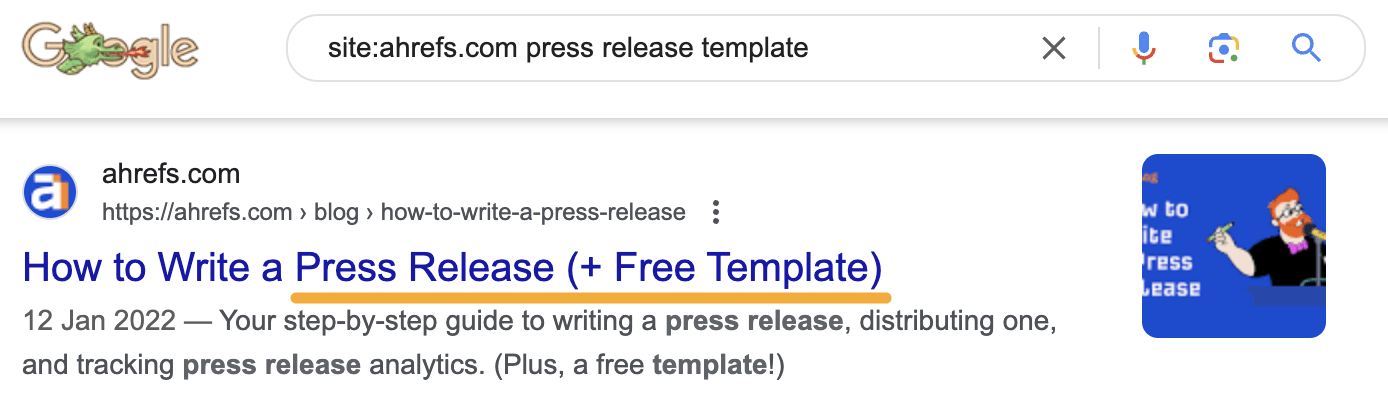

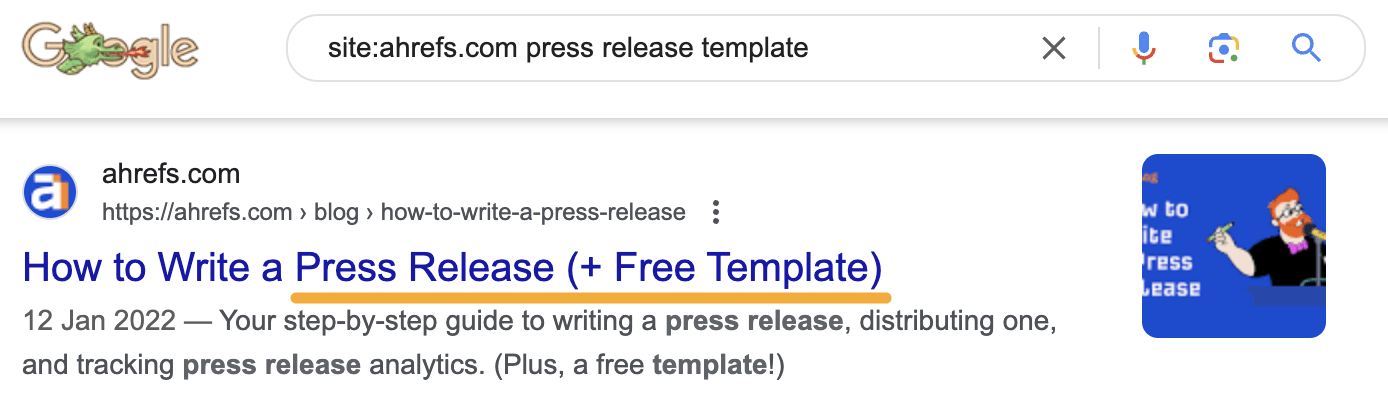

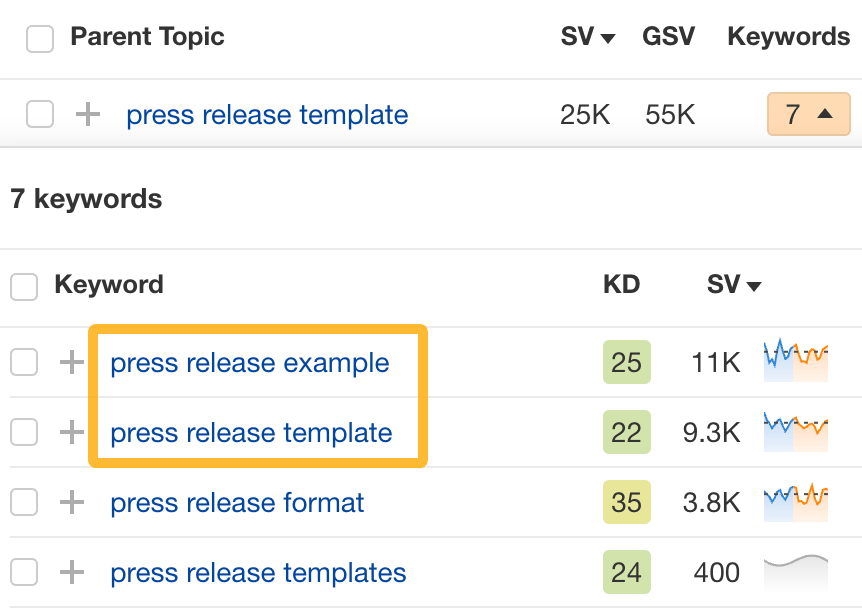

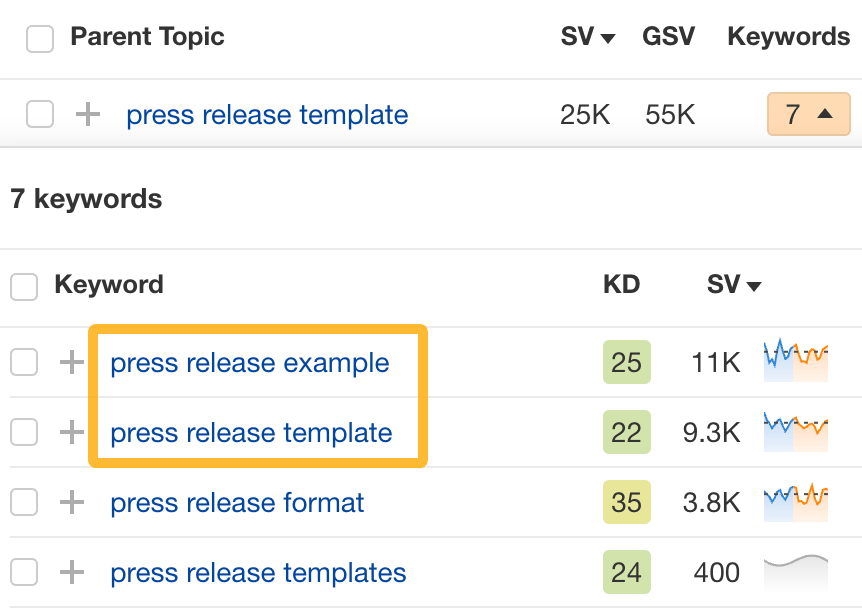

For example, if we analyze our competitor, we can see that seven keywords they rank for fall under the Parent Topic of “press release template.”

If we search our site, we see that we already have a page about this topic.

If we click the caret and check the keywords in the cluster, we see keywords like “press release example” and “press release format.”

To rank for the keywords in the cluster, we can probably optimize the page we already have by adding sections about the subtopics of “press release examples” and “press release format.”

3. Target these keywords with Google Ads

Paid keywords are the simplest—look through the report and see if there are any relevant keywords you might want to target, too.

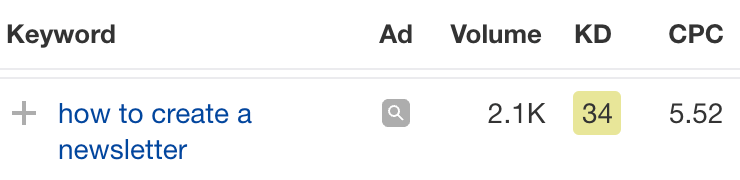

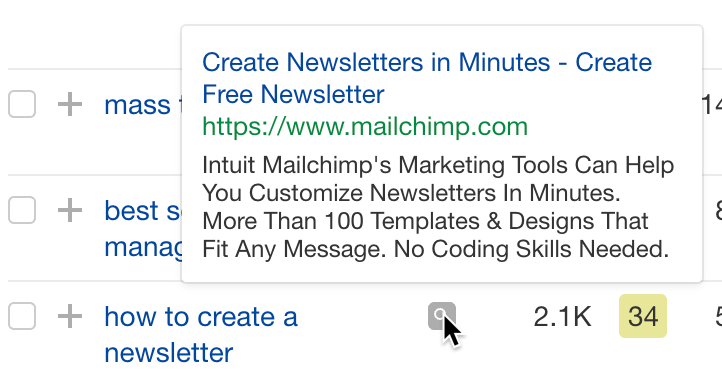

For example, Mailchimp is bidding for the keyword “how to create a newsletter.”

If you’re ConvertKit, you may also want to target this keyword since it’s relevant.

If you decide to target the same keyword via Google Ads, you can hover over the magnifying glass to see the ads your competitor is using.

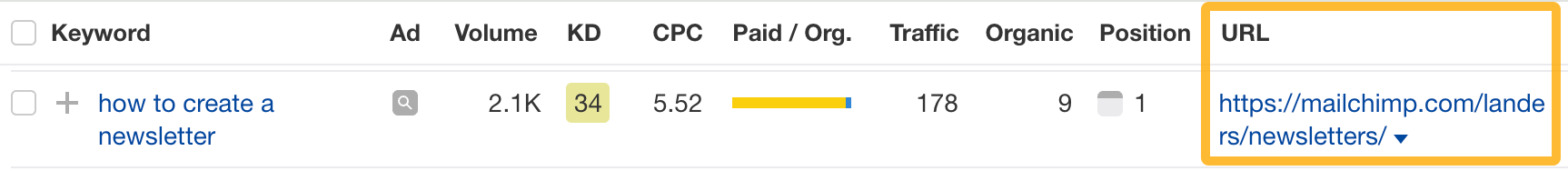

You can also see the landing page your competitor directs ad traffic to under the URL column.

