
Can Effective Regulation Reduce the Impact of Divisive Content on Social Networks?

Amid a new storm of controversy sparked by The Facebook Files, an exposé of various internal research projects which, in some ways, suggest that Facebook isn’t doing enough to protect users from harm, the core question that needs to be addressed is often being distorted by inherent bias, and by a specific focus on Facebook the company, as opposed to social media, and algorithmic content amplification, as a concept.

That is, what do we do to fix it? What can be done, realistically, that will actually make a difference; what changes to regulation or policy could feasibly be implemented to reduce the amplification of harmful, divisive posts that are fueling more angst within society as a result of the increasing influence of social media apps?

It’s important to consider social media more broadly here, because every social platform uses algorithms to define content distribution and reach. Facebook is by far the biggest, and has more influence on key elements, like news content – and of course, the research insights themselves, in this case, came from Facebook.

The focus on Facebook, specifically, makes sense, but Twitter also amplifies content that sparks more engagement, LinkedIn sorts its feed based on what it determines will be most engaging, and TikTok’s algorithm is highly attuned to your interests.

The problem, as highlighted by Facebook whistleblower Frances Haugen, is algorithmic distribution, not Facebook itself. So what ideas do we have that can realistically improve that element?

And the further question then is, will social platforms be willing to make such changes, especially if they present a risk to their engagement and user activity levels?

Haugen, who’s an expert in algorithmic content matching, has proposed that social networks should be forced to stop using engagement-based algorithms altogether, via reforms to Section 230 of the Communications Decency Act, which currently protects social media companies from legal liability for what users share in their apps.

As explained by Haugen:

“If we had appropriate oversight, or if we reformed [Section] 230 to make Facebook responsible for the consequences of their intentional ranking decisions, I think they would get rid of engagement-based ranking.”

The concept here is that Facebook – and by extension, all social platforms – would be held accountable for the ways in which they amplify certain content. So if more people end up seeing, say, COVID misinformation because of algorithmic intervention, Facebook could be held legally liable for any impacts.

That would add significant risk to any decision-making around the construction of such algorithms, and as Haugen notes, that may then see the platforms forced to take a step back from measures which boost the reach of posts based on how users interact with such content.

In practice, that would likely force social platforms back to the pre-algorithm days, when Facebook and other apps simply showed you the content from the Pages and people you follow in order of post time, newest first. That, in turn, would reduce the motivation for people and brands to share more controversial, engagement-baiting content in order to play into the algorithm’s whims.
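To make the difference concrete, here’s a minimal Python sketch contrasting the two approaches. The `Post` fields and the use of a raw sum of interactions as the ranking score are hypothetical simplifications for illustration; real feed-ranking systems use far more signals, and their details aren’t public.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Post:
    # Hypothetical post record, for illustration only
    author: str
    posted_at: datetime
    likes: int
    comments: int
    shares: int


def chronological_feed(posts):
    """Pre-algorithm style: newest posts first, regardless of engagement."""
    return sorted(posts, key=lambda p: p.posted_at, reverse=True)


def engagement_feed(posts):
    """Engagement-based style: most-interacted-with posts first.
    The plain sum of interactions is an assumed stand-in for a real score."""
    return sorted(posts, key=lambda p: p.likes + p.comments + p.shares, reverse=True)


posts = [
    Post("friend", datetime(2021, 10, 1, 9, 0), likes=12, comments=2, shares=0),
    Post("page", datetime(2021, 10, 1, 8, 0), likes=40, comments=85, shares=30),
    Post("family", datetime(2021, 10, 1, 10, 0), likes=5, comments=1, shares=0),
]

print([p.author for p in chronological_feed(posts)])  # ['family', 'friend', 'page']
print([p.author for p in engagement_feed(posts)])     # ['page', 'friend', 'family']
```

In the chronological version, a post gains nothing from the reactions it provokes; in the engagement version, those reactions are exactly what push it to the top, which is the incentive Haugen’s proposal is aimed at.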

The idea has some merit. As various studies have shown, sparking an emotional response with your social posts is key to maximizing engagement, and thus reach through algorithmic amplification, and the most effective emotions in this respect are humor and anger. Jokes and funny videos still do well on all platforms, fueled by algorithmic reach, but so too do anger-inducing hot takes. Partisan news outlets and personalities have run with these, and that could well be a key source of the division and angst we now see online.

To be clear, Facebook cannot be held solely responsible for this. Partisan publishers and controversial figures have long played a role in broader discourse, and they were sparking attention and engagement with their unorthodox opinions long before Facebook arrived. The difference now is that social networks facilitate such broad reach, while they also, through Likes and other forms of engagement, provide a direct incentive for it, with individual users getting a dopamine hit by triggering a response, and publishers driving more referral traffic and gaining more exposure through provocation.

Really, a key issue when considering this outcome is that everyone now has a voice, and when everyone has a platform to share their thoughts and opinions, we’re all far more exposed to them, and far more aware of them. In the past, you likely had no idea about your uncle’s political persuasions, but now you know, because social media reminds you every day, and that type of peer sharing is also playing a role in broader division.

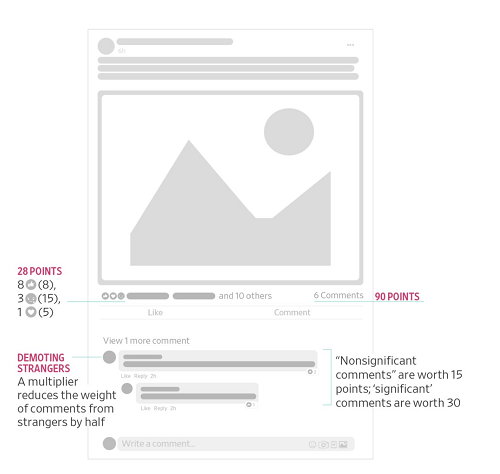

Haugen’s argument, however, is that Facebook incentivizes this – for example, one of the reports Haugen leaked to the Wall Street Journal outlines how Facebook updated its News Feed algorithm in 2018 to put more emphasis on engagement between users, and reduce political discussion, which had become an increasingly divisive element in the app. Facebook did this by changing its weighting for different types of engagement with posts.

The idea was that weighting replies more heavily would incentivize more discussion. But as you can imagine, putting more value on comments as a driver of reach also prompted more publishers and Pages to share increasingly divisive, emotionally charged posts, in order to incite more reactions and get higher ranking scores as a result. With this update, Likes were no longer the key driver of reach that they had been, as Facebook made comments and Reactions (including ‘Angry’) increasingly important. As a result, posts sparking discussion around political trends actually became more prominent, and more users were exposed to such content in their feeds.
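The mechanics of a change like this can be sketched as a weighted sum over interaction types. The weights below are hypothetical placeholders, not Facebook’s actual values; the point is only to show how raising the weight on comments and Reactions relative to Likes can reorder the same two posts.

```python
from typing import Dict, List

Interactions = Dict[str, int]
Weights = Dict[str, float]

# Hypothetical weights, for illustration only (not Facebook's real values)
LIKE_HEAVY: Weights = {"likes": 1.0, "reactions": 0.5, "comments": 0.5, "shares": 0.5}
COMMENT_HEAVY: Weights = {"likes": 1.0, "reactions": 2.0, "comments": 5.0, "shares": 2.0}


def score(interactions: Interactions, weights: Weights) -> float:
    """Weighted sum of interaction counts; missing interaction types count as zero."""
    return sum(weights[kind] * interactions.get(kind, 0) for kind in weights)


def rank(posts: Dict[str, Interactions], weights: Weights) -> List[str]:
    """Return post names ordered from highest to lowest weighted score."""
    return sorted(posts, key=lambda name: score(posts[name], weights), reverse=True)


posts = {
    "baby photo": {"likes": 200, "reactions": 10, "comments": 5, "shares": 2},
    "angry hot take": {"likes": 30, "reactions": 60, "comments": 40, "shares": 10},
}

print(rank(posts, LIKE_HEAVY))     # ['baby photo', 'angry hot take']
print(rank(posts, COMMENT_HEAVY))  # ['angry hot take', 'baby photo']
```

Nothing about either post changes between the two runs; only the weights do. A post built to provoke comments and angry Reactions overtakes one that people simply Like, which mirrors the dynamic described in the leaked report.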

The suggestion, then, based on this internal data, is that Facebook knew this change had ramped up divisive content, but opted not to revert it, or implement another update, because engagement, a key measure of its business success, had indeed increased as a result.

In this sense, removing the algorithmic motivation would make sense. Or maybe you could look to remove algorithmic incentives for certain post types, like political discussion, while still maximizing the reach of more engaging posts from friends, catering both to engagement goals and to concerns about divisiveness.

That’s what Facebook’s Dave Gillis, who works on the platform’s product safety team, has pointed to in a tweet thread responding to the revelations.

As per Gillis:

“At the end of the WSJ piece about algorithmic feed ranking, it’s mentioned – almost in passing – that we switched away from engagement-based ranking for civic and health content in News Feed. But hang-on – that’s kind of a big deal, no? It’s probably reasonable to rank, say, cat videos and baby photos by likes etc. but handle other kinds of content with greater care. And that is, in fact, what our teams advocated to do: use different ranking signals for health and civic content, prioritizing quality + trustworthiness over engagement. We worked hard to understand the impact, get leadership on board – yep, Mark too – and it’s an important change.”

This could be a way forward: using different ranking signals for different types of content may enable optimal amplification, boosting beneficial user engagement, while also lessening the motivation for certain actors to post divisive material in order to feed into algorithmic reach.
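A content-type split like the one Gillis describes could, in principle, look something like the sketch below. The `topic` labels, the `quality` score (assumed to come from some separate trustworthiness assessment, on a 0 to 1 scale), and the scaling factor are all hypothetical; Facebook hasn’t published how its civic and health ranking actually works.

```python
from dataclasses import dataclass

# Topics that, in this hypothetical sketch, are ranked on quality rather than engagement
SENSITIVE_TOPICS = {"civic", "health"}


@dataclass
class Post:
    name: str
    topic: str         # hypothetical content-classifier label
    engagement: float  # hypothetical weighted engagement score
    quality: float     # hypothetical trustworthiness score in [0, 1]


def ranking_score(post: Post) -> float:
    """Rank everyday content on engagement, civic and health content on quality."""
    if post.topic in SENSITIVE_TOPICS:
        # Assumed scaling so quality scores are comparable with engagement scores
        return post.quality * 100.0
    return post.engagement


feed = [
    Post("cat video", "entertainment", engagement=80.0, quality=0.5),
    Post("viral health rumor", "health", engagement=500.0, quality=0.1),
    Post("public health notice", "health", engagement=20.0, quality=0.9),
]

ranked = sorted(feed, key=ranking_score, reverse=True)
print([p.name for p in ranked])
# ['public health notice', 'cat video', 'viral health rumor']
```

The rumor’s high engagement no longer helps it, because health content is judged on the quality signal instead. The hard part, which this sketch glosses over, is producing reliable `topic` and `quality` labels in the first place, in every language the platform serves.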

Would that work? It’s hard to say. People would still be able to share posts, and to comment on and redistribute material online; there are still many ways that amplification can happen outside of the algorithm itself.

In essence, there are merits to both suggestions: that social platforms could treat different types of content differently, or that algorithms could be eliminated to reduce the amplification of such material.

And as Haugen notes, focusing on the systems themselves is important, because content-based solutions open up various complexities when the material is posted in other languages and regions.

“In the case of Ethiopia, there are 100 million people and six languages. Facebook only supports two of those languages for integrity systems. This strategy of focusing on language-specific, content-specific systems for AI to save us is doomed to fail.”

Maybe, then, removing algorithms, or at least changing the regulations around how algorithms operate, would be an optimal solution, which could help to reduce the impacts of negative, rage-inducing content across the social media sphere.

But then we’re back to the original problem that Facebook’s algorithm was designed to solve. Back in 2015, Facebook explained that it needed the News Feed algorithm not only to maximize user engagement, but also to help ensure that people saw the updates most relevant to them.

As it explained, the average Facebook user, at that time, had around 1,500 posts eligible to appear in their News Feed on any given day, based on Pages they’d liked and their personal connections – while for some more active users, that number was more like 15,000. It’s simply not possible for people to read every single one of these updates every day, so Facebook’s key focus with the initial algorithm was to create a system that uncovered the best, most relevant content for each individual, in order to provide users with the most engaging experience, and subsequently keep them coming back.

As Facebook’s chief product officer Chris Cox explained to Time Magazine:

“If you could rate everything that happened on Earth today that was published anywhere by any of your friends, any of your family, any news source, and then pick the 10 that were the most meaningful to know today, that would be a really cool service for us to build. That is really what we aspire to have News Feed become.”
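The selection task described here, picking a handful of posts out of roughly 1,500 candidates, is essentially a top-k problem. Below is a minimal sketch using Python’s standard `heapq.nlargest`; the relevance scores are randomly generated stand-ins, since how Facebook actually estimates relevance isn’t described here.

```python
import heapq
import random
from typing import List, Tuple


def top_k_feed(candidates: List[Tuple[str, float]], k: int = 10) -> List[Tuple[str, float]]:
    """Return the k candidates with the highest relevance score, best first."""
    return heapq.nlargest(k, candidates, key=lambda item: item[1])


# Hypothetical candidate pool: 1,500 eligible posts with made-up relevance scores
random.seed(42)
candidates = [(f"post-{i}", random.random()) for i in range(1500)]

feed = top_k_feed(candidates, k=10)
print(len(candidates), "candidates ->", len(feed), "shown")  # 1500 candidates -> 10 shown
```

Everything outside the top `k` is simply never seen, which is why the choice of scoring function, engagement-based or otherwise, matters so much.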

The News Feed approach has evolved a lot since then, but the fundamental challenge that it was designed to solve remains. People have too many connections, they follow too many Pages, they’re members of too many groups to get all of their updates, every day. Without the feed algorithm, they will miss relevant posts, relevant updates like family announcements and birthdays, and they simply won’t be as engaged in the Facebook experience.

Without the algorithm, Facebook will lose out, by failing to optimize for audience desires – and as highlighted in another of the reports shared as part of the Facebook Files, it’s actually already seeing engagement declines in some demographic subsets.

You can imagine that if Facebook were to eliminate the algorithm, or were forced to change direction on this, those declines would only get worse over time.

Zuck and Co. are therefore not likely to be keen on that solution, so a compromise, like the one proposed by Gillis, may be the best that can be expected. But that comes with its own flaws and risks.

Either way, it is worth noting that the focus of the debate needs to shift to algorithms more broadly, not just Facebook alone, and to whether there is actually a viable, workable way to change the incentives around algorithm-based systems to limit the distribution of more divisive elements.

Because that is a problem, no matter how Facebook or anyone else tries to spin it. That’s why Haugen’s stance is important: it may well be the spark that leads us to a new, more nuanced debate around this key element.