Twitter Previews Potential New Account Safety Tools, Launches New Update for Birdwatch

Twitter’s looking to provide more options and transparency around its rule violations and moderation processes, with a range of new tools currently under consideration that could give users more ways to understand, and respond to, each enforcement action.

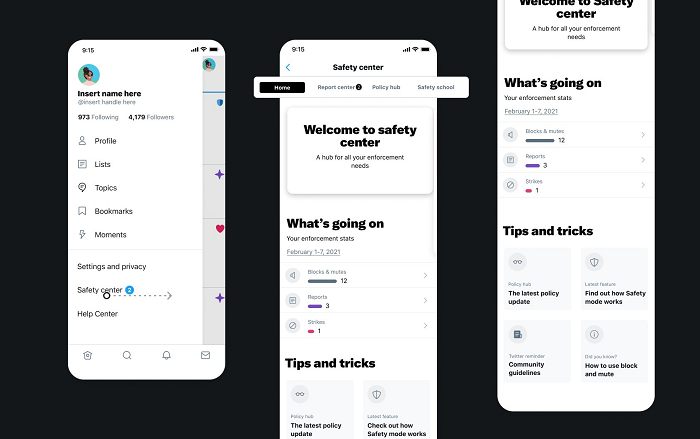

The first idea is a new Safety Center, which Twitter says would be ‘a one-stop shop for safety tools’.

As you can see here, the Safety Center concept, which would be accessible via the Twitter menu, would give users a full overview of any reports, blocks, mutes and strikes that they currently have in place on their account. The Safety Center would also give Twitter a means to provide updates on any outstanding reports (via the ‘Report Center’ tab).

The platform would also alert users when they’re close to being suspended for policy violations, which may prompt them to rethink their behavior. The Safety Center would additionally include a link to Twitter’s policy guidelines.

The impetus here is to make more users aware of Twitter’s rules, and keep them updated on their activity. On one hand, that could raise awareness, but it may also give people more leeway to push the boundaries, since they’d have a tool they could check constantly to see how close they are to suspension, and when they’d need to dial it back.

Twitter’s second concept is a new Policy Hub, which would provide a full overview of its rules and policies.

By making these documents more readily accessible, it could help to set clearer parameters around where Twitter draws the line – though its effectiveness, of course, would be dependent on users actually checking it.

A more direct concept, which could be more effective, is ‘Safety School’, which would give users a chance to avoid suspension for platform violations if they instead take a short course or quiz to learn about the rule/s that they broke.

It’s difficult to make out the full detail in this image, but essentially, the process would add a new warning to violating tweets which reads (at this stage):

“Heads up. Your latest Tweet breaks our policy on hateful conduct. You need to attend Safety School to avoid being suspended.”

Users would then be put through an overview of the specific rule that they broke, which could help to raise awareness of platform policies.

Another interesting concept Twitter is considering is a new ‘Weekly Safety Report’, which would show users how many people in their network are using its block and mute tools.

That could make it more acceptable for you to do the same. If you knew that many people on the platform, including people you know, are using these tools, that could reduce the stigma around cutting off potentially damaging connections.

Or it could be a source of concern – maybe these people are muting you.

As you can see in the example above, the display would also tell you how many of the accounts in your network regularly violate platform rules, so you know how many bad eggs you have in your Twitter flock.

Twitter’s also working on a new in-stream appeals process for rule violations.

That could make it easier for users to take action when they feel a restriction has been applied unfairly or incorrectly, and could tarnish their account’s standing.

The peer elements here could add to the transparency and accountability of Twitter’s rule enforcement, though they may not be overly effective for users who have large followings that they don’t regularly interact with. Since some people have a lot of followers they’re not heavily engaged with, the tools that analyze your network would ideally only take into account the accounts you follow, as opposed to those that follow you.

That would, logically, be how the system works, but it depends on how Twitter defines ‘your network’ in this context.

On a related note, Twitter has also announced a new addition to its Birdwatch crowd-sourced fact-checking process, which will prompt users to review Birdwatch notes to add more weight to ratings.

Today we’re adding a new “Needs your help” tab to the Birdwatch Home page. This tab will surface Tweets that are typically rated by your fellow Birdwatchers but not always by you. pic.twitter.com/YcBrgdaYjp

— Birdwatch (@birdwatch) March 25, 2021

Twitter says that this addition will ensure a ‘more diverse range of feedback’ on Birdwatch alerts, increasing the accuracy of such reports.

It’s hard to tell whether the Birdwatch project will work, but it’s an interesting concept, using Twitter’s user base to better detect low-quality or false content in order to reduce its overall impact and reach.

In some ways, that’s similar to Reddit, which relies on its user community to upvote and downvote content, a process that generally weeds out things like false reports. Interestingly, Twitter’s also considering upvote and downvote options for tweets, so it seems that the platform is indeed looking to Reddit as a potential inspiration for its efforts on this front.

That, again, makes sense, but it’s hard to tell whether Twitter’s user community is as invested in content quality on the platform as Redditors are within their subreddits, where they likely feel a stronger sense of ownership and community.

Maybe, through additions like this, Twitter can build on that, which would make tools like Birdwatch a more valuable addition.