YouTube Outlines Election Security Efforts as 2020 Presidential Race Begins

With the 2020 US Presidential race officially getting underway this week, YouTube has published a new post which outlines how it’s working to improve election security, and limit the spread of misinformation across its platform.

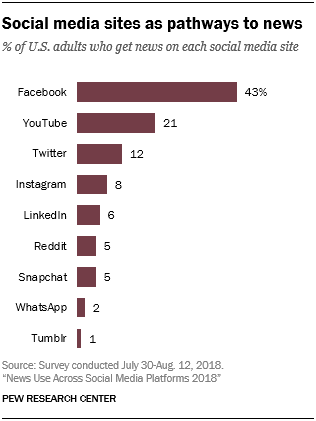

YouTube’s focus here is important. While Facebook is at the forefront of the push against misinformation, research shows that YouTube is the second most-used social platform for news content, and with more than 2 billion monthly active users, its impact is significant.

First off, YouTube notes that it will remove digitally altered content. This alludes in some ways to the now-infamous Nancy Pelosi video that Facebook refused to take down, but it likely also points to the rising use of deepfakes, which all platforms are working to get ahead of before they become a more significant concern.

As per YouTube, the platform will not allow:

- Content that has been technically manipulated or doctored in a way that misleads users (beyond clips taken out of context) and may pose a serious risk of egregious harm; for example, a video that has been technically manipulated to make it appear that a government official is dead.

- Content that aims to mislead people about voting or the census processes, like telling viewers an incorrect voting date.

- Content that advances false claims related to the technical eligibility requirements for current political candidates and sitting elected government officials to serve in office, such as claims that a candidate is not eligible to hold office based on false information about citizenship status requirements to hold office in that country.

YouTube says that any content that violates these terms will be removed. It will also remove channels that impersonate, misrepresent, or conceal their association with a government, as well as those that use artificial means to inflate their view counts, likes, and other engagement metrics.

YouTube says that it’s also working with Google’s Threat Analysis Group to identify bad actors and terminate their channels and accounts, an effort that has already led to the removal of thousands of accounts and videos linked to such groups.

In addition, YouTube has reiterated its efforts to amplify “authoritative voices”, which it first outlined back in December.

YouTube notes that while timeliness matters most for topics like music and entertainment:

“…for subjects such as news, science and historical events, where accuracy and authoritativeness are key, the quality of information and context matter most – much more than engagement.”

As such, YouTube has been working to ensure that videos from more recognized publishers are surfaced in related searches, including in the “Watch next” panels displayed alongside news content.

YouTube’s recommendation algorithms have previously been identified as a source of potential concern in this respect. Last June, The New York Times published an article which looked, in detail, at how a young American man was radicalized by YouTube content, sinking further and further down the rabbit hole with each tap on his ‘Up Next…’ recommendations.

YouTube’s now working to address this with the addition of information panels and previews designed to guide users to more reputable, trustworthy information.

As with all platforms, YouTube’s efforts on this front are highly important in limiting the spread of misinformation and disinformation campaigns, and in reducing their impact on voter behavior. And while it’s difficult to quantify the full impact that such campaigns can have, and have had, it is clear that social platforms are shaping political perceptions and playing a larger role in informing voters on key issues.

There’s a reason why the Trump campaign spent $20 million on Facebook ads in 2019 alone. In the modern era, elections are being won and lost on social platforms, and the policy decisions that follow end up affecting the entire world. The possibility that at least some of those decisions are being shaped by incorrect information is a major concern, so it’s good to see the platforms working to improve in this respect.

Yet concerns remain. Social platforms are still incentivized to maximize engagement in order to boost their market perception, which means they may still look to promote, or at least host, a level of controversial content in order to spark debate and further their own performance goals.

That’s actually why we should welcome moves like Facebook’s shift to a new ‘Family of Apps’ metric for its performance reports. Rather than showcasing usage stats for each of its individual apps, Facebook is moving towards a metric that represents total, cumulative usage across Facebook, WhatsApp, Instagram and Messenger. That figure currently sits at 2.89 billion monthly actives.

Some have been critical of this move, suggesting it may be an attempt to hide predicted performance declines on Facebook’s flagship app. But if Facebook can actually shift perception away from active usage, it could make better decisions for the health of its users, rather than being driven by bottom-line pressure.

But then again, advertisers want maximum reach, so the platforms need to show that X many people are using their apps for Y hours each day, or a similar metric.

Competing commercial goals and the growing use of social platforms for information make for an awkward balance, one that each platform, as YouTube’s update highlights, is working to maintain. But that balance carries inherent difficulties, and we’re likely to see more of them play out in 2020.

In the meantime, we can only hope that efforts like this are improving the flow of accurate voter information.