How to Increase Survey Completion Rate With 5 Top Tips

Collecting high-quality data is crucial to making strategic observations about your customers. Researchers have to consider the best ways to design their surveys and then how to increase survey completion, because it makes the data more reliable.

I’m going to explain how survey completion plays into the reliability of data. Then, we’ll get into how to calculate your survey completion rate versus the number of questions you ask. Finally, I’ll offer some tips to help you increase survey completion rates.

My goal is to make your data-driven decisions more accurate and effective. And just for fun, I’ll use cats in the examples because mine won’t stop walking across my keyboard.

Why Measure Survey Completion

Let’s set the scene: We’re inside a laboratory with a group of cat researchers. They’re wearing little white coats and goggles — and they desperately want to know what other cats think of various fish.

They’ve written up a 10-question survey and invited 100 cats from all socioeconomic rungs — rough and hungry alley cats all the way up to the ones that thrice daily enjoy their Fancy Feast from a crystal dish.

Now, survey completion rates are measured with two metrics: response rate and completion rate. Combining those metrics determines what percentage, out of all 100 cats, finished the entire survey. If all 100 give their full report on how delicious fish is, you’d achieve 100% survey completion and know that your information is as accurate as possible.

But the truth is, nobody achieves 100% survey completion, not even golden retrievers.

With this in mind, here’s how it plays out:

- Let’s say 10 cats never show up for the survey because they were sleeping.

- Of the 90 cats that started the survey, only 25 got through a few questions. Then, they wandered off to knock over drinks.

- Thus, 90 cats gave some level of response, and 65 completed the survey (90 – 25 = 65).

- Unfortunately, those 25 cats who only partially completed the survey had important opinions — they like salmon way more than any other fish.

The cat researchers achieved 72% survey completion (65 divided by 90), but their survey will not reflect the 25% of cats — a full quarter! — that vastly prefer salmon. (The other 65 cats had no statistically significant preference, by the way. They just wanted to eat whatever fish they saw.)

Now, the Kitty Committee reviews the research and decides, well, if they like any old fish they see, then offer the least expensive ones so they get the highest profit margin.

CatCorp, their competitors, ran the same survey; however, they offered all 100 participants their own glass of water to knock over — with a fish inside, even!

Only 10 of their 100 cats started the survey but did not finish it. And the same 10 lazy cats from the other survey didn’t show up to this one, either.

So, there were 90 respondents and 80 completed surveys. CatCorp achieved a completion rate of about 89% (80 divided by 90), which recorded that most cats don’t care, but some really want salmon. CatCorp made salmon available and enjoyed higher profits than the Kitty Committee.
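If you’d like to check the cat math yourself, here’s a minimal Python sketch. The `SurveyRun` class and its field names are my own illustration, not part of any survey tool. It also bakes in one assumption the story only implies: the same 25 salmon fans took both surveys, so at CatCorp 15 of them finished (80 completers minus the 65 cats with no preference).

```python
from dataclasses import dataclass


@dataclass
class SurveyRun:
    """One run of the fish survey (hypothetical structure for illustration)."""
    started: int                # cats that answered at least one question
    completed: int              # cats that answered every question
    salmon_fans_completed: int  # ASSUMPTION: salmon fans among the finishers

    @property
    def completion_rate(self) -> float:
        # Completed surveys as a percentage of respondents.
        return 100 * self.completed / self.started

    @property
    def salmon_share(self) -> float:
        # Percentage of finished surveys that voice the salmon preference.
        return 100 * self.salmon_fans_completed / self.completed


# Kitty Committee: all 25 salmon fans wandered off before finishing.
kitty = SurveyRun(started=90, completed=65, salmon_fans_completed=0)
# CatCorp: only 10 cats dropped out, so 15 salmon fans finished (assumed).
catcorp = SurveyRun(started=90, completed=80, salmon_fans_completed=15)

for name, run in [("Kitty Committee", kitty), ("CatCorp", catcorp)]:
    print(f"{name}: {run.completion_rate:.1f}% completion, "
          f"{run.salmon_share:.2f}% of results mention salmon")
```

Same cats, same opinions. The only thing that changed is how many of them made it to the last question.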

So you see, the higher your survey completion rates, the more reliable your data is. From there, you can make solid, data-driven decisions that are more accurate and effective. That’s the goal.

We measure the completion rates to be able to say, “Here’s how sure we can feel that this information is accurate.”

And if there’s a Maine Coon tycoon looking to invest, will they be more likely to do business with a cat food company whose decision-making metrics are 72% accurate or 89%? I suppose it could depend on who’s serving salmon.

What is survey completion rate?

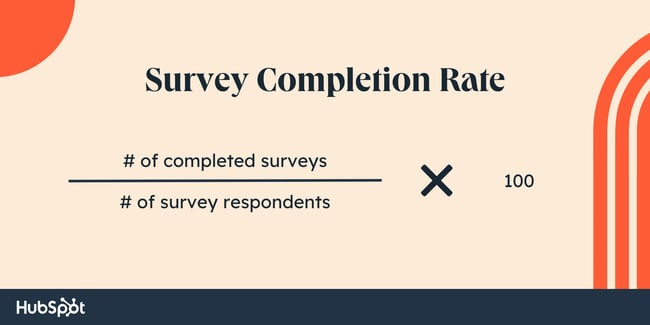

Survey completion rate refers to the number of completed surveys divided by the number of total survey respondents. The result is then multiplied by 100 to get a percentage. Survey respondents include those who completed the survey, and those who started the survey but didn’t complete it.

While math was not my strongest subject in school, I had the great opportunity to take several college-level research and statistics classes, and the software we used did the math for us. That’s why I used 100 cats — to keep the math easy so we could focus on the importance of building reliable data.

Now, we’re going to talk equations and use more realistic numbers. Here’s the formula:

Completion rate = (# of completed surveys ÷ # of survey respondents) × 100

So, we need to take the number of completed surveys and divide that by the number of people who responded to at least one of your survey questions. Even just one question answered qualifies them as a respondent (versus nonrespondent, i.e., the 10 lazy cats who never show up).
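Here’s a small sketch of how that sorting might look if your survey tool gives you a count of answered questions per person. Everything in it is made up for illustration: the 10-question length, the `classify` helper, and the sample data.

```python
from collections import Counter

TOTAL_QUESTIONS = 10  # hypothetical survey length


def classify(answered: int, total_questions: int = TOTAL_QUESTIONS) -> str:
    """Label one invitee by how many questions they answered."""
    if answered == 0:
        return "nonrespondent"  # never answered anything
    if answered < total_questions:
        return "partial"        # a respondent, but not a completion
    return "complete"


# Hypothetical data: questions answered by each of 10 invitees.
answers_per_person = [0, 10, 3, 10, 1, 0, 10, 7, 10, 10]

counts = Counter(classify(n) for n in answers_per_person)

# Respondents are everyone who answered at least one question.
respondents = counts["partial"] + counts["complete"]
completion_rate = 100 * counts["complete"] / respondents

print(dict(counts))
print(f"Completion rate: {completion_rate:.1f}%")  # 5 of 8 respondents -> 62.5%
```

Notice that the two nonrespondents never enter the completion rate at all. They only show up when you calculate the response rate.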

Now, you’re running an email survey for, let’s say, Patton Avenue Pet Company. We’ll guess that the email list has 5,000 unique addresses to contact. You send out your survey to all of them.

Your analytics data reports that 3,000 people responded to one or more of your survey questions. Then, 1,200 of those respondents actually completed the entire survey.

3,000/5,000 = 0.6 = 60%. That’s your pool of survey respondents who answered at least one question. That sounds pretty good! But some of them didn’t finish the survey. You need to know the percentage of people who completed the entire survey. So here we go:

Completion rate equals the # of completed surveys divided by the # of survey respondents.

Completion rate = (1,200/3,000) = 0.40 = 40%

Voila, 40% of your respondents did the entire survey.

Response Rate vs. Completion Rate

Okay, so we know why the completion rate matters and how we find the right number. But did you also hear the term response rate? They are completely different figures based on separate equations, and I’ll show them side by side to highlight the differences.

- Completion Rate = # of Completed Surveys divided by # of Respondents

- Response Rate = # of Respondents divided by Total # of surveys sent out

Here are examples using the same numbers from above:

Completion Rate = (1,200/3,000) = 0.40 = 40%

Response Rate = (3,000/5,000) = 0.60 = 60%

So, they are different figures that describe different things:

- Completion rate: The percentage of your respondents that completed the entire survey. As a result, it indicates how sure we are that the information we have is accurate.

- Response rate: The percentage of people who responded in any way to our survey questions.
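To see the two figures side by side, here’s a short sketch using the Patton Avenue Pet Company numbers. The `rate` helper is just a name I picked. The last figure combines both metrics to show what share of everyone you emailed finished the whole survey.

```python
def rate(part: int, whole: int) -> float:
    """Return part/whole as a percentage."""
    if whole <= 0:
        raise ValueError("whole must be a positive number")
    return 100 * part / whole


sent = 5_000         # surveys emailed out
respondents = 3_000  # answered at least one question
completed = 1_200    # answered every question

response_rate = rate(respondents, sent)          # 60.0
completion_rate = rate(completed, respondents)   # 40.0
completed_of_all_sent = rate(completed, sent)    # 24.0

print(f"Response rate:   {response_rate:.0f}%")
print(f"Completion rate: {completion_rate:.0f}%")
print(f"Finished, out of everyone contacted: {completed_of_all_sent:.0f}%")
```

That last number is simply the two rates multiplied together (0.60 × 0.40 = 0.24), which is why a healthy-looking 60% response rate can still leave you with full answers from fewer than a quarter of your list.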

The follow-up question is: How can we make this number as high as possible so that our data set gives a truer, more complete picture of the population we surveyed?

There’s more to learn about response rates and how to bump them up as high as you can, but we’re going to keep trucking with completion rates!

What’s a good survey completion rate?

That is a heavily loaded question. People in our industry have to say, “It depends,” far more than anybody wants to hear it, but it depends. Sorry about that.

There are lots of factors at play, such as what kind of survey you’re doing, what industry you’re doing it in, if it’s an internal or external survey, the population or sample size, the confidence level you’d like to hit, the margin of error you’re willing to accept, etc.
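To make a few of those factors concrete, here’s a rough sketch of a textbook sample-size calculation (Cochran’s formula with a finite population correction). It doesn’t come from any of the sources quoted in this post, and it assumes a simple random sample, which real survey respondents rarely are. The function name and default values are placeholders, so treat the output as a ballpark, not a target.

```python
import math
from statistics import NormalDist


def required_completes(
    population: int,
    confidence: float = 0.95,       # confidence level you'd like to hit
    margin_of_error: float = 0.05,  # margin of error you're willing to accept
    proportion: float = 0.5,        # 0.5 is the most conservative guess
) -> int:
    """Estimate how many completed surveys a population of this size calls for."""
    # z-score for a two-sided interval at the chosen confidence level.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Cochran's formula for a very large population.
    n0 = z**2 * proportion * (1 - proportion) / margin_of_error**2
    # Finite population correction shrinks the requirement for small lists.
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


# A 5,000-address email list at 95% confidence and a 5% margin of error.
print(required_completes(5_000))  # 357
```

Under those assumptions, a 5,000-person list would call for roughly 357 completed surveys. Tighten the margin of error or raise the confidence level, and that number climbs quickly.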

But you can’t really get a high completion rate unless you increase response rates first.

So instead of focusing on what’s a good completion rate, I think it’s more important to understand what makes a good response rate. Aim high enough, and survey completions should follow.

I checked in with the Qualtrics community and found this discussion about survey response rates:

“Just wondering what are the average response rates we see for online B2B CX surveys? […]

Current response rates: 6%–8%… We are looking at boosting the response rates but would first like to understand what is the average.”

The best answer came from a government service provider that works with businesses. The poster notes that their service is free to use, so they get very high response rates.

“I would say around 30–40% response rates to transactional surveys,” they write. “Our annual pulse survey usually sits closer to 12%. I think the type of survey and how long it has been since you rendered services is a huge factor.”

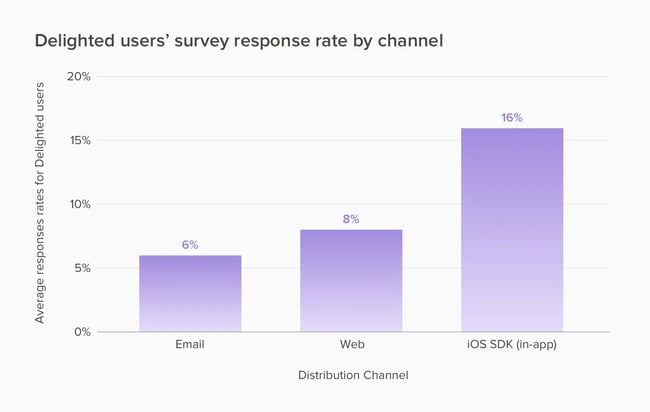

Since this conversation, “Delighted” (the Qualtrics blog) reported some fresher data:

The takeaway here is that response rates vary widely depending on the channel you use to reach respondents. On the upper end, the Qualtrics blog reports that customers had 85% response rates for employee email NPS surveys and 33% for email NPS surveys.

A good response rate, the blog writes, “ranges between 5% and 30%. An excellent response rate is 50% or higher.”

This echoes reports from Customer Thermometer, which marks a response rate of 50% or higher as excellent. Response rates between 5% and 30% are much more typical, the report notes. High response rates are driven by a strong motivation to complete the survey or a personal relationship between the brand and the customer.

If your business does little person-to-person contact, you’re out of luck. Customer Thermometer says you should expect responses on the lower end of the scale. The same goes for surveys distributed from unknown senders, which typically yield the lowest level of responses.

According to SurveyMonkey, surveys where the sender has no prior relationship with the recipients have response rates of 20% to 30% on the high end.

Whatever numbers you do get, keep making those efforts to bring response rates up. That way, you have a better chance of increasing your survey completion rate. How, you ask?

Tips to Increase Survey Completion

If you want to boost survey completions among your customers, try the following tips.

1. Keep your survey brief.

We shouldn’t cram lots of questions into one survey, even if it’s tempting. Sure, it’d be nice to have more data points, but random people will probably not hunker down for 100 questions when we catch them during their half-hour lunch break.

Keep it short. Pare it down in any way you can.

Survey completion rate versus number of questions is an inverse relationship: the more questions you ask, the fewer people will answer them all. If you have the budget to pay the respondents, it’s a different story, at least to a degree.

“If you’re paying for survey responses, you’re more likely to get completions of a decently-sized survey. You’ll just want to avoid survey lengths that might tire, confuse, or frustrate the user. You’ll want to aim for quality over quantity,” says Pamela Bump, Head of Content Growth at HubSpot.

2. Give your customers an incentive.

For instance, if they’re cats, you could give them a glass of water with a fish inside.

Offer incentives that make sense for your target audience. If they feel like they are being rewarded for giving their time, they will have more motivation to complete the survey.

This can even accomplish two things at once — if you offer promo codes, discounts on products, or free shipping, it encourages them to shop with you again.

3. Keep it smooth and easy.

Keep your survey easy to read. Simplifying your questions has at least two benefits: People will understand the question better and give you the information you need, and people won’t get confused or frustrated and just leave the survey.

4. Know your customers and how to meet them where they are.

Here’s an anecdote about understanding your customers and learning how best to meet them where they are.

Early on in her role, Pamela Bump, HubSpot’s Head of Content Growth, conducted a survey of HubSpot Blog readers to learn more about their expertise levels, interests, challenges, and opportunities. Once published, she shared the survey with the blog’s email subscribers and a top reader list she had developed, aiming to receive 150+ responses.

“When the 20-question survey was getting a low response rate, I realized that blog readers were on the blog to read — not to give feedback. I removed questions that wouldn’t serve actionable insights. When I reshared a shorter, 10-question survey, it passed 200 responses in one week,” Bump shares.

5. Gamify your survey.

Make it fun! Brands have started turning surveys into eye candy with entertaining interfaces so they’re enjoyable to interact with.

Your respondents could unlock micro incentives as they answer more questions. You can word your questions in a fun and exciting way so it feels more like a BuzzFeed quiz. Someone saw the opportunity to make surveys into entertainment, and your imagination — well, and your budget — is the limit!

Your Turn to Boost Survey Completion Rates

Now, it’s time to start surveying. Remember to keep your user at the heart of the experience. Value your respondents’ time, and they’re more likely to give you compelling information. Creating short, fun-to-take surveys can also boost your completion rates.

Editor’s note: This post was originally published in December 2010 and has been updated for comprehensiveness.
