SEO

What’s New In SE Ranking’s On-Page SEO Checker?

When it comes to SEO, on-page elements are factors that directly affect a site’s performance. This is why over the years, more and more tech companies are launching their own on-page SEO checker tool. These tools help make it easier for people who want to jumpstart SEO for their businesses. One of our favorite SEO tools to use, SE Ranking, takes this to another level by developing unique features that work cohesively with the other datasets in their toolbox.

SE Ranking for SEO Optimization

Currently, SE Ranking’s rank tracking tool is widely used among SEO professionals. To make the platform more cohesive, they have developed a broad range of much-needed functionalities to analyze and organize your SEO efforts.

Their new On-Page SEO Checker is an awesome addition that can help SEO specialists all over the world build an actionable plan to rank in search engines like Google.

The tool aims to analyze how a specific webpage can be optimized for its target keyword. So, I took a look at what it could monitor on a page, what makes it different from other on-page SEO tools out there, and how it helps improve the on-page SEO of a website.

Today, I’m going to share my experience with SE Ranking’s new on-page SEO tool.

SE Ranking’s On-Page SEO Checker in Action

To fully see how accurate and extensive the tool is, I used it to check a new website my team is currently working on. I entered the URL of the webpage and the keyword I aim to rank it for to get the audit started.

One thing to note is that the checker analyzes only one search query at a time, so the results it shows will differ for each query you enter.

Here’s a rundown of some of our interesting finds:

It Scores Based On A Comprehensive Metric

The first thing SE Ranking’s on-page SEO checker shows is the webpage’s overall quality score. This is based on over 70 parameters Google considers when it ranks pages. The quality score is more than just an estimate of the webpage’s performance: SE Ranking’s scoring system also gauges which factors matter more than others.

According to SE Ranking “…metrics that have a strong impact on rankings have a greater impact on the page’s overall quality score, while metrics that are not decisive are given less weight.” (source: SE Ranking)

This means the score reflects not only the number of checks, warnings, and errors the tool detects across the metrics in its analysis, but also how much weight each of those metrics carries.

To help you prioritize your optimizations better, you can explore these categories one by one. For example, the URLs listed as ‘Errors’ require more urgent solutions, so you can start with them.
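To make the weighting idea concrete, here is a minimal sketch of how a weighted quality score could be computed. SE Ranking doesn’t publish its formula, so the check names, the weights, and the half credit given to warnings are all hypothetical assumptions.

```python
from dataclasses import dataclass

# Hypothetical credit per check status: passed = full credit,
# warning = half credit, error = no credit. These values are assumptions.
STATUS_CREDIT = {"passed": 1.0, "warning": 0.5, "error": 0.0}


@dataclass
class Check:
    name: str
    status: str    # "passed", "warning", or "error"
    weight: float  # hypothetical importance: higher means stronger ranking impact


def quality_score(checks: list[Check]) -> float:
    """Return a 0-100 score in which heavier-weighted checks move the score more."""
    total_weight = sum(c.weight for c in checks)
    if total_weight == 0:
        return 0.0
    earned = sum(c.weight * STATUS_CREDIT[c.status] for c in checks)
    return round(100 * earned / total_weight, 1)


checks = [
    Check("keyword in title", "error", weight=5),
    Check("meta description length", "warning", weight=2),
    Check("image alt attributes", "passed", weight=1),
]
print(quality_score(checks))
```

In this made-up example, fixing the heavily weighted title error alone would raise the score to 87.5, while fixing only the lighter meta description warning would reach just 37.5, which illustrates why tackling high-impact errors first pays off.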

It Provides Suggestions to Optimize Metadata

Some of the most thorough diagnostics I found in the tool are the ones covering the webpage’s metadata. Page titles, URLs, meta descriptions, headings, and alt attributes are arguably the heart of SEO. They are among the most important factors Google looks at to understand the content of your webpage.

What makes SE Ranking’s on-page SEO checker tool stand out is that it can detect issues that carry weight when it comes to keyword rankings. This is because the tool focuses on giving an in-depth analysis of on-page elements.

While other tools only look at the number of characters in your metadata, SE Ranking can assess if you have integrated your keywords within them. This ensures that your webpage is optimized in a way that caters to the keyword you want to rank it for.
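The difference between a length-only check and a keyword-aware check can be sketched in a few lines of Python. The character ranges used here (30-60 for titles, 70-160 for meta descriptions) are common rules of thumb rather than SE Ranking’s published thresholds, and `audit_metadata` is a hypothetical helper.

```python
def audit_metadata(title: str, description: str, keyword: str) -> list[str]:
    """Return human-readable issues for a page's title and meta description."""
    issues = []
    kw = keyword.lower()
    # Length checks: where many basic tools stop (assumed rule-of-thumb ranges).
    if not 30 <= len(title) <= 60:
        issues.append(f"Title length {len(title)} is outside 30-60 characters")
    if not 70 <= len(description) <= 160:
        issues.append(f"Meta description length {len(description)} is outside 70-160 characters")
    # Keyword checks: whether the target query actually appears in each field.
    if kw not in title.lower():
        issues.append("Target keyword missing from title")
    if kw not in description.lower():
        issues.append("Target keyword missing from meta description")
    return issues


print(audit_metadata(
    "Handmade Leather Wallets | Example Shop",
    "Browse slim, durable wallets crafted by hand from full-grain leather. Free shipping on all orders.",
    "leather wallets",
))
```

Both sample fields pass a length-only check, but the keyword-aware check still flags that the meta description never mentions “leather wallets.”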

Another thing I noticed is that, instead of just listing warnings and errors, the tool also suggests how you can fix them. This saves time and effort that you can spend optimizing other areas of your webpage.

It Evaluates for Content Uniqueness

Duplicates in page content are red flags in SEO. The last thing you want to do is publish unoriginal content that lowers your chances of ranking in the search engines. Regularly checking if your content is unique for each page is important.

The checker lists the URLs of the pages your content is similar to, the degree of similarity, and the exact passages that are not unique. This gives you a clearer idea of what to revise and where.
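SE Ranking doesn’t document how it measures similarity. A common textbook technique is to break each text into overlapping word sequences (“shingles”) and compare the two sets with Jaccard similarity; the sketch below uses that approach as a stand-in, with an assumed shingle size of three words and made-up sample sentences.

```python
import re


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Lowercase the text, split it into words, and return every run of `size` words."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a: str, b: str, size: int = 3) -> float:
    """Shared shingles divided by all distinct shingles (0.0 = nothing shared, 1.0 = identical)."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


page = "Our leather wallets are handmade in small batches from full-grain hides."
other = "These leather wallets are handmade in small batches by local artisans."
print(round(jaccard_similarity(page, other), 2))
```

The intersection `sa & sb` is also the set of overlapping passages, so returning it instead of the ratio is one way to highlight which parts of a page are not unique.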

Another useful feature is that the tool also checks the uniqueness of the images on your webpage. This helps you confirm that every aspect of your content, not just the text, is original.

Overall, this category helps make your webpage more attractive to users by ensuring it offers something distinct rather than repetitive.

It Assesses Keyword Usage

Your keyword usage will make or break your SEO efforts for a webpage. This is why on top of using the appropriate keyword, you have to integrate it into your webpage properly. SE Ranking’s checker also analyzes this by looking at the number of times a keyword is used on a webpage. At the same time, it detects which part of the content your keyword is placed in.

The “T” in the green circle stands for the title, “D” for the meta description, and “H1” for the Heading 1. This feature can guide you on where to place your keyword so it appears in the areas that matter.

Another good thing about this feature is it recognizes keyword variations and keyphrases in case you want to diversify your keyword usage.
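A rough sketch of the placement check behind the T, D, and H1 markers, written with Python’s built-in `html.parser`. The class and function names are hypothetical, and the sketch only does a case-insensitive exact match, so unlike SE Ranking it won’t recognize keyword variations.

```python
from html.parser import HTMLParser


class PlacementParser(HTMLParser):
    """Collects the <title>, meta description, and <h1> text of a page."""

    def __init__(self):
        super().__init__()
        self.title, self.description, self.h1 = "", "", ""
        self._capturing = None  # tag whose text is currently being collected

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("title", "h1"):
            self._capturing = tag
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == self._capturing:
            self._capturing = None

    def handle_data(self, data):
        if self._capturing == "title":
            self.title += data
        elif self._capturing == "h1":
            self.h1 += data


def keyword_placement(html: str, keyword: str) -> dict[str, bool]:
    """Report whether the keyword appears in the title (T), meta description (D), and H1."""
    parser = PlacementParser()
    parser.feed(html)
    kw = keyword.lower()
    return {
        "T": kw in parser.title.lower(),
        "D": kw in parser.description.lower(),
        "H1": kw in parser.h1.lower(),
    }


html = """<html><head><title>Leather Wallets Guide</title>
<meta name="description" content="How to choose a wallet."></head>
<body><h1>Choosing Leather Wallets</h1></body></html>"""
print(keyword_placement(html, "leather wallets"))
```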

It Provides A Page Speed Report and Recommendations

Good page speed provides a good user experience. And when Google rolled out its Core Web Vitals update last year, the speed, responsiveness, and visual stability of a webpage became important ranking factors.

SE Ranking makes this easier by skipping the setup process and showing the results directly.

Not only does SE Ranking give the numbers for loading speed, but it also presents specific suggestions that you can work on to improve these numbers.
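For context on what the loading-speed numbers mean, Google publishes “good” and “poor” thresholds for each Core Web Vital. This sketch rates measured values against those thresholds; it uses INP, which replaced FID as the responsiveness metric in March 2024, and the `rate` helper and sample values are hypothetical.

```python
# Google's published Core Web Vitals thresholds: (upper bound of "good", upper bound of "needs improvement").
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "INP": (200, 500),   # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless
}


def rate(metric: str, value: float) -> str:
    """Return 'good', 'needs improvement', or 'poor' for a measured value."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


for metric, value in {"LCP": 3.1, "INP": 150, "CLS": 0.3}.items():
    print(metric, value, rate(metric, value))
```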

In the image below, you’ll see the specific fields where fixes can be applied. The checker also separates its results by device, and the preview on the right of how the page looks on each device is the cherry on top.

When you click ‘Resolve’ on the right side of each category, it will show the files that can be edited to contribute to a better loading speed. The suggestions show which elements you need to compress and how much space will be saved if you do so.
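To illustrate what “how much space will be saved” means, this sketch gzip-compresses a payload and reports the size difference. The CSS sample is made up and highly repetitive, so real files will usually save less, and formats that are already compressed (such as JPEG) gain little from gzip. `compression_savings` is a hypothetical helper.

```python
import gzip


def compression_savings(data: bytes) -> tuple[int, int, float]:
    """Return (original size, gzipped size, percent saved) for a non-empty payload."""
    if not data:
        raise ValueError("payload is empty")
    compressed = gzip.compress(data)
    saved_pct = 100 * (len(data) - len(compressed)) / len(data)
    return len(data), len(compressed), round(saved_pct, 1)


# Hypothetical, deliberately repetitive stylesheet content.
css = b".card { margin: 0; padding: 16px; border: 1px solid #ddd; }\n" * 200
original, compressed, pct = compression_savings(css)
print(f"{original} bytes -> {compressed} bytes ({pct}% smaller)")
```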

With these recommendations, you can make your webpage load faster and navigate more smoothly. Visitors can then find the information they need in less time, which creates a good on-page user experience.

It Can Showcase Off-Page Data

This tool already provides a comprehensive diagnosis of on-page elements, but what makes it even more useful is that it also gives an overview of the webpage’s backlink profile. After all, backlinks also carry a lot of weight in page rankings.

The checker shows the backlink record along with the outbound and internal links found on a webpage.

I think the data would be more useful if it also showed the anchor text and landing page for each backlink, so you could get a more complete overview of your backlinks.

And although it’s good that the tool shows how many of the backlinks are Dofollow links, SE Ranking could refine this further by indicating which ones contribute to your webpage’s ranking.
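The split between internal and outbound links on a page, and the Dofollow/nofollow distinction, can be approximated for a single page with Python’s standard library. This is a minimal sketch: `classify_links` is a hypothetical helper, a link counts as nofollow only if its `rel` attribute contains `nofollow`, and `www.example.com` and `example.com` are treated as different hosts.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects (href, rel) pairs for every <a> tag that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            attrs = dict(attrs)
            if attrs.get("href"):
                self.links.append((attrs["href"], attrs.get("rel") or ""))


def classify_links(html: str, page_url: str) -> dict[str, int]:
    """Count internal vs. outbound and Dofollow vs. nofollow links on one page."""
    parser = LinkCollector()
    parser.feed(html)
    host = urlparse(page_url).netloc
    counts = {"internal": 0, "outbound": 0, "dofollow": 0, "nofollow": 0}
    for href, rel in parser.links:
        parsed = urlparse(urljoin(page_url, href))  # resolves relative links such as "/pricing"
        if parsed.scheme not in ("http", "https"):
            continue  # skip mailto:, tel:, javascript: and similar links
        counts["internal" if parsed.netloc == host else "outbound"] += 1
        counts["nofollow" if "nofollow" in rel.lower().split() else "dofollow"] += 1
    return counts


html = (
    '<a href="/pricing">Pricing</a>'
    '<a href="https://other.org/" rel="nofollow">Source</a>'
    '<a href="https://example.com/blog">Blog</a>'
    '<a href="mailto:hi@example.com">Email</a>'
)
print(classify_links(html, "https://example.com/"))
```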

Aside from looking at the backlinks, the tool also takes a look at how a webpage and its domain are performing on social media, particularly on Facebook and VK (a Russian social networking platform).

Consistent with the other metrics, SE Ranking provides suggestions for improvement such as making social media accounts accessible on the webpage. This will let people on social media see and engage with your content.

Moving forward, it would be useful if SE Ranking included data from other major social media platforms, such as Twitter, Instagram, and LinkedIn, in the assessment. This would let it better evaluate webpage performance across different audiences and help you decide which platforms deserve more effort to make your business and content more visible.

On-Page Tool Comparison: SE Ranking vs. Xenu’s Link Sleuth vs. Screaming Frog

Xenu’s Link Sleuth is one of the older SEO tools used by professionals, and Screaming Frog is another popular tool I love using for on-site crawls. To get a better grasp of what SE Ranking’s On-Page Checker can do, I compared how each of the three tools presents its data and findings.

Findings For Page Speed Data

Xenu’s Link Sleuth doesn’t directly analyze page speed. It came into operation in 2009, and its features don’t seem up-to-date compared to many of the on-page SEO tools my team uses.

The only thing I found that has an impact on a webpage’s loading speed is the ‘Size’ category, which points to large files found on a page. The thing is, it doesn’t indicate the unit of measurement it refers to.

Screaming Frog, on the other hand, can analyze how fast your site is based on a large number of a webpage’s backend elements. For this tool, you have to connect your Google account and get an API key to access certain datasets, such as your page speed findings.

Here’s what it shows before the setup process:

The same data is available in SE Ranking without setting up an API key. This helps if you’re not familiar with the more technical aspects of SEO.
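For reference, the page speed API key Screaming Frog asks for is typically a Google PageSpeed Insights API key. This sketch only builds a request URL for the API’s v5 `runPagespeed` endpoint without sending it; `YOUR_API_KEY` is a placeholder and `psi_request_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, api_key: str, strategy: str = "mobile") -> str:
    """Build (but do not send) a PageSpeed Insights v5 request URL."""
    if strategy not in ("mobile", "desktop"):
        raise ValueError("strategy must be 'mobile' or 'desktop'")
    params = {
        "url": page_url,       # the page to test
        "key": api_key,        # API key created in the Google Cloud console
        "strategy": strategy,  # mobile and desktop are reported separately
        "category": "performance",
    }
    return f"{PSI_ENDPOINT}?{urlencode(params)}"


print(psi_request_url("https://example.com/", "YOUR_API_KEY"))
```

Requesting this URL with any HTTP client returns the report as JSON, which is the setup step SE Ranking spares you.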

Presentation of Content Data

Just like SE Ranking, Xenu’s Link Sleuth can read the content of a webpage. However, it only reads the page title. When it comes to content originality, it doesn’t do much to ensure that a webpage is unique across its domain and the internet.

Since Xenu’s Link Sleuth provides a limited number of categories for its on-page audit, the optimizations you can make to step up your webpage’s SEO are also limited.

Similar to SE Ranking, Screaming Frog has a feature that detects duplicate content issues on a webpage. Here’s how simply Screaming Frog presents duplicate content warnings:

SE Ranking presents this data a bit differently since it also checks if your webpage is unique from other ones available on the internet. It also highlights which specific lines among the pages are similar.

This is a recurring theme that I noticed in my comparison. For each category, SE Ranking provides a brief description of what it is, how it factors in SEO, and what ways you can optimize it.

This makes SE Ranking a beginner-friendly tool that will allow you to enhance your webpage like an expert.

Key Takeaway

SE Ranking’s on-page tool helps optimize your webpage to be unique, accessible, and attractive to visitors. It evaluates even the smallest on-page details that affect your ranking and shows you how to improve them, so you don’t have to figure that out on your own.

The final touch? The results the checker generates can be exported via email or PDF, along with a checklist of the areas on your webpage that need improvement. This way, you can keep working on them without logging in to the SE Ranking website again.

If you’re working on giving your website visibility in the search engines, try out SE Ranking’s On-Page SEO Checker tool for a smooth and comprehensive on-page diagnosis.



How To Write ChatGPT Prompts To Get The Best Results

ChatGPT is a game changer in the field of SEO. This powerful language model can generate human-like content, making it an invaluable tool for SEO professionals.

However, the prompts you provide largely determine the quality of the output.

To unlock the full potential of ChatGPT and create content that resonates with your audience and search engines, writing effective prompts is crucial.

In this comprehensive guide, we’ll explore the art of writing prompts for ChatGPT, covering everything from basic techniques to advanced strategies for layering prompts and generating high-quality, SEO-friendly content.

Writing Prompts For ChatGPT

What Is A ChatGPT Prompt?

A ChatGPT prompt is an instruction or discussion topic a user provides for the ChatGPT AI model to respond to.

The prompt can be a question, statement, or any other stimulus to spark creativity, reflection, or engagement.

Users can use the prompt to generate ideas, share their thoughts, or start a conversation.

ChatGPT prompts are designed to be open-ended and can be customized based on the user’s preferences and interests.

How To Write Prompts For ChatGPT

Start by giving ChatGPT a writing prompt, such as, “Write a short story about a person who discovers they have a superpower.”

ChatGPT will then generate a response based on your prompt. Depending on the prompt’s complexity and the level of detail you requested, the answer may be a few sentences or several paragraphs long.

Use the ChatGPT-generated response as a starting point for your writing. You can take the ideas and concepts presented in the answer and expand upon them, adding your own unique spin to the story.

If you want to generate additional ideas, try asking ChatGPT follow-up questions related to your original prompt.

For example, you could ask, “What challenges might the person face in exploring their newfound superpower?” Or, “How might the person’s relationships with others be affected by their superpower?”
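If you use OpenAI’s API instead of the chat window, follow-up questions work by resending the whole conversation as a list of role/content messages, which is the format the Chat Completions API expects. This sketch only builds that list; `add_turn` is a hypothetical helper, the assistant reply is a placeholder, and no API call is made.

```python
def add_turn(history: list[dict], role: str, content: str) -> list[dict]:
    """Return a new conversation history with one more message appended."""
    if role not in ("system", "user", "assistant"):
        raise ValueError(f"unknown role: {role}")
    return history + [{"role": role, "content": content}]


history = add_turn([], "user", "Write a short story about a person who discovers they have a superpower.")
# Placeholder for ChatGPT's first reply; in a real exchange this comes back from the model.
history = add_turn(history, "assistant", "<story text>")
# The follow-up question is sent together with everything that came before it.
history = add_turn(history, "user", "What challenges might the person face in exploring their newfound superpower?")

for message in history:
    print(message["role"], "->", message["content"][:60])
```

Because the earlier turns travel with each follow-up, the model can answer “the person” questions in the context of the story it already wrote.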

Remember that ChatGPT’s answers are generated by artificial intelligence and may not always be perfect or exactly what you want.

However, they can still be a great source of inspiration and help you start writing.

Must-Have GPTs Assistant

I recommend installing the WebBrowser Assistant created by the OpenAI Team. This tool allows you to add relevant Bing results to your ChatGPT prompts.

This assistant adds the first web results to your ChatGPT prompts for more accurate and up-to-date conversations.

It takes only two clicks to install (click Start Chat).

For example, if I ask, “Who is Vincent Terrasi?”, ChatGPT has no answer.

With the WebBrowser Assistant, a new prompt is created that includes the first Bing results, and now ChatGPT knows who Vincent Terrasi is.

Screenshot from ChatGPT, March 2023

You can test other GPT assistants available in the GPTs search engine if you want to use Google results.

Master Reverse Prompt Engineering

ChatGPT can be an excellent tool for reverse engineering prompts because it generates natural and engaging responses to any given input.

By analyzing the prompts ChatGPT generates, you can gain insight into how the model interprets different kinds of content and instructions.

One key benefit of using ChatGPT to reverse engineer prompts is that you can ask the model to explain the prompt it produced.

That explanation makes it easier to see which elements of a text (tone, structure, length) the prompt is trying to capture.

Once you’ve done this a few times for different types of content, you’ll gain insight into crafting more effective prompts.

Prepare Your ChatGPT For Generating Prompts

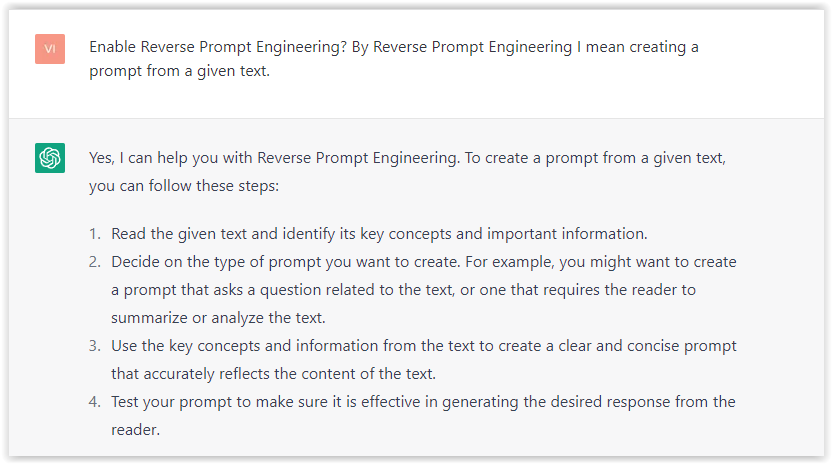

First, activate the reverse prompt engineering.

- Type the following prompt: “Enable Reverse Prompt Engineering? By Reverse Prompt Engineering I mean creating a prompt from a given text.”

Screenshot from ChatGPT, March 2023

ChatGPT is now ready to generate your prompt. You can test the product description in a new chatbot session and evaluate the generated prompt.

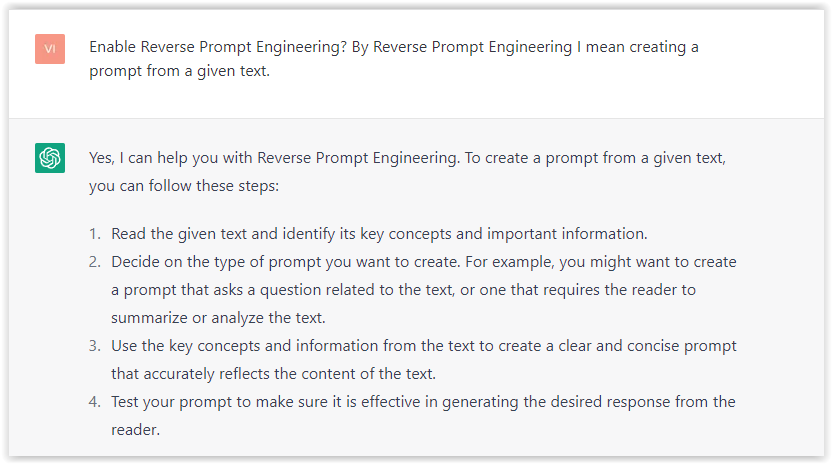

- Type: “Create a very technical reverse prompt engineering template for a product description about iPhone 11.”

Screenshot from ChatGPT, March 2023

The result is amazing. You can also test it with a full text that you want to reproduce. Here is an example of a prompt for selling a Kindle on Amazon.

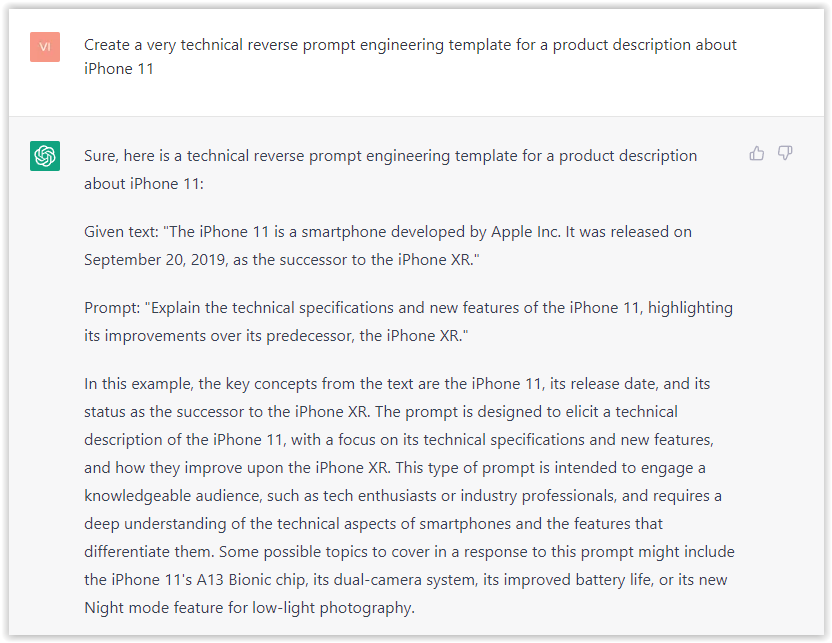

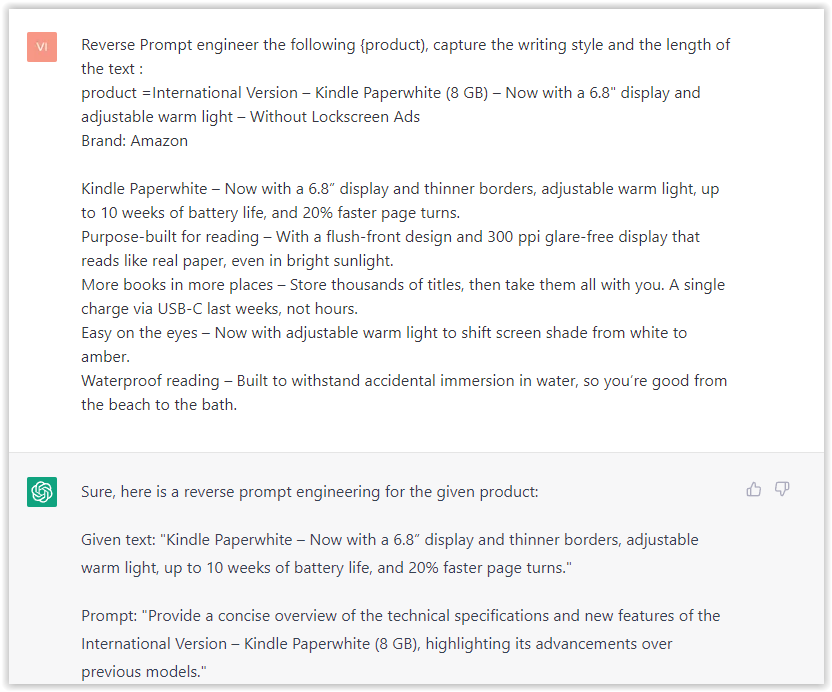

- Type: “Reverse Prompt engineer the following {product}, capture the writing style and the length of the text:

product =”

Screenshot from ChatGPT, March 2023

I tested it on an SEJ blog post. Enjoy the analysis – it is excellent.

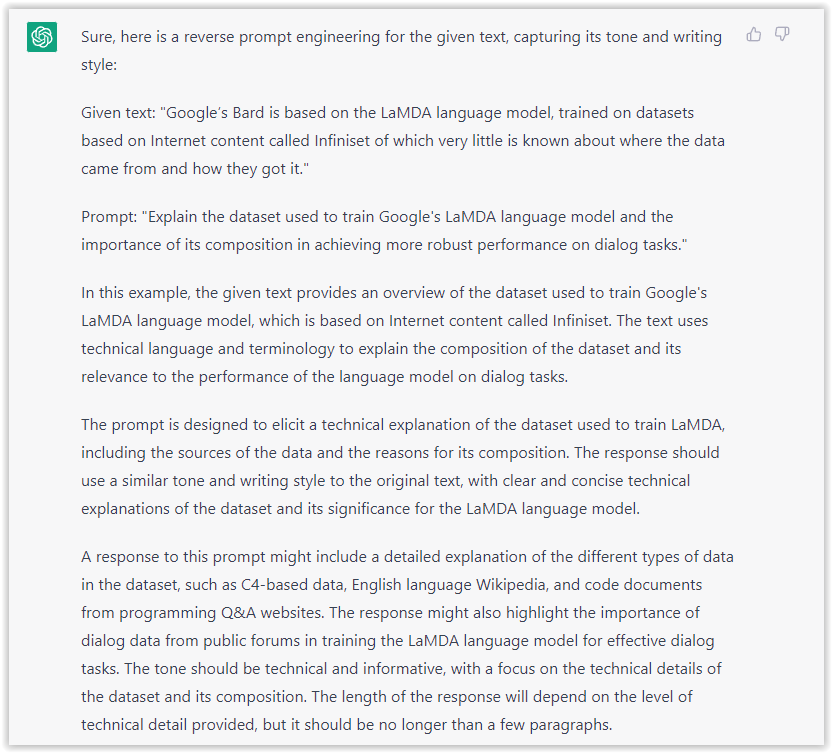

- Type: “Reverse Prompt engineer the following {text}, capture the tone and writing style of the {text} to include in the prompt:

text = all text coming from https://www.searchenginejournal.com/google-bard-training-data/478941/”

Screenshot from ChatGPT, March 2023

But be careful not to rely on ChatGPT to write your texts for you. It is just a personal assistant.
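The `{product}` and `{text}` placeholders in these prompts act like template variables. If you reuse a prompt often, a small helper can fill it in before you paste it into ChatGPT. This is a minimal sketch: the template text follows the SEJ blog post prompt, while `build_reverse_prompt` and the sample text are hypothetical. Python’s `string.Template` is used so the literal `{text}` in the prompt is left alone and only `$text` is replaced.

```python
from string import Template

# $-style placeholder, so the literal "{text}" wording in the prompt stays untouched.
REVERSE_PROMPT = Template(
    "Reverse Prompt engineer the following {text}, capture the tone and writing "
    "style of the {text} to include in the prompt:\n\ntext = $text"
)


def build_reverse_prompt(text: str) -> str:
    """Fill the reverse-prompt template with the text you want to analyze."""
    return REVERSE_PROMPT.substitute(text=text)


print(build_reverse_prompt("Paste the full article text here."))
```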

Go Deeper

Prompts and examples for SEO:

- Keyword research and content ideas prompt: “Provide a list of 20 long-tail keyword ideas related to ‘local SEO strategies’ along with brief content topic descriptions for each keyword.”

- Optimizing content for featured snippets prompt: “Write a 40-50 word paragraph optimized for the query ‘what is the featured snippet in Google search’ that could potentially earn the featured snippet.”

- Creating meta descriptions prompt: “Draft a compelling meta description for the following blog post title: ’10 Technical SEO Factors You Can’t Ignore in 2024’.”
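Because these prompts set concrete constraints (20 keywords, a 40-50 word paragraph), it is worth checking the output against them before using it. A minimal sketch with hypothetical helper names; words are counted by splitting on whitespace and list items by counting non-empty lines.

```python
def check_word_range(text: str, low: int = 40, high: int = 50) -> tuple[bool, int]:
    """Return whether the word count falls within [low, high], plus the count itself."""
    count = len(text.split())
    return low <= count <= high, count


def check_list_length(output: str, expected: int = 20) -> tuple[bool, int]:
    """Count non-empty lines in a list-style answer and compare with the expected number."""
    items = [line for line in output.splitlines() if line.strip()]
    return len(items) == expected, len(items)


snippet = "A featured snippet is a highlighted excerpt that Google shows at the top of some results."
print(check_word_range(snippet))
```

A failed check is a cue to ask ChatGPT for a revision rather than to edit blindly.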

Important Considerations:

- Always Fact-Check: While ChatGPT can be a helpful tool, it’s crucial to remember that it may generate inaccurate or fabricated information. Always verify any facts, statistics, or quotes generated by ChatGPT before incorporating them into your content.

- Maintain Control and Creativity: Use ChatGPT as a tool to assist your writing, not replace it. Don’t rely on it to do your thinking or create content from scratch. Your unique perspective and creativity are essential for producing high-quality, engaging content.

- Iteration is Key: Refine and revise the outputs generated by ChatGPT to ensure they align with your voice, style, and intended message.

Additional Prompts for Rewording and SEO:

– Rewrite this sentence to be more concise and impactful.

– Suggest alternative phrasing for this section to improve clarity.

– Identify opportunities to incorporate relevant internal and external links.

– Analyze the keyword density and suggest improvements for better SEO.
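Keyword density is commonly calculated as the share of a text’s words taken up by the keyword. This sketch follows that definition (for a multi-word keyword, each match counts for all of its words); `keyword_density` is a hypothetical helper, and density is only a rough heuristic, not a target Google publishes.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Percentage of the words in `text` accounted for by occurrences of `keyword`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    kw = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not kw:
        return 0.0
    n = len(kw)
    # Count exact phrase matches at every word position.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == kw)
    # Each hit covers n words, so multiply by n to get the share of the text it occupies.
    return round(100 * hits * n / len(words), 2)


print(keyword_density("Local SEO helps shops. Local SEO is cheap.", "local SEO"))
```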

Remember, while ChatGPT can be a valuable tool, it’s essential to use it responsibly and maintain control over your content creation process.

Experiment And Refine Your Prompting Techniques

Writing effective prompts for ChatGPT is an essential skill for any SEO professional who wants to harness the power of AI-generated content.

Hopefully, the insights and examples shared in this article can inspire you and help guide you to crafting stronger prompts that yield high-quality content.

Remember to experiment with layering prompts, iterating on the output, and continually refining your prompting techniques.

This will help you stay ahead of the curve in the ever-changing world of SEO.


Featured Image: Tapati Rinchumrus/Shutterstock


Measuring Content Impact Across The Customer Journey

Understanding the impact of your content at every touchpoint of the customer journey is essential – but that’s easier said than done. From attracting potential leads to nurturing them into loyal customers, there are many touchpoints to look into.

So how do you identify and take advantage of these opportunities for growth?

Watch this on-demand webinar and learn a comprehensive approach for measuring the value of your content initiatives, so you can optimize resource allocation for maximum impact.

You’ll learn:

- Fresh methods for measuring your content’s impact.

- Fascinating insights using first-touch attribution, and how it differs from the usual last-touch perspective.

- Ways to persuade decision-makers to invest in more content by showcasing its value convincingly.

With Bill Franklin and Oliver Tani of DAC Group, we unravel the nuances of attribution modeling, emphasizing the significance of layering first-touch and last-touch attribution within your measurement strategy.
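To make the first-touch versus last-touch distinction concrete: first-touch attribution gives all the credit for a conversion to the first channel a customer interacted with, while last-touch gives it to the final one. A minimal sketch with made-up journeys and channel names (not data from the webinar); `attribute` is a hypothetical helper.

```python
from collections import Counter


def attribute(journeys: list[list[str]], model: str) -> Counter:
    """Credit one conversion per journey to its first or last touchpoint."""
    if model not in ("first", "last"):
        raise ValueError("model must be 'first' or 'last'")
    index = 0 if model == "first" else -1
    return Counter(journey[index] for journey in journeys if journey)


journeys = [
    ["blog post", "email", "paid search"],
    ["blog post", "paid search"],
    ["social", "email"],
]
print("first-touch:", dict(attribute(journeys, "first")))
print("last-touch: ", dict(attribute(journeys, "last")))
```

Under last-touch, the blog post gets no credit even though it started two of the three journeys; comparing both views is what surfaces that kind of early-stage contribution.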

Check out these insights to help you craft compelling content tailored to each stage, using an approach rooted in first-hand experience to ensure your content resonates.

Whether you’re a seasoned marketer or new to content measurement, this webinar promises valuable insights and actionable tactics to elevate your SEO game and optimize your content initiatives for success.

View the slides below or check out the full webinar for all the details.


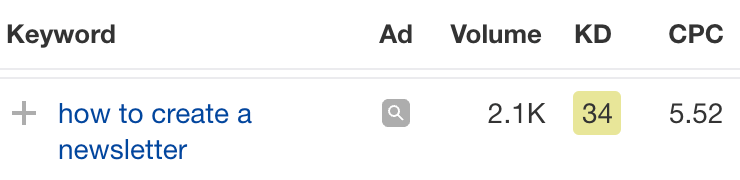

How to Find and Use Competitor Keywords

Competitor keywords are the keywords your rivals rank for in Google’s search results. They may rank organically or pay for Google Ads to rank in the paid results.

Knowing your competitors’ keywords is the easiest form of keyword research. If your competitors rank for or target particular keywords, it might be worth it for you to target them, too.

There is no way to see your competitors’ keywords without a tool like Ahrefs, which has a database of keywords and the sites that rank for them. As far as we know, Ahrefs has the biggest database of these keywords.

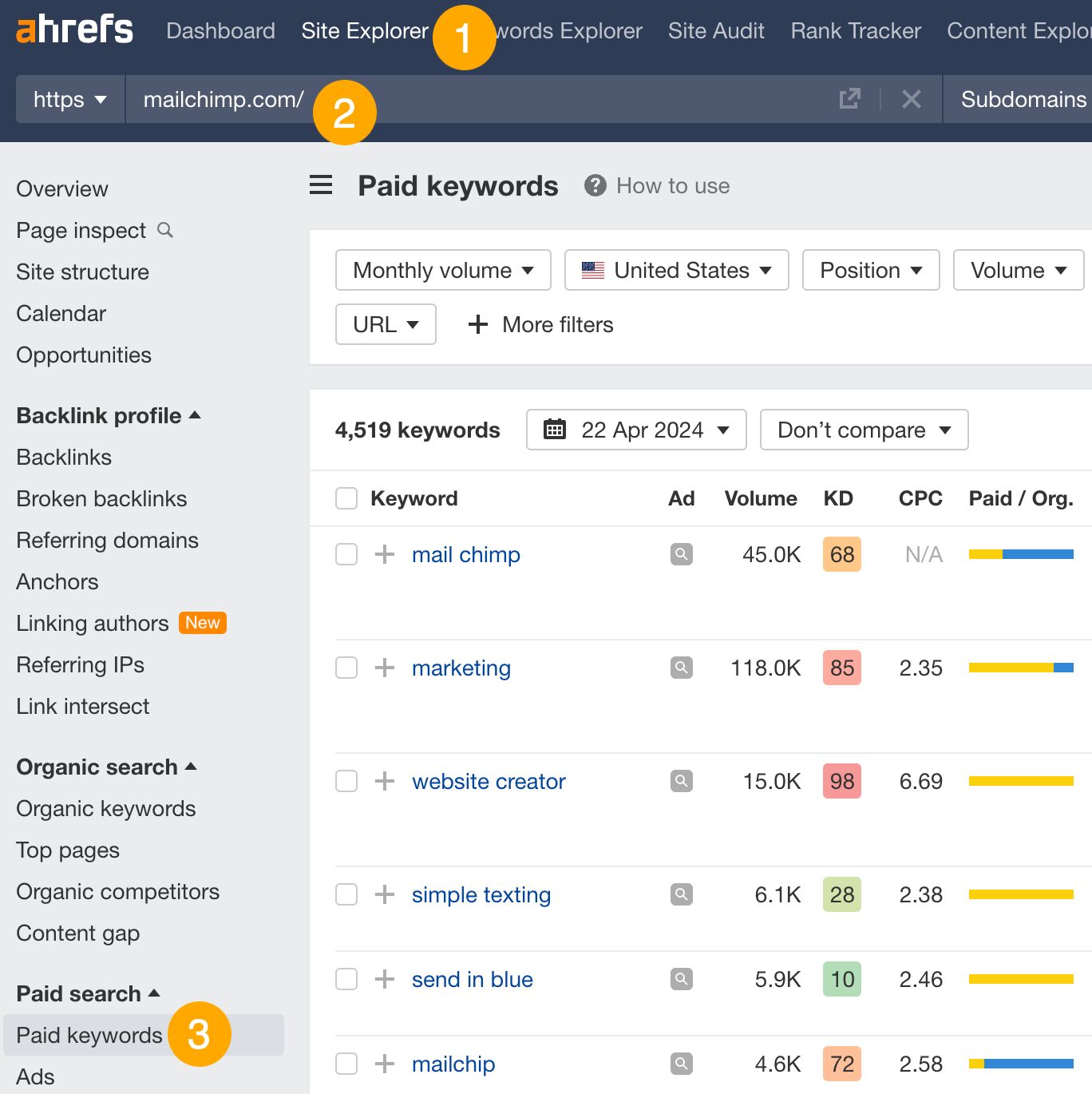

How to find all the keywords your competitor ranks for

- Go to Ahrefs’ Site Explorer

- Enter your competitor’s domain

- Go to the Organic keywords report

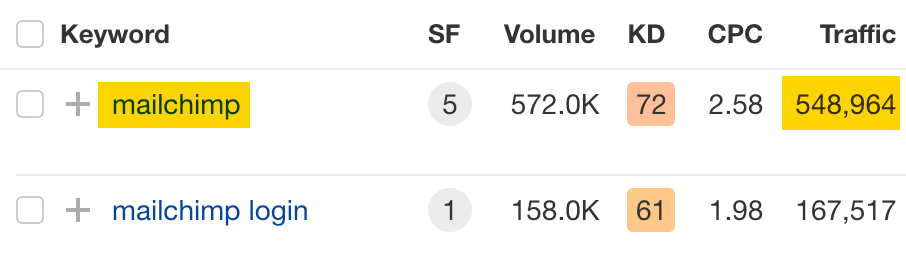

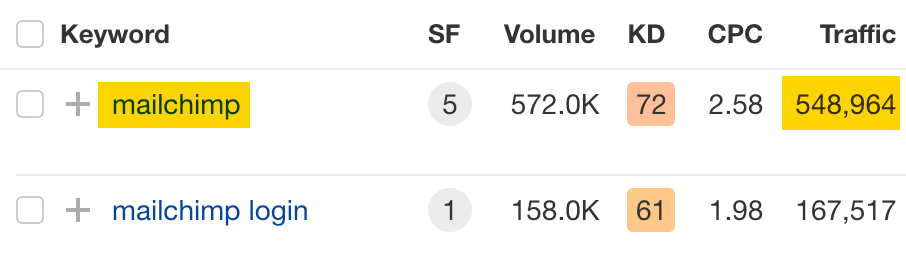

The report is sorted by traffic to show you the keywords sending your competitor the most visits. For example, Mailchimp gets most of its organic traffic from the keyword “mailchimp.”

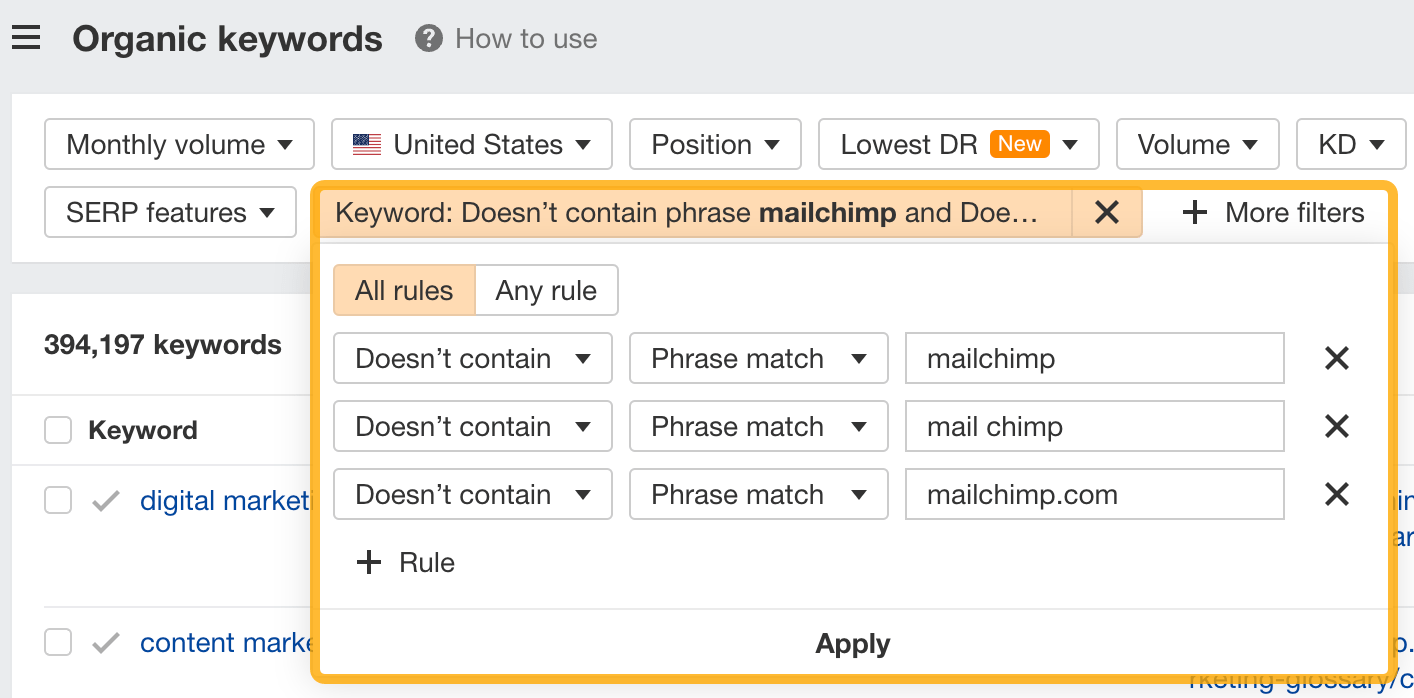

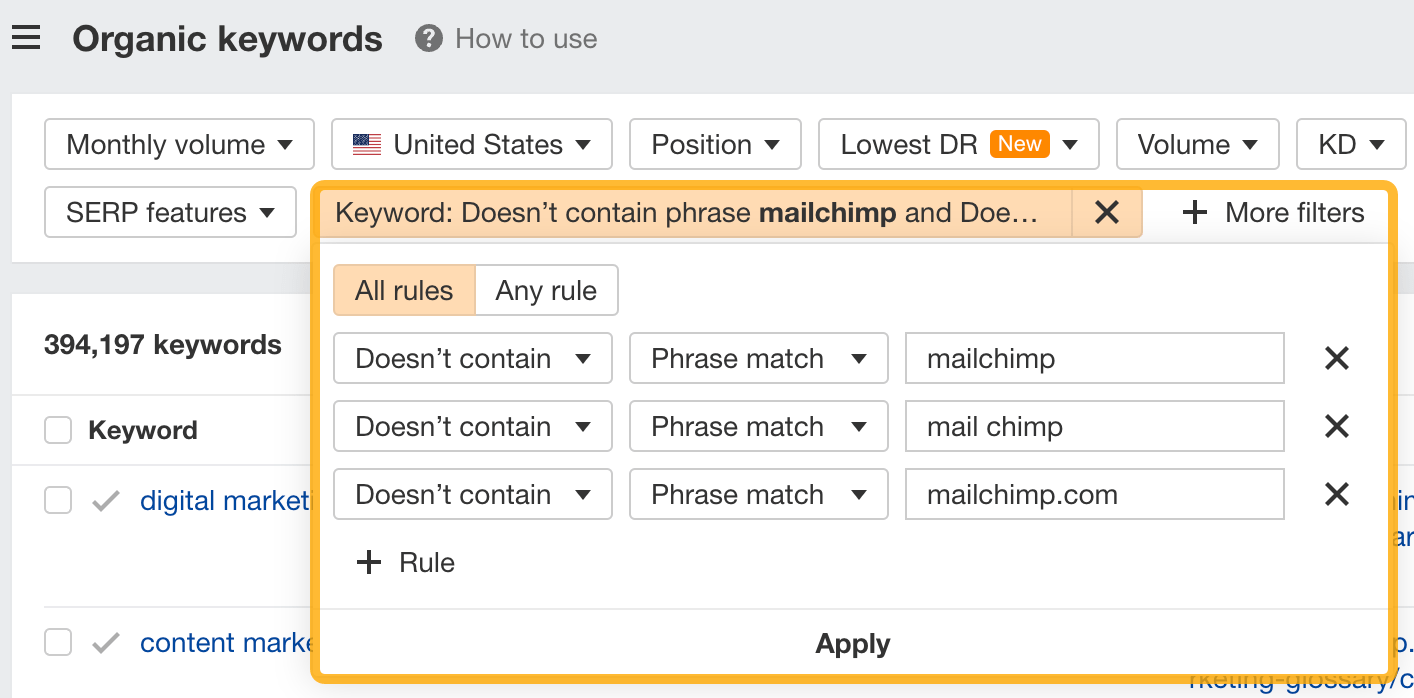

Since you’re unlikely to rank for your competitor’s brand, you might want to exclude branded keywords from the report. You can do this by adding a Keyword > Doesn’t contain filter. In this example, we’ll filter out keywords containing “mailchimp” or any potential misspellings:

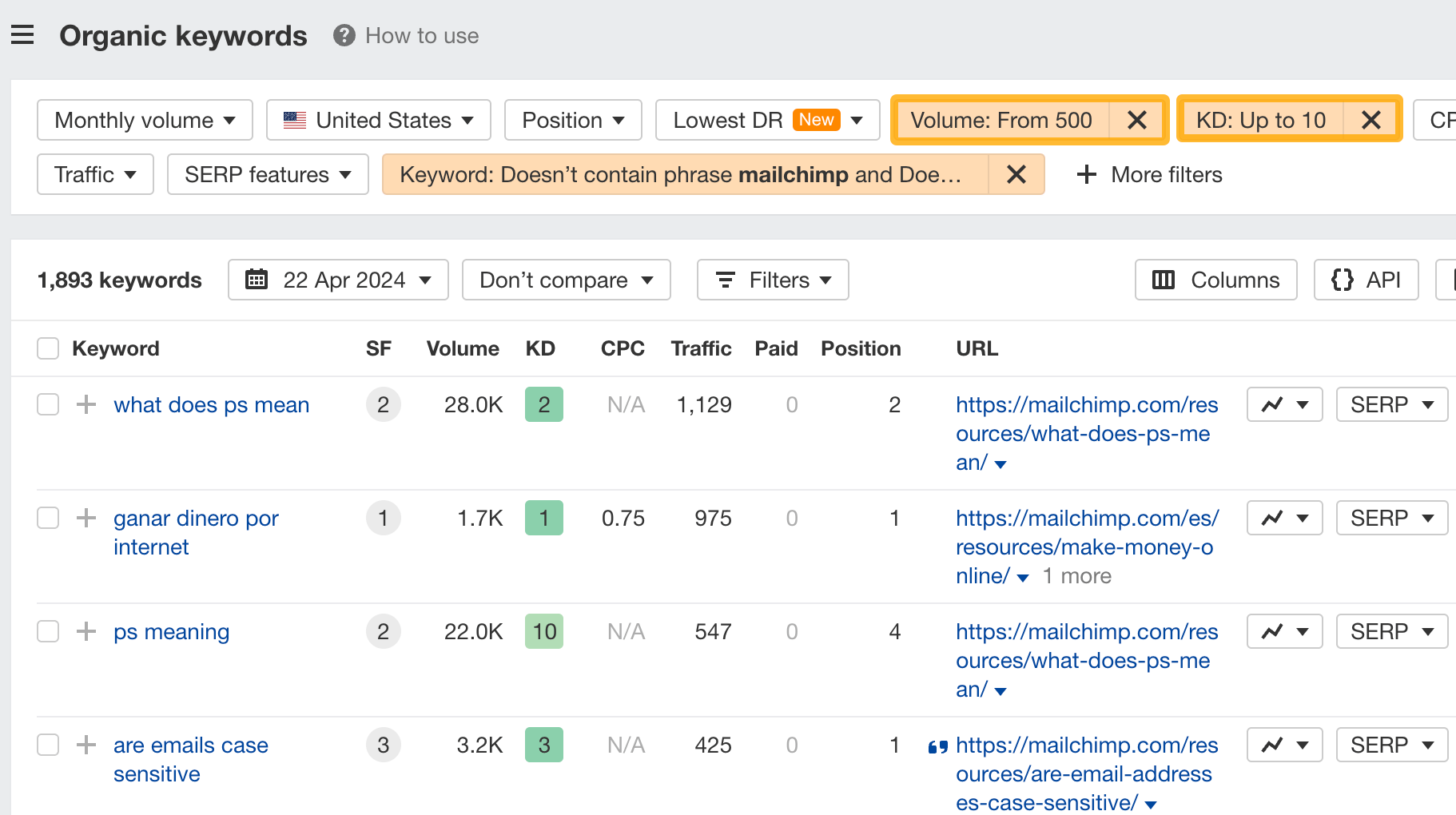

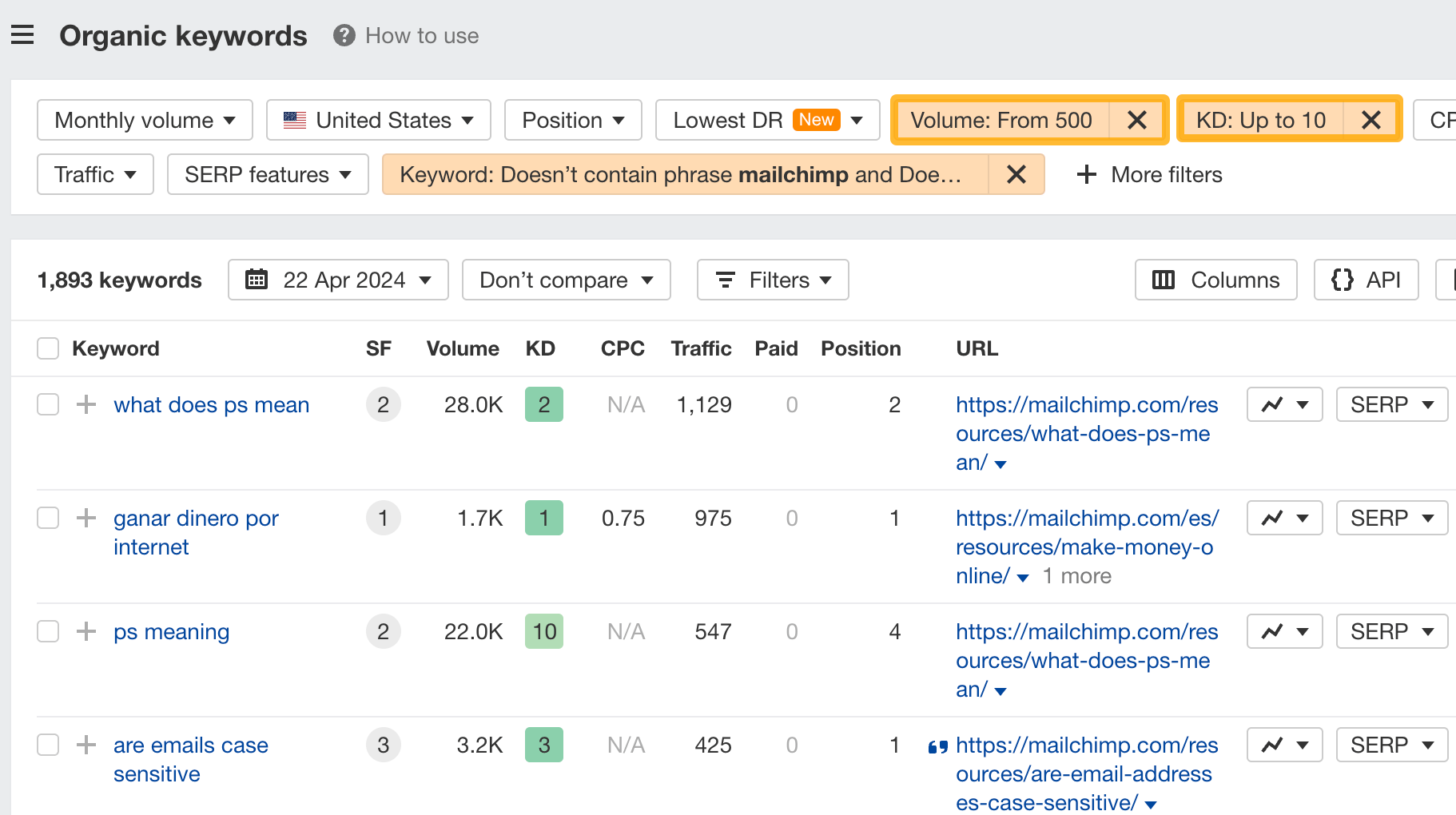

If you’re a new brand competing with one that’s established, you might also want to look for popular low-difficulty keywords. You can do this by setting the Volume filter to a minimum of 500 and the KD filter to a maximum of 10.
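If you export the Organic keywords report instead, the same filters can be applied in a script. This sketch assumes a CSV export with `Keyword`, `Volume`, and `KD` columns (check the headers in your actual export); the rows, numbers, brand misspellings, and the `filter_keywords` helper are invented for illustration.

```python
import csv
import io

# Invented sample standing in for an exported Organic keywords CSV.
EXPORT = """Keyword,Volume,KD
mailchimp,1200000,90
mail chimp login,40000,60
email marketing,90000,85
newsletter templates,3000,8
free email newsletter,700,6
"""

BRAND_TERMS = ("mailchimp", "mail chimp", "mailchip")  # brand name plus assumed misspellings


def filter_keywords(rows: list[dict], brand_terms, min_volume: int = 500, max_kd: int = 10) -> list[str]:
    """Drop branded keywords, then keep popular, low-difficulty ones."""
    kept = []
    for row in rows:
        keyword = row["Keyword"].lower()
        if any(term in keyword for term in brand_terms):  # the "Doesn't contain" filter
            continue
        if int(row["Volume"]) >= min_volume and int(row["KD"]) <= max_kd:
            kept.append(row["Keyword"])
    return kept


rows = list(csv.DictReader(io.StringIO(EXPORT)))
print(filter_keywords(rows, BRAND_TERMS))
```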

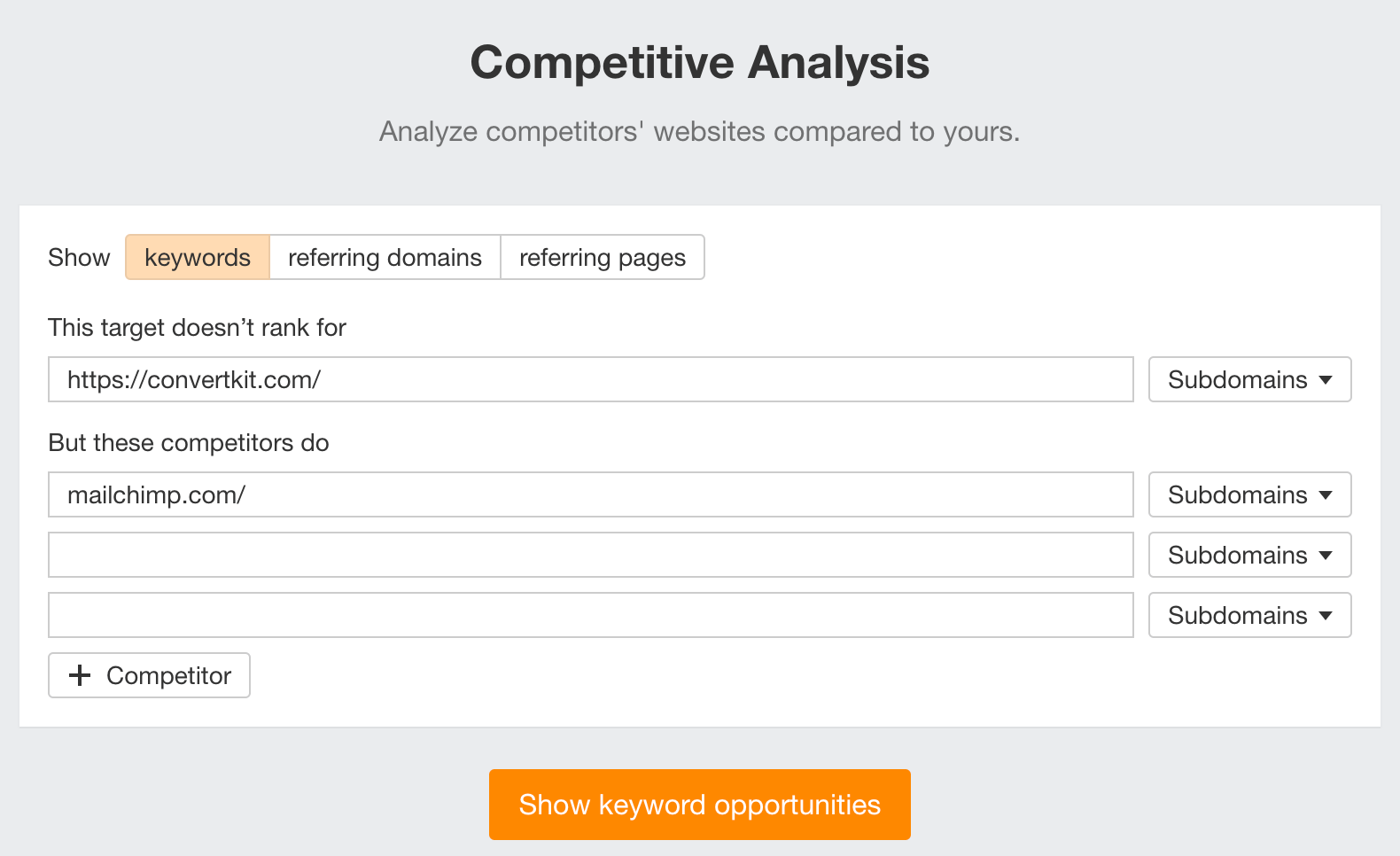

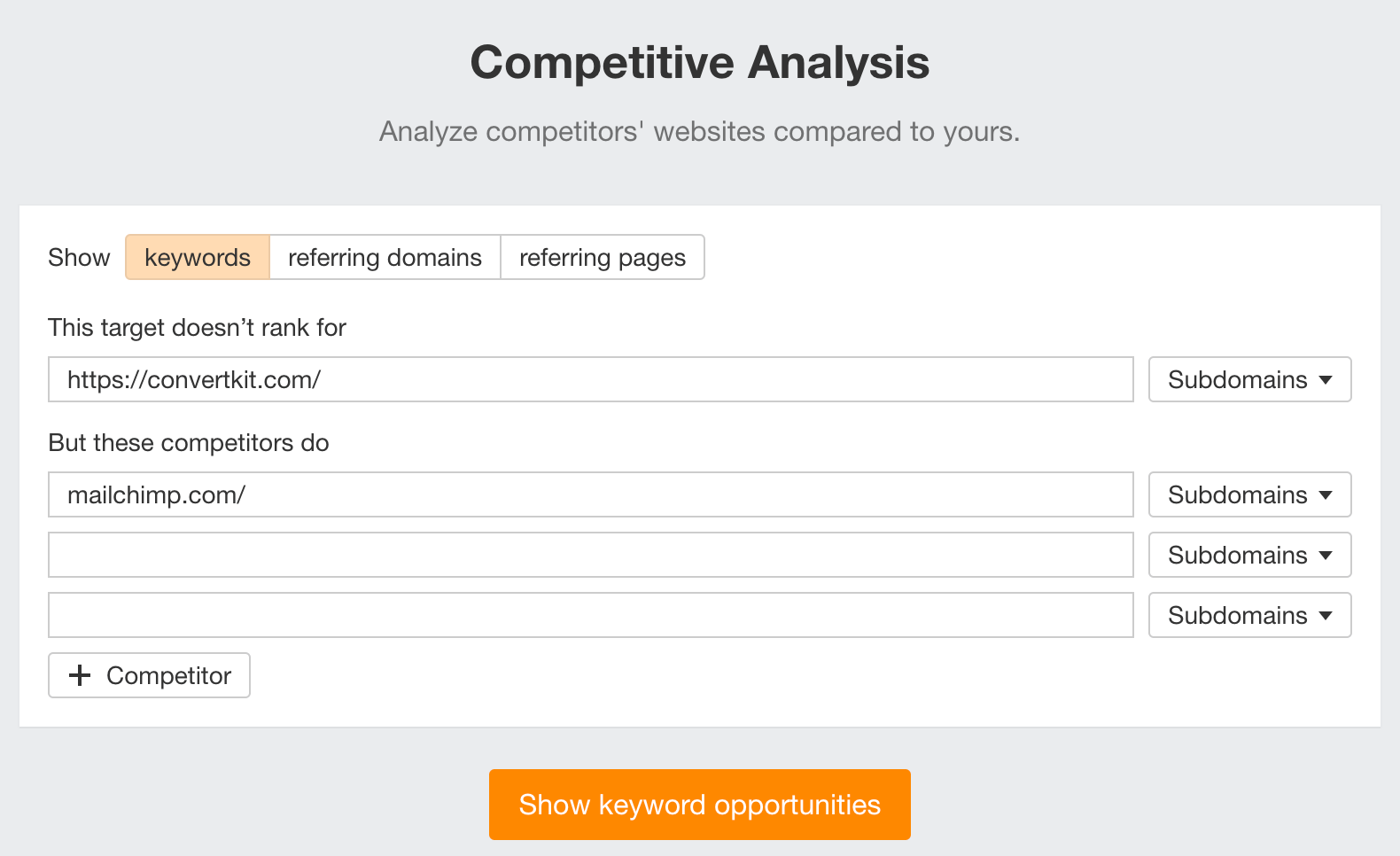

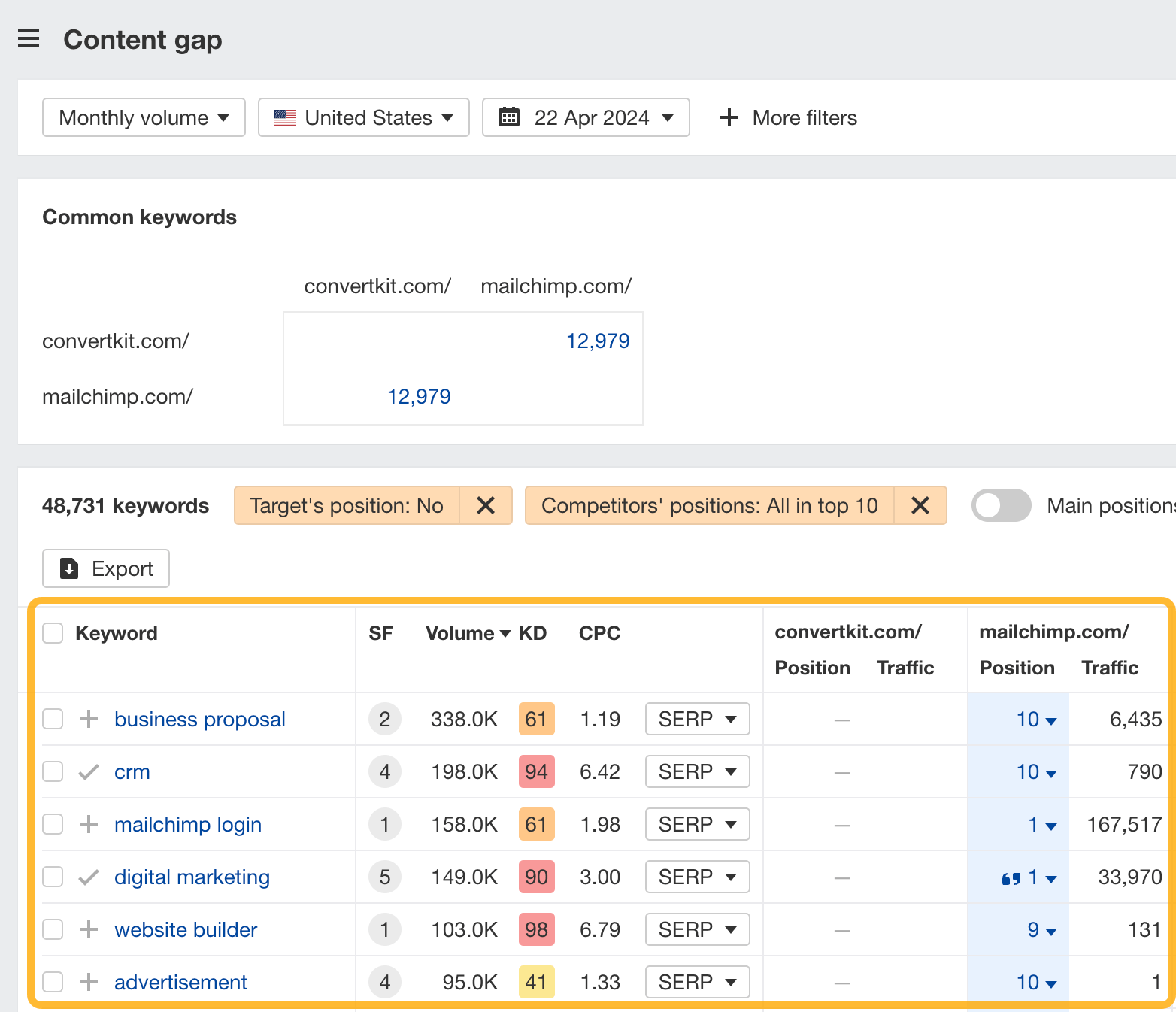

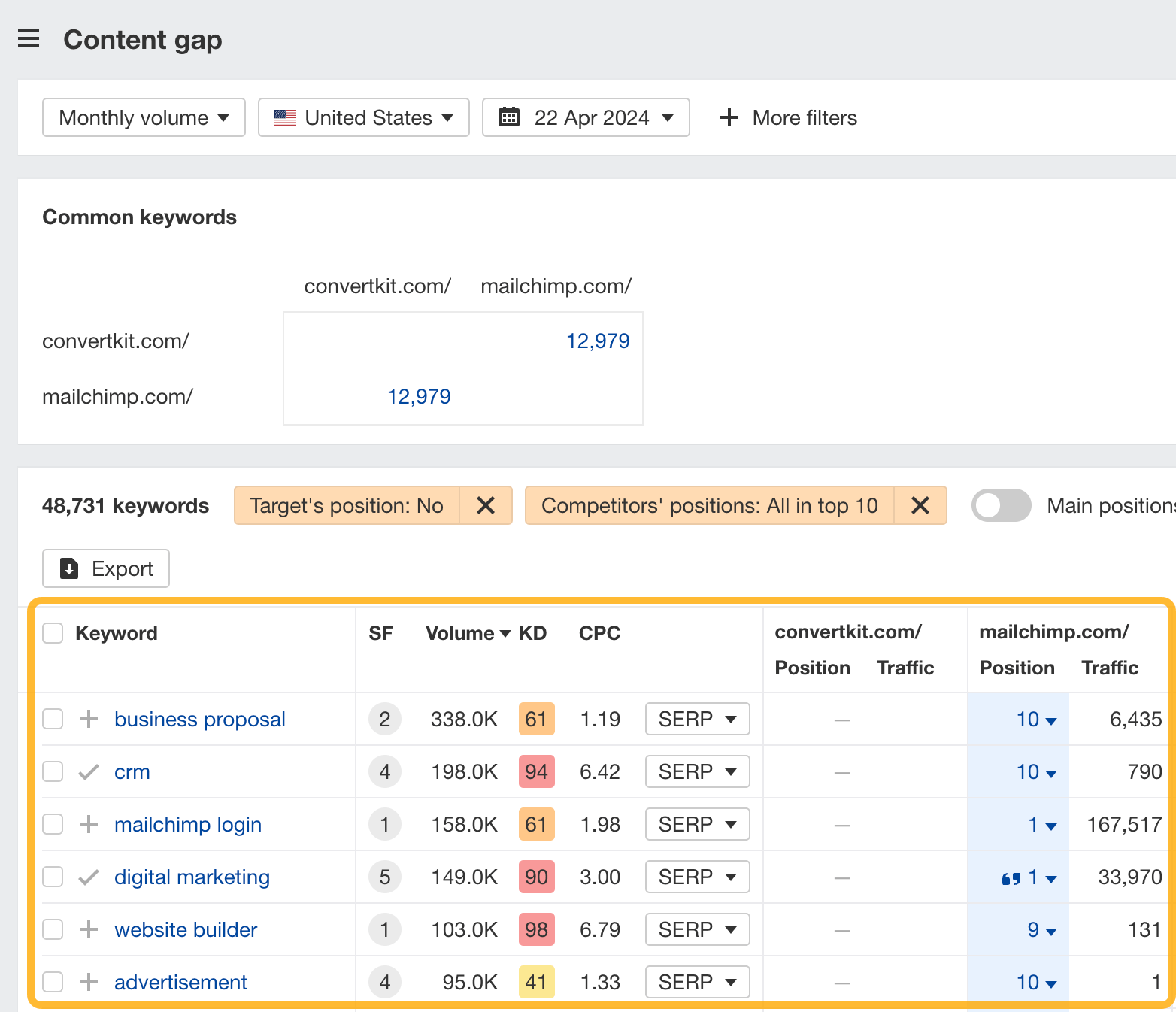

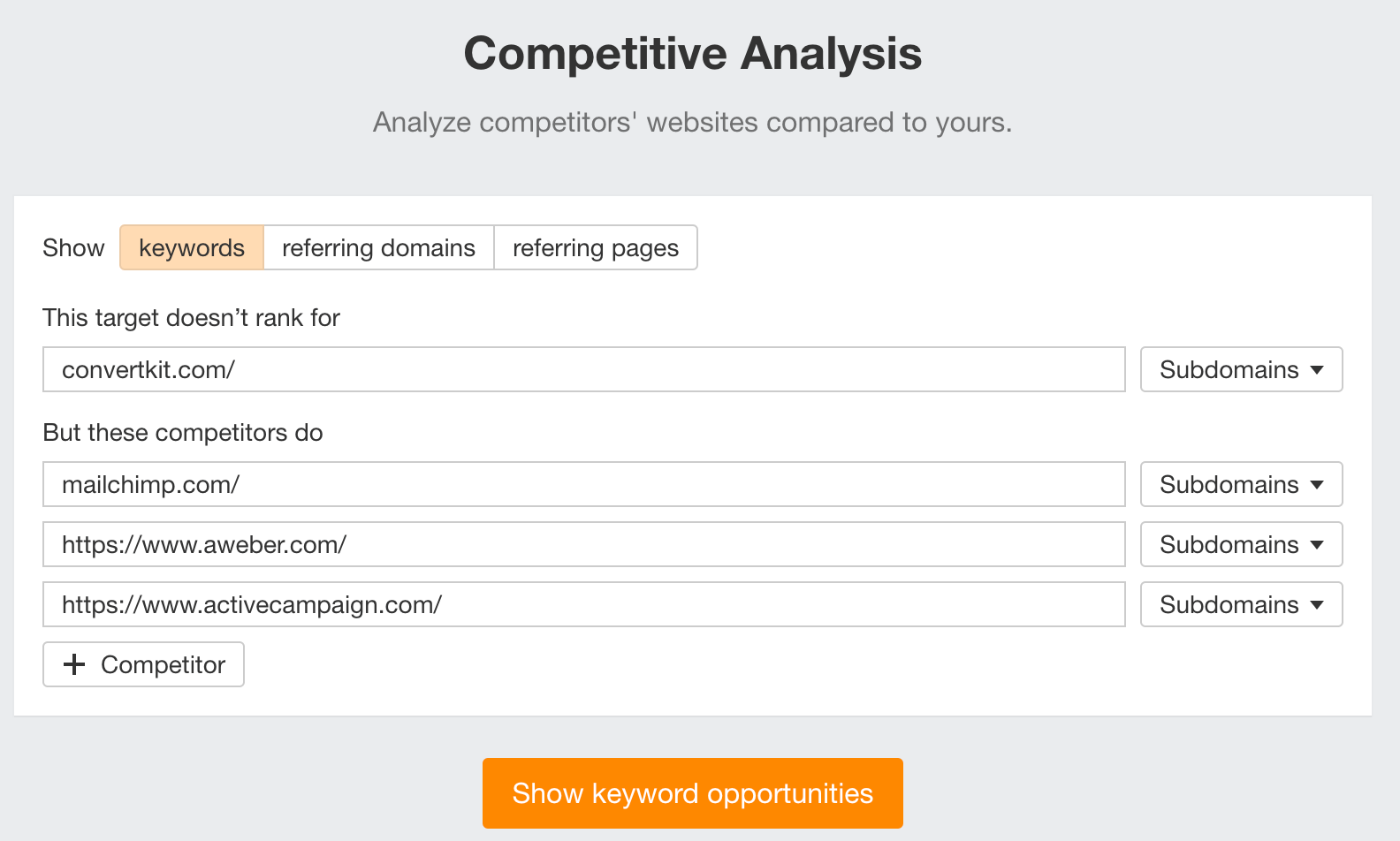

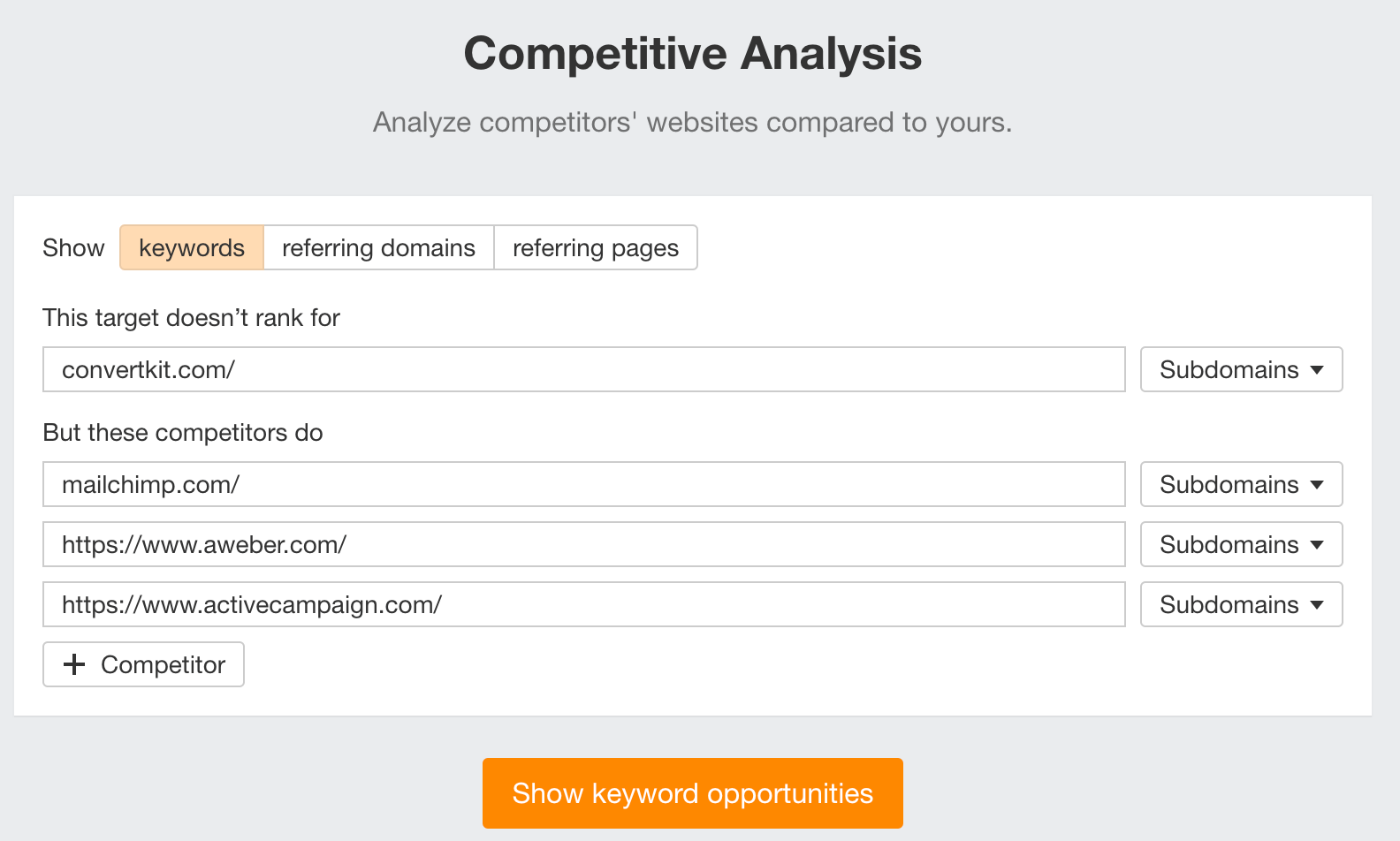

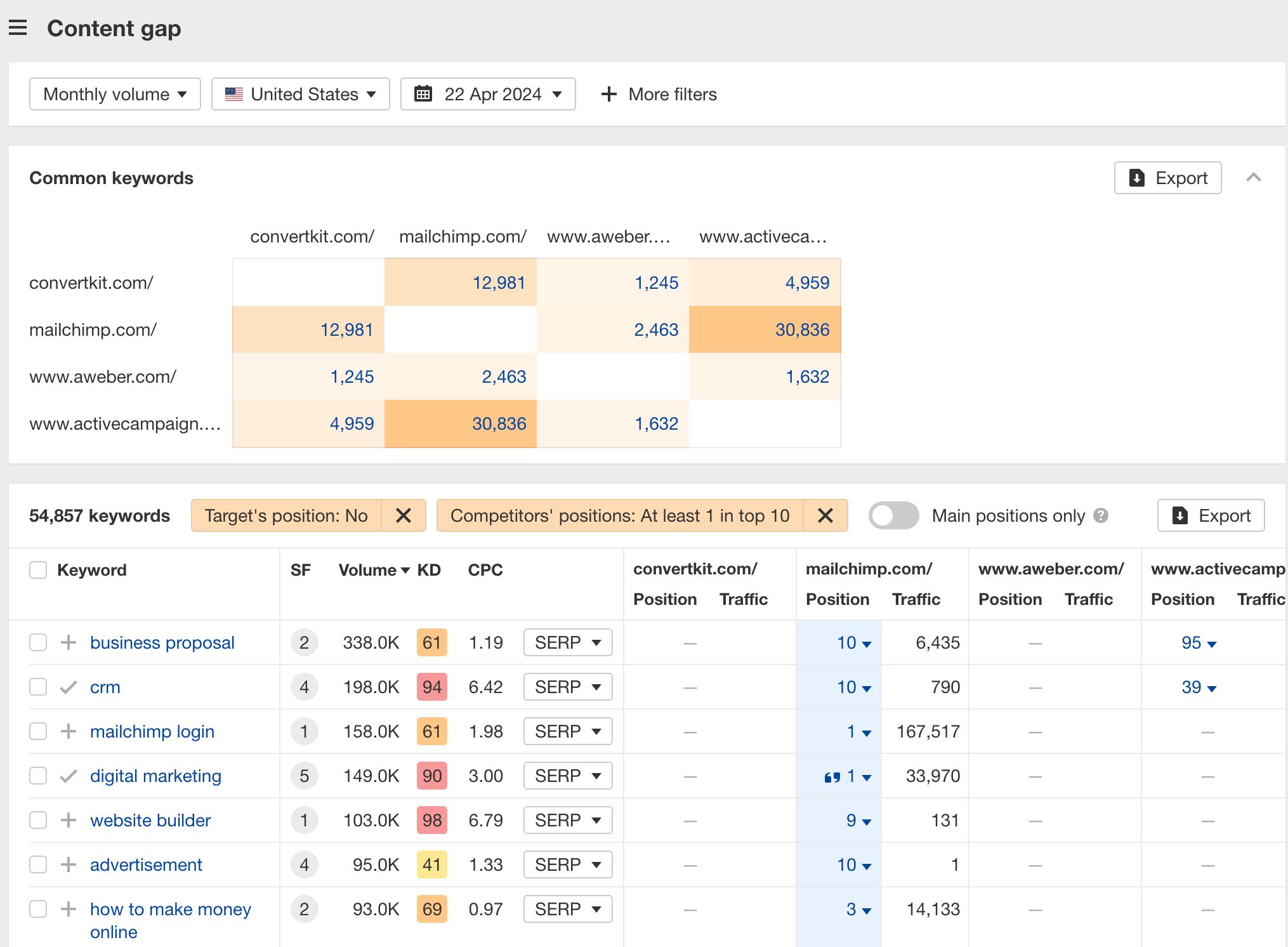

How to find keywords your competitor ranks for, but you don’t

- Go to Competitive Analysis

- Enter your domain in the This target doesn’t rank for section

- Enter your competitor’s domain in the But these competitors do section

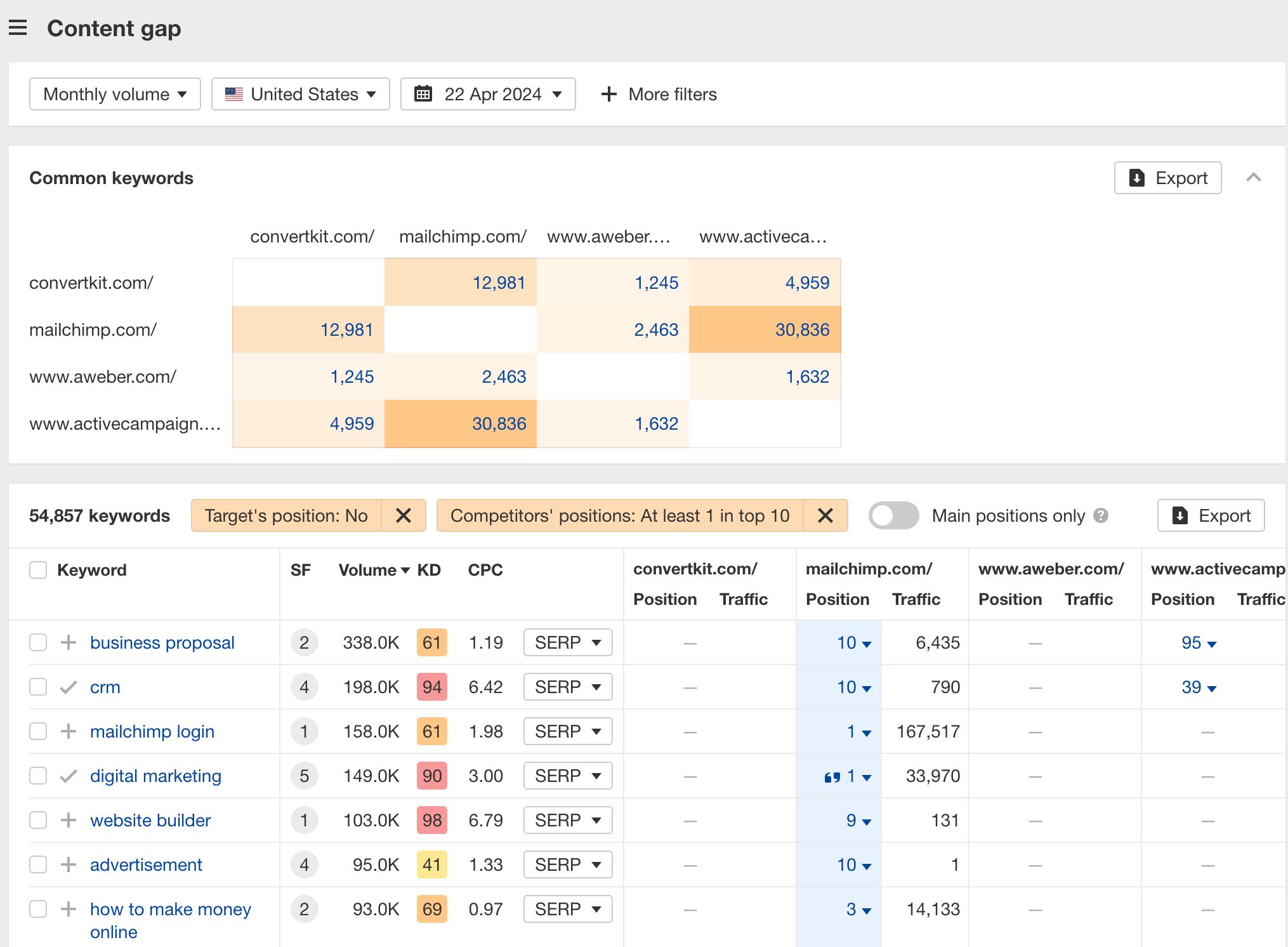

Hit “Show keyword opportunities,” and you’ll see all the keywords your competitor ranks for, but you don’t.

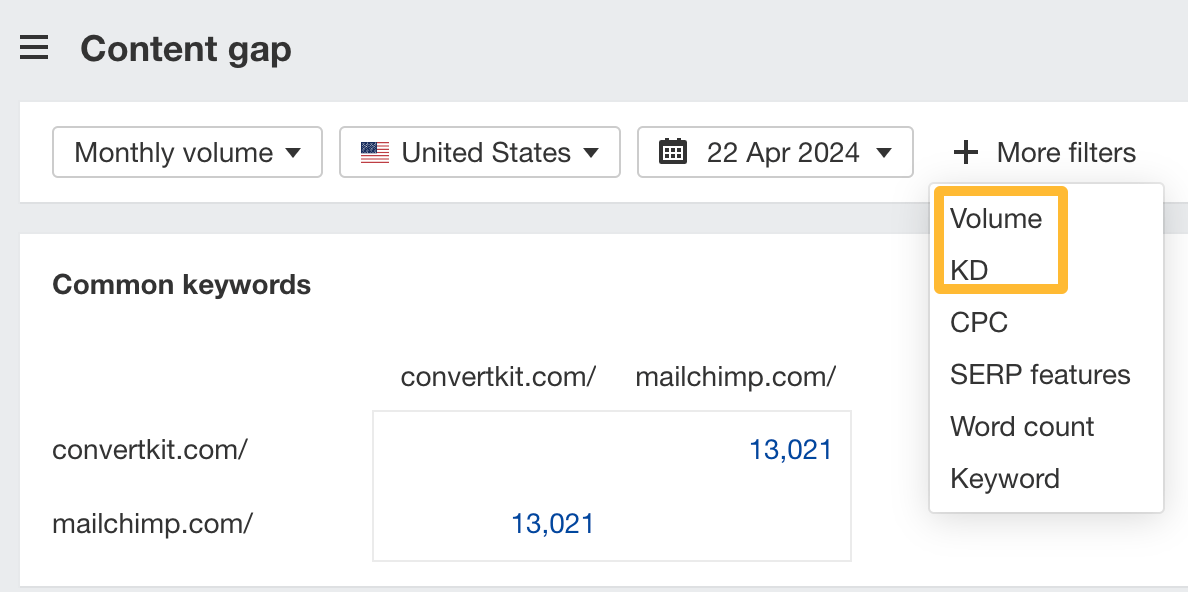

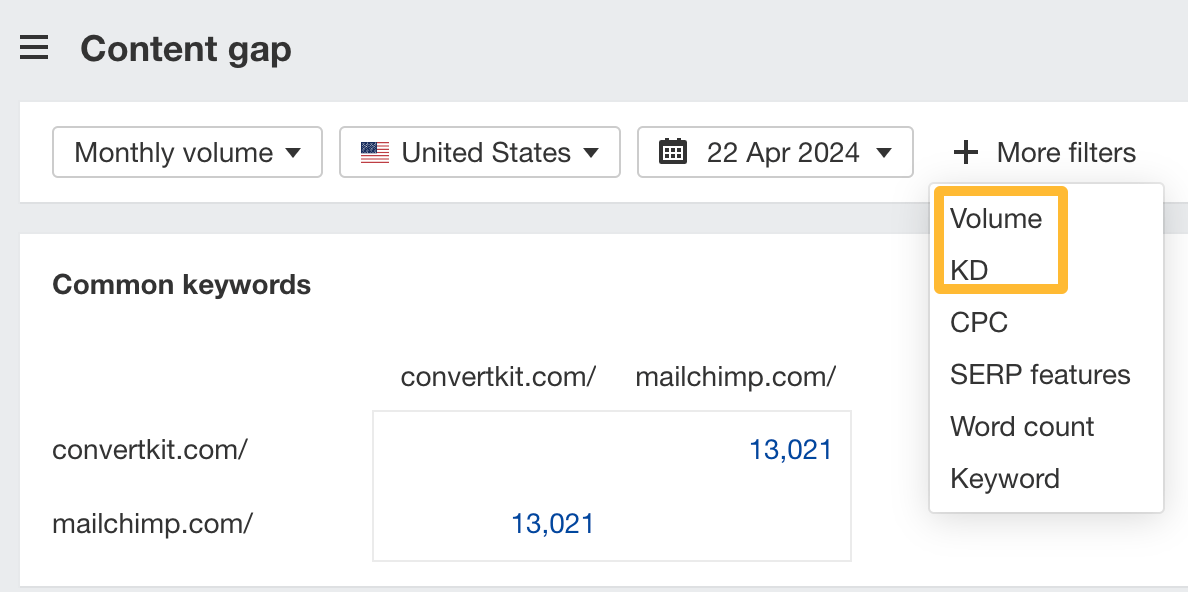

You can also add a Volume and KD filter to find popular, low-difficulty keywords in this report.

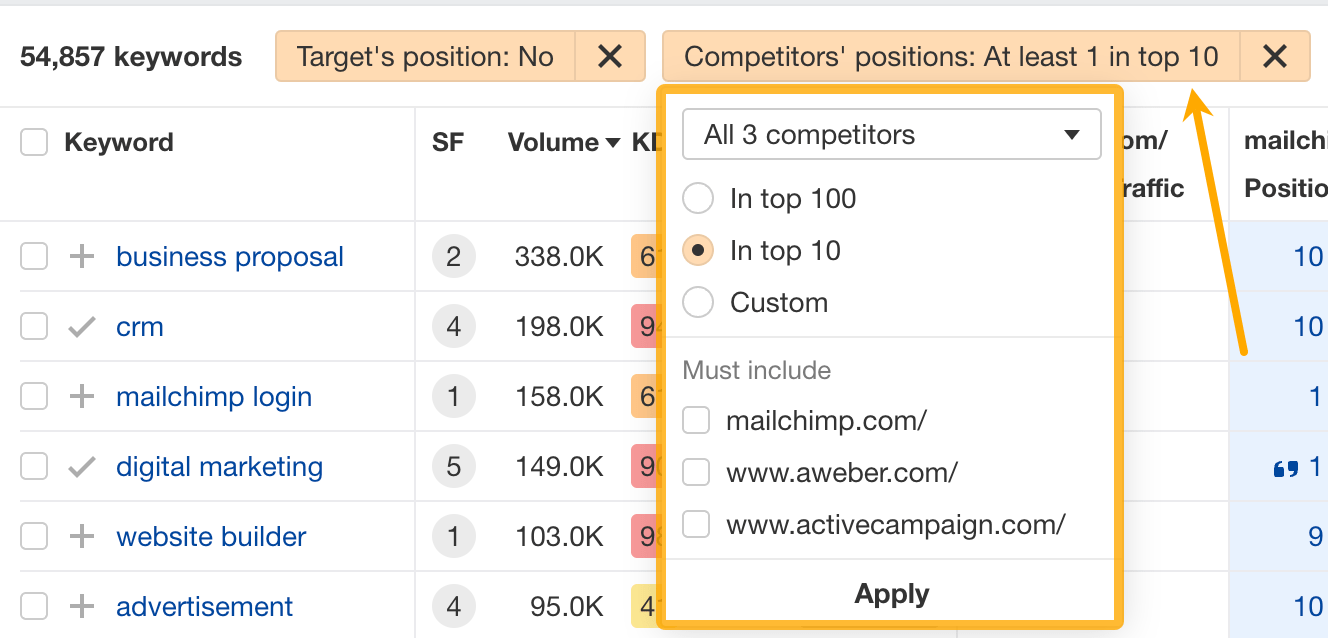

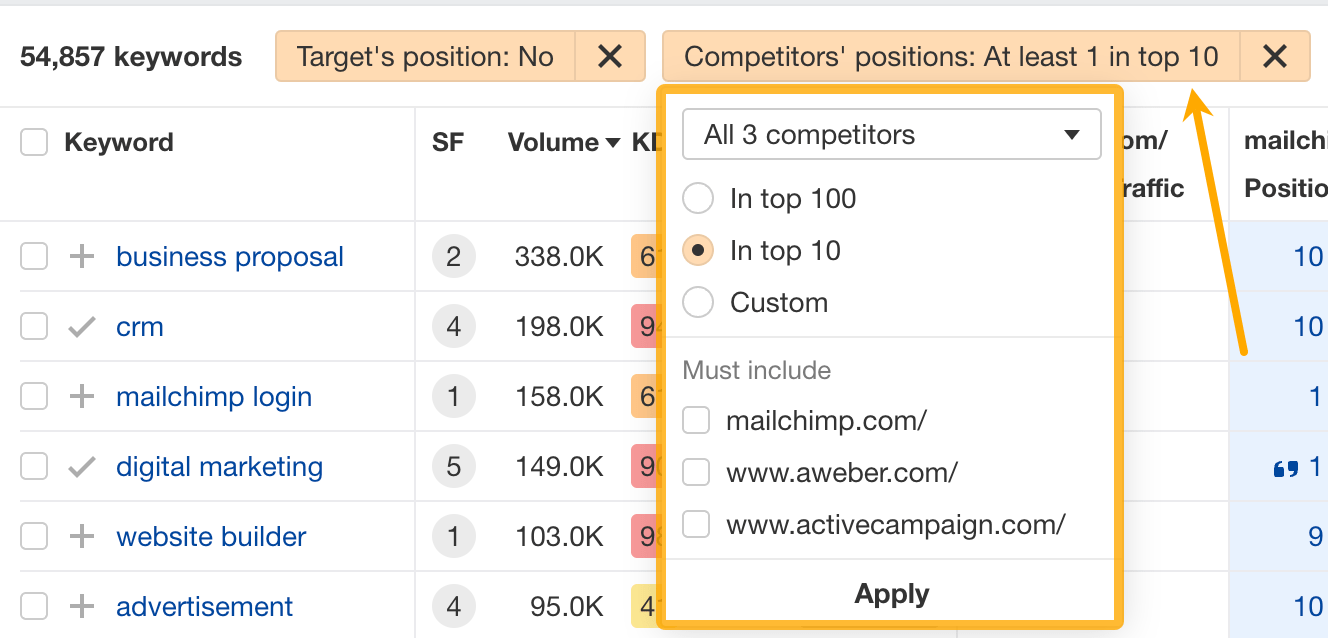

How to find keywords multiple competitors rank for, but you don’t

- Go to Competitive Analysis

- Enter your domain in the This target doesn’t rank for section

- Enter the domains of multiple competitors in the But these competitors do section

You’ll see all the keywords that at least one of these competitors ranks for, but you don’t.

You can also narrow the list down to keywords that all competitors rank for. Click on the Competitors’ positions filter and choose All 3 competitors:

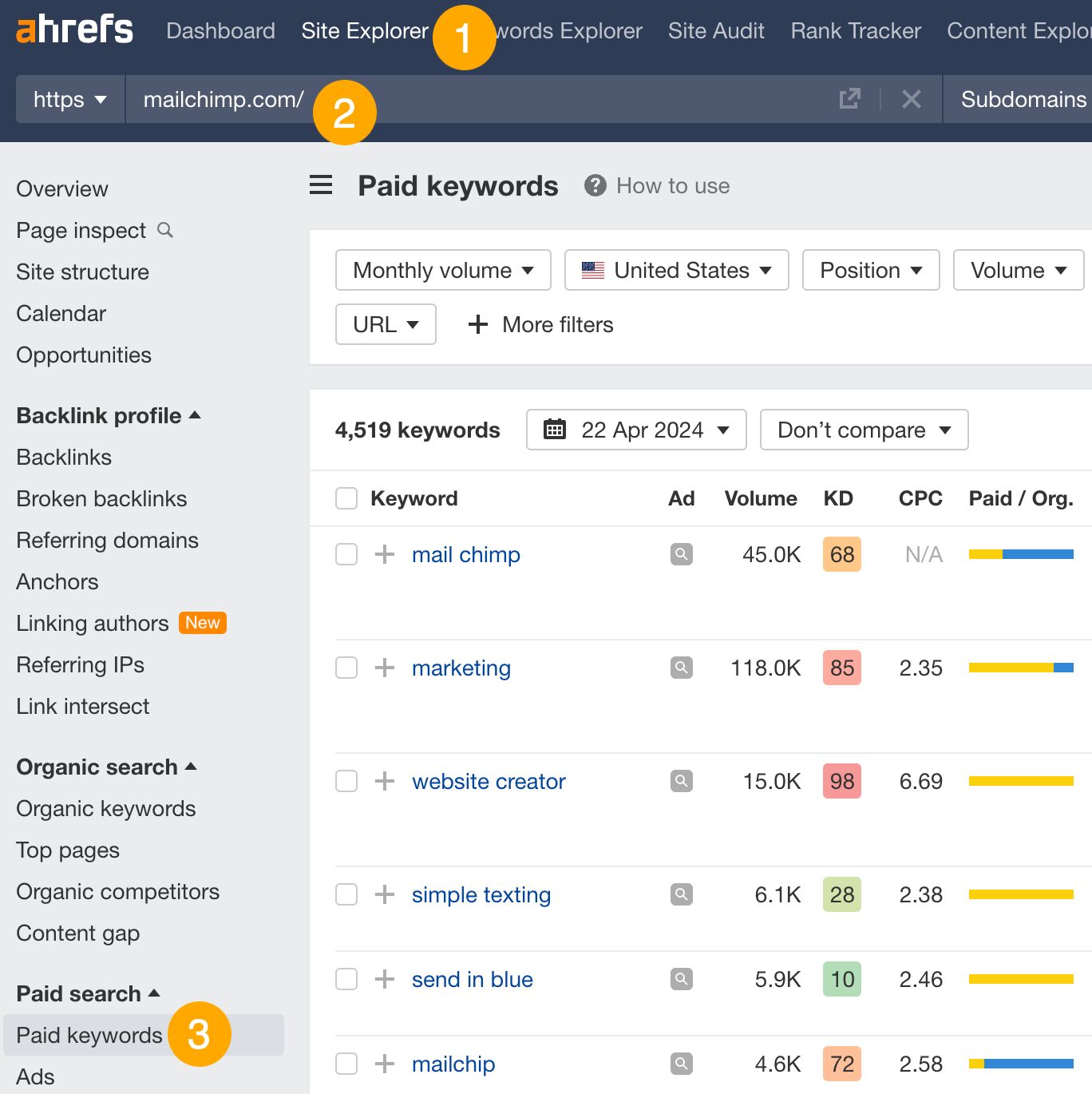
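Conceptually, these reports behave like set operations: the gap against one competitor is a set difference, “at least one competitor” is a union minus your keywords, and “all competitors” is an intersection minus your keywords. A sketch with hypothetical keyword sets and helper names; it ignores positions, volume, and the other data the real reports show.

```python
def gap_any(yours: set[str], competitors: list[set[str]]) -> set[str]:
    """Keywords at least one competitor ranks for, but you don't."""
    return set().union(*competitors) - yours


def gap_all(yours: set[str], competitors: list[set[str]]) -> set[str]:
    """Keywords every competitor ranks for, but you don't."""
    if not competitors:
        return set()
    return set.intersection(*competitors) - yours


yours = {"email templates", "newsletter ideas"}
a = {"email templates", "drip campaigns", "newsletter software"}
b = {"drip campaigns", "newsletter software", "email automation"}
c = {"drip campaigns", "newsletter ideas", "newsletter software"}

print(sorted(gap_any(yours, [a])))        # single competitor: plain set difference
print(sorted(gap_any(yours, [a, b, c])))  # at least one competitor
print(sorted(gap_all(yours, [a, b, c])))  # all 3 competitors
```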

- Go to Ahrefs’ Site Explorer

- Enter your competitor’s domain

- Go to the Paid keywords report

This report shows you the keywords your competitors are targeting via Google Ads.

Since your competitor is paying for traffic from these keywords, it may indicate that they’re profitable for them—and could be for you, too.

You know what keywords your competitors are ranking for or bidding on. But what do you do with them? There are basically three options.

1. Create pages to target these keywords

You can only rank for keywords if you have content about them. So, the most straightforward thing you can do for competitors’ keywords you want to rank for is to create pages to target them.

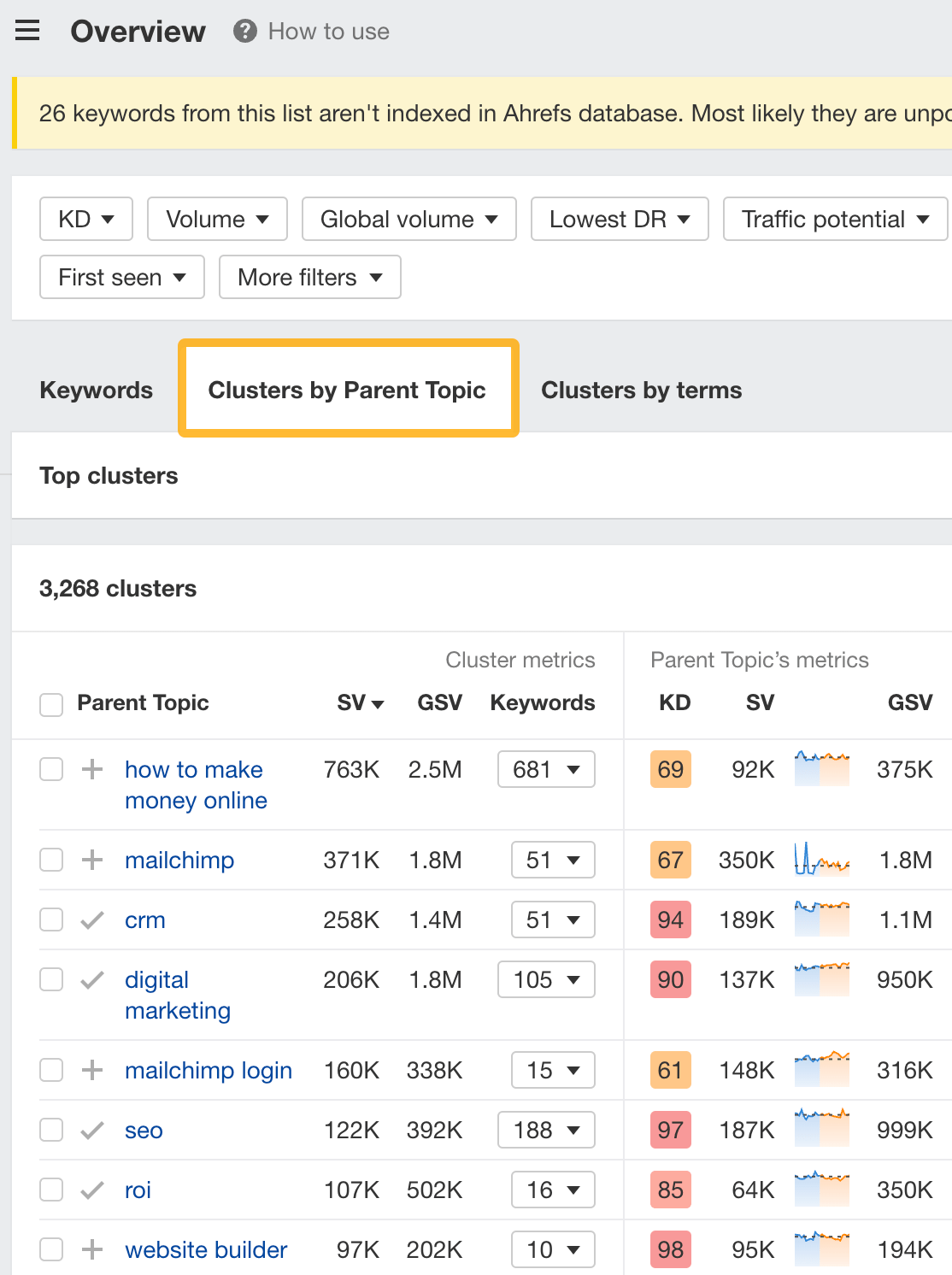

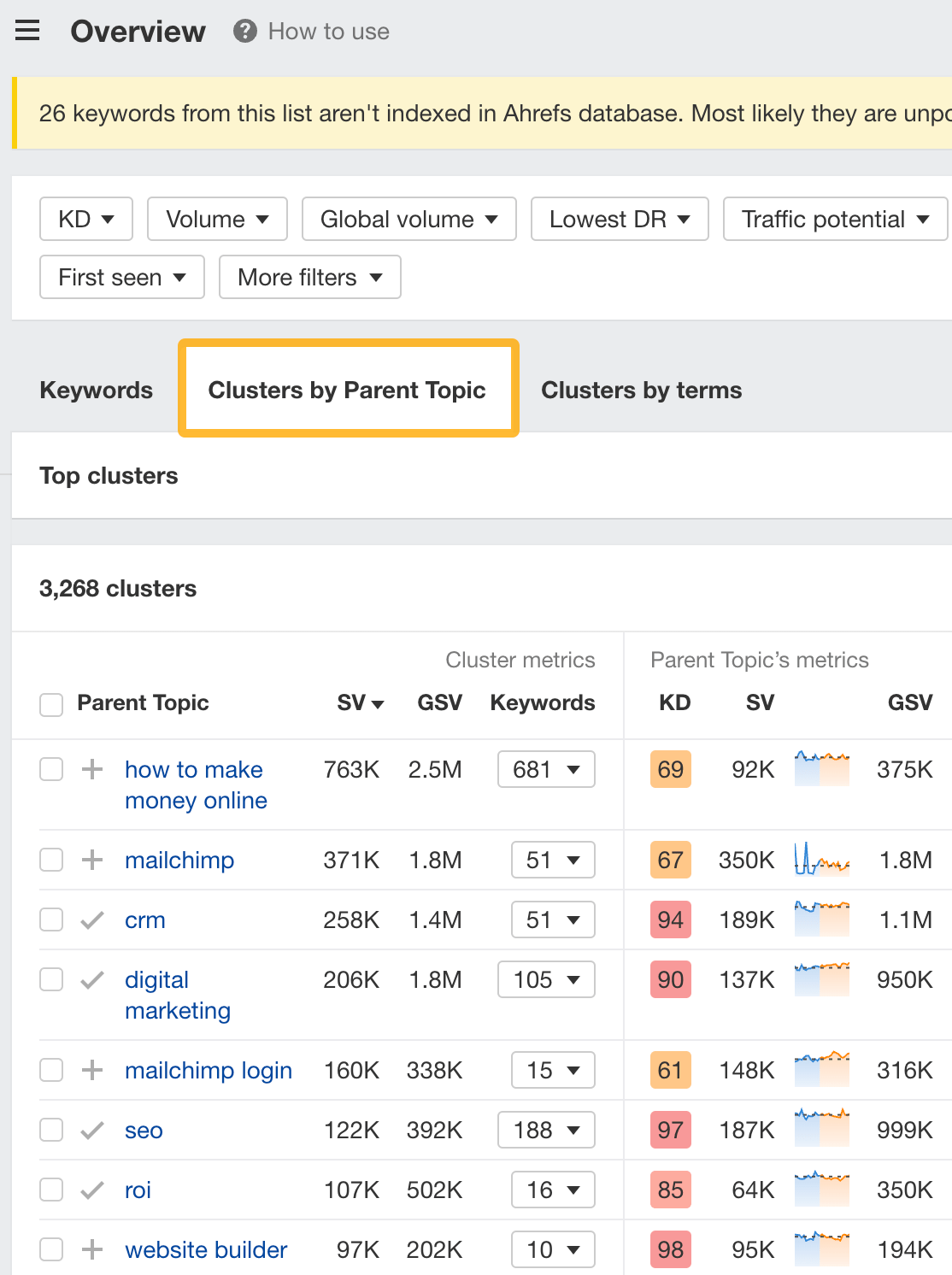

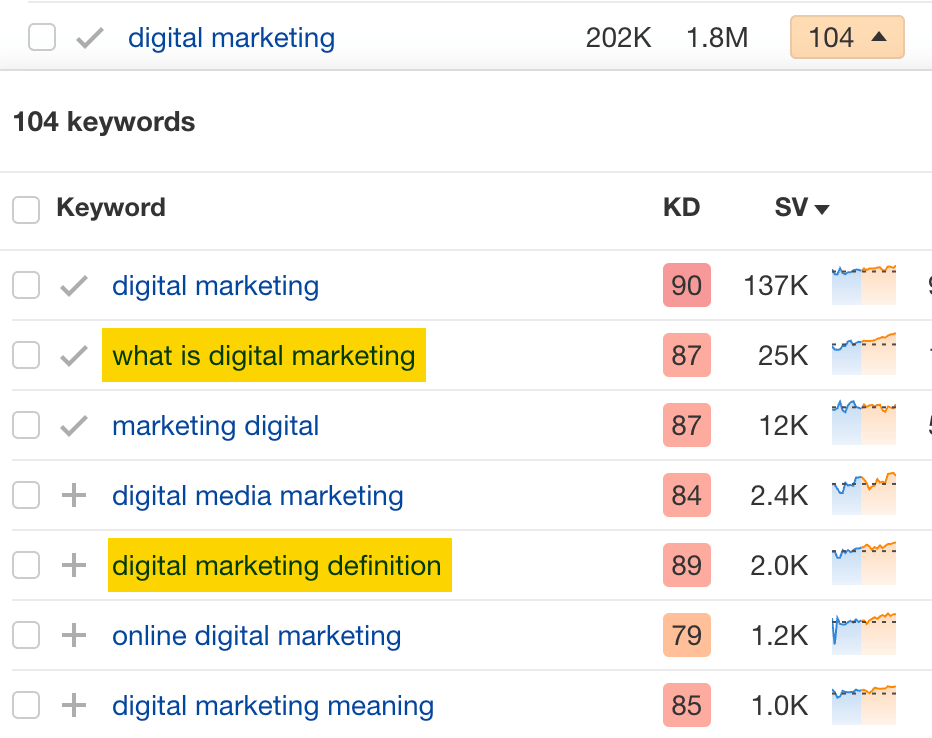

However, before you do this, it’s worth clustering your competitor’s keywords by Parent Topic. This will group keywords that mean the same or similar things so you can target them all with one page.

Here’s how to do that:

- Export your competitor’s keywords, either from the Organic Keywords or Content Gap report

- Paste them into Keywords Explorer

- Click the “Clusters by Parent Topic” tab

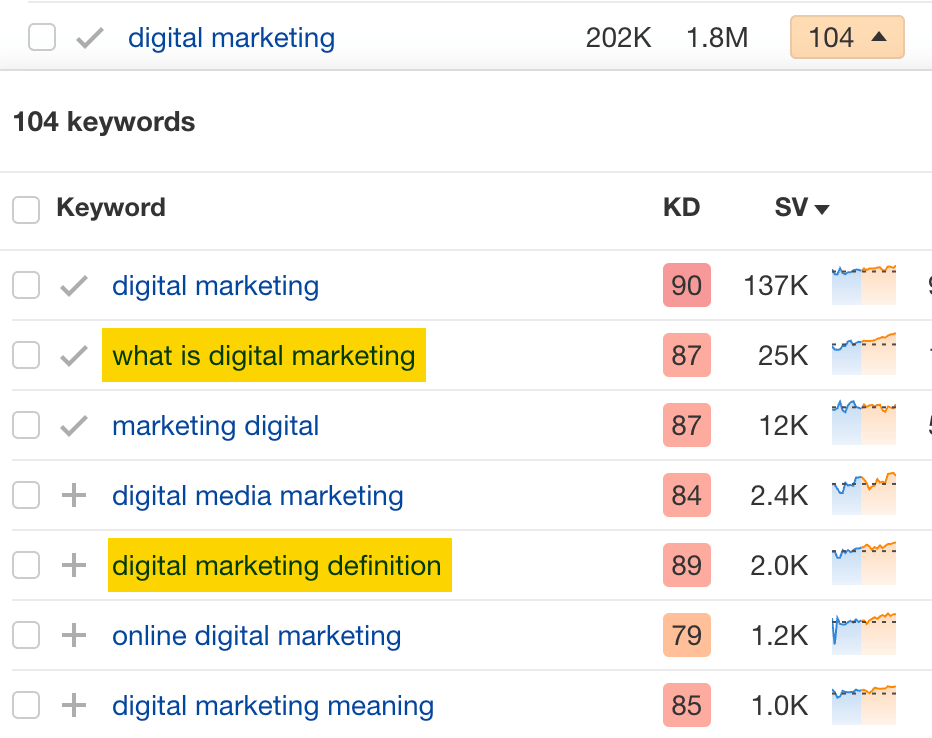

For example, Mailchimp ranks for keywords like “what is digital marketing” and “digital marketing definition.” These and many others get clustered under the Parent Topic of “digital marketing” because people searching for them are all looking for the same thing: a definition of digital marketing. You only need to create one page to potentially rank for all these keywords.
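Keywords Explorer does this clustering for you, but if you’re working from an export that includes a Parent Topic column, grouping is a simple dictionary operation. A sketch with hypothetical rows and a hypothetical helper; the column names are assumptions.

```python
from collections import defaultdict


def cluster_by_parent_topic(rows: list[dict]) -> dict[str, list[str]]:
    """Group keywords under their Parent Topic so each group can be targeted by one page."""
    clusters = defaultdict(list)
    for row in rows:
        clusters[row["Parent Topic"]].append(row["Keyword"])
    return dict(clusters)


rows = [
    {"Keyword": "what is digital marketing", "Parent Topic": "digital marketing"},
    {"Keyword": "digital marketing definition", "Parent Topic": "digital marketing"},
    {"Keyword": "press release format", "Parent Topic": "press release template"},
]
for topic, keywords in cluster_by_parent_topic(rows).items():
    print(f"{topic}: {keywords}")
```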

2. Optimize existing content by filling subtopics

You don’t always need to create new content to rank for competitors’ keywords. Sometimes, you can optimize the content you already have to rank for them.

How do you know which keywords you can do this for? Try this:

- Export your competitor’s keywords

- Paste them into Keywords Explorer

- Click the “Clusters by Parent Topic” tab

- Look for Parent Topics you already have content about

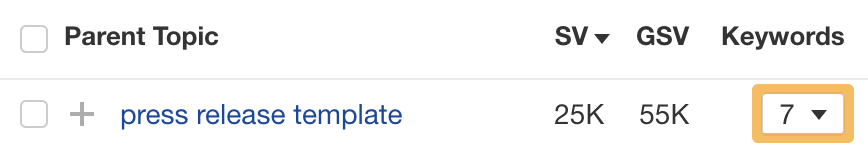

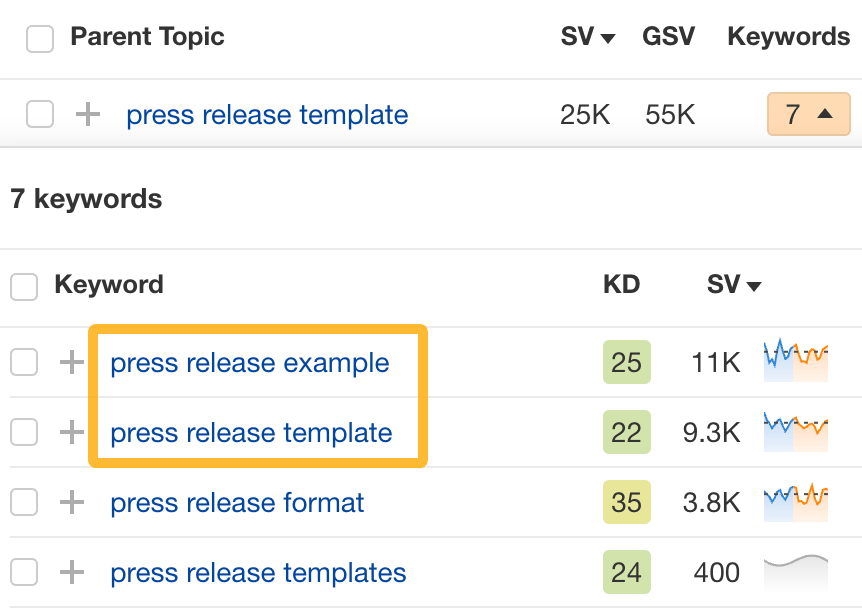

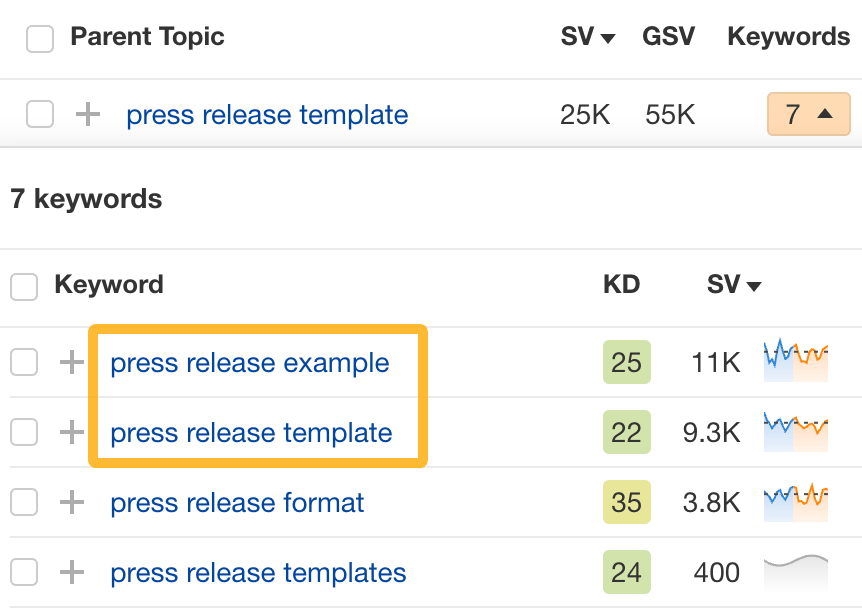

For example, if we analyze our competitor, we can see that seven keywords they rank for fall under the Parent Topic of “press release template.”

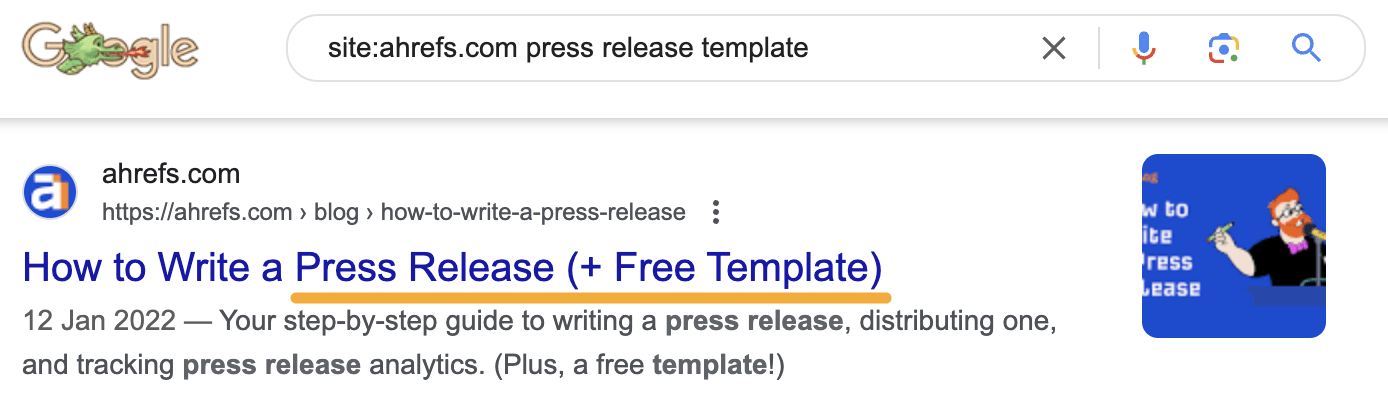

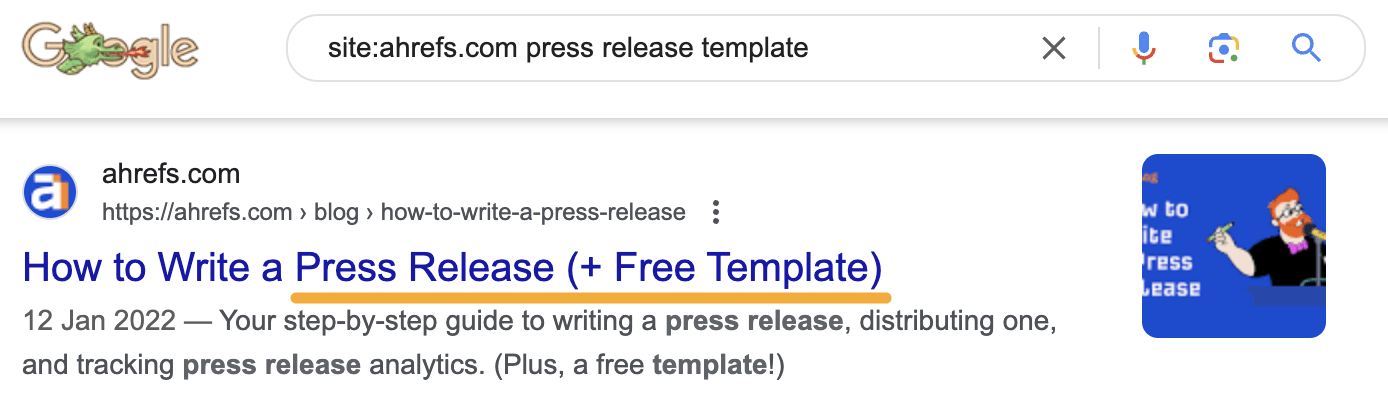

If we search our site, we see that we already have a page about this topic.

If we click the caret and check the keywords in the cluster, we see keywords like “press release example” and “press release format.”

To rank for the keywords in the cluster, we can probably optimize the page we already have by adding sections about the subtopics of “press release examples” and “press release format.”
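Step 4 of the list above, looking for Parent Topics you already have content about, amounts to matching cluster topics against the topics your existing pages cover. A minimal sketch, assuming you keep a hypothetical mapping of your URLs to each page’s main topic; the helper name and URL are illustrative.

```python
def optimization_candidates(clusters: dict[str, list[str]], your_pages: dict[str, str]) -> dict[str, list[str]]:
    """Map each of your URLs to competitor keywords from a cluster the page already covers."""
    topic_to_url = {topic.lower(): url for url, topic in your_pages.items()}
    candidates = {}
    for topic, keywords in clusters.items():
        url = topic_to_url.get(topic.lower())
        if url:  # you already have a page on this Parent Topic, so optimize it instead of creating a new one
            candidates[url] = keywords
    return candidates


clusters = {
    "press release template": ["press release example", "press release format"],
    "digital marketing": ["digital marketing definition"],
}
your_pages = {"https://example.com/blog/press-release-template/": "press release template"}
print(optimization_candidates(clusters, your_pages))
```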

3. Target these keywords with Google Ads

Paid keywords are the simplest—look through the report and see if there are any relevant keywords you might want to target, too.

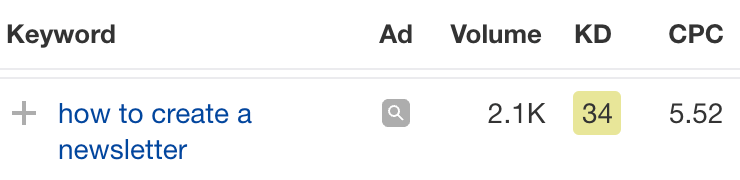

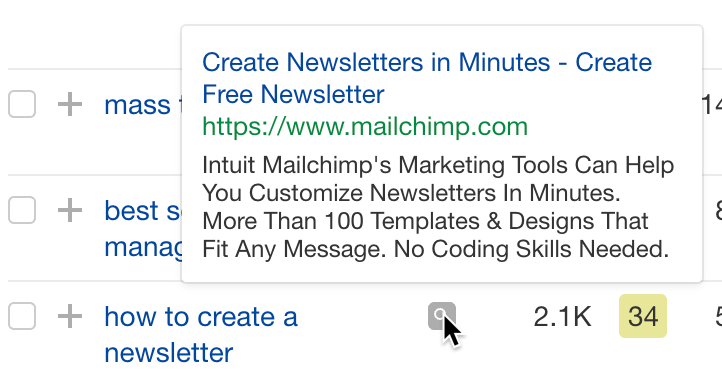

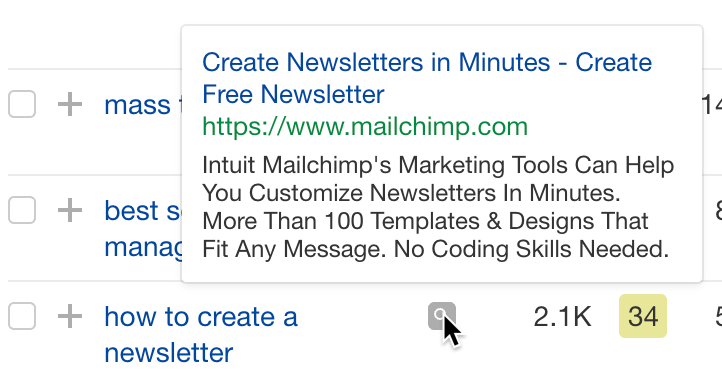

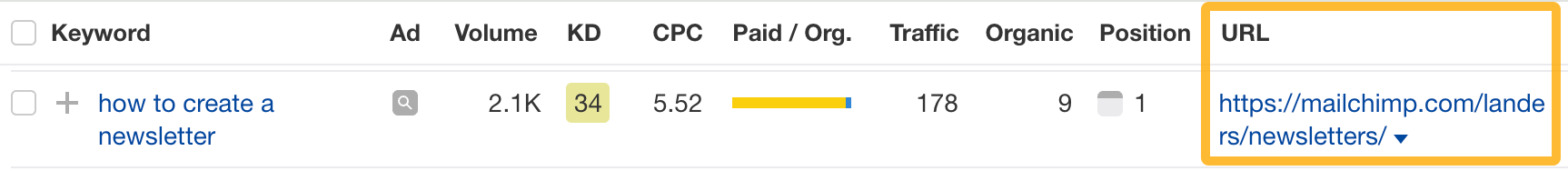

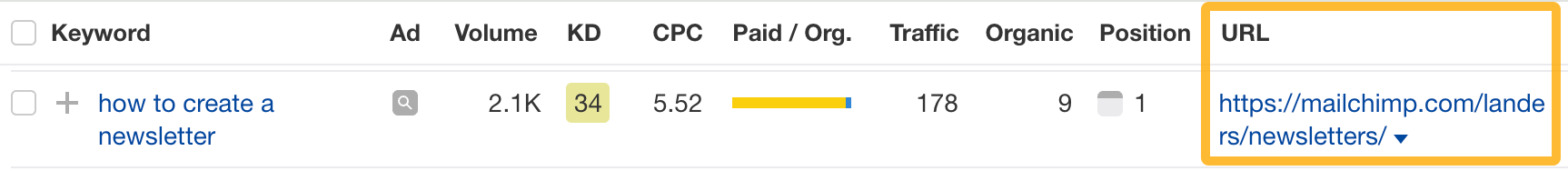

For example, Mailchimp is bidding for the keyword “how to create a newsletter.”

If you’re ConvertKit, you may also want to target this keyword since it’s relevant.

If you decide to target the same keyword via Google Ads, you can hover over the magnifying glass to see the ads your competitor is using.

You can also see the landing page your competitor directs ad traffic to under the URL column.

