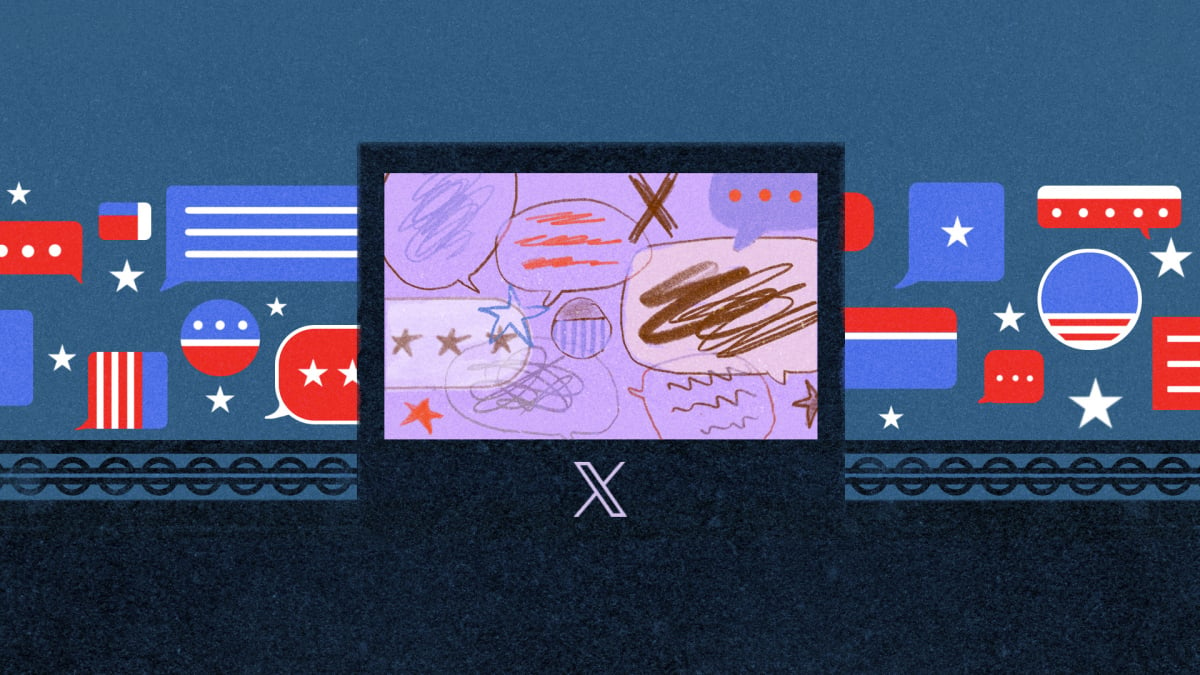

6 big questions users should be asking about political advertising on X/Twitter

Federal election season is around the corner, and with social media platforms taking over as go-to repositories of information, digital campaigning is as important as ever.

On Aug. 29, Elon Musk’s X (formerly Twitter) announced a reversal of its 4-year-old policy banning political campaign ads from the social platform. According to a quietly added “safety” brief and an updated ads policy, the company would begin allowing political advertising from candidates and political parties, which it had halted in 2019 following concerns about disinformation and election tampering via social media sites.

Reversing the Dorsey-era policy was a notable change, with many media outlets and X onlookers noting that the move could be a quick money-making decision on a platform that has steadily lost advertising revenue. It wasn’t entirely a surprise, either, as X eased its restrictions on what it deems “political content” and cause-based ads in January.

The announcement couldn’t have come at a more turbulent time for the platform, raising questions about what users should expect to encounter in light of the platform’s public image as a “digital town square” for unfettered free speech. Since its purchase and rebranding, X has consistently failed third-party reviews of its safety and content moderation policies, becoming the worst-ranked major platform for LGBTQ user safety. Just this month, a social media analysis by Climate Action Against Disinformation failed X on its handling of misinformation, finding the platform has no clear policy or transparency on how it addresses climate denial content, and giving it a score of just one out of 21 points.

This week, the platform removed the option to report posts as “misleading information.” A few days later, despite a commitment to building out a team to monitor the new political space, X made further cuts to its disinformation and election integrity team, The Information reported. Musk seemed to confirm the news, posting to X: “Oh you mean the ‘Election Integrity’ Team that was undermining election integrity? Yeah, they’re gone.” Meanwhile, X CEO Linda Yaccarino countered the statement, telling CNBC that the team was “growing.” According to Insider, X said its Trust and Safety division has been restructured, with responsibilities related to “disinformation, impersonation, and election integrity” being redistributed.

Among the many moderation changes, X has taken on a strategy of slowly allowing formerly restricted content (and accounts) back on the site — a move that was forecast to bring money back into the company’s pockets. In August, X announced a new “sensitivity threshold” for advertisers, allowing brands to select the amount of “sensitive content,” including hate speech, allowed to appear near their ads in a user’s feed.

But while X/Twitter as a brand acknowledges the complications of bringing back accounts sharing unsubstantiated information and hate speech, its owner has repeatedly placed blame elsewhere. Musk has been so combative in response to concerns about X’s content that not even nonprofit watchdogs are safe from retaliation for their criticism.

All this to say: Political campaign advertising on X warrants close inspection.

Peter Adams is the senior vice president of research and design at the News Literacy Project, a nonpartisan education nonprofit combating the spread of misinformation and fostering the growth of news and media literacy. In an interview with Mashable, Adams explained that X’s decision was an expected outcome of a cycle that began with growing concern after the 2016 election.

“The response post-2016 was to tighten everything up very quickly,” Adams says. “I think all social media platforms were caught flat-footed in 2016 by the ways that people were targeting audiences and manipulating ads platforms… And now we’ve seen a slow loosening up post-2020. So, I’m not surprised that [X] is starting to allow political content, but there are real concerns around how people will use promoted content.”

Pushing this content into a social media environment where all posts are designed to look similar — during a period of company-wide reorganization worrying many social and political advocates — is a potentially dangerous misinformation gamble. Onlookers, then, should be equipped to scroll with some questions in mind.

How will X ensure users have relevant information at their fingertips?

While the world of political campaigning is a complex beast, advertisements themselves are designed to appeal to a wide audience: eye-grabbing, relatable, or controversial enough to capture the attention of (hopefully) millions. The public should be equipped to recognize and assess these ads, but social media’s simplicity doesn’t always make that easy, Adams explains.

Adams pointed out that X’s dismantling of its developer API and of the desktop-enabled TweetDeck (now “X Pro”) has further restricted the tools people have used to navigate and research content on the platform.

Under previous terms, third-party developers used API access to build automated accounts that spotted political bots, aggregated threads and conversations, and made the platform more accessible to users with disabilities, among hundreds of other uses. TweetDeck allowed individuals and news organizations to monitor multiple feeds, accounts, and curated Twitter lists all at once, offering an alternative to algorithmically sorted feeds. X has, fortunately, carved out API access for public services, but the remaining restrictions complicate the user experience, Adams says.

“It’s an important sort of transparency tool that has already been done away with, and also a powerful curation tool that folks used to track hashtags and events and groups of people in real time.”

X has also yet to outline its policies on AI use or disclosure in political campaigning. This month, Google released its new policy for use of AI in political ads appearing on Google sites and YouTube; even TikTok has added guidelines for disclosures of AI-generated creator content.

“We haven’t yet experienced an election in the age of Midjourney and other image- and video-based generative AI that have rapidly developed in the last couple of years. Detecting, moderating, and labeling AI-generated visuals, especially, is key,” Adams advises. “People should be looking for every platform to have a clearly stated policy on that, and that protects people from being duped into believing something that’s fabricated.”

Will X address its blue check problem?

X has also whittled away at platform-wide trust by invalidating the meaning behind Twitter’s pioneering blue check verification system, which once signaled to users that they were (at least likely) interacting with an authentic account verified to be who it claimed to be. Musk’s takeover and rebranding shifted verification from an approval model to a paid model, effectively killing the check’s meaning and allowing anyone to sport the badge for a fee.

Users, Adams fears, may still interpret checks as a sign of veracity, and for political campaigning, blue checks may pose another hurdle to a user’s ability to distinguish fact from advertisement.

“For legacy users of the platform, there’s this Pavlovian connection between a blue check and ‘authentic.’ I worry about that habitual, almost subconscious connection between a blue check and prominence or significance. Now we have people with a few dozen followers with blue checks whose posts may gain a little more credibility in some people’s minds because they have that legacy symbol, which was never a symbol of credibility.”

When does political content become a political campaign?

The language of X’s new ads policy, and how it differentiates political content versus overt campaigning, gives Adams pause, as well.

According to the company: “Political content ads are defined as ads that reference a candidate, political party, elected or appointed government official, election, referendum, ballot measure, legislation, regulation, directive, or judicial outcome.”

Political campaigning advertisements, on the other hand, advocate for or against a candidate or political party; appeal directly for votes in an election, referendum, or ballot measure; solicit financial support for an election, referendum, or ballot measure; or are from registered PACs and SuperPACs.

The definitions leave questions to be answered in real time and at high stakes, given that political content ads, political campaigning ads, and other forms of content are subject to different guidelines and standards.

“It seems a bit narrow to me,” says Adams. “That doesn’t seem to me to be broad enough to cover divisive social issues that could be misrepresented. We know from 2016 that folks seeking to divide Americans were very active around those issues. It’s good that the policy explicitly prohibits groups or individuals from placing ads to target audiences in a different country; I just have questions about how that will be enforced.”

The lack of specificity for social issue-based campaigning also stirs up worries of greater political and social polarization, Adams explains, possibly “ramping up antipathy between conservatives and liberals or exacerbating racial animosity in the U.S.”

“I think that the policy has to cover stuff that’s not explicitly related to a campaign or a piece of legislation or an election.”

Can users effectively differentiate between political campaign advertisements and other posts?

Public understanding of advertising disclosures, moderation processes, and media literacy will also determine the new policy’s impact on potential voters and on the platform’s broader information culture.

“From our point of view, we know that the public has a difficult time differentiating between organic posts and paid posts,” explains Adams. “Spotting non-traditional advertising, even things like branded content, can be really tricky for people to pick up on, especially in a setting that’s optimized for scrolling and quick feeds.”

Spotting advertisements online is just step one. In the X Ads Help Center, the platform’s political content FAQs direct U.S. users to a disclosure report form they can complete “to find out details about all political campaigning ads running on our platform.” In April, X came under fire for failing to publicly disclose some political content ads it had run after the January policy change.

“Even if you notice the sponsored posts, taking the time to see who’s behind it and who funded it is a whole other step that very few people are going to take, unless there’s a concerted effort to encourage that. I think the more transparency that platforms can provide around this, the better,” says Adams.

“No matter how many ways advertisers come up with to try to reach people, the questions people should be asking, and the things we should be looking for, largely remain the same. They should ask: What kind of information am I looking at? What is it? What is its primary purpose?” For ads, specifically, that means “keeping up with all the forms that ads can take, and noticing the ways that they are labeled or disclosed, which are often very subtle.”

Clear, accessible labeling of political content and campaign advertisements — notably separate from commercial advertisements — should be incorporated into this policy decision, Adams says.

“Political ads should have a different and more prominent label than just every other kind of sponsored post,” he advises. “And there should be real attention drawn to them. They should link to more information about that ad directly, like to the entry on that ad in the [global advertising transparency center]. So you could click a label and learn more about the ad, see who it’s targeted at, how many people it’s reaching, and so on.”

How transparent will X’s “global advertising transparency center” be in practice?

X’s new policy decision seems to lean heavily on the presence of a yet-to-be-created global advertising transparency center, expanding its current Transparency Center into something potentially more similar to Meta’s own advertising Transparency Center, which centralizes its content moderation policies, transparency reports, and other resources on platform security and misinformation.

But Adams says X’s version, still in its early days, is already too loosely defined. “It’s ironic to be opaque about a transparency center.”

According to an Aug. 29 X Safety blog post on political advertising, the new center’s introduction will be accompanied by an expansion of the “safety and elections teams” dedicated to moderating these paid placements, though many doubt X’s readiness ahead of the presidential election.

But the company has repeatedly reduced staffing for similar moderation efforts, even prior to this week’s reported election integrity team reductions. By mid-November 2022, Musk had cut thousands of staff, including many responsible for content moderation, trust and safety, and civic integrity. In January, the company let go even more content moderation staff across its global offices.

“To turn around and promise that they’re going to grow those teams — why did you shrink it in the first place?” asks Adams. “In the case of content moderation, folks and former staffers have come forward to suggest that there were particularly deep cuts in that area, and particular disregard to monitoring and removing harmful content.”

To achieve actual transparency, the platform must also be clear about exactly what this center will provide to users, not just advertisers, Adams asserts. Will the center include information on all political advertisements, and what data will it include? “What about ads you reject?” Adams suggests. “Could you document the number of rejected ads? Document who is trying to place ads on the platform? When are [ads] being rejected and why? Those kinds of data points are useful and important.”

Can X combat the “infinite scroll” problem?

X will also be up against users’ in-app behaviors, most importantly the addictive structure of “infinite scrolling” common on most, if not all, social platforms. A desktop- or web browser-based transparency center — or ad information that takes users outside of the X app — serves little to no purpose for recreational users incentivized to stay glued to their feeds.

“There would be a disconnect between the people who are seeing most of these ads and people accessing [the center]. The vast majority of these audiences are looking at the platform on mobile. So if they can’t click through to at least some part of the global advertising transparency center from mobile, that would be a mess,” says Adams.

And at a time of declining media and news literacy, quick access to vetting tools, reliable sources, and nonpartisan information is paramount.

“My big concern is that no matter what they say, virtually every platform falls short of its moderation policies, of its stated ideal,” warns Adams. “That’s something for the public, and for the press, to watch — to hold them accountable to their own stated policies. It feels like there’s a real danger that they will just be paying lip service to this, rather than doing what’s best for democracy.”