Meta Releases New Insights into its Evolving Efforts to Detect Coordinated Manipulation Programs

Meta has shared some new insights into its ongoing efforts to combat coordinated misinformation networks operating across its platforms, which became a major focus for the company following the 2016 US Election, and the revelations that Russian-backed teams had sought to sway the opinions of American voters.

As explained by Meta:

“Since 2017, we’ve reported on over 150 influence operations with details on each network takedown so that people know about the threats we see – whether they come from nation states, commercial firms or unattributed groups. Information sharing enabled our teams, investigative journalists, government officials and industry peers to better understand and expose internet-wide security risks, including ahead of critical elections.”

Meta publishes a monthly round-up of the networks that it has detected and removed via automated, user-reported, and other collaborative means, an approach that has widened its net for catching these groups.

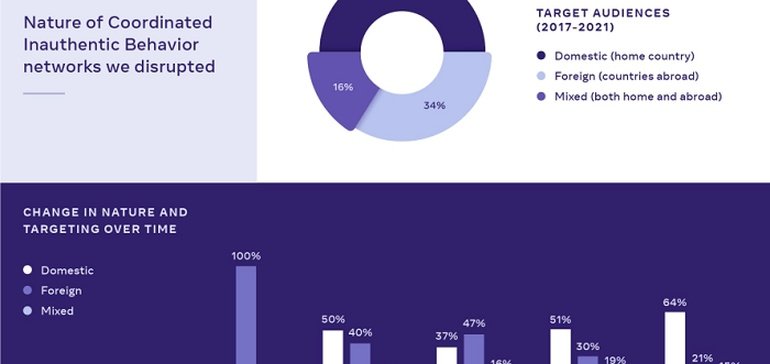

And some interesting trends have emerged in Meta’s enforcement data over time – first off, Meta has provided this overview of where the groups that it has detected and taken action on have originated from.

As you can see, while there have been various groups detected within Russia’s borders, there’s also been a cluster of activity originating from Iran and the surrounding regions, while more recently, Meta has taken action against several groups operating in Mexico.

But even more interesting is Meta’s data on the regions that these groups have been targeting, with a clear shift away from foreign interference, and towards domestic misinformation initiatives.

As shown in these charts, there’s been a significant move away from international pushes, with localized operations becoming more prevalent, at least in terms of what Meta’s teams have been able to detect.

That points to the other side of the research: those looking to use Meta’s platforms for such purposes are always evolving their tactics to avoid detection. It could be that more groups are still operating outside of Meta’s scope, so this may not be a complete view of misinformation campaign trends.

But Meta has been upping its game, and it does appear to be paying off, with more coordinated misinformation pushes being caught out, and more action being taken to hold perpetrators accountable, in an effort to disincentivize similar programs in future.

But really, it’s going to keep happening. Facebook reaches almost 3 billion people, while Instagram has over a billion users (reportedly now over 2 billion, though Meta has not confirmed this), and that’s before you consider WhatsApp, which has more than 2 billion users in its own right. At such scale, each of these platforms offers a massive opportunity for amplification of politically motivated messaging, and as long as bad actors are able to tap into the amplification potential that each app provides, they will continue to seek ways to do so.

Which is a side effect of operating such popular networks, and one that Meta, for a long time, had either overlooked or refused to see. Most social networks were founded on the principle of connecting the world, and bringing people together, and that core ethos is what motivates all of their innovations and processes, with a view to a better society through increased community understanding, in global terms.

That’s an admirable goal, but the flip side is that social platforms also enable those with bad motivations to connect, establish their own networks, and spread their potentially dangerous messaging throughout the same networks.

The clash of idealism and reality has often seemed to flummox social platform CEOs, who, again, would prefer to see the potential good over all else. Crypto networks are now in a similar boat, with massive potential to connect the world, and bring people together, but equally, the opportunity to facilitate money laundering, large-scale scams, tax evasion and potentially worse.

Getting the balance right is difficult, but as we now know, through experience, the impacts of failing to see these gaps can be significant.

Which is why these efforts are so important, and it’s interesting to note both the increasing push from Meta’s teams, and the evolution in tactics from bad actors.

My view? Localized groups, after learning how Russian groups sought to influence the US election, have sought to utilize the same tactics on a local level, meaning that past enforcement has also inadvertently highlighted how Meta’s platforms can be used for such purposes.

That’s likely to continue to be the case moving forward, and hopefully, Meta’s evolving actions will ensure better detection and removal of these initiatives before they can take effect.

You can read Meta’s Coordinated Misinformation Report for December 2021 here.
