Twitter Has Updated its Warning Prompts on Potentially Harmful Tweet Replies

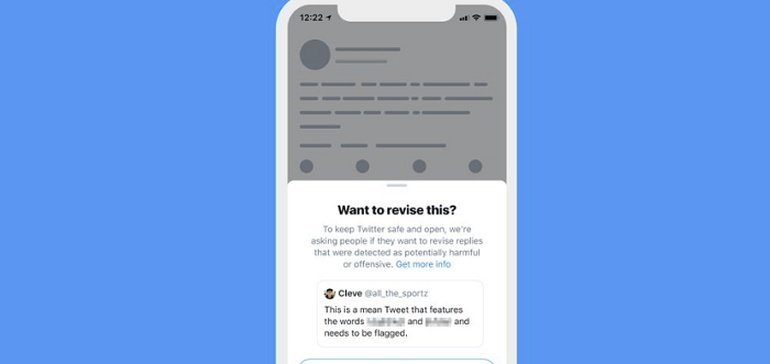

After testing them over the last few months, Twitter has today announced an update to its warning prompts on tweet replies that it detects may contain offensive language.

You had feedback about prompts to revise a reply so we made updates:

▪️ If you see a prompt, it’ll include more info on why you received it

▪️ We’ve improved how we consider the context of the conversation before showing a prompt

This is now testing on Android, iOS, and web. pic.twitter.com/rxdttI1zK2

— Twitter Support (@TwitterSupport) August 10, 2020

As explained by Twitter:

“If someone in the experiment Tweets a reply, our technology scans the text for language we’ve determined may be harmful and may consider how the accounts have interacted previously.”

After its initial trials, Twitter has now improved its detection methods for potentially problematic replies and added more detail to its explanations. That could help users better understand the language that they’re using, and may reduce instances of unintended offense.

Of course, some people see this as overstepping the mark: that Twitter’s trying to control what you say and how you say it, that it infringes on free speech, and so on. But it’s really not. The prompts, sparked by previously reported replies, simply aim to help eliminate misinterpretation and offense by asking users to re-assess.

If you’re happy with your tweet, you can still reply as normal. Instagram uses a similar system for its comments.

As noted in the tweet above, the new process is being tested with selected users on Android, iOS, and the Twitter website.