Unlocking the Power of Generative AI with Qlik’s OpenAI Connectors

Generative AI tools have been publicly available for a couple of years, but this class of AI received huge fanfare towards the end of 2022 as OpenAI launched a chatbot called ChatGPT, based on a large language model (LLM).

Impressive on release, it hinted at how companies large and small will benefit from the power of LLMs. But how do companies harness LLMs in combination with their existing data? That’s where connecting OpenAI capabilities with enterprise databases comes into play – and for that, you need an OpenAI connector.

What Is OpenAI, and What Are OpenAI Connectors?

OpenAI is an AI research organization founded in 2015 as a non-profit laboratory by Elon Musk, Sam Altman, Ilya Sutskever, and others. The organization has developed several powerful artificial intelligence systems, including the chatbot ChatGPT and the image generator DALL-E, both examples of generative AI.

ChatGPT is an LLM chatbot trained on a massive dataset of text and code. It can generate text, translate languages, write different kinds of creative content, and answer your questions in an informative way. It is classified as generative AI – in other words, ChatGPT creates new, fresh content in response to a prompt the user enters into the ChatGPT console.

An OpenAI connector, in turn, is any tool that links OpenAI’s capabilities with another technology platform. Through such a connector, companies can integrate generative AI tools such as ChatGPT into their everyday workloads.

Users can then harness ChatGPT capabilities outside of the ChatGPT message box, and ChatGPT can answer prompts that contain internal enterprise data. We’ll examine why OpenAI connectors matter so much in a later section, but first let’s see what sets generative AI apart from the AI most likely already included in an enterprise analytics platform.

How Does Generative AI Differ From Other AI Such as ML?

Linking enterprise technology platforms to the external services that offer the leading generative AI models matters, because generative AI is fundamentally different from traditional AI in terms of objectives, use cases, and benefits.

Traditional AI is usually designed to perform specific tasks based on predefined rules and patterns, excelling at pattern recognition and analysis but not creating anything new. Examples include voice assistants, recommendation engines, and search algorithms.

Generative AI, on the other hand, creates new and original content by generating fresh text, images, music, or computer code. For example, OpenAI’s GPT-4 language model can produce human-like text that is almost indistinguishable from text written by a person.

While traditional AI powers chatbots, recommendation systems, and predictive analytics, generative AI has vast potential in art and design, marketing, education, and research, revolutionizing fields where creation and innovation are key. Because the two kinds of model work so differently, their use cases differ too.

Generative AI in the Enterprise

Since ChatGPT’s release there has been intense focus on the benefits of generative AI in the business environment. Though some of the noise around generative AI is hype, there are also plenty of real-world enterprise use cases that can deliver genuine value.

McKinsey & Company’s analysis of a range of use cases suggests that generative AI will add trillions to the world’s economy. It will take time to see exactly where generative AI proves most impactful, but initial indications suggest that it will deliver efficiency gains or new capabilities across a range of business functions.

For many of these use cases, of course, companies need to find a way to expose generative AI capabilities to their internal data sets – going beyond the ability to paste simple information into a ChatGPT conversation. That is where OpenAI connectors come in.

Looking at the New AI Connectors for Qlik

Qlik has a long track record of offering cutting-edge tools to enhance the data analytics platform it offers businesses. As Qlik celebrates its 30th anniversary, we can see an expansion of the company’s machine learning and AI capabilities to ensure customers can leverage the latest industry innovations.

In June, Qlik released two OpenAI connectors. First, the Qlik OpenAI Analytics Connector allows real-time access to generative content in Qlik Sense apps by securely integrating natural language insights from OpenAI into analytics apps. Users can incorporate third-party data into existing models, ask ChatGPT questions using Qlik data, and more.

Second, the Qlik OpenAI Connector for Application Automation helps developers improve their workflows by using AI and LLM-generated content to create expressions, commands, or scripts. Use cases include sentiment analysis across Qlik data, translation, and summarizing external text for internal audiences, for example for market analysis.
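To make the sentiment analysis use case more concrete, here is a minimal sketch of the kind of request a connector might assemble on the user's behalf. It is not Qlik's implementation: the helper name `build_sentiment_request`, the sample comments, and the choice of the `gpt-4` model are all assumptions for illustration. The only thing taken from OpenAI is the general shape of a Chat Completions request body (a `model` plus a list of `messages` with `role` and `content`). The sketch only builds the request and does not send it.

```python
import json


# Hypothetical helper: not part of Qlik's connectors or of any OpenAI library.
def build_sentiment_request(comments, model="gpt-4"):
    """Build a Chat Completions style request body asking for one label per comment."""
    # Number the comments so each returned label can be matched back to its row.
    numbered = "\n".join(
        f"{i}. {text}" for i, text in enumerate(comments, start=1)
    )
    return {
        "model": model,  # assumed model name; substitute one your account offers
        "temperature": 0,  # favour repeatable labels over creative variation
        "messages": [
            {
                "role": "system",
                "content": (
                    "Classify each numbered comment as positive, negative, "
                    "or neutral. Reply with one label per line."
                ),
            },
            {"role": "user", "content": numbered},
        ],
    }


# Made-up rows standing in for a text field loaded from an analytics app.
sample_comments = [
    "Delivery was fast and the team was helpful.",
    "The invoice was wrong twice.",
]
request_body = build_sentiment_request(sample_comments)
print(json.dumps(request_body, indent=2))
```

Numbering the comments is a design choice: it lets the labels in the reply be joined back to the original rows, which matters once the result is loaded alongside existing data.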

How Do OpenAI Connectors Work in Practice?

Thanks to Qlik’s open platform and its integration capabilities, which now include OpenAI, the company’s clients and partners have many ways to employ generative AI in their analytics. This includes having the resources to create and develop their own cutting-edge solutions.

It also gives them full governance and control over the interaction with OpenAI, including the data they transmit and how they choose to use it within Qlik Cloud.

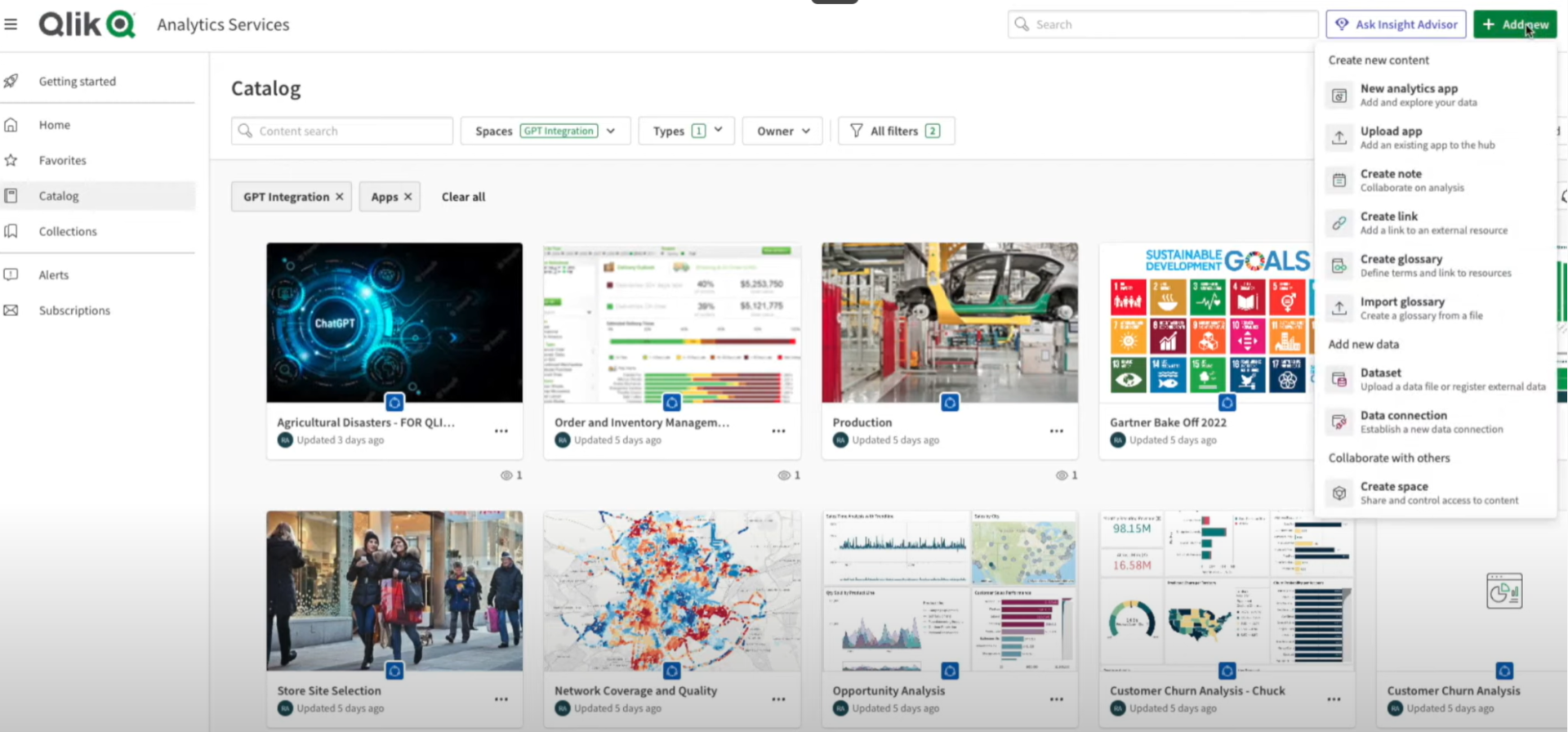

This YouTube video (snapshot above) demonstrates the power of generative AI by using ChatGPT to extract valuable insights directly from Qlik Cloud. The example looks at the top 10 agricultural disasters and shows how, through a few simple steps, a user can trigger a natural language query in which Qlik communicates with ChatGPT.

Qlik can turn the ChatGPT response into a data set and drop it into the catalog, where it is fully available to anyone who has access to the data in that space. In addition, because Qlik has imported the ChatGPT response, there is also a description of what that data is, so Qlik can understand the new data set within the context of your organization. As the business use cases of generative AI become more tangible, it’s a fitting development to mark 30 years of innovation at Qlik.
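The idea of turning a model response into a data set can be sketched in a few lines. This is an illustration under stated assumptions, not a description of how Qlik does it: it assumes the model was asked to reply with a JSON array of flat objects, and the helper name `response_to_csv` and the placeholder reply are hypothetical.

```python
import csv
import io
import json


# Hypothetical helper: illustrates the idea only, not Qlik's implementation.
def response_to_csv(response_text):
    """Convert a JSON array of flat objects, given as text, into CSV text."""
    rows = json.loads(response_text)
    if (
        not isinstance(rows, list)
        or not rows
        or not all(isinstance(row, dict) for row in rows)
    ):
        raise ValueError("expected a non-empty JSON array of objects")
    # Use the first object's keys as the column headers.
    fieldnames = list(rows[0].keys())
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=fieldnames,
        extrasaction="ignore",  # drop keys that the first row did not have
        lineterminator="\n",
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Placeholder text standing in for a model reply; the values are made up.
sample_reply = (
    '[{"event": "Example A", "year": 1990}, '
    '{"event": "Example B", "year": 2005}]'
)
csv_text = response_to_csv(sample_reply)
print(csv_text)
```

Validating the reply before writing it out is deliberate: a model can return free text instead of the requested structure, and it is better to fail loudly than to load a malformed table into a shared space.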

Generative AI Brings New Capabilities to the Enterprise

Writing database scripts can be challenging for users, and one of the advantages of OpenAI connectors is that users can now write queries in natural language. Apply that capability to enterprise data, combine it with the charts generated by Qlik’s Insight Advisor, and the opportunities become endless.

And this is just the start, so I would encourage you to experiment with the OpenAI connectors yourself. Qlik provides a simple tutorial here, or if you’re familiar with JSON you can view a more technical example here. Happy experimenting! Many thanks, Sally

About the Author

A highly experienced chief technology officer, professor in advanced technologies, and a global strategic advisor on digital transformation, Sally Eaves specialises in the application of emergent technologies, notably AI, 5G, cloud, security, and IoT disciplines, for business and IT transformation, alongside social impact at scale, especially from sustainability and DEI perspectives.

An international keynote speaker and author, Sally was an inaugural recipient of the Frontier Technology and Social Impact award, presented at the United Nations, and has been described as the “torchbearer for ethical tech”, founding Aspirational Futures to enhance inclusion, diversity, and belonging in the technology space and beyond. Sally is also the chair for the Global Cyber Trust at GFCYBER.