Google’s New Technology Helps Create Powerful Ranking Algorithms

Google has announced the release of improved technology that makes it easier and faster to research and develop new algorithms that can be deployed quickly.

This gives Google the ability to rapidly create new anti-spam algorithms, improved natural language processing algorithms and ranking-related algorithms, and to get them into production faster than ever.

Improved TF-Ranking Coincides with Dates of Recent Google Updates

This is of interest because Google rolled out several spam-fighting algorithms and two core algorithm updates in June and July 2021. Those updates directly followed the May 2021 publication of this new technology.

The timing could be coincidental but considering everything that the new version of Keras-based TF-Ranking does, it may be important to familiarize oneself with it in order to understand why Google has increased the pace of releasing new ranking-related algorithm updates.

New Version of Keras-based TF-Ranking

Google announced a new version of TF-Ranking that can be used to improve neural learning to rank algorithms as well as natural language processing algorithms like BERT.

It’s a powerful way to create new algorithms and to amplify existing ones, so to speak, and to do it in a way that is incredibly fast.

TensorFlow Ranking

According to Google, TensorFlow is a machine learning platform.

In a YouTube video from 2019, the first version of TensorFlow Ranking was described as:

“The first open source deep learning library for learning to rank (LTR) at scale.”

The innovation of the original TF-Ranking platform was that it changed how relevant documents were ranked.

Previously, relevant documents were compared to each other in what is called pairwise ranking. The probability of one document being relevant to a query was compared to the probability of another document being relevant.

This was a comparison between pairs of documents and not a comparison of the entire list.

The innovation of TF-Ranking is that it enabled the comparison of the entire list of documents at once, which is called multi-item scoring. This approach allows better ranking decisions.
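The difference between comparing pairs and comparing a whole list can be illustrated with two textbook loss functions. This is a minimal sketch in plain Python, not TF-Ranking's own code and not its multi-item scoring function: the function names, scores and labels are made up for illustration, and the two losses are simply common examples of a pair-based and a list-based objective.

```python
import math


def pairwise_logistic_loss(scores, labels):
    """Average logistic loss over every pair where item i is more relevant than item j."""
    total, pairs = 0.0, 0
    for i in range(len(scores)):
        for j in range(len(scores)):
            if labels[i] > labels[j]:
                # Penalize the pair when the more relevant item is not scored higher.
                total += math.log(1.0 + math.exp(-(scores[i] - scores[j])))
                pairs += 1
    return total / pairs if pairs else 0.0


def listwise_softmax_loss(scores, labels):
    """Softmax cross-entropy computed over the entire list in one step."""
    label_sum = sum(labels)
    if label_sum == 0:
        return 0.0
    # Log of the softmax normalizer, shifted by the max score for numerical stability.
    max_score = max(scores)
    log_norm = max_score + math.log(sum(math.exp(s - max_score) for s in scores))
    return -sum((y / label_sum) * (s - log_norm) for s, y in zip(scores, labels))


# Hypothetical example: three documents, only the first one is relevant.
labels = [1, 0, 0]
good_scores = [3.0, 1.0, 0.0]   # relevant document scored highest
bad_scores = [0.0, 1.0, 3.0]    # relevant document scored lowest

print(pairwise_logistic_loss(good_scores, labels), pairwise_logistic_loss(bad_scores, labels))
print(listwise_softmax_loss(good_scores, labels), listwise_softmax_loss(bad_scores, labels))
```

Both losses are lower when the relevant document is scored highest. The pairwise version only ever looks at two documents at a time, while the listwise version normalizes over every score in the list.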

Improved TF-Ranking Allows Fast Development of Powerful New Algorithms

Google’s article published on their AI Blog says that the new TF-Ranking is a major release that makes it easier than ever to set up learning to rank (LTR) models and get them into live production faster.

This means that Google can create new algorithms and add them to search faster than ever.

The article states:

“Our native Keras ranking model has a brand-new workflow design, including a flexible ModelBuilder, a DatasetBuilder to set up training data, and a Pipeline to train the model with the provided dataset.

These components make building a customized LTR model easier than ever, and facilitate rapid exploration of new model structures for production and research.”

TF-Ranking BERT

When an article or research paper states that the results were only marginally better, offers caveats and says that more research is needed, that is an indication that the algorithm under discussion might not be in use, either because it is not ready or because it is a dead end.

That is not the case with TFR-BERT, a combination of TF-Ranking and BERT.

BERT is a machine learning approach to natural language processing. It’s a way to understand search queries and web page content.

BERT is one of the most important updates to Google and Bing in the last few years.

The article states that combining TF-Ranking with BERT to optimize the ordering of list inputs generated “significant improvements.”

This statement that the results were significant is important because it raises the probability that something like this is currently in use.

The implication is that Keras-based TF-Ranking made BERT more powerful.

According to Google:

“Our experience shows that this TFR-BERT architecture delivers significant improvements in pretrained language model performance, leading to state-of-the-art performance for several popular ranking tasks…”

TF-Ranking and GAMs

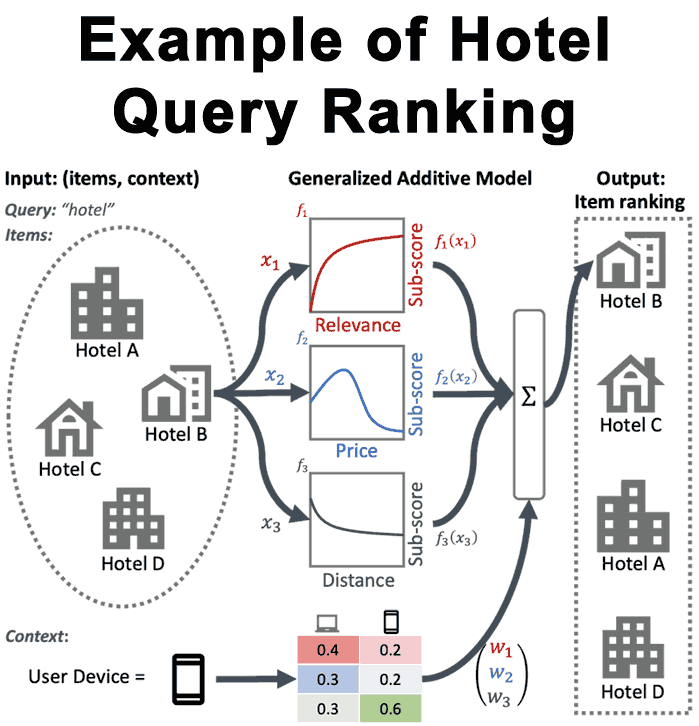

There’s another kind of algorithm, called Generalized Additive Models (GAMs), that TF-Ranking also improves, producing a version that is more powerful than the original.

One of the things that makes this algorithm important is that it is transparent: everything that goes into generating the ranking can be seen and understood.

Google explained the importance of transparency like this:

“Transparency and interpretability are important factors in deploying LTR models in ranking systems that can be involved in determining the outcomes of processes such as loan eligibility assessment, advertisement targeting, or guiding medical treatment decisions.

In such cases, the contribution of each individual feature to the final ranking should be examinable and understandable to ensure transparency, accountability and fairness of the outcomes.”

The problem with GAMs is that it wasn’t known how to apply this technology to ranking-type problems.

To solve this problem and use GAMs in a ranking setting, TF-Ranking was used to create neural ranking Generalized Additive Models (GAMs), which make it more transparent how web pages are ranked.

Google calls this Interpretable Learning-to-Rank.

Here’s what the Google AI article says:

“To this end, we have developed a neural ranking GAM — an extension of generalized additive models to ranking problems.

Unlike standard GAMs, a neural ranking GAM can take into account both the features of the ranked items and the context features (e.g., query or user profile) to derive an interpretable, compact model.

For example, in the figure below, using a neural ranking GAM makes visible how distance, price, and relevance, in the context of a given user device, contribute to the final ranking of the hotel.

Neural ranking GAMs are now available as a part of TF-Ranking…”
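The additive idea in the quote can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not Google's neural ranking GAM: the per-feature functions, the device weights and the hotel data below are all hypothetical stand-ins for what a trained model would learn. The point is that the final score is a sum of per-feature contributions, weighted by context, so each contribution can be inspected on its own.

```python
# Hypothetical per-feature sub-models. In a real neural ranking GAM each of
# these would be a small learned network that sees only its own feature.
FEATURE_FUNCS = {
    "distance": lambda km: -0.5 * km,    # farther away lowers the score
    "price": lambda usd: -0.01 * usd,    # more expensive lowers the score
    "relevance": lambda r: 2.0 * r,      # more relevant raises the score
}

# Hypothetical context weights: how much each feature matters per device.
CONTEXT_WEIGHTS = {
    "mobile": {"distance": 2.0, "price": 1.0, "relevance": 1.0},
    "desktop": {"distance": 0.5, "price": 1.0, "relevance": 1.0},
}


def explain_score(item, device):
    """Return the total score and the contribution of each individual feature."""
    weights = CONTEXT_WEIGHTS[device]
    contributions = {
        name: weights[name] * func(item[name])
        for name, func in FEATURE_FUNCS.items()
    }
    return sum(contributions.values()), contributions


def rank(items, device):
    """Sort items from highest to lowest total score for the given device."""
    return sorted(items, key=lambda it: explain_score(it, device)[0], reverse=True)


# Made-up hotels for illustration.
hotels = [
    {"name": "near", "distance": 1.0, "price": 200, "relevance": 0.8},
    {"name": "far", "distance": 3.0, "price": 120, "relevance": 0.9},
]

for device in ("mobile", "desktop"):
    print(device, [h["name"] for h in rank(hotels, device)])
    for h in hotels:
        print("  ", h["name"], explain_score(h, device))
```

With these made-up numbers the nearby hotel ranks first on mobile, where distance is weighted heavily, and the cheaper, more distant hotel ranks first on desktop. The printed contributions show exactly which feature caused the change.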

I asked Jeff Coyle, co-founder of AI content optimization technology MarketMuse (@MarketMuseCo), about TF-Ranking and GAMs.

Jeffrey, who has a computer science background as well as decades of experience in search marketing, noted that GAMs are an important technology and that improving them was an important event.

Mr. Coyle shared:

“I’ve spent significant time researching the neural ranking GAMs innovation and the possible impact on context analysis (for queries) which has been a long-term goal of Google’s scoring teams.

Neural RankGAM and related technologies are deadly weapons for personalization (notably user data and context info, like location) and for intent analysis.

With keras_dnn_tfrecord.py available as a public example, we get a glimpse at the innovation at a basic level.

I recommend that everyone check out that code.”

Outperforming Gradient Boosted Decision Trees (GBDTs)

Beating the standard in an algorithm is important because it means that the new approach is an achievement that improves the quality of search results.

In this case the standard is gradient boosted decision trees (GBDTs), a machine learning technique that has several advantages.

But Google explains that GBDTs also have disadvantages:

“GBDTs cannot be directly applied to large discrete feature spaces, such as raw document text. They are also, in general, less scalable than neural ranking models.”

In a research paper titled “Are Neural Rankers still Outperformed by Gradient Boosted Decision Trees?”, the researchers state that neural learning to rank models are “by a large margin inferior” to tree-based implementations.

Google’s researchers used the new Keras-based TF-Ranking to produce what they called the Data Augmented Self-Attentive Latent Cross (DASALC) model.

DASALC is important because it is able to match or surpass the current state-of-the-art baselines:

“Our models are able to perform comparatively with the strong tree-based baseline, while outperforming recently published neural learning to rank methods by a large margin. Our results also serve as a benchmark for neural learning to rank models.”

Keras-based TF-Ranking Speeds Development of Ranking Algorithms

The important takeaway is that this new system speeds up the research and development of new ranking systems, including systems that identify spam so that it can be ranked out of the search results.

The article concludes:

“All in all, we believe that the new Keras-based TF-Ranking version will make it easier to conduct neural LTR research and deploy production-grade ranking systems.”

Google has been innovating at an increasingly fast rate these past few months, with several spam algorithm updates and two core algorithm updates over the course of two months.

These new technologies may be why Google has been rolling out so many new algorithms to improve spam fighting and ranking websites in general.

Citations

Google AI Blog Article

Advances in TF-Ranking

Google’s New DASALC Algorithm

Are Neural Rankers still Outperformed by Gradient Boosted Decision Trees?

TensorFlow Ranking v0.4.0 GitHub page

https://github.com/tensorflow/ranking/releases/tag/v0.4.0
