MARKETING

6 Best Data Orchestration Tools to Transform Your Business

Data exists everywhere!

We use data every day — in different forms — to make informed decisions. It could be through counting your steps on a fitness app or tracking the estimated delivery date of your package. In fact, the data volume from internet activity alone is expected to reach an estimated 180 zettabytes by 2025.

Companies use data the same way but on a larger scale. They collect information about their target audiences from different sources, such as websites, CRM systems, and social media. This data is then analyzed and shared across various teams, systems, external partners, and vendors.

Given the large volumes of data they handle, organizations need reliable automation tools to process and analyze that data before use, and data orchestration tools are among the most important of these.

What Are Data Orchestration and Data Pipelines

Data orchestration is the automation of data pipeline workflows. To break it down, let’s first look at what goes on in a data pipeline.

Within the pipeline, data moves from its raw state to its final form through a series of ETL workflows. ETL stands for Extract-Transform-Load: the process collects data from multiple sources (extract), cleans and packages it (transform), and saves it to a database or warehouse (load), where it is ready to be analyzed. Before orchestration tools, data engineers had to create, schedule, and manually monitor the progress of data pipelines. With data orchestration, each step in the workflow is automated.
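As a rough illustration of the three ETL stages, here is a minimal Python sketch using only the standard library. It assumes two hypothetical CSV exports (one from a website form, one from a CRM) and uses an in-memory SQLite database as a stand-in for a warehouse; the table and column names are made up for this example and are not tied to any tool in this article.

```python
import csv
import io
import sqlite3

# Hypothetical raw exports from two sources (a website form and a CRM).
WEBSITE_CSV = """email,signup_date
 Alice@Example.com ,2024-01-05
bob@example.com,2024-01-06
"""
CRM_CSV = """email,signup_date
alice@example.com,2024-01-05
carol@example.com,
"""


def extract(raw_sources):
    """Extract: read rows from every source into plain dicts."""
    rows = []
    for raw in raw_sources:
        rows.extend(csv.DictReader(io.StringIO(raw)))
    return rows


def transform(rows):
    """Transform: normalize emails, drop incomplete rows, de-duplicate."""
    seen = set()
    clean = []
    for row in rows:
        email = row["email"].strip().lower()
        date = row["signup_date"].strip()
        if not email or not date or email in seen:
            continue  # skip incomplete or duplicate records
        seen.add(email)
        clean.append((email, date))
    return clean


def load(records, conn):
    """Load: write the cleaned records into a warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS contacts (email TEXT PRIMARY KEY, signup_date TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO contacts VALUES (?, ?)", records)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for a real data warehouse
    load(transform(extract([WEBSITE_CSV, CRM_CSV])), conn)
    print(conn.execute("SELECT * FROM contacts ORDER BY email").fetchall())
```

Running it prints the two cleaned contacts: the duplicate Alice record is merged and Carol’s row is dropped because its signup date is missing. An orchestration tool would run steps like these on a schedule and watch them for failures instead of relying on someone to trigger them by hand.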

Data orchestration collects and organizes siloed data from multiple storage points and makes it accessible to, and ready for, data analysis tools. With this automation, businesses can streamline data from numerous sources to make informed decisions.

Data orchestration is a game-changer in the data technology environment. Growing cloud adoption, driven by today’s data-driven company culture, has pushed companies around the world to embrace data orchestration.

Why is Data Orchestration Important

Data orchestration is the solution to the time-consuming management of data, giving organizations a way to keep their stacks connected while data flows smoothly.

“Data orchestration provides the answer to making your data more useful and available. But ultimately, it goes beyond simple data management. In the end, orchestration is about using data to drive actions, to create real business value.”

— Steven Hillion, Head of Data at Astronomer

As an organization’s activities grow along with its customer base, it becomes challenging to cope with the high volume of incoming data. Marketing is one example: with the increased reliance on customer segmentation for successful campaigns, multiple data sources can make it difficult to segment your prospects quickly and accurately.

Here’s how data orchestration can help:

- Unifies disparate data sources. Data orchestration automates the process of gathering and preparing data from multiple sources, reducing the risk of human error.

- Breaks down silos. Many businesses keep their data in silos, separated by location, region, department, or cloud application. Data orchestration breaks down these silos and makes the data accessible across the organization.

- Removes data bottlenecks. By automating data analysis and preparation, data orchestration eliminates the bottlenecks caused by waiting for these steps to be done manually.

- Enforces data governance. A data orchestration tool connects your data systems across geographical regions with different data-privacy rules and regulations. It helps ensure that the data collected complies with laws such as GDPR and CCPA.

- Gives faster insights. Automating each stage of the data pipeline gives data engineers and analysts more time to draw actionable insights and support data-based decision-making.

- Provides real-time information. Data can be extracted and processed the moment it is created, enabling real-time analysis and storage.

- Scalability. Automation of the workflow helps organizations scale data use through synchronization across data silos.

- Monitoring the workflow progress. With data orchestration, the data pipeline is equipped with alerts to identify and fix issues as soon as they occur.
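To show what an orchestrator automates, the sketch below runs hypothetical pipeline steps in dependency order, retries a failing step, and raises an alert once retries are exhausted. It relies on Python’s standard-library `graphlib.TopologicalSorter` (Python 3.9+); `run_pipeline`, `alert`, and the task names are illustrative assumptions, and real tools add scheduling, persistence, parallelism, and a UI on top of this basic idea.

```python
import logging
from graphlib import TopologicalSorter

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("orchestrator")


def alert(task_name, error):
    """Hypothetical alert hook; a real tool might email, page, or post to chat."""
    log.error("ALERT: task %s failed: %s", task_name, error)


def run_pipeline(tasks, dependencies, max_retries=2):
    """Run tasks in dependency order with retries and an alert on final failure.

    tasks: task name -> zero-argument callable
    dependencies: task name -> set of upstream task names
    Returns the names of tasks that succeeded, in the order they ran.
    """
    completed = []
    for name in TopologicalSorter(dependencies).static_order():
        # One initial attempt plus up to max_retries retries.
        for attempt in range(1, max_retries + 2):
            try:
                tasks[name]()
            except Exception as exc:
                if attempt > max_retries:
                    alert(name, exc)
                    return completed  # downstream tasks would lack their inputs
                log.warning("%s failed on attempt %d, retrying", name, attempt)
            else:
                completed.append(name)
                log.info("%s succeeded on attempt %d", name, attempt)
                break
    return completed


if __name__ == "__main__":
    calls = {"extract": 0}

    def flaky_extract():
        # Fails on the first call only, to exercise the retry path.
        calls["extract"] += 1
        if calls["extract"] == 1:
            raise ConnectionError("source temporarily unavailable")

    tasks = {"extract": flaky_extract, "transform": lambda: None, "load": lambda: None}
    deps = {"transform": {"extract"}, "load": {"transform"}}
    print(run_pipeline(tasks, deps))  # ['extract', 'transform', 'load']
```

The `flaky_extract` task fails once and succeeds on retry, so all three steps complete. Stopping at the first task that exhausts its retries is a deliberate simplification: downstream steps that depend on missing data are simply not run.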

Best Data Orchestration Tools

Data orchestration tools clean, sort, arrange, and publish your data into a data store. When choosing data orchestration tools for your business, two main things come to mind: what they can do and how much they cost.

Let’s look at some of the best data orchestration tools for your business.

1. Shipyard

Shipyard is a modern data orchestration platform that helps data engineers connect and automate tools and build reliable data operations. It creates powerful data workflows that extract, transform, and load data from a data warehouse to other tools to automate business processes.

The tool connects data stacks with 50+ low-code integrations. It orchestrates work between multiple external systems like AWS Lambda, Cloud Functions, dbt Cloud, and Zapier. With a few simple inputs from these integrations, you can build data pipelines that connect to your data stack in minutes.

Some of Shipyard’s key features are:

- Built-in notifications and error-handling

- Automatic scheduling and on-demand triggers

- Shareable, reusable blueprints

- Isolated, scaling resources for each solution

- Detailed historical logging

- Streamlined UI for management

- In-depth admin controls and permissions

Pricing:

Shipyard currently offers two plans:

- Developer — Free

- Team — Starting from $50 per month

2. Luigi

Developed by Spotify, Luigi builds data pipelines in Python and handles dependency resolution, visualization, workflow management, failures, and command-line integration. If you need an all-Python tool that takes care of workflow management in batch processing, then Luigi is perfect for you.

It’s open source and used by famous companies like Stripe, Giphy, and Foursquare. Giphy says they love Luigi for “being a powerful, simple-to-use Python-based task orchestration framework”.

Some of its key features are:

- Python-based

- Task-and-target semantics to define dependencies

- Models pipelines as a directed graph of task dependencies

- Lightweight, so it requires less management time

- Allows users to define tasks, commands, and conditional paths

- Data pipeline visualization
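Luigi’s task-and-target semantics mean a task declares what it requires and what output (target) it produces, and a task whose target already exists counts as complete and is skipped. The plain-Python sketch below imitates that idea; the method names echo Luigi’s `requires()`, `output()`, and `run()`, but the `Task` base class, `build` function, and the two example tasks are hypothetical and are not Luigi’s actual API.

```python
import os
import tempfile


class Task:
    """Minimal stand-in for a Luigi-style task (not the real luigi.Task)."""

    def requires(self):
        return []  # upstream tasks this one depends on

    def output(self):
        raise NotImplementedError  # path of the target this task produces

    def run(self):
        raise NotImplementedError

    def complete(self):
        # Task-and-target semantics: a task is done if its target exists.
        return os.path.exists(self.output())


def build(task, ran=None):
    """Run a task after its incomplete dependencies; skip completed ones."""
    ran = [] if ran is None else ran
    if task.complete():
        return ran
    for dep in task.requires():
        build(dep, ran)
    task.run()
    ran.append(type(task).__name__)
    return ran


WORKDIR = tempfile.mkdtemp()  # hypothetical scratch directory for targets


class ExtractOrders(Task):
    def output(self):
        return os.path.join(WORKDIR, "orders.csv")

    def run(self):
        with open(self.output(), "w") as f:
            f.write("order_id,amount\n1,20\n2,35\n")


class TotalRevenue(Task):
    def requires(self):
        return [ExtractOrders()]

    def output(self):
        return os.path.join(WORKDIR, "revenue.txt")

    def run(self):
        with open(self.requires()[0].output()) as f:
            amounts = [int(line.split(",")[1]) for line in f.readlines()[1:]]
        with open(self.output(), "w") as f:
            f.write(str(sum(amounts)))


if __name__ == "__main__":
    print(build(TotalRevenue()))  # ['ExtractOrders', 'TotalRevenue']
    print(build(TotalRevenue()))  # [] because both targets already exist
```

The second `build` call does nothing because both output files already exist, which is what makes re-running a partially failed pipeline cheap. Real Luigi tasks subclass `luigi.Task` and return targets such as `luigi.LocalTarget` from `output()`.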

Pricing:

Luigi is an open-source tool, so it’s free.

3. Apache Airflow

If you’re looking to schedule automated workflows through the command line, look no further than Apache Airflow. It’s a free and open-source software tool that facilitates workflow development, scheduling, and monitoring.

Many users prefer Apache Airflow for its open-source community and large library of pre-built integrations with third-party data processing tools (for example, Apache Spark and Hadoop). Its flexibility when building workflows is another reason it’s a customer favorite.

Some of its key features are:

- Easy to use

- Robust integrations with cloud platforms such as AWS and Microsoft Azure

- Streamlined UI that monitors, schedules, and manages your workflows

- Standard Python features give you full flexibility when building your workflows

- Apache Airflow 2.0 introduced features such as smart sensors, a full REST API, the TaskFlow API, and UI/UX improvements.

Pricing:

Free

4. Keboola

Keboola is a data orchestration tool built for enterprises and managed by a team of highly specialized engineers. It enables teams to focus on collaboration and get insights through automated workflows, collaborative workspaces, and secure experimentation.

The platform is user-friendly, so non-technical people can also easily build their data orchestration pipelines without the need for cloud engineering skills. It has a pay-as-you-go plan that scales with your needs and is integrated with the most commonly used tools.

Some of its key features are:

- Runs transformations in Python, SQL, and R

- No-code data pipeline automation

- Offers various pre-built integrations

- Data lineage and version control, so you don’t need to switch platforms as your data grows

Pricing:

Keboola currently has two plans:

5. Fivetran

Fivetran has an in-house orchestration system that powers the workflows required to extract and load data safely and efficiently. It enables data orchestration from a single platform with minimal configuration and code. Their easy-to-use platform keeps up with API changes and pulls fresh, rich data in minutes.

The tool offers a large library of prebuilt data source connectors, so data is ready to analyze quickly. Its pipelines update automatically and continuously, freeing you to focus on business insights instead of ETL.

Some of its key features are:

- Integrated with dbt scheduling

- Includes data lineage graphs to track how data moves and changes from connector to warehouse to BI tool

- Supports event data flows

- Alerts and notifications for simplified troubleshooting

Pricing:

Fivetran has flexible price plans where you only pay for what you use:

- Starter — $120 per month

- Standard Select — $60 per month

- Standard — $180 per month

- Enterprise — $240 per month

- Business Critical — Request a demo

6. Dagster

A second-generation data orchestration tool, Dagster can detect and improve data awareness by anticipating the actions triggered by each data type. It aims to enhance data engineers’ and analysts’ development, testing, and overall collaboration experience. It can also accelerate development, scale your workload with flexible infrastructure, and help you understand the state of jobs and data through integrated observability.

Despite being a new addition to the market, many companies like VMware, Mapbox, and Doordash trust Dagster for their business’s productivity. Mapbox’s data software engineer, Ben Pleasonton says, “With Dagster, we’ve brought a core process that used to take days or weeks of developer time down to 1-2 hours.”

Some of its key features are:

- Flexible and easy to integrate

- Run monitoring

- Easy-to-use APIs

- DAG-based workflow

- Various integration options with popular tools like dbt, Spark, Airflow, and pandas

Pricing:

Dagster is an open-source platform, so it’s free.

In conclusion…

Companies are increasingly relying on the best AI marketing tools for a sustainable, forward-thinking business. Leveraging automation has helped them accelerate their business operations, and data orchestration tools specifically have provided them with greater insights to run their business better.

Choosing the right ETL tools for your business largely depends on your existing data infrastructure. While our top picks are some of the best in the world, research carefully and select the one that will help your business get the most out of its data.


Streamlining Processes for Increased Efficiency and Results

How can businesses succeed nowadays when technology rules? With competition getting tougher and customers changing their preferences often, it’s a challenge. But using marketing automation can help make things easier and get better results. And in the future, it’s going to be even more important for all kinds of businesses.

So, let’s discuss how businesses can leverage marketing automation to stay ahead and thrive.

Benefits of marketing automation to boost your efforts

First, let’s explore the benefits of marketing automation to supercharge your efforts:

Marketing automation simplifies repetitive tasks, saving time and effort.

With automated workflows, processes become more efficient, leading to better productivity. For instance, automation not only streamlines tasks like email campaigns but also optimizes website speed, ensuring a seamless user experience. A faster website not only enhances customer satisfaction but also positively impacts search engine rankings, driving more organic traffic and ultimately boosting conversions.

Automation allows for precise targeting, reaching the right audience with personalized messages.

A great example of an automated workflow is a Pipedrive and WhatsApp integration, in which an automated welcome message is sent to a potential customer on WhatsApp within seconds of them expressing interest in your business.

Increases ROI

By optimizing campaigns and reducing manual labor, automation can significantly improve return on investment.

Leveraging automation enables businesses to scale their marketing efforts effectively, driving growth and success. Additionally, incorporating lead scoring into automated marketing processes can streamline the identification of high-potential prospects, further optimizing resource allocation and maximizing conversion rates.
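Lead scoring is often implemented as a points system. The sketch below assumes a simple rule-based model in Python: each hypothetical signal (an email open, a pricing-page visit, a demo request) adds points, and leads above a hypothetical threshold are passed to sales first. The signals, weights, and threshold are illustrative only; in practice they would be tuned against your own conversion data or replaced by a predictive model.

```python
from dataclasses import dataclass

# Hypothetical scoring rules: signal name -> points. Real weights would come
# from your own conversion data, not from these illustrative numbers.
SCORING_RULES = {
    "opened_email": 5,
    "visited_pricing_page": 15,
    "requested_demo": 30,
    "company_size_over_50": 10,
}
HOT_LEAD_THRESHOLD = 40  # hypothetical cut-off for handing a lead to sales


@dataclass
class Lead:
    email: str
    signals: set


def score(lead: Lead) -> int:
    """Sum the points for every known signal; unknown signals score 0."""
    return sum(SCORING_RULES.get(signal, 0) for signal in lead.signals)


def prioritize(leads):
    """Return (email, score) pairs for hot leads, highest score first."""
    scored = [(lead.email, score(lead)) for lead in leads]
    hot = [item for item in scored if item[1] >= HOT_LEAD_THRESHOLD]
    return sorted(hot, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    leads = [
        Lead("ana@example.com", {"opened_email", "visited_pricing_page", "requested_demo"}),
        Lead("ben@example.com", {"opened_email"}),
        Lead("cy@example.com", {"visited_pricing_page", "company_size_over_50", "requested_demo"}),
    ]
    print(prioritize(leads))  # [('cy@example.com', 55), ('ana@example.com', 50)]
```

Keeping the rules in a plain dictionary makes the weights easy to review and adjust as you learn which signals actually predict conversions.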

Harnessing the power of marketing automation can revolutionize your marketing strategy, leading to increased efficiency, higher returns, and sustainable growth in today’s competitive market. So, why wait? Start automating your marketing efforts today and propel your business to new heights, especially if you have just learned how to create an online business.

How marketing automation can simplify operations and increase efficiency

Understanding the Change

Marketing automation has evolved significantly over time, from basic email marketing campaigns to sophisticated platforms that can manage entire marketing strategies. This progress has been fueled by advances in technology, particularly artificial intelligence (AI) and machine learning, making automation smarter and more adaptable.

One of the main reasons for this shift is the vast amount of data available to marketers today. From understanding customer demographics to analyzing behavior, the sheer volume of data is staggering. Marketing automation platforms use this data to create highly personalized and targeted campaigns, allowing businesses to connect with their audience on a deeper level.

The Emergence of AI-Powered Automation

In the future, AI-powered automation will play an even bigger role in marketing strategies. AI algorithms can analyze huge amounts of data in real time, helping marketers identify trends, predict consumer behavior, and optimize campaigns as they go. This agility and responsiveness are crucial in today’s fast-moving digital world, where opportunities come and go in the blink of an eye. For example, we’re witnessing the rise of AI-based tools, from AI website builders to AI logo generators and more, which shows how much speed and efficiency now matter.

Combining AI-powered automation with WordPress management services streamlines marketing efforts, enabling quick adaptation to changing trends and efficient management of online presence.

Moreover, AI can take care of routine tasks like content creation, scheduling, and testing, giving marketers more time to focus on strategic activities. By automating these repetitive tasks, businesses can work more efficiently, leading to better outcomes. AI can create social media ads tailored to specific demographics and preferences, ensuring that the content resonates with the target audience. With the help of an AI ad maker tool, businesses can efficiently produce high-quality advertisements that drive engagement and conversions across various social media platforms.

Personalization on a Large Scale

Personalization has always been important in marketing, and automation is making it possible on a larger scale. By using AI and machine learning, marketers can create tailored experiences for each customer based on their preferences, behaviors, and past interactions with the brand.

This level of personalization not only boosts customer satisfaction but also increases engagement and loyalty. When consumers feel understood and valued, they are more likely to become loyal customers and brand advocates. As automation technology continues to evolve, we can expect personalization to become even more advanced, enabling businesses to forge deeper connections with their audience. For example, if your company sells tiny homes in California, personalized experiences will help each customer find their perfect fit, fostering lasting connections.

Integration Across Channels

Another trend shaping the future of marketing automation is the integration of multiple channels into a cohesive strategy. Today’s consumers interact with brands across various touchpoints, from social media and email to websites and mobile apps. Marketing automation platforms that can seamlessly integrate these channels and deliver consistent messaging will have a competitive edge. When creating a comparison website, it’s important to ensure that the platform effectively aggregates data from diverse sources and presents it in a user-friendly manner, empowering consumers to make informed decisions.

Omni-channel integration not only improves the customer experience but also provides marketers with a comprehensive view of the customer journey. By tracking interactions across channels, businesses can gain valuable insights into how consumers engage with their brand, allowing them to refine their marketing strategies for maximum impact. Lastly, integrating SEO services into omni-channel strategies boosts visibility and helps businesses better understand and engage with their customers across different platforms.

The Human Element

While automation offers many benefits, it’s crucial not to overlook the human aspect of marketing. Despite advances in AI and machine learning, there are still elements of marketing that require human creativity, empathy, and strategic thinking.

Successful marketing automation strikes a balance between technology and human expertise. By using automation to handle routine tasks and data analysis, marketers can focus on what they do best – storytelling, building relationships, and driving innovation.

Conclusion

The future of marketing automation looks promising, offering improved efficiency and results for businesses of all sizes.

As AI continues to advance and consumer expectations change, automation will play an increasingly vital role in keeping businesses competitive.

By embracing automation technologies, marketers can simplify processes, deliver more personalized experiences, and ultimately, achieve their business goals more effectively than ever before.


Will Google Buy HubSpot? | Content Marketing Institute

Google + HubSpot. Is it a thing?

This week, a flurry of news came down about Google’s consideration of purchasing HubSpot.

The prospect dismayed some. It delighted others.

But is it likely? Is it even possible? What would it mean for marketers? What does the consideration even mean for marketers?

Well, we asked CMI’s chief strategy advisor, Robert Rose, for his take. Watch this video or read on:

Why Alphabet may want HubSpot

Alphabet, the parent company of Google, apparently is contemplating the acquisition of inbound marketing giant HubSpot.

The potential price could be in the range of $30 billion to $40 billion. That would make it Alphabet’s largest acquisition by far. The current deal holding that title happened in 2011, when it acquired Motorola Mobility for more than $12 billion. It later sold it to Lenovo for less than $3 billion.

If the HubSpot deal happens, it would not be in character with what the classic evil villain has been doing for the past 20 years.

At first glance, you might think the deal would make no sense. Why would Google want to spend three times as much as it’s ever spent to get into inbound marketing, the CRM and marketing automation business?

At a second glance, it makes a ton of sense.

I don’t know if you’ve noticed, but I and others at CMI spend a lot of time discussing privacy, owned media, and the deprecation of the third-party cookie. I just talked about it two weeks ago. It’s really happening.

All that oxygen being sucked out of the ad tech space presents a compelling case that Alphabet should diversify from third-party data and classic surveillance-based marketing.

Yes, this potential acquisition is about data. HubSpot would give Alphabet the keys to the kingdom of 205,000 business customers — and their customers’ data that almost certainly numbers in the tens of millions. Alphabet would also gain access to the content, marketing, and sales information those customers consumed.

Conversely, the deal would provide an immediate tip of the spear for HubSpot clients to create more targeted programs in the Alphabet ecosystem and upload their data to drive even more personalized experiences on their own properties and connect them to the Google Workspace infrastructure.

When you add in the idea of Gemini, you can start to see how Google might monetize its generative AI tool beyond figuring out how to use it on ads on search results pages.

What acquisition could mean for HubSpot customers

I may be stretching here, but imagine this world. As a Hubspoogle customer, you can access an interface that prioritizes your owned media data (e.g., your website, e-commerce catalog, and blog) when Google’s Gemini answers a question.

Recent reports also say Google may put up a paywall around the new premium features of its artificial intelligence-powered Search Generative Experience. Imagine this as the new gating for marketing. In other words, users can subscribe to Google’s AI for free, but Hubspoogle customers can access that data and use it to create targeted offers.

The acquisition of HubSpot would immediately make Google Workspace a more robust competitor to Microsoft 365 Office for small- and medium-sized businesses as they would receive the ADDED capability of inbound marketing.

But in the world of rented land where Google is the landlord, the government will take notice of the acquisition. But, and it’s a big but, I cannot lie (yes, I just did that). The big but is whether this acquisition dance can happen without running afoul of regulators.

Some analysts say it should be no problem. Others say, “Yeah, it wouldn’t go.” Either way, would anybody touch it in an election year? That’s a whole other story.

What marketers should realize

So, what’s my takeaway?

It’s a remote chance that Google will jump on this hard, but stranger things have happened. It would be an exciting disruption in the market.

The sure bet is this. The acquisition conversation — as if you needed more data points — says getting good at owned media to attract and build audiences and using that first-party data to provide better communication and collaboration with your customers are a must.

It’s just a matter of time until Google makes a move. They might just be testing the waters now, but they will move here. But no matter what they do, if you have your customer data house in order, you’ll be primed for success.


Cover image by Joseph Kalinowski/Content Marketing Institute


5 Psychological Tactics to Write Better Emails

Welcome to Creator Columns, where we bring expert HubSpot Creator voices to the Blogs that inspire and help you grow better.

I’ve tested hundreds of psychological tactics on my email subscribers. In this blog, I reveal the five tactics that actually work.

You’ll learn about the email tactic that got one marketer a job at the White House.

You’ll learn how I doubled my five-star reviews with one email, and why one strange email from Barack Obama broke all records for donations.


Imagine writing an email that’s so effective it lands you a job at the White House.

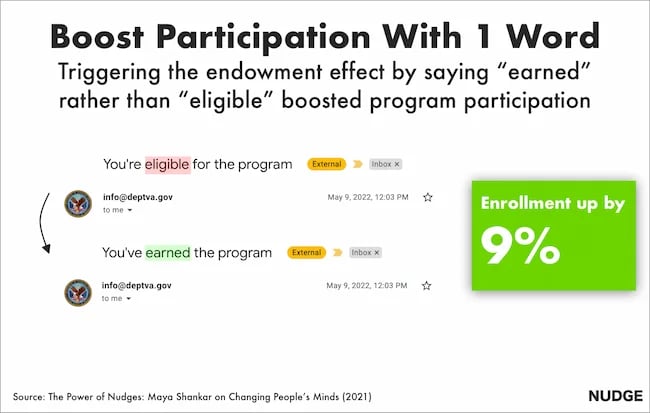

Well, that’s what happened to Maya Shankar, a PhD cognitive neuroscientist. In 2014, the Department of Veterans Affairs asked her to help increase signups in their veteran benefit scheme.

Maya had a plan. She was well aware of a cognitive bias that affects us all—the endowment effect. This bias suggests that people value items higher if they own them. So, she changed the subject line in the Veterans’ enrollment email.

Previously it read:

- Veterans, you’re eligible for the benefit program. Sign up today.

She tweaked one word, changing it to:

- Veterans, you’ve earned the benefits program. Sign up today.

This tiny tweak had a big impact. The number of veterans enrolling in the program went up by 9%. And Maya landed a job working at the White House.

Inspired by these psychological tweaks to emails, I started to run my own tests.

Alongside my podcast Nudge, I’ve run hundreds of email tests on thousands of newsletter subscribers.

Here are the five best tactics I’ve uncovered.

1. Show readers what they’re missing.

Nobel Prize-winning behavioral scientist Daniel Kahneman and his longtime collaborator Amos Tversky uncovered a principle called loss aversion.

Loss aversion means that losses feel more painful than equivalent gains. In real-world terms, losing $10 feels worse than gaining $10 feels good. And I wondered if this simple nudge could help increase the number of my podcast listeners.

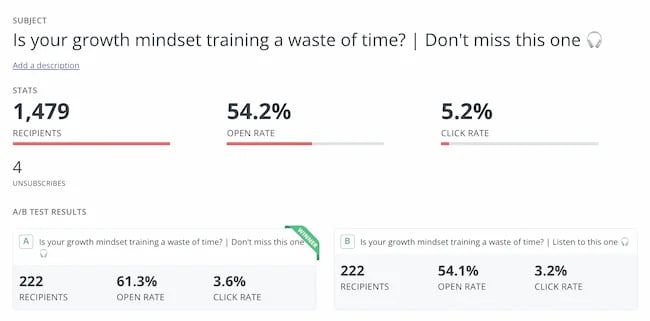

For my test, I tweaked the subject line of the email announcing an episode. The control read:

“Listen to this one”

In the loss aversion variant it read:

“Don’t miss this one”

It is very subtle loss aversion. Rather than asking someone to listen, I’m saying they shouldn’t miss out. And it worked. It increased the open rate by 13.3% and the click rate by 12.5%. Plus, it was a small change that cost me nothing at all.

2. People follow the crowd.

In general, humans like to follow the masses. When picking a dish, we’ll often opt for the most popular. When choosing a movie to watch, we tend to pick the box office hit. It’s a well-known psychological bias called social proof.

I’ve always wondered if it works for emails. So, I set up an A/B experiment with two subject lines. Both promoted my show, but one contained social proof.

The control read: New Nudge: Why Brands Should Flaunt Their Flaws

The social proof variant read: New Nudge: Why Brands Should Flaunt Their Flaws (100,000 Downloads)

I hoped that by highlighting the episode’s high number of downloads, I’d encourage more people to listen. Fortunately, it worked.

The open rate went from 22% to 28% for the social proof version, and the click rate (the number of people actually listening to the episode) doubled.

3. Praise loyal subscribers.

The consistency principle suggests that people are likely to stick to behaviours they’ve previously adopted. A retired taxi driver won’t swap his car for a bike. A hairdresser won’t change to a cheap shampoo. We like to stay consistent with our past behaviours.

I decided to test this in an email.

For my test, I attempted to encourage my subscribers to leave a review for my podcast. I sent emails to 400 subscribers who had been following the show for a year.

The control read: “Could you leave a review for Nudge?”

The consistency variant read: “You’ve been following Nudge for 12 months, could you leave a review?”

My hypothesis was simple: if I remind people that they’ve consistently supported the show, they’ll be more likely to leave a review.

It worked.

The open rate on the consistency version of the email was 7% higher.

But more importantly, the click rate (the number of people who actually left a review) was almost 2x higher for the consistency version. Merely telling people they’d been a fan for a while doubled my reviews.

4. Showcase scarcity.

We prefer scarce resources. Taylor Swift gigs sell out in seconds not just because she’s popular, but because her tickets are hard to come by.

Swifties aren’t the first to experience this. Back in 1975, three researchers proved how powerful scarcity is. For the study, the researchers occupied a cafe. On alternating weeks they’d make one small change in the cafe.

On some weeks they’d ensure the cookie jar was full.

On other weeks they’d ensure the cookie jar only contained two cookies (never more or less).

In other words, sometimes the cookies looked abundantly available. Sometimes they looked like they were almost out.

This changed behaviour. Customers who saw the two-cookie jar bought 43% more cookies than those who saw the full jar.

It sounds too good to be true, so I tested it for myself.

I sent an email to 260 subscribers offering free access to my Science of Marketing course for one day only.

In the control, the subject line read: “Free access to the Science of Marketing course”

For the scarcity variant it read: “Only Today: Get free access to the Science of Marketing Course | Only one enrol per person.”

130 people received the first email, 130 received the second. And the result was almost as good as the cookie finding. The scarcity version had a 15.1% higher open rate.

5. Spark curiosity.

All of the email tips I’ve shared have only been tested on my relatively small audience. So, I thought I’d end with a tip that was tested on the masses.

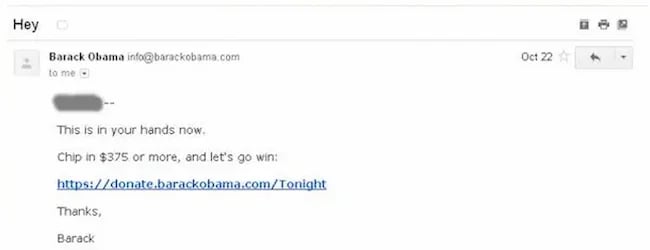

Back in 2012, Barack Obama and his campaign team sent hundreds of emails to raise funds for his campaign.

Of the $690 million he raised, most came from direct email appeals. But there was one email, according to ABC News, that was far more effective than the rest. And it was an odd one.

The email that drew in the most cash had a strange subject line. It simply said “Hey.”

The actual email asked the reader to donate, sharing all the expected reasons, but the subject line was different.

It sparked curiosity. It got people wondering: is Obama saying “Hey” just to me?

Readers were curious and couldn’t help but open the email. According to ABC it was “the most effective pitch of all.”

Because more people opened, it raised more money than any other email. The bias Obama used here is the curiosity gap. We’re more likely to act on something when our curiosity is piqued.

Loss aversion, social proof, consistency, scarcity and curiosity—all these nudges have helped me improve my emails. And I reckon they’ll work for you.

It’s not guaranteed, of course. Many might fail. But running some simple A/B tests on your emails is free, so why not try it out?
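Before trusting a result, it helps to separate relative lift from random noise. The sketch below, in Python with only the standard library, computes each variant’s open rate, the relative lift, and a two-sided p-value from a pooled two-proportion z-test. The `ab_test` function and the counts in the example are hypothetical, not the data from the tests described above.

```python
import math


def ab_test(opens_a, sent_a, opens_b, sent_b):
    """Compare the open rates of a control (A) and a variant (B).

    Returns (rate_a, rate_b, relative_lift, p_value), where p_value is the
    two-sided p-value of a pooled two-proportion z-test. Assumes opens_a > 0.
    """
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    lift = (rate_b - rate_a) / rate_a  # relative change: 0.25 means +25%
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if se == 0:
        raise ValueError("no variance: open rates are 0% or 100% in both arms")
    z = (rate_b - rate_a) / se
    # Standard normal CDF via math.erf: Phi(x) = 0.5 * (1 + erf(x / sqrt(2))).
    phi = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    p_value = 2 * (1 - phi)
    return rate_a, rate_b, lift, p_value


if __name__ == "__main__":
    # Hypothetical counts: 1,000 recipients per variant, 220 vs 280 opens.
    rate_a, rate_b, lift, p = ab_test(220, 1000, 280, 1000)
    print(f"A={rate_a:.1%}  B={rate_b:.1%}  lift={lift:+.1%}  p={p:.4f}")
```

Note the difference between percentage points and relative lift: going from a 22% to a 28% open rate is a 6-point increase but roughly a 27% relative lift. On small lists, such as 130 recipients per variant, a handful of extra opens can produce a large relative lift with a high p-value, so repeating a test or pooling several sends gives a more reliable read.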

This blog is part of Phill Agnew’s Marketing Cheat Sheet series where he reveals the scientifically proven tips to help you improve your marketing. To learn more, listen to his podcast Nudge, a proud member of the HubSpot Podcast Network.
