MARKETING

6 Best Data Orchestration Tools to Transform Your Business

Data exists everywhere!

We use data every day — in different forms — to make informed decisions. It could be through counting your steps on a fitness app or tracking the estimated delivery date of your package. In fact, the data volume from internet activity alone is expected to reach an estimated 180 zettabytes by 2025.

Companies use data the same way but on a larger scale. They collect information about their targeted audiences through different sources, such as websites, CRM, and social media. This data is then analyzed and shared across various teams, systems, external partners, and vendors.

With the large volumes of data they handle, organizations need a reliable automation tool to process and analyze the data before use. Data orchestration tools are among the most important to evaluate during software procurement.

What Are Data Orchestration and Data Pipelines?

Data orchestration is the automation of data pipeline workflows. To break it down, let’s understand what goes on in a data pipeline.

Data moves from its raw state to a final form within the pipeline through a series of ETL workflows. ETL stands for Extract-Transform-Load. The ETL process collects data from multiple sources (extracts), cleans and packages the data (transforms), and saves the data to a database or warehouse (loads) where it is ready to be analyzed. Before data orchestration, data engineers had to create, schedule, and manually monitor the progress of data pipelines. With data orchestration, each step in the workflow is automated.
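To make the three ETL stages concrete, here is a minimal sketch in Python using only the standard library. The sample data, table name, and function names are all hypothetical and chosen for illustration; a real pipeline would read from actual sources and load into a real warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw data standing in for two separate sources (a website and a CRM).
WEBSITE_CSV = "email,plan\n Ana@Example.com ,pro\nbob@example.com,free\n"
CRM_CSV = "email,plan\nbob@example.com,free\ncara@example.com,PRO\n"


def extract(*sources: str) -> list[dict]:
    """Extract: read rows from every source into one list."""
    rows = []
    for text in sources:
        rows.extend(csv.DictReader(io.StringIO(text)))
    return rows


def transform(rows: list[dict]) -> list[tuple[str, str]]:
    """Transform: normalize whitespace and casing, and drop duplicate emails."""
    cleaned = {}
    for row in rows:
        email = row["email"].strip().lower()
        cleaned[email] = row["plan"].strip().lower()
    return sorted(cleaned.items())


def load(records: list[tuple[str, str]], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned records into a table that is ready for analysis."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers (email TEXT PRIMARY KEY, plan TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?)", records)
    conn.commit()


def run_pipeline(conn: sqlite3.Connection) -> list[tuple[str, str]]:
    """Run extract, transform, and load in order, then return the stored rows."""
    load(transform(extract(WEBSITE_CSV, CRM_CSV)), conn)
    return conn.execute("SELECT email, plan FROM customers ORDER BY email").fetchall()


if __name__ == "__main__":
    print(run_pipeline(sqlite3.connect(":memory:")))
```

An orchestration tool would take over what `run_pipeline` does by hand here: deciding when each stage runs, in what order, and what happens when one fails.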

Data orchestration is the process of collecting and organizing siloed data from multiple storage points and preparing it for data analysis tools. With this automation, businesses can streamline data from numerous sources to make calculated decisions.

The data orchestration pipeline is a game-changer in the data technology environment. The increase in cloud adoption driven by today’s data-driven company culture has pushed companies around the world to embrace data orchestration.

Why is Data Orchestration Important?

Data orchestration is the solution to the time-consuming management of data, giving organizations a way to keep their stacks connected while data flows smoothly.

“Data orchestration provides the answer to making your data more useful and available. But ultimately, it goes beyond simple data management. In the end, orchestration is about using data to drive actions, to create real business value.”

— Steven Hillion, Head of Data at Astronomer

As activities in an organization increase with the expansion of the customer base, it becomes challenging to cope with the high volume of data coming in. One example can be found in marketing. With the increased reliance on customer segmentation for successful campaigns, multiple sources of data can make it difficult to separate your prospects with speed and finesse.

Here’s how data orchestration can help:

- Disparate data sources. Data orchestration automates the process of gathering and preparing data coming from multiple sources without introducing human error.

- Breaks down silos. Many businesses have their data siloed by location, region, organization, or cloud application. Data orchestration breaks down these silos and makes the data accessible to the organization.

- Removes data bottlenecks. By automating data preparation and analysis, data orchestration eliminates the bottlenecks caused by downtime in those steps.

- Enforces data governance. The data orchestration tool connects all your data systems across geographical regions with different rules and regulations regarding data privacy. It helps ensure that the data collected complies with laws on ethical data gathering, such as GDPR and CCPA.

- Gives faster insights. Automating each workflow stage in the data pipeline using data orchestration gives data engineers and analysts more time to draw actionable insights and act on them, enabling data-based decision-making.

- Provides real-time information. Data can be extracted and processed the moment it is created, giving room for real-time data analysis or data storage.

- Scalability. Automation of the workflow helps organizations scale data use through synchronization across data silos.

- Monitoring the workflow progress. With data orchestration, the data pipeline is equipped with alerts to identify and amend issues as quickly as they occur.
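The monitoring point above can be sketched in a few lines of Python. This is an illustrative sketch only, not how any particular tool implements alerts: the function name `run_with_alerts`, the `alert` callback, and the retry count are all assumptions made for the example.

```python
from typing import Callable, Iterable, Tuple


def run_with_alerts(
    steps: Iterable[Tuple[str, Callable[[], None]]],
    alert: Callable[[str], None],
    max_attempts: int = 2,
) -> bool:
    """Run steps in order; send an alert on every failure.

    Returns True if every step eventually succeeded, or False as soon as
    one step has failed max_attempts times (later steps are skipped).
    """
    for name, step in steps:
        for attempt in range(1, max_attempts + 1):
            try:
                step()
                break  # step succeeded, move on to the next one
            except Exception as exc:
                alert(f"{name} failed on attempt {attempt}: {exc}")
        else:
            # The inner loop never hit `break`, so every attempt failed.
            return False
    return True


if __name__ == "__main__":
    def broken_load() -> None:
        raise RuntimeError("warehouse unreachable")

    ok = run_with_alerts(
        [("extract", lambda: None), ("load", broken_load)],
        alert=print,  # a real setup might send an email or chat message instead
    )
    print("pipeline succeeded:", ok)
```

Passing the alert channel in as a callback keeps the pipeline logic separate from how the team chooses to be notified.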

Best Data Orchestration Tools

Data orchestration tools clean, sort, arrange and publish your data into a data store. When choosing marketing automation tools for your business, two main things come to mind: what they can do and how much they cost.

Let’s look at some of the best ETL tools for your business.

1. Shipyard

Shipyard is a modern data orchestration platform that helps data engineers connect and automate tools and build reliable data operations. It creates powerful data workflows that extract, transform, and load data from a data warehouse to other tools to automate business processes.

The tool connects data stacks with more than 50 low-code integrations. It orchestrates work between multiple external systems like Lambda, Cloud Functions, dbt Cloud, and Zapier. With a few simple inputs from these integrations, you can build data pipelines that connect to your data stack in minutes.

Some of Shipyard’s key features are:

- Built-in notifications and error-handling

- Automatic scheduling and on-demand triggers

- Share-able, reusable blueprints

- Isolated, scaling resources for each solution

- Detailed historical logging

- Streamlined UI for management

- In-depth admin controls and permissions

Pricing:

Shipyard currently offers two plans:

- Developer — Free

- Team — Starting from $50 per month

2. Luigi

Developed by Spotify, Luigi builds data pipelines in Python and handles dependency resolution, visualization, workflow management, failures, and command line integration. If you need an all-Python tool that takes care of workflow management in batch processing, then Luigi is perfect for you.
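To show what dependency resolution means in practice, here is a small standard-library sketch. It does not use Luigi's API; the task names and the `run_in_order` helper are hypothetical. It only illustrates the general idea of running each task after everything it depends on has finished.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
DEPENDENCIES = {
    "extract_web": set(),
    "extract_crm": set(),
    "transform": {"extract_web", "extract_crm"},
    "load": {"transform"},
    "report": {"load"},
}


def run_in_order(dependencies, run_task):
    """Call run_task(name) for every task, dependencies first.

    Returns the order in which the tasks were run. TopologicalSorter raises
    graphlib.CycleError if the dependencies contain a cycle.
    """
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        run_task(name)
    return order


if __name__ == "__main__":
    run_in_order(DEPENDENCIES, lambda name: print("running", name))
```

The two extract tasks do not depend on each other, so either may run first; a workflow tool can use that freedom to run independent tasks in parallel.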

It’s open source and used by famous companies like Stripe, Giphy, and Foursquare. Giphy says they love Luigi for “being a powerful, simple-to-use Python-based task orchestration framework”.

Some of its key features are:

- Python-based

- Task-and-target semantics to define dependencies

- Uses a single node for a directed graph and data-structure standard

- Lightweight, so it requires less time to manage

- Allows users to define tasks, commands, and conditional paths

- Data pipeline visualization

Pricing:

Luigi is an open-source tool, so it’s free.

3. Apache Airflow

If you’re looking to schedule automated workflows through the command line, look no further than Apache Airflow. It’s a free and open-source software tool that facilitates workflow development, scheduling, and monitoring.

Most users prefer Apache Airflow because of its open-source community and its large library of pre-built integrations with third-party data processing tools (for example, Apache Spark and Hadoop). The greater flexibility when building workflows is another reason why it is a customer favorite.

Some of its key features are:

- Easy to use

- Robust integrations with data cloud stacks like AWS, Microsoft Azure

- Streamlined UI that monitors, schedules, and manages your workflows

- Standard Python features allow you to maintain total flexibility when building your workflows

- Apache Airflow 2.0 introduced features like smart sensors, a full REST API, the TaskFlow API, and some UI/UX improvements.

Pricing:

Free

4. Keboola

Keboola is a data orchestration tool built for enterprises and managed by a team of highly specialized engineers. It enables teams to focus on collaboration and get insights through automated workflows, collaborative workspaces, and secure experimentation.

The platform is user-friendly, so non-technical people can also easily build their data orchestration pipelines without the need for cloud engineering skills. It has a pay-as-you-go plan that scales with your needs and is integrated with the most commonly used tools.

Some of its key features are:

- Runs transformations in Python, SQL, and R

- No-code data pipeline automation

- Offers various pre-built integrations

- Data lineage and version control, so you don’t need to switch platforms as your data grows

Pricing:

Keboola currently has two plans:

5. Fivetran

Fivetran has an in-house orchestration system that powers the workflows required to extract and load data safely and efficiently. It enables data orchestration from a single platform with minimal configuration and code. Their easy-to-use platform keeps up with API changes and pulls fresh, rich data in minutes.

The tool is integrated with some of the best data source connectors, which analyze data immediately. Their pipelines automatically and continuously update, freeing you to focus on business insights instead of ETL.

Some of its key features are:

- Integrated with dbt scheduling

- Includes data lineage graphs to track how data moves and changes from connector to warehouse to BI tool

- Supports event data flows

- Alerts and notifications for simplified troubleshooting

Pricing:

Fivetran has flexible price plans where you only pay for what you use:

- Starter — $120 per month

- Standard Select — $60 per month

- Standard — $180 per month

- Enterprise — $240 per month

- Business Critical — Request a demo

6. Dagster

A second-generation data orchestration tool, Dagster can detect and improve data awareness by anticipating the actions triggered by each data type. It aims to enhance data engineers’ and analysts’ development, testing, and overall collaboration experience. It can also accelerate development, scale your workload with flexible infrastructure, and understand the state of jobs and data with integrated observability.

Despite being a new addition to the market, many companies like VMware, Mapbox, and DoorDash trust Dagster for their business’s productivity. Mapbox’s data software engineer, Ben Pleasonton, says, “With Dagster, we’ve brought a core process that used to take days or weeks of developer time down to 1-2 hours.”

Some of its key features are:

- Greater fluidity and easy to integrate

- Run monitoring

- Easy-to-use APIs

- DAG-based workflow

- Various integration options with popular tools like dbt, Spark, Airflow, and pandas

Pricing:

Dagster is an open-source platform, so it’s free.

In conclusion…

Companies are increasingly relying on the best AI marketing tools for a sustainable, forward-thinking business. Leveraging automation has helped them accelerate their business operations, and data orchestration tools specifically have provided them with greater insights to run their business better.

Choosing the right ETL tools for your business largely depends on your existing data infrastructure. While our top picks are some of the best in the world, ensure you research well and select the best one to help your business get the most out of its data.


How to create editorial guidelines that are useful + template

Before diving in to all things editorial guidelines, a quick introduction. I head up the content team here at Optimizely. I’m responsible for developing our content strategy and ensuring this aligns to our key business goals.

Here I’ll take you through the process we used to create new editorial guidelines, covering what worked well and some of the challenges that come with any good multi-stakeholder project. I’ll also share some examples and leave you with a template you can use to set your own content standards.

What are editorial guidelines?

Editorial guidelines are a set of standards for all content contributors. They most often include guidance on brand, tone of voice, grammar and style, your core content principles and the types of content you want to produce.

Editorial guidelines are a core component of any good content strategy and can help marketers achieve the following in their content creation process:

- Consistency: All content produced, regardless of who is creating it, maintains a consistent tone of voice and style, helping strengthen brand image and making it easier for your audience to recognize your company’s content

- Quality Control: Serves as a ‘North Star’ for content quality, drawing a line in the sand to communicate the standard of content we want to produce

- Boosts SEO efforts: Ensures content creation aligns with SEO efforts, improving company visibility and increasing traffic

- Efficiency: With clear guidelines in place, content creators – external and internal – can work more efficiently as they have a clear understanding of what is expected of them

Examples of editorial guidelines

There are some great examples of editorial guidelines out there to help you get started.

Here are a few I used:

1. Editorial Values and Standards, the BBC

Ah, the Beeb. This really helped me channel my inner journalist and learn from the folks that built the foundation for free quality journalism.

2. How to create editorial guidelines, Pepperland Marketing

After taking a more big-picture view, I recognized I needed more focused guidance on the step-by-step process of creating editorial guidelines.

I really liked the content the good folks at Pepperland Marketing have created, including a free template – thanks guys! It’s in part what inspired me to create our own free template as a way of sharing learnings and helping others quickstart the process of creating their own guidelines.

3. Writing guidelines for the role of AI in your newsroom?… Nieman Lab

As well as providing guidance on content quality and the content creation process, I wanted to tackle the thorny topic of AI in our editorial guidelines. Specifically, I wanted to give content creators a steer on ‘fair’ use of AI when creating content, so that creators get to benefit from the amazing power of these tools but content is not created 100% by AI, and to help them understand why we feel fully AI-created content contravenes our core principle of content quality.

So, to learn more I devoured this fascinating article, sourcing guidance from major media outlets around the world. I know things change very quickly when it comes to AI, but I highly encourage reading this and taking inspiration from how these media outlets are tackling this topic.

Learn more: The Marketer’s Guide to AI-generated content

Why did we decide to create editorial guidelines?

1. Aligning content creators to a clear vision and process

Optimizely as a business has undergone a huge transformation over the last 3 years, going through rapid acquisition and all the joys and frustrations that can bring. As a content team, we quickly recognized the need to create a set of clear and engaging guidelines that help content creators understand how and where they can contribute, and give them a clear process to follow when submitting a content idea for consideration.

2. Reinvigorated approach to brand and content

As a brand Optimizely is also going through a brand evolution – moving from a more formal, considered tone of voice to one that’s much more approachable, down to earth and not afraid to use humor, different in content and execution.

See, our latest CMS campaign creative:

It’s pretty out there in terms of creative and messaging. It’s an ad campaign that’s designed to capture attention yes, but also – to demonstrate our abilities as a marketing team to create this type of campaign that is normally reserved for other more quote unquote creative industries.

We wanted to give guidance to fellow content creators outside the team on how they can also create content that embraces this evolved tone of voice, while at the same time ensuring content adheres to our brand guidelines.

3. Streamline content creation process

Like many global enterprises we have many different content creators, working across different time zones and locations. Documenting a set of guidelines and making them easily available helps content creators quickly understand our content goals, the types of content we want to create and why. It would free up content team time spent with individual contributors reviewing and editing submissions, and would ensure creation and optimization aligns to broader content & business goals.

It was also clear that we needed to document a process for submitting content ideas, so we made sure to include this in the guidelines themselves to make it easy and accessible for all contributors.

4. 2023 retrospective priority

As a content team we regularly review our content strategy and processes to ensure we’re operating as efficiently as possible.

In our last retrospective, I asked my team ‘what was the one thing I could do as a manager to help them be more impactful in their role?’

Editorial guidelines were the number 1 item on their list.

So off we went…

What we did

- Defined a discrete scope of work for the first version of the editorial guidelines, focusing on the Blog and Resources section of the website. This is where the content team spends most of its time and so has most involvement in the content creation process. It’s also where the most challenging bottlenecks have been in the past

- Research. Reviewed what was out there, got my hands on a few free templates and assembled a framework to create a first version for inputs and feedback

- Asked content community – I put a few questions out to my network on LinkedIn on the topic of content guidelines and content strategy, seeking to get input and guidance from smart marketers.

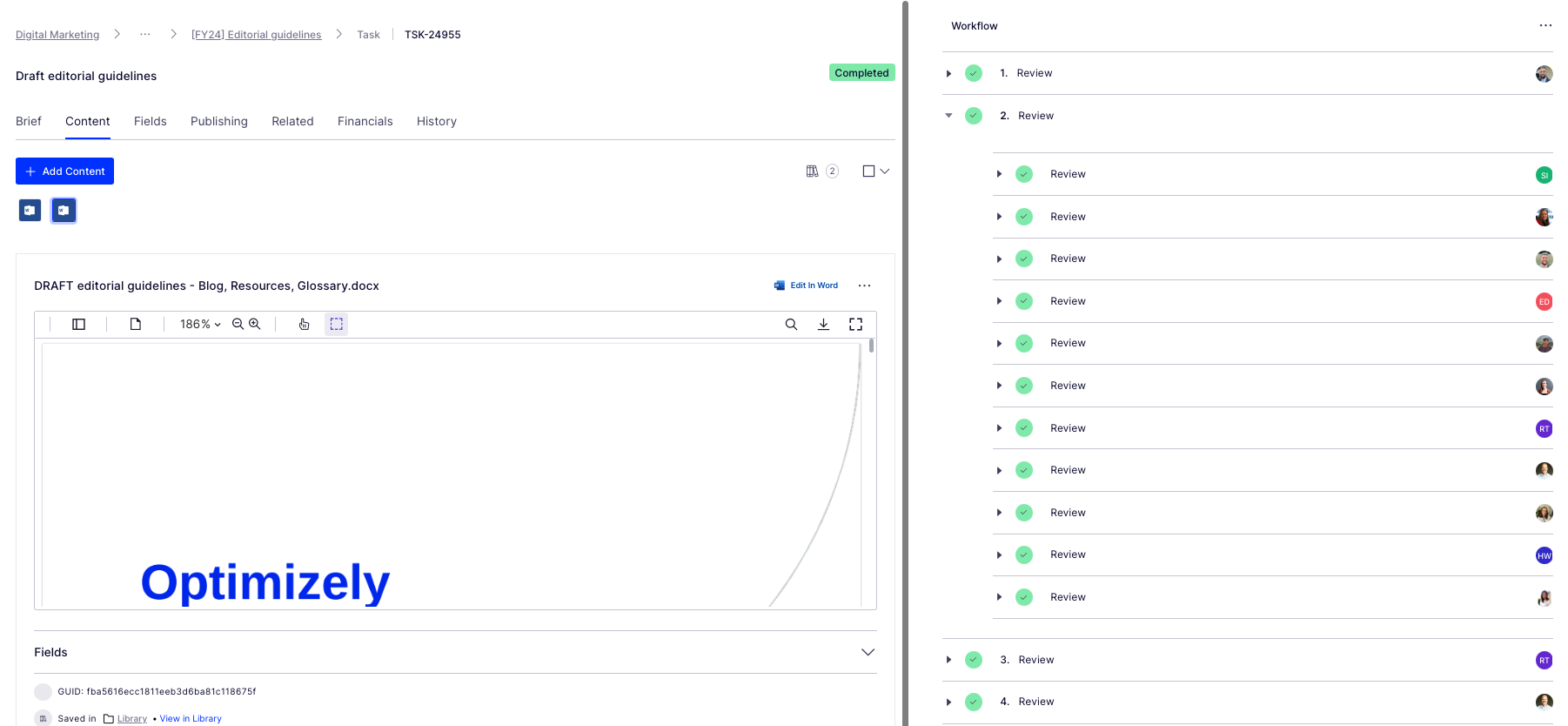

- Invited feedback: Over the course of a few weeks we invited collaborators to comment in a shared doc as a way of taking iterative feedback, getting ideas for the next scope of work, and also – bringing people on the journey of creating the guidelines. Look at all those reviewers! Doing this within our Content Marketing Platform (CMP) ensured that all that feedback was captured in one place, and that we could manage the process clearly, step by step:

Look at all those collaborators! Thanks guys! And all of those beautiful ticks, so satisfying. So glad I could crop out the total outstanding tasks for this screen grab too (Source – Optimizely CMP)

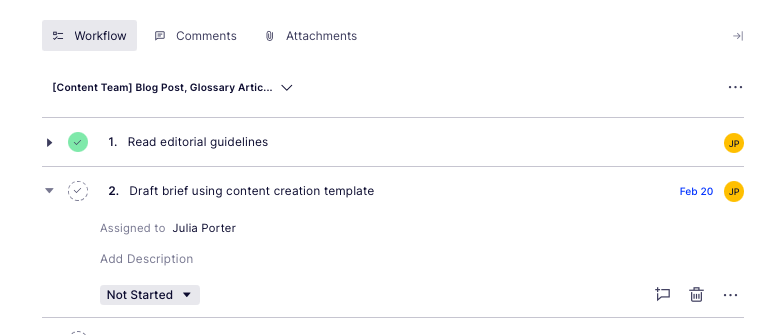

- Updated content workflow: Now we have clear, documented guidance in place, we’ve included this as a step – the first step – in the workflow used for blog post creation:

Source: Optimizely CMP

Results

It’s early days but we’re already seeing more engagement with the content creation process, especially amongst the teams involved in building the guidelines (which was part of the rationale in the first place :))

Source: My Teams chat

It’s inspired teams to think differently about the types of content we want to produce going forwards – for the blog and beyond.

I’d also say it’s boosted team morale and collaboration, helping different teams work together on shared goals to produce better quality work.

What’s next?

We’re busy planning wider communication of the editorial guidelines beyond marketing. We’ve kept the original draft and regularly share this with existing and potential collaborators for ongoing commentary, ideas and feedback.

Creating guidelines has also sparked discussion about the types of briefs and templates we want and need to create in CMP to support creating different assets. Finding the right balance between creative approach and using templates to scale content production is key.

We’ll review these guidelines on a quarterly basis and evolve as needed, adding new formats and channels as we go.

Key takeaways

- Editorial guidelines are a useful way to guide content creators as part of your overall content strategy

- Taking the time to do research upfront can help accelerate seemingly complex projects. Don’t be afraid to ask your community for inputs and advice as you create

- Keep the scope small at first rather than trying to align everything all at once. Test and learn as you go

- Work with stakeholders to build guidelines from the ground up to ensure you create a framework that is useful, relevant and used

And lastly, here’s that free template we created to help you build or evolve your own editorial guidelines!


Effective Communication in Business as a Crisis Management Strategy

Everyday business life is full of challenges. These include data breaches, product recalls, market downturns and public relations conflicts that can erupt at any moment. Such situations pose a significant threat to a company’s financial health, brand image, or even its continued existence. However, only 49% of businesses in the US have a crisis communications plan. That is a big mistake, as such a plan can build trust, minimize damage, and even strengthen the company after it survives the crisis. Let’s discover how communication can transform your crisis response and help you weather the chaos.

The ruining impact of the crisis on business

A crisis can ruin a company. Naturally, it brings losses. But the actual consequences are far worse than lost profits. It is about the people behind the business – they feel the weight of uncertainty and fear. Employees start worrying about their jobs, customers might lose faith in the brand they once trusted, and investors could start looking elsewhere. A crisis can damage the brand image, and everything you have built around it, from the branding and business logo to your social media presence, can be ruined. Even after the crisis recovery, the company’s reputation can suffer, and costly efforts might be needed to rebuild trust and regain momentum. So, any sign of a coming crisis should be immediately addressed. Communication is one of the crisis management strategies that can ease the situation.

The power of effective communication

Even a short-term crisis may have irreversible consequences – a damaged reputation, high employee turnover, and loss of investors. Communication becomes a tool that can efficiently navigate many crisis-caused challenges:

- Improved trust. Crisis is a synonym for uncertainty. Leaders may communicate trust within the company when the situation gets out of control. Employees feel valued when they get clear responses. The same applies to the customers – they also appreciate transparency and are more likely to continue cooperation when they understand what’s happening. In these times, documenting these moments through event photographers can visually reinforce the company’s messages and enhance trust by showing real, transparent actions.

- Reputation protection. Crises immediately spiral into gossip and PR nightmares. However, effective communication allows you to proactively address concerns and disseminate true information through the right channels. It minimizes speculation and negative media coverage.

- Saved business relationships. A crisis can cause unbelievable damage to relationships with employees, customers, and investors. Transparent communication shows the company’s efforts to find solutions and keeps stakeholders informed and engaged, preventing misunderstandings and painful outcomes.

- Faster recovery. With the help of communication, the company is more likely to receive support and cooperation. This collaborative approach allows you to focus on solutions and resume normal operations as quickly as possible.

It is impossible to predict when a crisis will come. That is why a crisis management strategy should mitigate potential problems long before they arise.

Tips on crafting an effective crisis communication plan.

To effectively deal with unforeseen critical situations in business, you must have a clear-cut communication action plan. This involves preparing things like messages, FAQs, and media posts, and making sure everyone in the company is aware of the plan. This approach saves precious time when the crisis actually hits. It allows you to focus on solving the problem instead of intensifying uncertainty and panic. Here is a step-by-step guide.

Identify your crisis scenarios.

Being caught off guard is the worst thing. So, do not let it happen. Conduct a risk assessment to pinpoint potential crises specific to your business niche. Consider both internal and external factors that could disrupt normal operations or damage the online reputation of your company. Study industry-specific issues, past incidents, and current trends. How will you communicate in each situation? Knowing your risks helps you prepare targeted communication strategies in advance. Of course, it is impossible to create a perfectly polished strategy, but at least you will build a strong foundation for it.

Form a crisis response team.

The next step is assembling a core team. It will manage communication during a crisis and should include top executives like the CEO, CFO, and CMO, and representatives from key departments like public relations and marketing. Select a confident spokesperson who will be the face of your company during the crisis. Define roles and responsibilities for each team member and establish communication channels they will work with, such as email, telephone, and live chat. Remember, everyone in your crisis response team must be media-savvy and know how to deliver difficult messages to the stakeholders.

Prepare communication templates.

When a crisis hits, things happen fast. That means communication needs to be quick, too. That’s why it is wise to have ready-to-go messages prepared for different types of crises your company may face. These messages can be adjusted to a particular situation when needed and shared on the company’s social media, website, and other platforms right away. These templates should include frequently asked questions and outline the company’s general responses. Make sure to have your legal team review and approve these messages for accuracy and compliance.

Establish communication protocols.

A crisis is always chaotic, so clear communication protocols are a must-have. Define trigger points – specific events that would launch the crisis communication plan. Establish a clear hierarchy for messages to avoid conflicting information. Determine the most suitable forms and channels, like press releases or social media, to reach different audiences. Here is an example of how you can structure a communication protocol:

- Immediate alert. A company crisis response team is notified about a problem.

- Internal briefing. The crisis team discusses the situation and decides on the next steps.

- External communication. A spokesperson reaches the media, customers, and suppliers.

- Social media updates. A trained social media team outlines the situation to the company audience and monitors these channels for misinformation or negative comments.

- Stakeholder notification. The crisis team reaches out to customers and partners to inform them of the incident and its risks. They also provide details on the company’s response efforts and measures.

- Ongoing updates. Regular updates guarantee transparency and trust and let stakeholders see the crisis development and its recovery.

Practice and improve.

Do not wait for the real crisis to test your plan. Conduct regular crisis communication drills to allow your team to use theoretical protocols in practice. Simulate different crisis scenarios and see how your people respond to these. It will immediately demonstrate the strong and weak points of your strategy. Remember, your crisis communication plan is not a static document. New technologies and evolving media platforms necessitate regular adjustments. So, you must continuously review and update it to reflect changes in your business and industry.

Wrapping up

The ability to handle communication well during tough times gives companies a chance to really connect with the people who matter most—stakeholders. And that connection is a foundation for long-term success. Trust is key, and it grows when companies speak honestly, openly, and clearly. When customers and investors trust the company, they are more likely to stay with it and even support it. So, when a crisis hits, smart communication not only helps overcome it but also allows you to do it with minimal losses to your reputation and profits.


Should Your Brand Shout Its AI and Marketing Plan to the World?

To use AI or not to use AI, that is the question.

Let’s hope things work out better for you than they did for Shakespeare’s mad Danish prince with daddy issues.

But let’s add a twist to that existential question.

CMI’s chief strategy officer, Robert Rose, shares what marketers should really contemplate. Watch the video or read on to discover what he says:

Should you not use AI and be proud of not using it? Dove Beauty did that last week.

Should you use it but keep it a secret? Sports Illustrated did that last year.

Should you use AI and be vocal about using it? Agency giant Brandtech Group picked up the all-in vibe.

Should you not use it but tell everybody you are? The new term “AI washing” is hitting everywhere.

What’s the best option? Let’s explore.

Dove tells all it won’t use AI

Last week, Dove, the beauty brand celebrating 20 years of its Campaign for Real Beauty, pledged it would NEVER use AI in visual communication to portray real people.

In the announcement, they said they will create “Real Beauty Prompt Guidelines” that people can use to create images representing all types of physical beauty through popular generative AI programs. The prompt they picked for the launch video? “The most beautiful woman in the world, according to Dove.”

I applaud them for the powerful ad. But I’m perplexed by Dove issuing a statement saying it won’t use AI for images of real beauty and then sharing a branded prompt for doing exactly that. Isn’t it like me saying, “Don’t think of a parrot eating pizza. Don’t think about a parrot eating pizza,” and you can’t help but think about a parrot eating pizza right now?

Brandtech Group says it’s all in on AI

Now, Brandtech Group, a conglomerate ad agency, is going the other way. It’s going all-in on AI and telling everybody.

This week, Ad Age featured a press release — oops, I mean an article (subscription required) — with the details of how Brandtech is leaning into the takeaway from OpenAI’s Sam Altman, who says 95% of marketing work today can be done by AI.

A Brandtech representative talked about how they pitch big brands with two people instead of 20. They boast about how proud they are that their lean 7,000 staffers compete with 100,000-person teams. (To be clear, showing up to a pitch with 20 people has never been a good thing, but I digress.)

OK, that’s a differentiated approach. They’re all in. Ad Age certainly seemed to like it enough to promote it. Oops, I mean report about it.

False claims of using AI and not using AI

Offshoots of the all-in and never-will approaches also exist.

The term “AI washing” is de rigueur to describe companies claiming to use AI for something that really isn’t AI. The US Securities and Exchange Commission just fined two companies for using misleading statements about their use of AI in their business model. I know one startup technology organization faced so much pressure from their board and investors to “do something with AI” that they put a simple chatbot on their website — a glorified search engine — while they figured out what they wanted to do.

Lastly and perhaps most interestingly, companies have and will use AI for much of what they create but remain quiet about it or desire to keep it a secret. A recent notable example is the deepfake ad of a woman in a car professing the need for people to use a particular body wipe to get rid of body odor. It was purported to be real, but sharp-eyed viewers suspected the fake and called out the company, which then admitted it. Or was that the brand’s intent all along — the AI-use outrage would bring more attention?

This is an AI generated influencer video.

Looks 100% real. Even the interior car detailing.

UGC content for your brand is about to get really cheap. ☠️ pic.twitter.com/2m10RqoOW3

— Jon Elder | Amazon Growth | Private Label (@BlackLabelAdvsr) March 26, 2024

To yell or not to yell about your brand’s AI decision

Should a brand yell from a mountaintop that it uses AI to differentiate itself a la Brandtech? Or should a brand yell that it’s never going to use AI to differentiate itself a la Dove? Or should a brand use it and not yell anything? (I think it’s clear that a brand should not lie about whether it uses AI. That’s the worst of all choices.)

I lean far into the not-yelling-from-the-mountaintop camp.

When I see a CEO proudly exclaim that they laid off 90% of their support workforce because of AI, I’m not surprised a little later when the value of their service is reduced, and the business is failing.

I’m not surprised when I hear “AI made us do it” to rationalize a big tech company’s latest round of layoffs. Or when a big consulting firm announces it’s going all-in on using AI to replace its creative and strategic resources.

I see all those things as desperate attempts for short-term attention or a distraction from the real challenge. They may get responses like, “Of course, you had to lay all those people off; AI is so disruptive,” or “Amazing. You’re so out in front of the rest of the pack by leveraging AI to create efficiency. Let me cover your story.” Perhaps they get this response: “Your company deserves a bump in stock price because you’re already using this fancy new technology.”

But what happens if the AI doesn’t deliver as promoted? What happens the next time you need to lay off people? What happens the next time you need to prove you’re technologically forward-leaning?

Yelling out that you’re all in on a disruptive innovation, especially one the public doesn’t yet trust a lot, is (at best) a business sugar high. That short-term burst of attention may or may not foul your long-term brand value.

Interestingly, the same scenarios can manifest when your brand proclaims loudly it is all out of AI, as Dove did. The sugar high may not last, and now Dove has put itself into a messaging box. One slip could cause distrust among its customers. And what if AI gets good at demonstrating diversity in beauty?

I tried Dove’s instructions and prompted ChatGPT for a picture of “the most beautiful woman in the world according to the Dove Real Beauty ad.”

It gave me this. Then this. And this. And finally, this.

She’s absolutely beautiful, but she doesn’t capture the many facets of diversity Dove has demonstrated in its Real Beauty campaigns. To be clear, Dove doesn’t have any control over generating the image. Maybe the prompt worked well for Dove, but it didn’t for me. Neither Dove nor you can know how the AI tool will behave.

To use AI or not to use AI?

When brands grab a microphone to answer that question, they work from an existential fear about what the disruption means. They do not exhibit confidence in their own actions to deal with it.

Let’s return to Hamlet’s soliloquy:

Thus conscience doth make cowards of us all;

And thus the native hue of resolution

Is sicklied o’er with the pale cast of thought,

And enterprises of great pith and moment

With this regard their currents turn awry

And lose the name of action.

In other words, Hamlet says everybody is afraid to take real action because they fear the unknown outcome. You could act to mitigate or solve some challenges, but you don’t because you don’t trust yourself.

If I’m a brand marketer for any business (and I am), I’m going to take action on AI for my business. But until I see how I’m going to generate value with AI, I’m going to be circumspect about yelling or proselytizing about how my business’s future is better.

Cover image by Joseph Kalinowski/Content Marketing Institute