
How LLMs Enhance Customer Experience & Business Value

The rise of Large Language Models (LLMs) and other Generative AI models is ushering in an era of hyper-personalisation at scale.

Customers increasingly expect fast and effective responses, whether for customer support or for helpful, tailored recommendations, product search and discovery, and information.

LLMs have been key to advancing Generative AI, as the natural language capabilities of computers have advanced rapidly.

Advancing the language capabilities of computers has been a key aspect of recent AI development, and this is set to continue in 2024. Language capabilities allow AI agents to personalise engagement with customers and, on the internal operations side, to assist enterprise staff in their work and complete tasks more efficiently.

McKinsey have estimated, in a report entitled ‘The economic potential of generative AI: The next productivity frontier’, that Generative AI could add $2.6 trillion to $4.4 trillion to the global economy annually, and that up to ¾ of the total annual value of its use cases could come from marketing and sales, customer operations, R&D and software engineering.

BCG points out how Generative AI may generate content, improve efficiency (for example, by summarising documents) and personalise experiences by identifying patterns in a given customer’s behaviour and generating tailored information for that particular person. However, BCG also notes that the wider adoption of Generative AI may create challenges for organisations that have not implemented suitable governance arrangements. These include bias and toxicity; data leakage, whereby sensitive, proprietary data entered into a Generative AI model may reappear in the public domain; and a lack of transparency.

Therefore, it is important that businesses offer reliable and trustworthy solutions for both their external customers and their internal operational needs. With this in mind, it is worth considering the OTTES criteria:

-

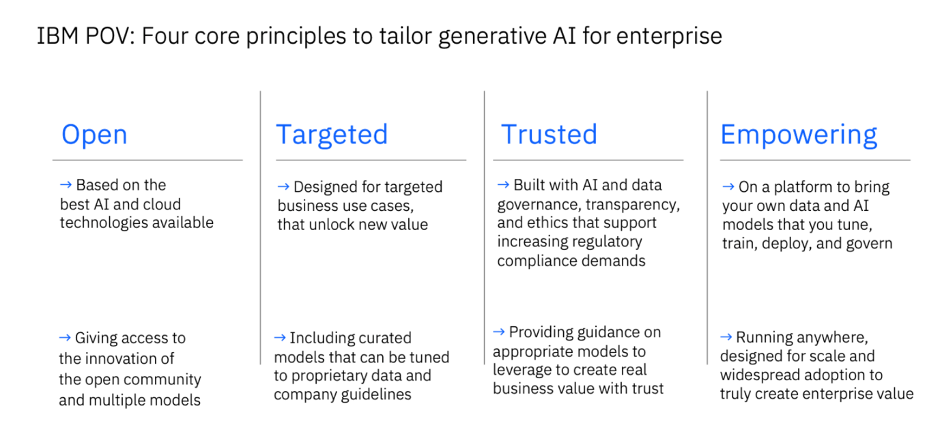

Open: whereby the model has enough variation to meet the needs of enterprise use cases and requirements for compliance obligations.

-

Trusted: whereby customers may apply their own proprietary data, develop an AI model and, in the process, ensure that ethical, regulatory and legal matters are managed appropriately.

-

Targeted: solution that is designed for business use cases with the intention to unlock new value.

-

Empowering: the model allows the user to become a value creator across training, fine-tuning, deployment and data governance.

-

Sustainability: working with a LLM provider who is committed to achieving net-zero emissions of greenhouse gasses.

I am delighted to collaborate with IBM watsonx and to set out how watsonx enables an LLM solution that meets the OTTES criteria. I am also delighted that IBM have committed to sustainability, with a target of net-zero operational greenhouse gas emissions by 2030, and have reported that the firm has reduced its operational greenhouse gas emissions by 61% since 2010.

In addition, IBM have set out the following core principles for Generative AI for enterprise:

What is Natural Language Processing (NLP)? And what is a Large Language Model (LLM)?

NLP is the area of computer science, and in particular of Artificial Intelligence (AI), that gives computers the ability to understand text and speech in a similar manner to humans.

Natural Language Understanding (NLU) and Natural Language Generation (NLG) are subsets of NLP. NLU focuses on deriving understanding from language (speech or text). NLG focuses on generating language (speech or text) that humans can understand.

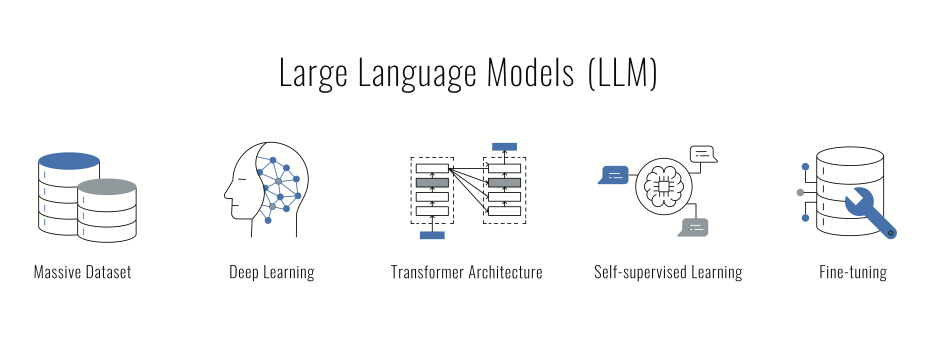

An LLM is a type of foundation model trained on a vast dataset, which enables it to perform NLP tasks such as understanding and generating language, and in some cases even wider tasks (multimodal, multitask).

Many firms, including IBM, have been developing NLP capabilities over an extended period, along with the powerful Machine Learning methods, and in particular the Deep Neural Network architectures, required to advance NLP. Most notable is the Transformer with its Self-Attention Mechanism, which LLMs typically use.

The paper ‘Attention Is All You Need’ (2017) introduced the concept; for more on how Transformers with the Self-Attention Mechanism work, see:

Transformer-based LLMs are giant models, and building a foundation LLM from scratch requires large amounts of data, powerful server capabilities and software engineering expertise (data engineers, natural language processing experts).
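The core operation introduced in that 2017 paper is scaled dot-product attention: each token's query vector is compared with every token's key vector, the scores are divided by the square root of the key dimension d_k and passed through a softmax, and the resulting weights are used to mix the value vectors, i.e. softmax(QKᵀ / √d_k) · V. The following is a minimal pure-Python sketch of a single attention head. It assumes random, untrained projection matrices and uses hypothetical helper names; it illustrates the mechanism only and is not how IBM's Granite models or any production LLM is implemented.

```python
import math
import random

def transpose(m):
    """Swap rows and columns of a list-of-lists matrix."""
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    """Multiply an (n x p) matrix by a (p x m) matrix."""
    b_cols = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in b_cols] for row in a]

def softmax(row):
    """Turn a row of scores into weights that are positive and sum to 1."""
    m = max(row)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(q, k, v):
    """softmax(Q K^T / sqrt(d_k)) V for a single attention head."""
    d_k = len(q[0])
    scores = matmul(q, transpose(k))                    # query-key similarities
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]          # one distribution per query
    return matmul(weights, v), weights                  # weighted mix of value vectors

# Self-attention: queries, keys and values are all projections of the same tokens.
# Random matrices stand in for learned projection weights (illustrative only).
random.seed(0)
n_tokens, d_model = 4, 8

def random_matrix(rows, cols):
    return [[random.gauss(0.0, 1.0 / math.sqrt(d_model)) for _ in range(cols)]
            for _ in range(rows)]

x = random_matrix(n_tokens, d_model)  # stand-in token embeddings
w_q, w_k, w_v = (random_matrix(d_model, d_model) for _ in range(3))
output, weights = scaled_dot_product_attention(
    matmul(x, w_q), matmul(x, w_k), matmul(x, w_v))

for i, row in enumerate(weights):
    print(f"token {i} attention weights: {[round(w, 3) for w in row]}")
```

Each row of `weights` shows how strongly one token draws on every token in the sequence. A real Transformer runs many such heads in parallel, with learned weights and optimised tensor libraries, and stacks them in many layers.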

The attention of the public and many C-suite teams has been captured by the likes of OpenAI’s ChatGPT and its GPT-3 and GPT-4 models, and the developer community has also been excited by the likes of Meta’s Llama models and other open-source models.

Some firms seek easily implementable solutions from a reliable, trustworthy enterprise supplier with a strong balance sheet.

Furthermore, many firms in the Small and Medium Enterprise (SME) segment could benefit massively from the opportunities that LLMs offer but lack the internal resources to access and scale the more complex models.

For firms in these categories seeking a solution from a reliable and secure counterparty, IBM have launched the Granite model series on watsonx.ai as part of IBM’s Generative AI offering, alongside services including watsonx Assistant and watsonx Orchestrate.

Application of LLMs

LLMs, trained on huge amounts of data, provide language understanding and text generation as well as other types of content generation. From a use case perspective, LLMs can infer from context and generate coherent, contextually relevant responses, and can perform text summarisation, language translation, sentiment analysis, question answering, code generation and creative writing.

These abilities stem from the vast size of LLMs (often billions of parameters), and building and training an LLM from scratch typically requires significant resources (data, time, engineers, servers with GPUs and money). This is why many firms, particularly those outside the tech sector, use LLM services available in the market.

The section below sets out how, according to IBM, watsonx may enable the user to work with LLM technology that is secure, reliable and relatively easy to adopt.

An Overview of Watsonx

Further advantages of IBM watsonx are set out below:

In essence, one may surmise that the key advantage of the Granite model series for enterprise is that it consists of business-targeted, IBM-developed foundation models built from sound data.

Embeddable AI Partnership: Independent Software Vendors (ISVs) and Managed Service Providers (MSPs)

Furthermore, software companies can embed watsonx into their commercial AI software solutions. Partnership opportunities allow these solutions to be enhanced with hybrid cloud and AI technology, supporting expansion into new markets, and provide established go-to-market tools that help amplify sales.

Moreover, the NLP solutions from watsonx may accelerate the business value generated by AI through a flexible portfolio of services, applications and libraries. A particular advantage is that the technology is accessible to people who are not data scientists, including non-technical users, thereby enhancing productivity and business processes.

Use Case Examples: Retail, E-Commerce, Marketing, Advertising Sectors

Brands and firms can now engage with customers in a more meaningful and customised manner. LLMs can provide potential customers with useful information and helpful advice about a product or service, and brands can use an LLM to create personalised campaigns and targeted promotions.

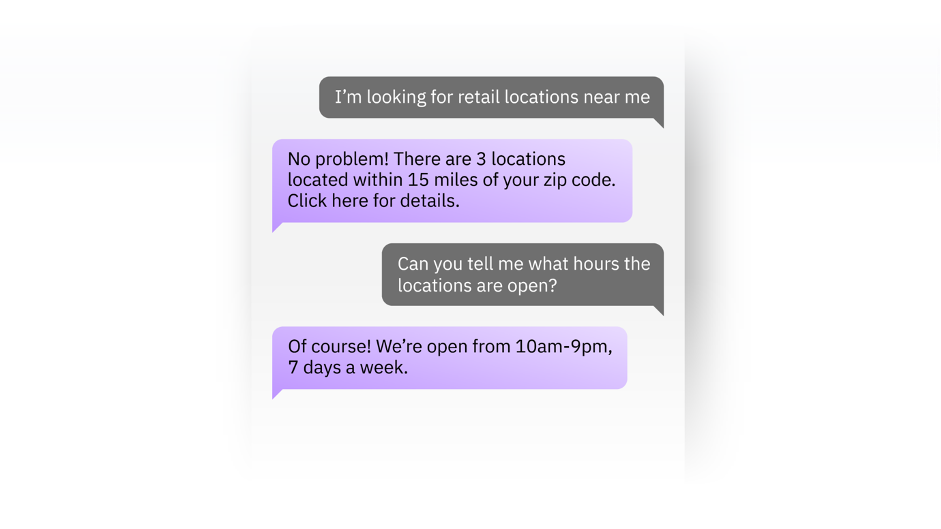

Furthermore, LLMs can be used to generate content, whether social media posts and blogs or more creative content such as poetry, that may engage potential customers. LLMs can also power more capable and effective chatbots that operate 24/7 and assist with customer queries, thereby improving the customer experience and user engagement.

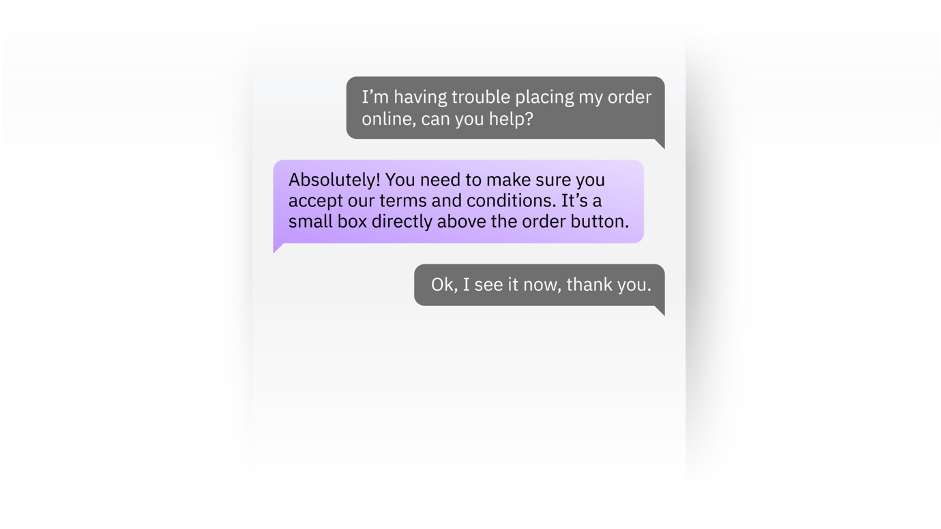

An example of enhanced customer support in the retail sector from watsonx Assistant is to inform every interaction, for example by enabling customers to find the stores nearest to them.

Source: IBM

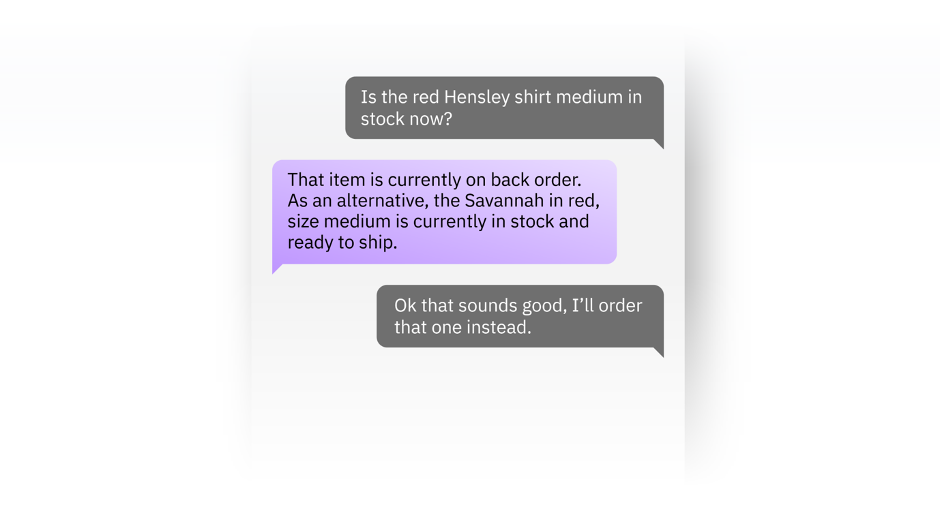

A further example is to advise on every possibility: proactively engaging customers in a meaningful and useful way, offering recommendations and accurately informing them about the relevant possibilities.

Source: IBM

A third example is to guide every experience: accurately responding to customer inquiries with personalised guidance, and capturing sales leads in a meaningful manner by understanding customer intent and enabling personalised follow-up engagement by an agent.

Source: IBM

Case studies include:

- Camping World: an implementation to reshape call centres, which reportedly increased customer engagement by 40% across all platforms, reduced waiting times to 33 seconds and increased agent efficiency by 33%.
- Stiky: providing 24×7 customer service, answering up to 90% of queries automatically, conducting on average 165 conversations daily and achieving a 92% positive customer satisfaction (CSAT) rating.
- Bestseller India: boosting fashion forecasting.

Furthermore, it is worth noting that according to Google “Consumers are 40% more likely to spend more than they originally planned when their retail experience is highly personalized to their needs.”

Use Case Examples: Banking Financial Services / Fintech

LLMs may provide insights from analyst reports, news articles, social media content and industry publications.

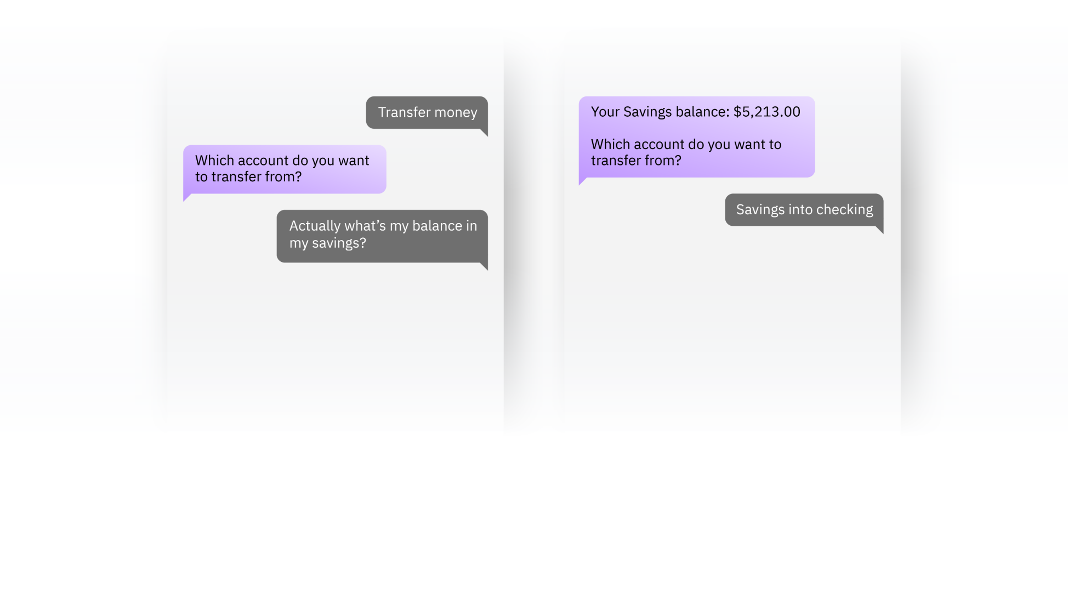

The watsonx service may be applied to empowering customer self-service, so that customers gain rapid access to core banking actions such as finding branch locations, checking account balances, and making payments and transfers, and can resolve their support issues independently.

Source: IBM

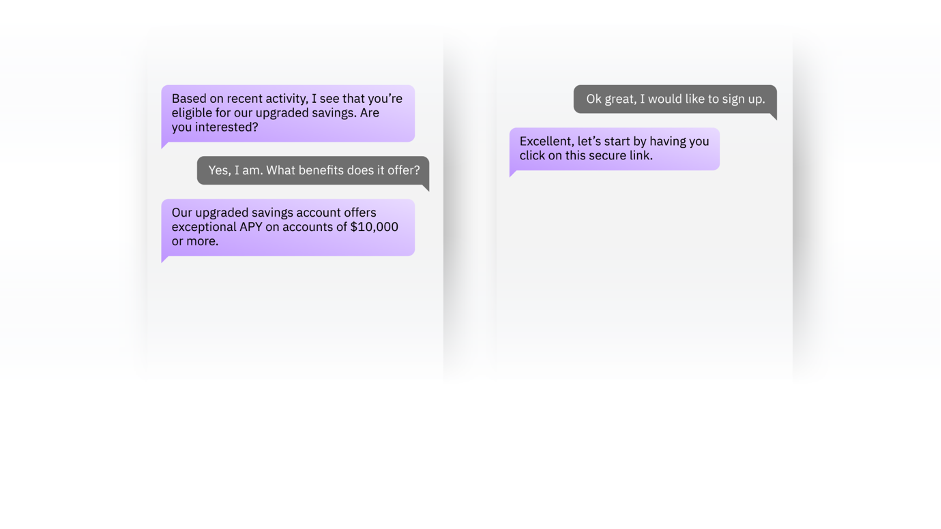

A further example is contextualising experiences to drive outcomes in banking, for example by responding to customers with relevant suggestions and helpful guidance, thereby improving the customer experience (CX).

Source: IBM

Moreover, the watsonx agent may also suggest helpful next steps, providing customers with intelligent recommendations and proactively informing them about opportunities so that they accurately understand the possibilities relevant to their situation.

Source: IBM

An advantage of this approach is the delivery of human-like interactions irrespective of the channel through which a customer inquiry arrives or the language spoken. In the examples provided, watsonx Assistant applies natural language processing (NLP) to bring customer engagements closer to human levels while responding quickly (effectively in real time).

Case study examples are provided by:

Use Case Examples: Insurance / Insurtech

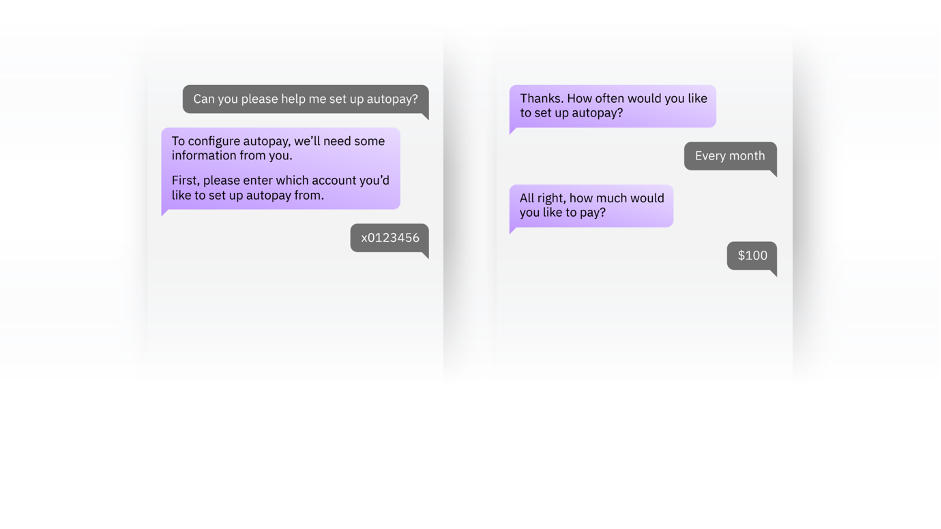

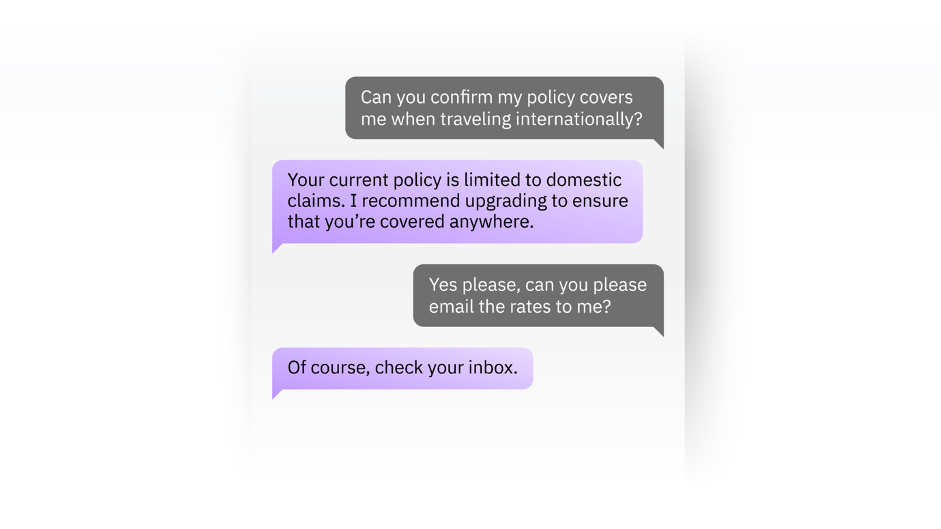

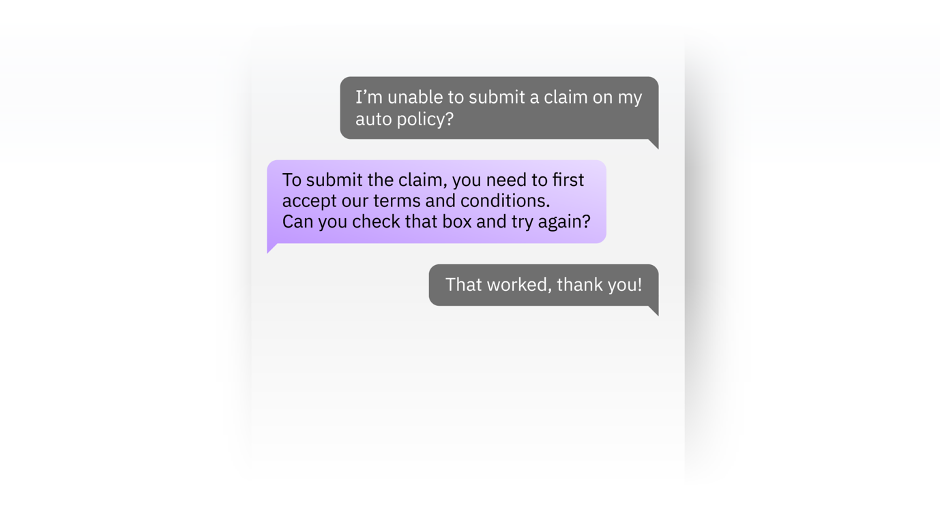

Use case examples include agent assist, which enables customers to ask basic questions, such as how to reset a password or where to find information about their insurance policy, and provides rapid responses to requests for quotes and pricing, coverage checks, claims processing and policy-related matters.

Source: IBM

Moreover, insurance firms may also apply watsonx to digital customer support, enabling customer engagement and integrating an AI agent within existing systems and processes.

Source: IBM

Case study examples include:

IBM commissioned a Forrester Total Economic Impact (TEI) study, which found that watsonx Assistant customers saw the following benefits:

Enterprises increasingly face a race to adopt Generative AI capabilities or be left behind by their competitors, in particular if rivals can offer enhanced CX and customer engagement.

However, a business, whether a large corporation or an SME, must carefully evaluate which option best suits its needs. As noted, many firms lack the internal resources and core competences to match a technology major in developing a state-of-the-art LLM. Equally, many firms evaluating the available open-source models will need to assess the pipeline architecture, the engineering resources required for a real-world production system, and governance requirements. For these firms, a trusted counterparty that can deliver reliably and securely is key.

IBM have summarised how watsonx meets the OTTES criteria set out at the start of this article:

- Open: according to IBM, watsonx is based on the best open technologies available, providing model variety to cover enterprise use cases and compliance requirements.
- Trusted: IBM state that watsonx is designed with principles of transparency, responsibility and governance, enabling customers to build AI models trained on their own trusted data to help manage legal, regulatory, ethical and inaccuracy concerns.
- Targeted: IBM point out that watsonx is designed for enterprise and targeted at business domains, with business use cases that unlock new value.
- Empowering: according to IBM, watsonx allows users to go beyond being AI users and become AI value creators: they can train, fine-tune, deploy and govern the data and AI models they bring to the platform, and completely own the value those models create.
- Sustainability: as noted above, IBM targets net-zero operational greenhouse gas emissions by 2030.

About the Author

Imtiaz Adam is a Hybrid Strategist and Data Scientist. He is focussed on the latest developments in artificial intelligence and machine learning techniques, with a particular focus on deep learning. Imtiaz holds an MBA (Distinction), an MSc in Computer Science with research in AI (Distinction) from the University of London, and an MSc in Finance with Quantitative Econometric Modelling (Distinction) from Cass Business School; he is also a Sloan Fellow in Strategy of London Business School. He is the Founder of Deep Learn Strategies Limited, and served as Director & Global Head of a business he founded at Morgan Stanley in Climate Finance & ESG Strategic Advisory. He has strong expertise in enterprise sales & marketing, data science, and corporate & business strategy.

#IBMPartner

Source for performance data: IBM. Results may vary and are provided on a non-reliance basis. Although the author has made reasonable efforts to research the information, the author makes no representations, warranties or guarantees, whether express or implied, in relation to the content of this article.


Why Malia Obama Received Major Criticism Over A Secret Facebook Page Dissing Trump

Given the divisive nature of both the Obama and Trump administrations, it’s unsurprising that reactions to Malia Obama’s alleged secret Facebook account would be emotional. Many online users were quick to jump to former President Donald Trump’s defense, with one user writing: “Dear Malia: Do you really think that anyone cares whether you and/or your family likes your father’s successor? We’re all trying to forget you and your family.”

Others pointed to what they saw as a double standard among those who condemn Trump for hateful rhetoric but praise people like Malia for speaking out against her father’s successor in language that critics considered hateful. Some users seemed bent on criticizing Malia simply because they don’t like her or her father, suggesting that the eldest Obama daughter couldn’t win for losing when it came to the public’s perception of her or her online presence.

The secret Facebook situation is not all that dissimilar to the criticism Malia faced over her professional name at the 2024 Sundance Film Festival. In that instance, people ironically accused Malia of using her family’s name to get into the competitive festival while also condemning her for choosing not to use her surname, going by Malia Ann instead.


Best Practices for Data Center Decommissioning and IT Asset Disposition

Data center decommissioning is a complicated process that requires careful planning and experienced professionals.

If you’re considering shutting down or moving your data center, here are some best practices to keep in mind:

Decommissioning a Data Center is More than Just Taking Down Physical Equipment

Decommissioning a data center is more than just taking down physical equipment. It involves properly disposing of data center assets, including servers and other IT assets that can contain sensitive information. The process also requires a team with the right skills and experience to ensure that all data has been properly wiped from storage media before the media are disposed of.

Data Centers Can be Decommissioned in Phases, Which Allows For More Flexibility

When you begin your data center decommissioning process, it’s important to understand that it’s not a single event. Instead, it’s a process that takes place over time and in phases. This flexibility allows you to adapt as circumstances change and make adjustments based on your unique situation. For example:

- You may start by shutting down parts of the facility while keeping others running until they are no longer needed or no longer cost-effective to run.
- When you’re ready for full shutdown, some equipment may still be in use at other locations within the company (such as remote offices). Equipment that is not currently needed can be moved into storage until it is needed again.

Data Center Decommissioning is Subject to Compliance Guidelines

Data center decommissioning is subject to compliance guidelines. Compliance guidelines may change, but they are always in place to ensure that your organization is following industry standards and best practices.

- Local, state and federal regulations: check local ordinances regarding the disposal of any hazardous materials used in your data center (such as lead-based paint), as well as any other applicable environmental or safety laws. If you’re unsure how these might affect your plans for a decommissioned facility, consult an attorney who specializes in this area of law before proceeding with any IT asset disposition or building demolition.
- Industry standards: many industry associations are dedicated specifically to helping businesses stay compliant with legal requirements on projects such as data center decommissioning.
- Internal policies & procedures: make sure all staff understand why compliance matters, not just from a regulatory standpoint but also from an ethical one; nobody wants their name associated with anything inappropriate!

Companies Should Consider Safety and Security During the Decommissioning Process

Data center decommissioning is a complex process that involves several steps. Companies need to consider the risks associated with each step and should have a plan in place to mitigate them. The first step is identifying all assets and determining which ones will be reused or repurposed. At this point, you should also estimate how long each asset will take to repurpose or recycle, so that you can estimate the cost of this part of the project (for example, based on previous experience).
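The first step described above (building an asset inventory, assigning each asset a disposition, and estimating time and cost) can be sketched as a small Python script. This is a minimal illustration under assumptions: the field names, the three disposition categories, the labour-hour figures and the hourly rate are all hypothetical placeholders, not figures from this article or from any ITAD provider.

```python
from dataclasses import dataclass
from enum import Enum

class Disposition(Enum):
    # Hypothetical categories mirroring the reuse / repurpose / recycle decision
    REUSE = "reuse"
    REPURPOSE = "repurpose"
    RECYCLE = "recycle"

@dataclass
class Asset:
    asset_id: str
    kind: str
    holds_data: bool          # storage-bearing assets need a verified wipe before leaving site
    disposition: Disposition
    est_hours: float          # estimated labour hours, e.g. based on previous projects

def estimate(assets, hourly_rate):
    """Return total labour hours, total cost, and hours broken down by disposition."""
    hours_by_disposition = {d: 0.0 for d in Disposition}
    for a in assets:
        hours_by_disposition[a.disposition] += a.est_hours
    total_hours = sum(hours_by_disposition.values())
    return total_hours, total_hours * hourly_rate, hours_by_disposition

# Hypothetical inventory for illustration only
inventory = [
    Asset("srv-001", "server", True, Disposition.REUSE, 1.5),
    Asset("srv-002", "server", True, Disposition.RECYCLE, 2.0),
    Asset("sw-001", "switch", False, Disposition.REPURPOSE, 0.5),
]

hours, cost, breakdown = estimate(inventory, hourly_rate=80.0)
needs_wipe = [a.asset_id for a in inventory if a.holds_data]
print(f"{hours} h, ${cost:.2f}; wipe before disposal: {needs_wipe}")
```

Keeping the `holds_data` flag on each record means the same inventory that drives the cost estimate also lists which assets must have their data wiped before disposal.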

The second step involves removing any hazardous materials from electronic equipment before it is sent off site for recycling; this includes chemicals used in manufacturing, such as those found in solder and circuit-board materials. Once these substances have been removed, the devices can safely go through any other necessary recycling processes, such as removing plastic housing material.

With Proper Planning and an Effective Team, You’ll Help Protect Your Company’s Future

Data center decommissioning is a complex process that should be handled by a team of experts with extensive experience in the field. With proper planning, you can ensure a smooth transition from your current data center environment to the next one.

The first step toward a successful data center decommissioning project is to create a plan for removing hardware and software assets from the building, and to document how these assets were originally installed in the facility. This will allow you, or a team member who inherits some of these assets later, to easily determine where they need to go when it is time to move them again (or dispose of them).

Use Professional Data Center Decommissioning Companies

To get the most out of your data center decommissioning project, it’s important to use a professional data center decommissioning company. Such a company has experience with IT asset disposition and can help you avoid mistakes in the process. It also has the tools and expertise needed to perform all aspects of your project efficiently, from pre-planning through finalizing documentation.

Proper Planning Will Help Minimize the Risks of Data Center Decommissioning

Proper planning is the key to success when it comes to the data center decommissioning process. It’s important that you don’t wait until the last minute and rush through this process, as it can lead to mistakes and wasted time. Proper planning will help minimize any risks associated with shutting down or moving a data center, keeping your company safe from harm and ensuring that all necessary steps are taken before shutdown takes place.

To Sum Up

The key to a successful IT asset disposition (ITAD) program is planning ahead. The best way to avoid unexpected costs and delays is to plan your ITAD project carefully before you start. The best practices described in this article will help you understand what it takes to decommission an entire data center or other large facility, as well as how to dispose of its assets in an environmentally responsible manner.

OTHER

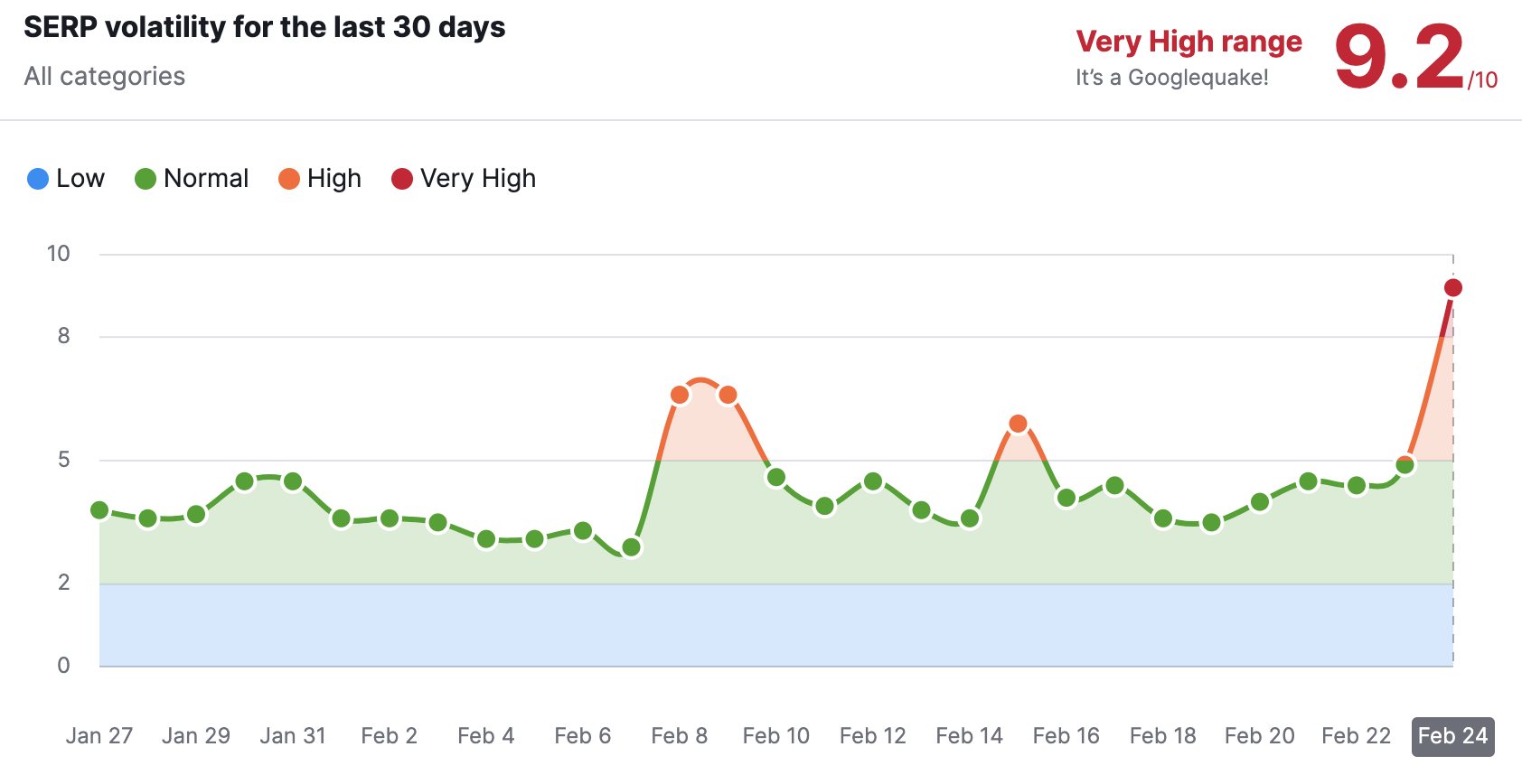

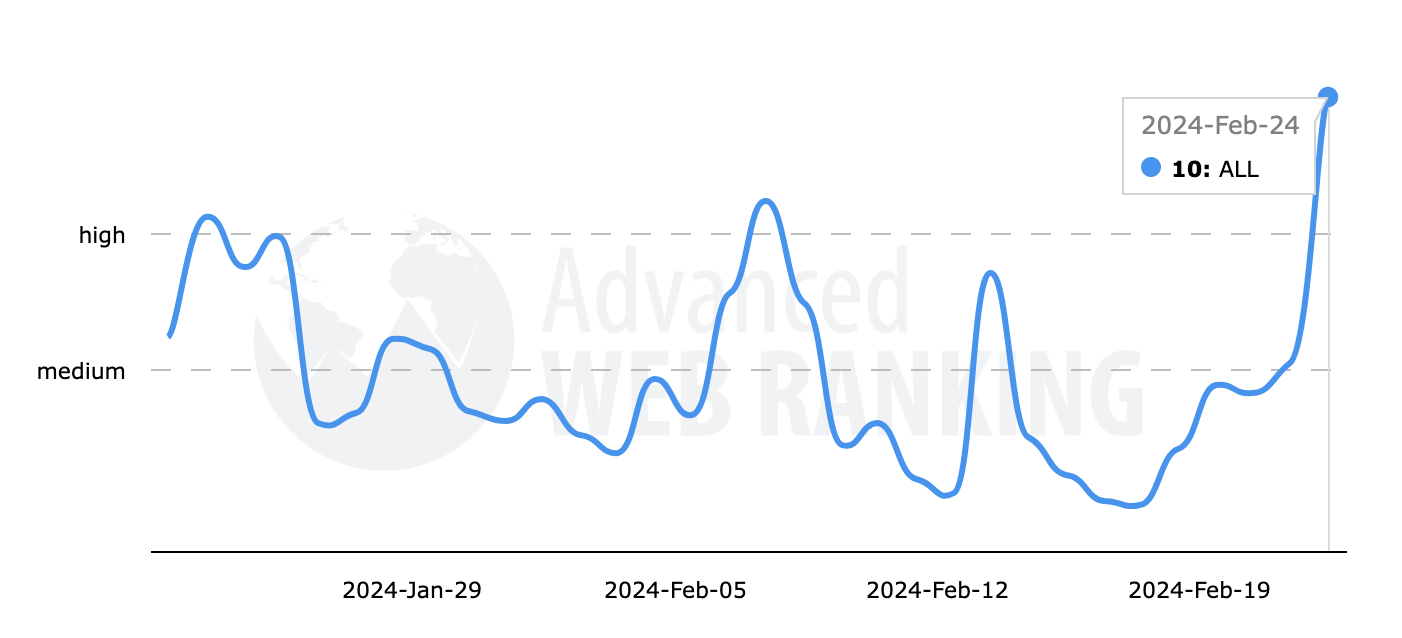

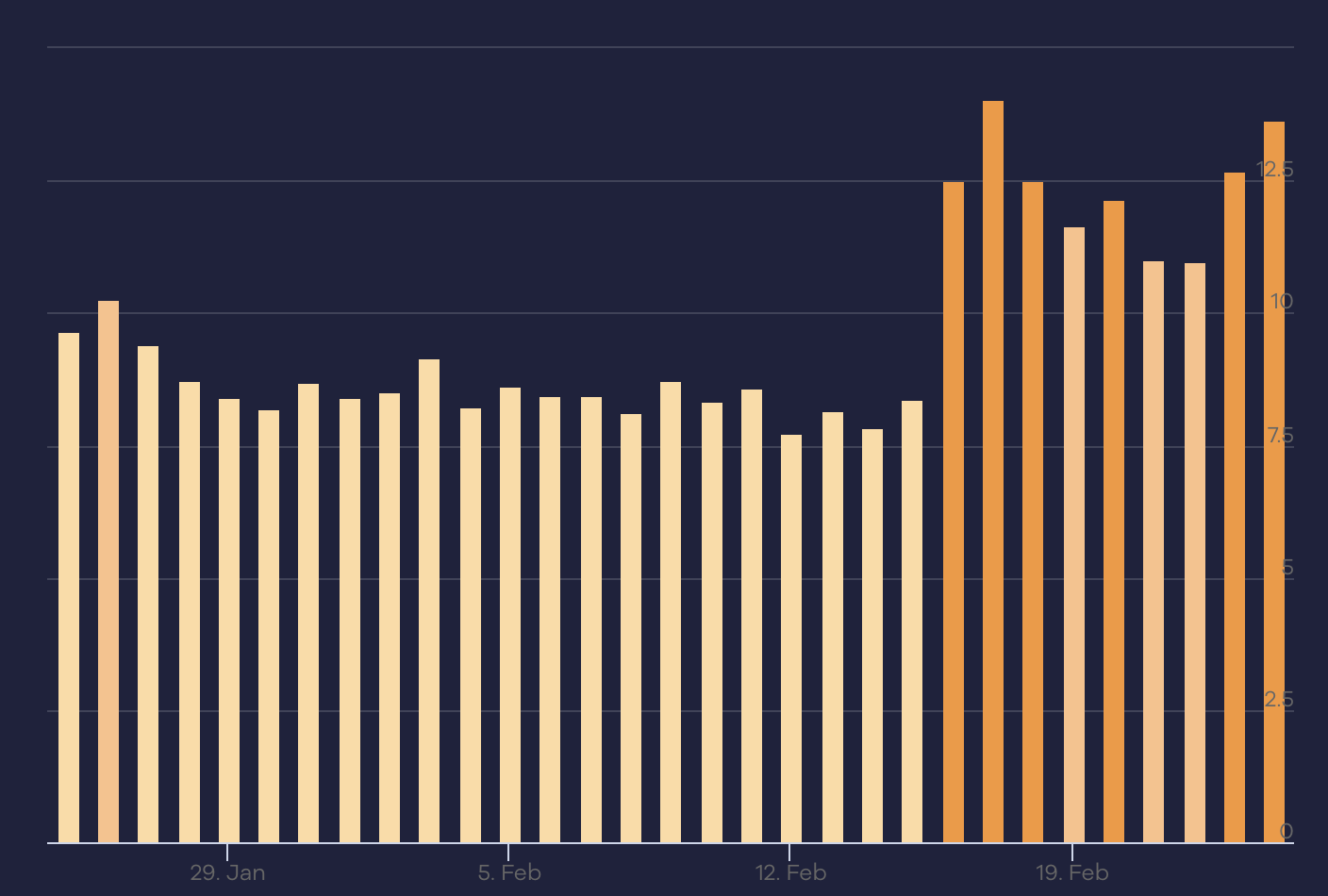

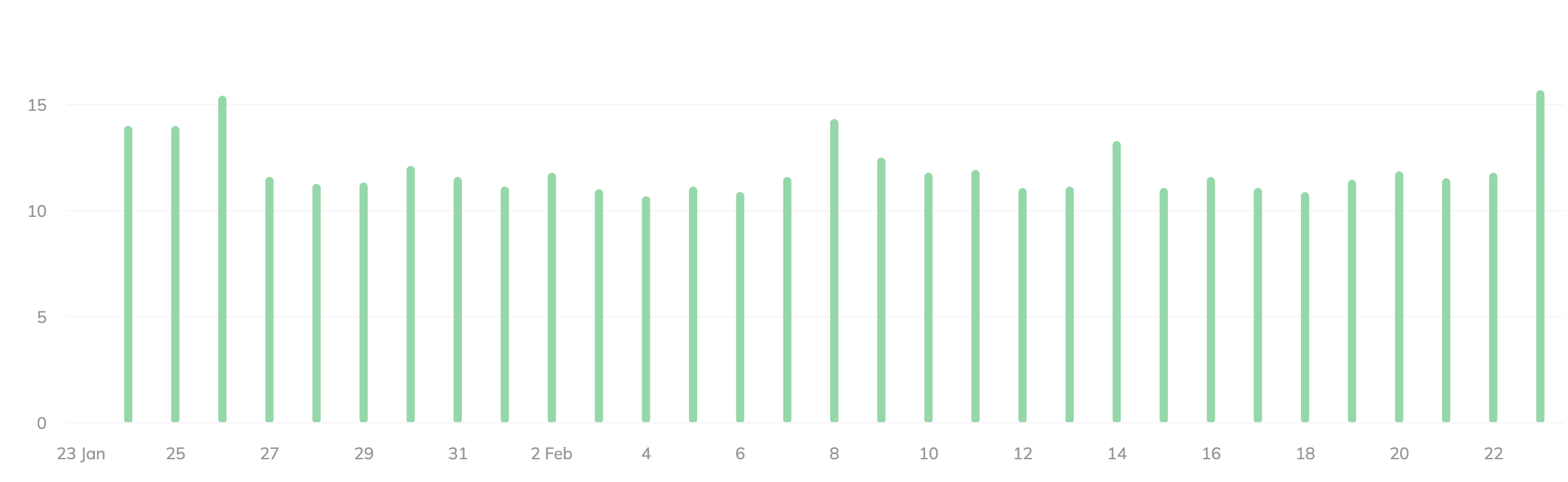

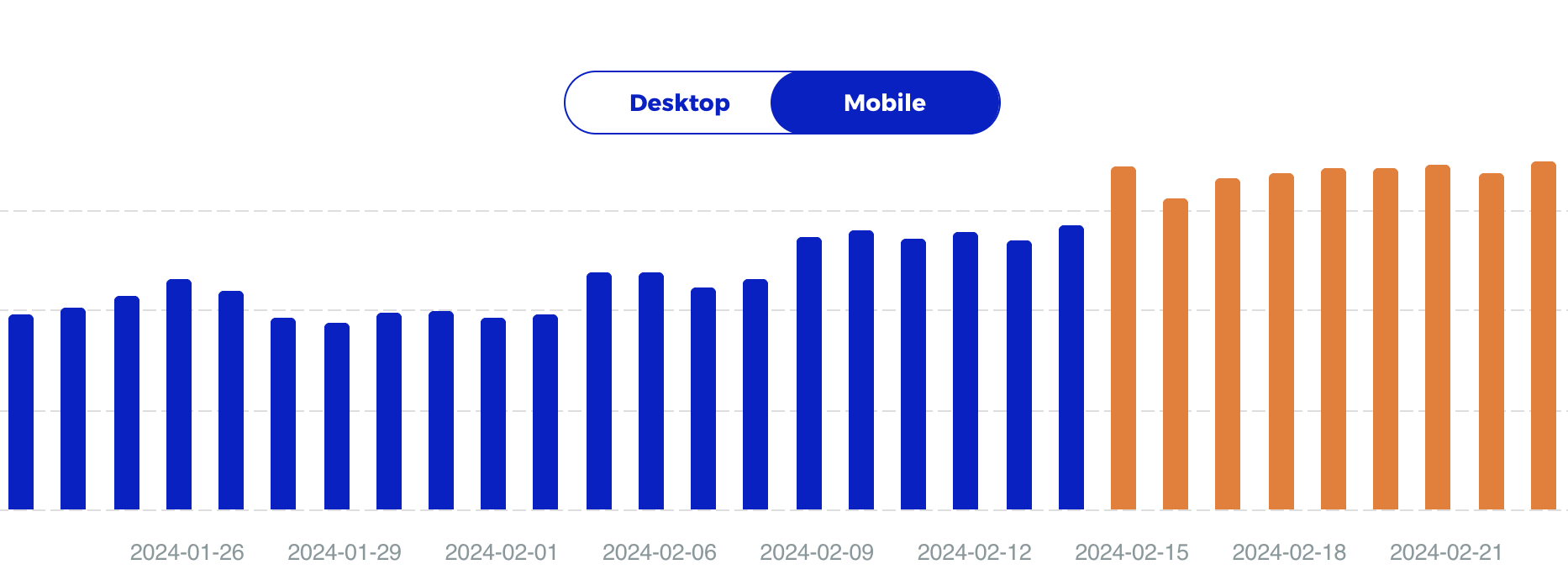

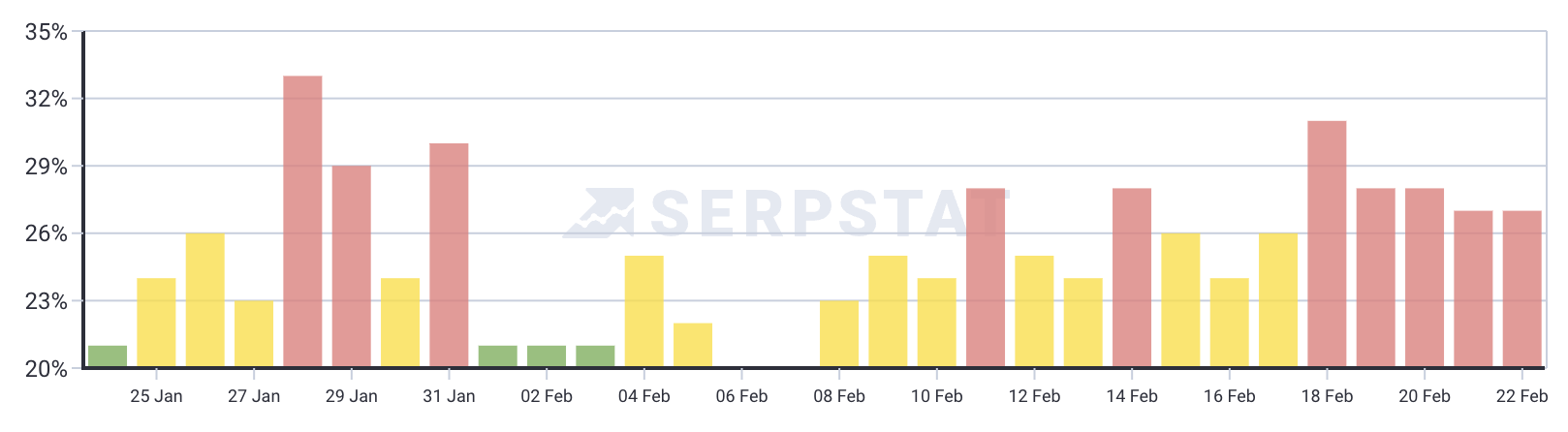

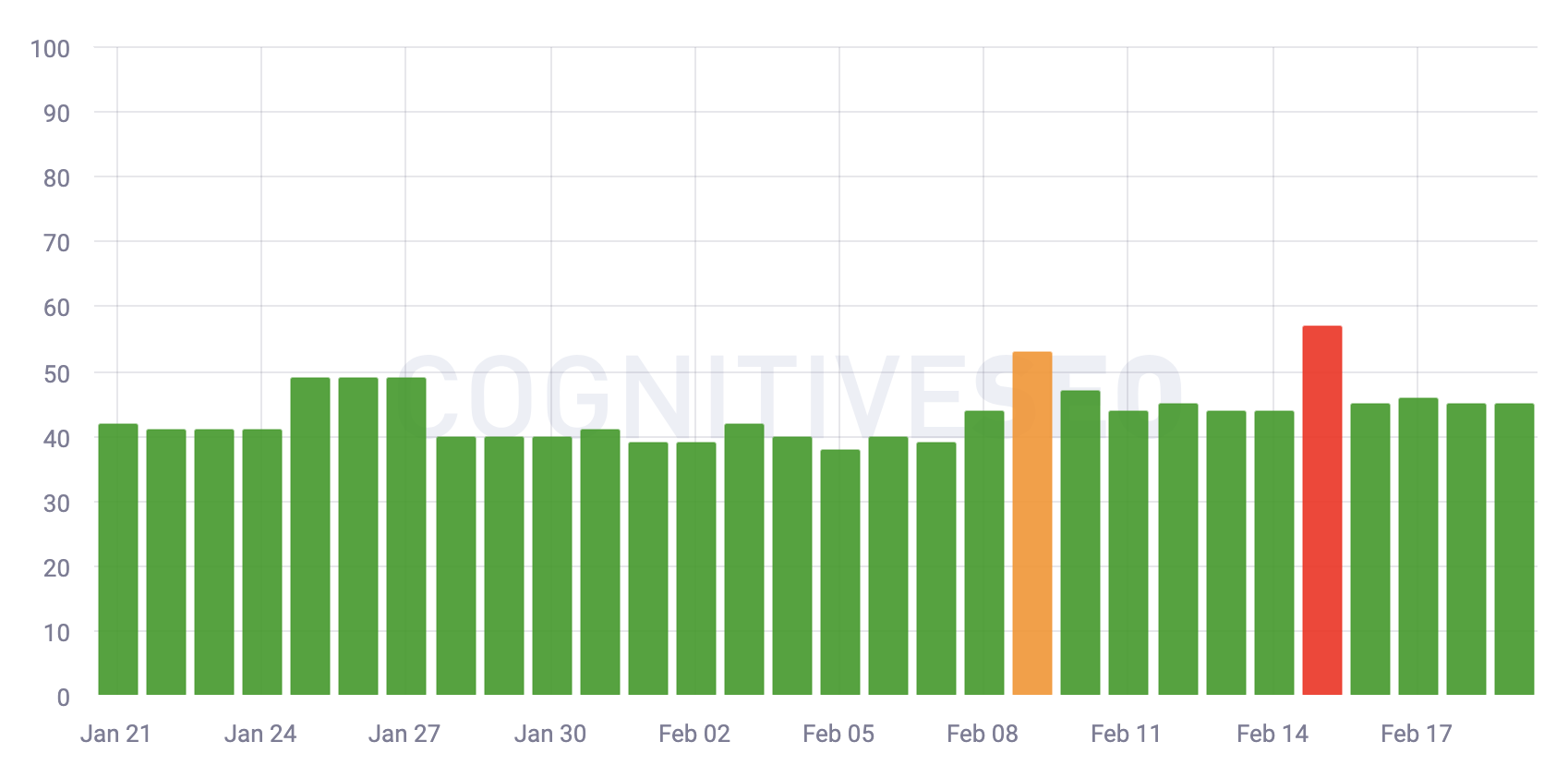

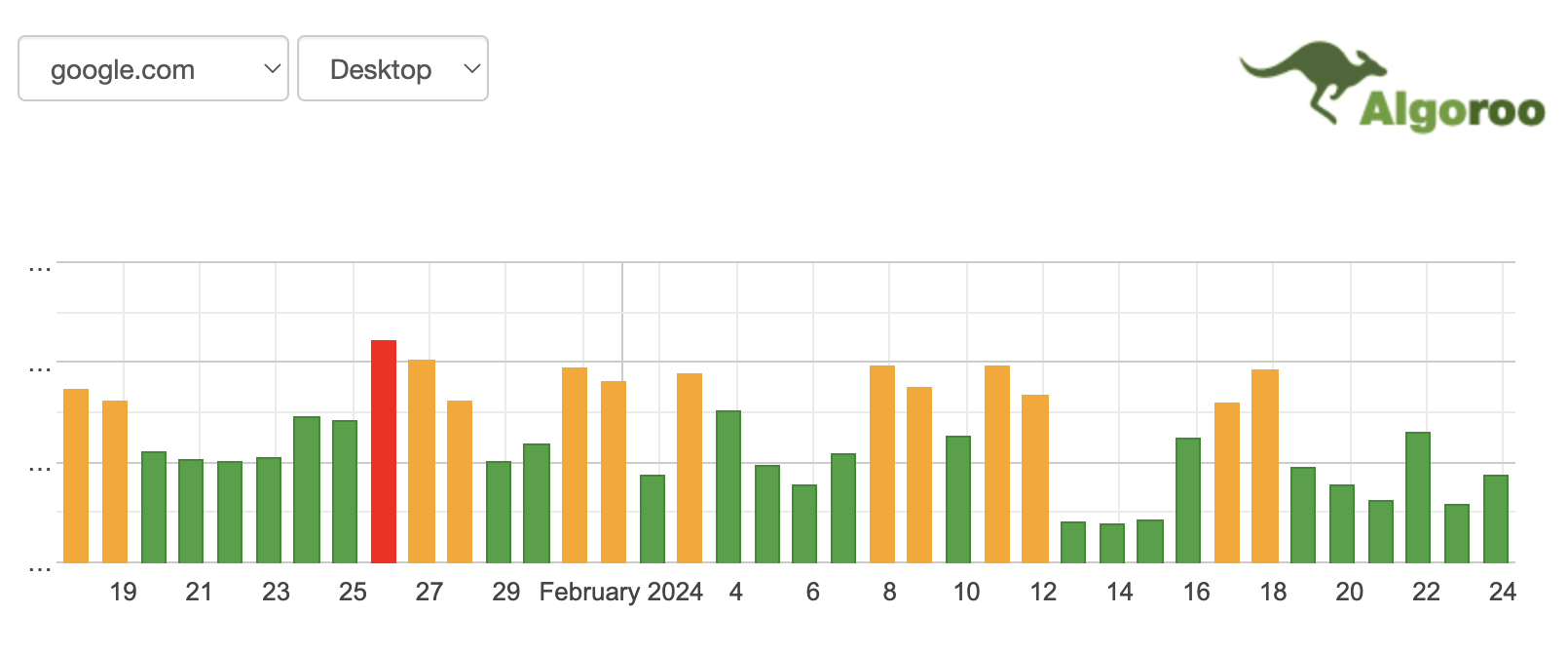

Massive Volatility Reported – Google Search Ranking Algorithm Update

I am seeing some massive volatility being reported today after seeing a spike in chatter within the SEO community on Friday. I have not seen the third-party Google tracking tools show this much volatility in a long time. I will say the tracking tools are way more heated than the chatter I am seeing, so something might be off here.

Again, I saw some initial chatter from within the SEO forums and on this site starting on Friday. I decided not to cover it on Friday because the chatter was not at the levels that would warrant me posting something. Plus, while some of the tools started to show a lift in volatility, most of the tools did not yet.

Well, that changed today, and the tools are all superheated.

To be clear, Google has not confirmed that any update is officially under way.

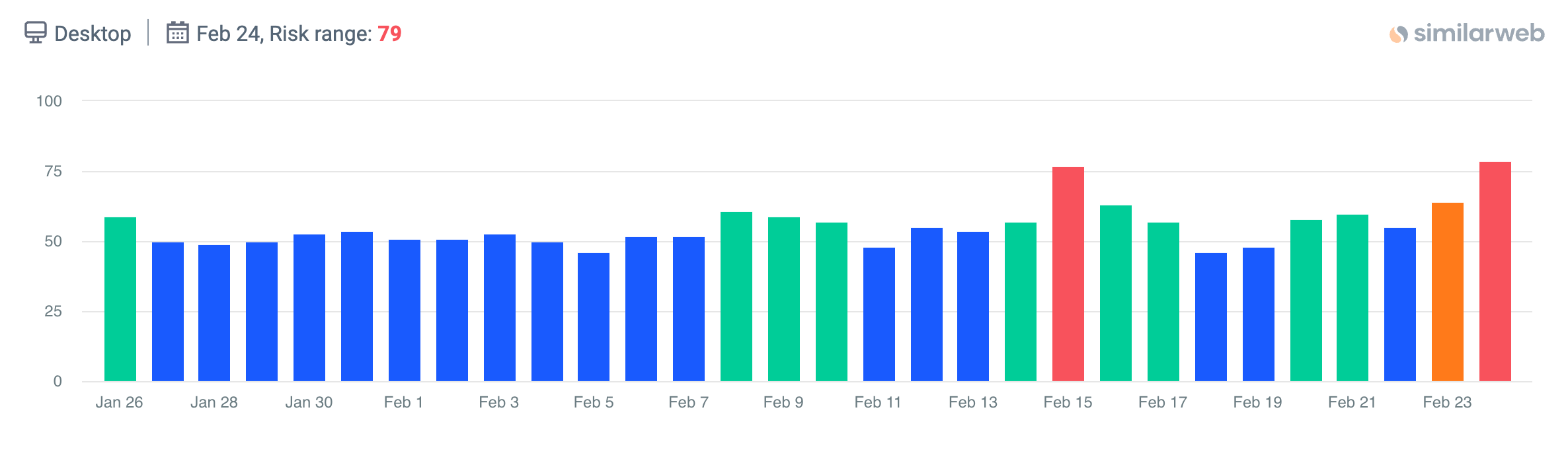

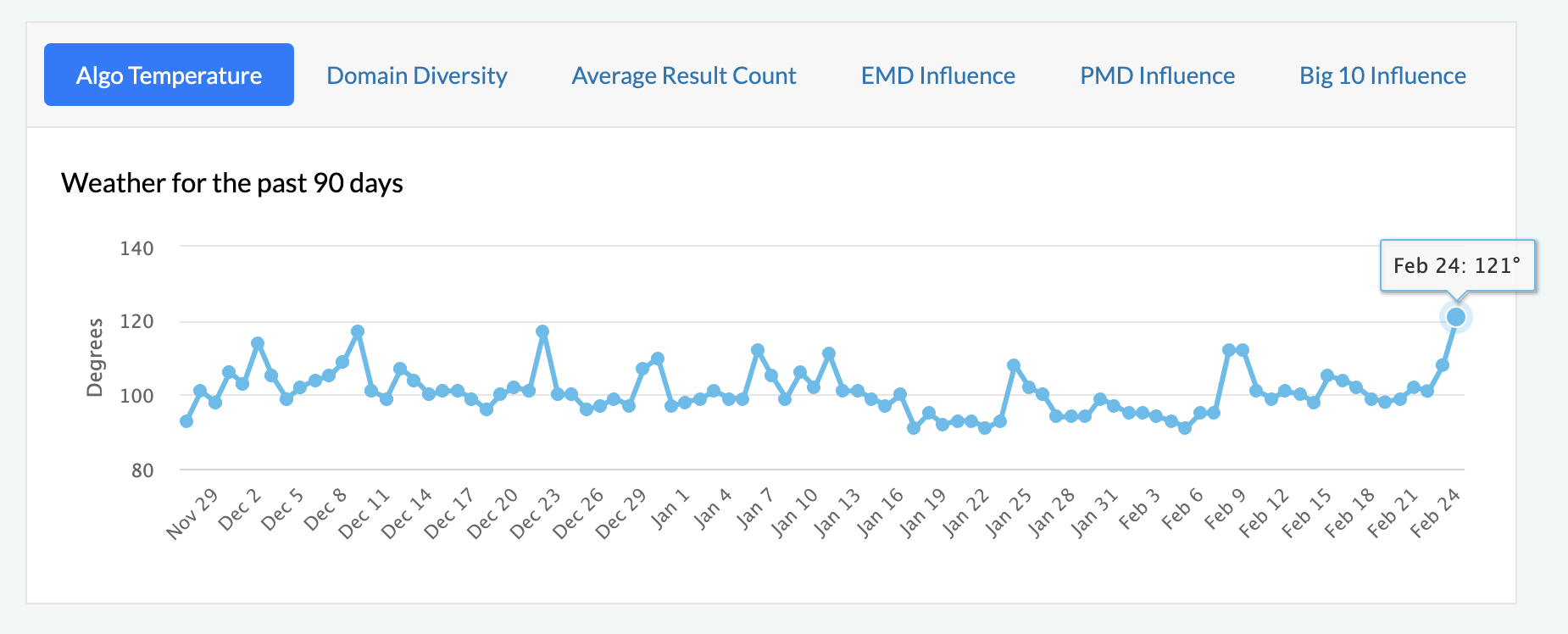

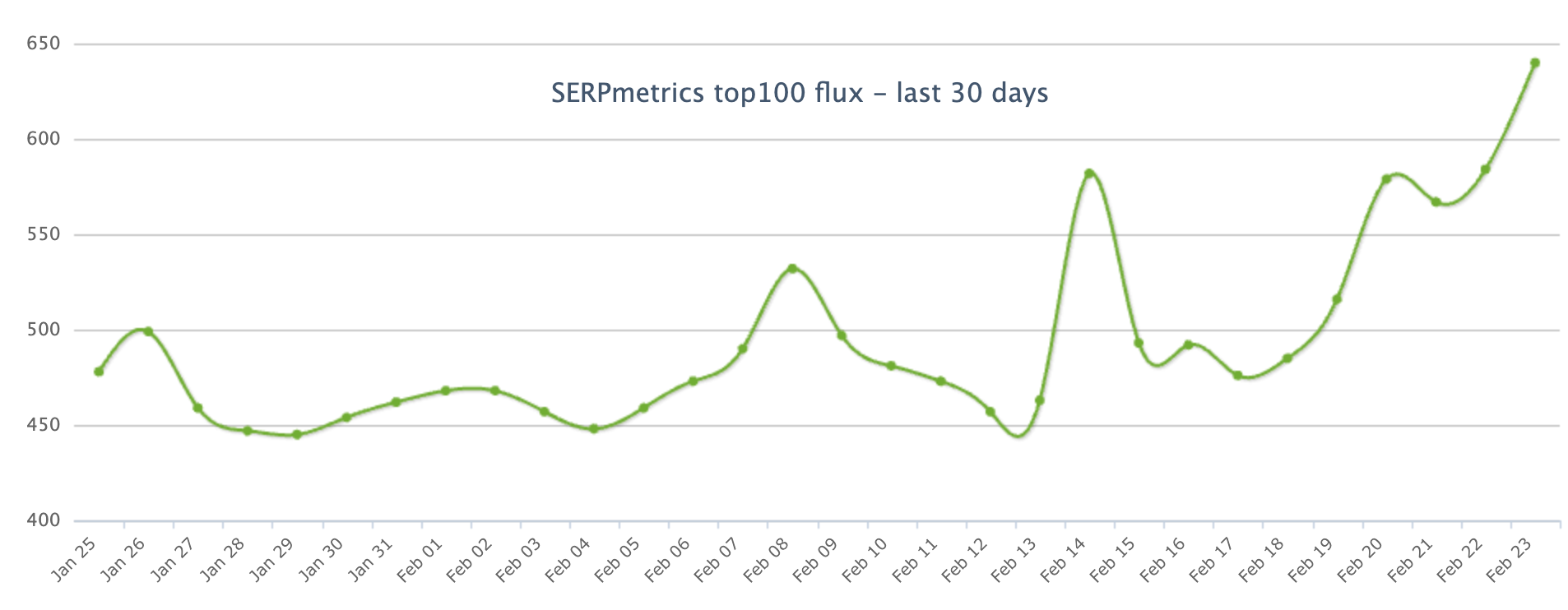

Google Tracking Tools:

Let’s start with what the tools are showing:

So most of these tools are incredibly heated, signaling massive changes in search result positions over the past couple of days.

SEO Chatter

Here is some of the chatter from various comments on this site and on WebmasterWorld since Friday:

Speaking of, is anyone seeing some major shuffling going on in the SERPs today? It’s a Friday so of course Google is playing around again.

Something is going on.

Pages are still randomly dropping out of the index for 8-36h at a time. Extremely annoying.


In SerpRobot I’m seeing a steady increase in positions in February, for UK desktop and mobile, reaching almost the ranks from the end of Sep 2023. Ahrefs shows a slight increase in overall keywords and ranks.

In the real world, nothing seems to happen.

yep, traffic has nearly come to a stop. But exactly the same situation happened to us last Friday as well.

USA traffic continues to be whacked…starting -70% today.

In my case, US traffic is almost zero (15 % from 80%) and the rest is kind of the same I guess. Traffic has dropped from 4K a day to barely scrapping 1K now. But a lot is just bots since payment-wise, the real traffic seems to be about 400-500. And … that’s how a 90% reduction looks like.

Something is happening now. Google algo is going crazy again. Is anyone else noticing?

Since every Saturday at 12 noon the Google traffic completely disappears until Sunday, everything looks normal to me.

This update looks like a weird one and no, Google has not confirmed any update is going on.

What are you all noticing?

Forum discussion at WebmasterWorld.
