SEARCHENGINES

Daily Search Forum Recap: October 31, 2022

Here is a recap of what happened in the search forums today, through the eyes of the Search Engine Roundtable and other search forums on the web.

A new Google local ranking study shows keywords in reviews do not improve local rankings in Google Search. Want to see a site that got hit hard by the Google spam update? We have one. Google is showing on-time delivery and order accuracy data in search listings. Google Search Console’s geographic setting is still available but it likely does not work. Google has new fall decorations for some search results. And a new vlog is out today, this one with Rick Mariano.

Other Great Search Threads:

- The Google cache is not a diagnostics tool, John Mueller on Twitter

- October 2022 Google Search Observations, WebmasterWorld

- All of the new tricks and treats in today’s #GoogleDoodle are ghastly good—in fact, it’s the stalk of the town. See if you can keep your ghoul and avoid getting lost in the new corn maze game arena, Google Doodles on Twitter

- Worried about many duplicate urls indexed, but blocked by robots.txt? Via @johnmu: If blocked, Google doesn’t know what’s on the page content-wise. If you have to dig for those via advanced site queries, then users typically w, Glenn Gabe on Twitter

- If you have SEO / Search questions, please drop them into our new SEO office hours form at https://t.co/KeLoq7p10V — we’ll be using this as a basis for the next office hours episodes. Tha, John Mueller on Twitter

- It’s up to you to convince users & search engines. Go for it, make something awesome, John Mueller on Twitter

Feedback:

Have feedback on this daily recap? Let me know on Twitter @rustybrick or @seroundtable. You can also follow us on Facebook, and make sure to subscribe to the YouTube channel, Apple Podcasts, Spotify or Google Podcasts, or just contact us the old-fashioned way.

Source: www.seroundtable.com

AI

How AI is Transforming SEO and What Website Owners Need to Know

The digital landscape is changing fast, and artificial intelligence (AI) is at the forefront of this transformation. One of the fields AI is impacting significantly is Search Engine Optimization (SEO). In recent years, search engines have become increasingly intelligent in how they rank content, and AI is playing a major role in these changes.

For website owners, understanding how AI will impact SEO is essential to stay competitive and visible online. This article explores how AI is influencing SEO today, what we can expect in the near future, and the practical steps website owners can take to optimize their sites in an AI-driven SEO landscape.

The Role of AI in Modern SEO

SEO has evolved from keyword stuffing to a more complex practice involving quality content, user intent, and technical optimization. Today, search engines are looking at more than just keywords; they are focusing on understanding the intent and context behind a user’s search. This shift has been made possible by advances in AI and machine learning technologies.

AI helps search engines process and rank enormous amounts of data, allowing them to better interpret what users are looking for. Some notable AI technologies shaping SEO include:

- Natural Language Processing (NLP): NLP enables search engines to understand language more naturally, helping them interpret search queries more accurately.

- Deep Learning: This subset of machine learning allows search engines to improve their understanding over time by learning from vast amounts of data.

- Machine Learning Algorithms: Algorithms like Google’s RankBrain and BERT analyze search behavior and content to provide more relevant results.

These technologies are improving how search engines analyze content, making it crucial for website owners to keep up with AI-driven changes in SEO.

Key Ways AI is Impacting SEO

AI is already changing SEO in various ways. Let’s look at some of the main areas impacted by AI and what it means for website owners.

1. User Intent and Content Relevance

One of the biggest impacts of AI on SEO is how it helps search engines understand user intent. Modern algorithms don’t just match keywords; they analyze user behavior and context to provide results that answer the user’s underlying question.

This means that website owners need to prioritize content that addresses specific user needs. Instead of focusing solely on keywords, it’s essential to create content that answers common questions, solves problems, or fulfills a particular need that users may have.

Tip for Website Owners: Use AI-powered tools like chatbots and analytics platforms to understand what your users are looking for on your site. Tailor your content to align with those needs for improved relevance and engagement.

2. Personalization

AI allows search engines to personalize results based on individual preferences, search history, and location. For instance, someone searching for “best restaurants” in New York might see different results than someone searching for the same term in Los Angeles.

For SEO, this means a greater focus on localized and targeted content. Website owners need to consider how their content can appeal to specific demographics or regions to make the most of AI-driven personalization in search results.

Tip for Website Owners: Use AI-powered SEO tools to understand your audience’s demographics better. Consider adding location-based keywords or creating content specific to your main audience groups.

3. Voice Search Optimization

As AI-powered voice assistants like Siri, Alexa, and Google Assistant become more popular, voice search is changing how people interact with search engines. Voice searches tend to be longer and more conversational than text-based searches, and they often focus on local queries.

This shift toward voice search requires website owners to optimize for natural, conversational phrases and questions. Content that mirrors spoken language and answers specific questions has a better chance of ranking for voice search queries.

Tip for Website Owners: Consider using “long-tail” keywords and question-based phrases in your content. Tools like Answer the Public and Google’s “People Also Ask” feature can help identify popular questions related to your niche.
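As a rough illustration of the tip above, the sketch below filters a list of search queries down to long-tail, question-style phrases. The question-word list, the four-word threshold, and the sample queries are all assumptions made for this example. They are not rules used by any search engine or keyword tool.

```python
# Hypothetical list of words that commonly open a spoken question.
QUESTION_WORDS = (
    "who", "what", "when", "where", "why", "how",
    "can", "does", "is", "should",
)


def is_question_query(query):
    """Return True if the query starts with a common question word."""
    words = query.lower().split()
    return bool(words) and words[0] in QUESTION_WORDS


def long_tail_questions(queries, min_words=4):
    """Keep queries that read like questions and have at least min_words words."""
    return [
        q for q in queries
        if is_question_query(q) and len(q.split()) >= min_words
    ]


# Made-up sample queries, for illustration only.
sample_queries = [
    "how do i fix a leaky faucet",
    "plumber",
    "what is seo",
    "best pizza near me",
]
print(long_tail_questions(sample_queries))
```

A list like this could then guide which questions get their own heading or FAQ entry. The input would typically come from your own site search logs or a keyword research export.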

4. Content Quality and User Engagement Signals

AI has enabled search engines to assess content quality in ways that were previously difficult. Engagement metrics like click-through rates, bounce rates, and time spent on a page are widely treated as signs of quality, although Google has not confirmed exactly which of these signals feed into its rankings. The underlying idea is that content that engages users is more likely to be valuable.

This shift makes it critical for website owners to focus on content quality. Engaging and informative content not only attracts users but also keeps them on the page longer, which signals to AI-powered search engines that the content is valuable.

Tip for Website Owners: Focus on creating high-quality, easy-to-read content that keeps users engaged. Add visuals, infographics, or videos to make the content more interesting and shareable.

5. Image and Video Recognition

Search engines are now capable of recognizing and indexing images and videos through AI-driven technologies like image recognition. This means that visual content, once overlooked by search engines, is now a critical component of SEO.

For example, Google Lens allows users to search for information about objects simply by taking a photo, which has opened up new SEO possibilities for image-based content. Website owners can optimize images by using descriptive file names, alt text, and relevant captions.

Tip for Website Owners: Optimize images and videos by adding descriptive alt text and captions. Consider using AI-driven image recognition tools to ensure that visual content aligns with keywords and SEO strategies.
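One simple way to act on the alt text advice is to audit a page for images that lack it. The sketch below uses only Python's standard-library HTML parser. The class name, function name, and sample markup are made up for illustration. Note that an empty `alt=""` can be intentional for purely decorative images, so treat the output as a list to review and not a list of errors.

```python
from html.parser import HTMLParser


class AltTextAuditor(HTMLParser):
    """Collects the src of every <img> whose alt attribute is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        # alt is None when the attribute is absent or has no value.
        if alt is None or not alt.strip():
            self.missing_alt.append(attributes.get("src", "(no src)"))


def find_images_missing_alt(html):
    """Return the src values of images that need an alt text review."""
    auditor = AltTextAuditor()
    auditor.feed(html)
    return auditor.missing_alt


# Made-up sample markup, for illustration only.
sample_page = """
<img src="red-running-shoes.jpg" alt="Red running shoes on a track">
<img src="IMG_0042.jpg">
<img src="banner.png" alt="">
"""
print(find_images_missing_alt(sample_page))
```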

How AI Will Shape SEO in the Future

As AI continues to advance, we can expect even more changes in SEO. Here’s a look at some potential future trends and what they mean for website owners.

1. Predictive SEO

In the future, AI may enable “predictive SEO,” where algorithms can forecast popular keywords or trends before they peak. By analyzing data patterns and user behavior, AI could help website owners predict what users will search for, allowing them to create content proactively.

What This Means for Website Owners: Predictive SEO could make it easier to stay ahead of trends and anticipate the content users are likely to search for. Using predictive analytics tools, website owners can be more strategic about the content they create.

2. AI-Generated Content and Content Curation

AI is increasingly capable of generating high-quality content. Tools like GPT-3 and other advanced language models can write coherent articles, product descriptions, and more. While AI-generated content isn’t yet perfect, it could be a valuable tool for generating SEO-friendly content quickly and efficiently.

What This Means for Website Owners: In the future, website owners might use AI tools to supplement human-written content, allowing for quicker content creation. However, quality control will still be essential, as search engines are likely to favor content that feels authentic and engages readers.

3. Advanced User Behavior Analysis

AI-powered algorithms will become even better at analyzing user behavior, tracking every click, scroll, and interaction. This advanced tracking could lead to a more dynamic SEO landscape, where search engine rankings adjust in near real-time based on user engagement data.

What This Means for Website Owners: Continuous user engagement will be essential for maintaining high rankings. Website owners will need to be proactive in analyzing user behavior and refining their content to keep up with what resonates most with their audience.

4. Natural Language Processing (NLP) and Multilingual SEO

NLP capabilities in AI are advancing quickly, and search engines will become more adept at interpreting language, meaning SEO may become more language-neutral in the future. This could allow websites in one language to rank well in another, depending on user demand and relevance.

What This Means for Website Owners: To maximize reach, website owners might need to focus on multilingual SEO. Investing in high-quality translations and optimizing for a global audience can open up new SEO opportunities.

Practical Tips for SEO in an AI-Driven Future

Given the changes AI is bringing to SEO, website owners can take proactive steps to stay ahead:

- Focus on User-Centric Content: AI prioritizes content that genuinely answers users’ questions. Rather than focusing only on keywords, make sure your content addresses specific user needs and is easy to understand.

- Utilize AI Tools for Content Strategy: AI-powered tools like SEMrush, Ahrefs, and SurferSEO can help you analyze data trends, identify keyword opportunities, and optimize content effectively.

- Optimize for Voice Search: As more people use voice search, optimize your content for natural, conversational language. Include phrases that match how people speak rather than how they type.

- Enhance Visual SEO: Use descriptive file names, alt text, and captions for images. Consider incorporating more videos and graphics to improve user engagement.

- Monitor User Engagement Metrics: Track metrics like bounce rate, time on page, and click-through rates to assess content quality. Make improvements based on user behavior to keep your content relevant.

- Experiment with AI-Generated Content: If your site needs frequent updates, consider using AI-generated content as a supplement to human-written content. Ensure it’s well-edited to meet quality standards.
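For the engagement metrics mentioned in the list above, here is a minimal sketch of how they are commonly calculated from raw counts. The `PageStats` fields and the sample numbers are hypothetical, and the definitions used here (bounce rate as single-page sessions divided by all sessions, click-through rate as clicks divided by impressions) are assumptions. Analytics platforms define these metrics in slightly different ways, so check your own tool's documentation.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    """Raw counts for one page over a reporting period (hypothetical schema)."""
    url: str
    impressions: int            # times the page appeared in search results
    clicks: int                 # clicks from those search results
    sessions: int               # visits that included this page
    single_page_sessions: int   # visits that viewed only this page
    total_seconds_on_page: float


def click_through_rate(stats):
    """Clicks divided by impressions, or 0.0 when there were no impressions."""
    return stats.clicks / stats.impressions if stats.impressions else 0.0


def bounce_rate(stats):
    """Single-page sessions divided by all sessions, or 0.0 with no sessions."""
    return stats.single_page_sessions / stats.sessions if stats.sessions else 0.0


def average_time_on_page(stats):
    """Mean seconds spent on the page per session, or 0.0 with no sessions."""
    return stats.total_seconds_on_page / stats.sessions if stats.sessions else 0.0


# Made-up numbers, for illustration only.
page = PageStats("/guides/voice-search", 1000, 50, 40, 10, 2400.0)
print(f"CTR: {click_through_rate(page):.1%}")
print(f"Bounce rate: {bounce_rate(page):.1%}")
print(f"Average time on page: {average_time_on_page(page):.0f}s")
```

Tracking these per page over time, not as one site-wide average, makes it easier to see which pieces of content need attention.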

Final Thoughts

AI’s impact on SEO is only beginning, but it’s clear that it will continue shaping how search engines rank content and how website owners approach optimization. By staying informed and adapting to these changes, website owners can not only maintain but improve their visibility in search engine results. Whether it’s optimizing for user intent, embracing predictive analytics, or enhancing visual content, AI provides numerous tools to create a richer, more engaging user experience.

For website owners willing to stay ahead of the curve, AI offers exciting new possibilities for SEO – transforming it from a keyword-driven strategy to a dynamic, user-centered approach that enhances both site performance and user satisfaction.

This article was written by the Entireweb Team.

SEARCHENGINES

Google Search Ranking Volatility October 26th & 27th & 23rd & 24th

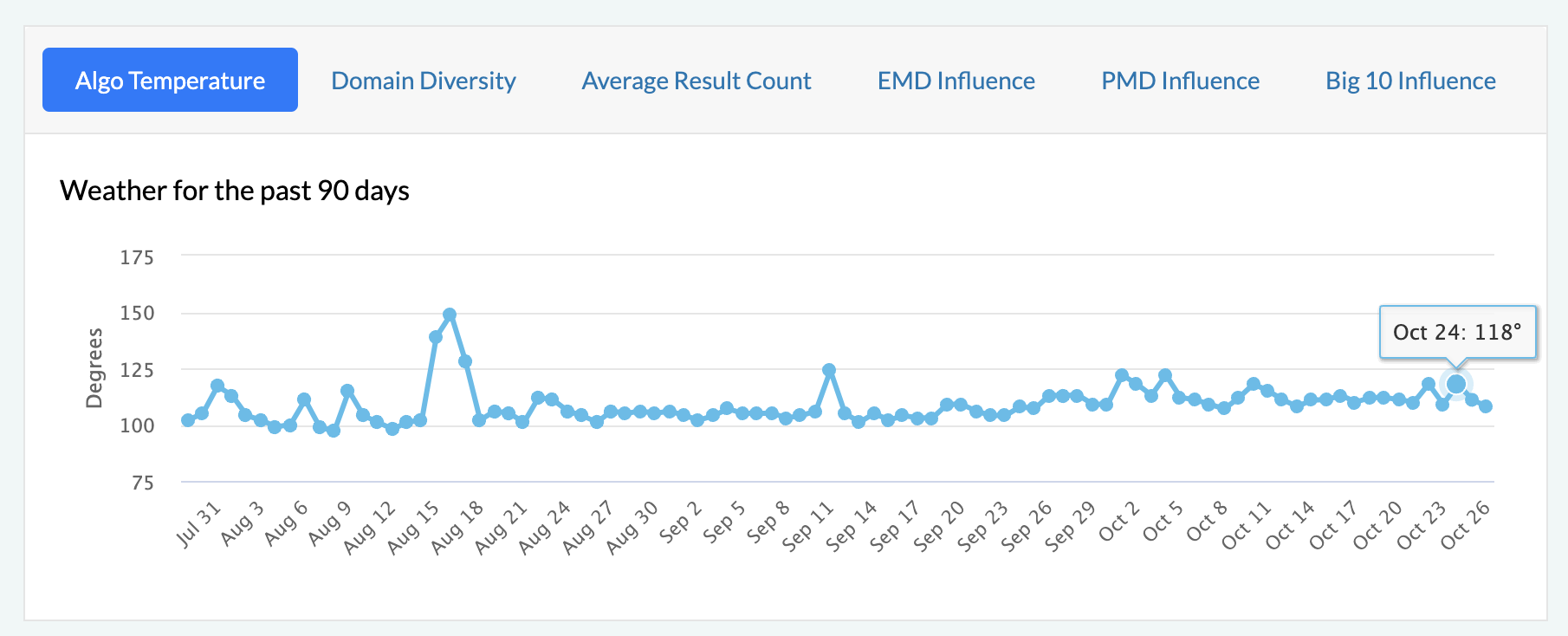

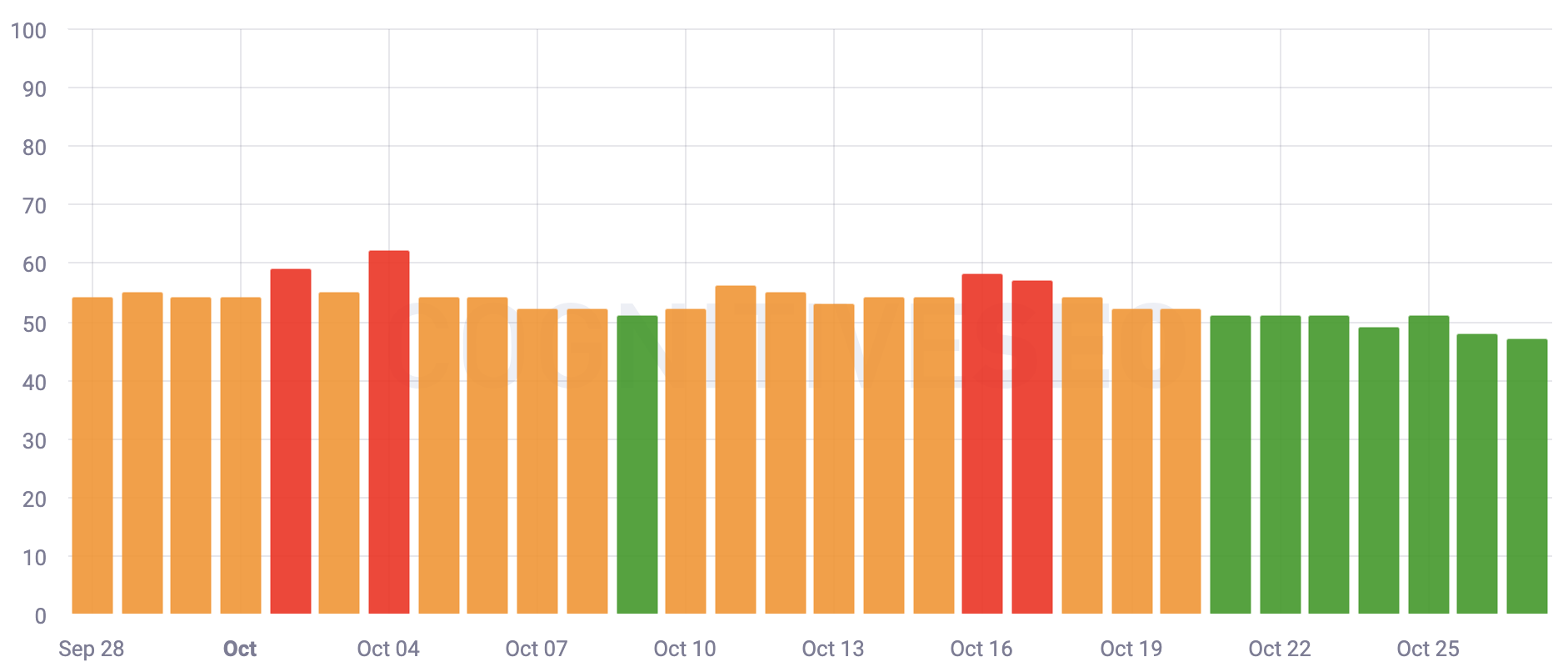

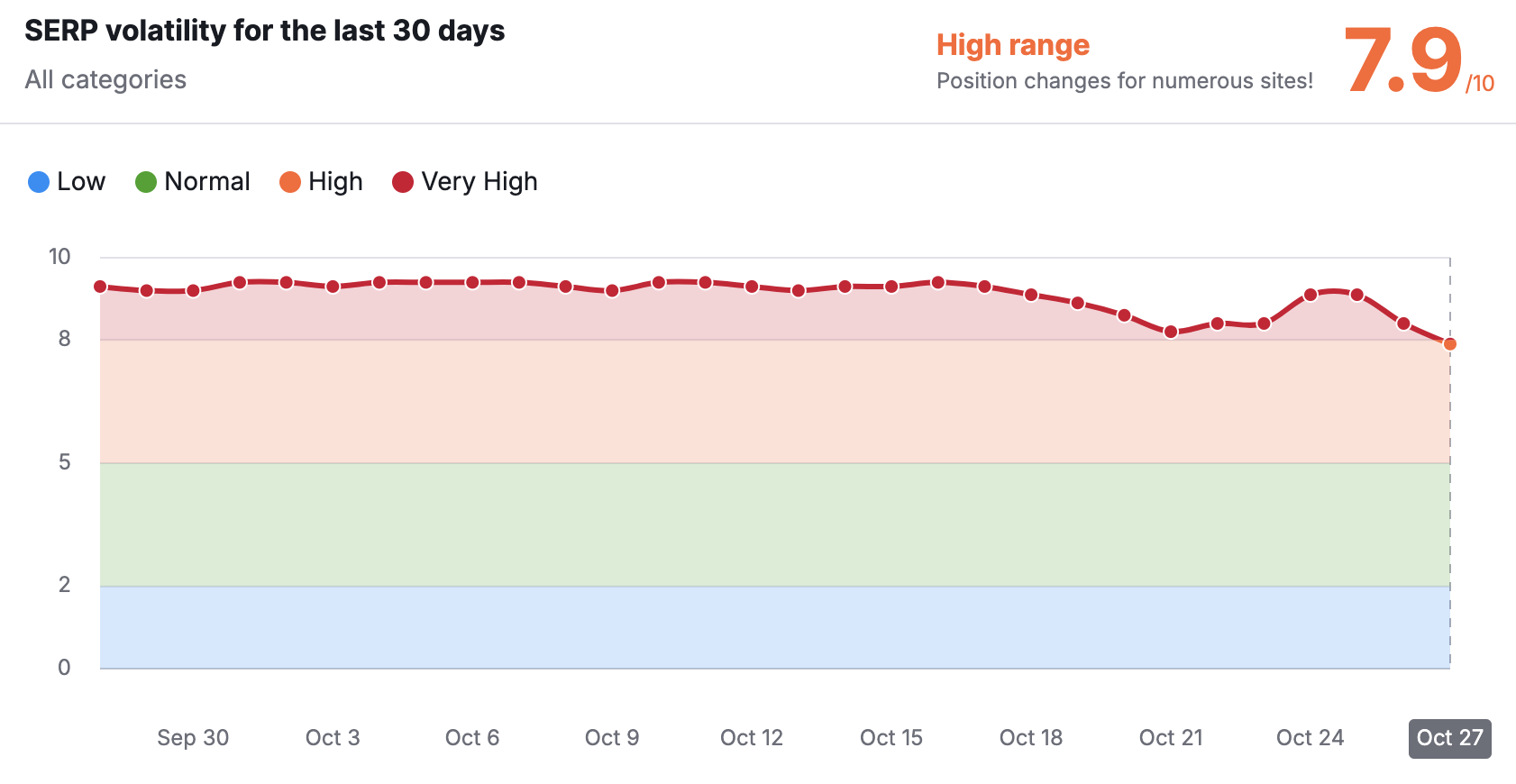

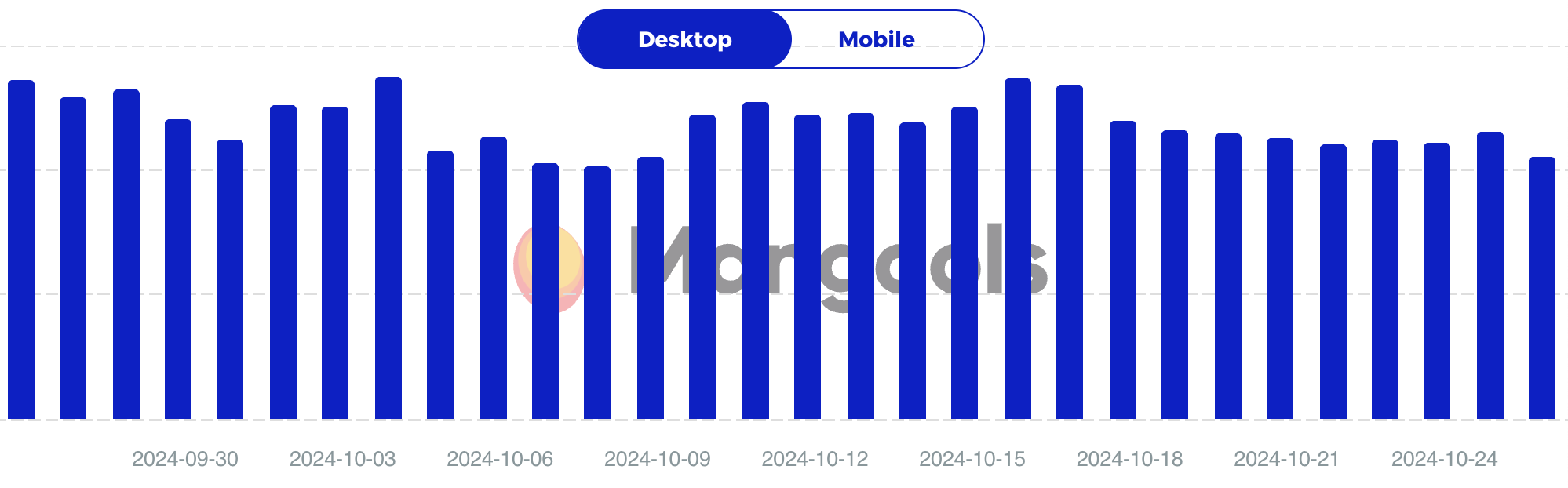

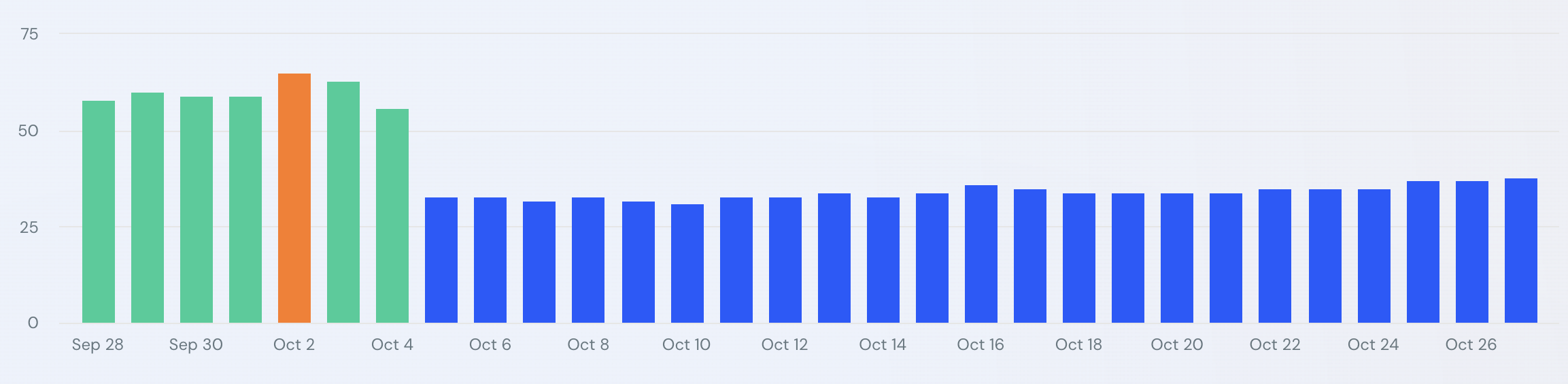

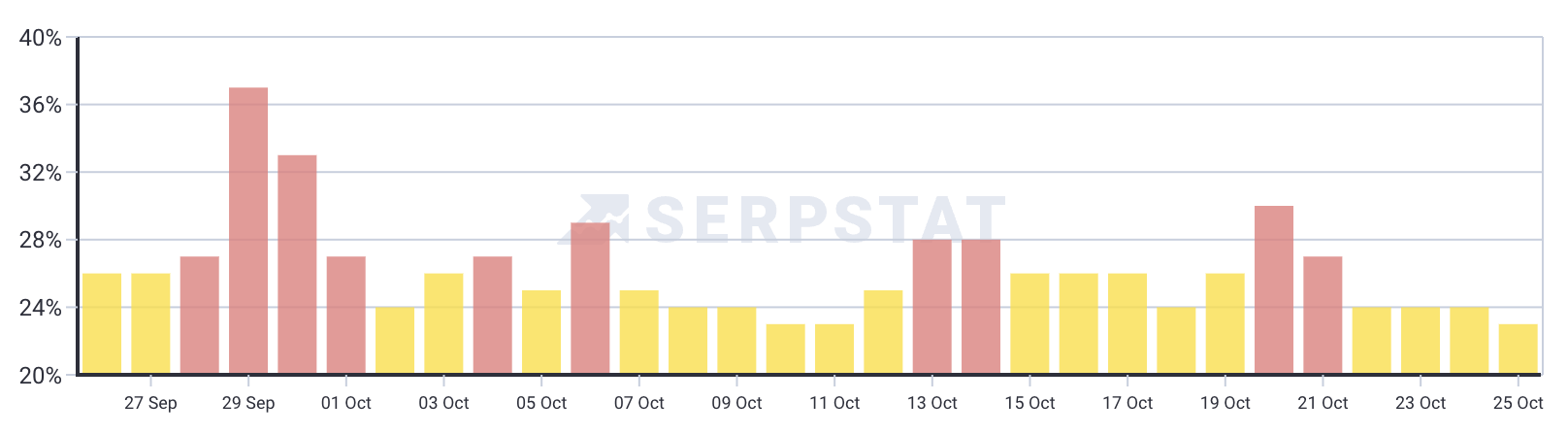

While I was offline, it seems we had two bouts of Google Search ranking volatility: one around Wednesday and Thursday, October 23rd and 24th, and another in the past 24 hours, on October 26th and 27th. This is much the same as my previous Google Search ranking algorithm update coverage.

Again, I am seeing new intense chatter from the SEO community about big ranking changes in the past 24 hours and also from when I went offline on the 23rd and 24th of October.

As a reminder, we covered volatility on October 19th and 20th, and before that on October 15th, October 10th and October 2nd, the last of which lasted a couple of days. The Google August 2024 core update started on August 15th and officially completed on September 3rd. But it was still super volatile the day after it completed and also weeks after it completed, and it has not cooled.

We saw big signals on and around September 6th, September 10th or so and maybe around September 14th. We also saw movement around September 18th, last weekend and September 25th and September 28th or so.

Here is what I am seeing now.

SEO Chatter

Here is some of the chatter in the past 24 hours from both WebmasterWorld and over here:

Google shut down organic traffic completely today in Brazil. The Semrush sensor is normal(8,9), but the traffic is stopped.

Wow, traffic just died today. It’s like my traffic went on a weekend break too.

The worst thing is close to Christmas, it’s as if my website doesn’t even exist.

Why my category pages with little to no data getting indexed and appearing on first page while pages with content are no where to be found? This new update has totally messed up SERPs. Anyone else observing this behavior?

My “informational” site seems to be holding steady rank and traffic wise but seeing competitors drop from page one to 3 then back again to original position a few hours later, its like total flux and nothing I have ever seen, constant movement for months now, hour by hour…. What are they doing?

There is more but here is some of the chatter from Wednesday and Thursday:

Really low traffic today, according to GA.

Update today? Huge drop over here!

Does anyone understand the current chaos? Despite a good ranking, my traffic has dropped drastically since Friday and every day it gets less. The same applies to a number of other website owners I’ve spoken to in the last few days. Especially considering that Google traffic has increased dramatically since August. In the shop the same: until Friday, sales were good, now it’s just zombie traffic again.

Huge swings today again, yesterday it looked like they did a roll back to around March…. now the past few hours it looks like a roll back of a rollback. I think this is the new norm and another money grab by G to have organics in a constant state of flux to prevent any company/site having any sort of stability if not using ads?!

Drop today confirmed since yesterday.

Sheeesh. @rustybrick I’m seeing HUGE movement in last 24 hours, both gains and losses (naturally).— Taylor Kurtz (@RealTaylorKurtz) October 24, 2024

Yep, lots of volatility. For example, here’s a site heavily impacted by the September helpful content update (HCUX) that surged with the August core update, then dropped when a number of other HCUX sites dropped back down AFTER the August core update completed, but now surged… https://t.co/kBavOmX586 pic.twitter.com/f6O8S7YtuO— Glenn Gabe (@glenngabe) October 27, 2024

Google Tracking Tools

The tracking tools seem to be at their normal high levels, with nothing really jumping out as massive. So it is a bit unusual to see a spike in chatter without a matching spike in the tools. Here is what they show:

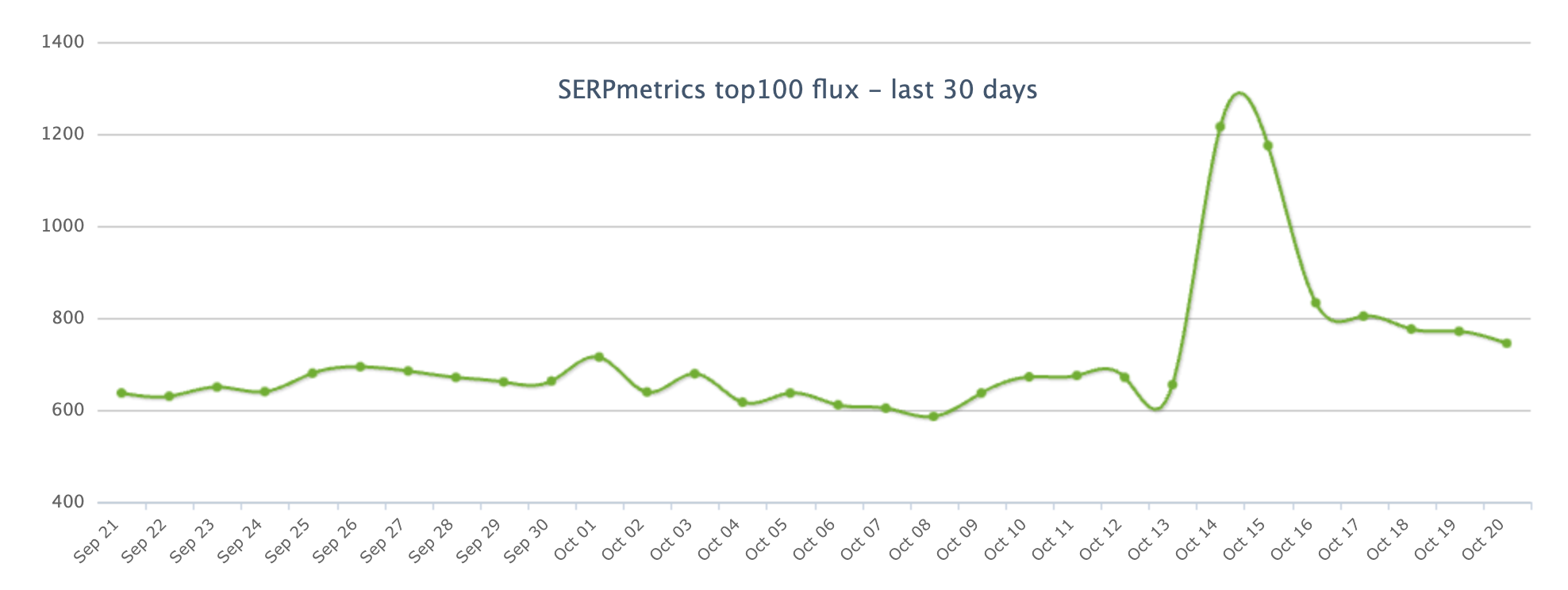

SERPmetrics (not updated in a week or so):

So what are you all seeing?

I am covering this with a rare Sunday story as I catch up on other stories throughout the week.

Forum discussion at WebmasterWorld.

SEARCHENGINES

Google Ranking Movement, Sitelinks Search Box Going Away, Gen-AI In Bing & Google, Ad News & More

This week, we had more Google search ranking volatility to report on – surprise, surprise. Google is deprecating its Sitelinks Search Box next month. Google may have penalized Fortune Recommends over site reputation abuse. Google is showing generative AI throughout the search results. Google has this People’s Insights box that shows online forums for unprofessional medical advice. Bing Search is adding AI-enhanced summaries to its search results. There are several bugs with the Bing Webmaster Tools API and documentation. Google Ads Performance Max won’t take priority over search campaigns when in the same account. Also, Google Ads has sharable ad previews, asset experiments for Performance Max with product feeds and tests for adding assets and using Final URL expansion. Google search ads now use travel feeds for hotel ads. Google has a watch video icon for video ads in search. Google will pause ads from showing after the electoral polls close on November 5th. Google Local Services Ads is testing request competitive quotes. Google Merchant Center is testing audience insights. Google Ads API version 18 is out. Nick Fox is the new head of Google Search; he is replacing the super controversial Prabhakar Raghavan. There is a new SEO board game coming out for the holidays. And I am offline today for a holiday, so this was pre-written, pre-recorded and scheduled to go live today. That was the search news this week at the Search Engine Roundtable.

SPONSOR: This week’s video recap is sponsored by Duda, the Professional Website Builder You Can Call Your Own.

Make sure to subscribe to our video feed or subscribe directly on iTunes, Apple Podcasts, Spotify, Google Podcasts or your favorite podcast player to be notified of these updates and download the video in the background. Here is the YouTube version of the feed. For the original iTunes version, click here.

Please do subscribe on YouTube or subscribe via iTunes or on your favorite RSS reader. Don’t forget to comment below with the right answer and good luck!
